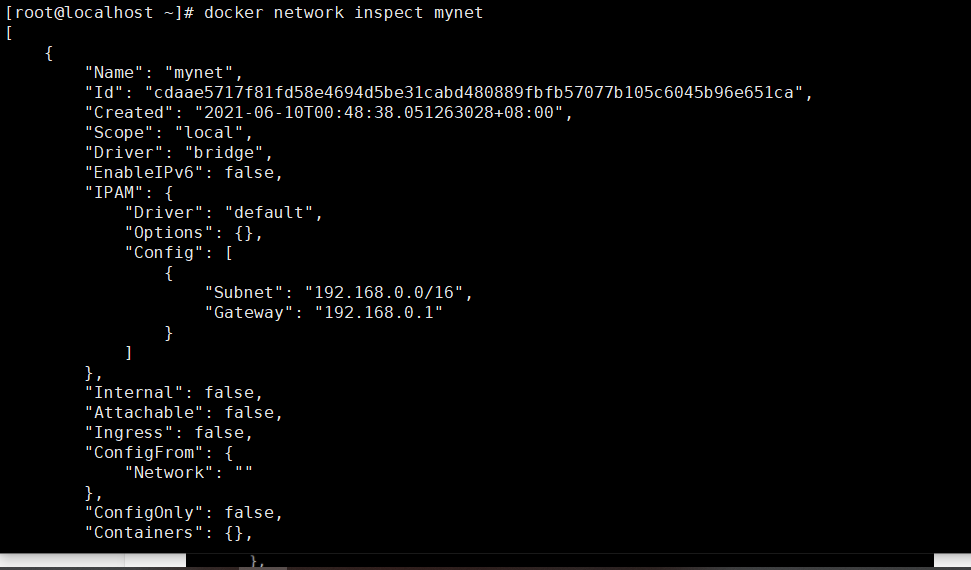


## Understanding Docker networking: docker0

Clear out the existing environment first.

Test

```
# Show the network addresses. There are three kinds: the loopback address, the host's internal (LAN) addresses, and docker0
[root@localhost ~]# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 08:00:27:60:55:fb brd ff:ff:ff:ff:ff:ff
    inet 192.168.56.99/24 brd 192.168.56.255 scope global enp0s3
       valid_lft forever preferred_lft forever
    inet6 fe80::c6b5:315a:a0f2:9aa0/64 scope link
       valid_lft forever preferred_lft forever
3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 08:00:27:2a:b4:08 brd ff:ff:ff:ff:ff:ff
    inet 10.0.3.15/24 brd 10.0.3.255 scope global dynamic enp0s8
       valid_lft 75628sec preferred_lft 75628sec
    inet6 fe80::b16c:4f2a:5b97:5f59/64 scope link
       valid_lft forever preferred_lft forever
4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:34:2f:29:8b brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:34ff:fe2f:298b/64 scope link
       valid_lft forever preferred_lft forever
```

Question: how does Docker handle network access for containers?

```
# Start a tomcat container
[root@localhost ~]# docker run -d -P --name tomcat01 tomcat

# Look at the addresses inside the container with ip addr: on startup the container gets an eth0@ifxx interface with an IP assigned by Docker
[root@localhost ~]# docker exec -it tomcat01 ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
91: eth0@if92: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# Question: can the Linux host ping the container?
[root@localhost ~]# ping 172.17.0.2
PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.094 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.048 ms
64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.045 ms

# Yes, the Linux host can ping the Docker container
```

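How it works

- Every time we start a container, Docker assigns it an IP address. As soon as Docker is installed, the host gets a network interface named docker0 that works in bridge mode. The underlying technology is the veth pair.

Test with ip addr again

- Start another container and test: one more pair of interfaces appears.

```
# The interfaces these containers bring with them always come in pairs.
# A veth pair is a pair of virtual device interfaces that always appear together: one end attaches to the protocol stack, and the two ends are connected to each other.
# Because of this property, a veth pair acts as a bridge that connects all kinds of virtual network devices.
# Connections between Docker containers and OVS connections both use veth pairs.
```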
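The pairing can be read straight from the `ip addr` output: in `91: eth0@if92`, 91 is the interface's own index and 92 is the index of its peer on the other side of the veth pair. A minimal sketch that extracts both numbers from one header line; the helper name `veth_peer` is made up for illustration and is not a Docker or iproute2 command.

```shell
# Hypothetical helper: given one header line of `ip addr` output,
# print "<own index> <peer index>" for a veth endpoint.
veth_peer() (
  line=$1
  own=${line%%:*}                   # text before the first ':' is the interface index
  rest=${line#*@if}                 # text after '@if' starts with the peer's index
  [ "$rest" = "$line" ] && exit 1   # no '@if' marker: not a veth endpoint
  peer=${rest%%:*}
  echo "$own $peer"
)

# Header line taken from the tomcat01 output earlier in these notes
veth_peer '91: eth0@if92: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500'
```

On the host, the matching interface should show up with the numbers swapped (index 92, peer `@if91`).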

- Now test whether two containers in Docker can ping each other:

```
[root@localhost ~]# docker exec -it tomcat02 ping 172.17.0.2
PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.066 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.053 ms
64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.053 ms
```

Conclusion: containers can ping one another.

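Network topology diagram

Conclusion: tomcat01 and tomcat02 share docker0, which acts as their common router (gateway).

Unless a network is specified, every container is routed through docker0, and Docker assigns the container an available IP by default.

docker0 is 172.17.0.1/16 (netmask 255.255.0.0), so the subnet holds 2^16 - 2 = 65534 usable host addresses, one of which is taken by docker0 itself.

> <a name="Mo7Va"></a>

#### Summary

Docker uses Linux bridging: the host provides a bridge, docker0, for its containers.

All network interfaces in Docker are virtual, and virtual interfaces forward efficiently (for example, when transferring files over the internal network).

As soon as a container is removed, its veth pair disappears with it.

The default network setup for a container:

First a docker0 bridge is created. A veth pair then provides a pair of virtual interfaces: one end is placed inside the new container and renamed eth0, the other end stays on the host, named veth followed by seven random characters, and is attached to the docker0 bridge. The container is given an IP from the bridge's subnet, and docker0's IP becomes the container's default gateway. An SNAT rule for the bridge subnet is also added to iptables so that containers can reach the outside network.

A veth pair is the means of communication between different network namespaces, and network namespaces are what provide network isolation.

<a name="x2L6Y"></a>

## --link

> Consider a scenario: we write a microservice configured with database url=ip. If the database's IP changes while the project keeps running, we want things to keep working, which means reaching a container by its name rather than its IP.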

A container cannot be pinged directly by its name:

```
[root@localhost ~]# docker exec -it tomcat01 ping tomcat02
ping: tomcat02: Temporary failure in name resolution
```

How do we solve this? With --link:

```
[root@localhost ~]# docker run -d -P --name tomcat03 --link tomcat02 tomcat
c54fcf9a831490f32e38d669c053a48e7cbc75f43612094b171b63b65f3af20b
[root@localhost ~]# docker exec -it tomcat03 ping tomcat02
PING tomcat02 (172.17.0.3) 56(84) bytes of data.
64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.072 ms
64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.044 ms
64 bytes from tomcat02 (172.17.0.3): icmp_seq=3 ttl=64 time=0.054 ms
```

Does it work in the reverse direction? No:

```
[root@localhost ~]# docker exec -it tomcat02 ping tomcat03
ping: tomcat03: Temporary failure in name resolution
```

Use inspect to look at Docker's network configuration:

```
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
2b50586c472a   bridge    bridge    local
a30d3dea12d7   host      host      local
c7f552708cb5   none      null      local
[root@localhost ~]# docker network inspect 2b50586c472a
[
    {
        "Name": "bridge",
        "Id": "2b50586c472a79b46b4c3c8b742e6b2cf856034f9fc71fc576873be1324d3bad",
        "Created": "2021-06-08T22:01:22.502021943+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": null,
            "Config": [
                {
                    "Subnet": "172.17.0.0/16"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "5589f8722cc687743da2947a7176af64fcd83e11d1818f5aa1f4314a64852013": {
                "Name": "tomcat02",
                "EndpointID": "aee54b08842ffc64347bd08dc08ff8cdea9ac80202c8de175dfef50038b1a98c",
                "MacAddress": "02:42:ac:11:00:03",
                "IPv4Address": "172.17.0.3/16",
                "IPv6Address": ""
            },
            "c54fcf9a831490f32e38d669c053a48e7cbc75f43612094b171b63b65f3af20b": {
                "Name": "tomcat03",
                "EndpointID": "e84d93b3687942d2e1c9ad2ae5a8b91ce083e41f623391f00465f09849a6906b",
                "MacAddress": "02:42:ac:11:00:04",
                "IPv4Address": "172.17.0.4/16",
                "IPv6Address": ""
            },
            "e96c333a0ebb45a78d273f43291a5658a7afa44b54e92ce814622465daf3f1ce": {
                "Name": "tomcat01",
                "EndpointID": "4599a67a3b186ddacecfb0f004cdcb8b14f0fa2f1add0b72df5eb617f7021abf",
                "MacAddress": "02:42:ac:11:00:02",
                "IPv4Address": "172.17.0.2/16",
                "IPv6Address": ""
            }
        },
        "Options": {
            "com.docker.network.bridge.default_bridge": "true",
            "com.docker.network.bridge.enable_icc": "true",
            "com.docker.network.bridge.enable_ip_masquerade": "true",
            "com.docker.network.bridge.host_binding_ipv4": "0.0.0.0",
            "com.docker.network.bridge.name": "docker0",
            "com.docker.network.driver.mtu": "1500"
        },
        "Labels": {}
    }
]
```

Why can tomcat03 ping tomcat02?

Because tomcat03 has a local name-resolution entry for tomcat02:

```
[root@localhost ~]# docker exec -it tomcat03 cat /etc/hosts
127.0.0.1       localhost
::1     localhost ip6-localhost ip6-loopback
fe00::0 ip6-localnet
ff00::0 ip6-mcastprefix
ff02::1 ip6-allnodes
ff02::2 ip6-allrouters
172.17.0.3      tomcat02 5589f8722cc6
172.17.0.4      c54fcf9a8314
```
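To make the effect of that hosts entry concrete, here is a small sketch of a hosts-file lookup written with awk. It only imitates the first-match behaviour of an `/etc/hosts` lookup and is not how Docker or the resolver is implemented; `hosts_lookup` and the temporary file are illustrative names, and the sample entries are copied from tomcat03's file above.

```shell
# Hypothetical helper: look up NAME ($1) in a hosts-format file ($2) and print its IP.
# Exits non-zero when no line lists the name.
hosts_lookup() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }    # skip comment lines
    { for (i = 2; i <= NF; i++) if ($i == name) { print $1; found = 1; exit } }
    END { exit !found }
  ' "$2"
}

# Sample data: a shortened copy of tomcat03's /etc/hosts
hosts_file=$(mktemp)
cat > "$hosts_file" <<'EOF'
127.0.0.1 localhost
172.17.0.3 tomcat02 5589f8722cc6
172.17.0.4 c54fcf9a8314
EOF

hosts_lookup tomcat02 "$hosts_file"
hosts_lookup tomcat01 "$hosts_file" || echo "tomcat01: no entry"
```

tomcat02 resolves because `--link tomcat02` wrote a line for it, while tomcat01 was never linked and has no line. The same reasoning explains the failed reverse ping: nothing added a tomcat03 entry to tomcat02's file.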

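Conclusion: --link simply adds a static name mapping to the container's hosts file, for example `172.17.0.3 tomcat02 5589f8722cc6`.

Using --link is no longer recommended in Docker today; custom networks are generally used instead.

The problem with docker0: it does not support reaching containers by name.

<a name="aejqu"></a>

## Custom networks

> <a name="IanHF"></a>

#### List all Docker networks

```
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
2b50586c472a   bridge    bridge    local
a30d3dea12d7   host      host      local
c7f552708cb5   none      null      local
```

<a name="YLzj9"></a>

### Network modes

Docker supports four network modes, selected with the --net option:

- bridge (--net=bridge): the default. The host and its containers communicate over a NAT network, and container IPs normally come from 172.17.0.0/16. To use a different range, change the bridge's IP address directly, for example: `$ sudo ifconfig docker0 192.168.10.1 netmask 255.255.255.0`
- none (--net=none): the container gets its own network namespace, but Docker does not configure any networking for it. We have to configure it by hand.
- host (--net=host): the container does not get its own network namespace and shares the host's instead. It does not create its own virtual interface or IP and uses the host's IP and ports. If the container runs a web server, it is reached directly at host:port with no NAT, just like a web server running on the host. Everything other than the network stays isolated.
- container (--net=container:NAME_or_ID): the container shares the network of the named container and has no interface or IP of its own. Apart from the network, the two containers stay isolated from each other.

Test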

The command we normally run implies --net bridge, and that network is our docker0:

```
# These two forms are equivalent, because --net bridge is the default
docker run -d -P --name tomcat01 tomcat
docker run -d -P --name tomcat01 --net bridge tomcat
```

Characteristics of docker0: it is the default network, container names cannot be resolved on it, and --link is needed to connect containers by name.

We can define our own network:

- --driver bridge: bridge mode
- --subnet 192.168.0.0/16: the subnet
- --gateway 192.168.0.1: the gateway

```
[root@localhost ~]# docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet
cdaae5717f81fd58e4694d5be31cabd480889fbfb57077b105c6045b96e651ca
[root@localhost ~]# docker network ls
NETWORK ID     NAME      DRIVER    SCOPE
2b50586c472a   bridge    bridge    local
a30d3dea12d7   host      host      local
cdaae5717f81   mynet     bridge    local
c7f552708cb5   none      null      local
```

Our own network is now created.

Run two containers on it as a test:

```
[root@localhost ~]# docker run -d -P --net mynet --name tomcat-net-01 tomcat
7d1cd92d1b567abb39ae68a924d75be5e9984dca7801045d548752daa7c78272
[root@localhost ~]# docker run -d -P --net mynet --name tomcat-net-02 tomcat
93026acd906c200b91fdcde6001bcedb146f65760ef62deea5366d0208a1f4c9
[root@localhost ~]# docker network inspect mynet
[
    {
        "Name": "mynet",
        "Id": "cdaae5717f81fd58e4694d5be31cabd480889fbfb57077b105c6045b96e651ca",
        "Created": "2021-06-10T00:48:38.051263028+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "7d1cd92d1b567abb39ae68a924d75be5e9984dca7801045d548752daa7c78272": {
                "Name": "tomcat-net-01",
                "EndpointID": "06af7ff9574bc1ffbdb2c9d080b26acd9c80f0e79647989cc44b5ac51e36cd15",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            },
            "93026acd906c200b91fdcde6001bcedb146f65760ef62deea5366d0208a1f4c9": {
                "Name": "tomcat-net-02",
                "EndpointID": "0d045872bc8faac45a6de5f32ecee379060afc0b844da54b2d12fe555c830356",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]
```

Under Containers, the custom network now lists the two containers we just started, each with an IP already assigned.
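As a quick sanity check on those addresses, the sketch below tests whether an IPv4 address falls inside a CIDR block and counts the usable hosts for a given prefix length. It is plain shell arithmetic and does not talk to Docker; the function names are made up for this example.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1                      # split on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Succeed if address $1 lies inside CIDR block $2 (for example 192.168.0.0/16).
in_subnet() (
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( ip & mask )) -eq $(( net & mask )) ]
)

# Usable host addresses in a /N network: everything except the network and broadcast addresses.
usable_hosts() {
  echo $(( (1 << (32 - $1)) - 2 ))
}

in_subnet 192.168.0.2 192.168.0.0/16 && echo "192.168.0.2 is inside mynet"
in_subnet 172.17.0.2 192.168.0.0/16 || echo "172.17.0.2 is outside mynet"
usable_hosts 16
```

Both tomcat-net-01 (192.168.0.2) and tomcat-net-02 (192.168.0.3) sit inside 192.168.0.0/16, while a docker0 address such as 172.17.0.2 does not, which is why the two networks are separate by default.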

Now test whether the two containers can ping each other.

tomcat-net-01 ping tomcat-net-02

```
[root@localhost ~]# docker exec -it tomcat-net-01 ping tomcat-net-02
PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.
64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.075 ms
64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.057 ms
```

tomcat-net-02 ping tomcat-net-01

```
[root@localhost ~]# docker exec -it tomcat-net-02 ping tomcat-net-01
PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.040 ms
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.060 ms
```

The test shows that on a custom network the two containers can ping each other by name.

Conclusion: for a network we define ourselves, Docker already maintains the name-to-address mapping, so this is the recommended way to use networking day to day.

Benefit: different clusters use different networks, which keeps each cluster isolated and healthy.

<a name="t1uze"></a>

### Connecting networks

> <a name="CXBB0"></a>

#### Question: how do we let a container on the docker0 subnet ping containers on a custom subnet?

```
[root@localhost ~]# docker network --help

Usage:  docker network COMMAND

Manage networks

Commands:
  connect     Connect a container to a network
  create      Create a network
  disconnect  Disconnect a container from a network
  inspect     Display detailed information on one or more networks
  ls          List networks
  prune       Remove all unused networks
  rm          Remove one or more networks

Run 'docker network COMMAND --help' for more information on a command.

[root@localhost ~]# docker network connect --help

Usage:  docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:
      --alias strings           Add network-scoped alias for the container
      --driver-opt strings      driver options for the network
      --ip string               IPv4 address (e.g., 172.30.100.104)
      --ip6 string              IPv6 address (e.g., 2001:db8::33)
      --link list               Add link to another container
      --link-local-ip strings   Add a link-local address for the container
```

Use network connect to join the networks.

Test: connect tomcat01, a container on the docker0 subnet, to our custom network mynet:

```
[root@localhost ~]# docker network connect mynet tomcat01
```

Inspecting mynet shows that tomcat01 has joined the mynet subnet and been assigned an IP:

```
[root@localhost ~]# docker network inspect mynet
[
    {
        "Name": "mynet",
        "Id": "cdaae5717f81fd58e4694d5be31cabd480889fbfb57077b105c6045b96e651ca",
        "Created": "2021-06-10T00:48:38.051263028+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {
            "7d1cd92d1b567abb39ae68a924d75be5e9984dca7801045d548752daa7c78272": {
                "Name": "tomcat-net-01",
                "EndpointID": "06af7ff9574bc1ffbdb2c9d080b26acd9c80f0e79647989cc44b5ac51e36cd15",
                "MacAddress": "02:42:c0:a8:00:02",
                "IPv4Address": "192.168.0.2/16",
                "IPv6Address": ""
            },
            "93026acd906c200b91fdcde6001bcedb146f65760ef62deea5366d0208a1f4c9": {
                "Name": "tomcat-net-02",
                "EndpointID": "0d045872bc8faac45a6de5f32ecee379060afc0b844da54b2d12fe555c830356",
                "MacAddress": "02:42:c0:a8:00:03",
                "IPv4Address": "192.168.0.3/16",
                "IPv6Address": ""
            },
            "a5ee74a9b869c0ff43f4dba87326ee25c4831c552b37040569df9561ed24392f": {
                "Name": "tomcat01",
                "EndpointID": "ed5c463ce8178cd321c101c3d71e70a77ba8f10f21758e2bd158b90a9a3c0b3a",
                "MacAddress": "02:42:c0:a8:00:04",
                "IPv4Address": "192.168.0.4/16",
                "IPv6Address": ""
            }
        },
        "Options": {},
        "Labels": {}
    }
]
```

Inspect the network configuration of tomcat01:

```
[root@localhost ~]# docker ps
CONTAINER ID   IMAGE     COMMAND             CREATED         STATUS         PORTS                                         NAMES
a5ee74a9b869   tomcat    "catalina.sh run"   4 minutes ago   Up 4 minutes   0.0.0.0:49155->8080/tcp, :::49155->8080/tcp   tomcat01
[root@localhost ~]# docker inspect a5ee74a9b869
...
            "Networks": {
                "bridge": {
                    "IPAMConfig": null,
                    "Links": null,
                    "Aliases": null,
                    "NetworkID": "a6bb2c9c848bbe6fe44e8ce247e6433977757561990fe6013cbd93168317db46",
                    "EndpointID": "109f4b17faa433da3582889d0df43a1cd3aff293c440530f452c7299070fa123",
                    "Gateway": "172.17.0.1",
                    "IPAddress": "172.17.0.2",
                    "IPPrefixLen": 16,
                    "IPv6Gateway": "",
                    "GlobalIPv6Address": "",
                    "GlobalIPv6PrefixLen": 0,
                    "MacAddress": "02:42:ac:11:00:02",
                    "DriverOpts": null
                },
                "mynet": {
                    "IPAMConfig": {},
                    "Links": null,
                    "Aliases": [
                        "a5ee74a9b869"
                    ],
                    "NetworkID": "cdaae5717f81fd58e4694d5be31cabd480889fbfb57077b105c6045b96e651ca",
                    "EndpointID": "ed5c463ce8178cd321c101c3d71e70a77ba8f10f21758e2bd158b90a9a3c0b3a",
                    "Gateway": "192.168.0.1",
                    "IPAddress": "192.168.0.4",
                    "IPPrefixLen": 16,
                    "IPv6Gateway": "",
                    "GlobalIPv6Address": "",
                    "GlobalIPv6PrefixLen": 0,
                    "MacAddress": "02:42:c0:a8:00:04",
                    "DriverOpts": {}
                }
...
```

The network configuration of tomcat01 now includes mynet as well: one container with two IP addresses, one on each network.

Connectivity test: tomcat01 ping tomcat-net-01

```
[root@localhost ~]# docker exec -it tomcat01 ping tomcat-net-01
PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.063 ms
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.061 ms
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=3 ttl=64 time=0.058 ms
```

Success.

Conclusion: to reach containers on another network, connect the container to that network with docker network connect.

<a name="Sf5lr"></a>

## Hands-on: deploying a Redis cluster

Shell scripts

Create the network:

```
docker network create redis --subnet 172.38.0.0/16
```

Generate six Redis configurations with a script:

```
for port in $(seq 1 6); do
  # One config directory per node
  mkdir -p /mydata/redis/node-${port}/conf
  touch /mydata/redis/node-${port}/conf/redis.conf
  # ${port} is expanded inside the heredoc, so each node announces its own IP
  cat << EOF >> /mydata/redis/node-${port}/conf/redis.conf
port 6379
bind 0.0.0.0
cluster-enabled yes
cluster-config-file nodes.conf
cluster-node-timeout 5000
cluster-announce-ip 172.38.0.1${port}
cluster-announce-port 6379
cluster-announce-bus-port 16379
appendonly yes
EOF
done
```

Start six Redis containers with a script:

```
for port in $(seq 1 6); do
  docker run -p 637${port}:6379 -p 1637${port}:16379 --name redis-${port} \
    -v /mydata/redis/node-${port}/data:/data \
    -v /mydata/redis/node-${port}/conf/redis.conf:/etc/redis/redis.conf \
    -d --net redis --ip 172.38.0.1${port} redis redis-server /etc/redis/redis.conf
done
```
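Both loops derive every name, address and port from the single loop index. The sketch below prints that mapping and assembles the endpoint list that is later passed to `redis-cli --cluster create`. It runs no Docker commands, and `node_plan` and `cluster_endpoints` are illustrative names rather than part of the original scripts.

```shell
# Print "name ip host_port bus_port" for node N, following the naming scheme of the loops above.
node_plan() {
  echo "redis-$1 172.38.0.1$1 637$1 1637$1"
}

# Build the space-separated endpoint list for the first N nodes.
# The 172.38.0.1N pattern only yields valid addresses for N from 1 to 9.
cluster_endpoints() (
  list=
  for n in $(seq 1 "$1"); do
    list="$list${list:+ }172.38.0.1$n:6379"
  done
  echo "$list"
)

for port in $(seq 1 6); do
  node_plan "$port"
done
cluster_endpoints 6
```

Every node listens on 6379 inside its container. Only the published host ports (6371 to 6376 and 16371 to 16376) differ, and the cluster itself talks over the 172.38.0.x addresses on the redis network.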

Enter a Redis container and create the cluster:

```
[root@localhost /]# docker exec -it redis-1 /bin/bash
root@3c25a497c8cb:/data# redis-cli --cluster create 172.38.0.11:6379 172.38.0.12:6379 172.38.0.13:6379 172.38.0.14:6379 172.38.0.15:6379 172.38.0.16:6379 --cluster-replicas 1

Performing hash slots allocation on 6 nodes...
Master[0] -> Slots 0 - 5460
Master[1] -> Slots 5461 - 10922
Master[2] -> Slots 10923 - 16383
Adding replica 172.38.0.15:6379 to 172.38.0.11:6379
Adding replica 172.38.0.16:6379 to 172.38.0.12:6379
Adding replica 172.38.0.14:6379 to 172.38.0.13:6379
M: 058e7b9db9b1dda112d8659de7a208aad0c947cf 172.38.0.11:6379
   slots:[0-5460] (5461 slots) master
M: be64677a8e577c53a118879f2cd9f962e9c17c01 172.38.0.12:6379
   slots:[5461-10922] (5462 slots) master
M: 5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 172.38.0.13:6379
   slots:[10923-16383] (5461 slots) master
S: ea10a97136edd6278af10e8e49fb7b0881143464 172.38.0.14:6379
   replicates 5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60
S: 537c52d697324b5e12767da5f0cf7f26ba0818d9 172.38.0.15:6379
   replicates 058e7b9db9b1dda112d8659de7a208aad0c947cf
S: 9852897ae0abb79af894d0614ecc87476d584934 172.38.0.16:6379
   replicates be64677a8e577c53a118879f2cd9f962e9c17c01
Can I set the above configuration? (type 'yes' to accept): yes
Nodes configuration updated
Assign a different config epoch to each node
Sending CLUSTER MEET messages to join the cluster
Waiting for the cluster to join
.
Performing Cluster Check (using node 172.38.0.11:6379)
M: 058e7b9db9b1dda112d8659de7a208aad0c947cf 172.38.0.11:6379
   slots:[0-5460] (5461 slots) master
   1 additional replica(s)
M: 5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 172.38.0.13:6379
   slots:[10923-16383] (5461 slots) master
   1 additional replica(s)
M: be64677a8e577c53a118879f2cd9f962e9c17c01 172.38.0.12:6379
   slots:[5461-10922] (5462 slots) master
   1 additional replica(s)
S: 537c52d697324b5e12767da5f0cf7f26ba0818d9 172.38.0.15:6379
   slots: (0 slots) slave
   replicates 058e7b9db9b1dda112d8659de7a208aad0c947cf
S: ea10a97136edd6278af10e8e49fb7b0881143464 172.38.0.14:6379
   slots: (0 slots) slave
   replicates 5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60
S: 9852897ae0abb79af894d0614ecc87476d584934 172.38.0.16:6379
   slots: (0 slots) slave
   replicates be64677a8e577c53a118879f2cd9f962e9c17c01
[OK] All nodes agree about slots configuration.
Check for open slots...
Check slots coverage...
[OK] All 16384 slots covered.
```

View the cluster's node information:

```
root@3c25a497c8cb:/data# redis-cli -c
127.0.0.1:6379> cluster info
cluster_state:ok
cluster_slots_assigned:16384
cluster_slots_ok:16384
cluster_slots_pfail:0
cluster_slots_fail:0
cluster_known_nodes:6
cluster_size:3
cluster_current_epoch:6
cluster_my_epoch:1
cluster_stats_messages_ping_sent:607
cluster_stats_messages_pong_sent:614
cluster_stats_messages_sent:1221
cluster_stats_messages_ping_received:609
cluster_stats_messages_pong_received:607
cluster_stats_messages_meet_received:5
cluster_stats_messages_received:1221
127.0.0.1:6379> cluster nodes
5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 172.38.0.13:6379@16379 master - 0 1623728651000 3 connected 10923-16383
be64677a8e577c53a118879f2cd9f962e9c17c01 172.38.0.12:6379@16379 master - 0 1623728651558 2 connected 5461-10922
537c52d697324b5e12767da5f0cf7f26ba0818d9 172.38.0.15:6379@16379 slave 058e7b9db9b1dda112d8659de7a208aad0c947cf 0 1623728652000 1 connected
ea10a97136edd6278af10e8e49fb7b0881143464 172.38.0.14:6379@16379 slave 5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 0 1623728651000 3 connected
9852897ae0abb79af894d0614ecc87476d584934 172.38.0.16:6379@16379 slave be64677a8e577c53a118879f2cd9f962e9c17c01 0 1623728652582 2 connected
058e7b9db9b1dda112d8659de7a208aad0c947cf 172.38.0.11:6379@16379 myself,master - 0 1623728652000 1 connected 0-5460
```

Let's try it out: if a master node goes down, can its slave take over?

Set a key-value pair with set a b:

```
127.0.0.1:6379> set a b
-> Redirected to slot [15495] located at 172.38.0.13:6379
OK
```

The key-value pair was stored on the redis-3 node, which is the one at 172.38.0.13:6379.
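The slot number in the redirect is not arbitrary: Redis Cluster maps a key to CRC16(key) mod 16384, using the XMODEM variant of CRC16. The sketch below recomputes it in plain shell for a key without a `{hash tag}` (hash tags are deliberately not handled here). The function names are illustrative; on a live node, `CLUSTER KEYSLOT a` reports the same value.

```shell
# CRC16 (XMODEM variant): polynomial 0x1021, initial value 0, processed bit by bit.
crc16() (
  crc=0
  for byte in $(printf '%s' "$1" | od -An -v -tu1); do
    crc=$(( crc ^ (byte << 8) ))
    i=0
    while [ "$i" -lt 8 ]; do
      if [ $(( crc & 0x8000 )) -ne 0 ]; then
        crc=$(( ((crc << 1) ^ 0x1021) & 0xFFFF ))
      else
        crc=$(( (crc << 1) & 0xFFFF ))
      fi
      i=$(( i + 1 ))
    done
  done
  echo "$crc"
)

# Slot for a key that contains no {hash tag}.
key_slot() {
  echo $(( $(crc16 "$1") % 16384 ))
}

key_slot a   # prints 15495
```

Slot 15495 lies in the range 10923-16383, which the cluster assigned to 172.38.0.13, so the client was redirected there.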

From outside the container, stop redis-3 to simulate a failure:

```
[root@localhost ~]# docker stop redis-3
redis-3
```

Back in the cluster, run get a and see whether we still get b:

```
root@3c25a497c8cb:/data# redis-cli -c
127.0.0.1:6379> get a
-> Redirected to slot [15495] located at 172.38.0.14:6379
"b"
```

redis-4, the slave of redis-3, has taken over. Check the current node status:

```
172.38.0.14:6379> cluster nodes
058e7b9db9b1dda112d8659de7a208aad0c947cf 172.38.0.11:6379@16379 master - 0 1623729036000 1 connected 0-5460
9852897ae0abb79af894d0614ecc87476d584934 172.38.0.16:6379@16379 slave be64677a8e577c53a118879f2cd9f962e9c17c01 0 1623729035797 2 connected
537c52d697324b5e12767da5f0cf7f26ba0818d9 172.38.0.15:6379@16379 slave 058e7b9db9b1dda112d8659de7a208aad0c947cf 0 1623729036000 1 connected
be64677a8e577c53a118879f2cd9f962e9c17c01 172.38.0.12:6379@16379 master - 0 1623729036826 2 connected 5461-10922
5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 172.38.0.13:6379@16379 master,fail - 1623728900498 1623728898458 3 connected
ea10a97136edd6278af10e8e49fb7b0881143464 172.38.0.14:6379@16379 myself,master - 0 1623729036000 7 connected 10923-16383
```
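With more nodes this listing gets hard to scan by eye. A small sketch that filters `cluster nodes` output for nodes flagged as failed, assuming the usual field order (id, address, flags, ...); `failed_nodes` is a made-up helper, and the sample lines are copied from the output above.

```shell
# Print "address flags" for every node whose flags field contains the "fail" flag.
# "fail?" (a node that is only suspected of failing) is intentionally not matched.
failed_nodes() {
  awk '$3 ~ /(^|,)fail(,|$)/ { print $2, $3 }'
}

failed_nodes <<'EOF'
be64677a8e577c53a118879f2cd9f962e9c17c01 172.38.0.12:6379@16379 master - 0 1623729036826 2 connected 5461-10922
5dcbb44dff5dbf6928ae735777ba0e07ff9f2a60 172.38.0.13:6379@16379 master,fail - 1623728900498 1623728898458 3 connected
ea10a97136edd6278af10e8e49fb7b0881143464 172.38.0.14:6379@16379 myself,master - 0 1623729036000 7 connected 10923-16383
EOF
```

Against a running cluster, the same filter could be fed from something like `docker exec redis-1 redis-cli cluster nodes | failed_nodes`.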

The Redis cluster is now set up.
