1. Introduction to Operators

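An Operator is a pattern introduced by CoreOS: a controller that extends the Kubernetes API for a specific application. Operators are used to create, configure, and manage complex stateful applications.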

Prometheus Operator is an open-source controller from CoreOS for managing Prometheus on a Kubernetes cluster. Its purpose is to simplify deploying, managing, and running Prometheus and Alertmanager clusters on Kubernetes.

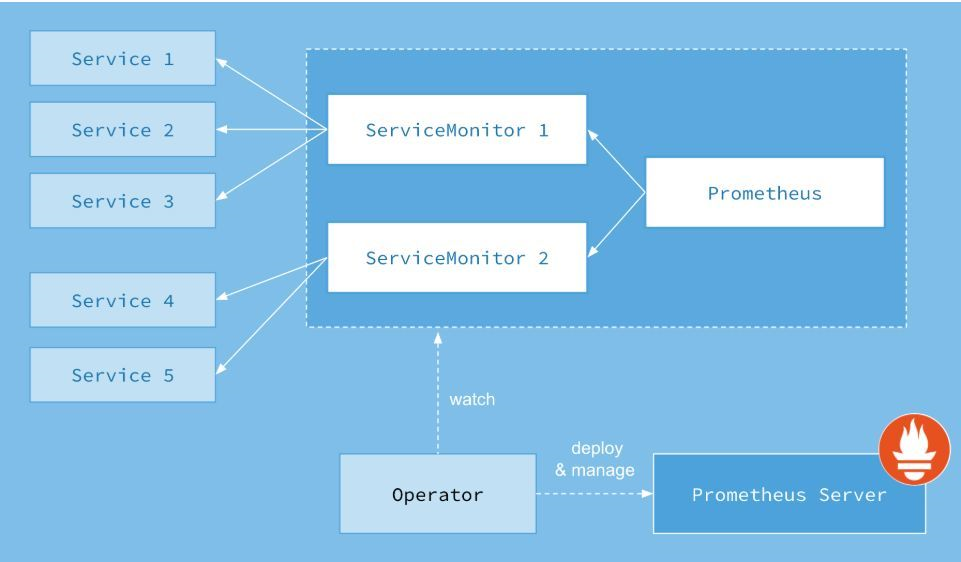

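The architecture diagram above consists of the following components:

Operator: The Operator deploys and manages Prometheus Server based on custom resources (Custom Resource Definitions, CRDs), and watches events on those custom resources so it can react to changes. It is the control center of the whole system.

Prometheus: The Prometheus resource declaratively describes the desired state of a Prometheus deployment.

Prometheus Server: The Prometheus Server cluster that the Operator deploys according to the contents of the Prometheus custom resource. These custom resources can be thought of as the StatefulSets that manage the Prometheus Server cluster.

ServiceMonitor: ServiceMonitor is also a custom resource. It describes a list of targets to be monitored by Prometheus. It selects Service Endpoints by label, and Prometheus Server scrapes metrics through the selected Services.

Service: Service resources front the Pods in the cluster that expose metrics, so that ServiceMonitors can select them and Prometheus Server can scrape them. Put simply, they are the objects Prometheus monitors, such as the Node Exporter Service and MySQL Exporter Service covered earlier.

Alertmanager: Alertmanager is also a custom resource type; the Operator deploys an Alertmanager cluster according to the resource's description.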
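The Operator's "watch events and react" behaviour is a reconcile loop: read the desired state from a custom resource, compare it with what actually exists, and act on the difference. The sketch below is a toy model of that idea under loud assumptions: a plain dict stands in for the API server, `reconcile` is a hypothetical function, and only the replica count of the Prometheus StatefulSet is reconciled. It is not the Operator's actual code.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for the API server: StatefulSet name -> replica count."""
    statefulsets: dict = field(default_factory=dict)


def reconcile(prometheus_cr: dict, cluster: Cluster) -> str:
    """Drive the cluster toward the state declared in a Prometheus-like CR.

    Returns a short description of the action taken.
    """
    # The StatefulSet is named after the CR (pods show up as prometheus-k8s-0, -1).
    name = "prometheus-" + prometheus_cr["metadata"]["name"]
    desired = prometheus_cr["spec"].get("replicas", 1)
    actual = cluster.statefulsets.get(name)

    if actual is None:
        cluster.statefulsets[name] = desired
        return f"created {name} with {desired} replicas"
    if actual != desired:
        cluster.statefulsets[name] = desired
        return f"scaled {name} from {actual} to {desired}"
    return f"{name} already up to date"


if __name__ == "__main__":
    cr = {"metadata": {"name": "k8s"}, "spec": {"replicas": 2}}
    cluster = Cluster()
    print(reconcile(cr, cluster))   # first event: nothing exists yet
    cr["spec"]["replicas"] = 3      # a user edits the CR
    print(reconcile(cr, cluster))
    print(reconcile(cr, cluster))   # a repeated event changes nothing
```

Running the reconcile function again on an unchanged resource is a no-op; that idempotence is what lets a controller safely react to every event.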

2. Deploying the Operator

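2.1 Questions to answer

1. For stateful applications, how do we control which machines they are installed on?

2. How is a local PV configured?

3. How is the global Alertmanager alerting configuration file set up?

4. How are metrics scraped from the relevant services?

5. How is high availability set up, and how are the service IPs and ports exposed?

6. How do we call the relevant APIs to add, delete, and update alert rules?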

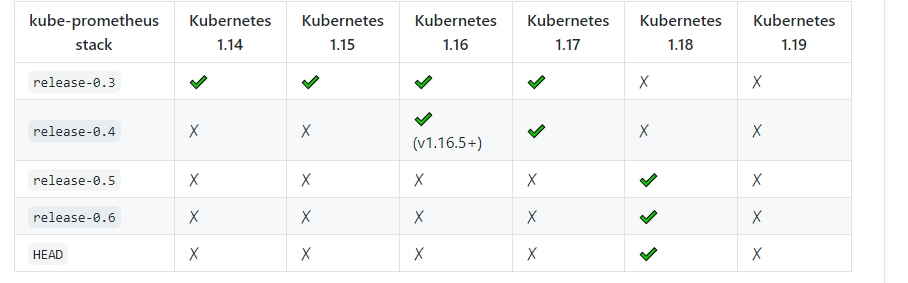

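2.3 Choosing a version

Check the official repository, https://github.com/prometheus-operator/kube-prometheus, and find the release that is compatible with your Kubernetes cluster version.

For our cluster that is release-0.2, so we use release-0.2: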

wget https://github.com/coreos/prometheus-operator/archive/v0.2.0.tar.gz

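2.4 Deployment

2.4.1 Deploying the Operator & Adapter

Prerequisites

Check the node's taints and, if necessary, remove the master taint so that Pods can be scheduled on the node:

    # Check taints
    kubectl describe node master-01.aipaas.japan | grep Taints
    # Remove the master taint (the trailing "-" removes it)
    kubectl taint node master-1 node-role.kubernetes.io/master-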

Sort the manifests into one directory per component:

    mkdir -pv operator node-exporter alertmanager grafana kube-state-metrics prometheus-k8s serviceMonitor adapter ingress local-pv
    mv *-serviceMonitor* serviceMonitor/
    mv 0prometheus-operator* operator/
    mv grafana-* grafana/
    mv kube-state-metrics-* kube-state-metrics/
    mv alertmanager-* alertmanager/
    mv node-exporter-* node-exporter/
    mv prometheus-adapter* adapter/
    mv prometheus-* prometheus-k8s/

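Installing the Operator and the Adapter

Adapter

Here we use Prometheus-Adapter, a Kubernetes API extension that serves the Kubernetes resource metrics API and the custom metrics API from user-defined Prometheus queries.

Next we install Prometheus-Adapter into the cluster and add a rule that tracks the requests of each Pod. See the official documentation for how rules are defined; each rule consists of roughly four parts:

Discovery: how the Adapter finds all the Prometheus metrics for the rule.

Association: how the Adapter determines which Kubernetes resource a given metric is associated with.

Naming: how the Adapter exposes the metric in the custom metrics API.

Querying: how a request for a particular metric on one or more Kubernetes objects is translated into a query to Prometheus.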
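To make the four parts concrete, the sketch below models one rule as a Python dict laid out like a prometheus-adapter rule (seriesQuery, resources, name, metricsQuery) and simulates the Naming and Querying steps. The rule itself (http_requests_total exposed as http_requests_per_second) is a hypothetical example, and the two functions are a simplified simulation of what the Adapter does, not its real code; in particular the real label matchers it generates may differ.

```python
import re

# Hypothetical rule, laid out like one entry of a prometheus-adapter config.
RULE = {
    # Discovery: which Prometheus series this rule applies to
    "seriesQuery": 'http_requests_total{namespace!="",pod!=""}',
    # Association: Prometheus label -> Kubernetes resource
    "resources": {"overrides": {"namespace": {"resource": "namespace"},
                                "pod": {"resource": "pod"}}},
    # Naming: how the metric is exposed in the custom metrics API
    "name": {"matches": r"^(.*)_total$", "as": "${1}_per_second"},
    # Querying: template rendered into PromQL for each API request
    "metricsQuery": "sum(rate(<<.Series>>{<<.LabelMatchers>>}[2m])) by (<<.GroupBy>>)",
}


def exposed_name(rule: dict, series: str):
    """Apply the Naming section; return None if the series does not match."""
    pattern = rule["name"]["matches"]
    if not re.search(pattern, series):
        return None
    # Translate Go-style "${1}" group references into Python's "\g<1>" syntax.
    repl = re.sub(r"\$\{(\d+)\}", r"\\g<\1>", rule["name"]["as"])
    return re.sub(pattern, repl, series, count=1)


def build_query(rule: dict, series: str, namespace: str, pods: list) -> str:
    """Apply the Querying section for a request about some pods in a namespace."""
    matchers = f'namespace="{namespace}",pod=~"{"|".join(pods)}"'
    return (rule["metricsQuery"]
            .replace("<<.Series>>", series)
            .replace("<<.LabelMatchers>>", matchers)
            .replace("<<.GroupBy>>", "pod"))
```

For example, `build_query(RULE, "http_requests_total", "default", ["web-1", "web-2"])` renders a per-pod request rate over the last two minutes, summed by pod.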

    cd operator/
    kubectl apply -f ./
    # Verify that the CRDs were created
    [root@hf-aipaas-172-31-243-137 operator]# kubectl get crd -n monitoring
    NAME                                    CREATED AT
    alertmanagers.monitoring.coreos.com     2020-08-12T13:47:04Z
    podmonitors.monitoring.coreos.com       2020-08-12T13:47:04Z
    prometheuses.monitoring.coreos.com      2020-08-12T13:47:04Z
    prometheusrules.monitoring.coreos.com   2020-08-12T13:47:05Z
    servicemonitors.monitoring.coreos.com   2020-08-12T13:47:05Z
    # Then install the Adapter
    cd ../adapter
    kubectl apply -f ./

2.4.2 Deploying the local PV

Create a local-storage PersistentVolume for Prometheus to store its data on. The directory given as the local path must already exist on the node named in nodeAffinity.

    cd local-pv

PV configuration:

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: prometheus-pv-243-137-k8s
    spec:
      capacity:
        storage: 10Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage
      local:
        path: /prometheus-k8s
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - hf-aipaas-172-31-243-137

Apply it:

    kubectl apply -f ./
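With replicas: 2, each Prometheus Pod gets its own PersistentVolumeClaim, and a local PV can only serve Pods on the node it is pinned to, so in practice you need one PV per node that runs a replica. The sketch below generates such PVs as JSON (kubectl apply accepts JSON as well as YAML). The node list and the PV naming scheme are hypothetical; the field values mirror the YAML above.

```python
import json


def local_pv(node: str, name: str, size: str = "10Gi",
             path: str = "/prometheus-k8s") -> dict:
    """Build a local PersistentVolume pinned to one node (same fields as the YAML above)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": size},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteOnce"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "local-storage",
            "local": {"path": path},
            # A local volume must declare which node holds the directory.
            "nodeAffinity": {"required": {"nodeSelectorTerms": [{
                "matchExpressions": [{
                    "key": "kubernetes.io/hostname",
                    "operator": "In",
                    "values": [node],
                }]
            }]}},
        },
    }


if __name__ == "__main__":
    # Hypothetical: one PV per node that will run a Prometheus replica.
    nodes = ["master-02.aipaas.japan", "master-03.aipaas.japan"]
    pvs = {"apiVersion": "v1", "kind": "List",
           "items": [local_pv(n, f"prometheus-pv-{i}-k8s") for i, n in enumerate(nodes)]}
    print(json.dumps(pvs, indent=2))  # e.g. python gen_pv.py | kubectl apply -f -
```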

2.4.3 Deploying the Prometheus service

Prometheus configuration, prometheus-prometheus.yaml:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-k8s
          namespace: monitoring
          port: alert
      retention: 30d                        # how long to keep data
      storage:                              # use the local-storage PV from 2.4.2
        volumeClaimTemplate:
          spec:
            storageClassName: local-storage # must match the storageClassName of the local PV
            resources:
              requests:
                storage: 10Gi               # requested size
      baseImage: quay.io/prometheus/prometheus
      nodeSelector:
        prometheus: deployed                # run only on nodes with this label
      podMonitorSelector: {}
      replicas: 2                           # run two Prometheus instances
      evaluationInterval: 15s
      #secrets:                             # needed for etcd monitoring
      #- etcd-certs
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      ruleNamespaceSelector: {}
      enableAdminAPI: true
      query:
        maxSamples: 5000000000
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      #additionalScrapeConfigs:             # extra (federation) scrape configs
      #  name: metrics-prometheus-additional-configs
      #  key: prometheus-janus.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector:
        matchLabels:
          monitor: k8s                      # label a ServiceMonitor must carry to be scraped
      version: v2.14.0

Label the nodes

    # Add labels
    kubectl label nodes master-02.aipaas.japan prometheus=deployed
    kubectl label nodes master-03.aipaas.japan prometheus=deployed
    kubectl label nodes master-03.aipaas.japan monitor=k8s
    kubectl label nodes master-02.aipaas.japan monitor=k8s
    kubectl label nodes master-01.aipaas.japan monitor=k8s
    kubectl label nodes master-02.aipaas.japan monitoring=operator
    kubectl label nodes master-03.aipaas.japan monitoring=operator
    # View the labels
    kubectl describe node hf-aipaas-172-31-243-137
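A nodeSelector schedules a Pod only onto nodes that carry every listed key/value label. The sketch below replays the label commands above into a dict and checks which nodes each selector in this document selects: prometheus: deployed yields two nodes, matching replicas: 2, and monitoring: operator is what kube-state-metrics uses later. Note that serviceMonitorSelector in the Prometheus resource matches labels on ServiceMonitor objects, not on nodes. `matching_nodes` is an illustrative helper, not a Kubernetes API.

```python
# Node labels as set by the `kubectl label nodes ...` commands above.
NODE_LABELS = {
    "master-01.aipaas.japan": {"monitor": "k8s"},
    "master-02.aipaas.japan": {"prometheus": "deployed", "monitor": "k8s",
                               "monitoring": "operator"},
    "master-03.aipaas.japan": {"prometheus": "deployed", "monitor": "k8s",
                               "monitoring": "operator"},
}


def matching_nodes(node_selector: dict, node_labels: dict) -> list:
    """Return the nodes whose labels contain every key/value in node_selector."""
    return sorted(
        node for node, labels in node_labels.items()
        if all(labels.get(k) == v for k, v in node_selector.items())
    )


if __name__ == "__main__":
    # nodeSelector of the Prometheus resource (replicas: 2)
    print(matching_nodes({"prometheus": "deployed"}, NODE_LABELS))
    # nodeSelector of kube-state-metrics
    print(matching_nodes({"monitoring": "operator"}, NODE_LABELS))
```

On a live cluster the same check is `kubectl get nodes -l prometheus=deployed`.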

Install Prometheus

    cd prometheus-k8s
    kubectl apply -f ./
    # Check the status
    [root@hf-aipaas-172-31-243-137 prometheus-k8s]# kubectl get pod -n monitoring
    NAME                                   READY   STATUS    RESTARTS   AGE
    prometheus-k8s-0                       3/3     Running   0          28s
    prometheus-k8s-1                       3/3     Running   0          46s
    prometheus-operator-789d9c99c7-mtt78   1/1     Running   0          26m
    [root@hf-aipaas-172-31-243-137 prometheus-k8s]# kubectl get svc -n monitoring
    NAME                  TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    prometheus-k8s        ClusterIP   10.99.144.165   <none>        9090/TCP   3m34s
    prometheus-operated   ClusterIP   None            <none>        9090/TCP   3m34s
    prometheus-operator   ClusterIP   None            <none>        8080/TCP   26m

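2.4.4 Deploying the Alertmanager service

Edit alertmanager-config-HA1.yaml.

ConfigMap

A Pod can consume a ConfigMap in three ways:

Expose the ConfigMap's data as environment variables

Pass the ConfigMap's data as command-line arguments

Mount the ConfigMap as files or a directory through a Volume

The Alertmanager Deployment in this section uses the third method and mounts the ConfigMap at /etc/alertmanager.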

    apiVersion: v1
    data:
      alertmanager.yml: |
        global:                                 # global settings
          smtp_smarthost: 'mail.iflytek.com:25'
          smtp_from: 'ifly_sre_monitor@iflytek.com'
          smtp_auth_username: 'ifly_sre_monitor@iflytek.com'
          smtp_auth_password: '<smtp-password>'
          smtp_require_tls: false
        templates:
        - '/etc/alertmanager/template/*.tmpl'   # notification templates
        route:
          group_by: ['alertname']
          group_wait: 60s
          group_interval: 5m
          repeat_interval: 3h
          receiver: ycli15
        receivers:
        - name: 'ycli15'
          email_configs:
          - to: 'ycli15@iflytek.com'
            send_resolved: true
          webhook_configs:                      # alert webhook
          - url: 'http://172.21.210.98:80822/alarm'
          #- url: 'http://prom-alarm-dx.xfyun.cn/alarm'
            send_resolved: true
    kind: ConfigMap
    metadata:
      name: alertmanager-k8s-ha1-config
      namespace: monitoring
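The route above groups alerts by alertname: alerts that share an alertname are batched into one notification to the ycli15 receiver. group_wait (60s) is how long Alertmanager waits before sending the first notification for a new group, group_interval (5m) how long it waits before notifying about new alerts added to that group, and repeat_interval (3h) how long before re-sending an unchanged notification. The sketch below models only the grouping step; the alert names are hypothetical and `group_alerts` is not part of Alertmanager.

```python
from collections import defaultdict


def group_alerts(alerts: list, group_by: list) -> dict:
    """Group alerts roughly the way a route's group_by does (simplified).

    Each alert is a dict of labels; the group key is the tuple of values of
    the group_by labels, with missing labels treated as empty strings.
    """
    groups = defaultdict(list)
    for labels in alerts:
        key = tuple(labels.get(name, "") for name in group_by)
        groups[key].append(labels)
    return dict(groups)


if __name__ == "__main__":
    alerts = [
        {"alertname": "ocr-pod-restart", "pod": "ocr-1"},
        {"alertname": "ocr-pod-restart", "pod": "ocr-2"},
        {"alertname": "NodeDown", "instance": "10.0.0.5"},
    ]
    for key, members in group_alerts(alerts, ["alertname"]).items():
        # each group would become one notification to the route's receiver
        print(key, len(members))
```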

Edit alertmanager-deploy-HA1.yaml:

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        name: alertmanager-k8s-ha1-deployment
      name: alertmanager-k8s-ha1
      namespace: monitoring
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alertmanager-k8s-ha1
      template:
        metadata:
          labels:
            app: alertmanager-k8s-ha1
        spec:
          tolerations:
          - key: "node-role.kubernetes.io/master"
            operator: "Equal"
            value: ""
            effect: "NoSchedule"
          nodeSelector:
            kubernetes.io/hostname: hf-aipaas-172-31-243-137
          hostNetwork: true
          dnsPolicy: Default
          containers:
          - image: quay.io/prometheus/alertmanager:v0.20.0
            name: alertmanager-k8s-ha1
            imagePullPolicy: IfNotPresent
            command:
            - "/bin/alertmanager"
            args:
            - "--config.file=/etc/alertmanager/alertmanager.yml"
            - "--storage.path=/data"
            - "--web.listen-address=:9095"
            - "--cluster.listen-address=0.0.0.0:8003"
            - "--cluster.peer=172.31.243.137:8003"
            - "--cluster.peer-timeout=30s"
            - "--cluster.gossip-interval=50ms"
            - "--cluster.pushpull-interval=2s"
            - "--log.level=debug"
            ports:
            - containerPort: 9095
              name: web
              protocol: TCP
            - containerPort: 8003
              name: mesh
              protocol: TCP
            volumeMounts:
            - mountPath: "/etc/alertmanager"
              name: config-alert
            - mountPath: "/data"
              name: storage
          volumes:
          - name: config-alert
            configMap:                # the ConfigMap defined in alertmanager-config-HA1.yaml
              name: alertmanager-k8s-ha1-config
          - name: storage
            emptyDir: {}
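Running two Alertmanager instances as an HA pair relies on the gossip mesh configured by the --cluster.* flags: each instance needs the address of at least one peer on the mesh port (8003 here). Because the Deployment uses hostNetwork, the two instances must run on different nodes, otherwise ports 9095 and 8003 would collide. The sketch below generates the argument list for each instance, listing every other instance as a peer; 172.31.243.138 is a hypothetical address for the second node, and `alertmanager_args` is an illustrative helper, not part of any tool.

```python
def alertmanager_args(self_ip: str, all_ips: list, web_port: int = 9095,
                      mesh_port: int = 8003) -> list:
    """Build container args for one Alertmanager HA instance (mirrors the YAML above).

    Every other instance is passed as a --cluster.peer so the gossip mesh can form.
    """
    args = [
        "--config.file=/etc/alertmanager/alertmanager.yml",
        "--storage.path=/data",
        f"--web.listen-address=:{web_port}",
        f"--cluster.listen-address=0.0.0.0:{mesh_port}",
    ]
    args += [f"--cluster.peer={ip}:{mesh_port}" for ip in all_ips if ip != self_ip]
    args += [
        "--cluster.peer-timeout=30s",
        "--cluster.gossip-interval=50ms",
        "--cluster.pushpull-interval=2s",
    ]
    return args


if __name__ == "__main__":
    # 172.31.243.137 comes from the document; 172.31.243.138 is hypothetical.
    ips = ["172.31.243.137", "172.31.243.138"]
    for ip in ips:
        print(ip, [a for a in alertmanager_args(ip, ips) if a.startswith("--cluster.peer=")])
```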

Deploy Alertmanager

    cd alertmanager-HA
    kubectl apply -f ./
    # Check the Pods
    [root@hf-aipaas-172-31-243-137 manifests]# kubectl get pod -n monitoring
    NAME                                    READY   STATUS    RESTARTS   AGE
    alertmanager-k8s-ha1-6445cd7944-tnrt9   1/1     Running   0          26s
    alertmanager-k8s-ha2-f98cbd597-hjrq7    1/1     Running   0          26s
    prometheus-k8s-0                        3/3     Running   0          9m21s
    prometheus-k8s-1                        3/3     Running   0          9m39s
    prometheus-operator-789d9c99c7-mtt78    1/1     Running   0          35m

2.4.5 kube-state-metrics

kube-state-metrics collects data about the resource objects in the Kubernetes cluster.

Deploy the kube-state-metrics service.

Note these settings in kube-state-metrics-deployment.yaml:

          name: kube-state-metrics
          resources:
            limits:
              cpu: 100m
              memory: 150Mi
            requests:
              cpu: 100m
              memory: 150Mi
        nodeSelector:            # node label to schedule onto
          monitoring: operator
        securityContext:
          runAsNonRoot: true
          runAsUser: 65534
        serviceAccountName: kube-state-metrics

Then deploy and check:

    cd kube-state-metrics
    kubectl apply -f ./
    # Check
    [root@hf-aipaas-172-31-243-137 kube-state-metrics]# kubectl get svc -n monitoring
    NAME                  TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
    alertmanager-k8s      ClusterIP   None            <none>        9095/TCP            10m
    kube-state-metrics    ClusterIP   None            <none>        8443/TCP,9443/TCP   2m43s
    prometheus-k8s        ClusterIP   10.99.144.165   <none>        9090/TCP            22m
    prometheus-operated   ClusterIP   None            <none>        9090/TCP            22m
    prometheus-operator   ClusterIP   None            <none>        8080/TCP            44m
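kube-state-metrics publishes object state in the Prometheus text exposition format, one sample per line, for example `kube_pod_container_status_restarts_total{namespace="default",pod="ocr-1"} 3`. The sketch below parses such sample lines for quick inspection or tests. It is deliberately simplified (no escaped quotes inside label values, no timestamps) and is not a replacement for a full exposition-format parser.

```python
import re

# name{label="value",...} value   (simplified: no escapes, no timestamp)
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_sample(line: str):
    """Parse one sample line into (name, labels, value).

    Returns None for comments (# HELP / # TYPE) and blank lines.
    """
    line = line.strip()
    if not line or line.startswith("#"):
        return None
    m = SAMPLE_RE.match(line)
    if m is None:
        raise ValueError(f"unsupported sample line: {line!r}")
    name, label_str, value = m.groups()
    labels = dict(LABEL_RE.findall(label_str or ""))
    return name, labels, float(value)
```

Usage: `parse_sample('kube_pod_info{namespace="default",pod="a",node="n1"} 1')` returns the metric name, a label dict, and the value as a float.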

2.4.6 Deploying the ServiceMonitors

A ServiceMonitor plays roughly the same role as a scrape job in the scrape_configs section of a Prometheus configuration.

    cd serviceMonitor
    kubectl apply -f ./
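The selection chain is: the Prometheus resource's serviceMonitorSelector (monitor: k8s) picks ServiceMonitor objects, and each ServiceMonitor's own selector picks Services. The Operator then turns each ServiceMonitor into a scrape job that uses Kubernetes endpoint discovery plus "keep" relabel rules. The sketch below builds a simplified version of such a job from a ServiceMonitor dict; the real generated configuration contains more relabel rules, and the job-name format here is only illustrative. The example ServiceMonitor in the test is hypothetical.

```python
import re


def sanitize(label: str) -> str:
    """Mimic how Kubernetes SD meta labels replace unsupported characters with '_'."""
    return re.sub(r"[^a-zA-Z0-9_]", "_", label)


def scrape_config_for(service_monitor: dict) -> dict:
    """Build a simplified scrape_config that behaves like the given ServiceMonitor."""
    meta, spec = service_monitor["metadata"], service_monitor["spec"]
    endpoint = spec["endpoints"][0]  # this sketch only handles the first endpoint
    # Keep only targets whose Service carries every label in the selector...
    relabel = [
        {"source_labels": [f"__meta_kubernetes_service_label_{sanitize(k)}"],
         "regex": v, "action": "keep"}
        for k, v in spec["selector"]["matchLabels"].items()
    ]
    # ...and whose endpoint port has the requested name.
    relabel.append({"source_labels": ["__meta_kubernetes_endpoint_port_name"],
                    "regex": endpoint["port"], "action": "keep"})
    config = {
        "job_name": f'{meta["namespace"]}/{meta["name"]}/0',
        # Without a namespaceSelector, a ServiceMonitor looks only in its own namespace.
        "kubernetes_sd_configs": [{"role": "endpoints",
                                   "namespaces": {"names": [meta["namespace"]]}}],
        "relabel_configs": relabel,
    }
    if "interval" in endpoint:
        config["scrape_interval"] = endpoint["interval"]
    return config
```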

2.4.7 Deploying etcd monitoring

Reference: https://www.yuque.com/nicechuan/pc096b/xsnlug

    [root@hf-aipaas-172-31-243-137 serviceMonitor]# cd etcd/
    [root@hf-aipaas-172-31-243-137 etcd]# kubectl apply -f ./

2.4.8 Deploying the Ingress

    cd ingress
    kubectl apply -f ./

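2.4.9 Adding hosts entries to access the services

Add the following entries to the hosts file (e.g. /etc/hosts) of the client machine:

    172.16.59.204 prometheus.minikube.local.com
    172.16.59.204 alertmanager.minikube.local.com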
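The hosts entries work because the ingress controller at 172.16.59.204 routes requests by their Host header. A client that cannot edit its hosts file can connect to the IP directly and set the Host header itself. The sketch below builds such an instant-query request with the standard library; `prometheus_query_request` is an illustrative helper, and nothing is sent until you call urlopen.

```python
import urllib.parse
import urllib.request


def prometheus_query_request(ingress_ip: str, host: str, promql: str) -> urllib.request.Request:
    """Build an instant-query request that reaches Prometheus through the ingress by IP.

    The explicit Host header selects the ingress rule, so no hosts entry is needed.
    """
    url = f"http://{ingress_ip}/api/v1/query?" + urllib.parse.urlencode({"query": promql})
    return urllib.request.Request(url, headers={"Host": host}, method="GET")


if __name__ == "__main__":
    req = prometheus_query_request("172.16.59.204", "prometheus.minikube.local.com", "up")
    print(req.full_url, req.get_header("Host"))
    # To actually send it: urllib.request.urlopen(req, timeout=10).read()
```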

3. Calling the Operator API

3.1 Create the ServiceAccount, roleServiceaccount.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      namespace: prometheus-k8s
      name: zprometheus

3.2 Bind the Role to the account in the namespace, roleBinding.yaml

    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: zprometheus
      namespace: prometheus-k8s
    subjects:
    - kind: ServiceAccount
      name: zprometheus
      namespace: prometheus-k8s
    roleRef:
      kind: Role
      name: zprometheus
      apiGroup: rbac.authorization.k8s.io

3.3 Define the scope and permissions of the account, role.yaml

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      namespace: prometheus-k8s    # the namespace in which the Role applies
      name: zprometheus
    rules:                         # permission rules
    - apiGroups: [""]
      resources: ["services", "endpoints"]   # core Services and Endpoints in this namespace
      verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]   # full read/write access
    - apiGroups: ["monitoring.coreos.com"]
      resources: ["prometheusrules", "servicemonitors", "alertmanagers", "prometheuses", "prometheuses/finalizers", "alertmanagers/finalizers"]   # Prometheus Operator custom resources
      verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]   # full read/write access
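A Role allows a request when any one of its rules covers the request's API group, resource, and verb; RBAC is purely additive, so there are no deny rules. The sketch below encodes the two rules above and checks a few requests. It is a simplified model (it ignores resourceNames and non-resource URLs), and `allowed` is an illustrative helper, not a Kubernetes API.

```python
# The two rules of the zprometheus Role above.
ZPROMETHEUS_RULES = [
    {"apiGroups": [""], "resources": ["services", "endpoints"],
     "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"]},
    {"apiGroups": ["monitoring.coreos.com"],
     "resources": ["prometheusrules", "servicemonitors", "alertmanagers", "prometheuses",
                   "prometheuses/finalizers", "alertmanagers/finalizers"],
     "verbs": ["get", "list", "watch", "create", "update", "patch", "delete"]},
]


def _matches(values: list, wanted: str) -> bool:
    """A rule field matches if it lists the value or the wildcard "*"."""
    return "*" in values or wanted in values


def allowed(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Simplified RBAC check: allowed if any rule covers group, resource and verb."""
    return any(
        _matches(r["apiGroups"], api_group)
        and _matches(r["resources"], resource)
        and _matches(r["verbs"], verb)
        for r in rules
    )
```

On the cluster, the authoritative check is `kubectl auth can-i create prometheusrules.monitoring.coreos.com -n prometheus-k8s --as=system:serviceaccount:prometheus-k8s:zprometheus`.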

3.4 Retrieving the Secret's token

    [root@hf-aipaas-172-31-243-137 role-zprometheus]# kubectl get secrets -n prometheus-k8s
    NAME                      TYPE                                  DATA   AGE
    default-token-bk8sn       kubernetes.io/service-account-token   3      13h
    zprometheus-token-sfnmn   kubernetes.io/service-account-token   3      7m53s
    [root@hf-aipaas-172-31-243-137 role-zprometheus]# kubectl get secret zprometheus-token-sfnmn -n prometheus-k8s -o jsonpath={.data.token} | base64 -d
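Secret data is base64-encoded, which is why the command pipes through base64 -d. The decoded token is a JWT whose payload (the second dot-separated segment, base64url without padding) names the ServiceAccount, which is a quick way to confirm you copied the right token. The sketch below does both steps from `kubectl get secret <name> -o json` output. It only decodes the payload and does not verify the signature; the helper names are illustrative.

```python
import base64
import json


def token_from_secret(secret_json: str) -> str:
    """Extract the bearer token from `kubectl get secret <name> -o json` output."""
    secret = json.loads(secret_json)
    return base64.b64decode(secret["data"]["token"]).decode()


def jwt_claims(token: str) -> dict:
    """Decode (without verifying!) the payload segment of a JWT."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore the stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))
```

For the token created above, `jwt_claims(...)["sub"]` should read `system:serviceaccount:prometheus-k8s:zprometheus`.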

3.5 Managing alert rules through the API

Python example:

    import json

    import requests


    def action_api():
        """Create a PrometheusRule through the Kubernetes API; returns (code, message)."""
        prometheusurl = ('https://172.31.243.137:6443/apis/monitoring.coreos.com/v1/'
                         'namespaces/prometheus-k8s/prometheusrules/')
        # Bearer token obtained in 3.4 (the real value is not reproduced here)
        prometheustoken = '<token from 3.4>'
        create_header = {"Authorization": "Bearer " + prometheustoken,
                         "Content-Type": "application/json"}
        rule_json = {
            "apiVersion": "monitoring.coreos.com/v1",
            "kind": "PrometheusRule",
            "metadata": {
                # must match the ruleSelector in prometheus-prometheus.yaml
                "labels": {"prometheus": "k8s", "role": "alert-rules"},
                "name": "ocr-pod-restart2",
                "namespace": "prometheus-k8s",
            },
            "spec": {
                "groups": [{
                    "name": "ocr-pod-restart",
                    "rules": [{
                        "alert": "ocr-pod-restart",
                        "annotations": {
                            "summary": "pod {{$labels.pod}} is crashing: it restarted {{ $value }} "
                                       "times in the last three minutes! Node: {{$labels.node}}"
                        },
                        "expr": "changes(kube_pod_container_status_restarts_total{namespace=\"default\"}[3m])"
                                " * on (pod) group_left(node) kube_pod_info > 0",
                        "for": "3s",
                        # label values kept as in the original (alarm group / product line names)
                        "labels": {"alarm_group": "新架构-OCR-短信-全天",
                                   "product_line": "新架构OCR",
                                   "severity": "warning"},
                    }],
                }],
            },
        }
        try:
            # verify=False skips TLS verification of the API server certificate
            res = requests.post(url=prometheusurl, data=json.dumps(rule_json),
                                headers=create_header, verify=False)
            if res.status_code != 201:
                return res.status_code, "create prometheusRule failed! the errBody is: " + str(res.json())
            return 0, "created"
        except Exception as e:
            return -2, str(e)
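Question 6 in 2.1 also asks about deleting and updating rules. Against the same collection URL, a single rule lives at .../prometheusrules/<name>: DELETE removes it, and PATCH with a JSON merge patch (Content-Type application/merge-patch+json) changes only the fields you send. Note that a merge patch replaces lists wholesale, so changing one rule's expression means sending the complete spec.groups list. The sketch below uses only the standard library; the helper names are illustrative, and `send` verifies TLS against a CA file you pass in (for example the ca.crt stored in the same token Secret) instead of disabling verification.

```python
import json
import ssl
import urllib.request
from typing import Optional

# Same collection URL as in action_api() above.
BASE = ("https://172.31.243.137:6443/apis/monitoring.coreos.com/v1/"
        "namespaces/prometheus-k8s/prometheusrules/")


def delete_rule_request(name: str, token: str) -> urllib.request.Request:
    """DELETE .../prometheusrules/<name> removes one PrometheusRule."""
    return urllib.request.Request(BASE + name, method="DELETE",
                                  headers={"Authorization": "Bearer " + token})


def patch_rule_request(name: str, token: str, patch: dict) -> urllib.request.Request:
    """PATCH with a JSON merge patch updates only the fields present in `patch`."""
    return urllib.request.Request(
        BASE + name,
        data=json.dumps(patch).encode(),
        method="PATCH",
        headers={"Authorization": "Bearer " + token,
                 "Content-Type": "application/merge-patch+json"},
    )


def send(req: urllib.request.Request, cafile: Optional[str] = None) -> int:
    """Send a request, verifying the API server against `cafile`; returns the HTTP status."""
    ctx = ssl.create_default_context(cafile=cafile)
    with urllib.request.urlopen(req, context=ctx, timeout=10) as resp:
        return resp.status
```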

View the added rule:

    [root@hf-aipaas-172-31-243-137 role-zprometheus]# kubectl get prometheusrule -n prometheus-k8s
    NAME               AGE
    ocr-pod-restart2   3s

4. API calls from AIpass

Go example:

    package main

    import (
        "fmt"
        "io"
        "net/http"
    )

    func main() {
        // PromQL instant query to run
        query := `kube_replicaset_status_ready_replicas{replicaset="calico-kube-controllers-54cd585c9c"}`
        // Address of the ingress controller (see the hosts entries above)
        queryURL := "http://172.16.59.204:80/api/v1/query"

        req, err := http.NewRequest(http.MethodGet, queryURL, nil)
        if err != nil {
            panic(err)
        }
        q := req.URL.Query()
        q.Add("query", query)
        req.URL.RawQuery = q.Encode()
        // The Host header selects the Prometheus ingress rule
        req.Host = "prometheus.minikube.local.com"

        resp, err := http.DefaultClient.Do(req)
        if err != nil {
            panic(err)
        }
        defer resp.Body.Close()

        body, err := io.ReadAll(resp.Body) // io.ReadAll requires Go 1.16+
        if err != nil {
            panic(err)
        }
        fmt.Println(string(body))
    }
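The Go program prints the raw JSON body. For an instant query, /api/v1/query returns {"status": "success", "data": {"resultType": "vector", "result": [...]}}, where each result carries a "metric" label map and a "value" pair of [unix timestamp, value as a string]. The sketch below, written in Python for consistency with the other examples, turns such a body into (labels, float) pairs; `vector_samples` is an illustrative helper and handles only the vector result type.

```python
import json


def vector_samples(body: str) -> list:
    """Turn an instant-query (/api/v1/query) response body into (labels, value) pairs.

    Only the "vector" result type is handled; sample values arrive as strings.
    """
    resp = json.loads(body)
    if resp.get("status") != "success":
        raise RuntimeError(f'query failed: {resp.get("error", "unknown error")}')
    data = resp["data"]
    if data["resultType"] != "vector":
        raise ValueError(f'unexpected resultType {data["resultType"]}')
    return [(r["metric"], float(r["value"][1])) for r in data["result"]]
```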

To dump a single rule (here one named test-nginx) as YAML:

    kubectl get prometheusrule -n prometheus-k8s test-nginx -o yaml