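This post benchmarks cluster performance with the benchmark tools that ship with Hadoop. The test platform is Hadoop 3.0.0 on CDH 6.3.2.

Test jar: /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar

The benchmarks covered are the widely used TestDFSIO, mrbench, nnbench, TeraSort, and sort.

Running the jar without arguments prints the list of available programs: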

sudo -u hdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar

An example program must be given as the first argument.
Valid program names are:
  DFSCIOTest: Distributed i/o benchmark of libhdfs.
  DistributedFSCheck: Distributed checkup of the file system consistency.
  JHLogAnalyzer: Job History Log analyzer.
  MRReliabilityTest: A program that tests the reliability of the MR framework by injecting faults/failures
  SliveTest: HDFS Stress Test and Live Data Verification.
  TestDFSIO: Distributed i/o benchmark.
  fail: a job that always fails
  filebench: Benchmark SequenceFile(Input|Output)Format (block,record compressed and uncompressed), Text(Input|Output)Format (compressed and uncompressed)
  largesorter: Large-Sort tester
  loadgen: Generic map/reduce load generator
  mapredtest: A map/reduce test check.
  minicluster: Single process HDFS and MR cluster.
  mrbench: A map/reduce benchmark that can create many small jobs
  nnbench: A benchmark that stresses the namenode.
  sleep: A job that sleeps at each map and reduce task.
  testbigmapoutput: A map/reduce program that works on a very big non-splittable file and does identity map/reduce
  testfilesystem: A test for FileSystem read/write.
  testmapredsort: A map/reduce program that validates the map-reduce framework's sort.
  testsequencefile: A test for flat files of binary key value pairs.
  testsequencefileinputformat: A test for sequence file input format.
  testtextinputformat: A test for text input format.
  threadedmapbench: A map/reduce benchmark that compares the performance of maps with multiple spills over maps with 1 spill

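1. TestDFSIO

TestDFSIO tests the I/O performance of HDFS. It uses a MapReduce job to perform reads and writes concurrently: each map task reads or writes one file, the map output collects statistics about the file it processed, and the reduce task accumulates those statistics and produces a summary.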
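The summary reports both a throughput and an average I/O rate, and the two are aggregated differently. The sketch below is a simplified Python model of that aggregation, not the actual Java code, and the per-task sizes and times are made-up sample values. Throughput divides the total data volume by the summed task time, while the average I/O rate is the mean of the per-task rates.

```python
import math

def summarize(tasks):
    """tasks: list of (megabytes, seconds) pairs, one per map task."""
    total_mb = sum(mb for mb, _ in tasks)
    total_sec = sum(sec for _, sec in tasks)
    # Per-task I/O rate in MB/s.
    rates = [mb / sec for mb, sec in tasks]
    mean_rate = sum(rates) / len(rates)
    mean_sq = sum(r * r for r in rates) / len(rates)
    # Population standard deviation of the per-task rates.
    std_dev = math.sqrt(abs(mean_sq - mean_rate ** 2))
    return {
        "throughput": total_mb / total_sec,
        "avg_io_rate": mean_rate,
        "std_dev": std_dev,
    }

# Hypothetical sample: one fast task and one slow task, 128 MB each.
sample = [(128.0, 1.0), (128.0, 4.0)]
stats = summarize(sample)
print(stats)
```

In this sample the slow task pulls the throughput (51.2 MB/s) well below the average I/O rate (80 MB/s), which is why the two figures can differ noticeably when task speeds are uneven.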

Show the usage:

hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar TestDFSIO

TestDFSIO.1.7
Usage: TestDFSIO [genericOptions] -read [-random | -backward | -skip [-skipSize Size]] | -write | -append | -clean [-compression codecClassName] [-nrFiles N] [-size Size[B|KB|MB|GB|TB]] [-resFile resultFileName] [-bufferSize Bytes]

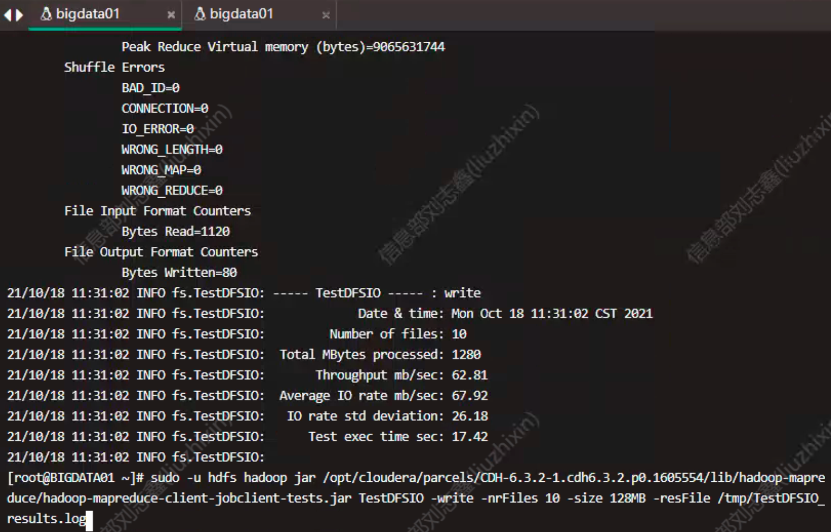

1.1 HDFS write performance test

Test content:

Write ten 128 MB files to the HDFS cluster:

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar TestDFSIO -write -nrFiles 10 -size 128MB -resFile /tmp/TestDFSIO_results.log

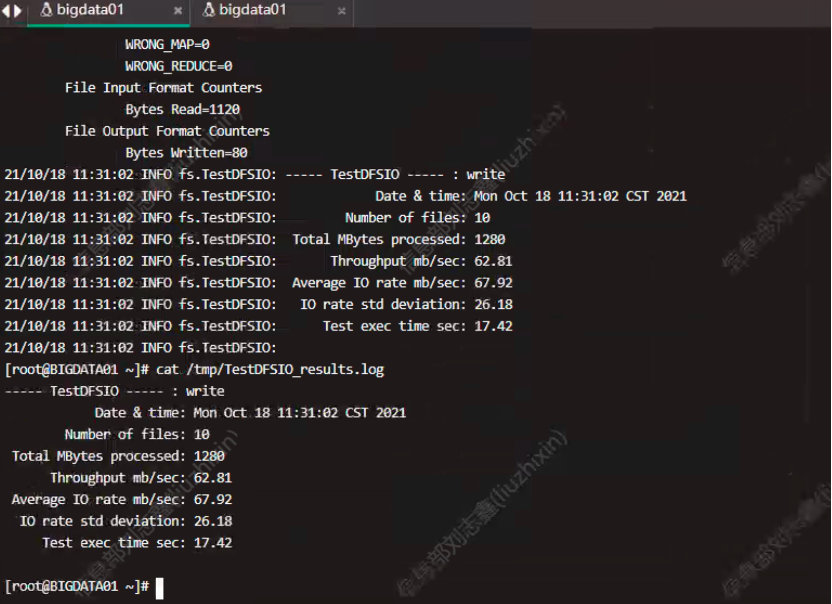

View the results:

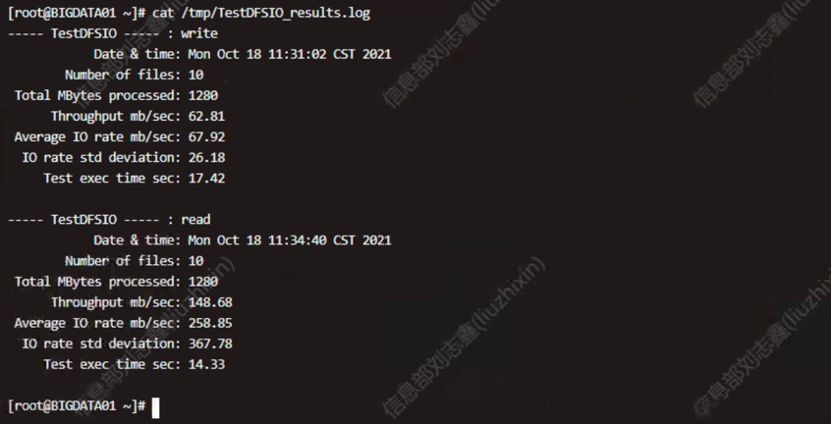

cat /tmp/TestDFSIO_results.log

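Conclusion:

Writing ten 128 MB files to the HDFS cluster gave a throughput of 62.81 MB/s, an average I/O rate of 67.92 MB/s, an I/O rate standard deviation of 26.18, and a total execution time of 17.42 s.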
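To compare runs programmatically, the result file can be parsed into a dictionary. The sketch below assumes the summary block uses a `label: value` layout; the sample text is reconstructed from the write figures quoted above, and the exact labels in your file may differ. The function name is hypothetical.

```python
def parse_dfsio_summary(text):
    """Parse the 'label: value' lines of one TestDFSIO summary block."""
    result = {}
    for line in text.splitlines():
        # Split on the first colon only, so values may contain colons.
        label, sep, value = line.partition(":")
        if not sep:
            continue
        label, value = label.strip(), value.strip()
        try:
            result[label] = float(value)
        except ValueError:
            result[label] = value  # keep non-numeric values as text
    return result

# Assumed layout, filled in with the write results reported in this post.
SAMPLE = """\
Number of files: 10
Total MBytes processed: 1280
Throughput mb/sec: 62.81
Average IO rate mb/sec: 67.92
IO rate std deviation: 26.18
Test exec time sec: 17.42
"""
summary = parse_dfsio_summary(SAMPLE)
print(summary["Throughput mb/sec"])
```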

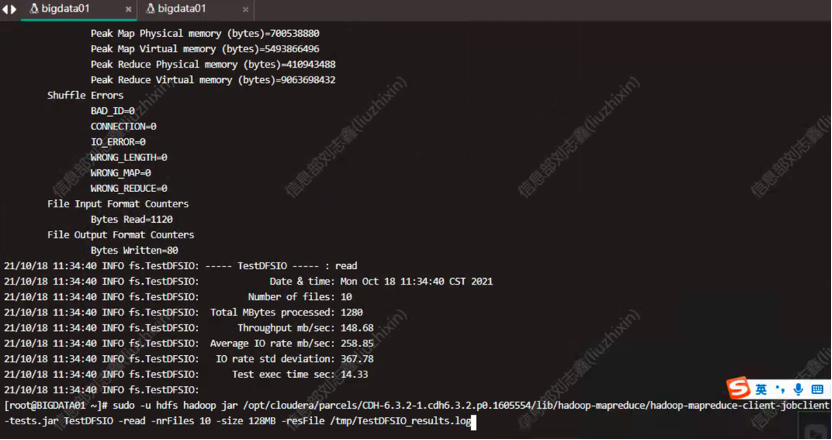

1.2 HDFS read performance test

Test content:

Read ten 128 MB files from the HDFS cluster:

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar TestDFSIO -read -nrFiles 10 -size 128MB -resFile /tmp/TestDFSIO_results.log

View the results:

cat /tmp/TestDFSIO_results.log

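Conclusion:

Reading ten 128 MB files from the HDFS cluster gave a throughput of 148.68 MB/s, an average I/O rate of 258.85 MB/s, an I/O rate standard deviation of 367.78, and a total execution time of 14.33 s.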
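The standard deviation is easier to judge relative to the mean. As a quick check, the sketch below computes the coefficient of variation (standard deviation divided by average I/O rate) from the write and read figures reported above. This ratio is a rule of thumb added here for illustration, not something TestDFSIO reports.

```python
def coefficient_of_variation(avg_io_rate, std_dev):
    """Relative spread of the per-file I/O rates."""
    return std_dev / avg_io_rate

# Figures taken from the write and read conclusions in this post.
write_cv = coefficient_of_variation(67.92, 26.18)
read_cv = coefficient_of_variation(258.85, 367.78)
print(f"write CV = {write_cv:.2f}, read CV = {read_cv:.2f}")
# prints "write CV = 0.39, read CV = 1.42"
```

A value above 1, as in the read test, means the per-file rates varied widely between map tasks, so the read figures are probably worth re-measuring over several runs before drawing conclusions.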

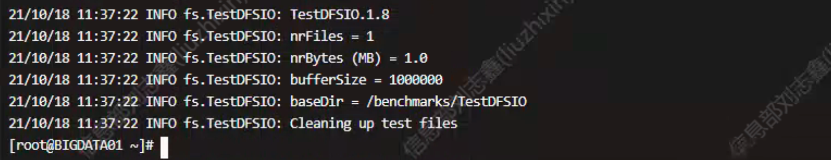

1.3 Clean up test data

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar TestDFSIO -clean

19/06/27 13:57:21 INFO fs.TestDFSIO: TestDFSIO.1.7
19/06/27 13:57:21 INFO fs.TestDFSIO: nrFiles = 1
19/06/27 13:57:21 INFO fs.TestDFSIO: nrBytes (MB) = 1.0
19/06/27 13:57:21 INFO fs.TestDFSIO: bufferSize = 1000000
19/06/27 13:57:21 INFO fs.TestDFSIO: baseDir = /benchmarks/TestDFSIO
19/06/27 13:57:22 INFO fs.TestDFSIO: Cleaning up test files

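2. nnbench

nnbench load-tests the NameNode. It generates a large number of HDFS-related requests to put the NameNode under heavy pressure, and it can simulate creating, reading, renaming, and deleting files on HDFS.

Show the usage: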

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar nnbench -help

NameNode Benchmark 0.4
Usage: nnbench <options>
Options:
  -operation <Available operations are create_write open_read rename delete. This option is mandatory>
  * NOTE: The open_read, rename and delete operations assume that the files they operate on, are already available. The create_write operation must be run before running the other operations.
  -maps <number of maps. default is 1. This is not mandatory>
  -reduces <number of reduces. default is 1. This is not mandatory>
  -startTime <time to start, given in seconds from the epoch. Make sure this is far enough into the future, so all maps (operations) will start at the same time>. default is launch time + 2 mins. This is not mandatory
  -blockSize <Block size in bytes. default is 1. This is not mandatory>
  -bytesToWrite <Bytes to write. default is 0. This is not mandatory>
  -bytesPerChecksum <Bytes per checksum for the files. default is 1. This is not mandatory>
  -numberOfFiles <number of files to create. default is 1. This is not mandatory>
  -replicationFactorPerFile <Replication factor for the files. default is 1. This is not mandatory>
  -baseDir <base DFS path. default is /becnhmarks/NNBench. This is not mandatory>
  -readFileAfterOpen <true or false. if true, it reads the file and reports the average time to read. This is valid with the open_read operation. default is false. This is not mandatory>
  -help: Display the help statement

Test content:

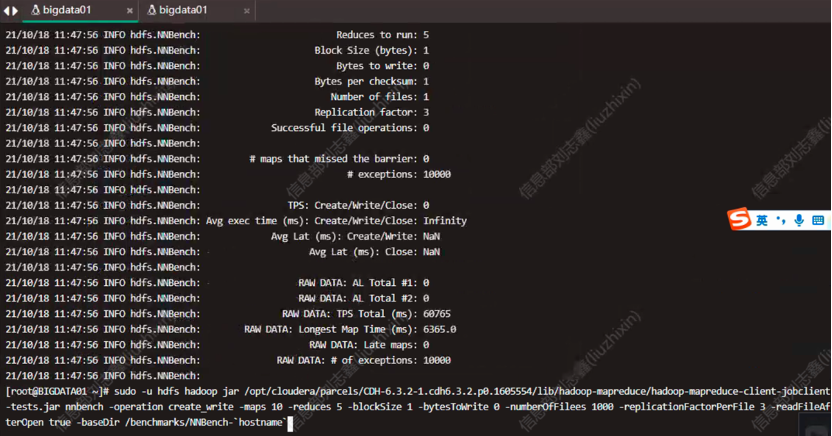

The test uses 10 mappers and 5 reducers to create 1000 files:

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar nnbench -operation create_write -maps 10 -reduces 5 -blockSize 1 -bytesToWrite 0 -numberOfFiles 1000 -replicationFactorPerFile 3 -readFileAfterOpen true -baseDir /benchmarks/NNBench-`hostname`

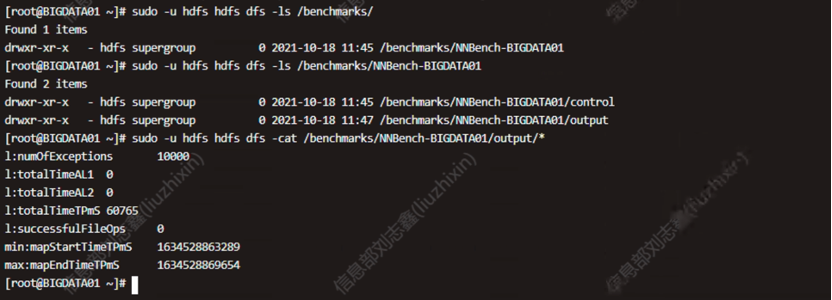

View the results stored on HDFS:

sudo -u hdfs hdfs dfs -cat /benchmarks/NNBench-BIGDATA01/output/*

Conclusion:

The test used 10 mappers and 5 reducers to create 1000 files.

3. mrbench

mrbench runs a small job many times over to check whether small jobs on the cluster run repeatably and efficiently.

Show the usage:

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar mrbench -help

MRBenchmark.0.0.2
Usage: mrbench [-baseDir <base DFS path for output/input, default is /benchmarks/MRBench>] [-jar <local path to job jar file containing Mapper and Reducer implementations, default is current jar file>] [-numRuns <number of times to run the job, default is 1>] [-maps <number of maps for each run, default is 2>] [-reduces <number of reduces for each run, default is 1>] [-inputLines <number of input lines to generate, default is 1>] [-inputType <type of input to generate, one of ascending (default), descending, random>] [-verbose]

Test content:

Run a job 50 times:

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar mrbench -numRuns 50 -maps 10 -reduces 5 -inputLines 10 -inputType descending

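Conclusion:

Running the job 50 times, each with 10 input lines, 10 maps, and 5 reduces, took 14.784 s per run on average. Small jobs run normally and can be repeated efficiently.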

The remaining benchmarks use the examples jar, hadoop-mapreduce/hadoop-mapreduce-examples.jar. Running it without arguments lists its programs:

An example program must be given as the first argument.
Valid program names are:
  aggregatewordcount: An Aggregate based map/reduce program that counts the words in the input files.
  aggregatewordhist: An Aggregate based map/reduce program that computes the histogram of the words in the input files.
  bbp: A map/reduce program that uses Bailey-Borwein-Plouffe to compute exact digits of Pi.
  dbcount: An example job that count the pageview counts from a database.
  distbbp: A map/reduce program that uses a BBP-type formula to compute exact bits of Pi.
  grep: A map/reduce program that counts the matches of a regex in the input.
  join: A job that effects a join over sorted, equally partitioned datasets
  multifilewc: A job that counts words from several files.
  pentomino: A map/reduce tile laying program to find solutions to pentomino problems.
  pi: A map/reduce program that estimates Pi using a quasi-Monte Carlo method.
  randomtextwriter: A map/reduce program that writes 10GB of random textual data per node.
  randomwriter: A map/reduce program that writes 10GB of random data per node.
  secondarysort: An example defining a secondary sort to the reduce.
  sort: A map/reduce program that sorts the data written by the random writer.
  sudoku: A sudoku solver.
  teragen: Generate data for the terasort
  terasort: Run the terasort
  teravalidate: Checking results of terasort
  wordcount: A map/reduce program that counts the words in the input files.
  wordmean: A map/reduce program that counts the average length of the words in the input files.
  wordmedian: A map/reduce program that counts the median length of the words in the input files.
  wordstandarddeviation: A map/reduce program that counts the standard deviation of the length of the words in the input files.

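4. TeraSort

TeraSort is a sort program that works well as a Hadoop benchmark. The TeraSort program bundled with Hadoop is used here to measure how different numbers of map and reduce tasks affect Hadoop performance. The test data is generated by the bundled teragen program, in sizes of 1 GB and 10 GB.

A TeraSort test has three steps: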

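- TeraGen generates random data

- TeraSort sorts the data

- TeraValidate verifies that the TeraSort output is sorted; if it detects a problem, it writes the out-of-order keys to the output directory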
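The check performed in the third step can be illustrated with a simplified sketch. It is not TeraValidate's implementation, only the underlying idea: walk the keys in output order and report every key that is smaller than its predecessor. The function name and the sample keys are hypothetical.

```python
def find_misordered(keys):
    """Return (index, key) pairs where a key is smaller than the previous one."""
    errors = []
    for i in range(1, len(keys)):
        if keys[i] < keys[i - 1]:
            errors.append((i, keys[i]))
    return errors

# Hypothetical keys; bytes compare lexicographically, like sort keys do.
sorted_keys = [b"AAA", b"ABC", b"ABD", b"ZZZ"]
broken_keys = [b"AAA", b"ZZZ", b"ABC", b"ABD"]

print(find_misordered(sorted_keys))  # empty list: output is in order
print(find_misordered(broken_keys))  # reports the key that breaks the order
```

An empty result corresponds to a clean validation run; any reported entries correspond to the out-of-order keys written to the validation directory.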

4.1 TeraGen generates random data and writes it to /tmp/examples/terasort-input

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar teragen 10000000 /tmp/examples/terasort-input
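teragen takes the number of rows to generate, not a byte size. Each row is 100 bytes, so the 10000000 rows in the command above produce about 1 GB. The helper below is a small sketch for working out the row count for a target size; its name is hypothetical and it assumes decimal gigabytes.

```python
ROW_BYTES = 100  # TeraGen writes fixed-size 100-byte rows

def teragen_rows(target_bytes):
    """Number of rows needed to generate roughly target_bytes of data."""
    return target_bytes // ROW_BYTES

GB = 10 ** 9  # decimal gigabyte, an assumption of this sketch

# The two data sizes used in this test.
for size_gb in (1, 10):
    print(size_gb, "GB ->", teragen_rows(size_gb * GB), "rows")
```

For the 10 GB run, the same command would be given 100000000 as the row count.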

Data on HDFS

4.2 TeraSort sorts the data and writes the result to /tmp/examples/terasort-output

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar terasort /tmp/examples/terasort-input /tmp/examples/terasort-output

Data on HDFS

4.3 TeraValidate verifies the output; if it detects a problem, it writes the out-of-order keys to /tmp/examples/terasort-validate

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar teravalidate /tmp/examples/terasort-output /tmp/examples/terasort-validate

Results on HDFS

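5. The sort program is also commonly used to benchmark MapReduce

5.1 randomwriter generates random data: each node runs 10 map tasks, and each map writes about 1 GB of random binary data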

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar randomwriter /tmp/examples/random-data
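Because every node writes its own share, the total volume grows with cluster size, so it is worth estimating before running. The sketch below assumes the 10 maps per node and about 1 GB per map stated above; the node count and the function name are hypothetical.

```python
def randomwriter_total_gb(nodes, maps_per_node=10, gb_per_map=1):
    """Approximate data volume written by randomwriter, before replication."""
    return nodes * maps_per_node * gb_per_map

nodes = 3  # hypothetical cluster size
raw_gb = randomwriter_total_gb(nodes)
print(f"{nodes} nodes -> about {raw_gb} GB before replication")
```

Disk usage will be higher by the HDFS replication factor. To the best of my knowledge the two defaults can be overridden with the `mapreduce.randomwriter.mapsperhost` and `mapreduce.randomwriter.bytespermap` properties, but verify the names against your Hadoop version before relying on them.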

5.2 Sort the data with sort

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar sort /tmp/examples/random-data /tmp/examples/sorted-data

5.3 testmapredsort verifies that the data is actually sorted

sudo -uhdfs hadoop jar /opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-client-jobclient-tests.jar testmapredsort -sortInput /tmp/examples/random-data -sortOutput /tmp/examples/sorted-data