Rook

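Rook is an open-source cloud-native storage orchestrator. It provides the platform, framework, and support that various storage solutions need to integrate natively with cloud-native environments.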

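The project started as a Ceph-based storage plugin for Kubernetes. Rook's implementation added horizontal scaling, migration, disaster recovery, monitoring, and many other enterprise features, which turned it into a complete, production-grade container storage plugin. Support for other storage backends was added later, including EdgeFS, Minio, CockroachDB, Cassandra, and NFS. Rook can be understood as a storage provisioning framework on Kubernetes that deploys several kinds of storage on top of Kubernetes.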

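With a storage plugin installed, containers in the cluster can mount a remote data volume that is backed by the network or some other mechanism. Files created inside the container are actually stored on a remote storage server, or distributed across several nodes, and are not bound to the current host in any way. As a result, a new container started on any host can request the specified persistent volume and access the data stored in it.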

Ceph Storage

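Ceph is a highly scalable distributed storage solution for block storage, object storage, and shared file systems, with many years of production deployments behind it. It supports three interfaces: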

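- Object: has a native API and is also compatible with the Swift and S3 APIs.

- Block: supports thin provisioning, snapshots, and clones.

- File: POSIX interface with snapshot support.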

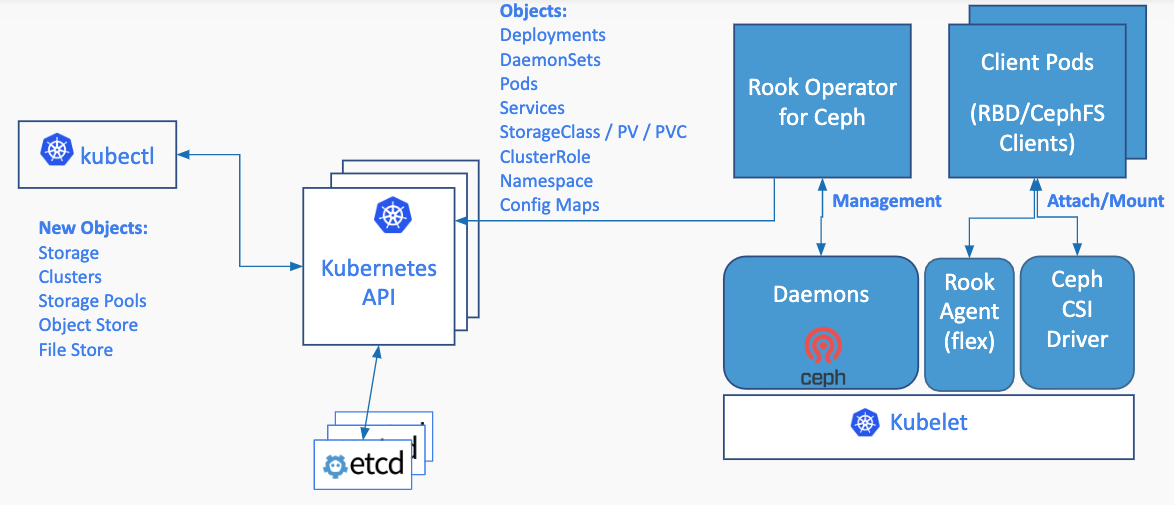

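By running Ceph inside a Kubernetes cluster, Kubernetes applications can mount block devices and file systems managed by Rook, or use the S3/Swift API for object storage. The Rook operator automatically configures the storage components and monitors the cluster to keep the storage available and healthy.

The Rook operator watches the storage daemons to make sure the cluster is healthy, and it watches for requests from the API server and applies the resulting changes. Ceph mons are started or failed over when necessary, and other adjustments are made as the cluster scales up or down. Rook automatically configures the Ceph-CSI driver to mount storage into pods.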

Ceph in depth

GitHub project

toolbox

Ceph cluster configuration parameters

Download release 1.0 and deploy

[root@master ~]# cd rook-release-1.0/cluster/examples/kubernetes/ceph/

[root@master ~]# kubectl apply -f common.yaml

[root@master ~]# kubectl apply -f operator.yaml

[root@master ~]# kubectl -n rook-ceph get pod

Once the rook-ceph-operator, rook-ceph-agent, and rook-discover pods are all in the Running state, create the ceph-test cluster.
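The readiness check can be scripted instead of read by eye. The sketch below uses a hypothetical helper, `all_running`, which reads `kubectl get pod` output on stdin and succeeds only if every listed pod reports `Running`. The helper name and the sample pod names are illustrative and not part of Rook.

```shell
# all_running: hypothetical helper. Reads `kubectl get pod` output on stdin
# and returns 0 only if at least one pod is listed and every STATUS is Running.
all_running() {
  awk 'NR > 1 { seen = 1; if ($3 != "Running") bad = 1 }
       END { if (seen && !bad) exit 0; exit 1 }'
}

# Illustrative sample; against a live cluster you would instead run:
#   kubectl -n rook-ceph get pod | all_running
sample='NAME                            READY   STATUS    RESTARTS   AGE
rook-ceph-agent-abcde           1/1     Running   0          2m
rook-ceph-operator-12345-xyz    1/1     Running   0          3m
rook-discover-fghij             1/1     Running   0          2m'

if printf '%s\n' "$sample" | all_running; then
  echo "all pods Running"
else
  echo "some pods not Running yet"
fi
```

This check is only meaningful before the cluster is created. Later on, job pods such as `rook-ceph-osd-prepare-*` end in `Completed`, which this helper would report as not running.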

[root@master ~]# kubectl apply -f cluster.yaml

[root@master ~]# cat cluster-test.yaml |grep -v "#"

apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.1-20190430
    allowUnsupported: true
  dataDirHostPath: /var/lib/rook
  mon:
    count: 1
    allowMultiplePerNode: true
  dashboard:
    enabled: true
  network:
    hostNetwork: false
  rbdMirroring:
    workers: 0
  storage:
    useAllNodes: true
    useAllDevices: false
    deviceFilter:
    config:
    directories:
    - path: /var/lib/rook

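- dataDirHostPath: the path on the host where Ceph configuration files and data are stored. It is created if it does not exist. When recreating a cluster with the same path, make sure the directory is empty, otherwise the mons will fail to start.

- useAllNodes: whether every node in the cluster is used for storage. It must be set to false if individual nodes are listed under the nodes field.

- useAllDevices: whether OSDs automatically consume every device discovered on the cluster nodes. If true, all devices are used except those that already have partitions or a local file system. If deviceFilter is specified, it overrides this setting.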
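A `deviceFilter` value can be tried against a node's device names before the cluster is applied. The sketch below assumes, based on Rook's documentation rather than on this article, that `deviceFilter` is a regular expression matched against short device names such as `vdb`. `grep -E` only approximates the regex dialect Rook uses, and both the helper name `match_devices` and the device list are hypothetical.

```shell
# match_devices: hypothetical helper. Prints the device names (one per line
# on stdin) that match the extended regular expression given as $1.
match_devices() {
  grep -E -- "$1" || true   # an empty result is not an error here
}

# Illustrative device names; on a real node `lsblk -dn -o NAME` lists them.
printf '%s\n' vda vdb vdc sda | match_devices '^vd[b-c]$'
```

The pattern is anchored on both ends so that the result does not depend on whether the consumer anchors it implicitly.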

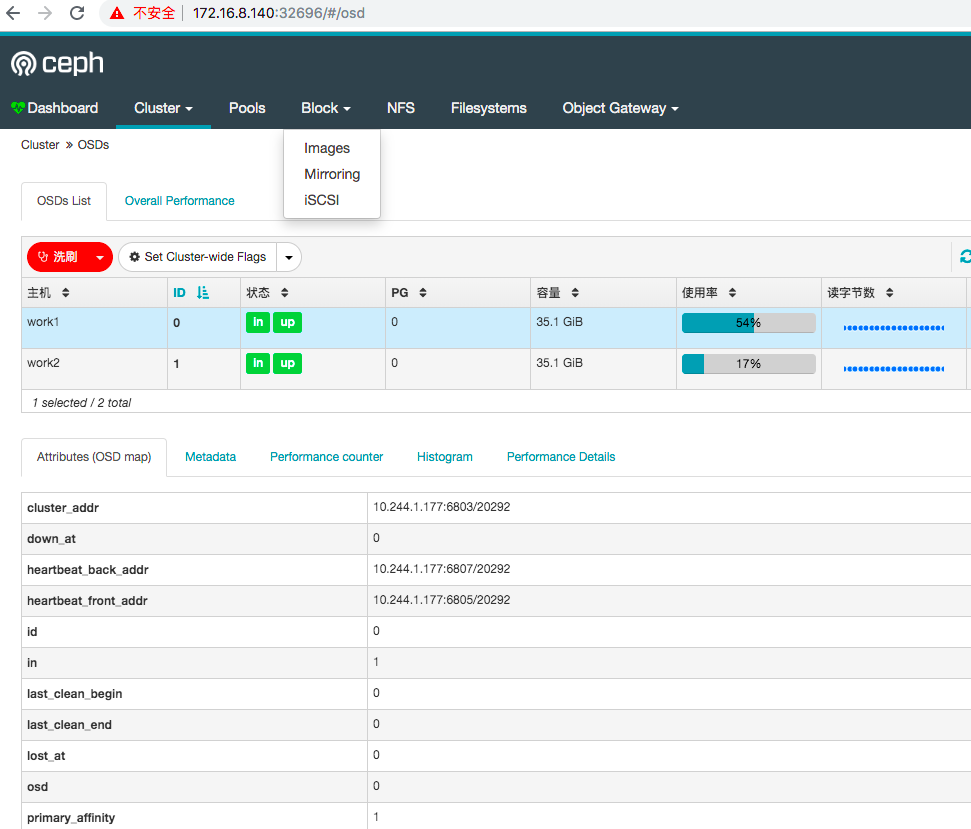

Accessing the Ceph dashboard

[root@master ~]# kubectl get svc -n rook-ceph

NAME                      TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
rook-ceph-mgr             ClusterIP   10.98.213.244   <none>        9283/TCP            91s
rook-ceph-mgr-dashboard   NodePort    10.96.146.2     <none>        8443:30714/TCP      91s
rook-ceph-mon-a           ClusterIP   10.100.197.38   <none>        6789/TCP,3300/TCP   2m43s
rook-ceph-mon-b           ClusterIP   10.96.30.71     <none>        6789/TCP,3300/TCP   2m34s
rook-ceph-mon-c           ClusterIP   10.99.27.237    <none>        6789/TCP,3300/TCP   2m24s

[root@master ~]# kubectl patch svc rook-ceph-mgr-dashboard -p '{"spec":{"type":"NodePort"}}' -n rook-ceph

service/rook-ceph-mgr-dashboard patched
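Once the service is of type NodePort, the dashboard is reachable on the node port shown in the `PORT(S)` column. The sketch below pulls that port out of a value such as `8443:30714/TCP`; the helper name `node_port` is hypothetical, and the `https` scheme is an assumption based on the service port being 8443.

```shell
# node_port: hypothetical helper. Extracts the node port from a PORT(S)
# value of the form "<servicePort>:<nodePort>/<protocol>".
node_port() {
  ports=${1#*:}                  # drop the service port and the colon
  printf '%s\n' "${ports%%/*}"   # drop the protocol suffix
}

# Value taken from the service listing above. On a live cluster the same
# number can be read directly with:
#   kubectl -n rook-ceph get svc rook-ceph-mgr-dashboard \
#     -o jsonpath='{.spec.ports[0].nodePort}'
port=$(node_port "8443:30714/TCP")
echo "https://<node-ip>:${port}"
```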

Getting the login username and password

[root@master ~]# MGR_POD=`kubectl get pod -n rook-ceph | grep mgr | awk '{print $1}'`

[root@master ~]# kubectl -n rook-ceph logs $MGR_POD | grep password

debug 2019-08-23 04:01:49.024 7f843fc6c700 0 log_channel(audit) log [DBG] : from='client.4191 -' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "B2zkRf2guY", "target": ["mgr", ""], "format": "json"}]: dispatch
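The password is buried in a long audit log line. A minimal sketch for extracting just the password value follows; the helper name `extract_password` is hypothetical, and it assumes the log line keeps the `"password": "..."` form shown above.

```shell
# extract_password: hypothetical helper. Reads mgr log lines on stdin and
# prints the value of the last "password" field it finds.
extract_password() {
  sed -n 's/.*"password": "\([^"]*\)".*/\1/p' | tail -n 1
}

# Sample line copied from the log output above. On a live cluster:
#   kubectl -n rook-ceph logs "$MGR_POD" | extract_password
extract_password <<'EOF'
debug 2019-08-23 04:01:49.024 7f843fc6c700 0 log_channel(audit) log [DBG] : from='client.4191 -' entity='client.admin' cmd=[{"username": "admin", "prefix": "dashboard set-login-credentials", "password": "B2zkRf2guY", "target": ["mgr", ""], "format": "json"}]: dispatch
EOF
```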

Troubleshooting

Creating a production cluster

[root@master ceph]# cat cluster.yaml |grep -v "#"

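apiVersion: ceph.rook.io/v1
kind: CephCluster
metadata:
  name: rook-ceph
  namespace: rook-ceph
spec:
  cephVersion:
    image: ceph/ceph:v14.2.1-20190430
    allowUnsupported: false
  dataDirHostPath: /var/lib/rook
  mon:
    count: 3
    allowMultiplePerNode: false
  dashboard:
    enabled: true
  network:
    hostNetwork: false
  rbdMirroring:
    workers: 0
  annotations:
  resources:
    useAllNodes: true
    useAllDevices: false
    deviceFilter:
    location:
    config:
    nodes:
    - name: "172.16.8.166"
      - name: "vdb"
    - name: "172.16.8.186"
      devices:
      - name: "vdb"

Note: `grep -v "#"` removes every line that contains a `#`, including keys that carry a trailing comment. That is presumably why the `storage:` key (the parent of `useAllNodes` through `nodes`) and the `devices:` key under the first node are missing from this output, even though the indentation shown here assumes them. In addition, `useAllNodes: true` is set while individual nodes are listed under `nodes`, which conflicts with the rule that it must be false in that case.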
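Filtering a manifest with `grep -v "#"` drops whole lines, so any key followed by a trailing comment disappears from the listing. A gentler sketch is to strip only the comment text and then drop lines that end up empty. The helper name `strip_comments` and the sample lines are illustrative, and the approach has a known limit: a `#` inside a quoted YAML string would be cut as well.

```shell
# strip_comments: hypothetical helper. Removes "#..." comments from each
# line on stdin, then deletes lines that are empty or whitespace-only.
# Caveat: a '#' inside a quoted string is treated as a comment too.
strip_comments() {
  sed -e 's/[[:space:]]*#.*$//' -e '/^[[:space:]]*$/d'
}

# Illustrative input; on a real file: strip_comments < cluster.yaml
printf '%s\n' \
  '  # placement:' \
  '  storage: # illustrative trailing comment' \
  '    useAllNodes: true' | strip_comments
```

With this input the commented-out `placement` line is removed, while `storage:` survives with its comment stripped.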

OSDs cannot be created on the specified devices

[root@master ceph]# kubectl -n rook-ceph get pod -l app=rook-ceph-osd-prepare

NAME                                READY   STATUS      RESTARTS   AGE
rook-ceph-osd-prepare-work1-cxpx6   0/2     Completed   0          58m
rook-ceph-osd-prepare-work2-t7m4b   0/2     Completed   0          58m

[root@master ceph]# kubectl -n rook-ceph logs rook-ceph-osd-prepare-work1-w7qpv provision |grep W

2019-08-23 06:50:57.448517 W | cephconfig: failed to add config file override from '/etc/rook/config/override.conf': open /etc/rook/config/override.conf: no such file or directory

[root@master ceph]# kubectl -n rook-ceph get configmap rook-config-override -o yaml

apiVersion: v1
data:
  config: ""
kind: ConfigMap
metadata:
  creationTimestamp: "2019-08-23T06:49:34Z"
  name: rook-config-override
  namespace: rook-ceph
  ownerReferences:
  - apiVersion: ceph.rook.io/v1
    blockOwnerDeletion: true
    kind: CephCluster
    name: rook-ceph
    uid: 2f7624a0-9c14-4f19-b734-63b4cd719462
  resourceVersion: "2846141"
  selfLink: /api/v1/namespaces/rook-ceph/configmaps/rook-config-override
  uid: 90d33355-ac4b-4084-b8bf-1135c27394f0

Checking the Rook logs

[root@work1 ~]# cat /var/lib/rook/log/rook-ceph/ceph-volume.log |grep "stderr"

[2019-08-23 03:29:59,550][ceph_volume.process][INFO ] stderr WARNING: Failed to connect to lvmetad. Falling back to device scanning.

[2019-08-23 03:30:00,068][ceph_volume.process][INFO ] stderr WARNING: Failed to connect to lvmetad. Falling back to device scanning.

[root@work2 ~]# cat /etc/lvm/lvm.conf |grep "use_lvmetad"

# See the use_lvmetad comment for a special case regarding filters.

# This is incompatible with lvmetad. If use_lvmetad is enabled,

# Configuration option global/use_lvmetad.

# while use_lvmetad was disabled, it must be stopped, use_lvmetad

use_lvmetad = 1
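A plain `grep` for `use_lvmetad` also returns the comment lines that merely mention the option. The sketch below prints only the effective value by matching lines where the option is actually assigned; the helper name `lvm_setting` is hypothetical, and the sample input is the `grep` output shown above.

```shell
# lvm_setting: hypothetical helper. $1 is the option name; lvm.conf content
# is read from stdin. Prints the value of the last uncommented assignment.
lvm_setting() {
  grep -E "^[[:space:]]*$1[[:space:]]*=" | tail -n 1 | sed 's/^[^=]*=[[:space:]]*//'
}

# Sample taken from the output above. On a real host:
#   lvm_setting use_lvmetad < /etc/lvm/lvm.conf
lvm_setting use_lvmetad <<'EOF'
# See the use_lvmetad comment for a special case regarding filters.
# This is incompatible with lvmetad. If use_lvmetad is enabled,
# Configuration option global/use_lvmetad.
# while use_lvmetad was disabled, it must be stopped, use_lvmetad
use_lvmetad = 1
EOF
```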

Viewing the Ceph component configuration

[root@work1 ~]# cat /var/lib/rook/rook-ceph/rook-ceph.config

[global]

fsid = bbc289c2-4b8f-486d-abb3-b03d4586158d

run dir = /var/lib/rook/rook-ceph

mon initial members = a b c

mon host = 10.107.126.115:6789,10.107.235.14:6789,10.96.60.221:6789

log file = /dev/stderr

mon cluster log file = /dev/stderr

public addr = 10.244.1.172

cluster addr = 10.244.1.172

mon keyvaluedb = rocksdb

mon_allow_pool_delete = true

mon_max_pg_per_osd = 1000

debug default = 0

debug rados = 0

debug mon = 0

debug osd = 0

debug bluestore = 0

debug filestore = 0

debug journal = 0

debug leveldb = 0

filestore_omap_backend = rocksdb

osd pg bits = 11

osd pgp bits = 11

osd pool default size = 1

osd pool default min size = 1

osd pool default pg num = 100

osd pool default pgp num = 100

crush location = root=default host=work1

rbd_default_features = 3

fatal signal handlers = false
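When checking a single setting, it can be easier to query the generated file than to read it in full. The sketch below looks up one key in the `key = value` format shown above; the helper name `ceph_conf_get` is hypothetical, and it matches the key exactly as written in the file, without treating spaces and underscores as interchangeable.

```shell
# ceph_conf_get: hypothetical helper. $1 is the key exactly as it appears in
# the file; config content is read from stdin. Prints the matching value(s).
ceph_conf_get() {
  awk -F ' = ' -v key="$1" '$1 == key { print $2 }'
}

# Sample lines taken from the file above. On a real node:
#   ceph_conf_get 'osd pool default size' < /var/lib/rook/rook-ceph/rook-ceph.config
ceph_conf_get 'osd pool default size' <<'EOF'
[global]
osd pool default size = 1
osd pool default min size = 1
crush location = root=default host=work1
EOF
```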