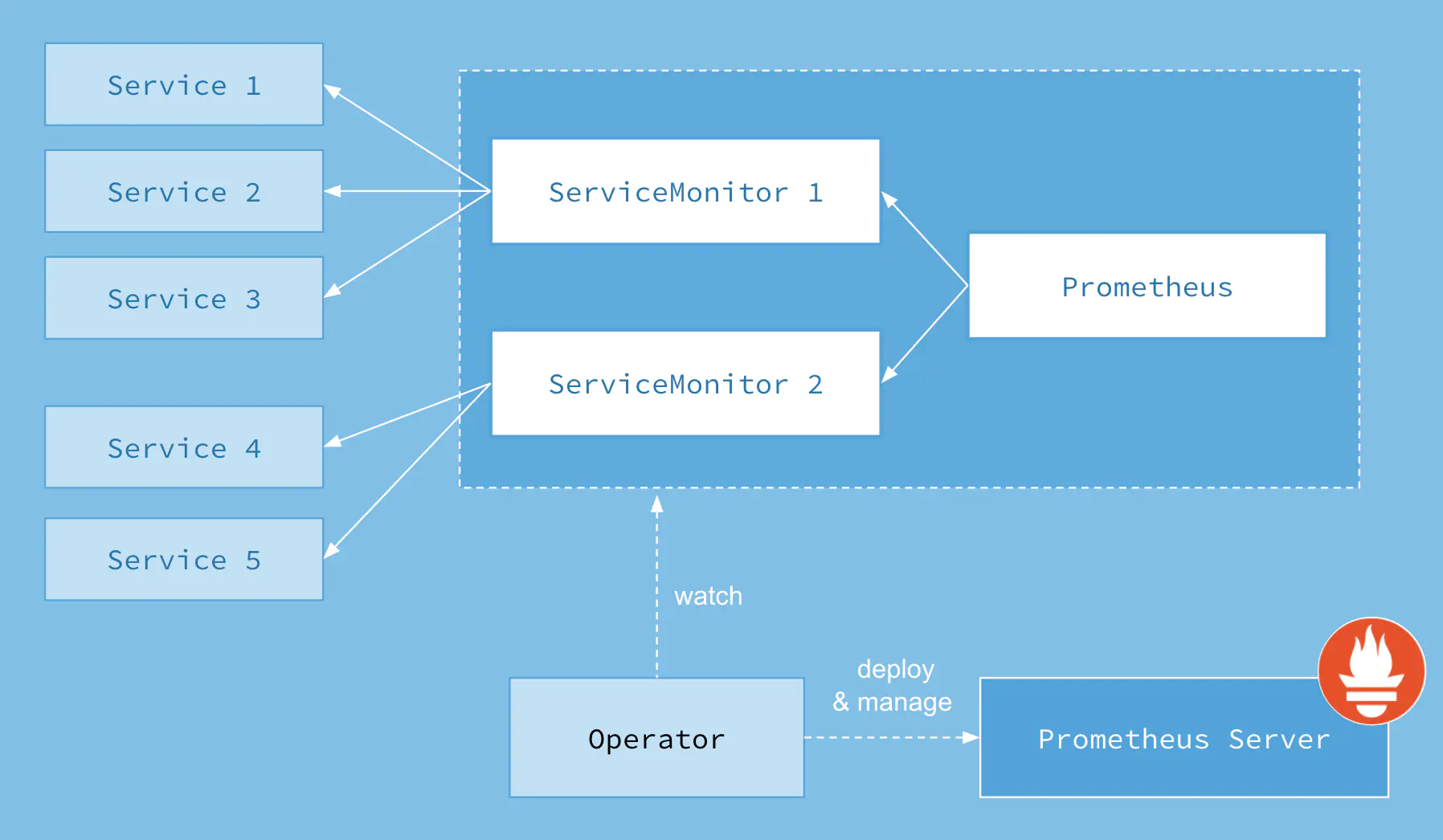

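Background

Prometheus Operator is a monitoring solution that CoreOS built on top of Prometheus specifically for Kubernetes. It simplifies deploying Prometheus clusters in a Kubernetes environment.

As cloud-native concepts have taken hold, monitoring containers, services, nodes, and clusters has become increasingly important. Prometheus, the de facto standard for Kubernetes monitoring, offers powerful features and a healthy ecosystem. However, it does not support distributed deployment, data import or export, or changing monitoring targets and alerting rules through an API, so using it usually means writing scripts and code to simplify operations. Prometheus Operator provides simple definitions for monitoring Kubernetes services and deployments and for managing Prometheus instances, which simplifies deploying, managing, and running Prometheus and Alertmanager clusters on Kubernetes.

Solution

Search for the chart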

[root@cka-master helm]# helm search repo prometheus-operator

NAME CHART VERSION APP VERSION DESCRIPTION

apphub/prometheus-operator 8.7.0 0.35.0 Provides easy monitoring definitions for Kubern...

github/prometheus-operator 9.3.2 0.38.1 DEPRECATED Provides easy monitoring definitions...

[root@cka-master helm]#

Download the chart

[root@cka-master helm]# helm pull apphub/prometheus-operator

[root@cka-master helm]# ls

chart-demo prometheus-operator-8.7.0.tgz repo

[root@cka-master helm]# tar -zxvf prometheus-operator-8.7.0.tgz

[root@cka-master helm]# ls

prometheus-operator prometheus-operator-8.7.0.tgz
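The download and unpack steps can also be combined into one command. The following is a minimal sketch, assuming Helm 3 and that the apphub repository is already configured as in the search output; the function name fetch_chart is made up for illustration.

```shell
# fetch_chart is a hypothetical helper, not part of Helm.
# Usage: fetch_chart <repo/chart> <version> <target-dir>
fetch_chart() {
  chart=$1
  version=$2
  dest=$3
  # --untar unpacks the archive after download; --untardir picks the directory.
  helm pull "$chart" --version "$version" --untar --untardir "$dest"
}

# Example (uncomment to run against a configured repo):
# fetch_chart apphub/prometheus-operator 8.7.0 .
```

Pinning the version keeps the unpacked directory reproducible when the repository later publishes a newer chart.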

Edit the chart

[root@cka-master helm]# cd prometheus-operator/

[root@cka-master prometheus-operator]# ls

Chart.lock charts Chart.yaml CONTRIBUTING.md crds README.md requirements.lock requirements.yaml templates values.yaml

[root@cka-master prometheus-operator]#

Namespace

To make later management easier, create a dedicated namespace for Prometheus Operator and switch to it.

[root@cka-master prometheus-operator]# kubectl create ns prometheus-operator

namespace/prometheus-operator created

[root@cka-master prometheus-operator]# kubectl get ns

NAME STATUS AGE

default Active 45h

kube-node-lease Active 45h

kube-public Active 45h

kube-system Active 45h

kubernetes-dashboard Active 10h

prometheus-operator Active 17s

[root@cka-master prometheus-operator]#

[root@cka-master prometheus-operator]# kubens prometheus-operator

✔ Active namespace is "prometheus-operator"

[root@cka-master prometheus-operator]#
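kubens is a separate tool that may not be installed everywhere. The same result can be reached with kubectl alone; the sketch below assumes a working kubeconfig, and use_namespace is a name invented here.

```shell
# use_namespace is a hypothetical helper name.
# Creates the namespace if it is missing, then makes it the default
# for the current kubectl context (what kubens does in the transcript).
use_namespace() {
  ns=$1
  kubectl get namespace "$ns" >/dev/null 2>&1 || kubectl create namespace "$ns"
  kubectl config set-context --current --namespace="$ns"
}

# Example:
# use_namespace prometheus-operator
```

Helm can also target the namespace directly, for example `helm install --namespace prometheus-operator --create-namespace ...`, which avoids depending on the active context.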

Install the chart

[root@cka-master helm]# helm search repo prometheus-operator

NAME CHART VERSION APP VERSION DESCRIPTION

apphub/prometheus-operator 8.7.0 0.35.0 Provides easy monitoring definitions for Kubern...

github/prometheus-operator 9.3.2 0.38.1 DEPRECATED Provides easy monitoring definitions...

[root@cka-master helm]# helm install prometheus-operator apphub/prometheus-operator

Error: INSTALLATION FAILED: failed to install CRD crds/crd-alertmanager.yaml: unable to recognize "": no matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1"

[root@cka-master helm]#

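Kubernetes 1.22 and later no longer serve apiextensions.k8s.io/v1beta1, so try working around the error by replacing v1beta1 with v1 in the downloaded chart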

[root@cka-master helm]# ls

chart-demo prometheus-operator prometheus-operator-8.7.0.tgz repo

[root@cka-master helm]# cd prometheus-operator/

[root@cka-master prometheus-operator]# find . -name "*.yaml" -exec sed -i "s/v1beta1/v1/g" {} \;

[root@cka-master prometheus-operator]# cd ../

[root@cka-master helm]# helm install prometheus-operator prometheus-operator/

Error: INSTALLATION FAILED: failed to install CRD crds/crd-alertmanager.yaml: CustomResourceDefinition.apiextensions.k8s.io "alertmanagers.monitoring.coreos.com" is invalid: spec.versions[0].schema.openAPIV3Schema: Required value: schemas are required

[root@cka-master helm]#
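The second error follows from what the sed command actually changes. The sketch below reproduces the effect on a small throwaway file; the CRD shown is a made-up, trimmed example and not a file from the chart. It also scopes the replacement to the CRD API group instead of every v1beta1 string.

```shell
# Hypothetical, heavily trimmed CRD used only for this demonstration;
# it is not a file from the real chart.
demo_dir=$(mktemp -d)
cat > "$demo_dir/crd-v1beta1.yaml" <<'EOF'
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: demos.example.com
spec:
  group: example.com
  names:
    kind: Demo
    plural: demos
  scope: Namespaced
  versions:
    - name: v1
      served: true
      storage: true
EOF

# Rewrite only the CRD API group/version rather than every "v1beta1" string.
sed 's#apiextensions.k8s.io/v1beta1#apiextensions.k8s.io/v1#' \
  "$demo_dir/crd-v1beta1.yaml" > "$demo_dir/crd-v1.yaml"

# The header now says v1 ...
head -n 1 "$demo_dir/crd-v1.yaml"

# ... but no schema was added, which is what the API server rejects.
if grep -q 'openAPIV3Schema' "$demo_dir/crd-v1.yaml"; then
  echo "schema present"
else
  echo "schema missing"
fi
```

A text substitution changes the declared version but cannot supply the per-version schema that the error message asks for, which is why a chart written for the v1 API is needed.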

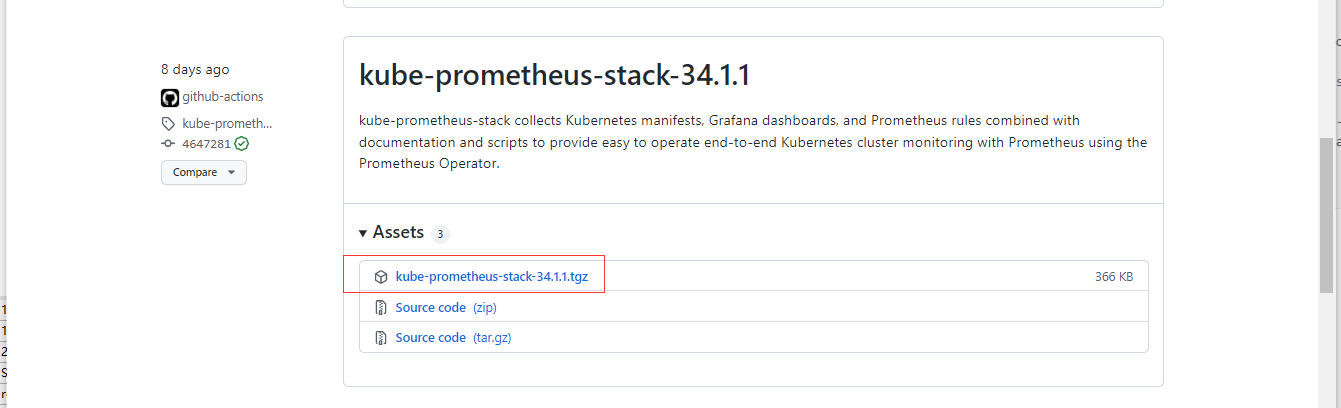

Rewriting the version string alone is not enough, so download the latest chart from GitHub

https://github.com/prometheus-community/helm-charts/releases

[root@cka-master helm]# ls

kube-prometheus-stack-34.1.1.tgz

[root@cka-master helm]# tar -zxvf kube-prometheus-stack-34.1.1.tgz

[root@cka-master helm]# ls

kube-prometheus-stack kube-prometheus-stack-34.1.1.tgz

[root@cka-master helm]# helm install prometheus-operator kube-prometheus-stack/

Error: INSTALLATION FAILED: failed pre-install: timed out waiting for the condition

Investigate the cause of the error

[root@cka-master kube-prometheus-stack]# kubectl get pod

NAME READY STATUS RESTARTS AGE

prometheus-operator-kube-p-admission-create--1-c8mj4 0/1 ImagePullBackOff 0 2m29s

[root@cka-master kube-prometheus-stack]# kubectl describe prometheus-operator-kube-p-admission-create--1-c8mj4

error: the server doesn't have a resource type "prometheus-operator-kube-p-admission-create--1-c8mj4"

[root@cka-master kube-prometheus-stack]# kubectl describe pod prometheus-operator-kube-p-admission-create--1-c8mj4

Name: prometheus-operator-kube-p-admission-create--1-c8mj4

Namespace: prometheus-operator

Priority: 0

Node: cka-node1/192.168.184.129

Start Time: Tue, 15 Mar 2022 21:13:39 +0800

Labels: app=kube-prometheus-stack-admission-create

app.kubernetes.io/instance=prometheus-operator

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/part-of=kube-prometheus-stack

app.kubernetes.io/version=34.1.1

chart=kube-prometheus-stack-34.1.1

controller-uid=34ef2ab7-a518-43ac-9f82-844542669095

heritage=Helm

job-name=prometheus-operator-kube-p-admission-create

release=prometheus-operator

Annotations: cni.projectcalico.org/containerID: fbbe911b2811c7ed0c926e77ced90a0d4bca41add4c4021107871b3782b2afc6

cni.projectcalico.org/podIP: 10.244.115.73/32

cni.projectcalico.org/podIPs: 10.244.115.73/32

Status: Pending

IP: 10.244.115.73

IPs:

IP: 10.244.115.73

Controlled By: Job/prometheus-operator-kube-p-admission-create

Containers:

create:

Container ID:

Image: k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1

Image ID:

Port: <none>

Host Port: <none>

Args:

create

--host=prometheus-operator-kube-p-operator,prometheus-operator-kube-p-operator.prometheus-operator.svc

--namespace=prometheus-operator

--secret-name=prometheus-operator-kube-p-admission

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-95qvl (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-95qvl:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 3m3s default-scheduler Successfully assigned prometheus-operator/prometheus-operator-kube-p-admission-create--1-c8mj4 to cka-node1

Warning Failed 2m48s kubelet Failed to pull image "k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": dial tcp 142.251.8.82:443: i/o timeout

Warning Failed 2m6s kubelet Failed to pull image "k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": dial tcp 64.233.189.82:443: i/o timeout

Normal Pulling 72s (x4 over 3m30s) kubelet Pulling image "k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1"

Warning Failed 57s (x4 over 3m15s) kubelet Error: ErrImagePull

Warning Failed 57s (x2 over 3m15s) kubelet Failed to pull image "k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Warning Failed 46s (x6 over 3m15s) kubelet Error: ImagePullBackOff

Normal BackOff 35s (x7 over 3m15s) kubelet Back-off pulling image "k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1"
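The full describe output is long when only the image name and the events matter. A minimal sketch of two narrower queries, assuming kubectl is pointed at the right namespace; pod_images and pod_events are names invented for this example.

```shell
# pod_images is a hypothetical helper: prints the image of every container
# in a pod, one per line.
pod_images() {
  pod=$1
  kubectl get pod "$pod" \
    -o jsonpath='{range .spec.containers[*]}{.image}{"\n"}{end}'
}

# pod_events is a hypothetical helper: lists only the events for that pod.
pod_events() {
  pod=$1
  kubectl get events --field-selector "involvedObject.name=$pod"
}

# Example:
# pod_images prometheus-operator-kube-p-admission-create--1-c8mj4
# pod_events prometheus-operator-kube-p-admission-create--1-c8mj4
```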

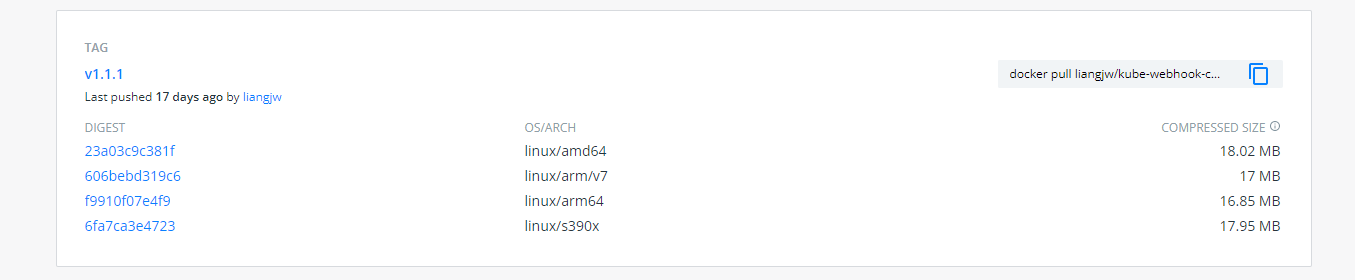

This shows that the image k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1 cannot be pulled, so search Docker Hub for a copy:

https://hub.docker.com/r/liangjw/kube-webhook-certgen/tags

[root@cka-master kube-prometheus-stack]# docker pull liangjw/kube-webhook-certgen:v1.1.1

v1.1.1: Pulling from liangjw/kube-webhook-certgen

ec52731e9273: Pull complete

b90aa28117d4: Pull complete

Digest: sha256:64d8c73dca984af206adf9d6d7e46aa550362b1d7a01f3a0a91b20cc67868660

Status: Downloaded newer image for liangjw/kube-webhook-certgen:v1.1.1

docker.io/liangjw/kube-webhook-certgen:v1.1.1

[root@cka-master kube-prometheus-stack]#

[root@cka-master kube-prometheus-stack]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

kubernetesui/dashboard v2.5.1 7fff914c4a61 5 days ago 243MB

calico/kube-controllers v3.22.1 c0c6672a66a5 11 days ago 132MB

calico/cni v3.22.1 2a8ef6985a3e 11 days ago 236MB

calico/pod2daemon-flexvol v3.22.1 17300d20daf9 11 days ago 19.7MB

calico/node v3.22.1 7a71aca7b60f 11 days ago 198MB

registry.aliyuncs.com/google_containers/kube-apiserver v1.22.7 015adc722b79 3 weeks ago 128MB

registry.aliyuncs.com/google_containers/kube-scheduler v1.22.7 f442011bbbc0 3 weeks ago 52.7MB

registry.aliyuncs.com/google_containers/kube-proxy v1.22.7 2025ac44bb2b 3 weeks ago 104MB

registry.aliyuncs.com/google_containers/kube-controller-manager v1.22.7 cca413ffb30c 3 weeks ago 122MB

nginx latest 605c77e624dd 2 months ago 141MB

liangjw/kube-webhook-certgen v1.1.1 c41e9fcadf5a 5 months ago 47.7MB

registry.aliyuncs.com/google_containers/etcd 3.5.0-0 004811815584 9 months ago 295MB

kubernetesui/metrics-scraper v1.0.7 7801cfc6d5c0 9 months ago 34.4MB

registry.aliyuncs.com/google_containers/coredns v1.8.4 8d147537fb7d 9 months ago 47.6MB

registry.aliyuncs.com/google_containers/pause 3.5 ed210e3e4a5b 12 months ago 683kB

[root@cka-master kube-prometheus-stack]# docker tag liangjw/kube-webhook-certgen:v1.1.1 k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1
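The pull-and-retag step repeats for each unreachable image, so it can be wrapped in a small function. This is a sketch assuming Docker is the container runtime on the nodes, as the error messages suggest; retag_image is a hypothetical name. Note that the kubelet looks for the image on the node where the pod is scheduled (cka-node1 in the describe output), so the retagged image presumably has to exist there and not only on the master.

```shell
# retag_image is a hypothetical helper: pulls a reachable mirror image and
# tags it with the name the chart expects.
# Usage: retag_image <mirror-image> <expected-image>
retag_image() {
  mirror=$1
  expected=$2
  docker pull "$mirror" && docker tag "$mirror" "$expected"
}

# Example, to be run on the node that hosts the failing pod:
# retag_image liangjw/kube-webhook-certgen:v1.1.1 \
#   k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1
```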

Retry the installation

[root@cka-master helm]# helm uninstall prometheus-operator

release "prometheus-operator" uninstalled

[root@cka-master helm]# helm install prometheus-operator kube-prometheus-stack/

Open a new terminal to check the installation progress

https://hub.docker.com/r/liangjw/kube-state-metrics/tags

[root@cka-master helm]# kubectl get pod

NAME READY STATUS RESTARTS AGE

alertmanager-prometheus-operator-kube-p-alertmanager-0 2/2 Running 0 3m7s

prometheus-operator-grafana-5ddfb7b5d4-fpht2 3/3 Running 0 4m26s

prometheus-operator-kube-p-operator-66d6dcdf8c-qrgp2 1/1 Running 0 4m26s

prometheus-operator-kube-state-metrics-7c4879c9d7-q24sj 0/1 ImagePullBackOff 0 4m26s

prometheus-operator-prometheus-node-exporter-cdnlw 1/1 Running 0 4m26s

prometheus-operator-prometheus-node-exporter-qfmkp 1/1 Running 0 4m26s

prometheus-operator-prometheus-node-exporter-qpkvb 1/1 Running 0 4m26s

prometheus-prometheus-operator-kube-p-prometheus-0 0/2 PodInitializing 0 3m5s

[root@cka-master helm]# kubectl describe pod prometheus-operator-kube-state-metrics-7c4879c9d7-q24sj

Name: prometheus-operator-kube-state-metrics-7c4879c9d7-q24sj

Namespace: prometheus-operator

Priority: 0

Node: cka-node2/192.168.184.131

Start Time: Tue, 15 Mar 2022 21:00:08 +0800

Labels: app.kubernetes.io/component=metrics

app.kubernetes.io/instance=prometheus-operator

app.kubernetes.io/managed-by=Helm

app.kubernetes.io/name=kube-state-metrics

app.kubernetes.io/part-of=kube-state-metrics

app.kubernetes.io/version=2.4.1

helm.sh/chart=kube-state-metrics-4.7.0

pod-template-hash=7c4879c9d7

release=prometheus-operator

Annotations: cni.projectcalico.org/containerID: 4a9b460bf3ecf4d57ba67aa6ef40301c5228ee9e5787aaea0ff53df788804d0d

cni.projectcalico.org/podIP: 10.244.148.135/32

cni.projectcalico.org/podIPs: 10.244.148.135/32

Status: Pending

IP: 10.244.148.135

IPs:

IP: 10.244.148.135

Controlled By: ReplicaSet/prometheus-operator-kube-state-metrics-7c4879c9d7

Containers:

kube-state-metrics:

Container ID:

Image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1

Image ID:

Port: 8080/TCP

Host Port: 0/TCP

Args:

--port=8080

--resources=certificatesigningrequests,configmaps,cronjobs,daemonsets,deployments,endpoints,horizontalpodautoscalers,ingresses,jobs,limitranges,mutatingwebhookconfigurations,namespaces,networkpolicies,nodes,persistentvolumeclaims,persistentvolumes,poddisruptionbudgets,pods,replicasets,replicationcontrollers,resourcequotas,secrets,services,statefulsets,storageclasses,validatingwebhookconfigurations,volumeattachments

--telemetry-port=8081

State: Waiting

Reason: ImagePullBackOff

Ready: False

Restart Count: 0

Liveness: http-get http://:8080/healthz delay=5s timeout=5s period=10s #success=1 #failure=3

Readiness: http-get http://:8080/ delay=5s timeout=5s period=10s #success=1 #failure=3

Environment: <none>

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-zzxmm (ro)

Conditions:

Type Status

Initialized True

Ready False

ContainersReady False

PodScheduled True

Volumes:

kube-api-access-zzxmm:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 3607

ConfigMapName: kube-root-ca.crt

ConfigMapOptional: <nil>

DownwardAPI: true

QoS Class: BestEffort

Node-Selectors: <none>

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning Failed 37m kubelet Failed to pull image "k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Normal SandboxChanged 37m kubelet Pod sandbox changed, it will be killed and re-created.

Warning Failed 36m (x2 over 37m) kubelet Failed to pull image "k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1": rpc error: code = Unknown desc = Error response from daemon: Get "https://k8s.gcr.io/v2/": context deadline exceeded

Warning Failed 36m (x3 over 37m) kubelet Error: ErrImagePull

Warning Failed 35m (x7 over 37m) kubelet Error: ImagePullBackOff

Normal Pulling 35m (x4 over 38m) kubelet Pulling image "k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1"

Normal BackOff 33m (x14 over 37m) kubelet Back-off pulling image "k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1"

Normal Scheduled 5m47s default-scheduler Successfully assigned prometheus-operator/prometheus-operator-kube-state-metrics-7c4879c9d7-q24sj to cka-node2

[root@cka-master helm]# docker pull kube-state-metrics:v2.4.1

Error response from daemon: pull access denied for kube-state-metrics, repository does not exist or may require 'docker login': denied: requested access to the resource is denied

[root@cka-master helm]# docker pull liangjw/kube-state-metrics:v2.4.1

v2.4.1: Pulling from liangjw/kube-state-metrics

2df365faf0e3: Pull complete

b5a1c05a2e3a: Pull complete

Digest: sha256:69a18fa1e0d0c9f972a64e69ca13b65451b8c5e79ae8dccf3a77968be4a301df

Status: Downloaded newer image for liangjw/kube-state-metrics:v2.4.1

docker.io/liangjw/kube-state-metrics:v2.4.1

[root@cka-master helm]# docker tag liangjw/kube-state-metrics:v2.4.1 k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.1

[root@cka-master helm]# docker get pod

docker: 'get' is not a docker command.

See 'docker --help'

[root@cka-master helm]# kubectl get pod

NAME READY STATUS RESTARTS AGE

alertmanager-prometheus-operator-kube-p-alertmanager-0 2/2 Running 0 10m

prometheus-operator-grafana-5ddfb7b5d4-fpht2 3/3 Running 0 11m

prometheus-operator-kube-p-operator-66d6dcdf8c-qrgp2 1/1 Running 0 11m

prometheus-operator-kube-state-metrics-7c4879c9d7-q24sj 1/1 Running 0 11m

prometheus-operator-prometheus-node-exporter-cdnlw 1/1 Running 0 11m

prometheus-operator-prometheus-node-exporter-qfmkp 1/1 Running 0 11m

prometheus-operator-prometheus-node-exporter-qpkvb 1/1 Running 0 11m

prometheus-prometheus-operator-kube-p-prometheus-0 2/2 Running 0 10m

[root@cka-master helm]#
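An alternative to retagging images on every node is to point the chart at the mirror repositories when installing. The sketch below is an assumption-heavy illustration: the two value keys are what I believe kube-prometheus-stack 34.1.1 and its kube-state-metrics subchart use, and they should be confirmed with `helm show values kube-prometheus-stack/` first. install_with_mirrors is a made-up name.

```shell
# install_with_mirrors is a hypothetical helper. The value keys below are
# assumptions about kube-prometheus-stack 34.1.1; confirm them with
# `helm show values kube-prometheus-stack/` before relying on them.
install_with_mirrors() {
  release=$1
  chart_dir=$2
  helm upgrade --install "$release" "$chart_dir" \
    --set prometheusOperator.admissionWebhooks.patch.image.repository=liangjw/kube-webhook-certgen \
    --set kube-state-metrics.image.repository=liangjw/kube-state-metrics
}

# Example:
# install_with_mirrors prometheus-operator kube-prometheus-stack/
```

With this approach the pods reference the mirror directly, so no node needs a locally retagged copy.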

Expose the port

[root@cka-master helm]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 12m

prometheus-operated ClusterIP None <none> 9090/TCP 12m

prometheus-operator-grafana ClusterIP 10.107.149.15 <none> 80/TCP 13m

prometheus-operator-kube-p-alertmanager ClusterIP 10.107.184.233 <none> 9093/TCP 13m

prometheus-operator-kube-p-operator ClusterIP 10.111.47.56 <none> 443/TCP 13m

prometheus-operator-kube-p-prometheus ClusterIP 10.96.122.36 <none> 9090/TCP 13m

prometheus-operator-kube-state-metrics ClusterIP 10.99.1.24 <none> 8080/TCP 13m

prometheus-operator-prometheus-node-exporter ClusterIP 10.102.67.143 <none> 9100/TCP 13m

[root@cka-master helm]#

[root@cka-master helm]# kubectl edit svc prometheus-operator-grafana

service/prometheus-operator-grafana edited

[root@cka-master helm]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 15m

prometheus-operated ClusterIP None <none> 9090/TCP 15m

prometheus-operator-grafana NodePort 10.107.149.15 <none> 80:32159/TCP 17m

prometheus-operator-kube-p-alertmanager ClusterIP 10.107.184.233 <none> 9093/TCP 17m

prometheus-operator-kube-p-operator ClusterIP 10.111.47.56 <none> 443/TCP 17m

prometheus-operator-kube-p-prometheus ClusterIP 10.96.122.36 <none> 9090/TCP 17m

prometheus-operator-kube-state-metrics ClusterIP 10.99.1.24 <none> 8080/TCP 17m

prometheus-operator-prometheus-node-exporter ClusterIP 10.102.67.143 <none> 9100/TCP 17m

[root@cka-master helm]#

In the editor, change spec.type from ClusterIP to NodePort

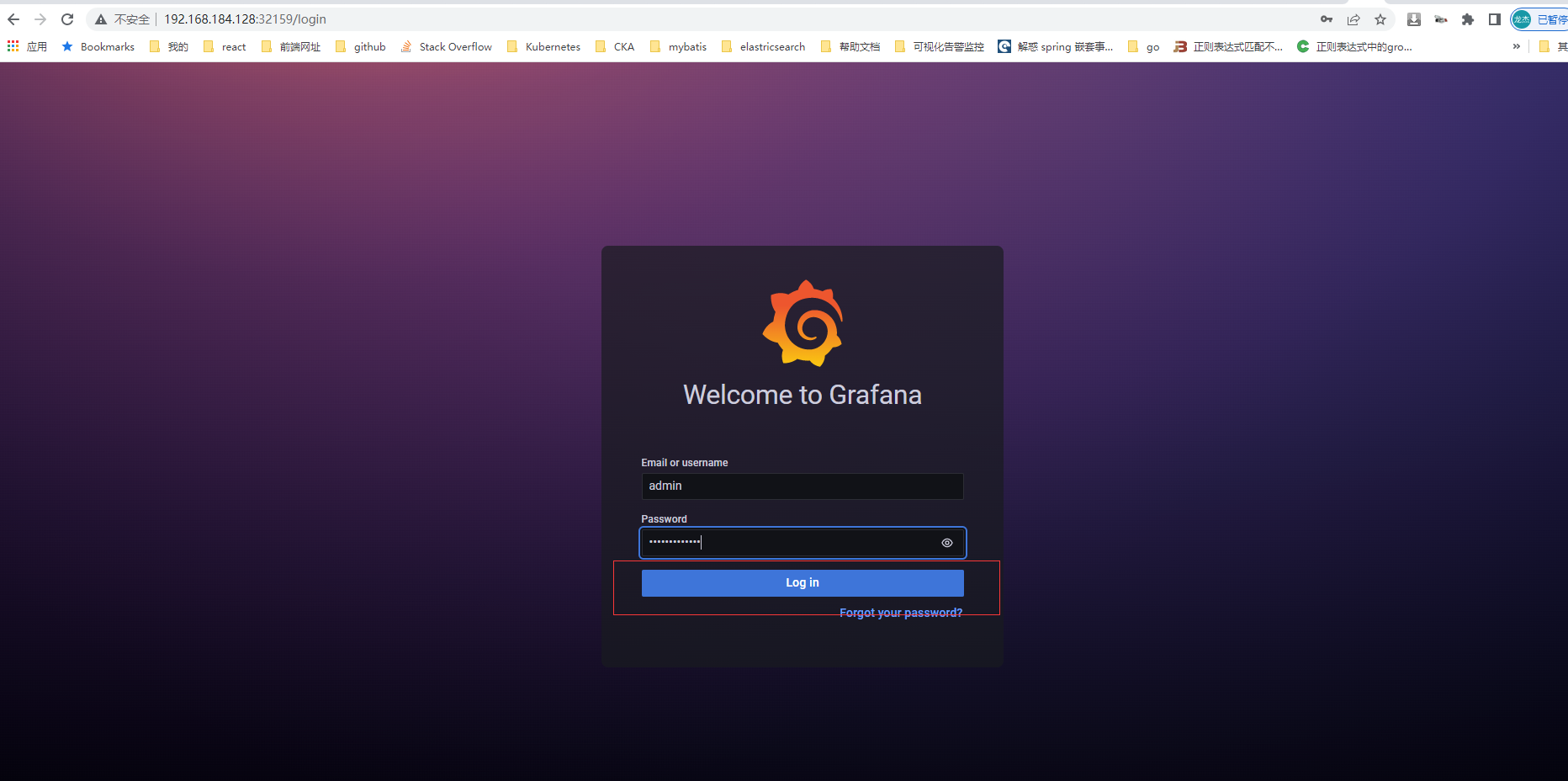

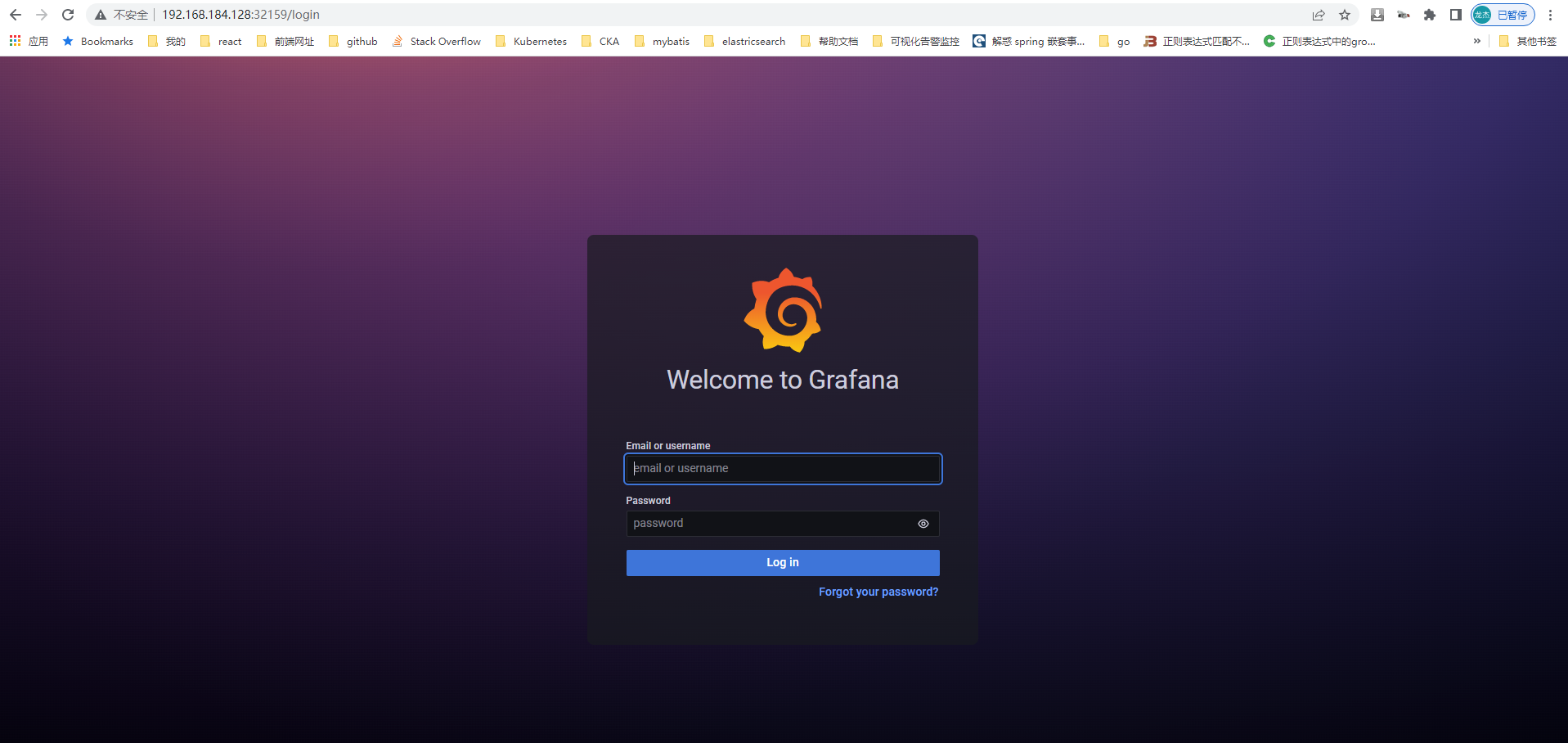
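The same change can be made without opening an editor, which is handier in scripts. A minimal sketch, assuming the Service name from the transcript; expose_nodeport and node_port are hypothetical helper names.

```shell
# expose_nodeport is a hypothetical helper: switches a Service to NodePort
# without opening an editor.
expose_nodeport() {
  svc=$1
  kubectl patch svc "$svc" -p '{"spec":{"type":"NodePort"}}'
}

# node_port is a hypothetical helper: prints the node port assigned to the
# first port of the Service.
node_port() {
  svc=$1
  kubectl get svc "$svc" -o jsonpath='{.spec.ports[0].nodePort}'
}

# Example:
# expose_nodeport prometheus-operator-grafana
# node_port prometheus-operator-grafana
```

A manual patch like this may be reverted by a later `helm upgrade`, since the chart still declares the Service as ClusterIP.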

Access Grafana through the node port

http://192.168.184.128:32159/login

Look up the login password

[root@cka-master helm]# kubectl get secrets prometheus-operator-grafana -o yaml

apiVersion: v1

data:

admin-password: cHJvbS1vcGVyYXRvcg==

admin-user: YWRtaW4=

ldap-toml: ""

kind: Secret

metadata:

annotations:

meta.helm.sh/release-name: prometheus-operator

meta.helm.sh/release-namespace: prometheus-operator

creationTimestamp: "2022-03-15T13:32:56Z"

labels:

app.kubernetes.io/instance: prometheus-operator

app.kubernetes.io/managed-by: Helm

app.kubernetes.io/name: grafana

app.kubernetes.io/version: 8.4.2

helm.sh/chart: grafana-6.24.1

name: prometheus-operator-grafana

namespace: prometheus-operator

resourceVersion: "232920"

uid: c99ae352-5f59-46b4-9764-459ec8409761

type: Opaque

[root@cka-master helm]#

The user name is YWRtaW4= and the password is cHJvbS1vcGVyYXRvcg==. Both values are Base64-encoded, so decode them:

[root@cka-master helm]# echo -n "YWRtaW4=" |base64 -d

admin

[root@cka-master helm]# echo -n "cHJvbS1vcGVyYXRvcg==" |base64 -d

prom-operator

[root@cka-master helm]#
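Reading and decoding a single key can be done in one step instead of copying the value out of the YAML. A minimal sketch, assuming the Secret name and keys shown above; secret_value is a name made up for this example.

```shell
# secret_value is a hypothetical helper: reads one key from a Secret and
# decodes it from Base64.
# Usage: secret_value <secret-name> <key>
secret_value() {
  secret=$1
  key=$2
  kubectl get secret "$secret" -o jsonpath="{.data.$key}" | base64 --decode
}

# Example:
# secret_value prometheus-operator-grafana admin-user
# secret_value prometheus-operator-grafana admin-password
```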

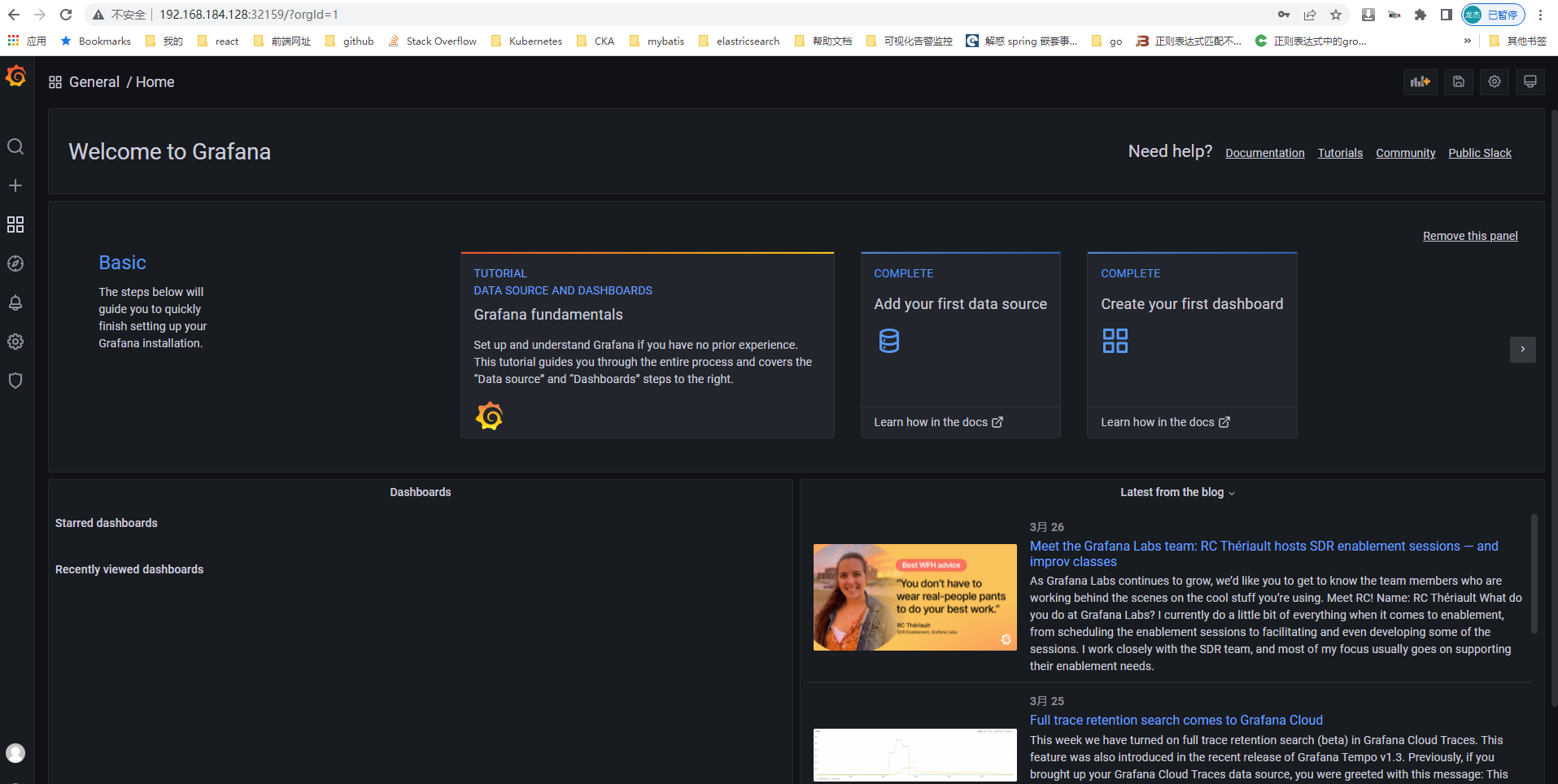

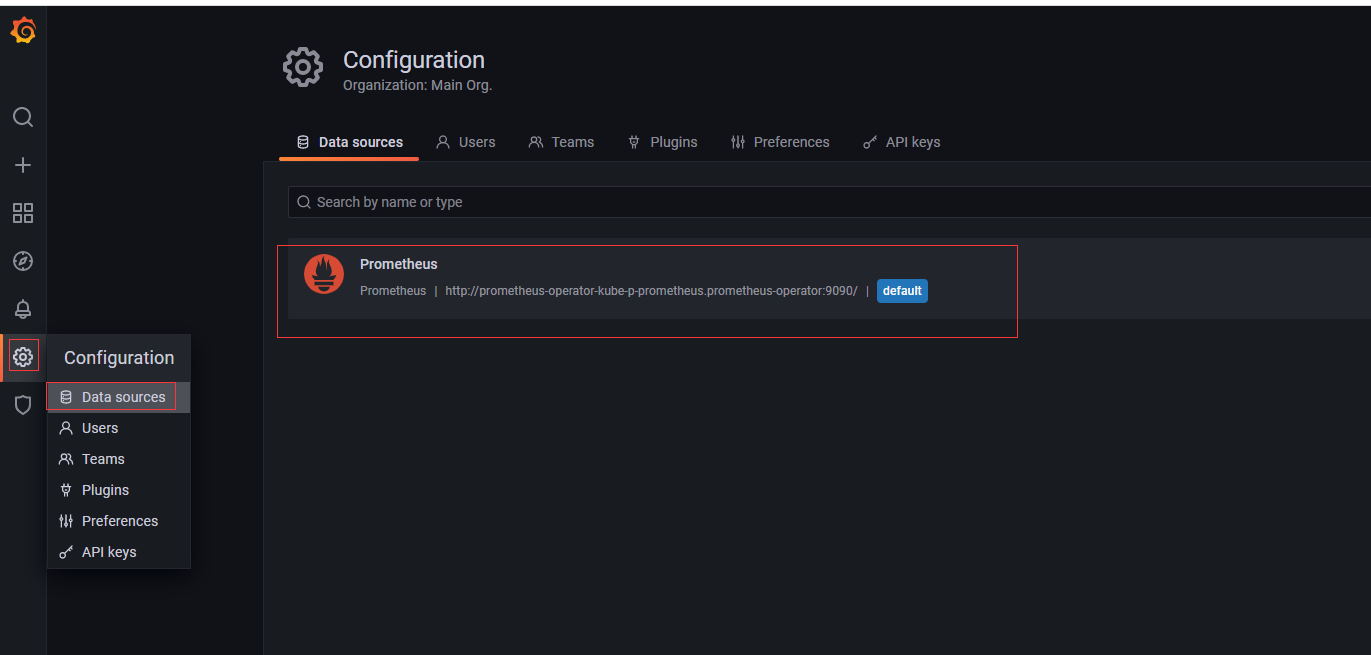

Related configuration

Here you can see that Prometheus has already been added as a data source by default.