Introduction

介绍

Comma separated values, also known as CSVs, are one of the most common and basic formats for storing and exchanging tabular datasets for statistical analysis tools and spreadsheet applications. Due to its popularity, it is not uncommon for governing bodies and other important organizations to share official datasets in CSV format.

逗号分隔值【Comma separated values】,也就是所说的CSV,它是一种在统计分析工具与电子表格应用【spreadsheet applications.】中的用于交换、存储的表格数据集【tabular datasets】的一种常见格式。由于其流行性,在管理机构【governing bodies】与其它的重要的组织分享通过CSV分享官方数据【official datasets】是常见的。

Despite its simplicity, popularity, and widespread use, it is common for CSV files created using one application to display incorrectly in another. This is because there is no official universal CSV specification to which applications must adhere. As a result, several implementations exist with slight variations.

尽管它很简单、流行,同时被广泛地使用。但是,一个CSV文件被创建出来在另一个应用程序中显示错误现象,是比较常见的。这是因为并没有官方、统一的CSV规范使应用程序必须遵守。因此【As a resul】,就存在几种略有差异【slight variations】的实现。

Most modern operating systems and programming languages, including JavaScript and the Node.js runtime environment, have applications and packages for reading, writing, and parsing CSV files.

许多现代操作系统和编程语言,包括JS与Node。它们提供了应用程序与包,以便于读、写和解析CSV文件。

In this article, we will learn how to manage CSV files in Node. We shall also highlight the slight variations in the different CSV implementations. Some popular packages we will look at include csv-parser, Papa Parse, and Fast-CSV.

在本篇文章中,我们将学习到在Node中如何管理CSV文件。我们会特别强调这几种CSV实现的细微差异。我们将看到一些流行的包,包括csv-parser、Papa Parse和Fast-CSV。

What is a CSV file?

什么是CSV文件?

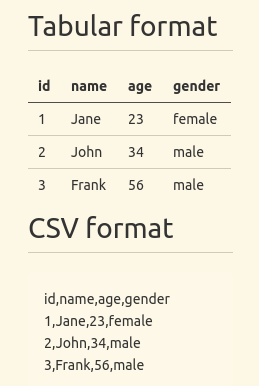

CSV files are ordinary text files comprised of data arranged in rectangular form. When you save a tabular data set in CSV format, a new line character will separate successive rows while a comma will separate consecutive entries in a row. The image below shows a tabular data set and its corresponding CSV format.

CSV文件是由矩形【rectangular】排列【arranged】的数据组成的普通【ordinary】文本文件。换行字符将分隔连续的行,逗号将分隔行内连续【consecutive】的项。下图显示了一个数据表格和对应【corresponding】的CSV格式。

In the above CSV data, the first row is made up of field names, though that may not always be the case. Therefore, it is necessary to investigate your data set before you start to read and parse it.

在上面的CSV数据中,第一行是由字段名组成,实际上不非是这种情况【be the case】。因此,在你开始读取和解析之前是由必要进行检查【investigate】。

Although the name Comma Separated Values seems to suggest that a comma should always separate subsequent entries in each record, some applications generate CSV files that use a semicolon as delimiter instead of a comma.

尽管逗号分隔值这个名称已经暗示了,使用逗号在每一个记录后进行分隔。但是,一些应用程序在生成CSV文件时使用分号【semicolon】而不是逗号。

As already mentioned, there is no official universal standard to which CSV implementations should adhere, even though the unofficial RFC 4180 technical standard does exist. However, this standard came into existence many years after the CSV format gained popularity.

正如前文提到的,尽管存在一个非官方的RFC 4180标准,但没有CSV实现必须遵守的官方标准。然而,这个标准是在CSV流行许多年之后才开始出现。

How to manage CSV files in Node.js

在Node中如何管理CSV

In the previous section, we had a brief introduction to CSV files. In this section, you will learn how to read, write, and parse CSV files in Node using both built-in and third-party packages.

Using the fs module

使用fs模块

The fs module is the de facto module for working with files in Node. The code below uses the readFile function of the fs module to read from a data.csv file:

fs模块在Node中实际上【de facto】是处理文件的模块。下面👇🏻的代码使用fs模块内的readFile方法冲data.csv文件中读取数据。

const fs = require("fs");fs.readFile("data.csv", "utf-8", (err, data) => {if (err) console.log(err);else console.log(data);});

The corresponding example below writes to a CSV file using the writeFile function of the fs module:

相关的【corresponding】例子使用writeFile方法向CSV文件内写入。

const fs = require("fs");const data = `id,name,age1,Johny,452,Mary,20`;fs.writeFile("data.csv", data, "utf-8", (err) => {if (err) console.log(err);else console.log("Data saved");});

If you are not familiar with reading and writing files in Node, check out my complete tutorial to reading and writing JSON files in Node.

如果你并熟悉在Node中如何读写文件,可以阅读相应的文章。

If you use fs.readFile, fs.writeFile, or its synchronous counterpart like in the above examples, Node will read the entire file into memory before processing it. With the createReadStream and createWriteStream functions of the fs module, you can use streams instead to reduce memory footprint and data processing time.

如果你使用fs.readFile,fs.writeFile,或者像上面例子同步文件,Node将在运行代码之前将文件读取进内存中。

借由fs模块内的createReadStream与createWriteStream方法,你可以使用流减少内存占用空间与数据处理时间。

The example below uses the createReadStream function to read from a data.csv file:

下面👇🏻的例子使用createReadStream方法从data.csv文件读取数据。

const fs = require("fs");fs.createReadStream("data.csv", { encoding: "utf-8" }).on("data", (chunk) => {console.log(chunk);}).on("error", (error) => {console.log(error);});

Additionally, most of the third-party packages we shall look at in the following subsections also use streams.

此外,我们在文章的下面部分章节将会看到许多第三方包也使用流。

Using the csv-parser package

使用csv-parser包

This is a relatively tiny third-party package you can install from the npm package registry. It is capable of parsing and converting CSV files to JSON.

这是一个相对轻量级的第三方包,你可以从npm安装。它可以很轻松的【capable of】解析、转化CSV文件成JSON。

The code below illustrates how to read data from a CSV file and convert it to JSON using csv-parser. We are creating a readable stream using the createReadStream method of the fs module and piping it to the return value of csv-parser():

下面的代码说明了如何使用csv-parser读取数据将CSV文件转化为JSON.我们使用createReadStream方法创建一个可读的流通过管道传输给csv-paser()处理返回值。

const fs = require("fs");const csvParser = require("csv-parser");const result = [];fs.createReadStream("./data.csv").pipe(csvParser()).on("data", (data) => {result.push(data);}).on("end", () => {console.log(result);});

There is an optional configuration object that you can pass to csv-parser. By default, csv-parser treats the first row of your data set as field names(headers).

有一个可选择的配置选项你可以传输给csv-parser。默认情况下,csv-parser将数据集【data set】第一行视为字段字段名(标题)。

If your dataset doesn’t have headers, or successive data points are not comma-delimited, you can pass the information using the optional configuration object. The object has additional configuration keys you can read about in the documentation.

In the example above, we read the CSV data from a file. You can also fetch the data from a server using an HTTP client like Axios or Needle. The code below illustrates how to go about it:

如果数据集没有标题,或者连续的数据点没有逗号分隔【comma-delimited】,你可以使用配置参数向对象传输信息。配置项内的额外选项,你可以在本篇文章中读到。在上面的例子中,我们从文件中读取CSV数据。你也可以使用一些HTTP客户端比如Axios或者Needle通过服务获取数据。下面的代码解释了如何使用获取数据:

const csvParser = require("csv-parser");const needle = require("needle");const result = [];const url = "https://people.sc.fsu.edu/~jburkardt/data/csv/deniro.csv";needle.get(url).pipe(csvParser()).on("data", (data) => {result.push(data);}).on("done", (err) => {if (err) console.log("An error has occurred");else console.log(result);});

You need to first install Needle before executing the code above. The get request method returns a stream that you can pipe to csv-parser(). You can also use another package if Needle isn’t for you.

在你执行上面👆🏻的代码之前你需要安装Needle。使用get方法返回stream传输给csv-parser().如果Needle不适合你,你可以使用另外的包。

The above examples highlight just a tiny fraction of what csv-parser can do. As already mentioned, the implementation of one CSV document may be different from another. Csv-parser has built-in functionalities for handling some of these differences.

上面的实例只是强调了csv-parser一部分【a tiny fraction】功能。前文提到,CSV的实现也许不同于另一个。csv-parser可以处理一些细微的不同。

Though csv-parser was created to work with Node, you can use it in the browser with tools such as Browserify.

尽管csv-parser可以在Node中被使用,你也可以使用工具Browserify,在浏览器使用。

Using the Papa Parse package

使用Papa Parse包

Papa Parse is another package for parsing CSV files in Node. Unlike csv-parser, which works out of the box with Node, Papa Parse was created for the browser. Therefore, it has limited functionalities if you intend to use it in Node.

Papa Parse 是另一个CSV文件解析的包。不想csv-parser在Node中开箱即用【works out of the box】,Papa Parse是为了浏览器被创建出来。

We illustrate how to use Papa Parse to parse CSV files in the example below. As before, we have to use the createReadStream method of the fs module to create a read stream, which we then pipe to the return value of papaparse.parse().

下面的例子🌰说明了如何使用Papa Parse解析CSV文件。和以前一样,使用createReadStream方法创建一个读取流,然后传输给papaparse.parse()解析返回值。

The papaparse.parse function you use for parsing takes an optional second argument. In the example below, we pass the second argument with the header property. If the value of the header property is true, papaparse will treat the first row in our CSV file as column(field) names.

在papaparse.parse方法中第二个参数设置配置。在下面的例子中,我们向第二个参数设置了header属性。如果header属性值是true,papaparse将会处理CSV文件第一行作为column(field) names.

The object has other fields that you can look up in the documentation. Unfortunately, some properties are still limited to the browser and not yet available in Node.

该对象还有其它的字段,你可以在文章中查找。不幸的是,一些属性只能被限定在浏览器使用Node不能被使用。

const fs = require("fs");const Papa = require("papaparse");const results = [];const options = { header: true };fs.createReadStream("data.csv").pipe(Papa.parse(Papa.NODE_STREAM_INPUT, options)).on("data", (data) => {results.push(data);}).on("end", () => {console.log(results);});

Similarly, you can also fetch the CSV dataset as readable streams from a remote server using an HTTP client like Axios or Needle and pipe it to the return value of papa-parse.parse() like before.

类似的,你可以获取CSV数据作为一个可写的文件流通过Axios或者Needle从远端获得。借由papaparse.parse()进行解析。

In the example below, I illustrate how to use Needle to fetch data from a server. It is worth noting that making a network request with one of the HTTP methods like needle.get returns a readable stream:

下面的例子,我解释如何使用Needle从服务端获取数据。值得注意的是,像Needle进行网络请求needle.get可以获取一个可写的文件流:

const needle = require("needle");const Papa = require("papaparse");const results = [];const options = { header: true };const csvDatasetUrl = "https://people.sc.fsu.edu/~jburkardt/data/csv/deniro.csv";needle.get(csvDatasetUrl).pipe(Papa.parse(Papa.NODE_STREAM_INPUT, options)).on("data", (data) => {results.push(data);}).on("end", () => {console.log(results);});

Using the Fast-CSV package

使用Fast-CSV包

This is a flexible third-party package for parsing and formatting CSV data sets that combines @fast-csv/format and @fast-csv/parse packages into a single package. You can use @fast-csv/format and @fast-csv/parse for formatting and parsing CSV datasets, respectively.

这是一个灵活的第三方包为解析与格式化CSV数据,它由@fast-csv/format与@fast-csv/parse两个包组成。可以分别使用@fast-csv/format与@fast-csv/parse格式与解析CSV数据。

The example below illustrates how to a read CSV file and parse it to JSON using Fast-CSV:

下面的代码说明了如何使用Fast-CSV读取CSV文件并转化成JSON。

const fs = require("fs");const fastCsv = require("fast-csv");const options = {objectMode: true,delimiter: ";",quote: null,headers: true,renameHeaders: false,};const data = [];fs.createReadStream("data.csv").pipe(fastCsv.parse(options)).on("error", (error) => {console.log(error);}).on("data", (row) => {data.push(row);}).on("end", (rowCount) => {console.log(rowCount);console.log(data);});

Above, we are passing the optional argument to the fast-csv.parse function. The options object is primarily for handling the variations between CSV files. If you don’t pass it, csv-parser will use the default values. For this illustration, I am using the default values for most options.

上面👆🏻,我们使用可选参数传输给fast-csv.parse方法。这个options对象主要处理CSV文件之间的差异。如果你没有设置csv-parser,将会使用默认的值。在本示例中,我使用了许多options内的默认值。

In most CSV datasets, the first row contains the column headers. By default, Fast-CSV considers the first row to be a data record. You need to set the headers option to true, like in the above example, if the first row in your data set contains the column headers.

在许多CSV数据中,首行常常包含列标题。默认情况下【By default】,Fast-CSV认为首行是一个数据记录。类似于上面的代码,如果在你的数据中首行包含表头,你需要设置headers为true。

Similarly, as we mentioned in the opening section, some CSV files may not be comma-delimited. You can change the default delimiter using the delimiter option as we did in the above example.

相类似的,我们在开头部分【in the opening section】也提到。一些CSV文件并不是逗号分隔,正如我们所写的示例,你可以使用修改delimiter的属性。

Instead of piping the readable stream as we did in the previous example, we can also pass it as an argument to the parseStream function as in the example below:

不像前文示例中提到的管道化可读流,我们也可以将其作为参数传递给parseStream函数,正如下面的例子。

const fs = require("fs");const fastCsv = require("fast-csv");const options = {objectMode: true,delimiter: ",",quote: null,headers: true,renameHeaders: false,};const data = [];const readableStream = fs.createReadStream("data.csv");fastCsv.parseStream(readableStream, options).on("error", (error) => {console.log(error);}).on("data", (row) => {data.push(row);}).on("end", (rowCount) => {console.log(rowCount);console.log(data);});

The functions above are the primary functions you can use for parsing CSV files with Fast-CSV. You can also use the parseFile and parseString functions, but we won’t cover them here. For more about them, you should to read the documentation.

以上的函数是Fast-CSV中主要的解析CSV文件的方法。你也可以使用parseFile与parseString方法,在这里我们并没有提到如何使用。如果想更多了解细节,你需要读取相应的文档。

Conclusion

结论

The Comma Separated Values format is one of the most popular formats for data exchange. CSV datasets consist of simple text files readable to both humans and machines. Despite its popularity, there is no universal standard.

在数据交换中CSV格式是非常流行的。CSV数据集是一个简单的文本文件,非常易于人类和基础读取。尽管它很流行,但是却没有通用的标准。

The unofficial RFC 4180 technical standard attempts to standardize the format, but some subtle differences exist among the different CSV implementations. These differences exist because the CSV format started before the RFC 4180 technical standard came into existence. Therefore, it is common for CSV datasets generated by one application to display incorrectly in another application.

非官方的RFC 4180技术标准尝试统一格式,但是在已经实现的CSV实现中有着细微的差异。这些不同的差异在RFC 4180出现之前就已经出现。因此,常常在一个应用程序生成的CSV数据集,在另一个应用程序显示错误。

You can use the built-in functionalities or third-party packages for reading, writing, and parsing simple CSV datasets in Node. Most of the CSV packages we looked at are flexible enough to handle the subtle differences resulting from the different CSV implementations.

你可以使用内置功能【the built-in functionalities】或者第三方包在Node中读、写和解析CSV数据。我们看到许多CSV包足够灵活的处理不同CSV实现的差异。

原文:https://blog.logrocket.com/complete-guide-csv-files-node-js/