1 Building the base images

1.1 Building the CentOS base image

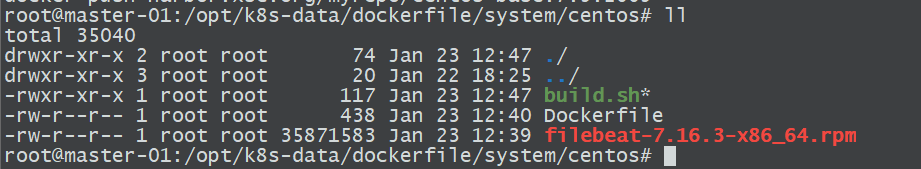

1. Build directory: download the filebeat package into the build directory yourself.

2. Dockerfile

# Custom CentOS base image

FROM centos:7.9.2009

MAINTAINER evn.xiang 505597492@qq.com

ADD filebeat-7.16.3-x86_64.rpm /tmp

RUN yum install -y /tmp/filebeat-7.16.3-x86_64.rpm vim wget tree lrzsz gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop && rm -rf /etc/localtime /tmp/filebeat-7.16.3-x86_64.rpm && ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && useradd nginx -u 2022

3. Build script: run these commands to build and push the image.

docker build -t harbor.xsc.org/myrepo/centos-base:7.9.2009 .

docker push harbor.xsc.org/myrepo/centos-base:7.9.2009
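The build-and-push pair above recurs for every image in this guide. A minimal sketch of a reusable helper follows; the function names image_ref and build_and_push are illustrative and not part of the original scripts. Chaining the two commands with && skips the push when the build fails.

```shell
# image_ref REGISTRY PROJECT NAME TAG
# Prints the full image reference, e.g. harbor.xsc.org/myrepo/centos-base:7.9.2009
image_ref() {
    printf '%s/%s/%s:%s\n' "$1" "$2" "$3" "$4"
}

# build_and_push REGISTRY PROJECT NAME TAG
# Builds from the current directory and pushes only if the build succeeded.
build_and_push() {
    ref=$(image_ref "$1" "$2" "$3" "$4")
    docker build -t "$ref" . && docker push "$ref"
}

# Example (requires docker and a reachable registry):
#   build_and_push harbor.xsc.org myrepo centos-base 7.9.2009
```

Only the definitions run when the file is sourced; the docker commands execute when build_and_push is called.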

1.2 Building the nginx base image

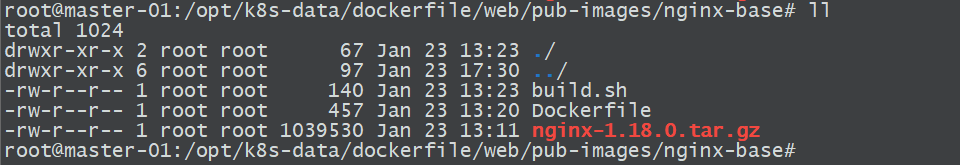

1. Build directory: place the nginx source package nginx-1.18.0.tar.gz in the build directory yourself.

2. Dockerfile

#Build Nginx Base Image

FROM harbor.xsc.org/myrepo/centos-base:7.9.2009

MAINTAINER evn.xiang 505597482@qq.com

RUN yum install -y vim wget tree lrzsz gcc gcc-c++ automake pcre-devel zlib zlib-devel openssl openssl-devel iproute net-tools iotop

ADD nginx-1.18.0.tar.gz /usr/local/src/

RUN cd /usr/local/src/nginx-1.18.0 && ./configure && make && make install && ln -sv /usr/local/nginx/sbin/nginx /usr/sbin/nginx && rm -rf /usr/local/src/nginx-1.18.0.tar.gz

3. Build script

#!/bin/bash

docker build -t harbor.xsc.org/pub-images/nginx-base:v1.18.0 .

sleep 1

docker push harbor.xsc.org/pub-images/nginx-base:v1.18.0

1.3 Building the JDK base image

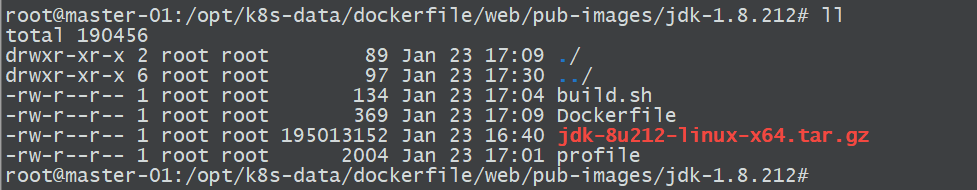

1. Build directory: place the JDK package jdk-8u212-linux-x64.tar.gz in the build directory yourself.

2. Dockerfile

#Build JDK Base image

FROM harbor.xsc.org/myrepo/centos-base:7.9.2009

MAINTAINER evn.xiang "505597482@qq.com"

ADD jdk-8u212-linux-x64.tar.gz /usr/local/src/

RUN ln -sv /usr/local/src/jdk1.8.0_212 /usr/local/jdk

ADD profile /etc/profile

ENV JAVA_HOME /usr/local/jdk

ENV JRE_HOME $JAVA_HOME/jre

ENV CLASSPATH $JAVA_HOME/lib/:$JRE_HOME/lib/

ENV PATH $PATH:$JAVA_HOME/bin

3. The profile file

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`/usr/bin/id -u`

UID=`/usr/bin/id -ru`

fi

USER="`/usr/bin/id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`/usr/bin/id -gn`" = "`/usr/bin/id -un`" ]; then

umask 002

else

umask 022

fi

for i in /etc/profile.d/*.sh /etc/profile.d/sh.local ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null

fi

fi

done

unset i

unset -f pathmunge

export PATH=/app/mysql/mysql/bin/:$PATH

export JAVA_HOME=/usr/local/jdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib/:$JRE_HOME/lib/

export PATH=$PATH:$JAVA_HOME/bin
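The pathmunge helper in the profile above adds a directory to PATH only when it is not already present, either at the front or (with the second argument "after") at the end. A small demonstration follows; it runs in a subshell so the caller's PATH is untouched, and the demo function and sample directories are illustrative only.

```shell
# Same logic as the pathmunge function in /etc/profile.
pathmunge () {
    case ":${PATH}:" in
        *:"$1":*)
            ;;                      # already present: do nothing
        *)
            if [ "${2:-}" = "after" ]; then
                PATH=$PATH:$1       # append
            else
                PATH=$1:$PATH       # prepend
            fi
            ;;
    esac
}

# demo: the function body is a subshell, so PATH changes stay local to it.
demo() (
    PATH=/usr/bin:/bin
    pathmunge /usr/local/jdk/bin after   # appended
    pathmunge /usr/local/jdk/bin after   # second call is a no-op
    pathmunge /usr/sbin                  # prepended
    printf '%s\n' "$PATH"
)
```

Calling demo prints /usr/sbin:/usr/bin:/bin:/usr/local/jdk/bin, which shows that the repeated call does not duplicate the JDK entry.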

4. Build script

#!/bin/bash

docker build -t harbor.xsc.org/pub-images/jdk-base:v8.212 .

sleep 1

docker push harbor.xsc.org/pub-images/jdk-base:v8.212

1.4 Building the Tomcat base image

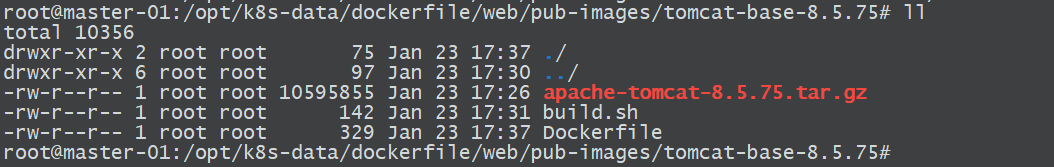

1. Build directory: place the Tomcat package apache-tomcat-8.5.75.tar.gz in the build directory yourself.

2. Dockerfile

# Tomcat 8.5.75 base image

FROM harbor.xsc.org/pub-images/jdk-base:v8.212

MAINTAINER evn.xiang "505597482@qq.com"

RUN mkdir /apps /data/tomcat/webapps /data/tomcat/logs -pv

ADD apache-tomcat-8.5.75.tar.gz /apps

RUN useradd tomcat -u 2023 && ln -sv /apps/apache-tomcat-8.5.75 /apps/tomcat && chown -R tomcat.tomcat /apps /data

3. Build script

#!/bin/bash

docker build -t harbor.xsc.org/pub-images/tomcat-base:v8.5.75 .

sleep 3

docker push harbor.xsc.org/pub-images/tomcat-base:v8.5.75

2 Deploying Nginx + Tomcat + NFS

2.1 Building and deploying the nginx application image

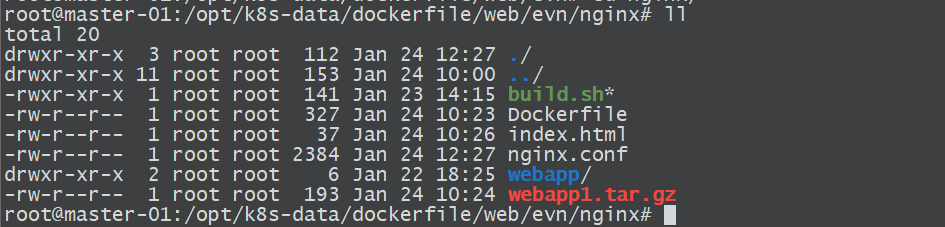

1. Build directory: index.html and webapp1.tar.gz are only test pages; define your own as needed.

2. The nginx.conf configuration file

user nginx;

worker_processes auto;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

daemon off;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

log_format main '$remote_addr - $remote_user [$time_local] "$request" '

'$status $body_bytes_sent "$http_referer" '

'"$http_user_agent" "$http_x_forwarded_for"';

access_log logs/access.log main;

sendfile on;

tcp_nopush on;

tcp_nodelay on;

keepalive_timeout 65;

types_hash_max_size 4096;

charset utf-8,gbk;

# Load modular configuration files from the /etc/nginx/conf.d directory.

# See http://nginx.org/en/docs/ngx_core_module.html#include

# for more information.

upstream tomcat_webserver {

server web-tomcat-service.default.svc.xsc.local:8080;

}

server {

listen 80;

server_name localhost;

location / {

root /usr/local/nginx/html;

index index.html index.htm;

}

location /webapp {

root /usr/local/nginx/html;

index index.html index.htm;

}

location /myapp/ {

proxy_pass http://tomcat_webserver;

proxy_set_header Host $host;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header X-Real-IP $remote_addr;

}

error_page 404 /404.html;

location = /404.html {

}

error_page 500 502 503 504 /50x.html;

location = /50x.html {

}

}

}

3. Dockerfile

#Nginx 1.18.0 server

FROM harbor.xsc.org/pub-images/nginx-base:v1.18.0

ADD nginx.conf /usr/local/nginx/conf/nginx.conf

ADD webapp1.tar.gz /usr/local/nginx/html/webapp

ADD index.html /usr/local/nginx/html/index.html

# Mount points for the static pages

RUN mkdir -p /usr/local/nginx/html/webapp/{static,images}

EXPOSE 80 443

CMD ["nginx"]

4. Build script

#!/bin/bash

TAG=$1

docker build -t harbor.xsc.org/webapp1/nginx-web1:${TAG} .

sleep 1

docker push harbor.xsc.org/webapp1/nginx-web1:${TAG}
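The script above takes the image tag from its first argument. When it is run without one, ${TAG} expands to an empty string and the image reference ends in a bare colon. A minimal sketch of a guard follows, assuming Docker's documented tag grammar (a letter, digit, or underscore, followed by up to 127 letters, digits, underscores, periods, or hyphens); the function name valid_tag is hypothetical.

```shell
# valid_tag TAG: succeeds when TAG matches Docker's tag grammar.
valid_tag() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
}

# In a build script this could be used as:
#   TAG=${1:-}
#   valid_tag "$TAG" || { echo "usage: $0 <tag>" >&2; exit 1; }
```

With this check in place, a missing or malformed tag stops the script before docker build is invoked.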

5. Write the YAML manifest and deploy it to Kubernetes

apiVersion: apps/v1

kind: Deployment

metadata:

name: web-nginx

labels:

app: web-nginx

spec:

replicas: 1

selector:

matchLabels:

app: web-nginx

template:

metadata:

labels:

app: web-nginx

spec:

containers:

- name: web-nginx

image: harbor.xsc.org/webapp1/nginx-web1:v1

imagePullPolicy: Always

ports:

- containerPort: 80

protocol: TCP

name: http

- containerPort: 443

protocol: TCP

name: https

env:

- name: "account"

value: "admin"

- name: "password"

value: "abc123.."

volumeMounts:

- mountPath: /usr/local/nginx/html/webapp/static

name: staticdir-volume

- mountPath: /usr/local/nginx/html/webapp/images

name: imagesdir-volume

volumes:

- name: staticdir-volume

nfs:

server: 10.0.0.253

path: /k8snfs/data/nginx/static/

- name: imagesdir-volume

nfs:

server: 10.0.0.253

path: /k8snfs/data/nginx/images/

---

apiVersion: v1

kind: Service

metadata:

name: web-nginx-service

spec:

type: NodePort

selector:

app: web-nginx

ports:

- name: http

protocol: TCP

port: 80

targetPort: 80

nodePort: 30008

- name: https

protocol: TCP

port: 443

targetPort: 443

nodePort: 30009

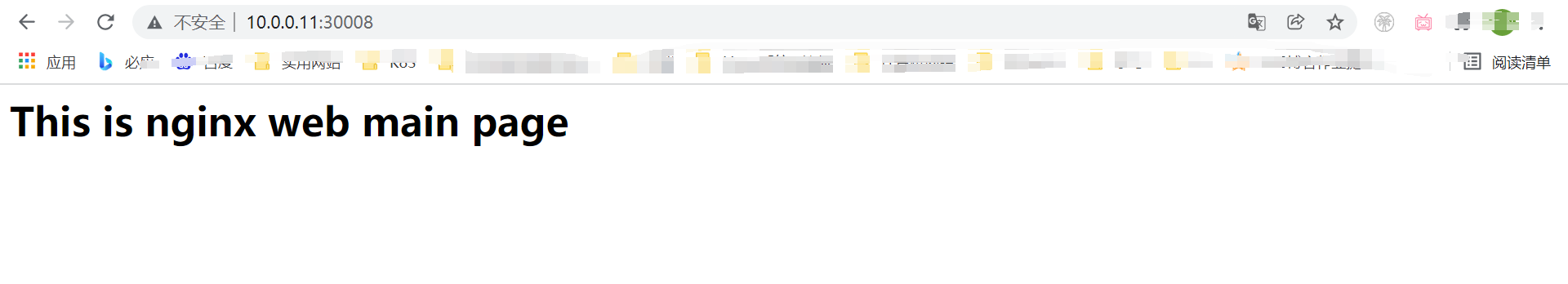

6. Deploy and access: # kubectl apply -f webapp1.yaml

2.2 Building and deploying the Tomcat application image

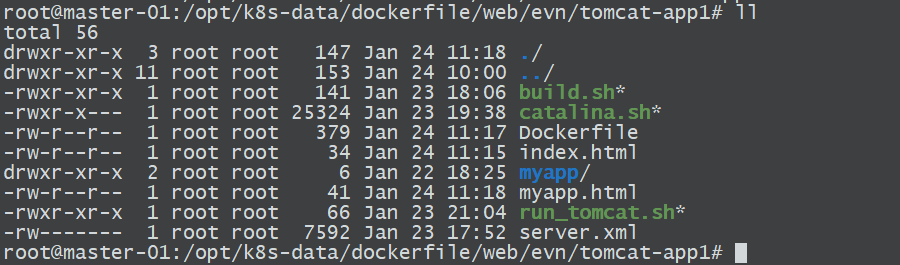

1. Build directory: index.html and myapp.html are only test pages; define your own as needed.

2. Dockerfile

#tomcat web1

FROM harbor.xsc.org/pub-images/tomcat-base:v8.5.75

ADD index.html /data/tomcat/webapps/

ADD myapp.html /data/tomcat/webapps/myapp/

ADD server.xml /apps/tomcat/conf/server.xml

ADD catalina.sh /apps/tomcat/bin/catalina.sh

ADD run_tomcat.sh /apps/tomcat/bin/run_tomcat.sh

RUN chown -R tomcat.tomcat /data/ /apps/

EXPOSE 8080 8443

CMD ["/apps/tomcat/bin/run_tomcat.sh"]

3. server.xml: the only change from the stock file is appBase=/data/tomcat/webapps

<?xml version="1.0" encoding="UTF-8"?>

<!--

Licensed to the Apache Software Foundation (ASF) under one or more

contributor license agreements. See the NOTICE file distributed with

this work for additional information regarding copyright ownership.

The ASF licenses this file to You under the Apache License, Version 2.0

(the "License"); you may not use this file except in compliance with

the License. You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

-->

<!-- Note: A "Server" is not itself a "Container", so you may not

define subcomponents such as "Valves" at this level.

Documentation at /docs/config/server.html

-->

<Server port="8005" shutdown="SHUTDOWN">

<Listener className="org.apache.catalina.startup.VersionLoggerListener" />

<!-- Security listener. Documentation at /docs/config/listeners.html

<Listener className="org.apache.catalina.security.SecurityListener" />

-->

<!-- APR library loader. Documentation at /docs/apr.html -->

<Listener className="org.apache.catalina.core.AprLifecycleListener" SSLEngine="on" />

<!-- Prevent memory leaks due to use of particular java/javax APIs-->

<Listener className="org.apache.catalina.core.JreMemoryLeakPreventionListener" />

<Listener className="org.apache.catalina.mbeans.GlobalResourcesLifecycleListener" />

<Listener className="org.apache.catalina.core.ThreadLocalLeakPreventionListener" />

<!-- Global JNDI resources

Documentation at /docs/jndi-resources-howto.html

-->

<GlobalNamingResources>

<!-- Editable user database that can also be used by

UserDatabaseRealm to authenticate users

-->

<Resource name="UserDatabase" auth="Container"

type="org.apache.catalina.UserDatabase"

description="User database that can be updated and saved"

factory="org.apache.catalina.users.MemoryUserDatabaseFactory"

pathname="conf/tomcat-users.xml" />

</GlobalNamingResources>

<!-- A "Service" is a collection of one or more "Connectors" that share

a single "Container" Note: A "Service" is not itself a "Container",

so you may not define subcomponents such as "Valves" at this level.

Documentation at /docs/config/service.html

-->

<Service name="Catalina">

<!--The connectors can use a shared executor, you can define one or more named thread pools-->

<!--

<Executor name="tomcatThreadPool" namePrefix="catalina-exec-"

maxThreads="150" minSpareThreads="4"/>

-->

<!-- A "Connector" represents an endpoint by which requests are received

and responses are returned. Documentation at :

Java HTTP Connector: /docs/config/http.html

Java AJP Connector: /docs/config/ajp.html

APR (HTTP/AJP) Connector: /docs/apr.html

Define a non-SSL/TLS HTTP/1.1 Connector on port 8080

-->

<Connector port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

<!-- A "Connector" using the shared thread pool-->

<!--

<Connector executor="tomcatThreadPool"

port="8080" protocol="HTTP/1.1"

connectionTimeout="20000"

redirectPort="8443" />

-->

<!-- Define an SSL/TLS HTTP/1.1 Connector on port 8443

This connector uses the NIO implementation. The default

SSLImplementation will depend on the presence of the APR/native

library and the useOpenSSL attribute of the AprLifecycleListener.

Either JSSE or OpenSSL style configuration may be used regardless of

the SSLImplementation selected. JSSE style configuration is used below.

-->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11NioProtocol"

maxThreads="150" SSLEnabled="true">

<SSLHostConfig>

<Certificate certificateKeystoreFile="conf/localhost-rsa.jks"

type="RSA" />

</SSLHostConfig>

</Connector>

-->

<!-- Define an SSL/TLS HTTP/1.1 Connector on port 8443 with HTTP/2

This connector uses the APR/native implementation which always uses

OpenSSL for TLS.

Either JSSE or OpenSSL style configuration may be used. OpenSSL style

configuration is used below.

-->

<!--

<Connector port="8443" protocol="org.apache.coyote.http11.Http11AprProtocol"

maxThreads="150" SSLEnabled="true" >

<UpgradeProtocol className="org.apache.coyote.http2.Http2Protocol" />

<SSLHostConfig>

<Certificate certificateKeyFile="conf/localhost-rsa-key.pem"

certificateFile="conf/localhost-rsa-cert.pem"

certificateChainFile="conf/localhost-rsa-chain.pem"

type="RSA" />

</SSLHostConfig>

</Connector>

-->

<!-- Define an AJP 1.3 Connector on port 8009 -->

<!--

<Connector protocol="AJP/1.3"

address="::1"

port="8009"

redirectPort="8443" />

-->

<!-- An Engine represents the entry point (within Catalina) that processes

every request. The Engine implementation for Tomcat stand alone

analyzes the HTTP headers included with the request, and passes them

on to the appropriate Host (virtual host).

Documentation at /docs/config/engine.html -->

<!-- You should set jvmRoute to support load-balancing via AJP ie :

<Engine name="Catalina" defaultHost="localhost" jvmRoute="jvm1">

-->

<Engine name="Catalina" defaultHost="localhost">

<!--For clustering, please take a look at documentation at:

/docs/cluster-howto.html (simple how to)

/docs/config/cluster.html (reference documentation) -->

<!--

<Cluster className="org.apache.catalina.ha.tcp.SimpleTcpCluster"/>

-->

<!-- Use the LockOutRealm to prevent attempts to guess user passwords

via a brute-force attack -->

<Realm className="org.apache.catalina.realm.LockOutRealm">

<!-- This Realm uses the UserDatabase configured in the global JNDI

resources under the key "UserDatabase". Any edits

that are performed against this UserDatabase are immediately

available for use by the Realm. -->

<Realm className="org.apache.catalina.realm.UserDatabaseRealm"

resourceName="UserDatabase"/>

</Realm>

<Host name="localhost" appBase="/data/tomcat/webapps"

unpackWARs="true" autoDeploy="true">

<!-- SingleSignOn valve, share authentication between web applications

Documentation at: /docs/config/valve.html -->

<!--

<Valve className="org.apache.catalina.authenticator.SingleSignOn" />

-->

<!-- Access log processes all example.

Documentation at: /docs/config/valve.html

Note: The pattern used is equivalent to using pattern="common" -->

<Valve className="org.apache.catalina.valves.AccessLogValve" directory="logs"

prefix="localhost_access_log" suffix=".txt"

pattern="%h %l %u %t &quot;%r&quot; %s %b" />

</Host>

</Engine>

</Service>

</Server>

4. catalina.sh: add one line to the stock catalina.sh

JAVA_HOME="/usr/local/jdk"

5. run_tomcat.sh

#!/bin/bash

/apps/tomcat/bin/catalina.sh start

tail -f /etc/hosts
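The script above starts Tomcat in the background and then uses tail -f /etc/hosts only to keep the container's main process alive. An alternative sketch follows, not what this guide deploys: catalina.sh also accepts run, which keeps Tomcat in the foreground, so Tomcat itself rather than tail is the process the container runtime monitors. The CATALINA_SH variable and the start_tomcat function are illustrative names.

```shell
# Path to catalina.sh inside the image; overridable only so the function
# can be exercised outside the container.
CATALINA_SH=${CATALINA_SH:-/apps/tomcat/bin/catalina.sh}

# start_tomcat: replace the current shell with Tomcat running in the foreground.
start_tomcat() {
    exec "$CATALINA_SH" run
}

# As the last line of run_tomcat.sh:
#   start_tomcat
```

With this variant the container exits when Tomcat exits, which lets Kubernetes restart the pod instead of leaving a container that only runs tail.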

6. Build script

#!/bin/bash

TAG=$1

docker build -t harbor.xsc.org/webapp1/tomcat-app1:${TAG} .

sleep 3

docker push harbor.xsc.org/webapp1/tomcat-app1:${TAG}

7. Write the YAML manifest and deploy it to Kubernetes

apiVersion: apps/v1

kind: Deployment

metadata:

name: web-tomcat

labels:

app: web-tomcat

spec:

replicas: 1

selector:

matchLabels:

app: web-tomcat

template:

metadata:

labels:

app: web-tomcat

spec:

containers:

- name: web-tomcat

image: harbor.xsc.org/webapp1/tomcat-app1:v1

imagePullPolicy: Always

ports:

- containerPort: 8080

protocol: TCP

name: tomcat

env:

- name: "account"

value: "admin"

- name: "password"

value: "abc123.."

volumeMounts:

- mountPath: /data/tomcat/webapps/static

name: staticdir-volume

- mountPath: /data/tomcat/webapps/images

name: imagesdir-volume

resources:

requests:

cpu: 300m

memory: 512Mi

limits:

cpu: 500m

memory: 1024Mi

volumes:

- name: staticdir-volume

nfs:

server: 10.0.0.253

path: /k8snfs/data/nginx/static/

- name: imagesdir-volume

nfs:

server: 10.0.0.253

path: /k8snfs/data/nginx/images/

---

apiVersion: v1

kind: Service

metadata:

name: web-tomcat-service

spec:

type: ClusterIP

selector:

app: web-tomcat

ports:

- name: tomcat

protocol: TCP

port: 8080

targetPort: 8080

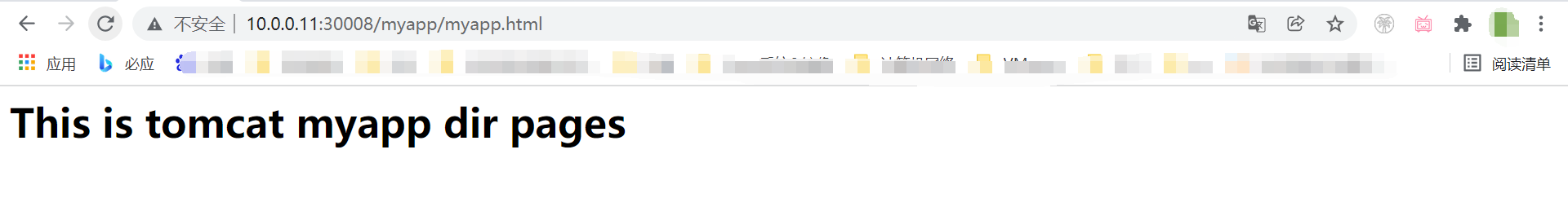

8. Deploy and access: # kubectl apply -f web-tomcat.yaml. A request to 10.0.0.11:30008/myapp/myapp.html matches the nginx location /myapp/ block, is proxied to the Tomcat service, and is answered by Tomcat.

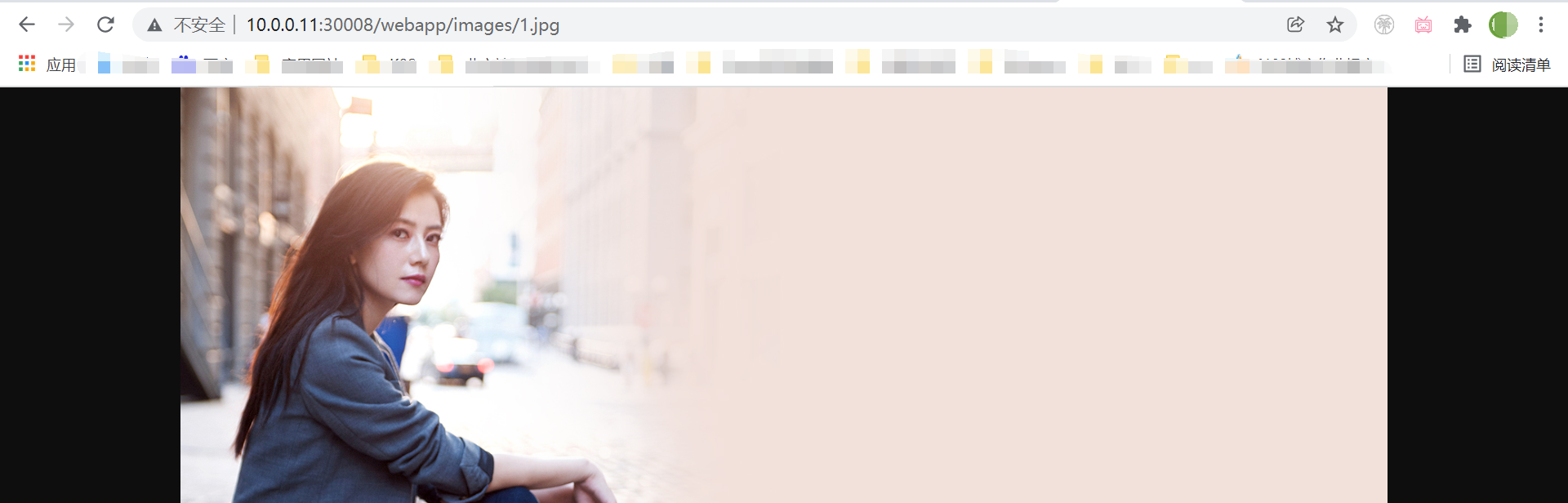
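The request flow described in step 8 can be checked from any machine that reaches the node. A minimal smoke-test sketch follows, assuming the node address 10.0.0.11 and NodePort 30008 used in this guide; the function names report and smoke are hypothetical.

```shell
NODE=${NODE:-10.0.0.11:30008}   # node IP and NodePort of web-nginx-service

# report CODE PATH: prints a verdict and fails unless CODE is 200.
report() {
    if [ "$1" = "200" ]; then
        echo "ok: $2"
    else
        echo "FAIL ($1): $2"
        return 1
    fi
}

# smoke: request one page served by nginx itself and one proxied to Tomcat.
smoke() {
    for path in / /myapp/myapp.html; do
        code=$(curl -s -o /dev/null -w '%{http_code}' "http://${NODE}${path}")
        report "$code" "$path" || return 1
    done
}

# Run against a live cluster:
#   smoke
```

A 502 on /myapp/myapp.html with a 200 on / would point at the Tomcat Service or its DNS name in the upstream block, not at nginx itself.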

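2.3 Sharing an NFS directory between nginx and Tomcat

Enter the NFS-mounted directory inside the Tomcat application pod and download an image there; the image is then reachable through the nginx front end.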

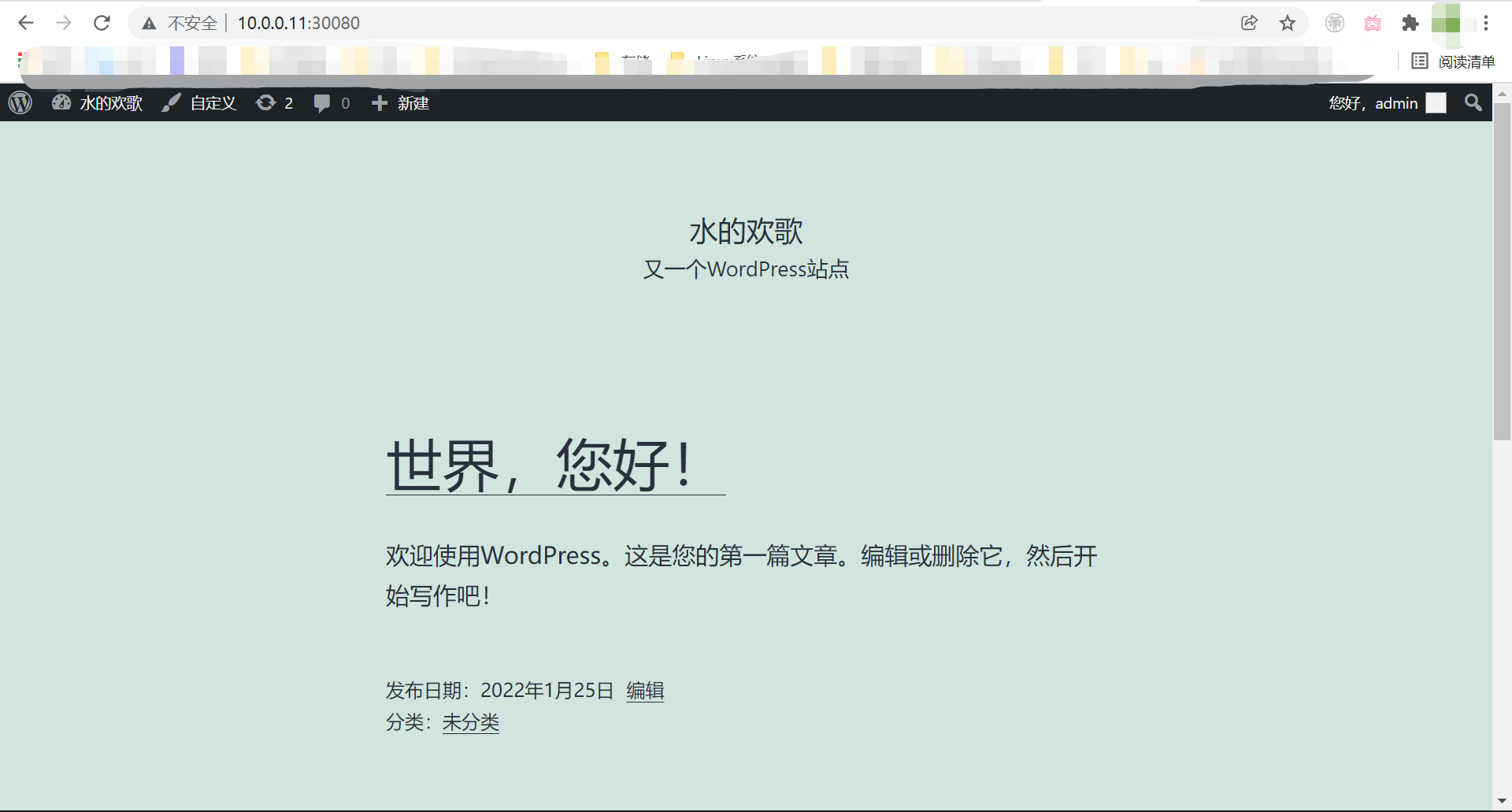
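Both Deployments mount the same NFS export (/k8snfs/data/nginx/images): Tomcat at /data/tomcat/webapps/images and nginx at /usr/local/nginx/html/webapp/images. A file written on the Tomcat side therefore appears under /webapp/images/ on the nginx side. The sketch below only builds that URL; the function name shared_image_url and the file name in the comments are hypothetical.

```shell
NODE=${NODE:-10.0.0.11:30008}   # node IP and NodePort of web-nginx-service

# shared_image_url FILE: URL under which nginx serves a file placed in the
# shared images directory.
shared_image_url() {
    printf 'http://%s/webapp/images/%s\n' "$NODE" "$1"
}

# Against a live cluster (example.png is a placeholder file name):
#   kubectl exec deploy/web-tomcat -- wget -P /data/tomcat/webapps/images <image-url>
#   curl -I "$(shared_image_url example.png)"
```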

3 Deploying ZooKeeper

Build files: https://pan.baidu.com/s/1Ta0vch_Je9gjiv8MYYlXBA (extraction code: y6f0)

1. Build the ZooKeeper image

#!/bin/bash

TAG=$1

docker build -t harbor.xsc.org/myrepo/zookeeper:${TAG} .

sleep 1

docker push harbor.xsc.org/myrepo/zookeeper:${TAG}

2. Create the PVs

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-1

namespace: default

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.253

path: /k8snfs/data/zookeeper/zkpv1

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-2

namespace: default

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.253

path: /k8snfs/data/zookeeper/zkpv2

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: zookeeper-pv-3

namespace: default

spec:

capacity:

storage: 20Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.253

path: /k8snfs/data/zookeeper/zkpv3

3. Create the PVCs

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-1

namespace: default

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-1

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-2

namespace: default

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-2

resources:

requests:

storage: 10Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: zookeeper-pvc-3

namespace: default

spec:

accessModes:

- ReadWriteOnce

volumeName: zookeeper-pv-3

resources:

requests:

storage: 10Gi

4. Deploy ZooKeeper

apiVersion: v1

kind: Service

metadata:

name: zookeeper

namespace: default

spec:

ports:

- name: client

port: 2181

selector:

app: zookeeper

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper1

namespace: default

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32181

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "1"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper2

namespace: default

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32182

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "2"

---

apiVersion: v1

kind: Service

metadata:

name: zookeeper3

namespace: default

spec:

type: NodePort

ports:

- name: client

port: 2181

nodePort: 32183

- name: followers

port: 2888

- name: election

port: 3888

selector:

app: zookeeper

server-id: "3"

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper1

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "1"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.xsc.org/myrepo/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "1"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-1

volumes:

- name: zookeeper-pvc-1

persistentVolumeClaim:

claimName: zookeeper-pvc-1

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper2

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "2"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.xsc.org/myrepo/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "2"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-2

volumes:

- name: zookeeper-pvc-2

persistentVolumeClaim:

claimName: zookeeper-pvc-2

---

kind: Deployment

#apiVersion: extensions/v1beta1

apiVersion: apps/v1

metadata:

name: zookeeper3

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: zookeeper

template:

metadata:

labels:

app: zookeeper

server-id: "3"

spec:

volumes:

- name: data

emptyDir: {}

- name: wal

emptyDir:

medium: Memory

containers:

- name: server

image: harbor.xsc.org/myrepo/zookeeper:v3.4.14

imagePullPolicy: Always

env:

- name: MYID

value: "3"

- name: SERVERS

value: "zookeeper1,zookeeper2,zookeeper3"

- name: JVMFLAGS

value: "-Xmx2G"

ports:

- containerPort: 2181

- containerPort: 2888

- containerPort: 3888

volumeMounts:

- mountPath: "/zookeeper/data"

name: zookeeper-pvc-3

volumes:

- name: zookeeper-pvc-3

persistentVolumeClaim:

claimName: zookeeper-pvc-3
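Each Deployment above passes MYID and SERVERS to the container. The image's entrypoint is not shown in this guide; the sketch below assumes it turns the comma-separated SERVERS list into the server.N=host:2888:3888 lines that zoo.cfg expects, using the follower and election ports exposed by the Services. The function name gen_servers is hypothetical.

```shell
# gen_servers "host1,host2,...": prints one zoo.cfg server line per host,
# numbering the hosts from 1 in list order.
gen_servers() {
    i=1
    old_ifs=$IFS
    IFS=,
    for host in $1; do
        printf 'server.%d=%s:2888:3888\n' "$i" "$host"
        i=$((i + 1))
    done
    IFS=$old_ifs
}

# Example:
#   gen_servers "zookeeper1,zookeeper2,zookeeper3" >> zoo.cfg
```

Under this assumption the MYID value of each pod must match the N of its own server line, which is why each Deployment sets a different MYID and has its own Service name.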

5. Check the deployed resources

root@master-01:# kubectl get pv,pvc,pod

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/jenkins-datadir-pv 50Gi RWO Retain Bound default/jenkins-datadir-pvc 16h

persistentvolume/jenkins-rootdir-pv 50Gi RWO Retain Bound default/jenkins-rootdir-pvc 16h

persistentvolume/zookeeper-pv-1 20Gi RWO Retain Bound default/zookeeper-pvc-1 19h

persistentvolume/zookeeper-pv-2 20Gi RWO Retain Bound default/zookeeper-pvc-2 19h

persistentvolume/zookeeper-pv-3 20Gi RWO Retain Bound default/zookeeper-pvc-3 19h

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/jenkins-datadir-pvc Bound jenkins-datadir-pv 50Gi RWO 16h

persistentvolumeclaim/jenkins-rootdir-pvc Bound jenkins-rootdir-pv 50Gi RWO 16h

persistentvolumeclaim/zookeeper-pvc-1 Bound zookeeper-pv-1 20Gi RWO 19h

persistentvolumeclaim/zookeeper-pvc-2 Bound zookeeper-pv-2 20Gi RWO 19h

persistentvolumeclaim/zookeeper-pvc-3 Bound zookeeper-pv-3 20Gi RWO 19h

NAME READY STATUS RESTARTS AGE

pod/jenkins-deploy-77789d6b87-wp57q 1/1 Running 1 (59m ago) 16h

pod/zookeeper1-d9df54d8f-g6g4q 1/1 Running 1 (59m ago) 19h

pod/zookeeper2-7595c9979b-dcqb7 1/1 Running 1 (59m ago) 19h

pod/zookeeper3-96b5459d7-s7k57 1/1 Running 1 (59m ago) 19h

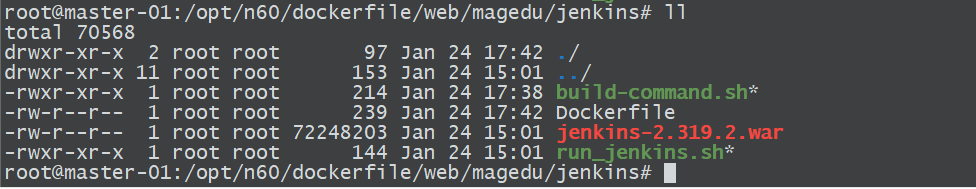

4 Deploying Jenkins

1. Build files: https://pan.baidu.com/s/1J3ZzrWDXv8i5rmpPUHbbsg (extraction code: gacw)

2. Dockerfile

#Jenkins Version 2.319.2

FROM harbor.xsc.org/pub-images/jdk-base:v8.212

MAINTAINER evn.xiang 505597482@qq.com

ADD jenkins-2.319.2.war /apps/jenkins/jenkins.war

ADD run_jenkins.sh /usr/bin/

EXPOSE 8080

CMD ["/usr/bin/run_jenkins.sh"]

3. Run script (run_jenkins.sh)

#!/bin/bash

cd /apps/jenkins && java -server -Xms1024m -Xmx1024m -Xss512k -jar jenkins.war --webroot=/apps/jenkins/jenkins-data --httpPort=8080

4. Build script

#!/bin/bash

docker build -t harbor.xsc.org/myrepo/jenkins:v2.319.2 .

sleep 1

docker push harbor.xsc.org/myrepo/jenkins:v2.319.2

5. Create the PVs

apiVersion: v1

kind: PersistentVolume

metadata:

name: jenkins-datadir-pv

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.253

path: /k8snfs/data/jenkins/datadir

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: jenkins-rootdir-pv

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

server: 10.0.0.253

path: /k8snfs/data/jenkins/rootdir

6. Create the PVCs

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: jenkins-datadir-pvc

namespace: default

spec:

volumeName: jenkins-datadir-pv

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 50Gi

---

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

name: jenkins-rootdir-pvc

namespace: default

spec:

volumeName: jenkins-rootdir-pv

accessModes:

- ReadWriteOnce

resources:

requests:

storage: 50Gi

7. Deploy Jenkins

kind: Deployment

apiVersion: apps/v1

metadata:

labels:

app: jenkins-deploy

name: jenkins-deploy

namespace: default

spec:

replicas: 1

selector:

matchLabels:

app: jenkins

template:

metadata:

labels:

app: jenkins

spec:

containers:

- name: jenkins-container

image: harbor.xsc.org/myrepo/jenkins:v2.319.2

#imagePullPolicy: IfNotPresent

imagePullPolicy: Always

ports:

- containerPort: 8080

protocol: TCP

name: http

volumeMounts:

- mountPath: "/apps/jenkins/jenkins-data/"

name: jenkins-datadir

- mountPath: "/root/.jenkins"

name: jenkins-rootdir

volumes:

- name: jenkins-datadir

persistentVolumeClaim:

claimName: jenkins-datadir-pvc

- name: jenkins-rootdir

persistentVolumeClaim:

claimName: jenkins-rootdir-pvc

---

kind: Service

apiVersion: v1

metadata:

labels:

app: jenkins-svc

name: jenkins-service

namespace: default

spec:

type: NodePort

ports:

- name: http

port: 80

protocol: TCP

targetPort: 8080

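# 38080 is outside the default NodePort range (30000-32767); the kube-apiserver must run with a wider --service-node-port-range

nodePort: 38080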

selector:

app: jenkins

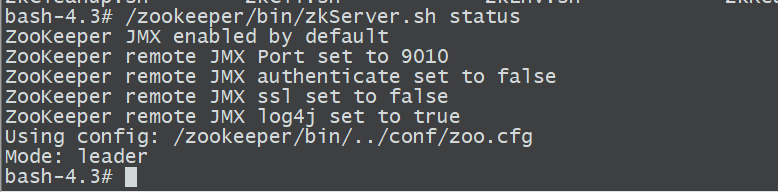

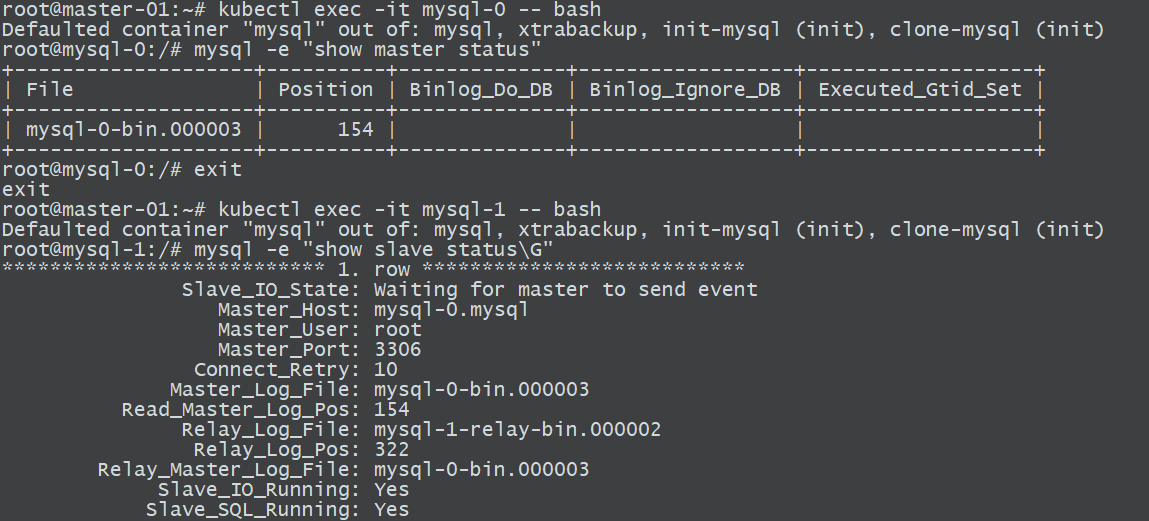

5 Deploying MySQL master-slave replication

1. Pull the xtrabackup and mysql images and push them to the local Harbor registry

docker pull registry.cn-hangzhou.aliyuncs.com/hxpdocker/xtrabackup:1.

docker tag registry.cn-hangzhou.aliyuncs.com/hxpdocker/xtrabackup:1.0 harbor.xsc.org/myrepo/xtrabackup:1.0

docker push harbor.xsc.org/myrepo/xtrabackup:1.0

docker pull mysql:5.7

docker tag mysql:5.7 harbor.xsc.org/myrepo/mysql:5.7

docker push harbor.xsc.org/myrepo/mysql:5.7

2. Create the PVs

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-1

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /k8snfs/data/mysql/mysqlpv1

server: 10.0.0.253

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-2

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /k8snfs/data/mysql/mysqlpv2

server: 10.0.0.253

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-3

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /k8snfs/data/mysql/mysqlpv3

server: 10.0.0.253

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-4

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /k8snfs/data/mysql/mysqlpv4

server: 10.0.0.253

---

apiVersion: v1

kind: PersistentVolume

metadata:

name: mysql-datadir-5

namespace: default

spec:

capacity:

storage: 50Gi

accessModes:

- ReadWriteOnce

nfs:

path: /k8snfs/data/mysql/mysqlpv5

server: 10.0.0.253
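Each PersistentVolume above points at a directory on the NFS server 10.0.0.253, and a pod can only mount the volume if that directory already exists in the export. A minimal sketch for creating the five MySQL directories follows; the function name pv_dirs is hypothetical.

```shell
# pv_dirs BASE PREFIX COUNT: prints BASE/PREFIX1 .. BASE/PREFIXn, one per line.
pv_dirs() {
    n=1
    while [ "$n" -le "$3" ]; do
        printf '%s/%s%d\n' "$1" "$2" "$n"
        n=$((n + 1))
    done
}

# On the NFS server, as a user allowed to write under /k8snfs:
#   pv_dirs /k8snfs/data/mysql mysqlpv 5 | xargs mkdir -p
```

The same helper covers the ZooKeeper volumes with pv_dirs /k8snfs/data/zookeeper zkpv 3.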

3. Configuration file (ConfigMap)

apiVersion: v1

kind: ConfigMap

metadata:

name: mysql

namespace: default

labels:

app: mysql

data:

master.cnf: |

# Apply this config only on the master.

[mysqld]

log-bin

log_bin_trust_function_creators=1

lower_case_table_names=1

slave.cnf: |

# Apply this config only on slaves.

[mysqld]

super-read-only

log_bin_trust_function_creators=1

4. Service manifest

# Headless service for stable DNS entries of StatefulSet members.

apiVersion: v1

kind: Service

metadata:

namespace: default

name: mysql

labels:

app: mysql

spec:

ports:

- name: mysql

port: 3306

clusterIP: None

selector:

app: mysql

---

# Client service for connecting to any MySQL instance for reads.

# For writes, you must instead connect to the master: mysql-0.mysql.

apiVersion: v1

kind: Service

metadata:

name: mysql-read

namespace: default

labels:

app: mysql

spec:

ports:

- name: mysql

port: 3306

selector:

app: mysql

5. Deploy MySQL

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: mysql

namespace: default

spec:

selector:

matchLabels:

app: mysql

serviceName: mysql

replicas: 2

template:

metadata:

labels:

app: mysql

spec:

initContainers:

- name: init-mysql

image: harbor.xsc.org/myrepo/mysql:5.7

command:

- bash

- "-c"

- |

set -ex

# Generate mysql server-id from pod ordinal index.

[[ `hostname` =~ -([0-9]+)$ ]] || exit 1

ordinal=${BASH_REMATCH[1]}

echo [mysqld] > /mnt/conf.d/server-id.cnf

# Add an offset to avoid reserved server-id=0 value.

echo server-id=$((100 + $ordinal)) >> /mnt/conf.d/server-id.cnf

# Copy appropriate conf.d files from config-map to emptyDir.

if [[ $ordinal -eq 0 ]]; then

cp /mnt/config-map/master.cnf /mnt/conf.d/

else

cp /mnt/config-map/slave.cnf /mnt/conf.d/

fi

volumeMounts:

- name: conf

mountPath: /mnt/conf.d

- name: config-map

mountPath: /mnt/config-map

- name: clone-mysql

image: harbor.xsc.org/myrepo/xtrabackup:1.0

command:

- bash

- "-c"

- |

set -ex

# Skip the clone if data already exists.

[[ -d /var/lib/mysql/mysql ]] && exit 0

# Skip the clone on master (ordinal index 0).

[[ `hostname` =~ -([0-9]+)$ ]] || exit 1

ordinal=${BASH_REMATCH[1]}

[[ $ordinal -eq 0 ]] && exit 0

# Clone data from previous peer.

ncat --recv-only mysql-$(($ordinal-1)).mysql 3307 | xbstream -x -C /var/lib/mysql

# Prepare the backup.

xtrabackup --prepare --target-dir=/var/lib/mysql

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

containers:

- name: mysql

image: harbor.xsc.org/myrepo/mysql:5.7

env:

- name: MYSQL_ALLOW_EMPTY_PASSWORD

value: "1"

ports:

- name: mysql

containerPort: 3306

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

resources:

requests:

cpu: 500m

memory: 1Gi

livenessProbe:

exec:

command: ["mysqladmin", "ping"]

initialDelaySeconds: 30

periodSeconds: 10

timeoutSeconds: 5

readinessProbe:

exec:

# Check we can execute queries over TCP (skip-networking is off).

command: ["mysql", "-h", "127.0.0.1", "-e", "SELECT 1"]

initialDelaySeconds: 5

periodSeconds: 2

timeoutSeconds: 1

- name: xtrabackup

image: harbor.xsc.org/myrepo/xtrabackup:1.0

ports:

- name: xtrabackup

containerPort: 3307

command:

- bash

- "-c"

- |

set -ex

cd /var/lib/mysql

# Determine binlog position of cloned data, if any.

if [[ -f xtrabackup_slave_info ]]; then

# XtraBackup already generated a partial "CHANGE MASTER TO" query

# because we're cloning from an existing slave.

mv xtrabackup_slave_info change_master_to.sql.in

# Ignore xtrabackup_binlog_info in this case (it's useless).

rm -f xtrabackup_binlog_info

elif [[ -f xtrabackup_binlog_info ]]; then

# We're cloning directly from master. Parse binlog position.

[[ `cat xtrabackup_binlog_info` =~ ^(.*?)[[:space:]]+(.*?)$ ]] || exit 1

rm xtrabackup_binlog_info

echo "CHANGE MASTER TO MASTER_LOG_FILE='${BASH_REMATCH[1]}',\

MASTER_LOG_POS=${BASH_REMATCH[2]}" > change_master_to.sql.in

fi

# Check if we need to complete a clone by starting replication.

if [[ -f change_master_to.sql.in ]]; then

echo "Waiting for mysqld to be ready (accepting connections)"

until mysql -h 127.0.0.1 -e "SELECT 1"; do sleep 1; done

echo "Initializing replication from clone position"

# In case of container restart, attempt this at-most-once.

mv change_master_to.sql.in change_master_to.sql.orig

mysql -h 127.0.0.1 <<EOF

$(<change_master_to.sql.orig),

MASTER_HOST='mysql-0.mysql',

MASTER_USER='root',

MASTER_PASSWORD='',

MASTER_CONNECT_RETRY=10;

START SLAVE;

EOF

fi

# Start a server to send backups when requested by peers.

exec ncat --listen --keep-open --send-only --max-conns=1 3307 -c \

"xtrabackup --backup --slave-info --stream=xbstream --host=127.0.0.1 --user=root"

volumeMounts:

- name: data

mountPath: /var/lib/mysql

subPath: mysql

- name: conf

mountPath: /etc/mysql/conf.d

resources:

requests:

cpu: 100m

memory: 100Mi

volumes:

- name: conf

emptyDir: {}

- name: config-map

configMap:

name: mysql

volumeClaimTemplates:

- metadata:

name: data

spec:

accessModes: ["ReadWriteOnce"]

resources:

requests:

storage: 10Gi
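The init-mysql container in the StatefulSet above derives each pod's role from its hostname: the trailing ordinal plus 100 becomes the server-id, and ordinal 0 receives master.cnf while every other pod receives slave.cnf. The sketch below restates that logic as standalone functions. It uses POSIX parameter expansion in place of the bash regex from the manifest, and the function names are hypothetical.

```shell
# ordinal_of HOSTNAME: prints the number after the last '-' of a StatefulSet
# pod name such as mysql-1; fails if there is no numeric suffix.
ordinal_of() {
    ord=${1##*-}
    case $ord in
        ''|*[!0-9]*) return 1 ;;    # empty or non-numeric suffix
    esac
    [ "$ord" != "$1" ] || return 1  # no '-' in the name at all
    printf '%s\n' "$ord"
}

# server_id HOSTNAME: 100 + ordinal, which avoids the reserved server-id 0.
server_id() {
    ord=$(ordinal_of "$1") || return 1
    echo $((100 + $ord))
}

# role_cnf HOSTNAME: the config file that init-mysql copies into conf.d.
role_cnf() {
    ord=$(ordinal_of "$1") || return 1
    if [ "$ord" -eq 0 ]; then
        echo master.cnf
    else
        echo slave.cnf
    fi
}
```

For the two replicas declared above, mysql-0 maps to server-id 100 with master.cnf and mysql-1 to server-id 101 with slave.cnf.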

6 Deploying WordPress

6.1 Building the wordpress-nginx image

1. nginx configuration file

user nginx nginx;

worker_processes auto;

#error_log logs/error.log;

#error_log logs/error.log notice;

#error_log logs/error.log info;

#pid logs/nginx.pid;

#daemon off;

events {

worker_connections 1024;

}

http {

include mime.types;

default_type application/octet-stream;

#log_format main '$remote_addr - $remote_user [$time_local] "$request" '

# '$status $body_bytes_sent "$http_referer" '

# '"$http_user_agent" "$http_x_forwarded_for"';

#access_log logs/access.log main;

sendfile on;

#tcp_nopush on;

keepalive_timeout 65;

client_max_body_size 10M;

client_body_buffer_size 16k;

client_body_temp_path /apps/nginx/tmp 1 2 2;

gzip on;

server {

listen 80;

server_name blogs.magedu.net;

#charset koi8-r;

#access_log logs/host.access.log main;

location / {

root /home/nginx/wordpress;

index index.php index.html index.htm;

#if ($http_user_agent ~ "ApacheBench|WebBench|TurnitinBot|Sogou web spider|Grid Service") {

# proxy_pass http://www.baidu.com;

# #return 403;

#}

}

location ~ \.php$ {

root /home/nginx/wordpress;

fastcgi_pass 127.0.0.1:9000;

fastcgi_index index.php;

#fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;

include fastcgi_params;

}

#error_page 404 /404.html;

# redirect server error pages to the static page /50x.html

#

error_page 500 502 503 504 /50x.html;

location = /50x.html {

root html;

}

# proxy the PHP scripts to Apache listening on 127.0.0.1:80

#

#location ~ \.php$ {

# proxy_pass http://127.0.0.1;

#}

# pass the PHP scripts to FastCGI server listening on 127.0.0.1:9000

#

#location ~ \.php$ {

# root html;

# fastcgi_pass 127.0.0.1:9000;

# fastcgi_index index.php;

# fastcgi_param SCRIPT_FILENAME /scripts$fastcgi_script_name;

# include fastcgi_params;

#}

# deny access to .htaccess files, if Apache's document root

# concurs with nginx's one

#

#location ~ /\.ht {

# deny all;

#}

}

# another virtual host using mix of IP-, name-, and port-based configuration

#

#server {

# listen 8000;

# listen somename:8080;

# server_name somename alias another.alias;

# location / {

# root html;

# index index.html index.htm;

# }

#}

# HTTPS server

#

#server {

# listen 443 ssl;

# server_name localhost;

# ssl_certificate cert.pem;

# ssl_certificate_key cert.key;

# ssl_session_cache shared:SSL:1m;

# ssl_session_timeout 5m;

# ssl_ciphers HIGH:!aNULL:!MD5;

# ssl_prefer_server_ciphers on;

# location / {

# root html;

# index index.html index.htm;

# }

#}

}

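2. Startup script (run_nginx.sh)

#!/bin/bash

#echo "nameserver 10.20.254.254" > /etc/resolv.conf

chown -R nginx:nginx /home/nginx/wordpress/

/apps/nginx/sbin/nginx

# nginx daemonizes by default, so keep a foreground process running to stop the container from exiting

tail -f /etc/hosts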

3. Dockerfile

FROM harbor.xsc.org/pub-images/nginx-base-wordpress:v1.20.2

ADD nginx.conf /apps/nginx/conf/nginx.conf

ADD run_nginx.sh /apps/nginx/sbin/run_nginx.sh

RUN mkdir -pv /home/nginx/wordpress && chown -R nginx:nginx /home/nginx/wordpress/

EXPOSE 80 443

CMD ["/apps/nginx/sbin/run_nginx.sh"]

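4. Build script

#!/bin/bash

# The image tag is passed as the first argument; abort if it is missing

TAG=${1:?"usage: $0 <tag>"}

docker build -t harbor.xsc.org/pub-images/wordpress-nginx:${TAG} . || exit 1

echo "Image build complete; pushing to the Harbor server"

sleep 1

docker push harbor.xsc.org/pub-images/wordpress-nginx:${TAG} || exit 1

echo "Image push complete"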

6.2 Building the PHP image

1. Configuration file www.conf

; Start a new pool named 'www'.

; the variable $pool can be used in any directive and will be replaced by the

; pool name ('www' here)

[www]

; Per pool prefix

; It only applies on the following directives:

; - 'slowlog'

; - 'listen' (unixsocket)

; - 'chroot'

; - 'chdir'

; - 'php_values'

; - 'php_admin_values'

; When not set, the global prefix (or @php_fpm_prefix@) applies instead.

; Note: This directive can also be relative to the global prefix.

; Default Value: none

;prefix = /path/to/pools/$pool

; Unix user/group of processes

; Note: The user is mandatory. If the group is not set, the default user's group

; will be used.

; RPM: apache user chosen to provide access to the same directories as httpd

user = nginx

; RPM: Keep a group allowed to write in log dir.

group = nginx

; The address on which to accept FastCGI requests.

; Valid syntaxes are:

; 'ip.add.re.ss:port' - to listen on a TCP socket to a specific IPv4 address on

; a specific port;

; '[ip:6:addr:ess]:port' - to listen on a TCP socket to a specific IPv6 address on

; a specific port;

; 'port' - to listen on a TCP socket to all IPv4 addresses on a

; specific port;

; '[::]:port' - to listen on a TCP socket to all addresses

; (IPv6 and IPv4-mapped) on a specific port;

; '/path/to/unix/socket' - to listen on a unix socket.

; Note: This value is mandatory.

listen = 0.0.0.0:9000

; Set listen(2) backlog.

; Default Value: 65535

;listen.backlog = 65535

; Set permissions for unix socket, if one is used. In Linux, read/write

; permissions must be set in order to allow connections from a web server.

; Default Values: user and group are set as the running user

; mode is set to 0660

;listen.owner = nobody

;listen.group = nobody

;listen.mode = 0660

; When POSIX Access Control Lists are supported you can set them using

; these options, value is a comma separated list of user/group names.

; When set, listen.owner and listen.group are ignored

;listen.acl_users = apache

;listen.acl_groups =

; List of addresses (IPv4/IPv6) of FastCGI clients which are allowed to connect.

; Equivalent to the FCGI_WEB_SERVER_ADDRS environment variable in the original

; PHP FCGI (5.2.2+). Makes sense only with a tcp listening socket. Each address

; must be separated by a comma. If this value is left blank, connections will be

; accepted from any ip address.

; Default Value: any

; listen.allowed_clients = 127.0.0.1

; Specify the nice(2) priority to apply to the pool processes (only if set)

; The value can vary from -19 (highest priority) to 20 (lower priority)

; Note: - It will only work if the FPM master process is launched as root

; - The pool processes will inherit the master process priority

; unless it specified otherwise

; Default Value: no set

; process.priority = -19

; Set the process dumpable flag (PR_SET_DUMPABLE prctl) even if the process user

; or group is different than the master process user. It allows to create process

; core dump and ptrace the process for the pool user.

; Default Value: no

; process.dumpable = yes

; Choose how the process manager will control the number of child processes.

; Possible Values:

; static - a fixed number (pm.max_children) of child processes;

; dynamic - the number of child processes are set dynamically based on the

; following directives. With this process management, there will be

; always at least 1 children.

; pm.max_children - the maximum number of children that can

; be alive at the same time.

; pm.start_servers - the number of children created on startup.

; pm.min_spare_servers - the minimum number of children in 'idle'

; state (waiting to process). If the number

; of 'idle' processes is less than this

; number then some children will be created.

; pm.max_spare_servers - the maximum number of children in 'idle'

; state (waiting to process). If the number

; of 'idle' processes is greater than this

; number then some children will be killed.

; ondemand - no children are created at startup. Children will be forked when

; new requests will connect. The following parameter are used:

; pm.max_children - the maximum number of children that

; can be alive at the same time.

; pm.process_idle_timeout - The number of seconds after which

; an idle process will be killed.

; Note: This value is mandatory.

pm = dynamic

; The number of child processes to be created when pm is set to 'static' and the

; maximum number of child processes when pm is set to 'dynamic' or 'ondemand'.

; This value sets the limit on the number of simultaneous requests that will be

; served. Equivalent to the ApacheMaxClients directive with mpm_prefork.

; Equivalent to the PHP_FCGI_CHILDREN environment variable in the original PHP

; CGI. The below defaults are based on a server without much resources. Don't

; forget to tweak pm.* to fit your needs.

; Note: Used when pm is set to 'static', 'dynamic' or 'ondemand'

; Note: This value is mandatory.

pm.max_children = 50

; The number of child processes created on startup.

; Note: Used only when pm is set to 'dynamic'

; Default Value: min_spare_servers + (max_spare_servers - min_spare_servers) / 2

pm.start_servers = 5

; The desired minimum number of idle server processes.

; Note: Used only when pm is set to 'dynamic'

; Note: Mandatory when pm is set to 'dynamic'

pm.min_spare_servers = 5

; The desired maximum number of idle server processes.

; Note: Used only when pm is set to 'dynamic'

; Note: Mandatory when pm is set to 'dynamic'

pm.max_spare_servers = 35

; The number of seconds after which an idle process will be killed.

; Note: Used only when pm is set to 'ondemand'

; Default Value: 10s

;pm.process_idle_timeout = 10s;

; The number of requests each child process should execute before respawning.

; This can be useful to work around memory leaks in 3rd party libraries. For

; endless request processing specify '0'. Equivalent to PHP_FCGI_MAX_REQUESTS.

; Default Value: 0

;pm.max_requests = 500

; The URI to view the FPM status page. If this value is not set, no URI will be

; recognized as a status page. It shows the following informations:

; pool - the name of the pool;

; process manager - static, dynamic or ondemand;

; start time - the date and time FPM has started;

; start since - number of seconds since FPM has started;

; accepted conn - the number of request accepted by the pool;

; listen queue - the number of request in the queue of pending

; connections (see backlog in listen(2));

; max listen queue - the maximum number of requests in the queue

; of pending connections since FPM has started;

; listen queue len - the size of the socket queue of pending connections;

; idle processes - the number of idle processes;

; active processes - the number of active processes;

; total processes - the number of idle + active processes;

; max active processes - the maximum number of active processes since FPM

; has started;

; max children reached - number of times, the process limit has been reached,

; when pm tries to start more children (works only for

; pm 'dynamic' and 'ondemand');

; Value are updated in real time.

; Example output:

; pool: www

; process manager: static

; start time: 01/Jul/2011:17:53:49 +0200

; start since: 62636

; accepted conn: 190460

; listen queue: 0

; max listen queue: 1

; listen queue len: 42

; idle processes: 4

; active processes: 11

; total processes: 15

; max active processes: 12

; max children reached: 0

;

; By default the status page output is formatted as text/plain. Passing either

; 'html', 'xml' or 'json' in the query string will return the corresponding

; output syntax. Example:

; http://www.foo.bar/status

; http://www.foo.bar/status?json

; http://www.foo.bar/status?html

; http://www.foo.bar/status?xml

;

; By default the status page only outputs short status. Passing 'full' in the

; query string will also return status for each pool process.

; Example:

; http://www.foo.bar/status?full

; http://www.foo.bar/status?json&full

; http://www.foo.bar/status?html&full

; http://www.foo.bar/status?xml&full

; The Full status returns for each process:

; pid - the PID of the process;

; state - the state of the process (Idle, Running, ...);

; start time - the date and time the process has started;

; start since - the number of seconds since the process has started;

; requests - the number of requests the process has served;

; request duration - the duration in µs of the requests;

; request method - the request method (GET, POST, ...);

; request URI - the request URI with the query string;

; content length - the content length of the request (only with POST);

; user - the user (PHP_AUTH_USER) (or '-' if not set);

; script - the main script called (or '-' if not set);

; last request cpu - the %cpu the last request consumed

; it's always 0 if the process is not in Idle state

; because CPU calculation is done when the request

; processing has terminated;

; last request memory - the max amount of memory the last request consumed

; it's always 0 if the process is not in Idle state

; because memory calculation is done when the request

; processing has terminated;

; If the process is in Idle state, then informations are related to the

; last request the process has served. Otherwise informations are related to

; the current request being served.

; Example output:

; ************************

; pid: 31330

; state: Running

; start time: 01/Jul/2011:17:53:49 +0200

; start since: 63087

; requests: 12808

; request duration: 1250261

; request method: GET

; request URI: /test_mem.php?N=10000

; content length: 0

; user: -

; script: /home/fat/web/docs/php/test_mem.php

; last request cpu: 0.00

; last request memory: 0

;

; Note: There is a real-time FPM status monitoring sample web page available

; It's available in: @EXPANDED_DATADIR@/fpm/status.html

;

; Note: The value must start with a leading slash (/). The value can be

; anything, but it may not be a good idea to use the .php extension or it

; may conflict with a real PHP file.

; Default Value: not set

;pm.status_path = /status

; The ping URI to call the monitoring page of FPM. If this value is not set, no

; URI will be recognized as a ping page. This could be used to test from outside

; that FPM is alive and responding, or to

; - create a graph of FPM availability (rrd or such);

; - remove a server from a group if it is not responding (load balancing);

; - trigger alerts for the operating team (24/7).

; Note: The value must start with a leading slash (/). The value can be

; anything, but it may not be a good idea to use the .php extension or it

; may conflict with a real PHP file.

; Default Value: not set

;ping.path = /ping

; This directive may be used to customize the response of a ping request. The

; response is formatted as text/plain with a 200 response code.

; Default Value: pong

;ping.response = pong

; The access log file

; Default: not set

;access.log = log/$pool.access.log

; The access log format.

; The following syntax is allowed

; %%: the '%' character

; %C: %CPU used by the request

; it can accept the following format:

; - %{user}C for user CPU only

; - %{system}C for system CPU only

; - %{total}C for user + system CPU (default)

; %d: time taken to serve the request

; it can accept the following format:

; - %{seconds}d (default)

; - %{miliseconds}d

; - %{mili}d

; - %{microseconds}d

; - %{micro}d

; %e: an environment variable (same as $_ENV or $_SERVER)

; it must be associated with embraces to specify the name of the env

; variable. Some exemples:

; - server specifics like: %{REQUEST_METHOD}e or %{SERVER_PROTOCOL}e

; - HTTP headers like: %{HTTP_HOST}e or %{HTTP_USER_AGENT}e

; %f: script filename

; %l: content-length of the request (for POST request only)

; %m: request method

; %M: peak of memory allocated by PHP

; it can accept the following format:

; - %{bytes}M (default)

; - %{kilobytes}M

; - %{kilo}M

; - %{megabytes}M

; - %{mega}M

; %n: pool name

; %o: output header

; it must be associated with embraces to specify the name of the header:

; - %{Content-Type}o

; - %{X-Powered-By}o

; - %{Transfert-Encoding}o

; - ....

; %p: PID of the child that serviced the request

; %P: PID of the parent of the child that serviced the request

; %q: the query string

; %Q: the '?' character if query string exists

; %r: the request URI (without the query string, see %q and %Q)

; %R: remote IP address

; %s: status (response code)

; %t: server time the request was received

; it can accept a strftime(3) format:

; %d/%b/%Y:%H:%M:%S %z (default)

; %T: time the log has been written (the request has finished)

; it can accept a strftime(3) format:

; %d/%b/%Y:%H:%M:%S %z (default)

; %u: remote user

;

; Default: "%R - %u %t \"%m %r\" %s"

;access.format = "%R - %u %t \"%m %r%Q%q\" %s %f %{mili}d %{kilo}M %C%%"

; The log file for slow requests

; Default Value: not set

; Note: slowlog is mandatory if request_slowlog_timeout is set

slowlog = /opt/remi/php56/root/var/log/php-fpm/www-slow.log

; The timeout for serving a single request after which a PHP backtrace will be

; dumped to the 'slowlog' file. A value of '0s' means 'off'.

; Available units: s(econds)(default), m(inutes), h(ours), or d(ays)

; Default Value: 0

;request_slowlog_timeout = 0

; The timeout for serving a single request after which the worker process will

; be killed. This option should be used when the 'max_execution_time' ini option

; does not stop script execution for some reason. A value of '0' means 'off'.

; Available units: s(econds)(default), m(inutes), h(ours), or d(ays)

; Default Value: 0

;request_terminate_timeout = 0

; Set open file descriptor rlimit.

; Default Value: system defined value

;rlimit_files = 1024

; Set max core size rlimit.

; Possible Values: 'unlimited' or an integer greater or equal to 0

; Default Value: system defined value

;rlimit_core = 0

; Chroot to this directory at the start. This value must be defined as an

; absolute path. When this value is not set, chroot is not used.

; Note: you can prefix with '$prefix' to chroot to the pool prefix or one

; of its subdirectories. If the pool prefix is not set, the global prefix

; will be used instead.

; Note: chrooting is a great security feature and should be used whenever

; possible. However, all PHP paths will be relative to the chroot

; (error_log, sessions.save_path, ...).

; Default Value: not set

;chroot =

; Chdir to this directory at the start.

; Note: relative path can be used.

; Default Value: current directory or / when chroot

;chdir = /var/www

; Redirect worker stdout and stderr into main error log. If not set, stdout and

; stderr will be redirected to /dev/null according to FastCGI specs.

; Note: in a heavily loaded environment, this can cause some delay in the page

; process time (several ms).

; Default Value: no

;catch_workers_output = yes

; Clear environment in FPM workers

; Prevents arbitrary environment variables from reaching FPM worker processes

; by clearing the environment in workers before env vars specified in this

; pool configuration are added.

; Setting to "no" will make all environment variables available to PHP code

; via getenv(), $_ENV and $_SERVER.

; Default Value: yes

;clear_env = no

; Limits the extensions of the main script FPM will allow to parse. This can

; prevent configuration mistakes on the web server side. You should only limit

; FPM to .php extensions to prevent malicious users to use other extensions to

; execute php code.

; Note: set an empty value to allow all extensions.

; Default Value: .php

;security.limit_extensions = .php .php3 .php4 .php5

; Pass environment variables like LD_LIBRARY_PATH. All $VARIABLEs are taken from

; the current environment.

; Default Value: clean env

;env[HOSTNAME] = $HOSTNAME

;env[PATH] = /usr/local/bin:/usr/bin:/bin

;env[TMP] = /tmp

;env[TMPDIR] = /tmp

;env[TEMP] = /tmp

; Additional php.ini defines, specific to this pool of workers. These settings

; overwrite the values previously defined in the php.ini. The directives are the

; same as the PHP SAPI:

; php_value/php_flag - you can set classic ini defines which can

; be overwritten from PHP call 'ini_set'.

; php_admin_value/php_admin_flag - these directives won't be overwritten by

; PHP call 'ini_set'

; For php_*flag, valid values are on, off, 1, 0, true, false, yes or no.

; Defining 'extension' will load the corresponding shared extension from

; extension_dir. Defining 'disable_functions' or 'disable_classes' will not

; overwrite previously defined php.ini values, but will append the new value

; instead.

; Note: path INI options can be relative and will be expanded with the prefix

; (pool, global or @prefix@)

; Default Value: nothing is defined by default except the values in php.ini and

; specified at startup with the -d argument

;php_admin_value[sendmail_path] = /usr/sbin/sendmail -t -i -f www@my.domain.com

;php_flag[display_errors] = off

php_admin_value[error_log] = /opt/remi/php56/root/var/log/php-fpm/www-error.log

php_admin_flag[log_errors] = on

;php_admin_value[memory_limit] = 128M

; Set the following data paths to directories owned by the FPM process user.

;

; Do not change the ownership of existing system directories, if the process

; user does not have write permission, create dedicated directories for this

; purpose.

;

; See warning about choosing the location of these directories on your system

; at http://php.net/session.save-path

php_value[session.save_handler] = files

php_value[session.save_path] = /opt/remi/php56/root/var/lib/php/session

php_value[soap.wsdl_cache_dir] = /opt/remi/php56/root/var/lib/php/wsdlcache
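
The pm = dynamic settings in this pool relate to each other: the comments give the default for pm.start_servers as min_spare_servers + (max_spare_servers - min_spare_servers) / 2, and the start value is expected to sit between the two spare limits, which in turn stay below pm.max_children. The sketch below computes that default and runs a simple consistency check. The function names are assumptions made for illustration, and the check is a sanity test, not a reproduction of php-fpm's own validation.

```shell
#!/bin/sh
# Default for pm.start_servers as documented in the www.conf comments:
#   min_spare_servers + (max_spare_servers - min_spare_servers) / 2
default_start_servers() {
  min_spare=$1
  max_spare=$2
  echo $(( min_spare + (max_spare - min_spare) / 2 ))
}

# Illustrative sanity check: min_spare <= start <= max_spare <= max_children.
check_pm_dynamic() {
  max_children=$1
  start=$2
  min_spare=$3
  max_spare=$4
  [ "$min_spare" -le "$start" ] &&
    [ "$start" -le "$max_spare" ] &&
    [ "$max_spare" -le "$max_children" ]
}

# Values from the pool above: max_children=50, start=5, min=5, max=35.
default_start_servers 5 35    # prints 20
check_pm_dynamic 50 5 5 35 && echo "pool settings are consistent"
```

The pool above sets pm.start_servers = 5 explicitly, so the computed default of 20 only applies if that line is removed.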

2. Startup script run_php.sh

#!/bin/bash

#echo "nameserver 10.20.254.254" > /etc/resolv.conf

/opt/remi/php56/root/usr/sbin/php-fpm

#/opt/remi/php56/root/usr/sbin/php-fpm --nodaemonize

tail -f /etc/hosts

3. Dockerfile

#PHP Base Image

FROM harbor.xsc.org/myrepo/centos-base:7.9.2009

MAINTAINER evn.xiang

RUN yum install -y https://mirrors.tuna.tsinghua.edu.cn/remi/enterprise/remi-release-7.rpm && yum install php56-php-fpm php56-php-mysql -y

ADD www.conf /opt/remi/php56/root/etc/php-fpm.d/www.conf

#RUN useradd nginx -u 2019

ADD run_php.sh /usr/local/bin/run_php.sh

EXPOSE 9000

CMD ["/usr/local/bin/run_php.sh"]

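4. Build script

#!/bin/bash

# The image tag is passed as the first argument; abort if it is missing

TAG=${1:?"usage: $0 <tag>"}

docker build -t harbor.xsc.org/pub-images/wordpress-php-5.6:${TAG} . || exit 1

echo "Image build complete; pushing to the Harbor server"

sleep 1

docker push harbor.xsc.org/pub-images/wordpress-php-5.6:${TAG} || exit 1

echo "Image push complete"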

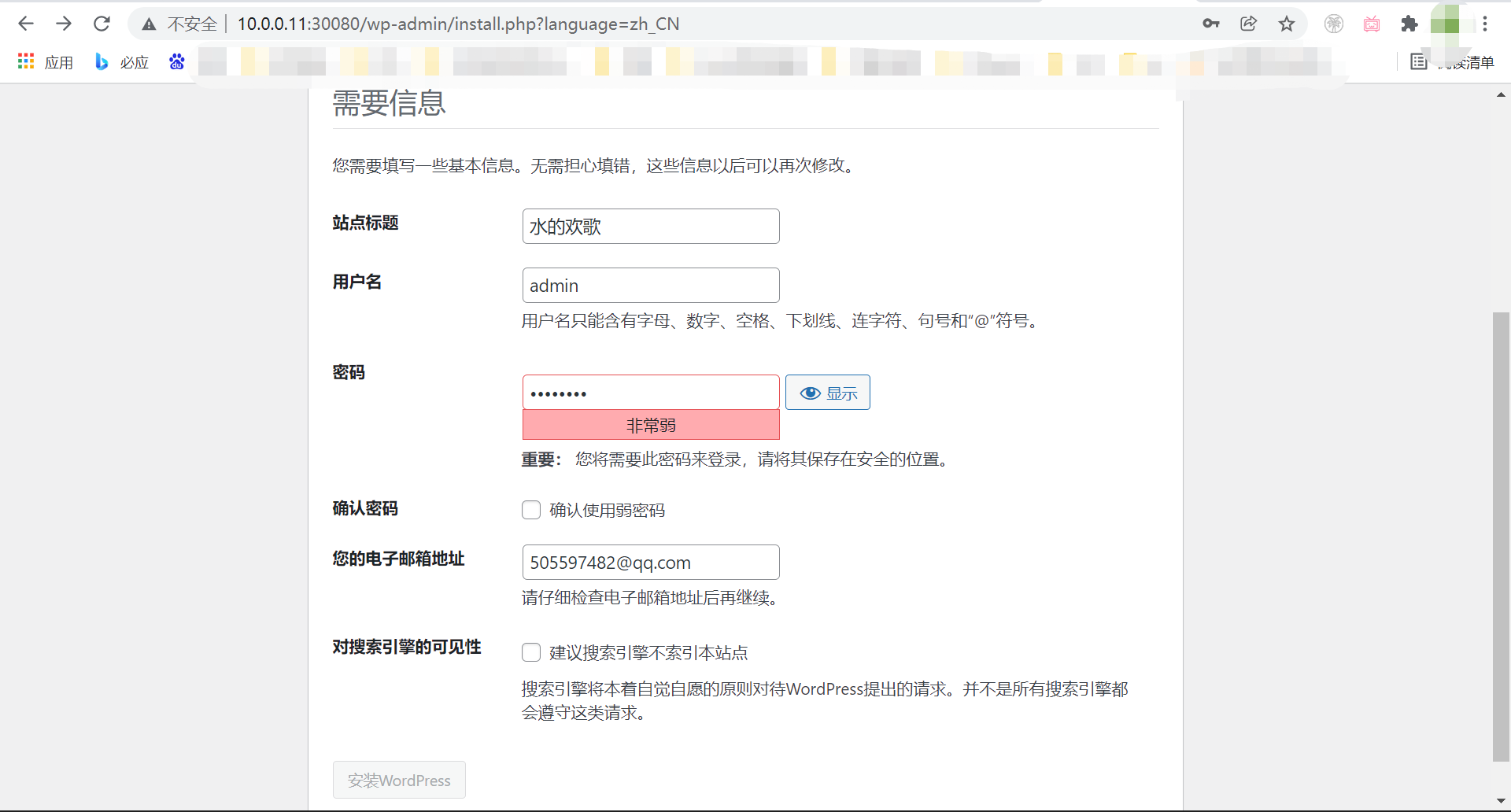

6.3 Running WordPress

1. Run the nginx + PHP environment

kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-deploy
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wordpress-app
  template:
    metadata:
      labels:
        app: wordpress-app
    spec:
      containers:
      - name: wordpress-app-nginx
        image: harbor.xsc.org/pub-images/wordpress-nginx:v1
        imagePullPolicy: Always
        ports:
        - containerPort: 80
          protocol: TCP
          name: http
        - containerPort: 443
          protocol: TCP
          name: https
        volumeMounts:
        - name: wordpress
          mountPath: /home/nginx/wordpress
          readOnly: false
      - name: wordpress-app-php
        image: harbor.xsc.org/pub-images/wordpress-php-5.6:v1
        #image: harbor.kdefault.net/kdefault/php:5.6.40-fpm
        #imagePullPolicy: IfNotPresent
        imagePullPolicy: Always
        ports:
        - containerPort: 9000
          protocol: TCP
          name: http
        volumeMounts:
        - name: wordpress
          mountPath: /home/nginx/wordpress
          readOnly: false
      volumes:
      - name: wordpress
        nfs:
          server: 10.0.0.253
          path: /k8snfs/data/wordpress/data
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: wordpress-app
  name: wordpress-app-svc
  namespace: default
spec:
  type: NodePort
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: 80
    nodePort: 30080
  #- name: https
  #  port: 443
  #  protocol: TCP
  #  targetPort: 443
  #  nodePort: 30433
  selector:
    app: wordpress-app

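2. Upload the WordPress files to the NFS directory mounted by the pod (/k8snfs/data/wordpress/data on 10.0.0.253). WordPress can then be initialized through its web installer, which the Service above exposes on NodePort 30080.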
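
The upload step can be sketched as a small shell function run on the NFS server. This is only an illustration under stated assumptions: the function name install_wordpress and the tarball name are hypothetical, the archive is assumed to contain a top-level wordpress/ directory, and the default owner 2022:2022 assumes the nginx user keeps the uid it was given in the CentOS base image.

```shell
#!/bin/sh
# Sketch: unpack a WordPress tarball into the NFS export used by the pod.
# Function name, tarball name, and the default uid:gid are assumptions.
install_wordpress() {
  tarball=$1              # a WordPress .tar.gz downloaded beforehand
  dest=$2                 # e.g. /k8snfs/data/wordpress/data
  owner=${3:-2022:2022}   # uid:gid of the nginx user inside the containers

  mkdir -p "$dest" || return 1
  # Strip the top-level wordpress/ directory so index.php lands directly
  # in the web root that nginx and php-fpm both mount.
  tar -xzf "$tarball" -C "$dest" --strip-components=1 || return 1
  # Changing ownership needs root; warn instead of failing otherwise.
  chown -R "$owner" "$dest" 2>/dev/null ||
    echo "warning: could not chown $dest to $owner" >&2
}
```

A call such as install_wordpress wordpress.tar.gz /k8snfs/data/wordpress/data places the files where both containers see them at /home/nginx/wordpress.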