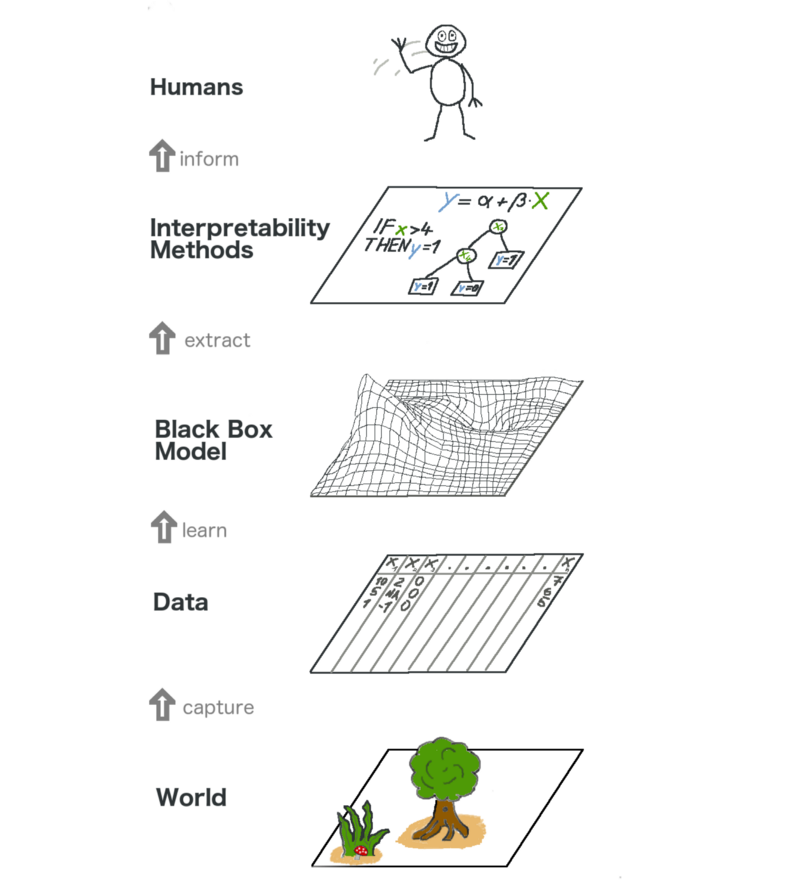

Benefits that Interpretability 可解釋性 brings along are:

- Reliability 可靠性

- Debugging 易于調試

- Informing Feature Engineering 啟發特徵工程思路

- Directing Future Data Collection 指導後續數據搜集

- Informing Human decision-making 指導人為決策

- Building Trust 建立信位

Permutation Importance对很多scikit-learn中涉及到的预估模型都有用。其背后的思想很简单:随机重排或打乱样本中的特定一列数据,其余列保持不变。如果模型的预测准确率显著下降,那就认为这个特征很重要。与之对应,如果重排和打乱这一列特征对模型准确率没有影响的话,那就认为这列对应的特征没有什么作用。

Tax Competition Example

# Loading data, dividing, modeling and EDA belowimport pandas as pdfrom sklearn.ensemble import RandomForestRegressorfrom sklearn.linear_model import LinearRegressionfrom sklearn.model_selection import train_test_splitdata = pd.read_csv('../input/new-york-city-taxi-fare-prediction/train.csv', nrows=50000)

# Loading data, dividing, modeling and EDA belowimport pandas as pdfrom sklearn.ensemble import RandomForestRegressorfrom sklearn.linear_model import LinearRegressionfrom sklearn.model_selection import train_test_splitdata = pd.read_csv('../input/new-york-city-taxi-fare-prediction/train.csv', nrows=50000)# Remove data with extreme outlier coordinates or negative faresdata = data.query('pickup_latitude > 40.7 and pickup_latitude < 40.8 and ' +'dropoff_latitude > 40.7 and dropoff_latitude < 40.8 and ' +'pickup_longitude > -74 and pickup_longitude < -73.9 and ' +'dropoff_longitude > -74 and dropoff_longitude < -73.9 and ' +'fare_amount > 0')y = data.fare_amountbase_features = ['pickup_longitude','pickup_latitude','dropoff_longitude','dropoff_latitude','passenger_count']X = data[base_features]train_X, val_X, train_y, val_y = train_test_split(X, y, random_state=1)first_model = RandomForestRegressor(n_estimators=50, random_state=1).fit(train_X, train_y)# Environment Set-Up for feedback system.from learntools.core import binderbinder.bind(globals())from learntools.ml_explainability.ex2 import *print("Setup Complete")# show dataprint("Data sample:")data.head()

P02 Create PermutationImportance Object

import eli5from eli5.sklearn import PermutationImportanceperm = PermutationImportance(first_model, random_state = 1).fit(val_X, val_y)eli5.show_weights(perm, feature_names = base_features)

On average, the latitude features matter more than the longititude features. Can you come up with any hypotheses for this?

The latitude 緯度 features matter more than the longitude 經度 features.

1. Travel might tend to have greater latitude distances than longitude distances. If the longitudes values were generally closer together, shuffling them wouldn’t matter as much.

2. Different parts of the city might have different pricing rules (e.g. price per mile), and pricing rules could vary more by latitude than longitude.

3. Tolls might be greater on roads going North <-> South (changing latitude) than on roads going East <-> West (changing longitude).

Thus latitude would have a larger effect on the prediction because it captures the amount of the tolls.

data['abs_lon_change'] = abs(data.dropoff_longitude - data.pickup_longitude)data['abs_lat_change'] = abs(data.dropoff_latitude - data.pickup_latitude)features_2 = ['pickup_longitude','pickup_latitude','dropoff_longitude','dropoff_latitude','abs_lat_change','abs_lon_change']X = data[features_2]new_train_X, new_val_X, new_train_y, new_val_y = train_test_split(X, y, random_state=1)second_model = RandomForestRegressor(n_estimators=30, random_state=1).fit(new_train_X, new_train_y)# Create a PermutationImportance object on second_model and fit it to new_val_X and new_val_yperm2 = PermutationImportance(second_model, random_state=1).fit(new_val_X, new_val_y)# show the weights for the permutation importance you just calculatedeli5.show_weights(perm2, feature_names = features_2)

Do you think this could explain why those coordinates had larger permutation importance values in this case?

Consider an alternative where you created and used a feature that was 100X as large for these features, and used that larger feature for training and importance calculations. Would this change the outputted permutation importance values?

Why or why not?

Answer:

The scale of features does not affect permutation importance per se. The only reason that resealing a feature would affect PI is indirectly, if rescaling helped or hurt the ability of the particular learning method we’re using to make use of that feature.

That won’t happen with tree based models, like the Random Forest used here. If you are familiar with Ridge Regression, you might be able to think of how that would be affected.

That said, the absolute change features are have high importance because they capture total distance traveled, which is the primary determinant of taxi fares…It is not an artifact of the feature magnitude.

Question:

You’ve seen that the feature importance for latitudinal distance is greater than the importance of longitudinal distance. From this, can we conclude whether travelling a fixed latitudinal distance tends to be more expensive than traveling the same longitudinal distance?

Answer:

We cannot tell from the permutation importance results whether traveling a fixed latitudinal distance is more or less expensive than traveling the same longitudinal distance.

Possible reasons latitude feature are more important than longitude features:

1. latitudinal distances in the dataset tend to be larger

2. it is more expensive to travel a fixed latitudinal distance

3. Both of the above

If abs_lon_change values were very small, longitudes could be less important to the model even if the cost per mile of travel in that direction were high.