hadoop fs -mkdir /mingzhu

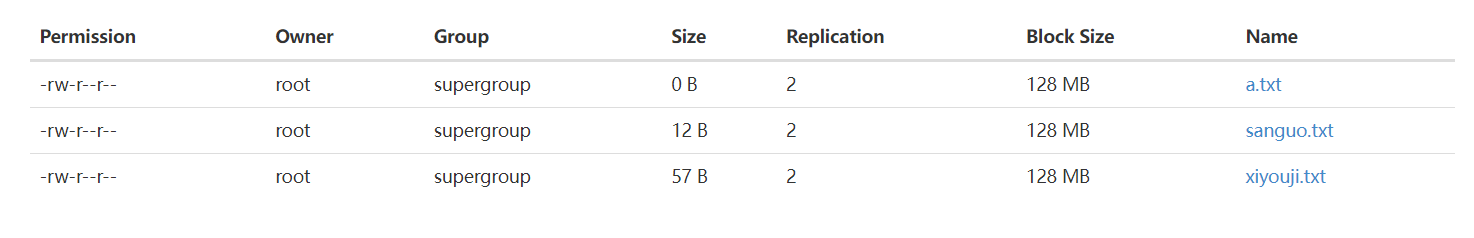

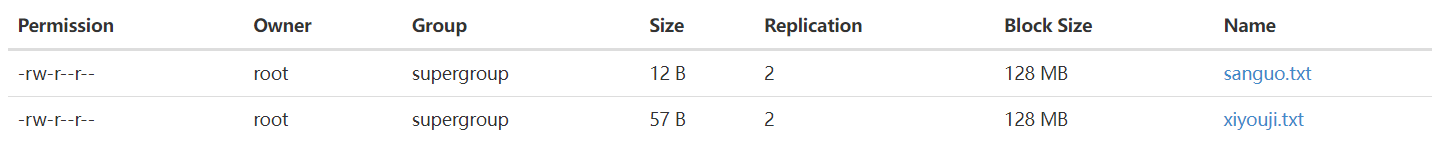

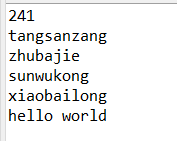

vim xiyouji.txt (create this file)

(edit the file and enter the following content)

tangsanzang

ls

// moveFromLocal (cut-and-paste upload: the local file is deleted once the transfer finishes)

hadoop fs -moveFromLocal ./xiyouji.txt /mingzhu (upload xiyouji.txt from the current directory into the /mingzhu directory)

ls

vim sanguo.txt

// copyFromLocal (copy upload: the local file still exists after the transfer)

hadoop fs -copyFromLocal ./sanguo.txt /mingzhu

vim hongloumeng.txt

jiabaoyu

ls

// put

hadoop fs -put ./hongloumeng.txt /mingzhu (upload the file)

// cat (view the contents of a file)

hadoop fs -cat /mingzhu/xiyouji.txt

// appendToFile

vim tudi.txt

hadoop fs -appendToFile ./tudi.txt /mingzhu/xiyouji.txt (append the contents of tudi.txt to xiyouji.txt in the /mingzhu directory)

// copyToLocal (download the specified file from the cluster to the local machine)

hadoop fs -copyToLocal /mingzhu/xiyouji.txt ./xiyouji.txt (download /mingzhu/xiyouji.txt from the cluster into the current directory, saved as xiyouji.txt)

// get (same as copyToLocal)

hadoop fs -get /mingzhu/xiyouji.txt ./xiyouji2.txt

// du (show the space used, in bytes)

-s (show only the total size)

-h (convert sizes to a more human-readable form)

hadoop fs -du /mingzhu (shows the size of each file in the directory, in bytes)
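To make the two flags concrete, here is a minimal Java sketch of what `-s` and `-h` do to a list of per-file sizes. It does not call Hadoop at all: the class `DuSketch`, its helpers, and the sample sizes are hypothetical, and the output format only approximates what `hadoop fs -du -h` prints.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

final class DuSketch {
    private static final String[] UNITS = {"", "K", "M", "G", "T", "P", "E"};

    // Roughly what -h does: scale a byte count by powers of 1024.
    static String humanReadable(long bytes) {
        if (bytes < 1024) {
            return Long.toString(bytes);
        }
        double value = bytes;
        int unit = 0;
        while (value >= 1024 && unit < UNITS.length - 1) {
            value /= 1024;
            unit++;
        }
        return String.format(Locale.ROOT, "%.1f %s", value, UNITS[unit]);
    }

    // Roughly what -s does: collapse the per-file sizes into one total.
    static long total(Map<String, Long> sizes) {
        long sum = 0;
        for (long size : sizes.values()) {
            sum += size;
        }
        return sum;
    }

    public static void main(String[] args) {
        // Hypothetical sizes in bytes, not real cluster output.
        Map<String, Long> sizes = new LinkedHashMap<>();
        sizes.put("/mingzhu/xiyouji.txt", 1536L);
        sizes.put("/mingzhu/sanguo.txt", 512L);

        // Per-file listing, as with -du -h
        for (Map.Entry<String, Long> e : sizes.entrySet()) {
            System.out.println(humanReadable(e.getValue()) + "  " + e.getKey());
        }
        // Single summary line, as with -du -s -h
        System.out.println(humanReadable(total(sizes)) + "  /mingzhu");
    }
}
```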

log4j

    log4j.rootLogger=info,stdout,R
    log4j.appender.stdout=org.apache.log4j.ConsoleAppender
    log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
    log4j.appender.stdout.layout.ConversionPattern=%5p - %m%n
    log4j.appender.R=org.apache.log4j.RollingFileAppender
    log4j.appender.R.File=HdfsDemo.log
    log4j.appender.R.MaxFileSize=1MB
    log4j.appender.R.MaxBackupIndex=1
    log4j.appender.R.layout=org.apache.log4j.PatternLayout
    log4j.appender.R.layout.ConversionPattern=%p %t %c -%m%n

main

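    // Imports used by the methods in these notes
    import java.io.BufferedReader;
    import java.io.IOException;
    import java.io.InputStreamReader;
    import java.net.URI;
    import java.net.URISyntaxException;
    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.BlockLocation;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileStatus;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.hdfs.DistributedFileSystem;
    import org.apache.hadoop.hdfs.protocol.DatanodeInfo;

    public static void main(String[] args) throws IOException, URISyntaxException {
        Configuration conf = new Configuration();
        conf.set("dfs.replication", "2");
        URI uri = new URI("hdfs://192.168.139.145:8020");
        FileSystem fs = FileSystem.get(uri, conf);

        // Read a file
        /*String content = readContent(fs, "/mingzhu/xiyouji.txt");
        if (content == null) {
            System.out.println("content is Null");
        } else {
            System.out.println(content);
        }*/

        // Upload a file
        //upload(fs, "D:\\eclipse\\shuihuzhuan.txt", "/mingzhu");
        //upload(fs, "D:\\eclipse\\HdfsDemo\\input", "/wordcount");

        // Create a file
        /*if (createFile(fs, "/mingzhu/a.txt")) {
            System.out.println("Created successfully");
        }*/

        // Create a directory
        /*if (mkdir(fs, "/new")) {
            System.out.println("Created successfully");
        }*/

        // Delete a file
        /*if (deleteFile(fs, "/wordcount", true)) {
            System.out.println("Deleted successfully");
        }*/

        // Delete a directory
        /*if (deleteFile(fs, "/new", true)) {
            System.out.println("Deleted successfully");
        }*/

        // Get a file's last modification time
        //getLastTime(fs, "/mingzhu/xiyouji.txt");

        // View which hosts hold the file's blocks
        //fileLoc(fs, "/mingzhu/xiyouji.txt");

        // Get information on all DataNodes
        //getDataNodeList(fs);

        // Append content
        /*if (appendStringToFile(fs, "/mingzhu/xiyouji.txt")) {
            System.out.println("Appended successfully");
        }*/
    }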
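`FileSystem.get(uri, conf)` picks the file system from the URI's scheme and authority, so it can help to see how the address used above breaks down. The sketch below uses only `java.net.URI`; the class name `NameNodeUriSketch` and the `describe` helper are hypothetical.

```java
import java.net.URI;
import java.net.URISyntaxException;

final class NameNodeUriSketch {
    // Splits a file system URI into the parts that identify the target.
    static String describe(String address) throws URISyntaxException {
        URI uri = new URI(address);
        return "scheme=" + uri.getScheme()
                + " host=" + uri.getHost()
                + " port=" + uri.getPort();
    }

    public static void main(String[] args) throws URISyntaxException {
        // Same address as in main above: scheme hdfs, NameNode host, RPC port.
        System.out.println(describe("hdfs://192.168.139.145:8020"));
    }
}
```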

Read a file

    public static String readContent(FileSystem fs, String path) throws IOException {
        Path f = new Path(path);
        if (!fs.exists(f)) {
            return null;
        }
        StringBuilder sb = new StringBuilder(1024);
        // try-with-resources closes the stream and reader only; the shared
        // FileSystem is left open so that later calls can still use it
        try (FSDataInputStream in = fs.open(f);
             BufferedReader bf = new BufferedReader(new InputStreamReader(in))) {
            long time = System.currentTimeMillis();
            String str;
            while ((str = bf.readLine()) != null) {
                sb.append(str).append("\n");
            }
            // Elapsed read time in milliseconds
            System.out.println(System.currentTimeMillis() - time);
        }
        return sb.toString();
    }

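Create a file

    public static boolean createFile(FileSystem fs, String path) throws IOException, URISyntaxException {
        Path f = new Path(path); // destination path
        // create() returns an output stream; closing it immediately leaves an empty file
        fs.create(f).close();
        return true;
    }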

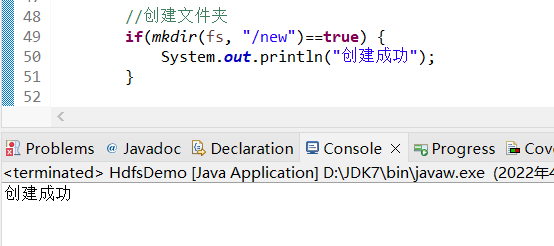

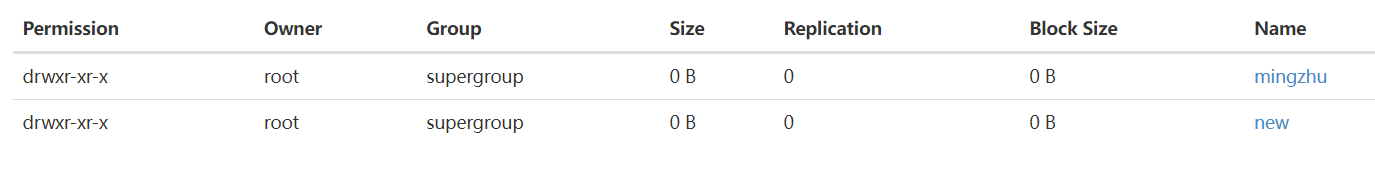

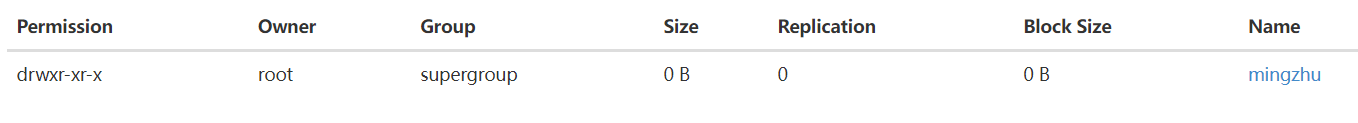

Create a directory

    public static boolean mkdir(FileSystem fs, String path) throws IOException, URISyntaxException {
        Path f = new Path(path); // destination path
        boolean ok = fs.mkdirs(f);
        return ok;
    }

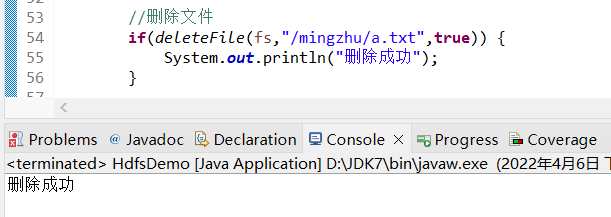

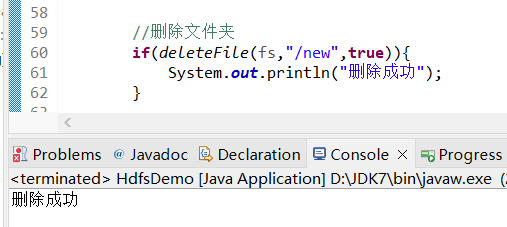

Delete a file or directory

    public static boolean deleteFile(FileSystem fs, String path, boolean recursive) throws IOException, URISyntaxException {
        Path f = new Path(path); // destination path
        // recursive must be true to delete a non-empty directory
        boolean ok = fs.delete(f, recursive);
        return ok;
    }

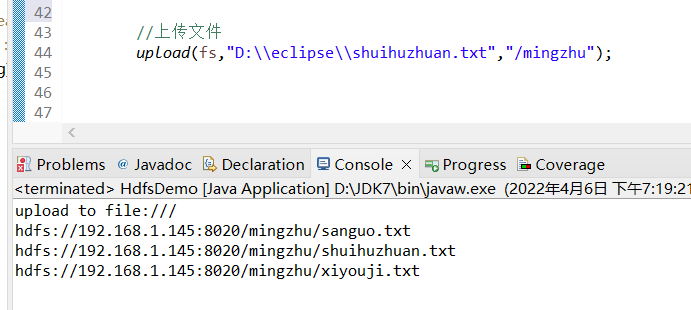

Upload a file

    public static void upload(FileSystem fs, String localFile, String hdfsPath) throws IOException {
        Path src = new Path(localFile); // local file to upload
        Path dst = new Path(hdfsPath);  // destination path
        fs.copyFromLocalFile(src, dst);
        // fs.defaultFS is the URI of the default file system
        System.out.println("upload to " + fs.getConf().get("fs.defaultFS"));
        // Status of every file under the destination path
        FileStatus[] files = fs.listStatus(dst);
        for (FileStatus file : files) {
            System.out.println(file.getPath());
        }
    }

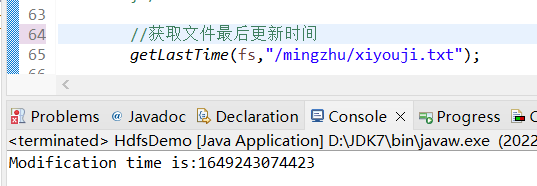

Get a file's last modification time

    public static void getLastTime(FileSystem fs, String filepath) throws URISyntaxException, IOException {
        Path path = new Path(filepath); // destination path
        FileStatus status = fs.getFileStatus(path);
        // getModificationTime() returns milliseconds since the epoch
        System.out.println("Modification time is:" + status.getModificationTime());
    }

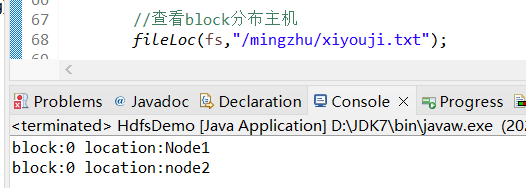
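`getModificationTime()` returns a raw count of milliseconds since January 1, 1970 UTC, which is hard to read at a glance. A minimal sketch of turning such a value into a timestamp with `java.time` follows; the class `ModTimeSketch`, the choice of UTC, and the sample value are assumptions made for illustration, not something taken from the cluster above.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

final class ModTimeSketch {
    // UTC is an assumption here; use ZoneId.systemDefault() for local time.
    private static final DateTimeFormatter FMT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss").withZone(ZoneOffset.UTC);

    // Formats an epoch-millisecond value such as FileStatus.getModificationTime().
    static String format(long epochMillis) {
        return FMT.format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        long sample = 1_600_000_000_000L; // hypothetical value, not from a real file
        System.out.println("Modification time is: " + format(sample));
    }
}
```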

View which hosts hold a file's blocks

    public static void fileLoc(FileSystem fs, String filepath) throws URISyntaxException, IOException {
        Path path = new Path(filepath); // destination path
        FileStatus status = fs.getFileStatus(path);
        // Ask for the locations of every block covering the whole file
        BlockLocation[] blocks = fs.getFileBlockLocations(status, 0, status.getLen());
        for (int i = 0; i < blocks.length; i++) {
            String[] hosts = blocks[i].getHosts();
            for (String host : hosts) {
                System.out.println("block:" + i + " location:" + host);
            }
        }
    }

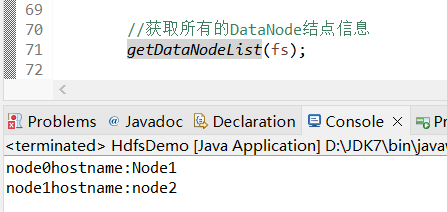
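How many blocks the loop above visits depends on the file length and the block size. The arithmetic is a ceiling division, sketched below under the assumption that the cluster uses a 128 MB block size (the default `dfs.blocksize` in Hadoop 2 and 3; the cluster in these notes may be configured differently). The class `BlockCountSketch` and the sample length are hypothetical.

```java
final class BlockCountSketch {
    // Assumed block size: 128 MB. Check dfs.blocksize on the actual cluster.
    static final long ASSUMED_BLOCK_SIZE = 128L * 1024 * 1024;

    // Ceiling division: a partly filled last block still counts as one block.
    static long blockCount(long fileLength, long blockSize) {
        if (blockSize <= 0) {
            throw new IllegalArgumentException("blockSize must be positive");
        }
        if (fileLength <= 0) {
            return 0;
        }
        return (fileLength + blockSize - 1) / blockSize;
    }

    public static void main(String[] args) {
        long length = 300L * 1024 * 1024; // hypothetical 300 MB file
        System.out.println("blocks: " + blockCount(length, ASSUMED_BLOCK_SIZE));
    }
}
```

A small text file such as xiyouji.txt fits in a single block, so the listing shows only block 0, once per host that stores a replica.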

Get information on all DataNodes

    public static void getDataNodeList(FileSystem fs) throws URISyntaxException, IOException {
        // The cast only works when fs is actually an HDFS file system
        DatanodeInfo[] dataNodeInfo = ((DistributedFileSystem) fs).getDataNodeStats();
        String[] names = new String[dataNodeInfo.length];
        for (int i = 0; i < dataNodeInfo.length; i++) {
            names[i] = dataNodeInfo[i].getHostName();
            System.out.println("node" + i + "hostname:" + names[i]);
        }
    }

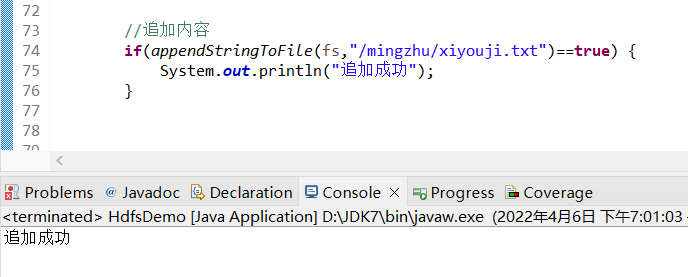

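Append to a file

    public static boolean appendStringToFile(FileSystem fs, String filepath) throws URISyntaxException, IOException {
        Path f = new Path(filepath); // destination path
        // Note: these two settings go into a new Configuration that fs does not use,
        // so they have no effect here. To take effect they must be set on the
        // Configuration passed to FileSystem.get(...) in main.
        Configuration conf = new Configuration();
        conf.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
        conf.set("dfs.client.block.write.replace-datanode-on-failure.enable", "true");
        // try-with-resources closes the stream even if the write fails
        try (FSDataOutputStream fos = fs.append(f)) {
            fos.write("\nhello world".getBytes());
        }
        return true;
    }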

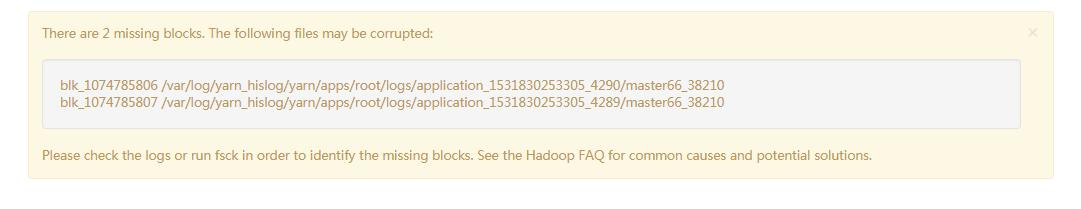

There are 2 missing blocks. The following files may be corrupted:

// HDFS has lost file blocks

hdfs fsck /

hdfs dfs -rm -r -f