ip addr : show the IP addresses

vim /etc/sysconfig/network-scripts/ifcfg-ens33

BOOTPROTO=static //was dhcp; change it to static

ONBOOT=yes

IPADDR=192.168.109.135 (any free address on the subnet; other machines: 145, node2: 147)

NETMASK=255.255.255.0

GATEWAY=192.168.109.2

DNS1=8.8.8.8
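The edits above can also be applied with sed instead of vim, which helps when several VMs need the same change. This is a minimal sketch, assuming the CentOS 7 network-scripts format and an ifcfg file that already contains BOOTPROTO and ONBOOT lines (as installer-generated files do). The function name set_static_ip is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch an ifcfg file from DHCP to a static IPv4 address.
# Assumes BOOTPROTO= and ONBOOT= lines already exist in the file.
set_static_ip() {
  local file=$1 ip=$2 netmask=$3 gateway=$4 dns=$5
  # Use a static address and bring the interface up at boot.
  sed -i -e 's/^BOOTPROTO=.*/BOOTPROTO=static/' -e 's/^ONBOOT=.*/ONBOOT=yes/' "$file"
  # Remove earlier static settings so re-running does not duplicate them.
  sed -i -e '/^IPADDR=/d' -e '/^NETMASK=/d' -e '/^GATEWAY=/d' -e '/^DNS1=/d' "$file"
  # Append the new static settings.
  printf 'IPADDR=%s\nNETMASK=%s\nGATEWAY=%s\nDNS1=%s\n' \
    "$ip" "$netmask" "$gateway" "$dns" >> "$file"
}
```

For this machine that would be `set_static_ip /etc/sysconfig/network-scripts/ifcfg-ens33 192.168.109.135 255.255.255.0 192.168.109.2 8.8.8.8`, followed by the network restart.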

service network restart //restart networking

firewall-cmd --state : check the firewall status

systemctl stop firewalld.service : stop the firewall

systemctl disable firewalld.service : keep the firewall from starting at boot

ping the gateway / an external host / the host machine (if the host machine does not respond, its firewall is probably still on)

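After VMnet8 is disabled, the VM can no longer ping the host machine: the VM-to-host connection is cut, but the VM can still reach the Internet. (VMnet8 is the host adapter that carries traffic between the VMs and the host.)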

Install the Java JDK

1. Before installing, check whether a Java JDK is already present. Uninstalling the OpenJDK bundled with the system is recommended.

rpm -qa | grep openjdk lists installed packages whose names contain openjdk, for example:

java-1.7.0-openjdk-headless-1.7.0.261-2.6.22.2.el7_8.x86_64

java-1.8.0-openjdk-headless-1.8.0.262.b10-1.el7.x86_64

java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64

java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

Check the version with java -version. An installed JDK package can be removed with a command such as:

sudo rpm -e --nodeps java-1.8.0-openjdk-1.8.0.262.b10-1.el7.x86_64
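Removing the packages one at a time is tedious when several are installed (four in the example list). A sketch that removes every openjdk package in one rpm call, assuming sudo is available; filter_openjdk and remove_openjdk are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical filter: keep only package names containing "openjdk".
# Returns success even when nothing matches, so callers can test for empty output.
filter_openjdk() {
  grep -- 'openjdk' || true
}

# Hypothetical wrapper: remove all installed openjdk packages at once.
remove_openjdk() {
  local pkgs
  pkgs=$(rpm -qa | filter_openjdk)
  if [ -z "$pkgs" ]; then
    echo "no openjdk packages installed"
    return 0
  fi
  # --nodeps skips dependency checks, as in the single-package command above.
  # $pkgs is left unquoted on purpose: one argument per package name.
  sudo rpm -e --nodeps $pkgs
}
```

Running `remove_openjdk` and then `rpm -qa | grep openjdk` again should print nothing.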

2. Download and install JDK 1.7.0

yum search jdk

yum install java-1.7.0-openjdk-devel.x86_64 -y

Java environment variables

ll /etc/alternatives/java //the symlink target shows where the JDK is installed

ll /usr/lib/jvm/java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64 //list what this directory contains

vim /etc/profile

(append the new environment variables at the end of the file)

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

(export turns the variable into an environment variable, so child processes of the shell also see it)

source /etc/profile //apply the changes to the current shell immediately
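Copying the long versioned JDK directory by hand is error-prone, and editing /etc/profile twice leaves duplicate exports. A sketch that derives JAVA_HOME from the resolved java binary and appends each export only once; it assumes the alternatives link ends in .../jre/bin/java or .../bin/java. java_home_from_binary, append_once and write_java_profile are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a resolved java path such as
# /usr/lib/jvm/<jdk-dir>/jre/bin/java into the JDK root /usr/lib/jvm/<jdk-dir>.
java_home_from_binary() {
  local home=${1%/bin/java}   # strip the trailing /bin/java
  home=${home%/jre}           # a JRE inside a JDK: step up to the JDK root
  printf '%s\n' "$home"
}

# Hypothetical helper: append a line only if an identical line is not present yet.
append_once() {
  local file=$1 line=$2
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Hypothetical wrapper: write the four Java exports used in these notes.
# Single quotes keep $JAVA_HOME and $PATH literal so they expand at login.
write_java_profile() {
  local file=$1 java_home=$2
  append_once "$file" "export JAVA_HOME=$java_home"
  append_once "$file" 'export JRE_HOME=$JAVA_HOME/jre'
  append_once "$file" 'export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar'
  append_once "$file" 'export PATH=$JAVA_HOME/bin:$PATH'
}
```

Usage would be `write_java_profile /etc/profile "$(java_home_from_binary "$(readlink -f "$(command -v java)")")"` followed by `source /etc/profile`; readlink -f follows the /etc/alternatives chain to the real binary.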

Download and install Hadoop

wget https://archive.apache.org/dist/hadoop/common/hadoop-2.6.0/hadoop-2.6.0.tar.gz

mv hadoop-2.6.0.tar.gz /opt/ //move the downloaded file to /opt

cd /opt and extract the archive there:

tar -zxvf hadoop-2.6.0.tar.gz

cd hadoop-2.6.0

vim /etc/profile

(append at the end of the file)

export HADOOP_HOME=/opt/hadoop-2.6.0

export PATH=$JAVA_HOME/bin:$HADOOP_HOME/bin:$PATH (modify the existing PATH line)

source /etc/profile //apply the changes immediately

hadoop (to verify: it should print its usage message)
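If `hadoop` is not found, or an unexpected copy runs, the PATH edit did not take effect in the current shell. A small check, assuming HADOOP_HOME is exported as above; check_hadoop_on_path is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical check: the hadoop found on PATH should be $HADOOP_HOME/bin/hadoop.
check_hadoop_on_path() {
  local found
  found=$(command -v hadoop) || { echo "hadoop not on PATH" >&2; return 1; }
  if [ "$found" != "$HADOOP_HOME/bin/hadoop" ]; then
    echo "hadoop resolves to $found, expected $HADOOP_HOME/bin/hadoop" >&2
    return 1
  fi
  echo "ok: $found"
}
```

A failure here usually means /etc/profile was edited but not sourced, or the PATH line still lacks $HADOOP_HOME/bin.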

Hadoop environment and configuration files

cd /opt/hadoop-2.6.0/etc/hadoop

vim hadoop-env.sh

(find these lines in the file and set them, adding them if missing)

export JAVA_HOME=/usr/lib/jvm/java-1.7.0-openjdk-1.7.0.261-2.6.22.2.el7_8.x86_64

export HADOOP_HOME=/opt/hadoop-2.6.0

vim core-site.xml

vim hdfs-site.xml

cp mapred-site.xml.template mapred-site.xml

vim mapred-site.xml

vim yarn-site.xml
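The steps above open the four site files without showing their contents. A minimal sketch of one possible configuration, assuming master runs the NameNode and ResourceManager, HDFS listens on port 9000, hadoop.tmp.dir is /opt/hadoop-2.6.0/tmp (the tmp directory cleared later in these notes), and dfs.replication is 2 to match the two workers. The property names are standard Hadoop 2.x keys; the values and the function name write_hadoop_site_files are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write minimal core/hdfs/mapred/yarn site files into a conf dir.
# Values (port 9000, replication 2, tmp path) are assumptions for this 3-node cluster.
write_hadoop_site_files() {
  local conf_dir=$1 master=${2:-master} tmp_dir=${3:-/opt/hadoop-2.6.0/tmp}

  # HDFS address and base directory for NameNode/DataNode data.
  cat > "$conf_dir/core-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property><name>fs.defaultFS</name><value>hdfs://$master:9000</value></property>
  <property><name>hadoop.tmp.dir</name><value>$tmp_dir</value></property>
</configuration>
EOF

  # Two DataNodes (slave1, slave2), so keep two copies of each block.
  cat > "$conf_dir/hdfs-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property><name>dfs.replication</name><value>2</value></property>
</configuration>
EOF

  # Run MapReduce jobs on YARN.
  cat > "$conf_dir/mapred-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
</configuration>
EOF

  # ResourceManager host and the shuffle service MapReduce needs on NodeManagers.
  cat > "$conf_dir/yarn-site.xml" <<EOF
<?xml version="1.0"?>
<configuration>
  <property><name>yarn.resourcemanager.hostname</name><value>$master</value></property>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
</configuration>
EOF
}
```

Calling `write_hadoop_site_files /opt/hadoop-2.6.0/etc/hadoop` writes mapred-site.xml directly, so the copy from the template is not needed in that case. The same files have to be present on every node.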

vim /etc/hostname //change the hostname

master (on the worker machines, slave1 and slave2 respectively)

vim /etc/hosts //map each machine's IP address to its hostname (on every machine)

192.168.109.135 master

192.168.109.136 slave1

192.168.109.138 slave2
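Because /etc/hosts needs the same three entries on every machine, a small idempotent helper avoids duplicate lines when the step is repeated. A sketch using the addresses listed above; add_host_entry and add_cluster_hosts are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add "IP NAME" unless a line already maps IP to NAME.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 only if some line has $1 == ip and NAME among its other fields.
  if awk -v ip="$ip" -v name="$name" '
       $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
       END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Hypothetical wrapper with the three cluster machines from these notes.
add_cluster_hosts() {
  local file=$1
  add_host_entry "$file" 192.168.109.135 master
  add_host_entry "$file" 192.168.109.136 slave1
  add_host_entry "$file" 192.168.109.138 slave2
}
```

Running `add_cluster_hosts /etc/hosts` (as root) on each machine gives all three the same mappings.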

Edit the slaves file (the list of worker hosts)

vim /opt/hadoop-2.6.0/etc/hadoop/slaves

slave1

slave2

reboot (restart)

hostname (check the hostname)

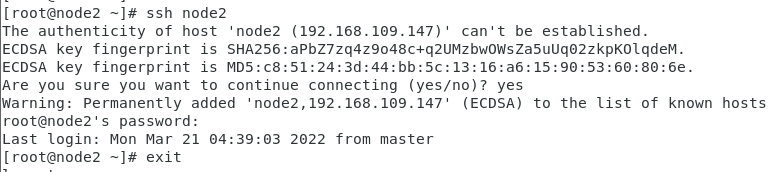

Set up passwordless SSH login

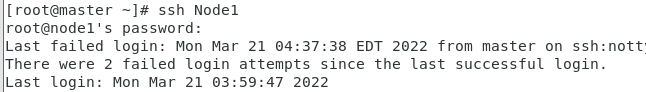

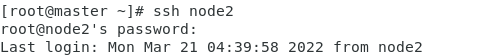

ssh Node1

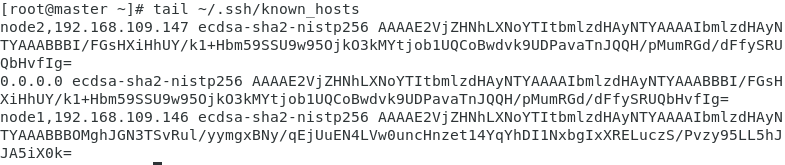

tail ~/.ssh/known_hosts (shows the entries for the machines you just logged into)

ssh-keygen -t rsa

ssh-copy-id -i ~/.ssh/id_rsa.pub root@Node1 //copy the public key to the worker node
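With several nodes, key creation and distribution can be looped. A sketch, assuming root logins and that the master should also accept its own key (the start scripts SSH into every listed host); ensure_rsa_key and distribute_key are hypothetical helper names, while ssh-keygen and ssh-copy-id are the same tools used above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create an RSA key pair only if it does not exist yet.
ensure_rsa_key() {
  local key=${1:-$HOME/.ssh/id_rsa}
  if [ ! -f "$key" ]; then
    mkdir -p "$(dirname "$key")"
    chmod 700 "$(dirname "$key")"
    # -N '' sets an empty passphrase; -q suppresses the progress output.
    ssh-keygen -t rsa -N '' -f "$key" -q
  fi
}

# Hypothetical helper: copy the default public key to each host as USER.
distribute_key() {
  local user=$1 host
  shift
  for host in "$@"; do
    ssh-copy-id -i "$HOME/.ssh/id_rsa.pub" "$user@$host"
  done
}
```

On the master: `ensure_rsa_key` then `distribute_key root master slave1 slave2`. Each host asks for its password once; afterwards `ssh slave1` should log in without a prompt.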

hadoop namenode -format //format the NameNode before the first start only

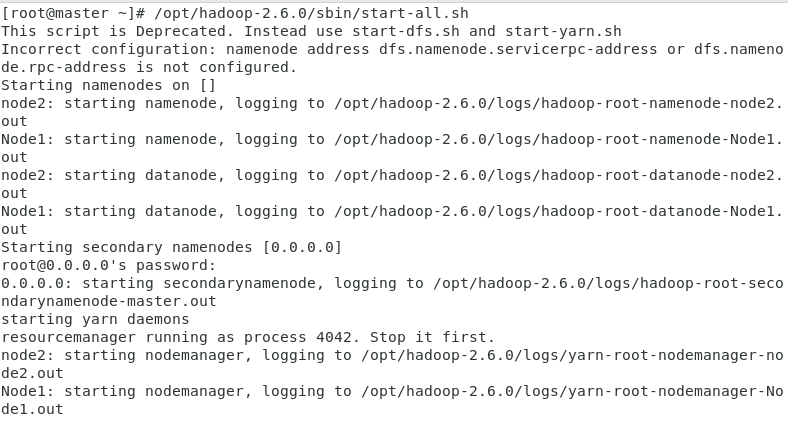

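/opt/hadoop-2.6.0/sbin/start-dfs.sh //start HDFS (NameNode, SecondaryNameNode, DataNodes)

/opt/hadoop-2.6.0/sbin/start-yarn.sh //start YARN (ResourceManager, NodeManagers)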
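After the start scripts run (start-dfs.sh for HDFS, start-yarn.sh for YARN), `jps` on each machine shows which Java daemons are up. A sketch that compares its output against an expected list; missing_daemons is a hypothetical helper name, and the daemon lists in the usage note assume the default layout where the master runs NameNode, SecondaryNameNode and ResourceManager and the slaves run DataNode and NodeManager.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each expected daemon that is absent from jps output.
# jps prints "<pid> <MainClass>" per line; match the class name exactly.
missing_daemons() {
  local out=$1 d
  shift
  for d in "$@"; do
    printf '%s\n' "$out" | grep -qE "^[0-9]+ $d\$" || echo "$d"
  done
}
```

On the master, `missing_daemons "$(jps)" NameNode SecondaryNameNode ResourceManager`; on the slaves, `missing_daemons "$(jps)" DataNode NodeManager`. Empty output means everything expected is running; otherwise check the matching log under /opt/hadoop-2.6.0/logs.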

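If you change the configuration files after the first format, delete the log files and the data files (the tmp directory), then format the NameNode again before restarting: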

cd /opt/hadoop-2.6.0/logs

ls //take a look

rm -rf *

ls //check that everything was deleted

cd ..

rm -rf tmp

ls

mkdir tmp //recreate the tmp directory

If hadoop-2.6.0 had no tmp directory to begin with, create it yourself.
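The cleanup above can be wrapped in one function with a guard against running it in the wrong directory. A sketch, assuming the tmp directory sits directly under the Hadoop home as in these notes; reset_hadoop_data is a hypothetical helper name. DataNodes keep their own copy of the data under tmp, so running it on every node is likely needed; otherwise they may refuse to join the reformatted NameNode.

```shell
#!/usr/bin/env bash
# Hypothetical helper: clear logs and the tmp data directory under a Hadoop home.
reset_hadoop_data() {
  local home=${1:?usage: reset_hadoop_data HADOOP_HOME}
  # Refuse to delete anything unless this looks like a Hadoop install.
  [ -d "$home/etc/hadoop" ] || { echo "not a Hadoop home: $home" >&2; return 1; }
  # The glob sits outside the quotes so it expands; -f ignores an empty logs dir.
  rm -rf "$home/logs/"* "$home/tmp"
  mkdir -p "$home/tmp"
}
```

Typical sequence: stop the daemons, run `reset_hadoop_data /opt/hadoop-2.6.0` on each node, then `hadoop namenode -format` on the master and start again.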

If restarting the network fails with "Job for network.service failed because the control process exited with error code.", stop and disable NetworkManager:

[root@mina0 hadoop]# systemctl stop NetworkManager

[root@mina0 hadoop]# systemctl disable NetworkManager