Backend is MNN's abstraction of a compute device. MNN already supports CPU, Vulkan, OpenCL, Metal and other backends, but an external NPU backend is added in a somewhat different way.

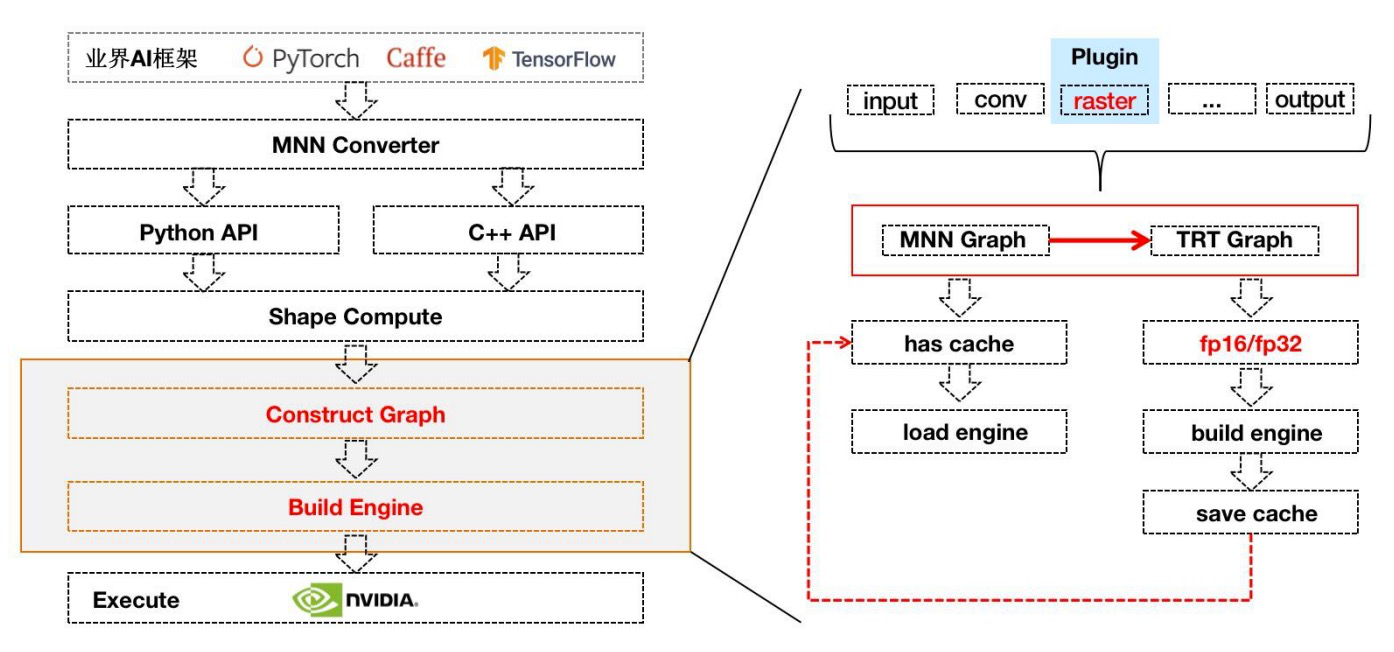

1. 框架图

2. Adding a new backend

2.1 Implementation

Inherit from the abstract Backend class and implement all of its pure virtual functions.

```cpp
// Call order of the member functions:
// constructor -> onCreate -> onResizeBegin -> onResizeEnd -> onAcquire -> onCopyBuffer
// -> onExecuteBegin -> onExecuteEnd -> onCopyBuffer -> onClearBuffer
class NPUBackend : public Backend {
public:
    // Constructor; NPURuntime holds the cache parameters and extra compile options
    NPUBackend(const NPURuntime* runtime);
    virtual ~NPUBackend();
    // onCreate: create the NPU operator that matches the type of the given op
    virtual Execution* onCreate(const std::vector<Tensor*>& inputs, const std::vector<Tensor*>& outputs, const MNN::Op* op) override;
    // Preparation before inference
    virtual void onExecuteBegin() const override;
    // Post-processing after inference
    virtual void onExecuteEnd() const override;
    // Unified memory allocation
    virtual Backend::MemObj* onAcquire(const Tensor* tensor, StorageType storageType) override;
    // Clear buffers
    virtual bool onClearBuffer() override;
    // Memory copy (typically used for data layout conversion)
    virtual void onCopyBuffer(const Tensor* srcTensor, const Tensor* dstTensor) const override;
    // Preparation before shape computation / memory allocation
    virtual void onResizeBegin() override;
    // Post-processing after shape computation / memory allocation
    virtual void onResizeEnd() override;
};
```

2.2 onCreate

The backend must create an Execution for each op through onCreate. An Execution usually represents one operator instance:

```cpp
Execution* NPUBackend::onCreate(const std::vector<Tensor*>& inputs, const std::vector<Tensor*>& outputs, const MNN::Op* op) {
    // Get the map of registered NPU operator creators
    auto map = getCreatorMap();
    auto iter = map->find(op->type());
    if (iter == map->end()) {
        // No NPU creator is registered for this op type
        MNN_ERROR("map not find !!! \n");
        if (op->name() != nullptr) {
            MNN_PRINT("[NPU] Don't support type %d, %s\n", op->type(), op->name()->c_str());
        }
        return nullptr;
    }
    // The NPU supports this op type, so create the Execution
    auto exe = iter->second->onCreate(inputs, outputs, op, this);
    if (nullptr == exe) {
        MNN_ERROR("nullptr == exe !!! \n");
        if (op->name() != nullptr) {
            MNN_PRINT("[NPU] The Creator Don't support type %d, %s\n", op->type(), op->name()->c_str());
        }
        return nullptr;
    }
    return exe;
}
```
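The lookup above returns nullptr whenever the NPU cannot handle an op. The self-contained sketch below reproduces that pattern. Every name in it is hypothetical and not an MNN API: OpKind, FakeExecution, Creator, creatorMap, createExecution and createWithFallback. The CPU fallback in createWithFallback only illustrates the idea. In MNN, the decision to fall back is expected to be made by the code that calls the backend, not by the backend itself.

```cpp
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-ins for MNN's OpType and Execution.
enum class OpKind { ReLU, Conv2D, Softmax };

struct FakeExecution {
    std::string name;
};

// Hypothetical creator interface, shaped like NPUBackend::Creator.
struct Creator {
    virtual ~Creator() = default;
    virtual FakeExecution* onCreate(OpKind kind) const = 0;
};

struct ReluCreator : Creator {
    FakeExecution* onCreate(OpKind) const override { return new FakeExecution{"npu_relu"}; }
};

// Registry of creators keyed by op type (plays the role of getCreatorMap()).
std::map<OpKind, std::unique_ptr<Creator>>& creatorMap() {
    static std::map<OpKind, std::unique_ptr<Creator>> map;
    return map;
}

// Mirrors NPUBackend::onCreate: nullptr means "this backend cannot run the op".
FakeExecution* createExecution(OpKind kind) {
    auto& map = creatorMap();
    auto iter = map.find(kind);
    if (iter == map.end()) {
        return nullptr;  // op type not registered for the NPU
    }
    return iter->second->onCreate(kind);  // the creator itself may still return nullptr
}

// Hypothetical caller: try the NPU first, otherwise fall back to a CPU execution.
std::unique_ptr<FakeExecution> createWithFallback(OpKind kind) {
    if (FakeExecution* exe = createExecution(kind)) {
        return std::unique_ptr<FakeExecution>(exe);
    }
    return std::unique_ptr<FakeExecution>(new FakeExecution{"cpu_fallback"});
}
```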

2.3 onCopyBuffer

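A copy can happen inside the backend or between the NPU backend and the CPU backend. The copy must also handle layout conversion between tensors. When the layouts match, the data can be copied directly. When they differ, for example NHWC versus NC4HW4, a dedicated conversion is usually required. This work is implemented in onCopyBuffer. For details, see: https://github.com/alibaba/MNN/blob/master/source/backend/hiai/backend/NPUBackend.cpp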
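To make the NHWC/NC4HW4 case concrete, here is a minimal sketch of the index mapping such a copy has to perform. It assumes float data and the NC4HW4 layout [N][ceil(C/4)][H][W][4], where the unused lanes of the last channel block are zero-padded. The helpers nhwcToNc4hw4 and nc4hw4ToNhwc are hypothetical and are not the code in NPUBackend.cpp.

```cpp
#include <cstddef>
#include <vector>

// Offset of element (b, y, x, ch) in an NHWC buffer.
static size_t nhwcIndex(int b, int y, int x, int ch, int h, int w, int c) {
    return ((static_cast<size_t>(b) * h + y) * w + x) * c + ch;
}

// Offset of element (b, y, x, ch) in an NC4HW4 buffer: channels are grouped in blocks of 4.
static size_t nc4hw4Index(int b, int y, int x, int ch, int h, int w, int c) {
    const int c4 = (c + 3) / 4;
    return (((static_cast<size_t>(b) * c4 + ch / 4) * h + y) * w + x) * 4 + ch % 4;
}

// Hypothetical helper: repack NHWC into NC4HW4, zero-filling the padding lanes.
std::vector<float> nhwcToNc4hw4(const std::vector<float>& src, int n, int h, int w, int c) {
    const int c4 = (c + 3) / 4;
    std::vector<float> dst(static_cast<size_t>(n) * c4 * h * w * 4, 0.0f);
    for (int b = 0; b < n; ++b)
        for (int y = 0; y < h; ++y)
            for (int x = 0; x < w; ++x)
                for (int ch = 0; ch < c; ++ch)
                    dst[nc4hw4Index(b, y, x, ch, h, w, c)] = src[nhwcIndex(b, y, x, ch, h, w, c)];
    return dst;
}

// Hypothetical helper: unpack NC4HW4 back into NHWC, dropping the padding lanes.
std::vector<float> nc4hw4ToNhwc(const std::vector<float>& src, int n, int h, int w, int c) {
    std::vector<float> dst(static_cast<size_t>(n) * h * w * c);
    for (int b = 0; b < n; ++b)
        for (int y = 0; y < h; ++y)
            for (int x = 0; x < w; ++x)
                for (int ch = 0; ch < c; ++ch)
                    dst[nhwcIndex(b, y, x, ch, h, w, c)] = src[nc4hw4Index(b, y, x, ch, h, w, c)];
    return dst;
}
```

With C = 5, every pixel occupies two channel blocks, and the second block holds one real value plus three zeros. The NC4HW4 buffer is therefore larger than the NHWC one whenever C is not a multiple of 4.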

2.4 onResizeEnd

Compiles the model graph built during resizing and generates the NPU executable model:

```cpp
void NPUBackend::onResizeEnd() {
    // Build the IR model from the constructed graph and load it onto the NPU
    bulidIRModelAndLoad();
}
```

2.5 onExecuteEnd

Model inference code goes here, i.e. the calls to the inference API provided by the NPU SDK:

```cpp
void NPUBackend::onExecuteEnd() const {
    // Run inference on the compiled model through the NPU SDK
    process(0);
}
```
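A small mock can show the split between compiling once in onResizeEnd and running in onExecuteEnd. GraphBackend below is hypothetical and makes no MNN or HiAI calls. addNode stands in for what an Execution's onResize contributes to the graph, onResizeEnd stands in for bulidIRModelAndLoad(), and onExecuteEnd stands in for process(0).

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical mock of a graph-mode NPU backend (no MNN or HiAI calls).
class GraphBackend {
public:
    // A new resize pass starts a fresh graph.
    void onResizeBegin() {
        mNodes.clear();
        mCompiled = false;
    }
    // Called from each Execution's onResize to append its IR node.
    void addNode(const std::string& node) { mNodes.push_back(node); }
    // Stands in for bulidIRModelAndLoad(): "compile" the collected graph once.
    void onResizeEnd() {
        mModel = mNodes;
        mCompiled = true;
        ++mCompileCount;
    }
    void onExecuteBegin() const {}
    // Stands in for process(0): run the compiled model; fails if nothing was compiled.
    bool onExecuteEnd() const {
        if (!mCompiled) {
            return false;
        }
        ++mRunCount;
        return true;
    }
    size_t modelSize() const { return mModel.size(); }
    int compileCount() const { return mCompileCount; }
    int runCount() const { return mRunCount; }

private:
    std::vector<std::string> mNodes;
    std::vector<std::string> mModel;
    bool mCompiled = false;
    int mCompileCount = 0;
    mutable int mRunCount = 0;  // mutable because onExecuteEnd is const, as in NPUBackend
};
```

In this mock, repeated inferences reuse the compiled model, and only a new resize pass rebuilds it. Compiling in onResizeEnd rather than in onExecuteEnd avoids paying the compilation cost on every run.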

2.6 Registration

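Define a runtime creator, then call MNNInsertExtraRuntimeCreator in the registration code to complete the backend registration. The registration function must be declared and called in BackendRegister.cpp: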

```cpp
struct NPUBackendCreator : RuntimeCreator {
    // Initializes the NPU environment and checks device compatibility;
    // returning nullptr on an incompatible device makes MNN fall back.
    virtual Runtime* onCreate(const Backend::Info& info) const override {
        std::shared_ptr<hiai::AiModelMngerClient> mgrClient = std::make_shared<hiai::AiModelMngerClient>();
        if (mgrClient.get() == nullptr) {
            MNN_ERROR("mgrClient.get() == NULL");
            return nullptr;
        }
        auto ret = mgrClient->Init(nullptr);
        if (ret != hiai::AI_SUCCESS) {
            MNN_ERROR("[NPU] AiModelMngerClient Init Failed!\n");
            return nullptr;
        }
        // Query the HiAI DDK version available on the device
        const char* currentversion = mgrClient->GetVersion();
        if (currentversion != nullptr) {
            MNN_PRINT("[NPU] ddk currentversion : %s \n", currentversion);
        } else {
            MNN_ERROR("[NPU] current version don't support, return nullptr\n");
            return nullptr;
        }
        // Reject versions that compare <= "100.330.000.000" (lexicographic string comparison)
        if (std::string(currentversion).compare("100.330.000.000") <= 0) {
            MNN_PRINT("[NPU] current version don't support,version=%s \n", currentversion);
            return nullptr;
        }
        return new NPURuntime(info);
    }
};

// Register the creator for MNN_FORWARD_USER_0 during static initialization
static const auto __npu_global_initializer = []() {
    MNNInsertExtraRuntimeCreator(MNN_FORWARD_USER_0, new NPUBackendCreator, true);
    return true;
}();
```
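The version check above uses std::string::compare, which orders versions correctly only when every field has the same width, as in "100.330.000.000". A field-by-field numeric comparison is an alternative that does not depend on that assumption. It is swapped in here purely for illustration. compareDdkVersion and parseVersion are hypothetical helpers, not part of MNN or the HiAI DDK, and the sketch assumes each field is a decimal integer.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: split "100.330.000.000" into {100, 330, 0, 0}.
static std::vector<long> parseVersion(const std::string& v) {
    std::vector<long> parts;
    std::stringstream ss(v);
    std::string field;
    while (std::getline(ss, field, '.')) {
        parts.push_back(field.empty() ? 0 : std::stol(field));
    }
    return parts;
}

// Returns <0, 0 or >0 like std::string::compare, but compares each field numerically.
// Missing trailing fields are treated as 0.
int compareDdkVersion(const std::string& a, const std::string& b) {
    const std::vector<long> pa = parseVersion(a);
    const std::vector<long> pb = parseVersion(b);
    const size_t n = pa.size() > pb.size() ? pa.size() : pb.size();
    for (size_t i = 0; i < n; ++i) {
        const long x = i < pa.size() ? pa[i] : 0;
        const long y = i < pb.size() ? pb[i] : 0;
        if (x != y) {
            return x < y ? -1 : 1;
        }
    }
    return 0;
}
```

For zero-padded, fixed-width strings both approaches agree. They diverge when field widths differ: "99.1" sorts after "100.0" lexicographically but before it numerically.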

3. Adding a new operator

3.1 Implementation

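Each new operator must inherit from Execution and override two functions, onResize and onExecute. onResize converts the MNN parameters into NPU parameters and builds the graph. onExecute is not used by the NPU backend and only needs to return NO_ERROR.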

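```cpp
class NPUCommonExecution : public Execution {
public:
    NPUCommonExecution(Backend *backend, const Op *op);
    virtual ~NPUCommonExecution() = default;
    // Converts MNN op parameters into NPU operators and adds them to the graph
    virtual ErrorCode onResize(const std::vector<Tensor *> &inputs, const std::vector<Tensor *> &outputs) override;
    // Not used by the NPU backend; only returns NO_ERROR
    virtual ErrorCode onExecute(const std::vector<Tensor *> &inputs, const std::vector<Tensor *> &outputs) override;
};

// Example: onResize of NPUActivation, an Execution derived from NPUCommonExecution
ErrorCode NPUActivation::onResize(const std::vector<Tensor *> &inputs, const std::vector<Tensor *> &outputs) {
    auto opName = mOp->name()->str();
    // Get the NPU op that produces this op's input
    auto xOp = mNpuBackend->getInputOps(mOp);
    // Create the NPU Activation op; mType selects the activation mode
    std::shared_ptr<ge::op::Activation> relu(new ge::op::Activation(opName + "_relu"));
    (*relu).set_input_x(*xOp.get()).set_attr_coef(.000000).set_attr_mode(mType);
    // Record the new op as the producer of this op's outputs
    mNpuBackend->setOutputOps(mOp, {relu}, outputs);
    return NO_ERROR;
}
```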
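The getInputOps / setOutputOps calls connect each new NPU op to the ops that produce its inputs. The sketch below shows that bookkeeping under simple assumptions: tensors are identified by an int id, and each id maps to the graph node that produces it. IrNode, GraphBuilder and resizeRelu are hypothetical. IrNode stands in for ge::Operator, and none of this is the actual NPUBackend implementation.

```cpp
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical IR node standing in for ge::Operator.
struct IrNode {
    std::string name;
    std::vector<std::shared_ptr<IrNode>> inputs;
};

// Hypothetical bookkeeping behind getInputOps/setOutputOps:
// each tensor id maps to the IR node that produces it.
class GraphBuilder {
public:
    void setOutput(int tensorId, std::shared_ptr<IrNode> node) { mProducers[tensorId] = std::move(node); }
    std::shared_ptr<IrNode> getInput(int tensorId) const {
        auto it = mProducers.find(tensorId);
        return it == mProducers.end() ? nullptr : it->second;
    }

private:
    std::map<int, std::shared_ptr<IrNode>> mProducers;
};

// Mirrors NPUActivation::onResize: look up the input producer, create a node
// wired to it, then publish the node as the producer of the output tensor.
bool resizeRelu(GraphBuilder& g, const std::string& opName, int inputTensor, int outputTensor) {
    auto x = g.getInput(inputTensor);
    if (!x) {
        return false;  // no NPU node produces this input yet
    }
    auto relu = std::make_shared<IrNode>(IrNode{opName + "_relu", {x}});
    g.setOutput(outputTensor, relu);
    return true;
}
```

Because every Execution publishes its outputs this way during resize, executions that run later can find their inputs. The complete graph therefore exists by the time onResizeEnd compiles it.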

3.2 Registration

Once the Execution is implemented, register a creator for it to add the op type to the NPU backend:

```cpp
// Constructing a static instance registers creator T for the given op type
template <class T>
class NPUCreatorRegister {
public:
    NPUCreatorRegister(OpType type) {
        T *t = new T;
        NPUBackend::addCreator(type, t);
    }
    ~NPUCreatorRegister() = default;
};

// Generic creator for Executions constructed as T(backend, op, inputs, outputs)
template <typename T>
class TypedCreator : public NPUBackend::Creator {
public:
    virtual ~TypedCreator() = default;
    virtual Execution *onCreate(const std::vector<Tensor *> &inputs, const std::vector<Tensor *> &outputs,
                                const MNN::Op *op, Backend *backend) const override {
        auto newOp = new T(backend, op, inputs, outputs);
        return newOp;
    }
};

// Creator for NPUActivation; the last argument (1) is the activation mode used for ReLU
class ActivationCreator : public NPUBackend::Creator {
public:
    virtual Execution *onCreate(const std::vector<Tensor *> &inputs, const std::vector<Tensor *> &outputs,
                                const MNN::Op *op, Backend *backend) const override {
        return new NPUActivation(backend, op, inputs, outputs, 1);
    }
};

// Static registration: OpType_ReLU is handled by ActivationCreator
NPUCreatorRegister<ActivationCreator> __relu_op(OpType_ReLU);
```
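NPUCreatorRegister works because a global object's constructor runs during static initialization, before main. The self-contained sketch below reproduces the pattern. It uses a function-local static map, which is constructed on first use, so registrars in other translation units never see it uninitialized. OpKind, Exec, CreatorBase, registry, CreatorRegister and ReluCreator are hypothetical names, not MNN types.

```cpp
#include <map>
#include <memory>
#include <string>

// Hypothetical stand-ins (not MNN types).
enum class OpKind { ReLU, Sigmoid };
struct Exec {
    std::string kind;
};

struct CreatorBase {
    virtual ~CreatorBase() = default;
    virtual Exec* onCreate() const = 0;
};

// Function-local static: built on first call, so it is ready whenever a
// registrar in any translation unit runs during static initialization.
std::map<OpKind, std::unique_ptr<CreatorBase>>& registry() {
    static std::map<OpKind, std::unique_ptr<CreatorBase>> map;
    return map;
}

// Same idea as NPUCreatorRegister<T>: constructing the object registers T.
template <class T>
struct CreatorRegister {
    explicit CreatorRegister(OpKind kind) { registry()[kind].reset(new T); }
};

struct ReluCreator : CreatorBase {
    Exec* onCreate() const override { return new Exec{"relu"}; }
};

// Runs before main(); mirrors `NPUCreatorRegister<ActivationCreator> __relu_op(OpType_ReLU);`
static CreatorRegister<ReluCreator> gReluRegister(OpKind::ReLU);
```

One general caveat applies to this style. When code is linked from a static library, the linker may drop object files whose symbols are never referenced, and any registrars in those files then never run. Calling a registration function explicitly from a file that is always linked avoids this.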

4. Build configuration

When building with CMake, you also need to update CMakeLists.txt after making the code changes.

Add a CMakeLists.txt to the backend directory (e.g. hiai or tensorrt). It imports the dependent libraries and specifies the source files to compile:

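```cmake
file(GLOB_RECURSE MNN_NPU_SRCS ${CMAKE_CURRENT_LIST_DIR}/*.cpp)
# Separate shared library for MNN_SEP_BUILD; otherwise an object library,
# since the top-level CMakeLists.txt links it through $<TARGET_OBJECTS:MNN_NPU>
IF(MNN_SEP_BUILD)
    add_library(MNN_NPU SHARED ${MNN_NPU_SRCS})
ELSE()
    add_library(MNN_NPU OBJECT ${MNN_NPU_SRCS})
ENDIF()
# Import the prebuilt HiAI library for the target ABI
add_library(hiai SHARED IMPORTED)
set_target_properties(hiai PROPERTIES
    IMPORTED_LOCATION "${CMAKE_CURRENT_SOURCE_DIR}/3rdParty/${ANDROID_ABI}/libhiai.so")
target_include_directories(MNN_NPU PRIVATE ${CMAKE_CURRENT_LIST_DIR}/backend/)
target_include_directories(MNN_NPU PRIVATE ${CMAKE_CURRENT_LIST_DIR}/3rdParty/include/)
```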

In the root directory, modify CMakeLists.txt to add an NPU option and the backend dependencies:

```cmake
# NPU
IF(MNN_NPU)
    add_subdirectory(${CMAKE_CURRENT_LIST_DIR}/source/backend/hiai/)
    IF(MNN_SEP_BUILD)
        list(APPEND MNN_DEPS MNN_NPU)
    ELSE()
        list(APPEND MNN_TARGETS MNN_NPU)
        list(APPEND MNN_OBJECTS_TO_LINK $<TARGET_OBJECTS:MNN_NPU>)
        list(APPEND MNN_EXTRA_DEPENDS ${CMAKE_CURRENT_LIST_DIR}/source/backend/hiai/3rdParty/${ANDROID_ABI}/libhiai.so)
        list(APPEND MNN_EXTRA_DEPENDS ${CMAKE_CURRENT_LIST_DIR}/source/backend/hiai/3rdParty/${ANDROID_ABI}/libhiai_ir_build.so)
        list(APPEND MNN_EXTRA_DEPENDS ${CMAKE_CURRENT_LIST_DIR}/source/backend/hiai/3rdParty/${ANDROID_ABI}/libhiai_ir.so)
    ENDIF()
ENDIF()
```

5. Reference code

5.1 Huawei HiAI