- I. Introduction to OpenStack

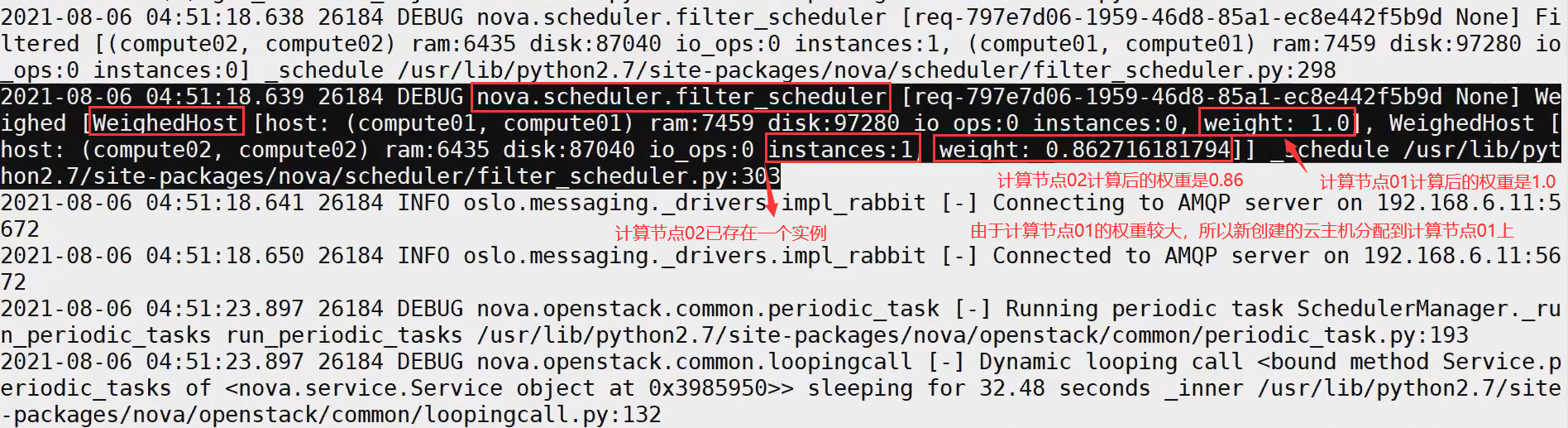

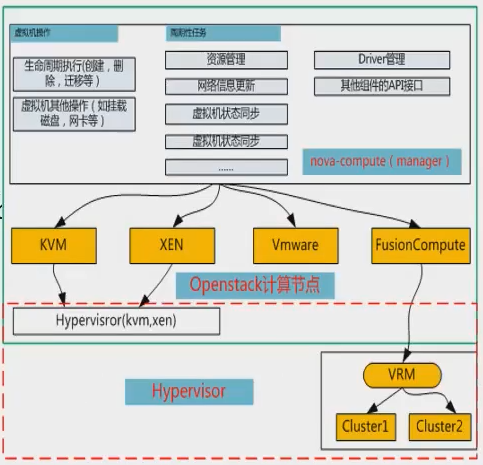

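- nova-compute runs a periodic task that looks up in the database every virtual machine running on its node, computes the free memory and free disk space, and then updates the compute_node record in the database so that scheduling stays accurate.

- OpenStack automated deployment (all-in-one)

- Illustrated guide to using VMware Workstation virtual machines

- Virtual NICs, virtual switches, and VM network adapters

- What problems does Kubernetes (K8s) solve?

- When OpenStack is used for a cloud deployment, it supports the following network types:

- OpenStack

- OpenStack overview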

- (ListOpt) List of network type driver entrypoints to be loaded from

- the neutron.ml2.type_drivers namespace.

- type_drivers = local,flat,vlan,gre,vxlan

- Example: type_drivers = flat,vlan,gre,vxlan

- (ListOpt) Ordered list of network_types to allocate as tenant

- networks. The default value ‘local’ is useful for single-box testing

- but provides no connectivity between hosts.

- tenant_network_types = local

- Example: tenant_network_types = vlan,gre,vxlan

- (ListOpt) Ordered list of networking mechanism driver entrypoints

- to be loaded from the neutron.ml2.mechanism_drivers namespace.

- mechanism_drivers =

- Example: mechanism_drivers = openvswitch,mlnx

- Example: mechanism_drivers = arista

- Example: mechanism_drivers = cisco,logger

- Example: mechanism_drivers = openvswitch,brocade

- Example: mechanism_drivers = linuxbridge,brocade

- (ListOpt) Ordered list of extension driver entrypoints

- to be loaded from the neutron.ml2.extension_drivers namespace.

- extension_drivers =

- Example: extension_drivers = anewextensiondriver

- (ListOpt) List of physical_network names with which flat networks

- can be created. Use * to allow flat networks with arbitrary

- physical_network names.

- flat_networks =

- Example:flat_networks = physnet1,physnet2

- Example:flat_networks = *

- (ListOpt) List of `<physical_network>[:<vlan_min>:<vlan_max>]` tuples

- specifying physical_network names usable for VLAN provider and

- tenant networks, as well as ranges of VLAN tags on each

- physical_network available for allocation as tenant networks.

- network_vlan_ranges =

- Example: network_vlan_ranges = physnet1:1000:2999,physnet2

- (ListOpt) Comma-separated list of `<tun_min>:<tun_max>` tuples enumerating ranges of GRE tunnel IDs that are available for tenant network allocation

- tunnel_id_ranges =

- (ListOpt) Comma-separated list of `<vni_min>:<vni_max>` tuples enumerating

- ranges of VXLAN VNI IDs that are available for tenant network allocation.

- vni_ranges =

- (StrOpt) Multicast group for the VXLAN interface. When configured, will

- enable sending all broadcast traffic to this multicast group. When left

- unconfigured, will disable multicast VXLAN mode.

- vxlan_group =

- Example: vxlan_group = 239.1.1.1

- Controls if neutron security group is enabled or not.

- It should be false when you use nova security group.

- enable_security_group = True

- Use ipset to speed-up the iptables security groups. Enabling ipset support

- requires that ipset is installed on L2 agent node.

- enable_ipset = True
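The `network_vlan_ranges` format shown in the sample above can be illustrated with a small parser. This is only a sketch: `parse_network_vlan_ranges` is a hypothetical helper written for these notes, not Neutron's own parsing code, and the 1 to 4094 bound is the usual usable VLAN ID range rather than something the sample states.

```python
def parse_network_vlan_ranges(value):
    """Parse 'physnet1:1000:2999,physnet2' into {name: [(vlan_min, vlan_max), ...]}.

    Hypothetical helper for illustration only; it is not part of Neutron.
    """
    ranges = {}
    for entry in value.split(","):
        entry = entry.strip()
        if not entry:
            continue
        parts = entry.split(":")
        name = parts[0]
        # A bare name means: usable for provider networks, no tenant VLAN range.
        ranges.setdefault(name, [])
        if len(parts) == 3:
            vlan_min, vlan_max = int(parts[1]), int(parts[2])
            if not (1 <= vlan_min <= vlan_max <= 4094):
                raise ValueError(f"invalid VLAN range in {entry!r}")
            ranges[name].append((vlan_min, vlan_max))
        elif len(parts) != 1:
            raise ValueError(f"expected name or name:min:max, got {entry!r}")
    return ranges


parsed = parse_network_vlan_ranges("physnet1:1000:2999,physnet2")
print(parsed)
```

With the example value from the sample file, physnet1 offers VLAN tags 1000 to 2999 for tenant networks, while physnet2 has no tenant range.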

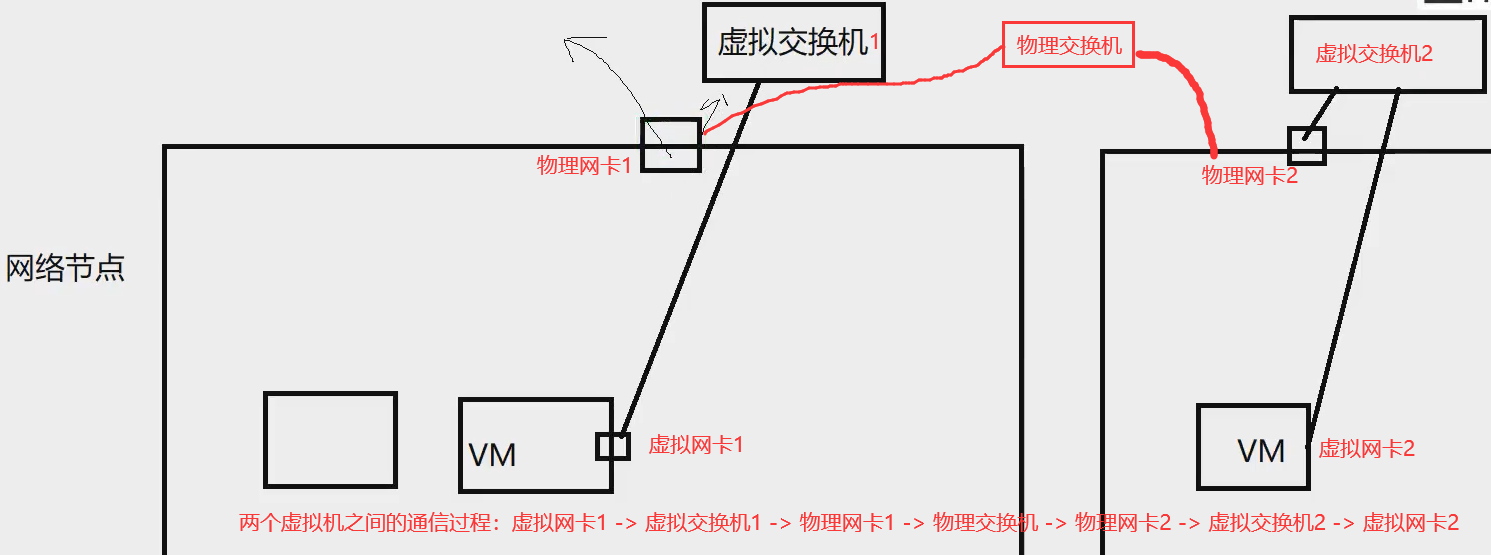

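- When several virtual machines run on the same compute node, they reach each other locally and their traffic no longer has to leave through the physical NIC, because Neutron creates a virtual switch inside the node.

- Summarizing resource usage across the compute nodes

- Any node that runs the nova-compute service is a compute node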

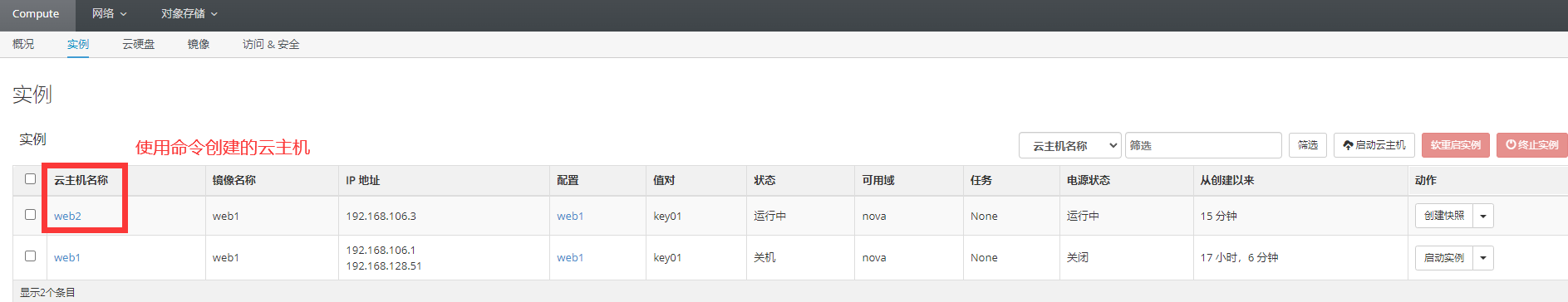

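- View the launched cloud instances

- Only 19 GB of free space remains, but the request needs 201 GB

- Find out which package provides the nova command

- From /usr/bin/nova, the nova command turns out to come from python-novaclient

- Install the package that provides nova from the OpenStack repository

- The nova command can now be run

- Copy the credentials file keystonerc_user1 from the controller node to the ntp node

- On a real client node (one outside the cluster), the nova command can list cloud instance information

- Create a floating IP

- Look up the parameters needed to associate a floating IP with an instance

- Instance web2 before a floating IP is associated

- Associate the floating IP with the instance: nova floating-ip-associate <instance name> <floating IP>

- Instance web2 after the floating IP is associated

- Log in to the instance remotely

- Delete the subnet first

- Then delete the network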

https://www.cnblogs.com/cloudhere/category/1439151.html

I. Introduction to OpenStack

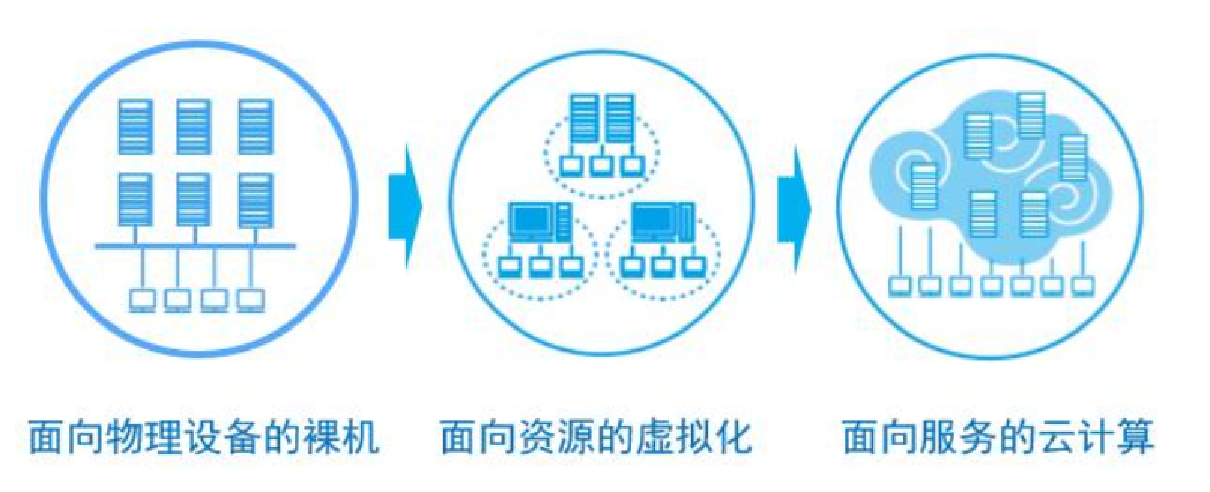

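1. The past and present of cloud computing

No new technology appears out of nowhere; each has a history. Cloud computing is likewise a product of the steady evolution of IT, and understanding it requires some familiarity with how IT system architecture has developed.

So far, IT system architecture has gone through roughly three stages:

- Physical machine architecture: applications are deployed and run directly on physical machines. For example, a company rolling out an ERP system at small scale might use three physical machines to host the web server, the application server, and the database server. At larger scale each tier can be clustered, but every cluster member still runs directly on a physical machine. The customers I saw in the early days all used this architecture, with one set of servers per application. Resource utilization was typically very low, and reaching 20% counted as good.

- Virtualization architecture: as physical servers grew steadily more powerful, advances in virtualization greatly improved their resource utilization. In this stage a physical machine runs several virtual machines, and applications are deployed directly on the virtual machines. Virtualization also reduces the number of physical machines to manage and lowers maintenance costs.

- Cloud computing architecture: virtualization raised the utilization of individual physical machines, but as it spread, IT environments accumulated more and more virtual machines. That created a new need: managing all of those virtual machines in a unified, efficient way. Where there is demand there is supply, and cloud computing took the stage.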

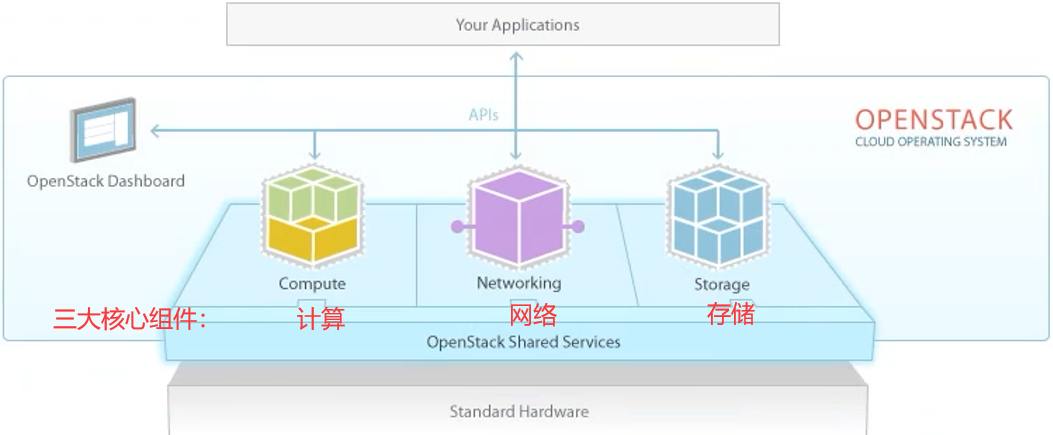

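2. Introduction to OpenStack

What is cloud computing? Cloud computing is a pay-per-use model that provides available, convenient, on-demand network access to a shared pool of configurable computing resources (networks, compute, storage, application software, and services) over the Internet.

- Cloud computing is commonly divided into the following service layers:

- SaaS (Software as a Service): online software delivered as a service.

- PaaS (Platform as a Service): the platform delivered as a service.

- IaaS (Infrastructure as a Service): hardware infrastructure delivered as a service.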

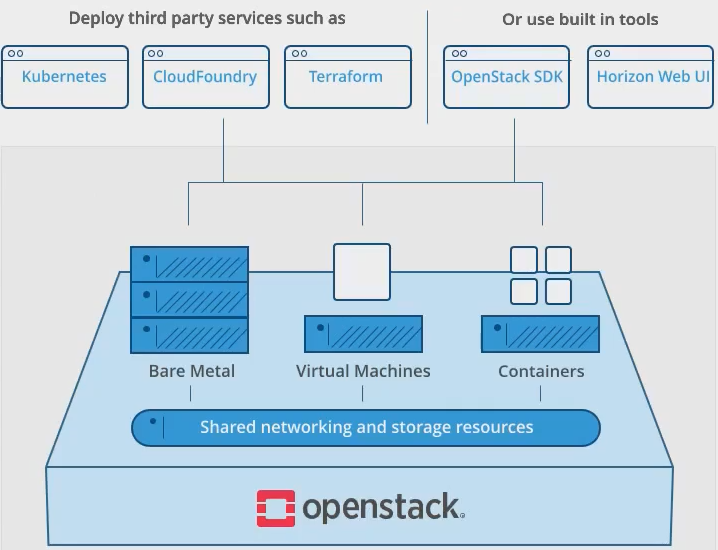

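- OpenStack: a cloud computing platform originally developed jointly by Rackspace and NASA. It is an open-source IaaS (Infrastructure as a Service) platform that lets anyone build and offer cloud computing services. A new release comes out every six months, and it is written in Python.

- OpenStack history

- OpenStack community and links

- Community: www.openstack.org, wiki.openstack.org

- Mailing lists: http://wiki.openstack.org/MailingLists#General_List http://wiki.openstack.org/MailingLists#Development_List http://wiki.openstack.org/MailingLists#Operators

- How to contribute code: http://wiki.openstack.org/HowToContribute

- Source code management: http://wiki.openstack.org/GerritWorkflow

- Documentation: http://docs.openstack.org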

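Main differences between VMware and OpenStack:

- Virtualization technology: VMware provides a virtualization solution, whereas OpenStack is a platform for managing virtualization. VMware's virtualization technology is mature and widely deployed in enterprise data centers. OpenStack has to be combined with an underlying virtualization technology to deliver its functionality.

- Open source: VMware is proprietary, closed-source technology, whereas OpenStack is an open-source cloud platform. OpenStack users can therefore modify and customize the source code to suit their needs, which is not possible with VMware.

- Architecture: VMware uses a client/server architecture, whereas OpenStack uses a distributed architecture. VMware's virtualization emphasizes reliability, high availability, and performance, while OpenStack places more emphasis on scaling out and on security.

- Supported platforms: VMware mainly supports its own products, whereas OpenStack can run on a wide variety of platforms. OpenStack is therefore more flexible and suits many different organizations and workloads.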

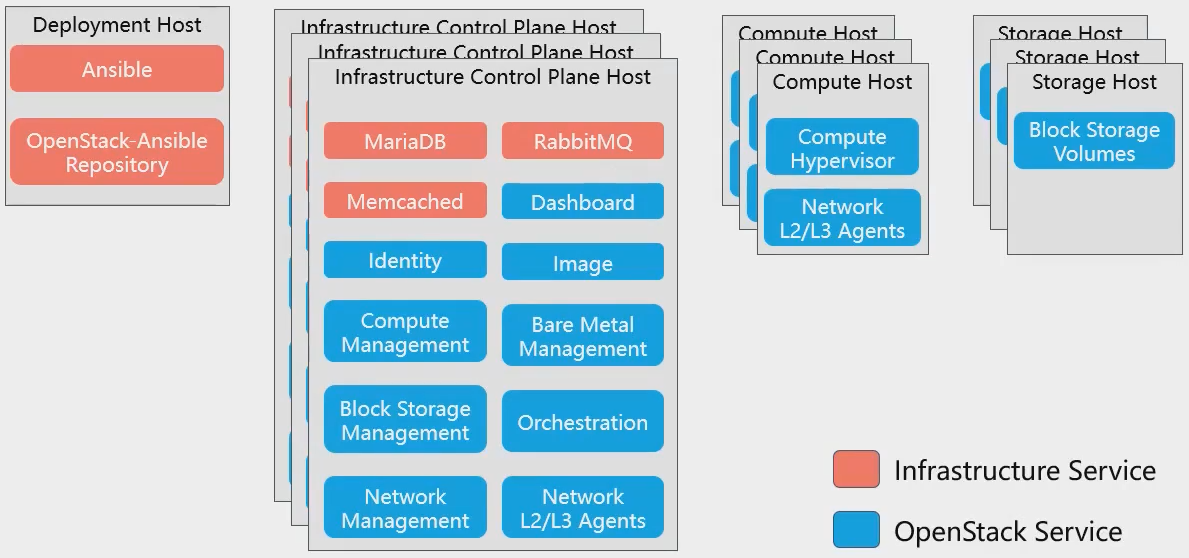

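Steps to build an OpenStack cloud platform:

- Hardware preparation and planning: select the servers, network switches, and other infrastructure, and plan the physical topology and IP addressing.

- Install the operating system: install Linux on the servers. OpenStack supports several distributions; Ubuntu or CentOS is recommended.

- Install the OpenStack packages: OpenStack is a platform made up of many components, so separate packages must be installed for Nova, Glance, Neutron, Keystone, and so on. Each component is installed differently; follow the official documentation for each one.

- Configure the database: OpenStack uses MySQL as its default database, which must be installed and configured on the server.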

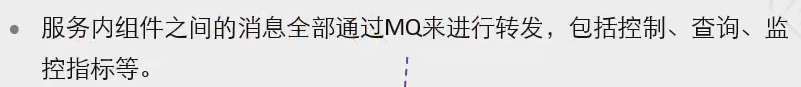

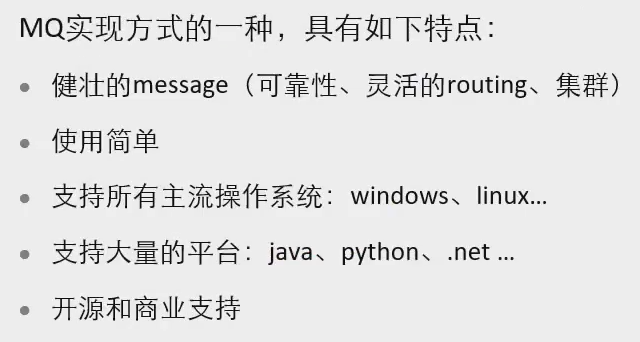

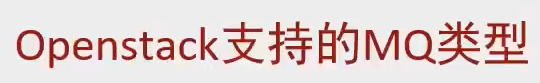

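- Configure the message queue: OpenStack uses RabbitMQ or ZeroMQ as its message queue, installed and configured on the server. The message queue carries communication between components.

- Configure networking: OpenStack supports several network models, including Flat, VLAN, and GRE. Choose and configure one according to the environment and the business requirements.

- Configure authentication and authorization: identity and authorization are managed by the Keystone component. Install and configure Keystone on the server, then define roles and permissions.

- Start and verify the OpenStack services: start the services of each component, then log in to the OpenStack dashboard to verify them. At this point you can create virtual machines, volumes, networks, and other resources to check that OpenStack works and is stable.

Points to watch when building an OpenStack cloud platform:

- Keep the versions of all installed components consistent to avoid incompatibilities.

- When configuring networking, choose a network model and topology that fit the environment, and avoid bottlenecks and single points of failure.

- Security is critical: harden the platform appropriately, covering network security, authentication, and access control.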

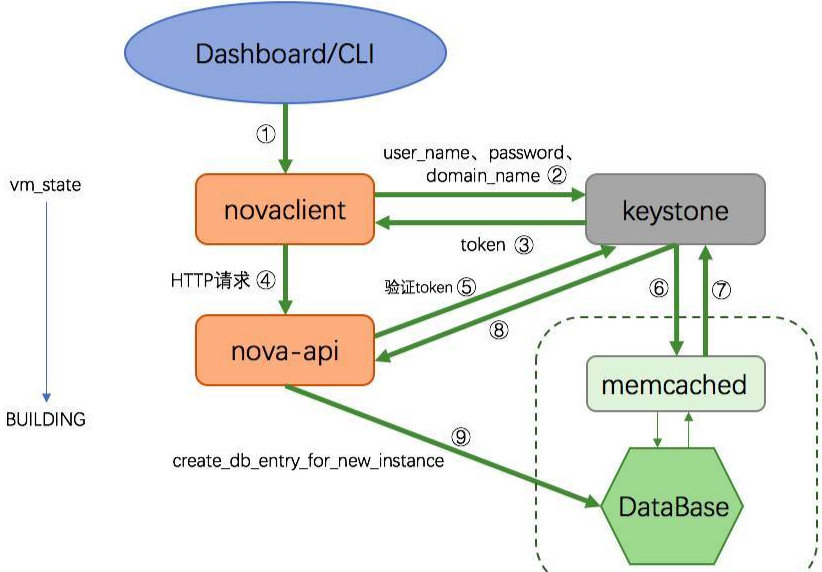

OpenStack virtual machine creation flow (three stages)

https://blog.csdn.net/dylloveyou/article/details/78587308

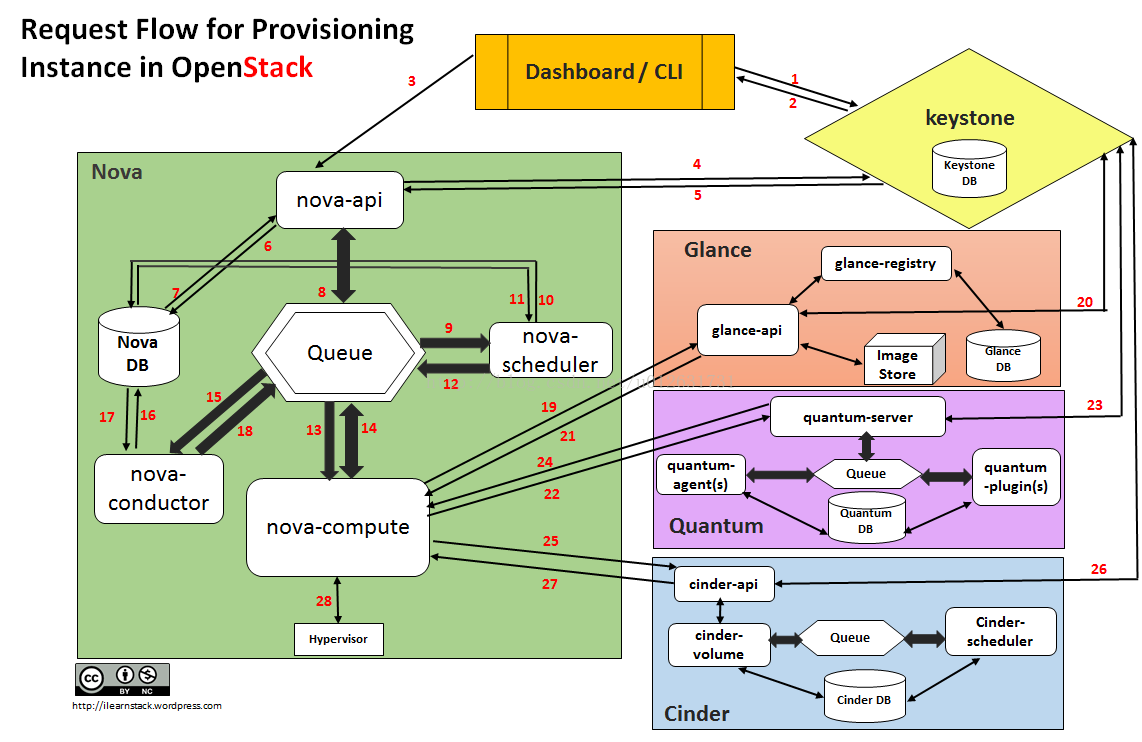

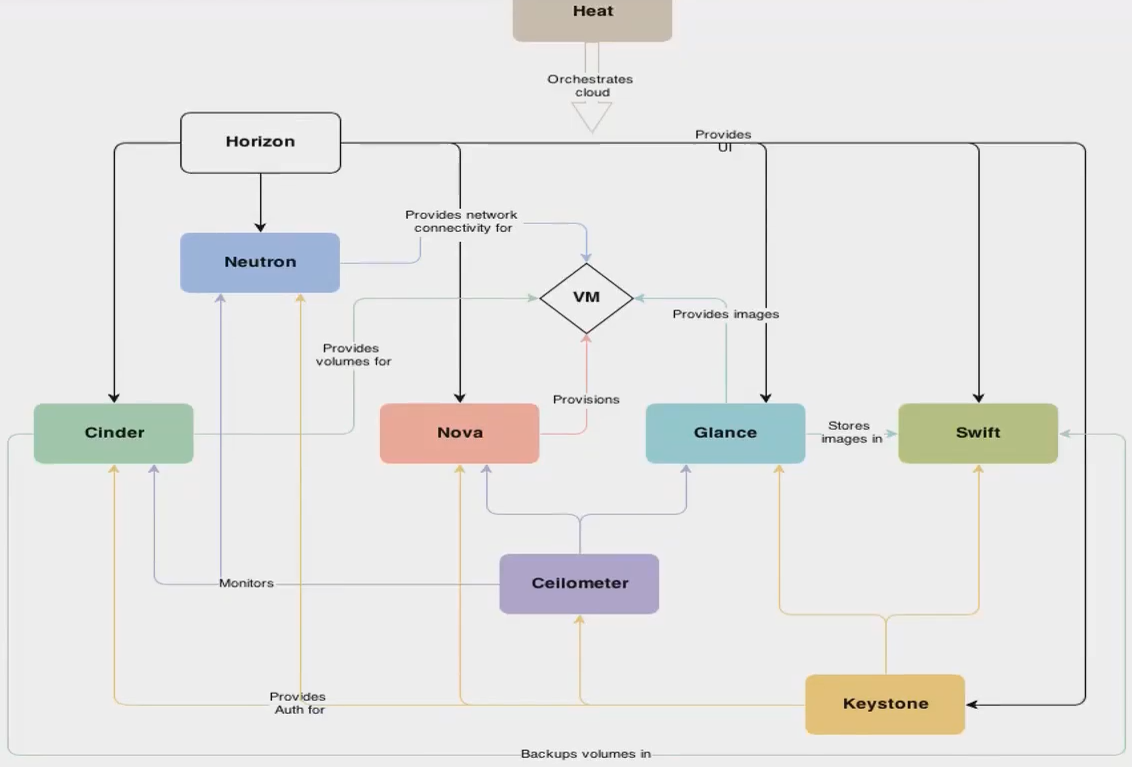

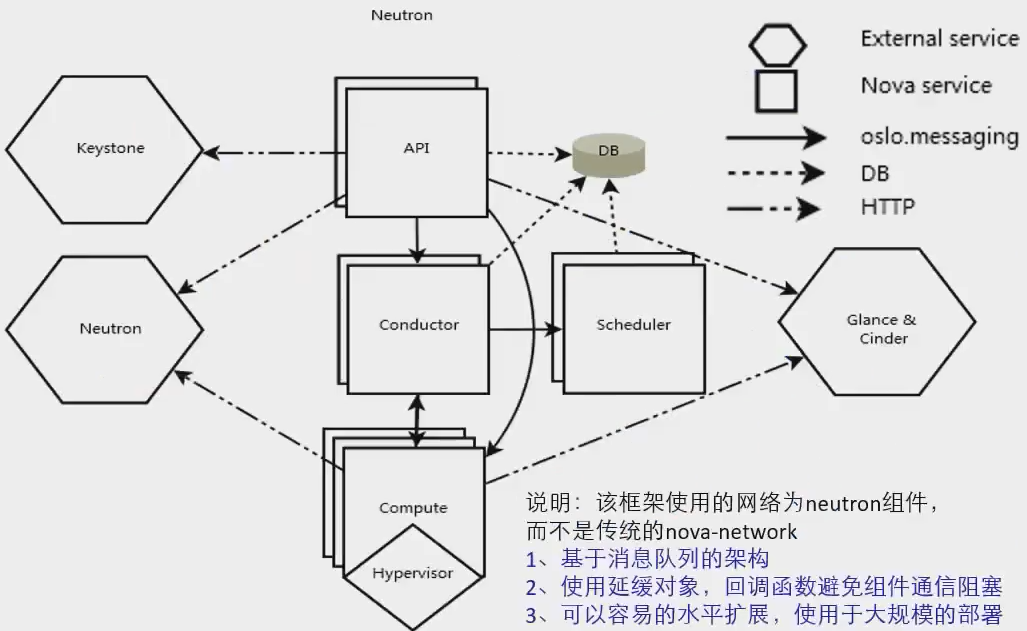

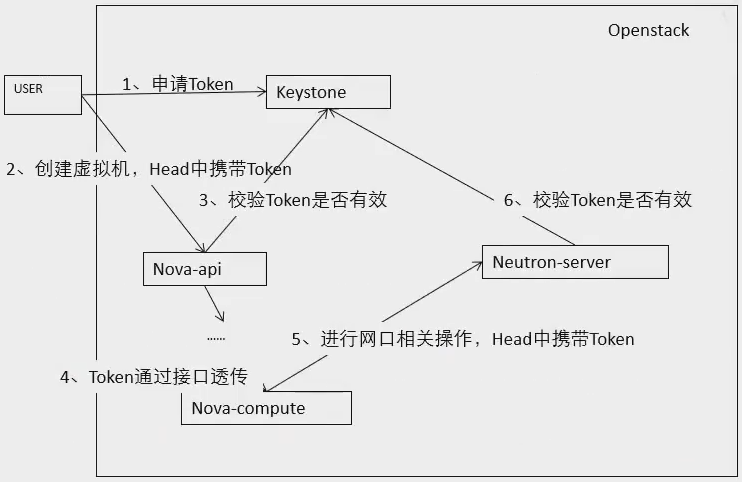

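Creating a virtual machine is OpenStack's most basic function and one of its core ones. The flow centers on Nova and involves interaction with other components such as Keystone, Glance, Neutron, and Cinder. The rest of this section walks through the whole creation flow (OpenStack Ocata release).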

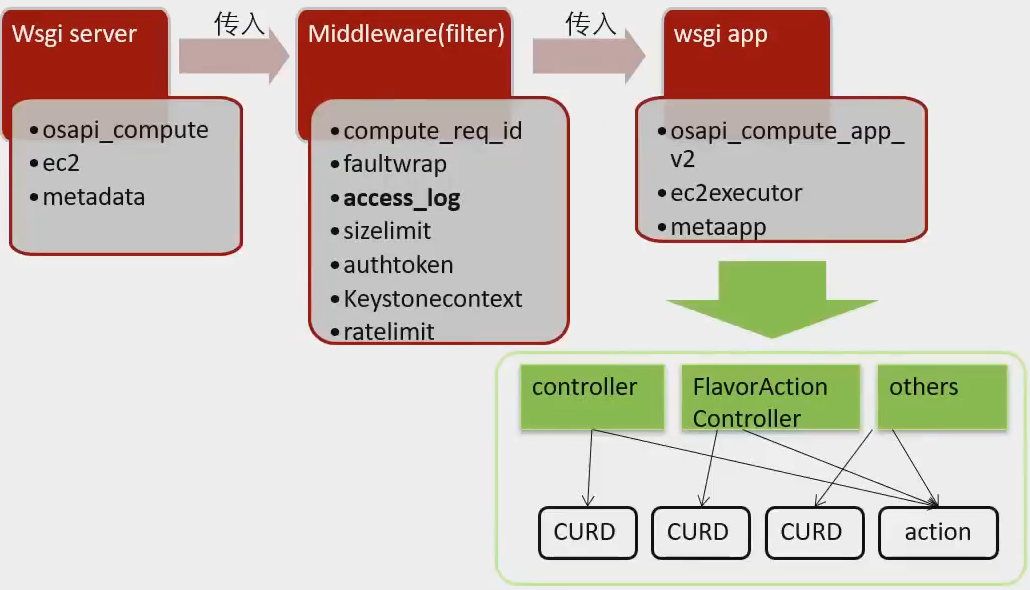

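The process can be divided roughly into three stages: from novaclient to nova-api, from nova-api to nova-compute, and from nova-compute to the underlying hypervisor (virtual machine monitor, VMM).

Stage 1: from novaclient to nova-api

A virtual machine is usually created from a request issued through the dashboard or the command line; both paths use novaclient to send an HTTP request to nova-api. nova-api exposes a set of RESTful APIs that accept the requests sent by novaclient. To create a virtual machine, a POST request is sent to {your_compute_service_url}/servers. On the command line, the --debug option traces these HTTP requests, for example nova --debug boot.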
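As a rough illustration of the POST request described above, the sketch below builds (but does not send) a create-server request with the standard library. The endpoint `http://controller:8774/v2.1`, the token string, and the image, flavor, and network identifiers are all placeholder assumptions; `build_create_server_request` is a hypothetical helper, not part of novaclient.

```python
import json
import urllib.request


def build_create_server_request(compute_url, token, name, image_ref, flavor_ref, network_id):
    """Build the HTTP request that asks nova-api to create a server.

    Hypothetical helper: it only constructs the request object and never sends it.
    """
    body = {
        "server": {
            "name": name,
            "imageRef": image_ref,
            "flavorRef": flavor_ref,
            "networks": [{"uuid": network_id}],
        }
    }
    return urllib.request.Request(
        url=f"{compute_url.rstrip('/')}/servers",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # The token previously obtained from Keystone travels in this header.
            "X-Auth-Token": token,
        },
        method="POST",
    )


# All values below are placeholders chosen for illustration.
req = build_create_server_request(
    "http://controller:8774/v2.1", "TOKEN", "web2", "IMAGE_UUID", "1", "NET_UUID"
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

Running `nova --debug boot` shows the real request for comparison; the exact fields depend on the options passed and on the API microversion in use.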

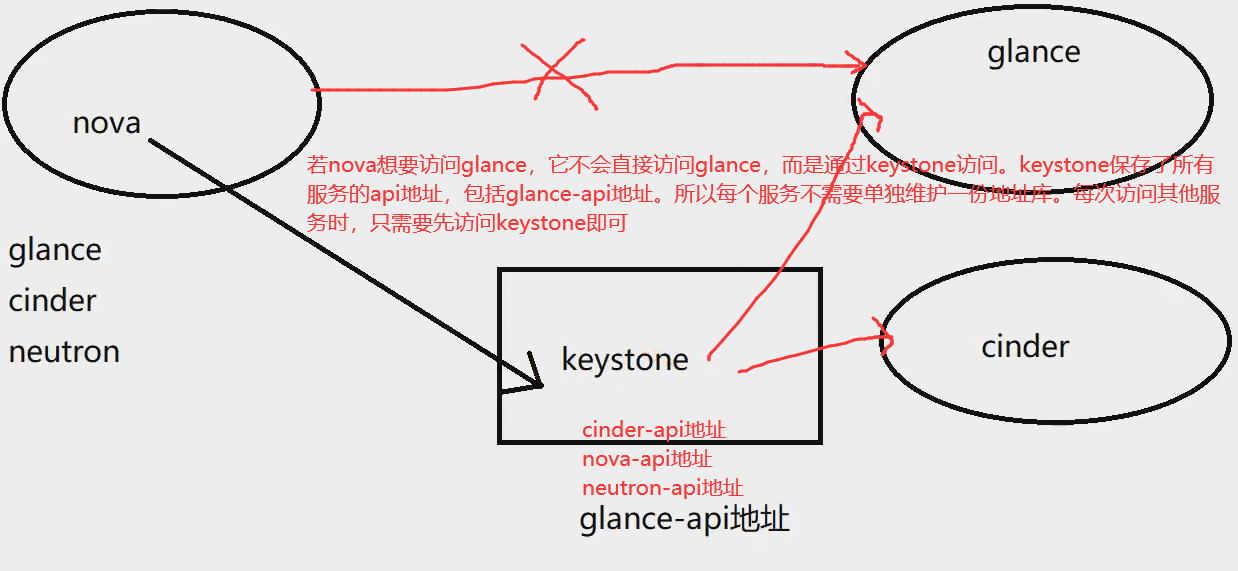

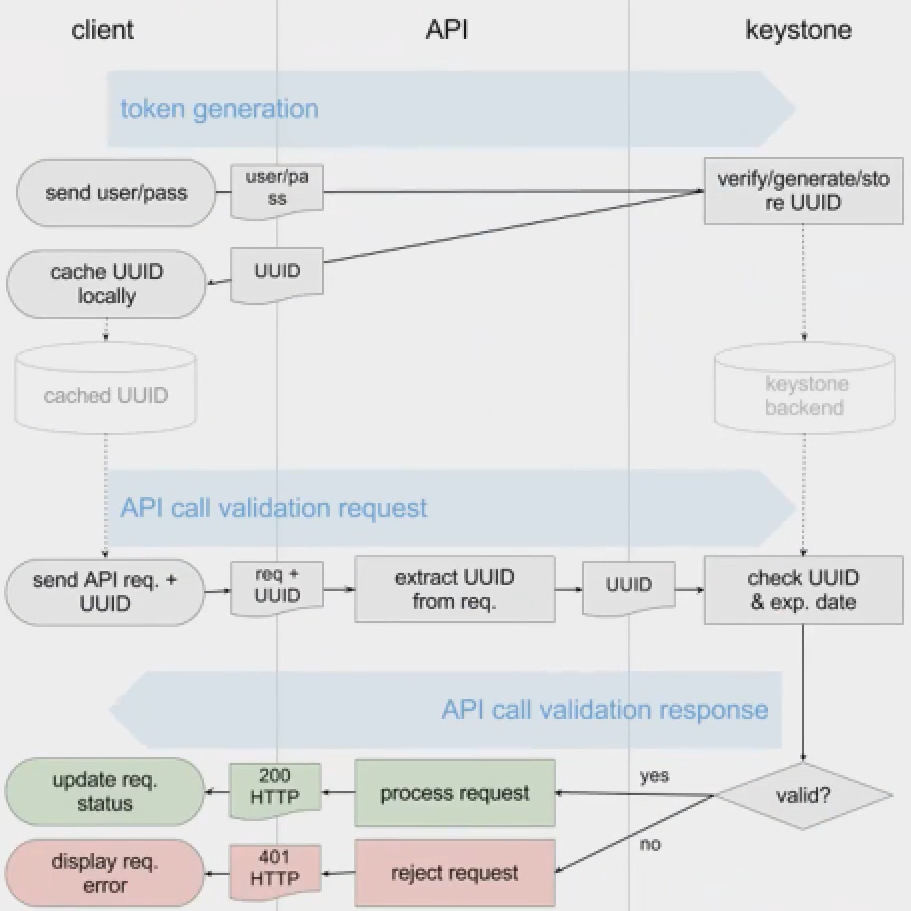

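To secure access to every OpenStack service, novaclient first sends the user's username, password, and domain name to Keystone to obtain a valid token before it requests the virtual machine. The header of each subsequent request to nova-api then carries that token. When nova-api receives the request it asks Keystone to validate the token, and once validation succeeds nova-api writes the virtual machine's initial data to the database. To validate a token, Keystone first looks in the memcached cache and, on a cache miss, looks up the matching record in the token table of the database. Every OpenStack component relies on token validation, and tokens expire but expired tokens are not cleaned up automatically, so the token table keeps growing over time and queries against it can become slow. Besides caching with memcached, the token table can be purged periodically with keystone-manage token_flush to avoid slow queries.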
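The cache-then-database lookup and the periodic purge described above can be sketched with two in-memory dictionaries. This is a toy model under stated assumptions: `TokenStore` is a hypothetical class, the dictionaries stand in for memcached and the token table, and none of it is Keystone code.

```python
import time


class TokenStore:
    """Toy model of token validation: check the cache first, then the database."""

    def __init__(self, db_rows):
        self.db = dict(db_rows)  # token -> expiry timestamp (stands in for the token table)
        self.cache = {}          # stands in for memcached
        self.db_hits = 0         # counts how often the slow path is taken

    def validate(self, token, now=None):
        now = time.time() if now is None else now
        expires = self.cache.get(token)
        if expires is None:
            # Cache miss: fall back to the database and remember the result.
            self.db_hits += 1
            expires = self.db.get(token)
            if expires is None:
                return False
            self.cache[token] = expires
        return expires > now

    def flush_expired(self, now=None):
        """Remove expired rows, similar in spirit to a periodic token flush."""
        now = time.time() if now is None else now
        expired = [t for t, exp in self.db.items() if exp <= now]
        for token in expired:
            del self.db[token]
            self.cache.pop(token, None)
        return len(expired)


store = TokenStore({"token-a": 100, "token-b": 50})
print(store.validate("token-a", now=60), store.validate("token-a", now=60), store.db_hits)
print(store.flush_expired(now=60), sorted(store.db))
```

The second validation of the same token is served from the cache, so the database is touched only once; the flush keeps the table from growing without bound.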

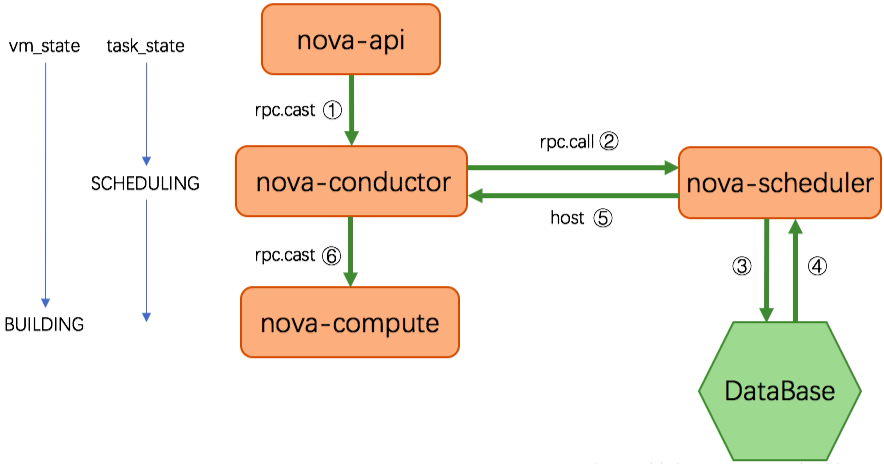

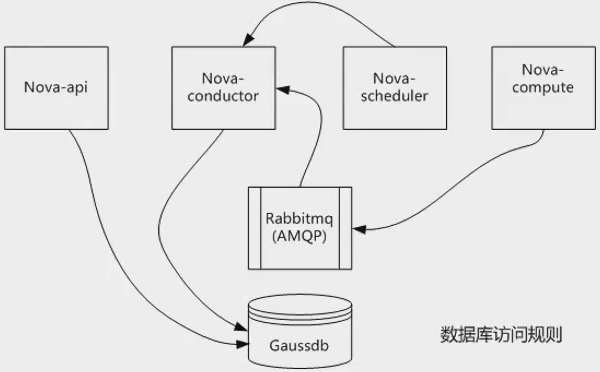

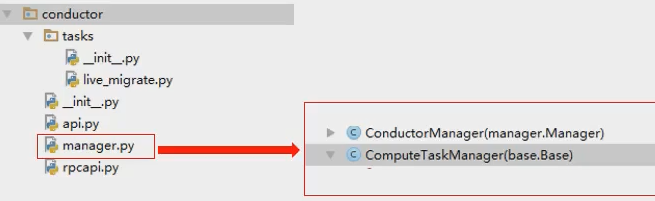

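Stage 2: from nova-api to nova-compute

When nova-api has finished its work it sends an RPC request to nova-conductor asking for the virtual machine to be created. nova-conductor is the bridge between nova-compute and the database: it keeps nova-compute from accessing the database directly, which makes database access more secure. As nova-conductor matured it also took over some long-running tasks from nova-compute, such as build_instances, resize_instance, and live_migrate_instance.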

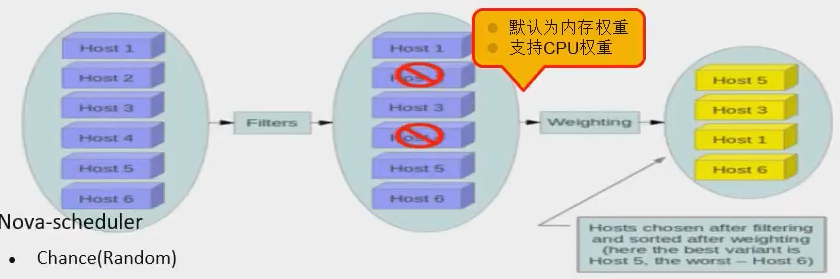

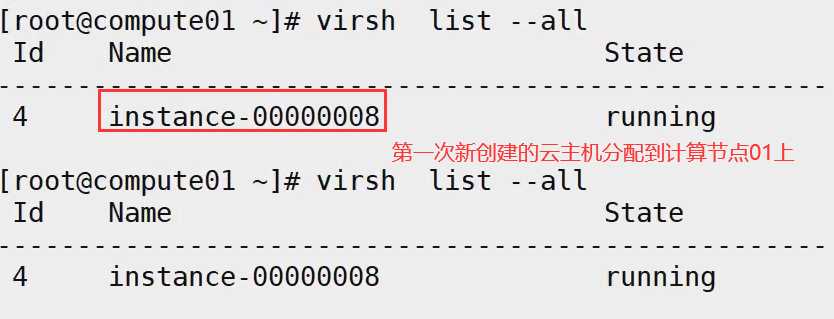

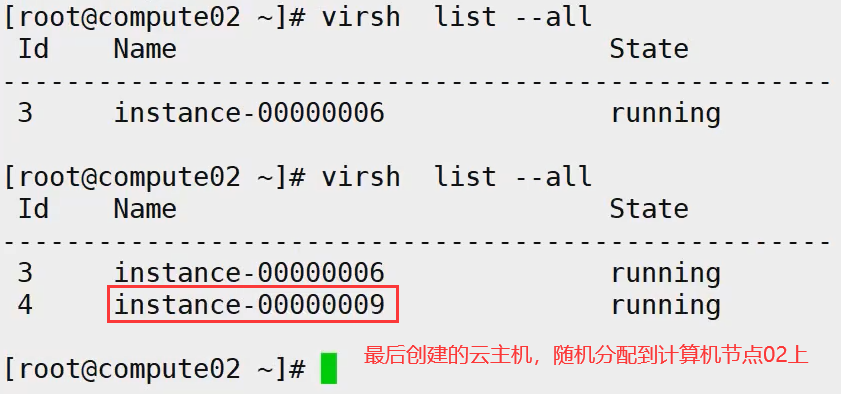

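- On receiving the request, nova-conductor first asks nova-scheduler, via rpc.call, to schedule the new virtual machine. nova-scheduler selects the host by filtering and weighing; the filters and weighers can be configured in nova.conf. nova-scheduler maintains its own copy of the compute node data and compares it with the database. When scheduling completes, the selected host is returned to nova-conductor, which then sends the creation request to nova-compute via rpc.cast.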
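The filter-then-weigh idea can be shown in a few lines. This is a simplified sketch, not nova-scheduler's implementation: `HostState`, `schedule`, the host names, and the "most free RAM wins" weighting are assumptions made for the example. The 19 GB host mirrors the "only 19 GB free, request needs 201 GB" case noted earlier in these notes.

```python
from dataclasses import dataclass


@dataclass
class HostState:
    """Resource snapshot of one compute node (illustrative fields only)."""
    name: str
    free_ram_mb: int
    free_disk_gb: int


def schedule(hosts, ram_mb, disk_gb):
    """Filter out hosts that cannot fit the request, then pick the best-weighted one."""
    # Filtering step: keep only hosts with enough free RAM and disk.
    candidates = [
        h for h in hosts
        if h.free_ram_mb >= ram_mb and h.free_disk_gb >= disk_gb
    ]
    if not candidates:
        raise RuntimeError("no valid host was found")
    # Weighing step: here, prefer the host with the most free RAM, then most free disk.
    return max(candidates, key=lambda h: (h.free_ram_mb, h.free_disk_gb))


hosts = [
    HostState("compute1", free_ram_mb=4096, free_disk_gb=19),
    HostState("compute2", free_ram_mb=8192, free_disk_gb=500),
    HostState("compute3", free_ram_mb=2048, free_disk_gb=300),
]
print(schedule(hosts, ram_mb=2048, disk_gb=201).name)
```

compute1 is rejected by the disk check, and of the two remaining hosts the one with more free RAM is chosen.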

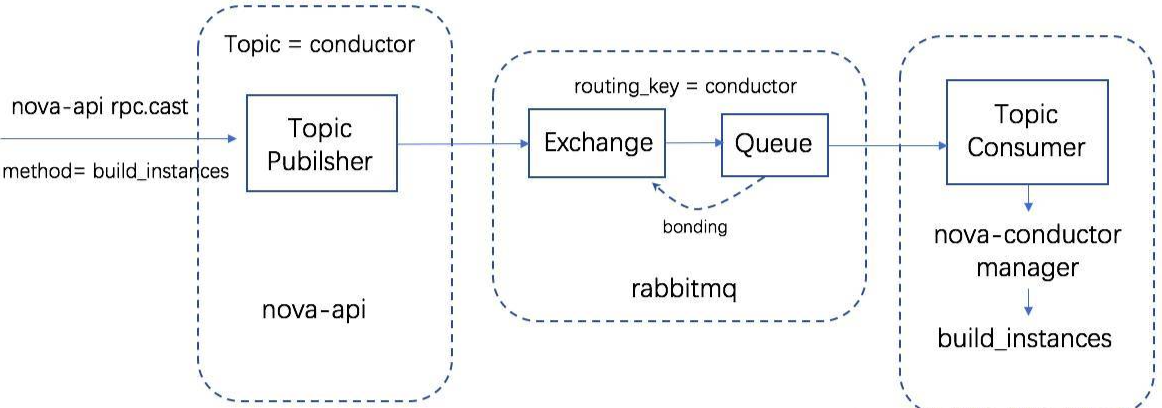

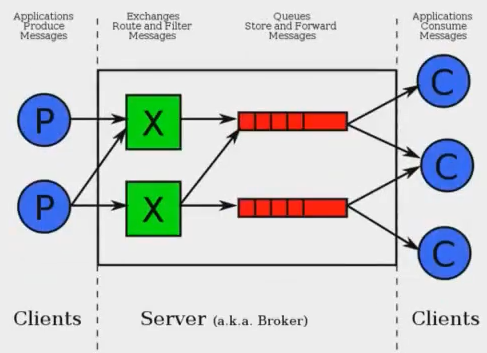

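- In general, OpenStack components talk to each other through RESTful APIs, while the service processes inside one component communicate by RPC over AMQP. There are two kinds of RPC, rpc.call and rpc.cast. rpc.call is request/response and is mostly used in synchronous scenarios. With rpc.cast the sender does not wait for a response but has to query the result periodically afterwards, so it is generally used in asynchronous scenarios. RPC also requires a message queue. OpenStack wraps its messaging in the shared library oslo_messaging, which currently supports message queues including RabbitMQ, ZeroMQ, and Kafka.

- Take the communication between nova-api and nova-conductor as an example. At startup the nova-conductor service registers an RPC server that waits for requests. To send the create-VM RPC request, nova-api first creates a topic publisher; the published topic is conductor and the method is build_instances. The publisher sends the message to the topic exchange, the exchange forwards it by routing_key to the bound queue, and finally a topic consumer receives it and invokes the build_instances method of the nova-conductor manager.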
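The difference between rpc.call and rpc.cast can be imitated with a thread and a queue. The sketch below is only an analogy under stated assumptions: `RpcServer` is a hypothetical class, an in-process queue stands in for the message broker, and the code does not use oslo_messaging.

```python
import queue
import threading


class RpcServer:
    """Toy RPC endpoint: a worker thread consumes messages from an in-process queue."""

    def __init__(self, handlers):
        self.handlers = handlers
        self.inbox = queue.Queue()  # stands in for the message queue
        self.thread = threading.Thread(target=self._serve, daemon=True)
        self.thread.start()

    def _serve(self):
        while True:
            method, kwargs, reply_q = self.inbox.get()
            if method is None:  # stop sentinel
                break
            result = self.handlers[method](**kwargs)
            if reply_q is not None:
                reply_q.put(result)

    def call(self, method, **kwargs):
        """Request/response: block until the handler's result comes back."""
        reply_q = queue.Queue(maxsize=1)
        self.inbox.put((method, kwargs, reply_q))
        return reply_q.get(timeout=5)

    def cast(self, method, **kwargs):
        """Fire and forget: enqueue the message and return immediately."""
        self.inbox.put((method, kwargs, None))

    def stop(self):
        self.inbox.put((None, {}, None))
        self.thread.join(timeout=5)


built = []
server = RpcServer({
    "select_destinations": lambda ram_mb: "compute2",
    "build_instance": lambda host, name: built.append((host, name)),
})
host = server.call("select_destinations", ram_mb=2048)   # caller waits for the answer
server.cast("build_instance", host=host, name="web2")     # caller does not wait
server.stop()
print(host, built)
```

The scheduling step uses the blocking style because the caller needs the chosen host before it can continue, while the build request is handed off without waiting, which matches the flow described above.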

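All HTTP and RPC requests made while creating a virtual machine consume TCP connections on the servers. As a client, a host can generally open at most 65535 TCP connections, and the practical limit also depends on memory, file descriptors, and other factors. To keep virtual machine creation reliable, monitor server resources with a monitoring tool or tune the TCP connection settings.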

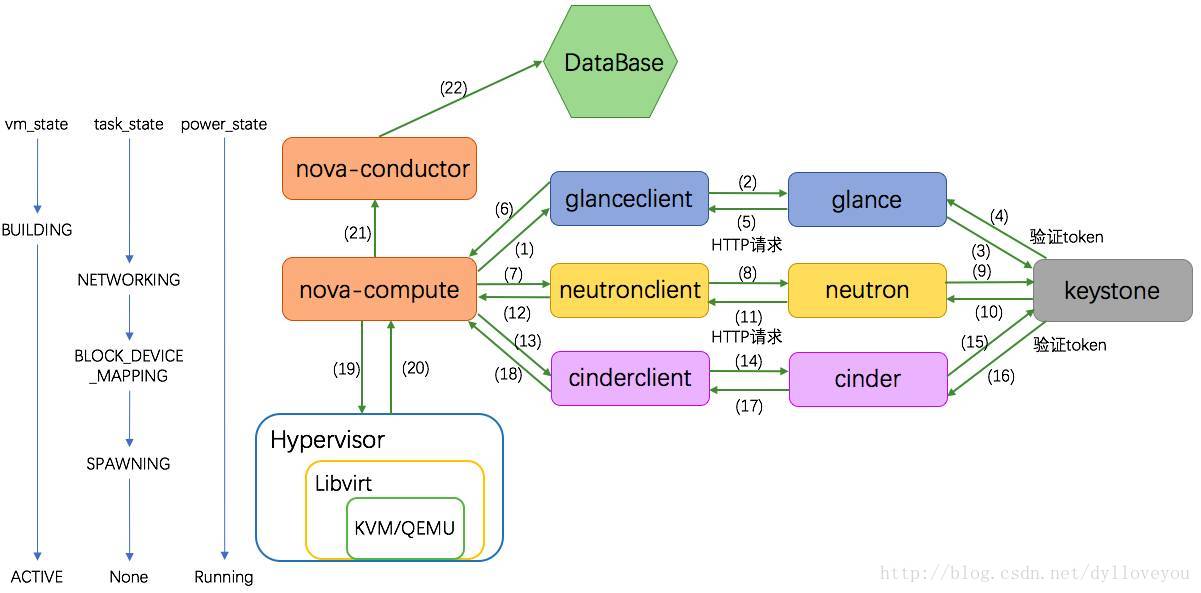

Stage 3: from nova-compute to the hypervisor

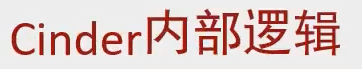

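After receiving the request, nova-compute calls glanceclient, neutronclient, and cinderclient to obtain the image, network, and storage information for the virtual machine from Glance, Neutron, and Cinder. Authentication works as it does for nova-api.

At this point the virtual machine's vm_state is Building and the machine exists only in the database; the hypervisor completes the actual creation. The hypervisors OpenStack supports include libvirt, Hyper-V, Xen, and VMware. KVM managed through libvirt is the best supported and is OpenStack's default hypervisor. When libvirt receives the creation request, it pulls the image and creates the root disk, generates an XML file from the virtual machine information in the request, then creates the network according to the XML (for example, a port on OVS), defines the domain, starts the domain, and so on. Creation has succeeded once libvirt detects that the virtual machine is in the running state.

- When OpenStack uses Ceph as shared storage, the image does not have to be downloaded locally and uploaded to the backend again. It is cloned directly, and with Ceph's copy-on-write (COW) support a virtual machine can start in a matter of seconds. This requires a raw-format image, because Ceph does not support booting from qcow2 images. If a qcow2 image is used, it must be downloaded locally, converted to raw, and uploaded again, which lengthens startup and can fail outright if there is not enough space for the conversion. Using raw images directly is therefore recommended.

To avoid single points of failure among the services during creation, keepalived plus haproxy is recommended for making each OpenStack service highly available.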

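Summary

Stage 1:

- Before requesting the virtual machine, novaclient sends the user's username, password, and domain name to Keystone to obtain a valid token.

- After receiving the HTTP request from novaclient, nova-api asks Keystone to validate the token. Once validation succeeds, nova-api writes the virtual machine's initial data to the database.

Stage 2:

- nova-api sends an RPC request to nova-conductor asking for the virtual machine to be created.

- After receiving the rpc.cast request from nova-api, nova-conductor first asks nova-scheduler, via rpc.call, to do the scheduling (select the host for the virtual machine).

- When scheduling completes, the selected host is returned to nova-conductor.

- nova-conductor then sends the create-VM request to nova-compute via rpc.cast.

Stage 3:

- nova-compute calls glanceclient, neutronclient, and cinderclient to obtain the image, network, and storage information from Glance, Neutron, and Cinder. Authentication works as it does for nova-api.

- At this point the virtual machine exists only in the database; the hypervisor completes the actual creation. When OpenStack's hypervisor (libvirt by default) receives the creation request, it pulls the image and creates the root disk, generates an XML file from the virtual machine information in the request, then creates the network according to the XML (for example, a port on OVS), defines the domain, starts the domain, and so on. Creation has succeeded once libvirt detects that the virtual machine is in the running state.

Creating a virtual machine is a basic OpenStack function. Knowing the whole flow helps in understanding the individual components and how they communicate internally, and it also makes the flows of other virtual machine operations easier to follow. For OpenStack operators, knowing the flow of the core virtual machine operations makes it much faster to locate problems such as a failed virtual machine creation.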

How a NIC is created for an OpenStack virtual machine

https://blog.csdn.net/dylloveyou/article/details/78735482

https://zhuanlan.zhihu.com/p/31695924

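OpenStack virtual machine creation flow (28 steps)

- The client requests authentication with its username and password.

- Keystone looks up the user's information stored in the user table of the keystone database, including the hash of the password, and returns a token_id (the token) and a serviceCatalog (the endpoint addresses of services such as cinder and glance-api, used later to download the image and create block storage).

- The client sends a POST request to nova-api to create the virtual machine, carrying the token_id returned by Keystone and the creation parameters.

- On receiving the request, nova-api first presents the token_id carried in the request to Keystone to check that the request is valid.

- Once validation succeeds, Keystone returns the updated authentication information.

- nova-api checks that the creation parameters are valid and legal.

- It checks that the virtual machine name follows the naming rules, that flavor_id exists in the database, and that image_uuid is a well-formed UUID.

- It checks that the number of instances, vCPUs, and amount of RAM do not exceed the quota.

- Only when every parameter is valid does it update the nova database, inserting a new instance record with vm_state set to BUILDING and task_state set to SCHEDULING.

- nova-api passes the request and its parameters to nova-scheduler by remote call, putting the message "please create a virtual machine for me" on the message queue, and then polls the virtual machine's state periodically.

- nova-scheduler picks up the message from the queue.

- nova-scheduler reads the nova database, uses its scheduling algorithm to filter out suitable compute nodes, and ranks them.

- The virtual machine's node information is updated and the ID of the best node is returned to nova-scheduler.

- Having chosen the host, nova-scheduler calls the nova-compute service by RPC, putting the "create virtual machine" message on the message queue.

- nova-compute receives the create-VM message.

- nova-compute queries the nova database over RPC for the virtual machine's information, such as the flavor and ID.

- nova-conductor takes the query request from the message queue.

- nova-conductor queries the nova database.

- The database returns the virtual machine information.

- nova-compute gets the information from the message queue.

- nova-compute calls the Glance REST API to download the required image, usually in qcow2 format.

- glance-api likewise validates the token on the request.

- glance-api returns the image information to nova-compute.

- Similarly, nova-compute asks the Neutron API to set up networking, for example to obtain the virtual machine's IP address.

- The token is validated.

- Neutron returns the network information.

- Steps 25-27: as with Glance and Neutron, the token is validated and the block device information is returned.

- Step 28: from the virtual machine information assembled above, an XML definition is generated and written to the libvirt.xml file, and the libvirt driver is called to start the virtual machine from that file.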
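The quota check in the list above (instances, vCPUs, RAM) amounts to comparing current usage plus the request against the limits. The sketch below assumes the resource names `instances`, `cores`, and `ram` and made-up numbers; `check_quota` is a hypothetical helper, not Nova's quota engine.

```python
def check_quota(limits, usage, requested):
    """Return the sorted names of resources whose quota the request would exceed."""
    exceeded = []
    for resource, amount in requested.items():
        if usage.get(resource, 0) + amount > limits[resource]:
            exceeded.append(resource)
    return sorted(exceeded)


# Illustrative numbers only.
limits = {"instances": 10, "cores": 20, "ram": 51200}
usage = {"instances": 9, "cores": 18, "ram": 40960}
requested = {"instances": 1, "cores": 4, "ram": 8192}

over = check_quota(limits, usage, requested)
print(over if over else "within quota")
```

Here the instance count and RAM still fit, but 18 + 4 cores exceeds the limit of 20, so the request would be rejected before any database record is created.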

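How OpenStack interacts with the physical host when creating a virtual machine

- Create the virtual machine instance: issue the creation command from the OpenStack dashboard or the command line. In this step OpenStack checks resource availability (CPU, memory, storage) and creates the instance from the requested configuration.

- Allocate a host: the resource scheduler computes which physical machines meet the requirements and allocates one according to the instance's configuration. The allocated host can be viewed through the OpenStack Compute service API. OpenStack then associates the instance with a physical host, which is the host that will run the virtual machine.

- Download the image: the required virtual machine image is downloaded from the image store (or other storage) and copied to the target host. OpenStack keeps the local cache and the image repository in sync automatically.

- Create the file system: the file systems and storage devices the instance needs are created on the target host, and volumes are managed and distributed through the volume manager.

- Initialize the virtual machine: the instance is initialized on the target host. OpenStack triggers an instance start operation, which loads the image into the virtual machine's memory and boots its operating system.

- Start and run the virtual machine: the virtual machine starts and runs. VMware interacts with the physical network through its virtual switch, while OpenStack uses Neutron to interact with both the virtual network and the physical network, completing communication between the virtual machine and the physical machine.

In short, creating a virtual machine in OpenStack involves many kinds of interaction with the physical host, from resource checks, host allocation, and image download to instance initialization, startup, and interaction with the virtual and physical networks. Every one of these steps is complex and important.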

How VMware interacts with the physical host when creating a virtual machine

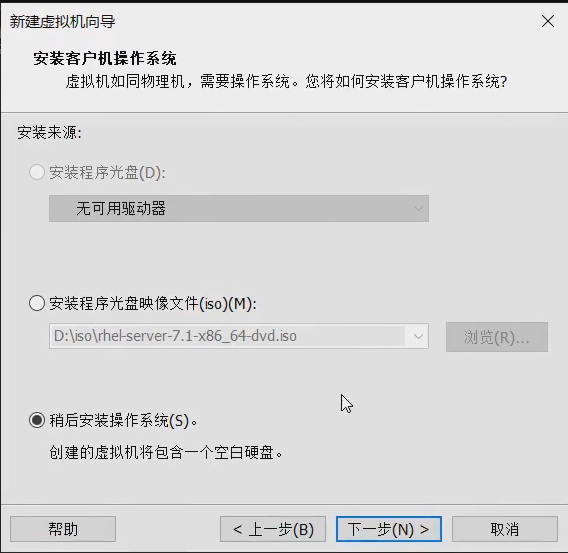

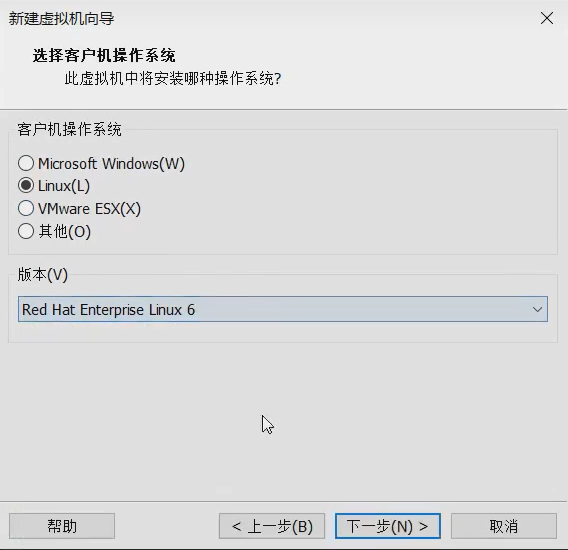

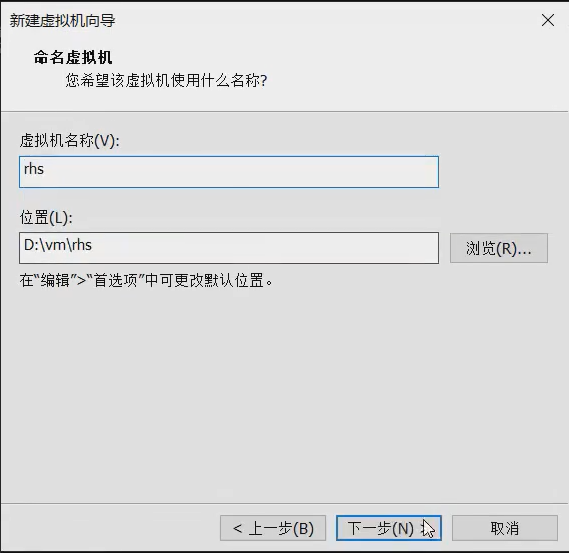

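- Define the virtual machine: first define its parameters, including CPU, memory, and disk size.

- Allocate resources: once the virtual machine is defined, the physical host's CPU, memory, and other resources are allocated to it.

- Install VMware Tools: VMware Tools is software that sits between a VMware virtual machine and VMware vSphere. It improves the virtual machine's performance and functionality and lets the virtual machine interact directly with the physical host. The administrator installs VMware Tools in the virtual machine for tighter integration with the host.

- Configure the virtual machine network: configure the virtual machine's network so that it can connect to the physical host. This is usually done with a virtual switch, which the administrator manages from the VMware vSphere Client.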

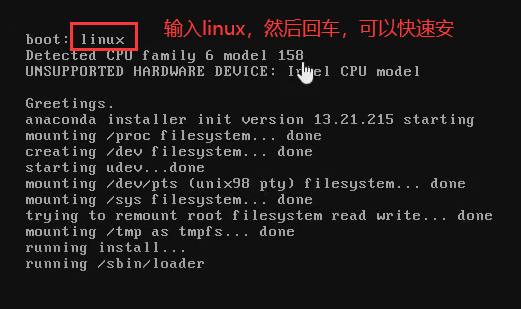

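- Install the operating system: unlike a physical machine, a virtual machine needs another way to install its operating system. The administrator can mount an operating system ISO image in the virtual machine, or install from other installation media.

- Log in and finish the setup: the administrator logs in through the virtual machine's own console or remotely, then configures networking, applications, and the operating system inside the virtual machine.

In short, creating a virtual machine in VMware means first defining its parameters and allocating host resources to it, then installing VMware Tools and the operating system, configuring the virtual machine network, and finally logging in to complete the setup. All of these operations involve interaction between the virtual machine and the physical host, and between the virtual machine and software running on the host. Although VMware and OpenStack create virtual machines very differently, both involve interaction with the physical host.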

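OpenStack virtual machine creation flow (dashboard)

- Log in to the OpenStack dashboard: open a browser, enter the dashboard address, and sign in with your username and password.

- Open the Compute menu: after signing in, choose "Compute", then "Instances".

- Click "Launch Instance": the Instances page lists all currently active instances. Click "Launch Instance" to create a virtual machine.

- Choose an image: on the Launch Instance page, select a virtual machine image, that is, a complete virtual machine file system. Pick an available image and assign suitable resources such as CPU, memory, and disk space.

- Fill in the instance details: enter the required information, such as the instance name and the number of instances.

- Set access and network options: specify options such as the virtual network interface and the IP address.

- Configure advanced options: optionally set other options such as instance metadata and security group rules.

- Click "Launch Instance": with all options set, click "Launch Instance" and OpenStack begins creating and starting the virtual machine.

- Finish: when creation completes, a details page shows the virtual machine's IP address, status, and other key information.

That completes the creation of an OpenStack virtual machine. To create more, repeat the steps above. OpenStack is a powerful cloud platform that helps organizations create and deploy virtual machines quickly and efficiently, improving resource utilization while lowering operating costs.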

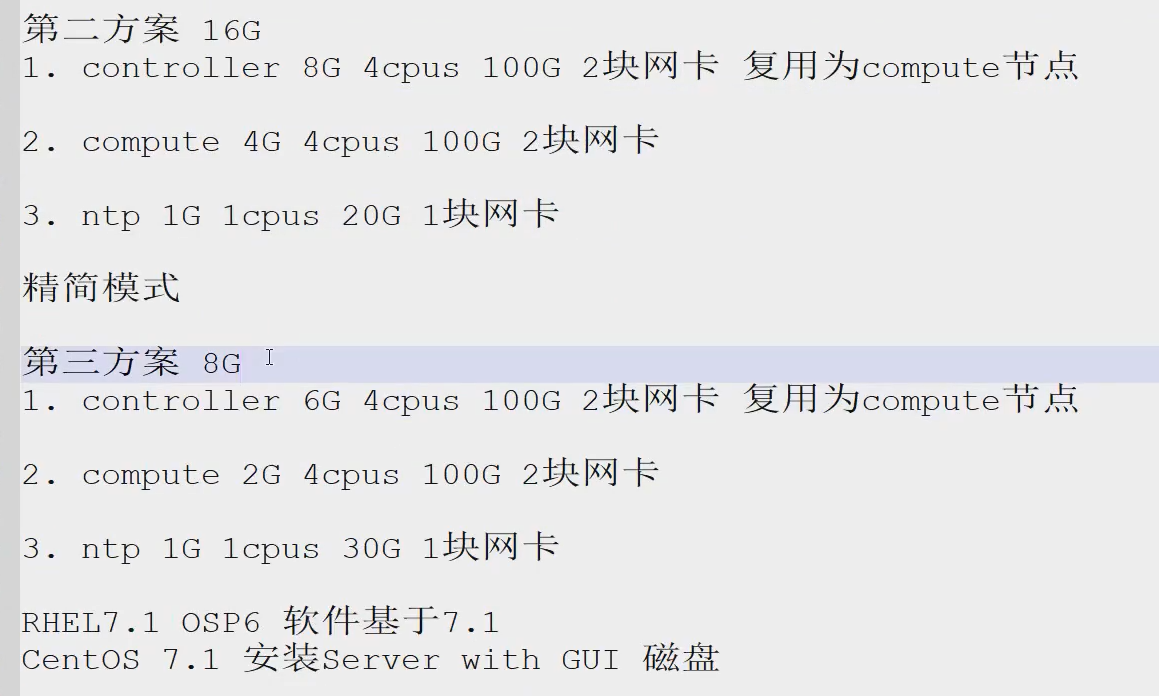

Setting up an OpenStack environment

https://blog.csdn.net/zhongliwen1981/category_10945143.html

Working with the OpenStack dashboard

https://edu.51cto.com/center/course/lesson/index?id=258360

Understanding OpenStack networking in one article

https://zhuanlan.zhihu.com/p/533145405

https://www.zhihu.com/question/22385883

OpenStack automated deployment (all-in-one)

Build the cloud inside a KVM virtual machine, then carve out KVM virtual machines from it

Hardware requirements

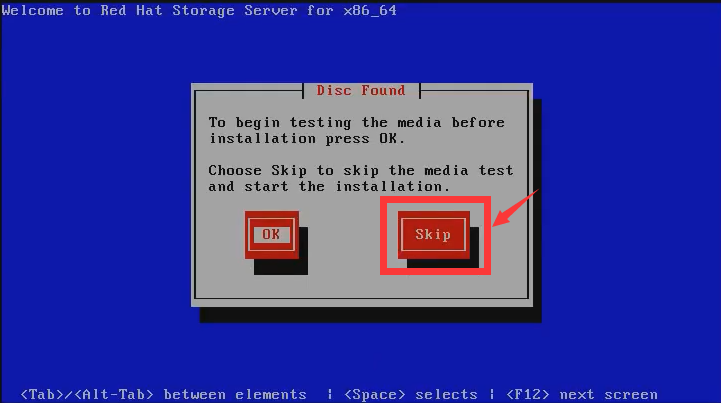

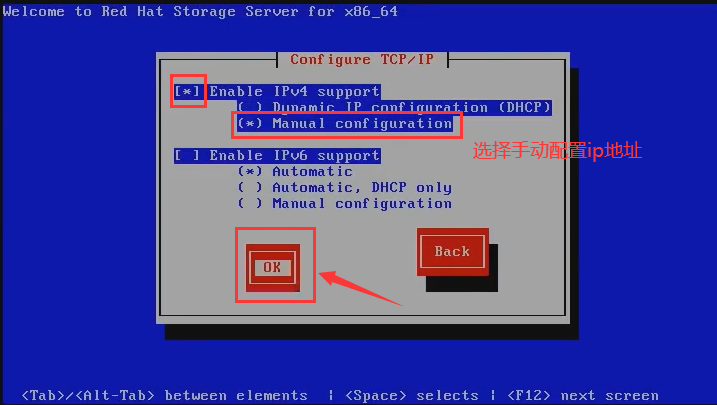

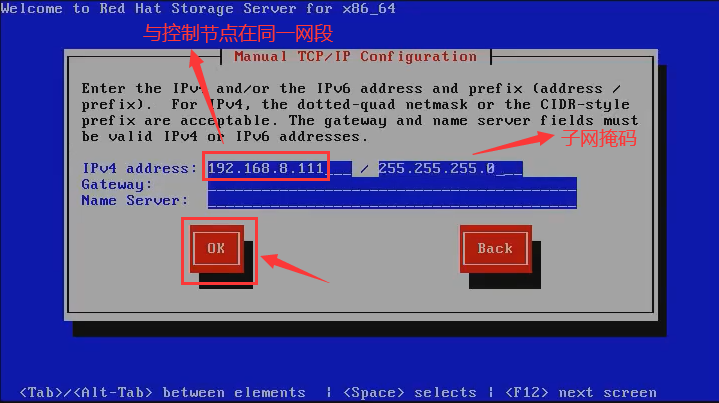

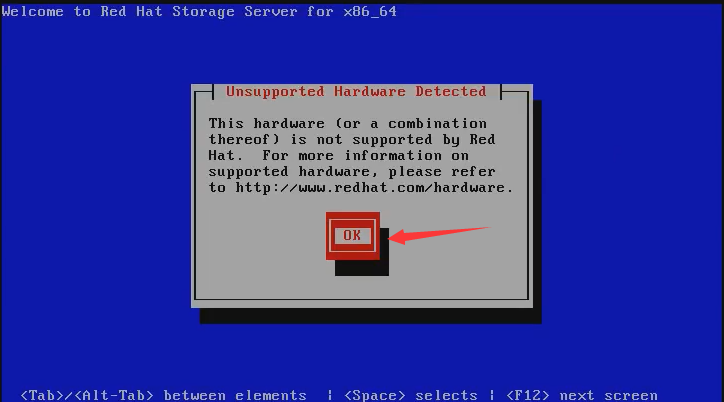

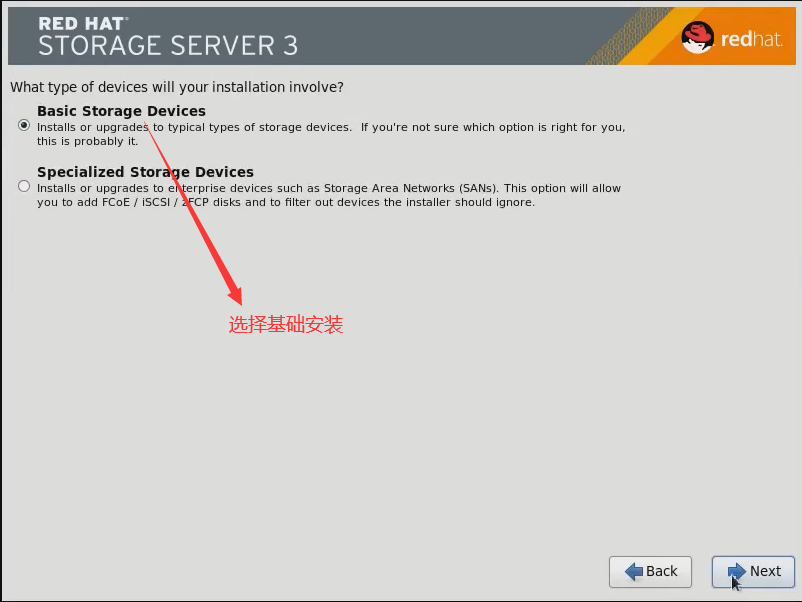

System installation

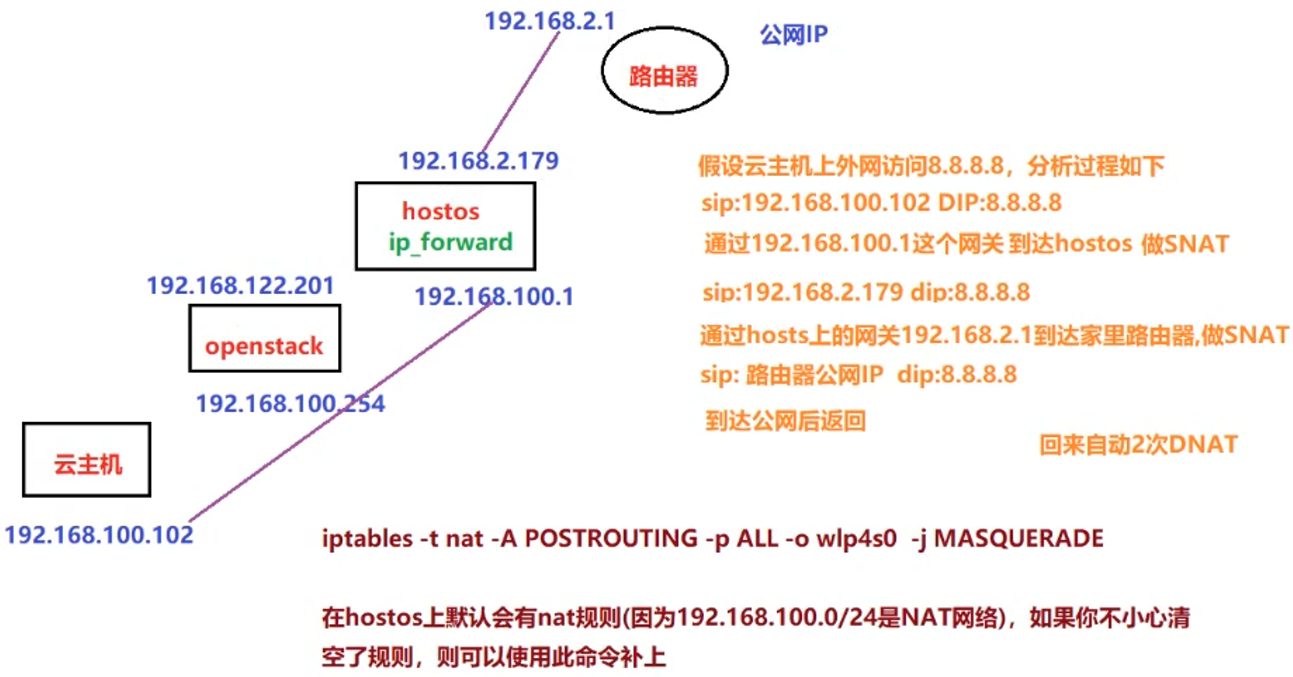

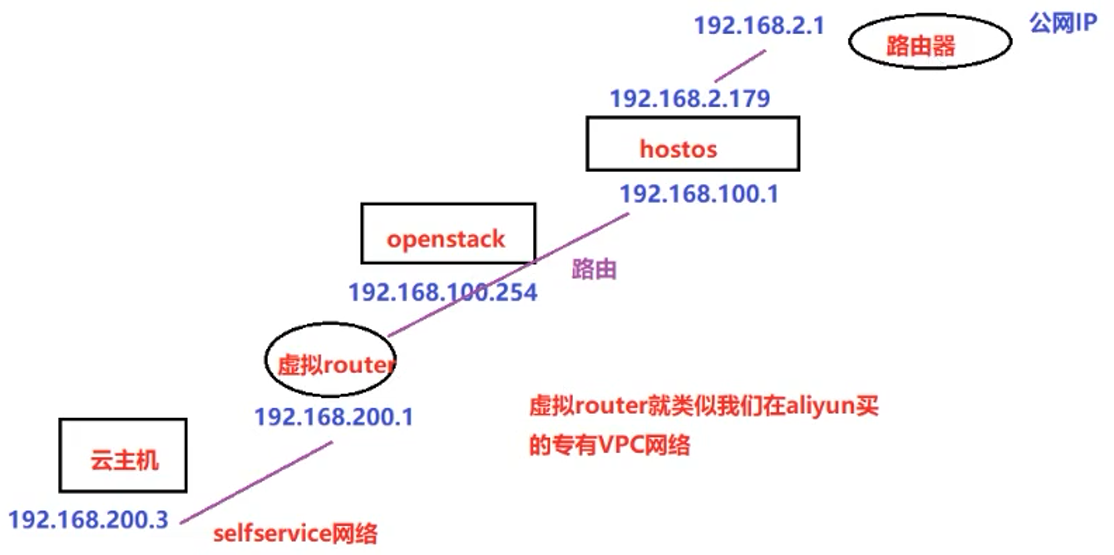

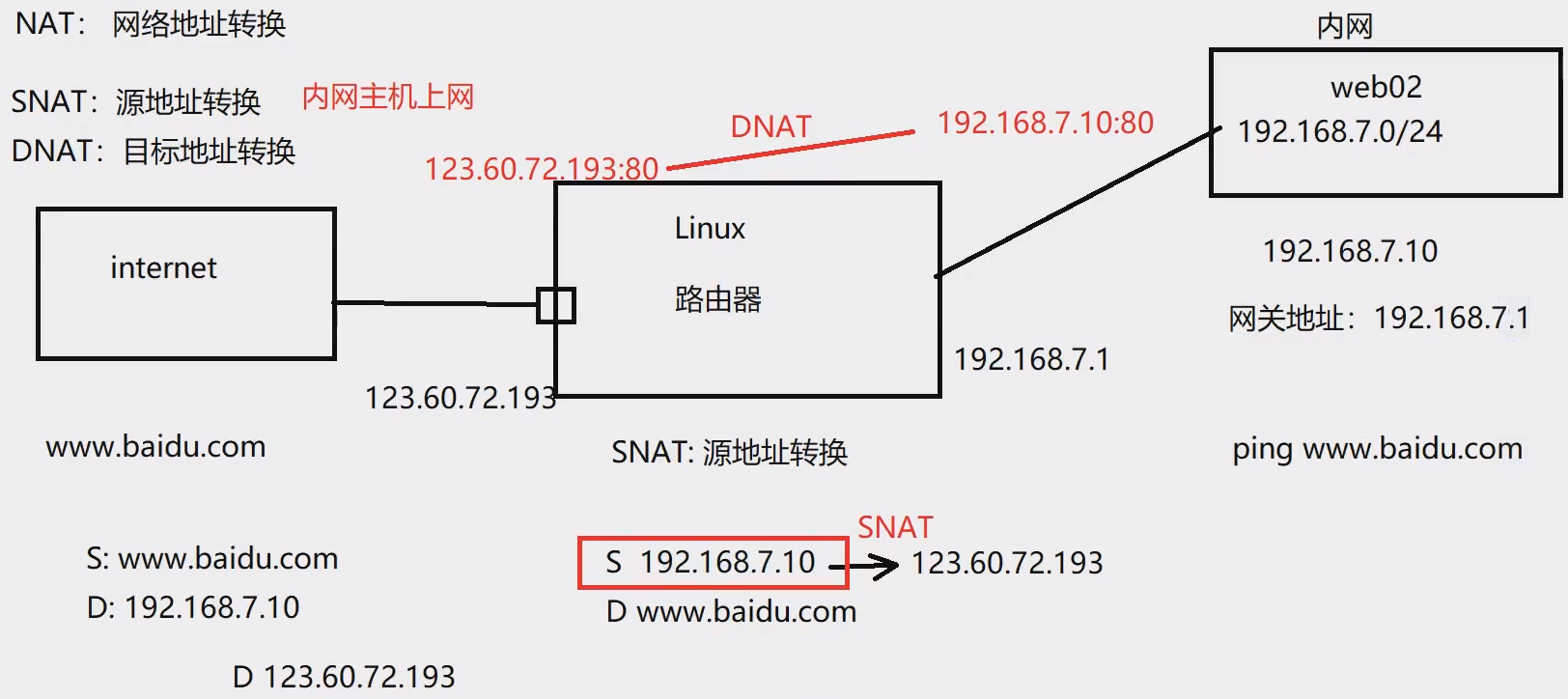

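Provider network: comparable to Alibaba Cloud's classic network. It is on the same physical subnet as the external-facing NIC of the OpenStack platform.

Self-service network: comparable to Alibaba Cloud's VPC. It is a fully virtualized private network.

Illustrated guide to using VMware Workstation virtual machines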

https://www.cnblogs.com/hejj-bk/p/11576392.html

Virtual NICs, virtual switches, and VM network adapters

https://blog.csdn.net/qmw19910301/article/details/83856031

https://blog.csdn.net/lyf_ldh/article/details/78695357

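VMware offers three network modes: Bridged, NAT (network address translation), and Host-Only.

Open VMware and go to "Virtual Network Editor" under the "Edit" menu. It lists VMnet0 (bridged mode), VMnet1 (host-only mode), and VMnet8 (NAT mode). What are they for? VMnet0 is the virtual switch used in bridged mode, VMnet1 is the virtual switch used in host-only mode, and VMnet8 is the virtual switch used in NAT mode.

The host also has two corresponding virtual NICs, VMware Network Adapter VMnet1 and VMware Network Adapter VMnet8, used in host-only mode and NAT mode respectively. Both appear under "Network Connections". If they have been uninstalled, click "Restore Defaults" in the Virtual Network Editor to recreate them.

This raises a question: why is there no VMware Network Adapter VMnet0 virtual NIC on the host? The sections below explain why.

I. Bridged mode

What is bridged mode? In bridged mode the host NIC and the virtual machine's virtual NIC communicate through a virtual bridge. In effect the physical host acts as a switch: every bridged virtual machine plugs into a port on that switch, and so does the physical host, so all bridged NICs are switched peers that can reach one another without interference. In bridged mode the virtual machine's IP address must be in the same subnet as the host, and to reach the Internet its gateway and DNS must match those of the host NIC. The network layout is shown in the figure below: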
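The "same subnet as the host" requirement for bridged mode can be checked with Python's `ipaddress` module. The addresses and netmask below are made-up examples, and `same_subnet` is a hypothetical helper written for these notes.

```python
import ipaddress


def same_subnet(host_ip, vm_ip, netmask):
    """Return True if vm_ip falls inside the network defined by host_ip and netmask."""
    host_network = ipaddress.ip_interface(f"{host_ip}/{netmask}").network
    return ipaddress.ip_address(vm_ip) in host_network


# Example values only: a host at 192.168.1.10 with a /24 netmask.
print(same_subnet("192.168.1.10", "192.168.1.50", "255.255.255.0"))
print(same_subnet("192.168.1.10", "192.168.2.50", "255.255.255.0"))
```

A bridged virtual machine configured with the second address would not be reachable from the host's LAN without a router, which is why the text asks for an address in the host's subnet.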

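Next, let's set up bridged mode in practice.

First, after installing the system and before powering it on, click "Edit virtual machine settings" to set the NIC mode.

Click "Network Adapter", select "Bridged", then click "OK".

Before booting the system, note the host's IP address, gateway, DNS, and related settings.

Then boot the system and edit the NIC configuration file with vi /etc/sysconfig/network-scripts/ifcfg-eth0. If there is no ifcfg-eth0 file, the interface name differs on that machine; look for a file whose name starts with ifcfg-.

Add the following content:

Save and exit, restart the virtual machine's network interface, and ping an external IP address to test Internet connectivity.

IP configuration: https://blog.csdn.net/lyf_ldh/article/details/117839749

If the external IP responds to ping, bridged mode is set up correctly.

If the restart fails with the following error:

For a fix, see the CSDN blog post by ldh_lyf on resolving "Device eth0 does not seem to be present" on CentOS Linux.

Is communication between the host and the virtual machine working? Test it with a remote access tool.

The host and the virtual machine communicate normally.

Those are the steps for bridged mode. It is simple to configure, but it is a poor fit when IP addresses are scarce or tightly managed on your network. For that situation VMware offers another mode: NAT.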

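II. NAT mode

As noted above, when IP addresses are scarce but the virtual machine still needs Internet access, NAT mode is the best choice. NAT mode gives the virtual machine Internet access through a virtual NAT device and a virtual DHCP server. The network layout is shown in the figure below:

In NAT mode the host NIC connects directly to the virtual NAT device, and the virtual NAT device and the virtual DHCP server both attach to the virtual switch VMnet8; that is how the virtual machine gets online. So why is the virtual NIC VMware Network Adapter VMnet8 needed? It exists mainly for communication between the host and the virtual machine, as the steps below verify.

First set the NAT options: open VMware, go to "Virtual Network Editor" under "Edit", and set the NAT and DHCP parameters.

Change the virtual machine's network connection to NAT mode: click "Edit virtual machine settings".

Click "Network Adapter" and select "NAT".

Then boot the system and edit the NIC configuration file with vi /etc/sysconfig/network-scripts/ifcfg-eth0.

The configuration is as follows:

Save and exit, restart the virtual machine's network interface so that it obtains an IP address dynamically, and ping an external IP address to test Internet connectivity.

Now test the role of the VMware Network Adapter VMnet8 virtual NIC described earlier.

The virtual machine evidently does not reach the Internet through VMware Network Adapter VMnet8. So why does this virtual NIC exist?

As stated earlier, VMware Network Adapter VMnet8 handles communication between the host and the virtual machine. Test this with a remote connection tool.

Once VMware Network Adapter VMnet8 is enabled again, the remote tool can connect to the virtual machine.

That is NAT mode: the virtual NAT device and the virtual DHCP server connect the virtual machine to the Internet, while the VMware Network Adapter VMnet8 virtual NIC is used for communication between the host and the virtual machine.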

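III. Host-Only mode

Host-Only mode is essentially NAT mode without the virtual NAT device: the host talks to the virtual machine through the VMware Network Adapter VMnet1 virtual NIC attached to the VMnet1 virtual switch. Host-Only mode isolates the virtual machine from external networks, making it a standalone system that communicates only with the host. The network layout is shown in the figure below:

The figure suggests that, to give the virtual machine Internet access, the host NIC can be shared with the VMware Network Adapter VMnet1 NIC. Let's test that.

First open the Virtual Network Editor, where the DHCP address range can be set.

Set the virtual machine to Host-Only mode.

Boot the system, then edit the NIC configuration file.

Save and exit, restart the network interface, and use a remote tool to test communication with the host.

The host and the virtual machine can communicate. Now give the virtual machine Internet access.

The prompt in the figure above shows that the IP of VMware Network Adapter VMnet1 is forcibly set to 192.168.137.1, so the virtual machine's DHCP subnet and start address must be changed to match. Click "Virtual Network Editor".

Reconfigure the NIC so that the VMware Network Adapter VMnet1 virtual NIC acts as the virtual machine's router.

Restart the network interface, then use a remote tool to test Internet access and communication with the host.

The test shows that the virtual machine can reach the Internet.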

What problems does Kubernetes (K8s) solve?

https://www.zhihu.com/question/329365548/answer/2937183948

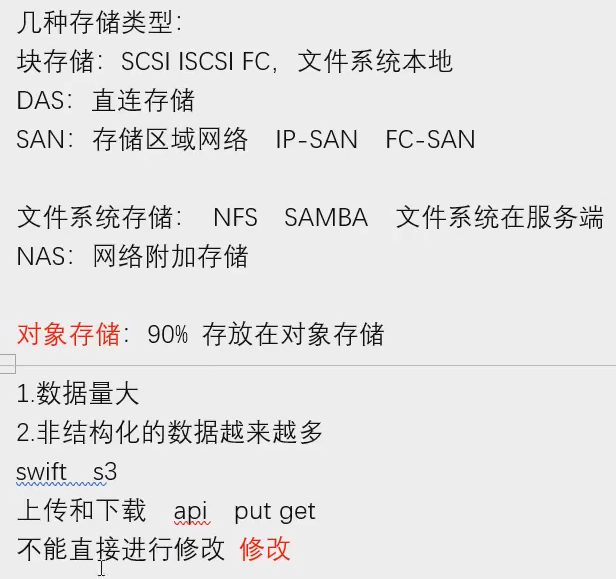

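When OpenStack is used for a cloud deployment, it supports the following network types:

- Flat network: one of the simplest network types. It is a single untagged network, with no segmentation into separate tenant networks and no router required. If no DHCP service is set up on it, IP addresses must be assigned to the virtual machines manually.

- VLAN network: a virtual LAN, which divides a network into separate segments for better network management. This type requires switches that support VLANs.

- VXLAN network: a virtual extensible LAN, which builds virtual networks on top of an existing network for better isolation and security. Isolation is implemented with VXLAN tunnels.

- GRE network: Generic Routing Encapsulation, which encapsulates the traffic of different networks in GRE tunnels. This type provides virtual network isolation across different physical networks.

In a real deployment, choose the network type according to need. For a simple environment that must be set up quickly, choose a flat network; for better network management and isolation, choose VLAN or VXLAN; to isolate virtual networks across different physical networks, choose GRE.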
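One practical difference between these types is how many isolated networks each can address. The arithmetic below is a small sketch based on the standard field widths (12-bit VLAN ID, 24-bit VXLAN VNI, 32-bit GRE key); the 1000 to 2999 range is taken from the `network_vlan_ranges` example earlier in these notes.

```python
# Identifier widths defined by the respective encapsulation formats.
VLAN_ID_BITS = 12
VXLAN_VNI_BITS = 24
GRE_KEY_BITS = 32

# VLAN IDs 0 and 4095 are reserved, so two values are not usable.
usable_vlans = 2 ** VLAN_ID_BITS - 2
vxlan_segments = 2 ** VXLAN_VNI_BITS
gre_keys = 2 ** GRE_KEY_BITS

# Tenant VLANs made available by a range such as physnet1:1000:2999 (inclusive).
vlan_min, vlan_max = 1000, 2999
tenant_vlans_in_range = vlan_max - vlan_min + 1

print(usable_vlans, vxlan_segments, gre_keys, tenant_vlans_in_range)
```

This is one reason overlay types such as VXLAN and GRE are often preferred for self-service tenant networks in larger clouds, while VLAN remains common for provider networks.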

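Cloud computing, virtualization, and OpenStack: differences, relationships, components, and usage flow

Cloud computing, virtualization, and OpenStack are all important concepts in modern IT, related yet distinct. Each is explained in turn below.

- Cloud computing

Cloud computing is an Internet-based computing model that offers computing resources (servers, storage devices, applications, and so on) to users so that they can access them from anywhere at any time. It has three main service models: IaaS (Infrastructure as a Service), PaaS (Platform as a Service), and SaaS (Software as a Service). Common cloud platforms include AWS, Azure, and Google Cloud.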

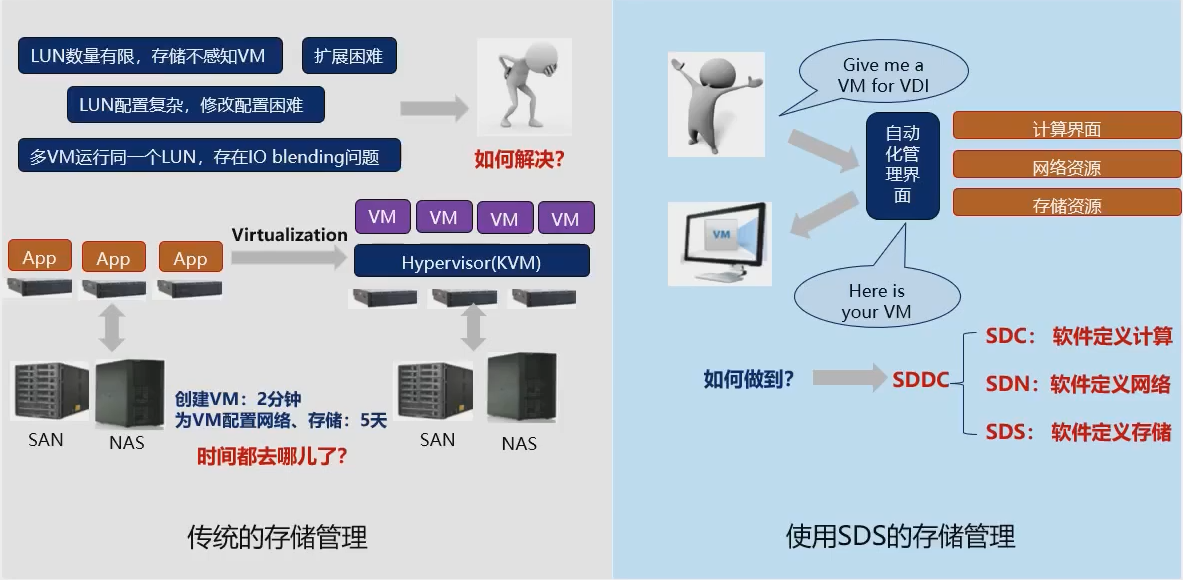

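- Virtualization

Virtualization is a technology that partitions one physical host into multiple virtual machines, each able to run its own operating system and applications as if it were a separate physical host. It improves hardware utilization, lowers operating costs, and can improve application reliability and security. Common virtualization software includes VMware, Hyper-V, and KVM.

- OpenStack

OpenStack is an open-source cloud computing platform that provides infrastructure in the IaaS service model. It uses virtualization to pool hardware resources so that users can consume them on demand. OpenStack consists of multiple components, including Nova (compute), Neutron (networking), and Cinder (storage). It is flexible and extensible and can be used to build public, private, and hybrid clouds.

Relationships and differences:

Virtualization is one of the foundation technologies of cloud computing; it enables resource pooling and on-demand allocation on cloud platforms. OpenStack is an open-source cloud platform that uses virtualization to manage and allocate resources. Both are therefore important parts of cloud computing.

Usage flow:

The flow for using cloud computing, virtualization, and OpenStack is as follows:

- First choose a suitable cloud platform, such as AWS, Azure, or Google Cloud.

- While choosing, consider which virtualization technologies it supports, for example VMware or Hyper-V.

- To use OpenStack, download and install the OpenStack software, then configure and start each component as documented.

- Create virtual machine instances using the console or API provided by the cloud platform.

- When creating an instance, choose the operating system and applications and assign appropriate hardware resources.

- Once the instance is created, connect to it with a remote access tool and install and configure the required software and services.

- To scale resources or create more instances, use the platform's API or console.

That covers the concepts, relationships, differences, and usage flow of cloud computing, virtualization, and OpenStack.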

OpenStack

https://www.zhihu.com/question/22385883

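As a test engineer, explain in detail how to approach test analysis for a distributed storage product, along with common test methods and strategies

Distributed storage systems are widely used in Internet applications and need comprehensive testing to ensure reliability, efficiency, and stability. Test analysis covers the following aspects:

- Functional testing: checks that the system correctly performs basic functions such as storing, restoring, and backing up data as required.

- Performance testing: measures response time, throughput, and other metrics under large data volumes and highly concurrent access.

- Reliability testing: checks how the system responds to abnormal conditions such as node failures and network failures.

- Security testing: checks security features, including data encryption and access control.

Common test methods and strategies:

- Unit testing: checks that each component or module works correctly.

- Integration testing: checks that components or modules cooperate correctly and that the overall functionality meets requirements.

- System testing: checks that performance, reliability, and other qualities meet requirements in the real operating environment.

- Smoke testing: checks that basic functionality works.

- Stress testing: measures performance under high concurrency and large data volumes.

- Security testing: checks security features, including data encryption and access control.

In terms of strategy, combine black-box, white-box, and gray-box testing to examine the system from different angles and ensure quality and reliability. Also pay attention to preparing and managing test data: simulate real-world data as closely as possible so that the results are trustworthy.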

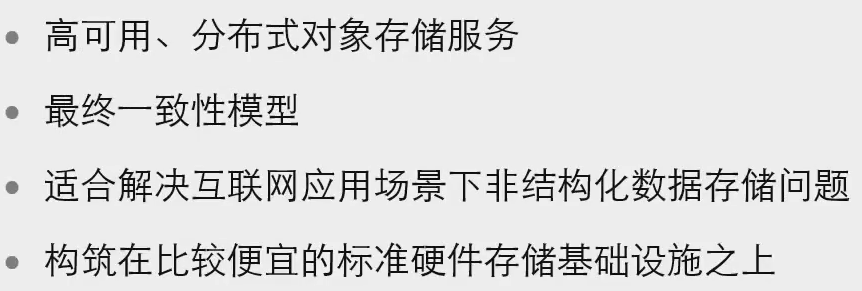

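As a cloud computing engineer, describe in detail the programming languages of common distributed storage products and their commonly used frameworks

The programming languages and commonly used frameworks of common distributed storage products are as follows:

- Hadoop Distributed File System (HDFS): language: Java; common framework: Hadoop

- Apache Cassandra: language: Java; common framework: Cassandra

- Apache HBase: language: Java; common framework: HBase

- Amazon S3: languages: Java, Python, .NET, Ruby, PHP, Go, and others; common framework: AWS SDK

- Google Cloud Storage: languages: Java, Python, .NET, Ruby, PHP, Go, and others; common framework: Google Cloud Storage Client Libraries

- GlusterFS: language: C; common framework: GlusterFS

- Ceph: language: C++; common framework: Ceph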

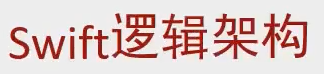

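- Swift: language: Python; common framework: OpenStack Swift

Those are the programming languages and commonly used frameworks of common distributed storage products. Each product has its own characteristics, strengths, and weaknesses, so choose according to actual needs.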

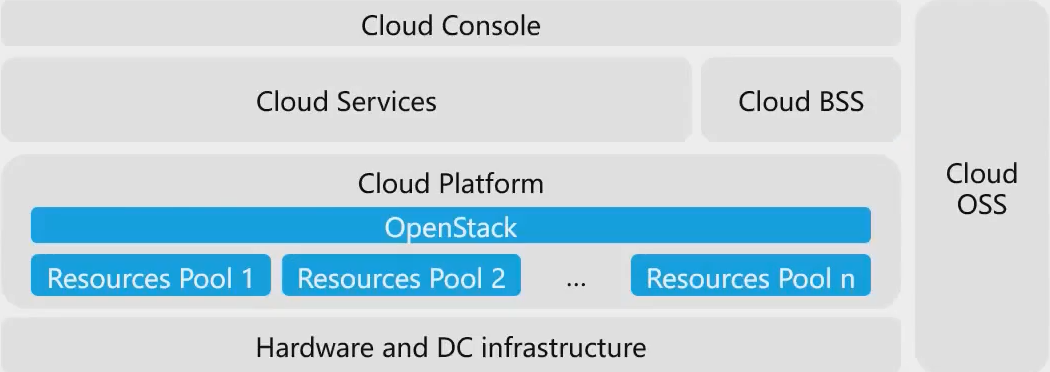

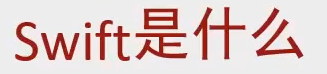

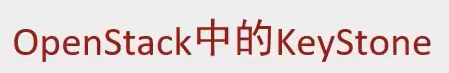

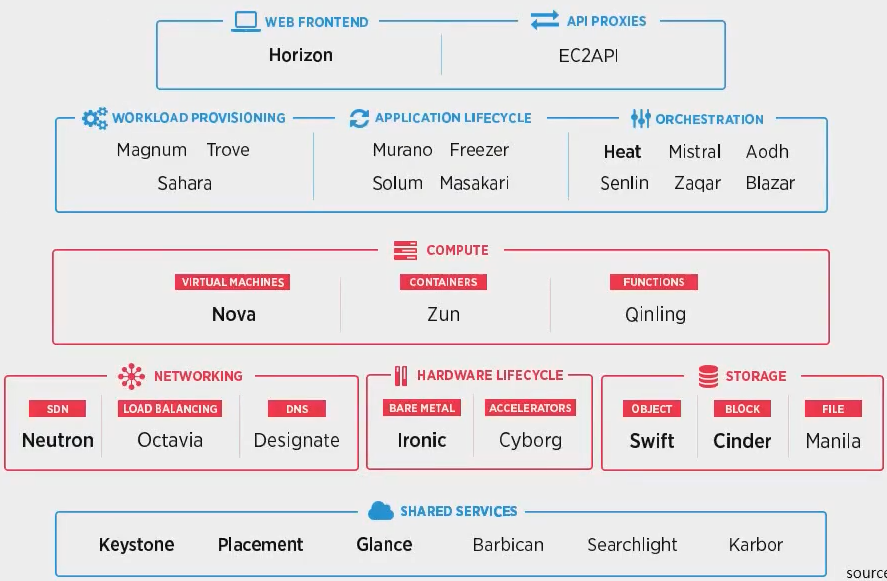

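OpenStack overview

Introduction to OpenStack

- OpenStack is an open-source cloud operating system that controls large pools of compute, storage, and networking resources across a data center. Users provision resources through a web interface, the command line, or the API. It is used to deploy public and private clouds and to manage cloud projects.

- OpenStack implements IaaS. It is an open-source cloud platform released under the Apache 2.0 license. For comparison, Alibaba Cloud runs on its Apsara (Feitian) platform.

- OpenStack is comparable to an operating system kernel: companies can build their own applications on top of it.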

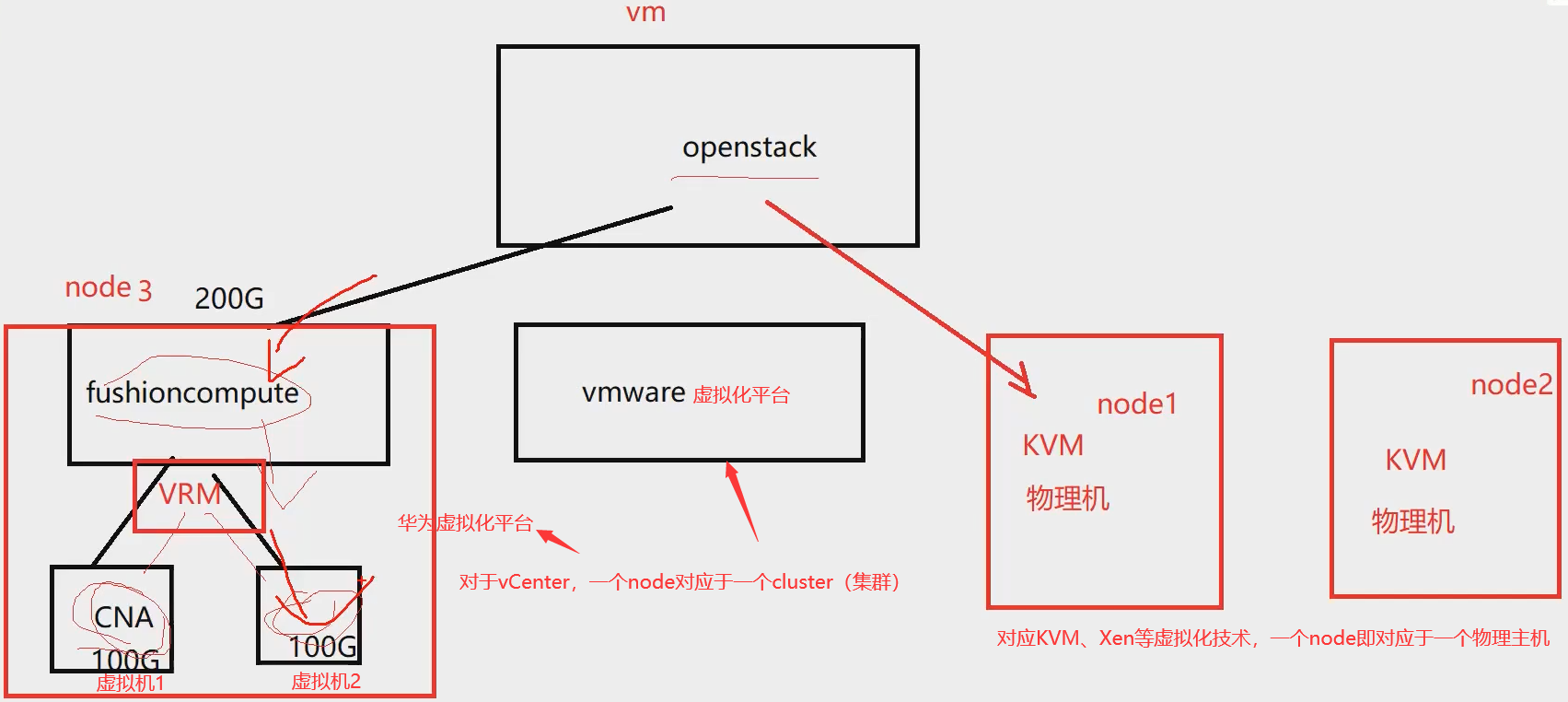

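- Virtualization layer: VMware, KVM, or Xen provides CPU, memory, network, and other resources. OpenStack layer: manages and coordinates virtual machines and physical machines.

- FusionSphere OpenStack is Huawei's virtualization platform built on OpenStack; further custom development can be done on top of it.

- RHEL7OSP-6.0 is Red Hat's virtualization platform built on OpenStack.

- Open-source OpenStack releases:

- OpenStack has two major releases a year, generally in April and mid-October, with release names running alphabetically from A to Z.

- Differences between OpenStack and virtualization:

- OpenStack is only the control plane of the system; it does not include data-plane components such as the hypervisor, storage, and network devices.

- OpenStack provides no virtualization technology of its own. It drives various technologies to manage multiple resource pools and exposes a unified management interface.

- Virtualization: used for environment isolation and resource sharing. It can reduce isolation overhead and improve efficiency, and it provides advanced virtualization features.

- Virtualization is one of the underlying technologies OpenStack builds on, but not its main concern.

- OpenStack is only a key building block of cloud computing (the kernel, backbone, framework, or bus), not cloud computing itself. Building a cloud requires much more:

Design principles of OpenStack: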

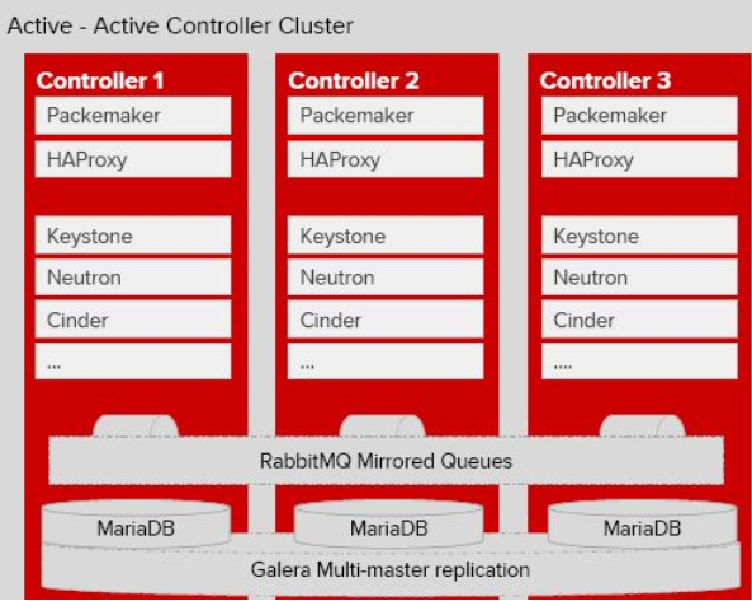

OpenStack shared service components:

- Database service: MariaDB and MongoDB

- Message queues: RabbitMQ

- Cache: Memcached; time sync: NTP

- Storage providers: Ceph, GFS, LVM, iSCSI, and others

- High availability and load balancing: Pacemaker, HAProxy, keepalived, LVS, and others

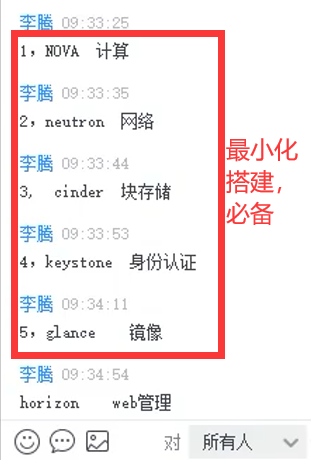

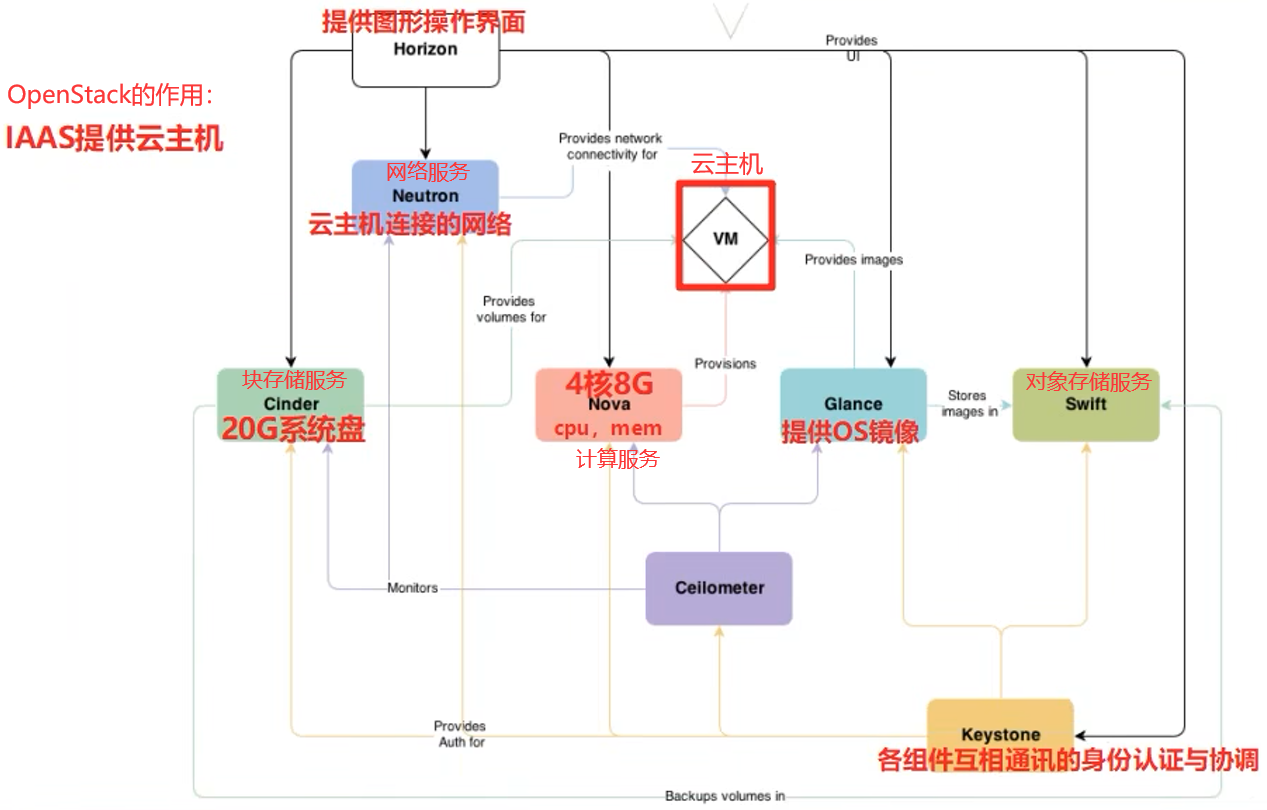

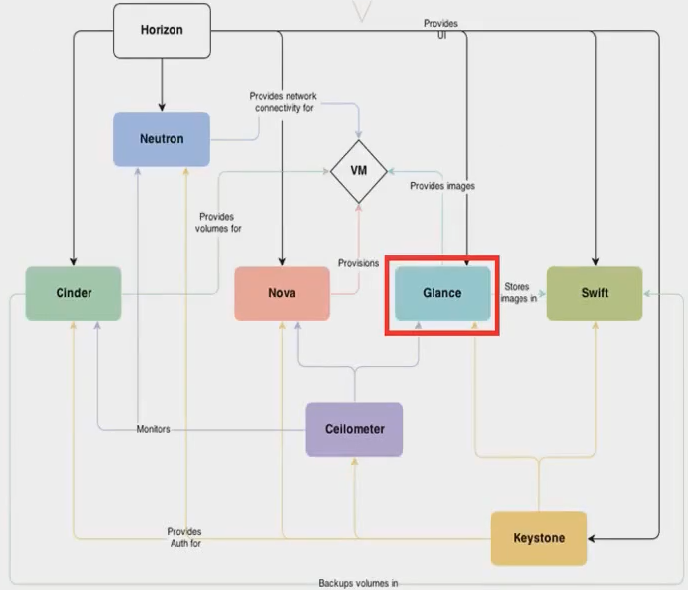

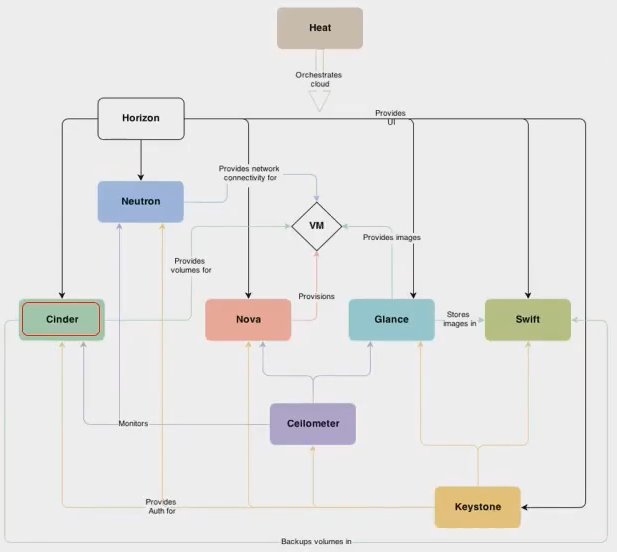

OpenStack core components:

- Identity Service: Keystone

- Compute: Nova

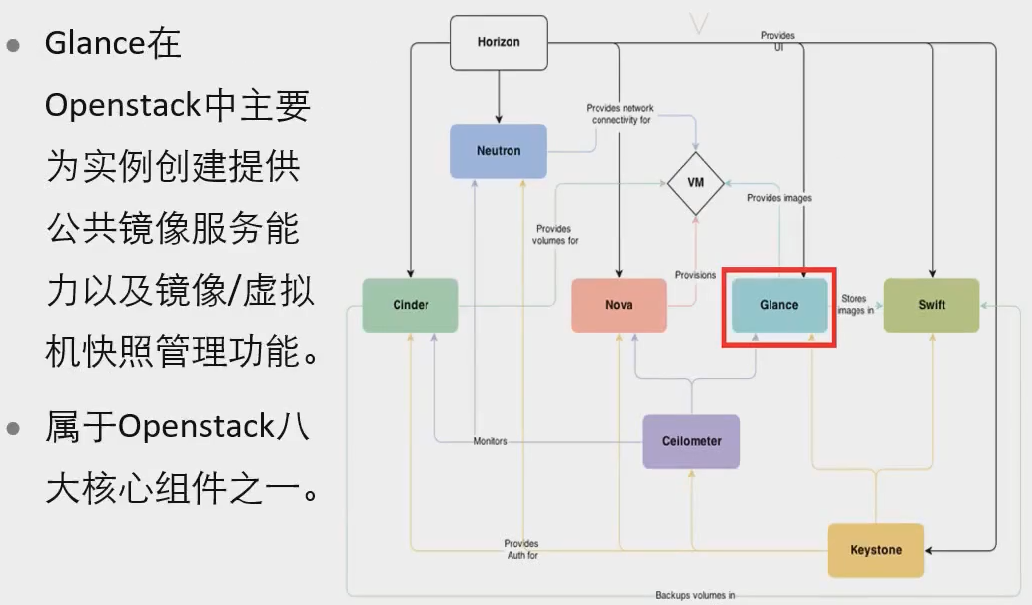

- Image Service: Glance

- Networking and address management (Network): Neutron

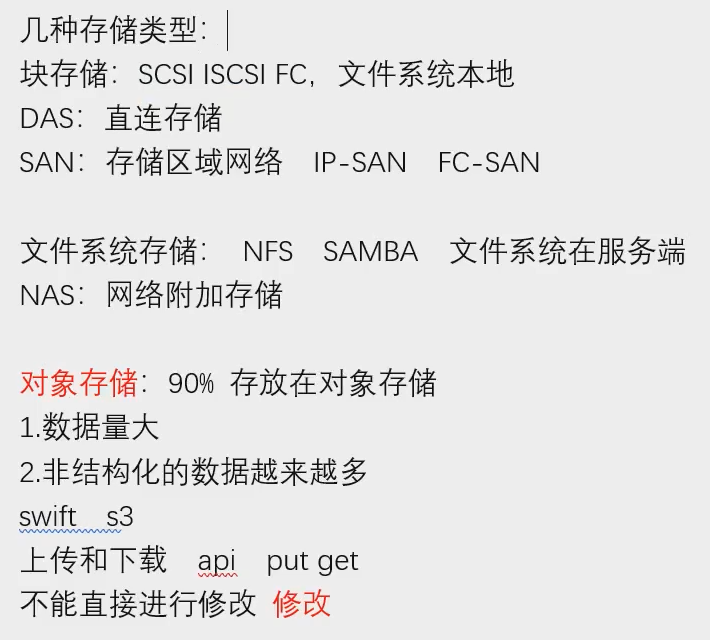

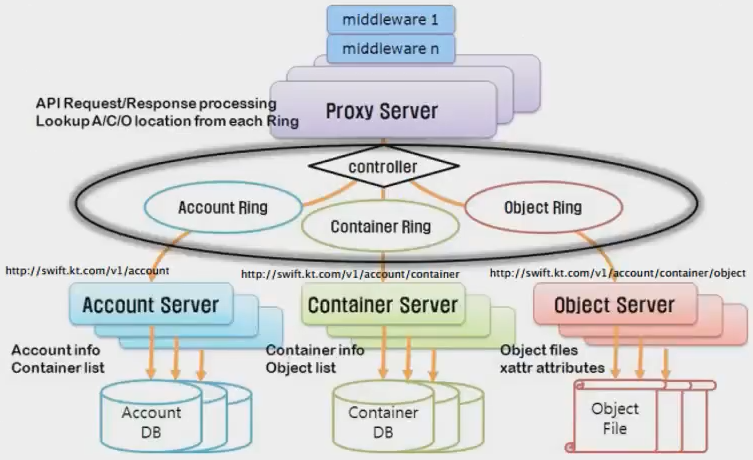

- Object Storage: Swift

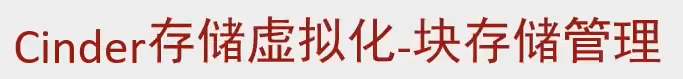

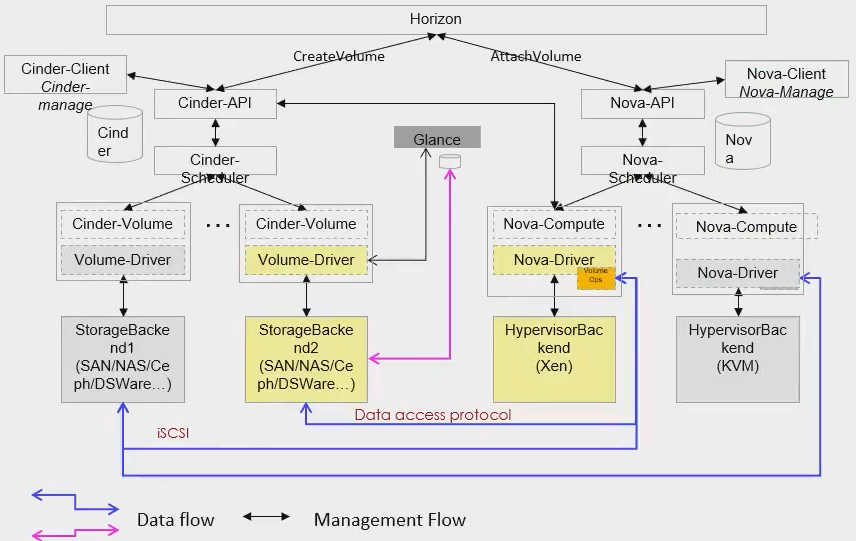

- Block Storage: Cinder

- Dashboard (UI): Horizon

- Metering: Ceilometer

- Orchestration: Heat

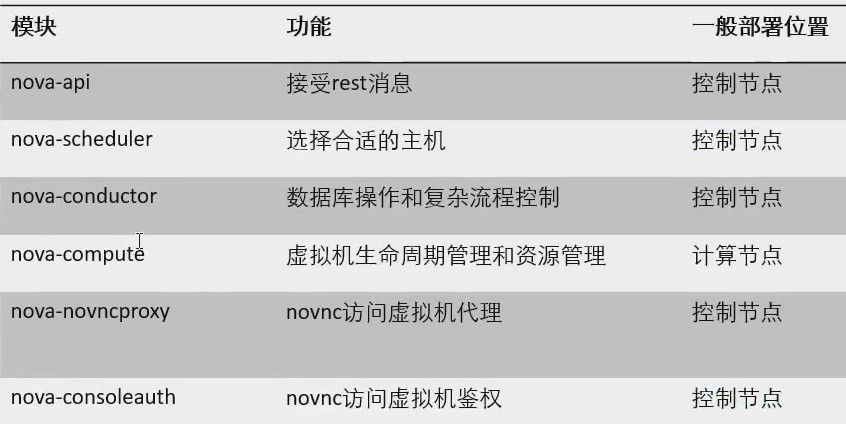

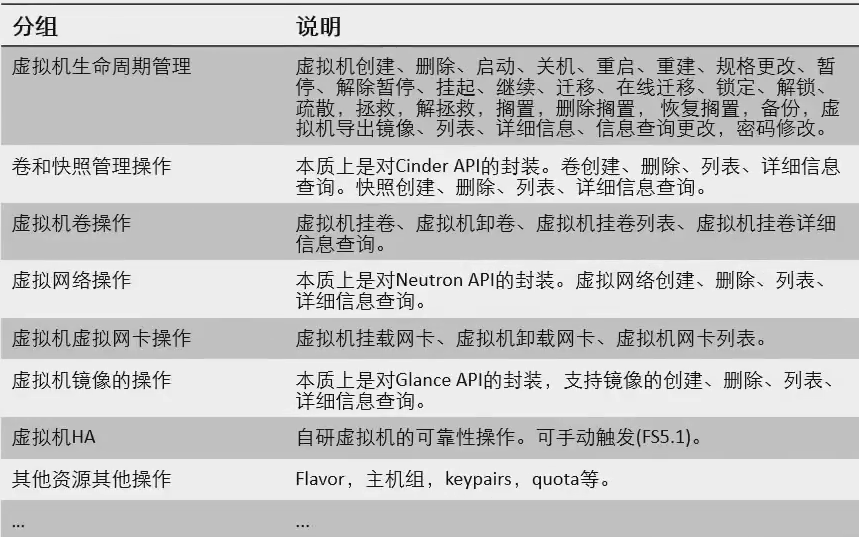

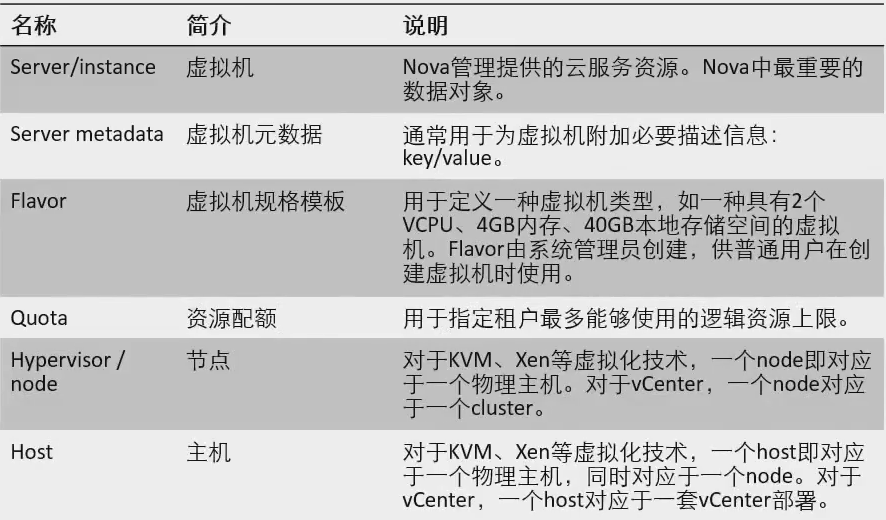

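- Compute service (Compute): code name Nova

- Manages virtual machine instances for users and provides virtual services on demand. It handles creating, powering on, and powering off virtual machines, and configuring their CPU, memory, and other specifications.

- Networking service (Networking): code name Neutron

- Provides network virtualization for the cloud platform and network interfaces for users.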

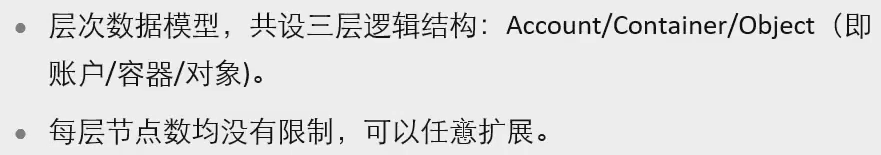

- Object storage (Object Storage): code name Swift

- Provides object storage for the cloud platform, allowing files to be stored and retrieved.

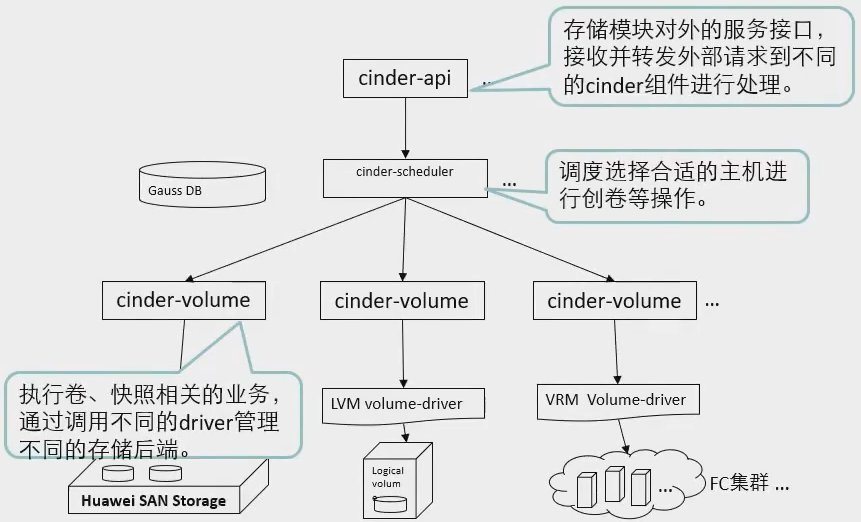

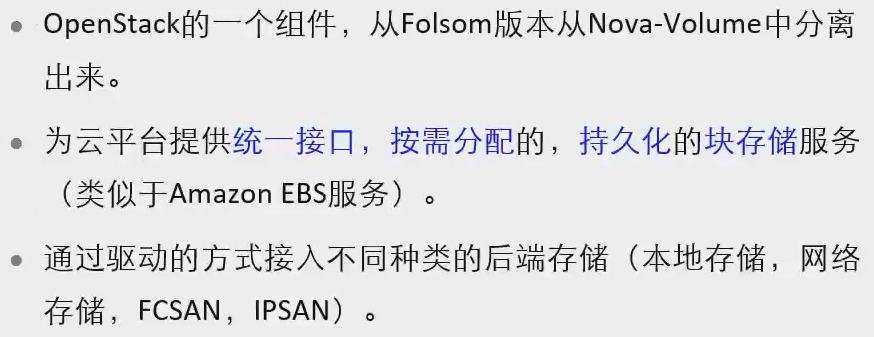

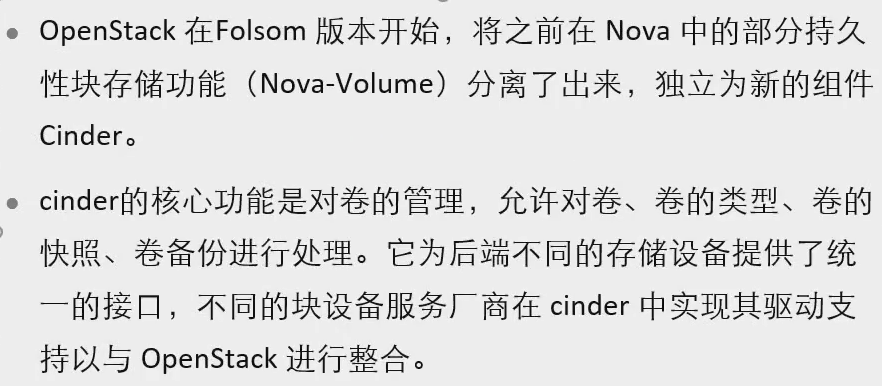

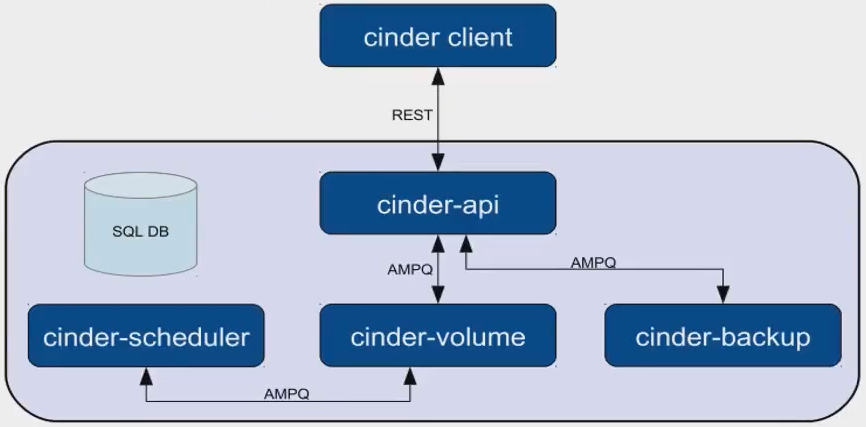

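- Block storage service (Block Storage): code name Cinder

- Provides block storage for the cloud platform and manages block devices, for example creating and deleting volumes.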

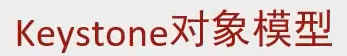

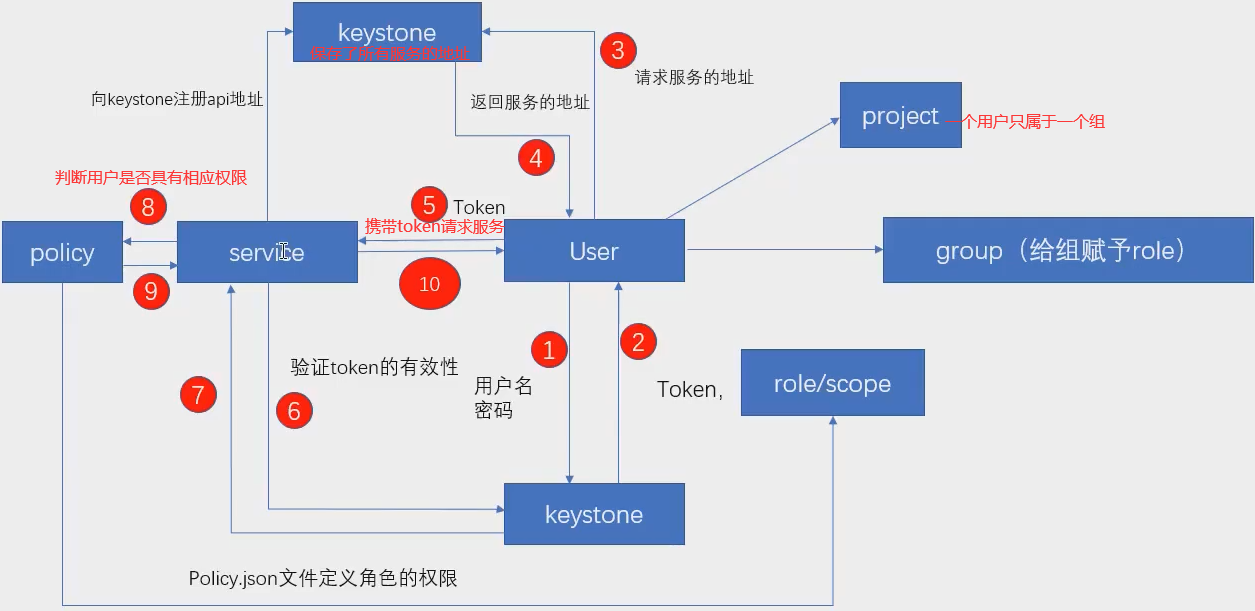

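- Identity service (Identity): code name Keystone

- Provides authentication, service registration, service tokens, and related functions to the other OpenStack services. Components must authenticate when they access one another.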

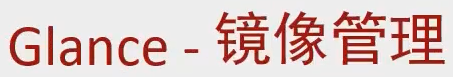

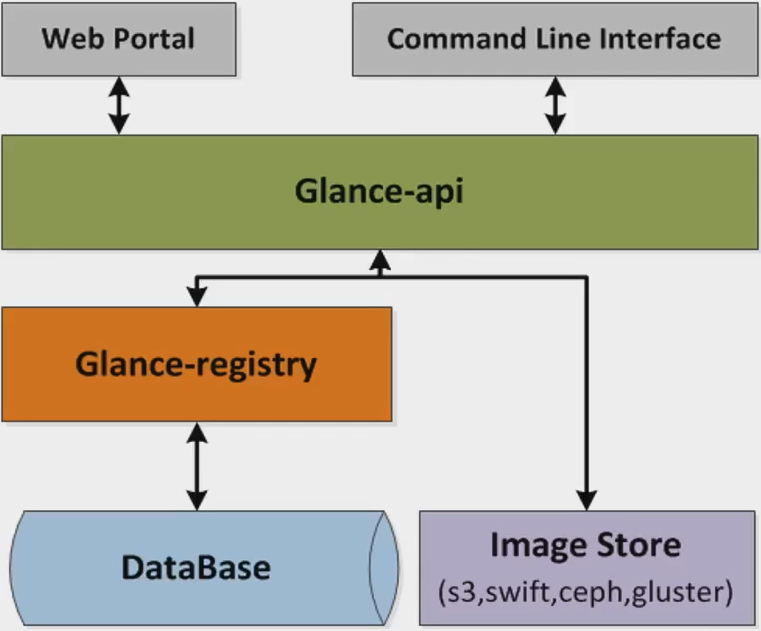

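- Image service (Image Service): code name Glance

- Provides image services for the cloud platform's virtual machines, for example uploading and deleting images.

- Dashboard (UI): code name Horizon

- Provides a web management portal for OpenStack, for example for launching instances, creating networks, and setting access controls.

- Metering service (Metering): code name Ceilometer

- Collects and aggregates resource usage inside OpenStack to provide the data behind billing and monitoring.

- Orchestration (Orchestration): code name Heat

- Provides automated deployment of software runtime environments for the cloud platform.

- Cloud database (Database Service): code name Trove

- Provides scalable, reliable relational and non-relational database engines as a service inside an OpenStack environment.

Goal: build an OpenStack private cloud that, like Alibaba Cloud, offers KVM cloud instances (similar to ECS) and VPC networking, with instance system disks backed by block storage. File storage and object storage can provide additional storage for the KVM virtual machines.

Example of interaction between OpenStack services

Setting up the OpenStack environment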

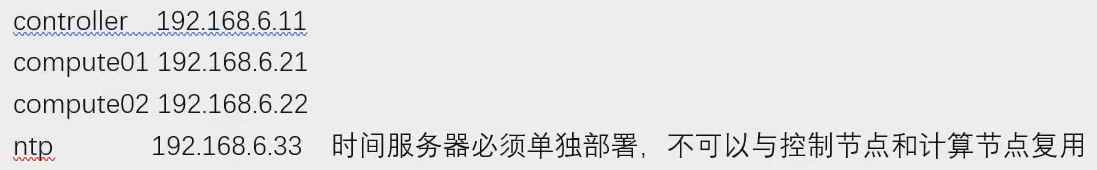

IP address and hostname planning

    // controller node
    [root@localhost ~]# hostnamectl set-hostname controller
    [root@localhost ~]# bash
    // compute node
    [root@localhost ~]# hostnamectl set-hostname compute
    [root@localhost ~]# bash
    // ntp node
    [root@localhost ~]# hostnamectl set-hostname ntp
    [root@localhost ~]# bash

2. Configure hosts

    // Add the following three lines
    192.168.128.11 controller
    192.168.128.21 compute
    192.168.128.33 ntp
    // Edit the hosts file so that the three virtual machines can reach one another by name (name mapping)
    [root@controller ~]# vi /etc/hosts
    [root@controller ~]#
    // Show the contents of the hosts file
    [root@controller ~]# cat /etc/hosts
    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.128.11 controller
    192.168.128.21 compute
    192.168.128.33 ntp
    // Copy to the compute node
    [root@controller ~]# scp /etc/hosts root@compute:/etc/
    The authenticity of host 'compute (192.168.128.21)' can't be established.
    ECDSA key fingerprint is 07:76:41:56:52:03:80:1e:3f:9c:bc:3a:67:c3:6c:0c.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'compute,192.168.128.21' (ECDSA) to the list of known hosts.
    root@compute's password:
    hosts 100% 226 0.2KB/s 00:00
    // Copy to the ntp node
    [root@controller ~]# scp /etc/hosts root@ntp:/etc/
    The authenticity of host 'ntp (192.168.128.33)' can't be established.
    ECDSA key fingerprint is 6a:9f:16:fd:ad:07:5b:12:0f:05:a1:61:2c:87:32:64.
    Are you sure you want to continue connecting (yes/no)? yes
    Warning: Permanently added 'ntp,192.168.128.33' (ECDSA) to the list of known hosts.
    root@ntp's password:
    hosts
    // Test connectivity between the three nodes
    [root@controller ~]# ping compute
    PING compute (192.168.128.21) 56(84) bytes of data.
    64 bytes from compute (192.168.128.21): icmp_seq=1 ttl=64 time=0.228 ms
    64 bytes from compute (192.168.128.21): icmp_seq=2 ttl=64 time=0.322 ms
    64 bytes from compute (192.168.128.21): icmp_seq=3 ttl=64 time=0.307 ms
    64 bytes from compute (192.168.128.21): icmp_seq=4 ttl=64 time=0.310 ms
    64 bytes from compute (192.168.128.21): icmp_seq=5 ttl=64 time=0.313 ms
    64 bytes from compute (192.168.128.21): icmp_seq=6 ttl=64 time=0.291 ms
    64 bytes from compute (192.168.128.21): icmp_seq=7 ttl=64 time=0.319 ms
    ^C
    --- compute ping statistics ---
    7 packets transmitted, 7 received, 0% packet loss, time 6003ms
    rtt min/avg/max/mdev = 0.228/0.298/0.322/0.035 ms
    [root@controller ~]#
    [root@controller ~]# ping ntp
    PING ntp (192.168.128.33) 56(84) bytes of data.
    64 bytes from ntp (192.168.128.33): icmp_seq=1 ttl=64 time=0.257 ms
    64 bytes from ntp (192.168.128.33): icmp_seq=2 ttl=64 time=0.331 ms
    64 bytes from ntp (192.168.128.33): icmp_seq=3 ttl=64 time=0.352 ms
    64 bytes from ntp (192.168.128.33): icmp_seq=4 ttl=64 time=0.375 ms
    64 bytes from ntp (192.168.128.33): icmp_seq=5 ttl=64 time=0.338 ms
    64 bytes from ntp (192.168.128.33): icmp_seq=6 ttl=64 time=0.337 ms
    ^C
    --- ntp ping statistics ---
    6 packets transmitted, 6 received, 0% packet loss, time 5004ms
    rtt min/avg/max/mdev = 0.257/0.331/0.375/0.042 ms
    [root@controller ~]#
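When the same three entries have to be kept identical on every node, it can help to generate them from one place. The sketch below only renders the text; `render_hosts` is a hypothetical helper, and the node names and addresses are the ones planned above.

```python
import ipaddress

# Hostname-to-address plan used in these notes.
NODES = {
    "controller": "192.168.128.11",
    "compute": "192.168.128.21",
    "ntp": "192.168.128.33",
}


def render_hosts(nodes):
    """Render /etc/hosts lines for the given {hostname: ip} mapping."""
    lines = []
    for name, ip in nodes.items():
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
        lines.append(f"{ip} {name}")
    return "\n".join(lines) + "\n"


print(render_hosts(NODES), end="")
```

The rendered lines can then be appended to /etc/hosts on each node, for example by copying the file with scp as in the transcript above.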

3. Disable the firewall and SELinux on all nodes

    // Disable the firewall on all nodes
    [root@controller ~]# systemctl disable firewalld
    rm '/etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service'
    rm '/etc/systemd/system/basic.target.wants/firewalld.service'
    [root@controller ~]#
    [root@controller ~]# systemctl stop firewalld
    [root@controller ~]#
    // Disable SELinux on all nodes by setting SELINUX=disabled (takes effect after a reboot)
    [root@controller ~]# vim /etc/selinux/config
    [root@controller ~]#
    [root@ntp ~]# cat /etc/selinux/config
    # This file controls the state of SELinux on the system.
    # SELINUX= can take one of these three values:
    # enforcing - SELinux security policy is enforced.
    # permissive - SELinux prints warnings instead of enforcing.
    # disabled - No SELinux policy is loaded.
    SELINUX=disabled
    # SELINUXTYPE= can take one of three two values:
    # targeted - Targeted processes are protected,
    # minimum - Modification of targeted policy. Only selected processes are protected.
    # mls - Multi Level Security protection.
    SELINUXTYPE=targeted
    // Switch SELinux to permissive mode right away, without waiting for the reboot
    [root@controller ~]# setenforce 0

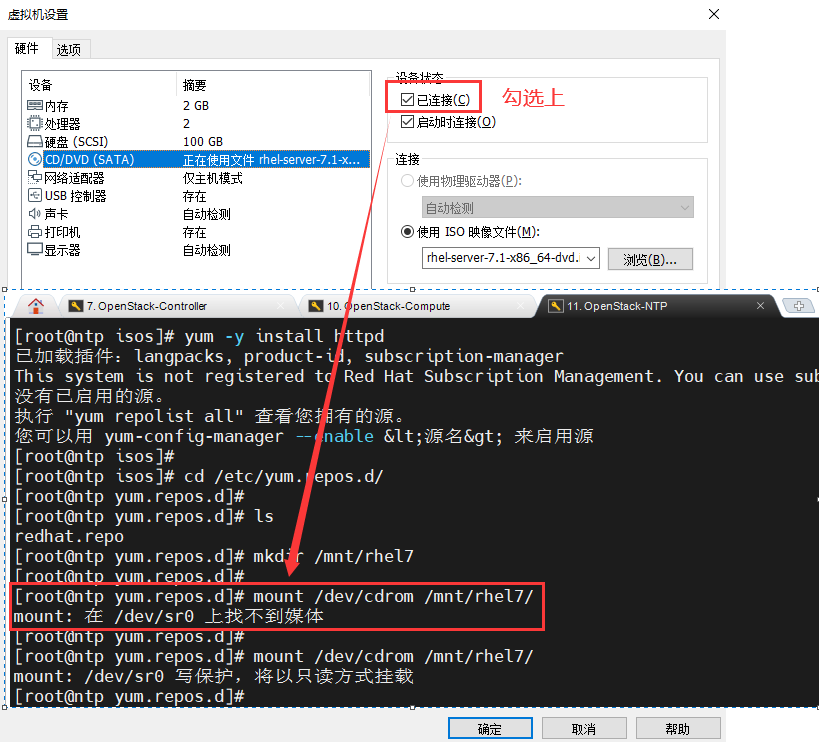

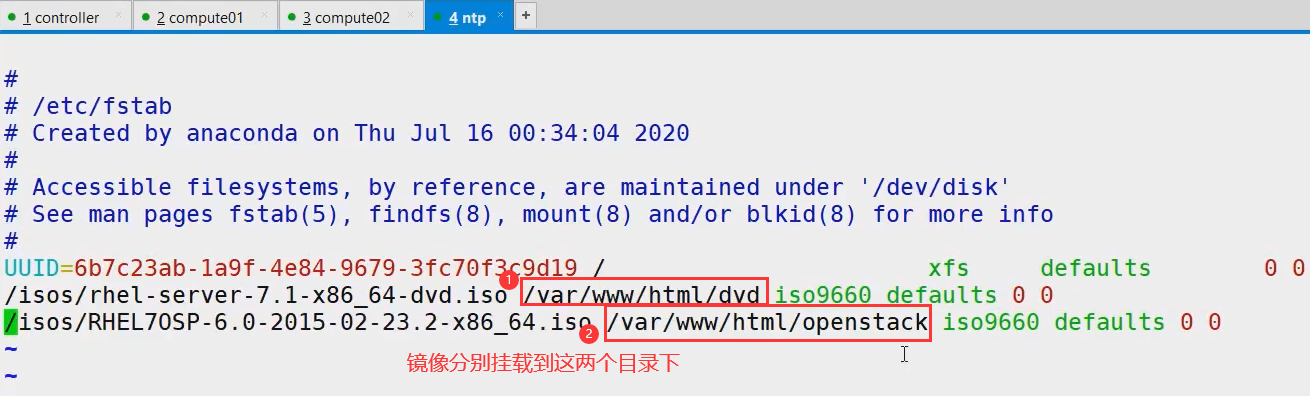

4. Configure the yum repositories

There are two ISO images (rhel7.1 and RHEL7OSP), which cannot be mounted directly

Set up an HTTP service on the ntp node to share the yum repositories

    [root@ntp ~]# mkdir /isos
    [root@ntp ~]#
    [root@ntp ~]# cd /isos
    [root@ntp isos]#
    // Upload the two ISO images from the local machine to the /isos directory on the ntp node
    [root@ntp isos]# ll
    总用量 4325980
    -rw-r--r--. 1 root root 539586560 5月 20 19:47 RHEL7OSP-6.0-2015-02-23.2-x86_64.iso
    -rw-r--r--. 1 root root 3890216960 5月 20 19:47 rhel-server-7.1-x86_64-dvd.iso
    [root@ntp isos]#
    // Configure the mount points
    [root@ntp isos]# vim /etc/fstab
    [root@ntp isos]#
    [root@ntp isos]# cat /etc/fstab
    #
    # /etc/fstab
    # Created by anaconda on Sat May 20 10:35:51 2023
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    /dev/mapper/rhel-root / xfs defaults 0 0
    UUID=9c06a3e5-dd9f-49c5-b652-45a46d7a8a15 /boot xfs defaults 0 0
    /dev/mapper/rhel-home /home xfs defaults 0 0
    /dev/mapper/rhel-swap swap swap defaults 0 0
    /isos/rhel-server-7.1-x86_64-dvd.iso /var/www/html/dvd iso9660 defaults 0 0
    /isos/RHEL7OSP-6.0-2015-02-23.2-x86_64.iso /var/www/html/openstack iso9660 defaults 0 0
    [root@ntp isos]# yum -y install httpd
    已加载插件:langpacks, product-id, subscription-manager
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    没有已启用的源。
    执行 "yum repolist all" 查看您拥有的源。
    您可以用 yum-config-manager --enable <源名> 来启用源
    [root@ntp isos]#
    [root@ntp isos]# cd /etc/yum.repos.d/
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# ls
    redhat.repo
    [root@ntp yum.repos.d]# mkdir /mnt/rhel7
    [root@ntp yum.repos.d]#
    // Tick "Connected" for the CD/DVD drive in the virtual machine settings to fix the following error
    [root@ntp yum.repos.d]# mount /dev/cdrom /mnt/rhel7/
    mount: 在 /dev/sr0 上找不到媒体
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# mount /dev/cdrom /mnt/rhel7/
    mount: /dev/sr0 写保护,将以只读方式挂载
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# df -h
    文件系统 容量 已用 可用 已用% 挂载点
    /dev/mapper/rhel-root 50G 5.1G 45G 11% /
    devtmpfs 904M 0 904M 0% /dev
    tmpfs 914M 0 914M 0% /dev/shm
    tmpfs 914M 8.6M 905M 1% /run
    tmpfs 914M 0 914M 0% /sys/fs/cgroup
    /dev/mapper/rhel-home 48G 33M 48G 1% /home
    /dev/sda1 497M 122M 376M 25% /boot
    /dev/sr0 3.7G 3.7G 0 100% /mnt/rhel7
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# vim dvd.repo
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# cat dvd.repo
    [rhel7]
    name=rhel7
    baseurl=file:///mnt/rhel7
    gpgcheck=0
    [root@ntp yum.repos.d]# yum clean all
    已加载插件:langpacks, product-id, subscription-manager
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    正在清理软件源: rhel7
    Cleaning up everything
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# yum list all
    已加载插件:langpacks, product-id, subscription-manager
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    rhel7 | 4.1 kB 00:00:00
    (1/2): rhel7/group_gz | 134 kB 00:00:00
    (2/2): rhel7/primary_db | 3.4 MB 00:00:00
    已安装的软件包
    NetworkManager.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1
    NetworkManager-config-server.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1
    ***************
    // Install the HTTP service
    [root@ntp yum.repos.d]# yum -y install httpd
    已加载插件:langpacks, product-id, subscription-manager
    This system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.
    正在解决依赖关系
    --> 正在检查事务
    ---> 软件包 httpd.x86_64.0.2.4.6-31.el7 将被 安装
    --> 正在处理依赖关系 httpd-tools = 2.4.6-31.el7,它被软件包 httpd-2.4.6-31.el7.x86_64 需要
    --> 正在处理依赖关系 /etc/mime.types,它被软件包 httpd-2.4.6-31.el7.x86_64 需要
    --> 正在处理依赖关系 libapr-1.so.0()(64bit),它被软件包 httpd-2.4.6-31.el7.x86_64 需要
    --> 正在处理依赖关系 libaprutil-1.so.0()(64bit),它被软件包 httpd-2.4.6-31.el7.x86_64 需要
    --> 正在检查事务
    ---> 软件包 apr.x86_64.0.1.4.8-3.el7 将被 安装
    ---> 软件包 apr-util.x86_64.0.1.5.2-6.el7 将被 安装
    ---> 软件包 httpd-tools.x86_64.0.2.4.6-31.el7 将被 安装
    ---> 软件包 mailcap.noarch.0.2.1.41-2.el7 将被 安装
    --> 解决依赖关系完成
    *********
    // Enable httpd at boot
    [root@ntp yum.repos.d]# systemctl enable httpd
    ln -s '/usr/lib/systemd/system/httpd.service' '/etc/systemd/system/multi-user.target.wants/httpd.service'
    [root@ntp yum.repos.d]#
    [root@ntp yum.repos.d]# systemctl start httpd
    // Mount the ISO images
    [root@ntp yum.repos.d]# cd /var/www/html/
    [root@ntp html]#
    [root@ntp html]# mkdir dvd
    [root@ntp html]#
    [root@ntp html]# mkdir openstack
    [root@ntp html]#
    [root@ntp html]# ll
    总用量 0
    drwxr-xr-x. 2 root root 6 5月 20 20:25 dvd
    drwxr-xr-x. 2 root root 6 5月 20 20:25 openstack
    [root@ntp html]#
    [root@ntp html]# mount -a
    mount: /dev/loop0 写保护,将以只读方式挂载
    mount: /dev/loop1 写保护,将以只读方式挂载
    [root@ntp html]#
    [root@ntp html]# df -h
    文件系统 容量 已用 可用 已用% 挂载点
    /dev/mapper/rhel-root 50G 5.2G 45G 11% /
    devtmpfs 904M 0 904M 0% /dev
    tmpfs 914M 0 914M 0% /dev/shm
    tmpfs 914M 8.6M 905M 1% /run
    tmpfs 914M 0 914M 0% /sys/fs/cgroup
    /dev/mapper/rhel-home 48G 33M 48G 1% /home
    /dev/sda1 497M 122M 376M 25% /boot
    /dev/sr0 3.7G 3.7G 0 100% /mnt/rhel7
    /dev/loop0 3.7G 3.7G 0 100% /var/www/html/dvd
    /dev/loop1 515M 515M 0 100% /var/www/html/openstack
    // The yum repositories are reachable at 192.168.128.33 plus the path (dvd or openstack)
    [root@ntp html]# ip a
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
        valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
        valid_lft forever preferred_lft forever
    2: eno16777736: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        link/ether 00:0c:29:bd:d5:85 brd ff:ff:ff:ff:ff:ff
        inet 192.168.128.33/24 brd 192.168.128.255 scope global eno16777736
        valid_lft forever preferred_lft forever
        inet6 fe80::20c:29ff:febd:d585/64 scope link
        valid_lft forever preferred_lft forever

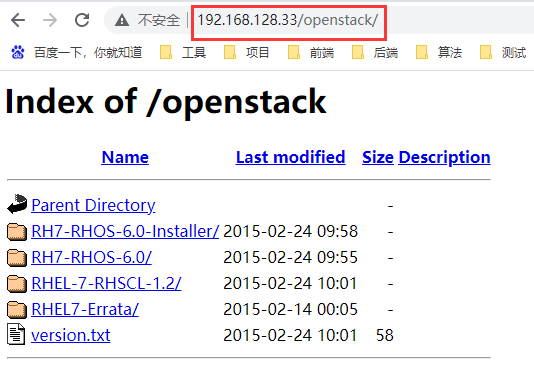

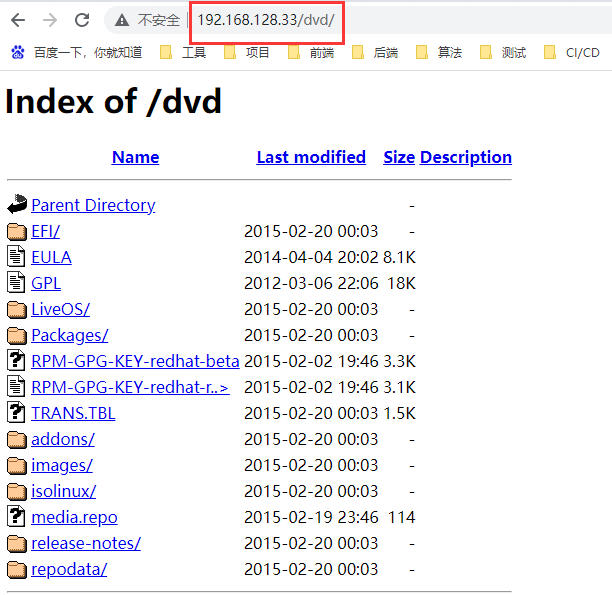

Access both yum repositories over HTTP:

Create dvd.repo and openstack.repo on the controller node:

[root@controller ~]# cd /etc/yum.repos.d[root@controller yum.repos.d]#[root@controller yum.repos.d]# vim dvd.repo[root@controller yum.repos.d]#[root@controller yum.repos.d]# cat dvd.repo[rhel7]name=rhel7baseurl=http://192.168.128.33/dvdgpgcheck=0[root@controller yum.repos.d]#[root@controller yum.repos.d]#[root@controller yum.repos.d]#[root@controller yum.repos.d]# vim openstack.repo[root@controller yum.repos.d]#[root@controller yum.repos.d]# cat openstack.repo[RH7-RHOS-6.0]name=RH7-RHOS-6.0baseurl=http://192.168.128.33/openstack/RH7-RHOS-6.0/gpgcheck=0[RH7-RHOS-6.0-Installer]name=RH7-RHOS-6.0-Installerbaseurl=http://192.168.128.33/openstack/RH7-RHOS-6.0-Installer/gpgcheck=0[RHEL7-Errata]name=RHEL7-Erratabaseurl=http://192.168.128.33/openstack/RHEL7-Errata/gpgcheck=0[RHEL-7-RHSCL-1.2]name=RHEL-7-RHSCL-1.2baseurl=http://192.168.128.33/openstack/RHEL-7-RHSCL-1.2/gpgcheck=0[root@controller yum.repos.d]#[root@controller yum.repos.d]#[root@controller yum.repos.d]# yum clean all已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. 
You can use subscription-manager to register.正在清理软件源: RH7-RHOS-6.0 RH7-RHOS-6.0-Installer RHEL-7-RHSCL-1.2 RHEL7-Errata rhel7Cleaning up everything[root@controller yum.repos.d]#[root@controller yum.repos.d]# yum list all | grep openstackopenstack-ceilometer-alarm.noarch 2014.2.2-1.el7ost RH7-RHOS-6.0openstack-ceilometer-api.noarch 2014.2.2-1.el7ost RH7-RHOS-6.0openstack-ceilometer-central.noarch 2014.2.2-1.el7ost RH7-RHOS-6.0openstack-ceilometer-collector.noarch 2014.2.2-1.el7ost RH7-RHOS-6.0****// 将dvd.repo openstack.repo复制到compute节点上[root@controller yum.repos.d]# scp dvd.repo openstack.repo root@compute:$PWDroot@compute's password:dvd.repo 100% 64 0.1KB/s 00:00openstack.repo 100% 437 0.4KB/s 00:00[root@controller yum.repos.d]#// 将dvd.repo openstack.repo复制到ntp节点上[root@controller yum.repos.d]# scp dvd.repo openstack.repo root@ntp:$PWDroot@ntp's password:dvd.repo 100% 64 0.1KB/s 00:00openstack.repo 100% 437 0.4KB/s 00:00[root@controller yum.repos.d]#
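The repo files above all follow the same four-line pattern, so they can also be generated instead of typed by hand. The following is a minimal sketch, not the author's method: `write_repo` is a hypothetical helper, and the demo writes into a scratch directory rather than `/etc/yum.repos.d`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append one yum repo stanza to <dir>/<file>.
# Arguments: target directory, file name, repo id, base URL.
write_repo() {
    local dir=$1 file=$2 id=$3 url=$4
    # Append, so that several stanzas can share one file (as in openstack.repo).
    cat >> "${dir}/${file}" <<EOF
[${id}]
name=${id}
baseurl=${url}
gpgcheck=0
EOF
}

# Demo against a scratch directory; the server address is the one used above.
repo_dir=$(mktemp -d)
base=http://192.168.128.33
write_repo "$repo_dir" dvd.repo rhel7 "${base}/dvd"
for id in RH7-RHOS-6.0 RH7-RHOS-6.0-Installer RHEL7-Errata RHEL-7-RHSCL-1.2; do
    write_repo "$repo_dir" openstack.repo "$id" "${base}/openstack/${id}/"
done
cat "${repo_dir}/openstack.repo"
```

Once the output looks right, the two files can be copied to `/etc/yum.repos.d` on each node, followed by `yum clean all` as shown in the transcript.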

[root@compute ~]# cd /etc/yum.repos.d[root@compute yum.repos.d]# ll总用量 0[root@compute yum.repos.d]# ll总用量 8-rw-r--r--. 1 root root 64 5月 20 20:43 dvd.repo-rw-r--r--. 1 root root 437 5月 20 20:43 openstack.repo[root@compute yum.repos.d]#[root@compute yum.repos.d]# yum clean all已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.正在清理软件源: RH7-RHOS-6.0 RH7-RHOS-6.0-Installer RHEL-7-RHSCL-1.2 RHEL7-Errata rhel7Cleaning up everything[root@compute yum.repos.d]#[root@compute yum.repos.d]# yum list all已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.RH7-RHOS-6.0 | 1.3 kB 00:00:00RH7-RHOS-6.0-Installer | 1.2 kB 00:00:00RHEL-7-RHSCL-1.2 | 3.3 kB 00:00:00RHEL7-Errata | 2.9 kB 00:00:00rhel7 | 4.1 kB 00:00:00(1/6): RH7-RHOS-6.0/primary | 117 kB 00:00:00(2/6): RH7-RHOS-6.0-Installer/primary | 47 kB 00:00:00(3/6): RHEL7-Errata/primary_db | 10 kB 00:00:00(4/6): RHEL-7-RHSCL-1.2/primary_db | 776 kB 00:00:00(5/6): rhel7/group_gz | 134 kB 00:00:00(6/6): rhel7/primary_db | 3.4 MB 00:00:00RH7-RHOS-6.0 393/393RH7-RHOS-6.0-Installer 194/194已安装的软件包NetworkManager.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1NetworkManager-config-server.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1******

[root@ntp yum.repos.d]# yum clean all已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.正在清理软件源: rhel7Cleaning up everything[root@ntp yum.repos.d]#[root@ntp yum.repos.d]# yum list all已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.rhel7 | 4.1 kB 00:00:00(1/2): rhel7/group_gz | 134 kB 00:00:00(2/2): rhel7/primary_db | 3.4 MB 00:00:00已安装的软件包NetworkManager.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1NetworkManager-config-server.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1NetworkManager-libnm.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1NetworkManager-team.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1NetworkManager-tui.x86_64 1:1.0.0-14.git20150121.b4ea599c.el7 @anaconda/7.1Red_Hat_Enterprise_Linux-Release_Notes-7-en-US.noarch 1.0-9.el7 @anaconda/7.1******

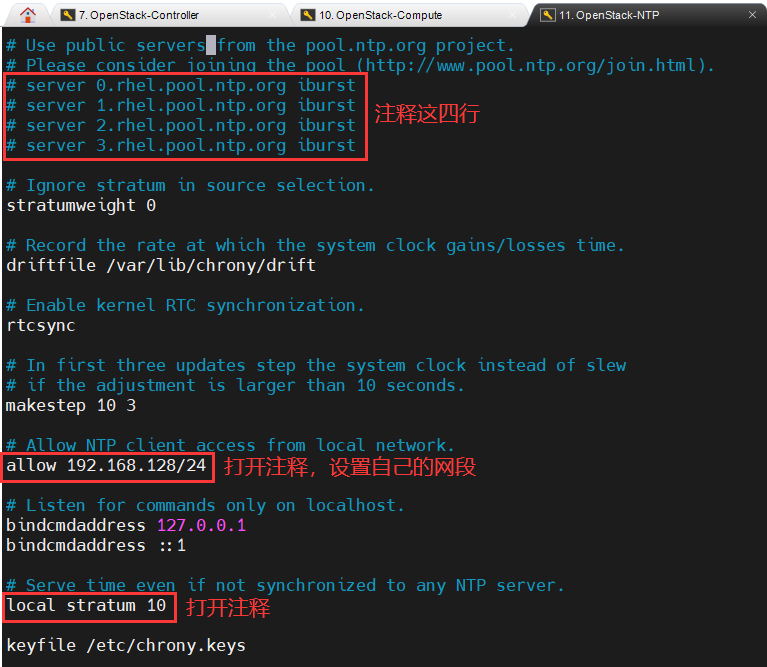

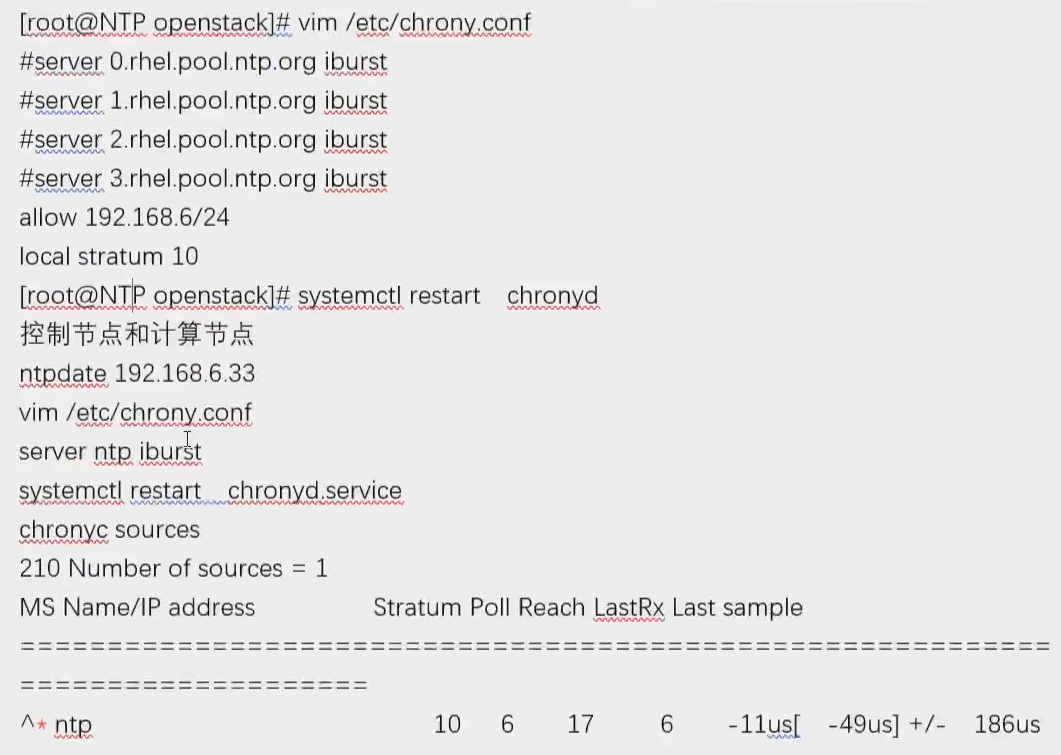

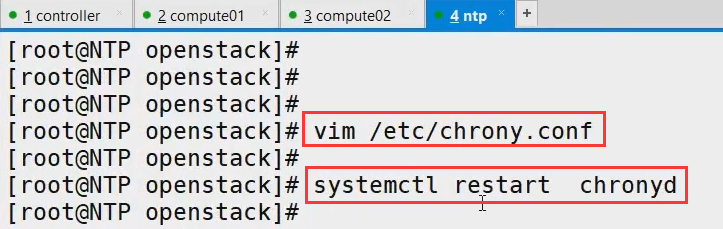

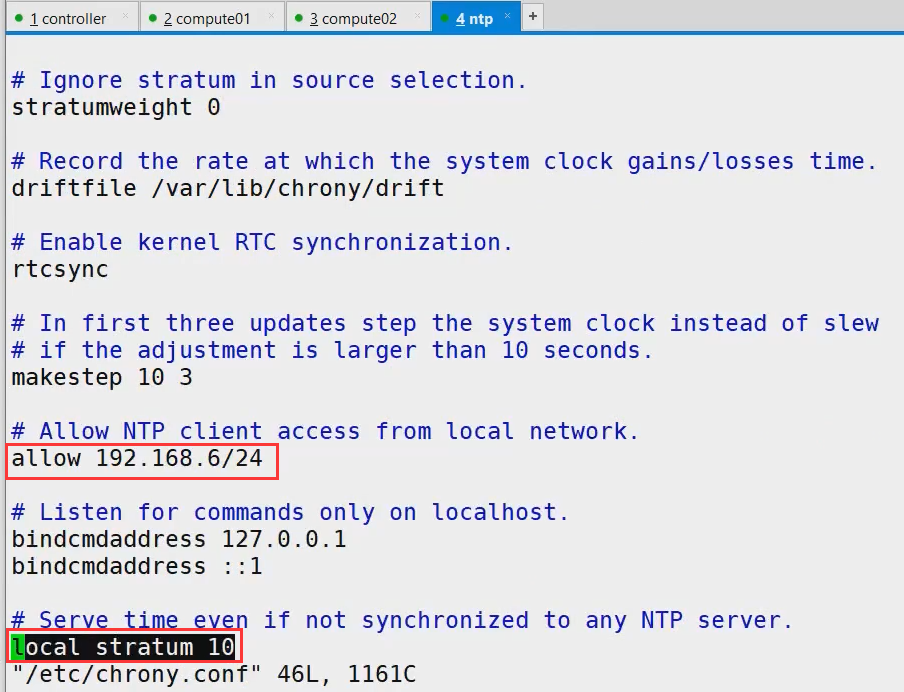

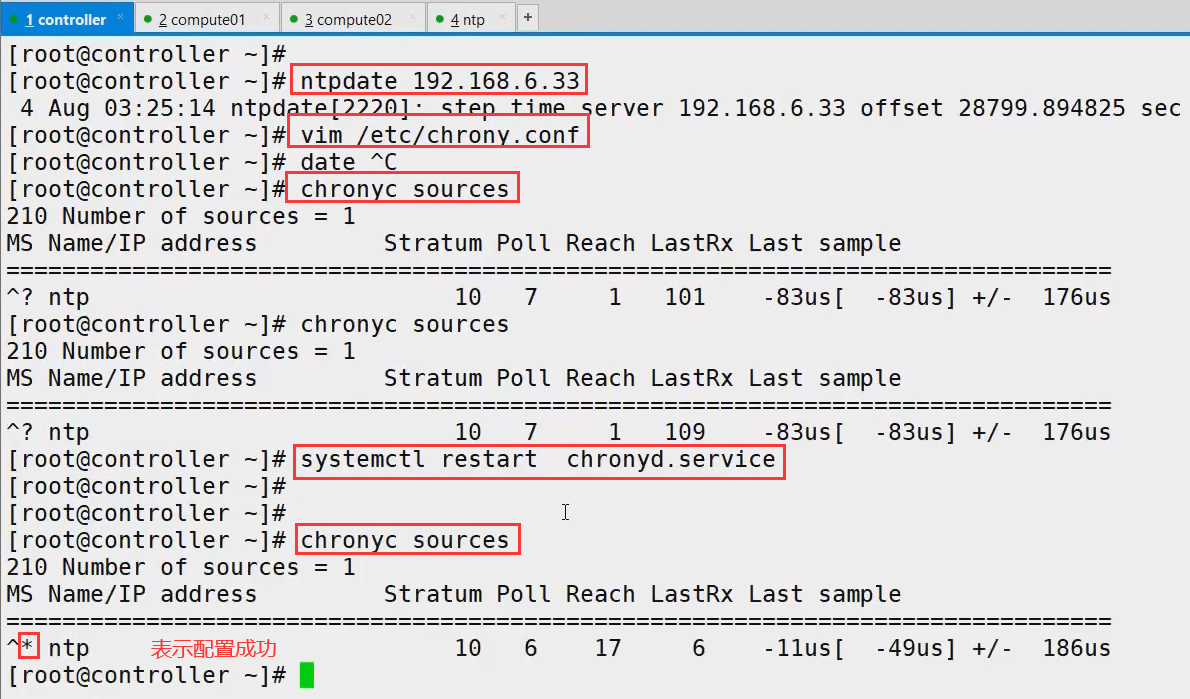

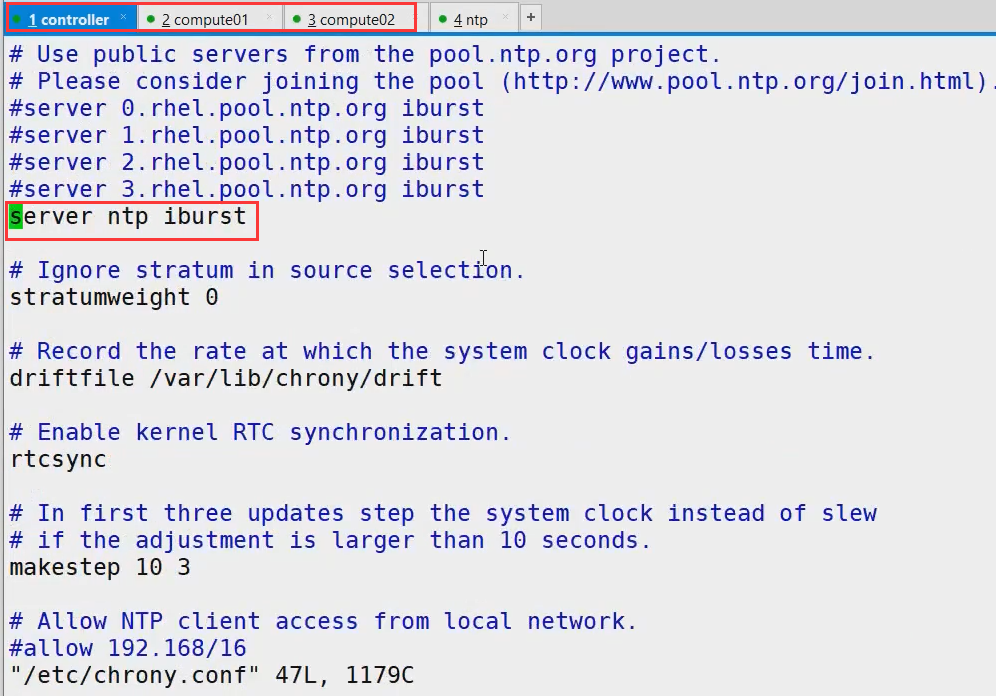

Configuring the time server

[root@ntp openstack]# vim /etc/chrony.conf[root@ntp openstack]#[root@ntp openstack]# cat /etc/chrony.conf# Use public servers from the pool.ntp.org project.# Please consider joining the pool (http://www.pool.ntp.org/join.html).# server 0.rhel.pool.ntp.org iburst# server 1.rhel.pool.ntp.org iburst# server 2.rhel.pool.ntp.org iburst# server 3.rhel.pool.ntp.org iburst# Ignore stratum in source selection.stratumweight 0# Record the rate at which the system clock gains/losses time.driftfile /var/lib/chrony/drift# Enable kernel RTC synchronization.rtcsync# In first three updates step the system clock instead of slew# if the adjustment is larger than 10 seconds.makestep 10 3# Allow NTP client access from local network.allow 192.168.128/24# Listen for commands only on localhost.bindcmdaddress 127.0.0.1bindcmdaddress ::1# Serve time even if not synchronized to any NTP server.local stratum 10keyfile /etc/chrony.keys# Specify the key used as password for chronyc.commandkey 1# Generate command key if missing.generatecommandkey# Disable logging of client accesses.noclientlog# Send a message to syslog if a clock adjustment is larger than 0.5 seconds.logchange 0.5logdir /var/log/chrony#log measurements statistics tracking[root@ntp openstack]#// 重启服务[root@ntp openstack]# systemctl restart chronyd[root@ntp openstack]#

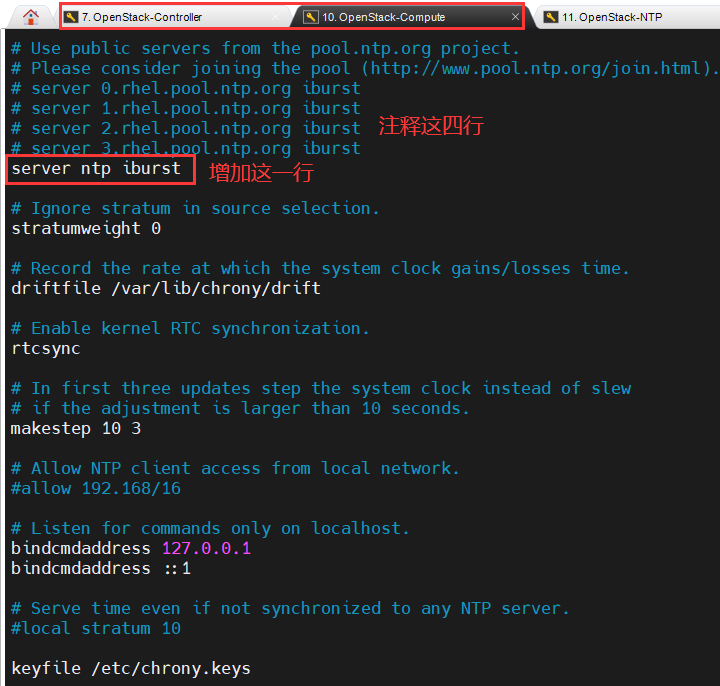

[root@compute ~]# ntpdate 192.168.128.3320 May 21:01:19 ntpdate[35491]: adjust time server 192.168.128.33 offset -0.437375 sec[root@compute ~]#[root@compute ~]#[root@compute ~]# vim /etc/chrony.conf[root@compute ~]#[root@compute ~]# cat /etc/chrony.conf# Use public servers from the pool.ntp.org project.# Please consider joining the pool (http://www.pool.ntp.org/join.html).# server 0.rhel.pool.ntp.org iburst# server 1.rhel.pool.ntp.org iburst# server 2.rhel.pool.ntp.org iburst# server 3.rhel.pool.ntp.org iburstserver ntp iburst# Ignore stratum in source selection.stratumweight 0# Record the rate at which the system clock gains/losses time.driftfile /var/lib/chrony/drift# Enable kernel RTC synchronization.rtcsync# In first three updates step the system clock instead of slew# if the adjustment is larger than 10 seconds.makestep 10 3# Allow NTP client access from local network.#allow 192.168/16# Listen for commands only on localhost.bindcmdaddress 127.0.0.1bindcmdaddress ::1# Serve time even if not synchronized to any NTP server.#local stratum 10keyfile /etc/chrony.keys# Specify the key used as password for chronyc.commandkey 1# Generate command key if missing.generatecommandkey# Disable logging of client accesses.noclientlog# Send a message to syslog if a clock adjustment is larger than 0.5 seconds.logchange 0.5logdir /var/log/chrony#log measurements statistics tracking[root@compute ~]#// 需要重启controller与compute节点的chronyd服务[root@controller ~]# systemctl restart chronyd.service[root@controller ~]#// ^* ntp此处的*号就表示controller与compute节点可以向ntp节点进行时间同步[root@controller ~]# chronyc sources210 Number of sources = 1MS Name/IP address Stratum Poll Reach LastRx Last sample===============================================================================^* ntp 10 6 17 9 -4546us[-4532us] +/- 9716us[root@controller ~]#
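The `^*` marker in the `chronyc sources` output is what shows that a node is actually synchronised to the ntp node. As a rough sketch, the check can be scripted; `selected_source` is a hypothetical helper, and the sample text is copied from the controller output above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `chronyc sources` output on stdin and print the
# name of the source currently selected for synchronisation (marked "^*").
# Returns non-zero when no source is selected.
selected_source() {
    awk '$1 == "^*" { print $2; found = 1 } END { exit !found }'
}

# Sample copied from the controller output above.
sample='210 Number of sources = 1
MS Name/IP address         Stratum Poll Reach LastRx Last sample
===============================================================================
^* ntp                          10   6    17     9  -4546us[-4532us] +/- 9716us'

if src=$(printf '%s\n' "$sample" | selected_source); then
    echo "synchronised to: $src"
else
    echo "no source selected yet"
fi
```

On a live node the same function would be fed with `chronyc sources | selected_source`.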

Configuring DNS on the controller node

[root@controller ~]# vim /etc/sysconfig/network-scripts/ifcfg-eno16777736[root@controller ~]#[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eno16777736TYPE=EthernetBOOTPROTO=noneDEFROUTE=yesIPV4_FAILURE_FATAL=noIPV6INIT=yesIPV6_AUTOCONF=yesIPV6_DEFROUTE=yesIPV6_FAILURE_FATAL=noNAME=eno16777736UUID=a2b5d887-44e8-4077-a582-5c97ff02b9d6DEVICE=eno16777736ONBOOT=yesIPADDR=192.168.128.11PREFIX=24GATEWAY=192.168.128.2DNS1=192.168.128.1IPV6_PEERDNS=yesIPV6_PEERROUTES=yesIPV6_PRIVACY=no[root@controller ~]#[root@controller ~]# cat /etc/resolv.conf# Generated by NetworkManager# 此处会去检测DNSnameserver 192.168.128.1[root@controller ~]#

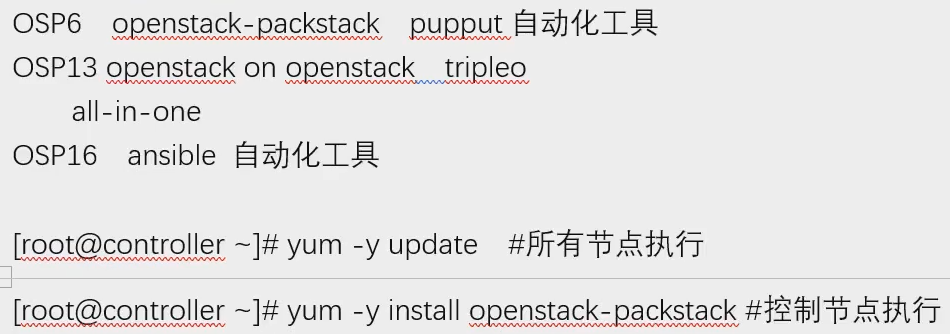

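Installing OpenStack

OSP 6 is Red Hat's downstream distribution of OpenStack. It ships the deployment tool openstack-packstack, which uses the Puppet automation tool underneath.

For OSP 13 the corresponding deployment tool is OpenStack on OpenStack (TripleO).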

// 在控制节点和计算节点上执行:yum -y update[root@controller ~]# yum -y update已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.正在解决依赖关系--> 正在检查事务---> 软件包 python-six.noarch.0.1.3.0-4.el7 将被 升级---> 软件包 python-six.noarch.0.1.7.3-1.el7 将被 更新--> 解决依赖关系完成******// 只在控制节点上执行:yum -y install openstack-packstack[root@controller ~]# yum -y install openstack-packstack已加载插件:langpacks, product-id, subscription-managerThis system is not registered to Red Hat Subscription Management. You can use subscription-manager to register.正在解决依赖关系--> 正在检查事务---> 软件包 openstack-packstack.noarch.0.2014.2-0.16.dev1401.gdd19d48.el7ost 将被 安装--> 正在处理依赖关系 openstack-packstack-puppet = 2014.2-0.16.dev1401.gdd19d48.el7ost,它被软件包 openstack-packstack-2014.2-0.16.dev1401.gdd19d48.el7ost.noarch 需要******

Generating and applying the answer file

[root@controller ~]# packstack --help | grep answer--gen-answer-file=GEN_ANSWER_FILEGenerate a template of an answer file, using this--answer-file=ANSWER_FILEanswerfile will also be generated and should be used-o, --options Print details on options available in answer file(rstsecond time with the same answer file and don't want// 第一步:生成应答文件[root@controller ~]# packstack --gen-answer-file=answer.txtPackstack changed given value to required value /root/.ssh/id_rsa.pub[root@controller ~]#[root@controller ~]# lsanaconda-ks.cfg answer.txt[root@controller ~]#[root@controller ~]# ll .ssh/总用量 12-rw-------. 1 root root 1679 5月 20 21:41 id_rsa-rw-r--r--. 1 root root 397 5月 20 21:41 id_rsa.pub-rw-r--r--. 1 root root 364 5月 20 19:14 known_hosts[root@controller ~]#// 第二步:编辑应答文件[root@controller ~]# vim answer.txtCONFIG_NTP_SERVERS=192.168.128.33 // 配置时间服务器,所以先前在控制和计算节点上可以不用配置server ntp iburstCONFIG_CONTROLLER_HOST=192.168.128.11 // 指定控制节点的IPCONFIG_COMPUTE_HOSTS=192.168.128.21 // 指定计算节点的IP,可以设置控制节点的ip复用为计算节点。多个计算节点IP用逗号分割。比如192.168.128.11,192.168.128.21CONFIG_NETWORK_HOSTS=192.168.128.11 // 网络组件配置到的节点CONFIG_HORIZON_SSL=y // y表示https、n表示httpCONFIG_PROVISION_DEMO=n // n表示不生成测试环境CONFIG_KEYSTONE_ADMIN_PW=redhat // 设置管理员admin的密码CONFIG_KEYSTONE_REGION=WuHan // 设置区域// 第三步:执行应答文件[root@controller ~]# packstack --answer-file=answer.txtWelcome to the Packstack setup utilityThe installation log file is available at: /var/tmp/packstack/20230520-215414-J8OuqX/openstack-setup.logInstalling:Clean Up [ DONE ]root@192.168.128.11's password:root@192.168.128.21's password:Setting up ssh keys [ DONE ]Discovering hosts' details [ DONE ]Adding pre install manifest entries [ DONE ]Installing time synchronization via NTP [ DONE ]Preparing servers [ DONE ]Adding AMQP manifest entries [ DONE ]Adding MariaDB manifest entries [ DONE ]****需要一段时间Finalizing [ DONE ]**** Installation completed successfully ******Additional information:* Warning: NetworkManager is active on 192.168.128.11, 192.168.128.21. 
OpenStack networking currently does not work on systems that have the Network Manager service enabled.* File /root/keystonerc_admin has been created on OpenStack client host 192.168.128.11. To use the command line tools you need to source the file.* NOTE : A certificate was generated to be used for ssl, You should change the ssl certificate configured in /etc/httpd/conf.d/ssl.conf on 192.168.128.11 to use a CA signed cert.* To access the OpenStack Dashboard browse to https://192.168.128.11/dashboard .Please, find your login credentials stored in the keystonerc_admin in your home directory.* To use Nagios, browse to http://192.168.128.11/nagios username: nagiosadmin, password: a2c4942210ca422f* The installation log file is available at: /var/tmp/packstack/20230520-215414-J8OuqX/openstack-setup.log* The generated manifests are available at: /var/tmp/packstack/20230520-215414-J8OuqX/manifests// 可以在计算节点上查看安装日志[root@compute ~]# tail -f /var/log/messagesMay 20 22:02:54 compute systemd-sysctl: Overwriting earlier assignment of net/bridge/bridge-nf-call-arptables in file '/etc/sysctl.d/99-sysctl.conf'.May 20 22:02:54 compute NetworkManager[1162]: <info> (br-tun): carrier is OFF (but ignored)May 20 22:02:54 compute NetworkManager[1162]: <info> (br-tun): new Generic device (driver: 'openvswitch' ifindex: 8)May 20 22:02:54 compute NetworkManager[1162]: <info> (br-tun): exported as /org/freedesktop/NetworkManager/Devices/7May 20 22:02:54 compute ovs-vsctl: ovs|00001|vsctl|INFO|Called as /bin/ovs-vsctl --timeout=10 add-port br-int patch-tun -- set Interface patch-tun type=patch options:peer=patch-intMay 20 22:02:54 compute ovs-vsctl: ovs|00001|vsctl|INFO|Called as /bin/ovs-vsctl --timeout=10 add-port br-tun patch-int -- set Interface patch-int type=patch options:peer=patch-tunMay 20 22:02:57 compute systemd-logind: New session 114 of user root.May 20 22:02:57 compute systemd: Starting Session 114 of user root.May 20 22:02:57 compute systemd: Started Session 114 of user root.May 
20 22:02:57 compute systemd-logind: Removed session 114.********// 访问https://192.168.128.11/dashboard,用户名:admin、密码:redhat
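The answer-file edits in step 2 are plain `KEY=VALUE` replacements (the `//` notes in the transcript read as the author's annotations, not as file content). A minimal sketch of applying them non-interactively follows; `set_answer` is a hypothetical helper, and the three starting values in the scratch file are placeholders, not packstack's real defaults.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set KEY=VALUE in a packstack answer file by replacing
# the existing line for KEY. Assumes the value contains no "|", "&" or "\".
# Uses GNU sed's -i option.
set_answer() {
    local file=$1 key=$2 value=$3
    sed -i "s|^${key}=.*|${key}=${value}|" "$file"
}

# Scratch file standing in for answer.txt (placeholder starting values).
answer=$(mktemp)
printf '%s\n' 'CONFIG_NTP_SERVERS=' 'CONFIG_HORIZON_SSL=n' 'CONFIG_PROVISION_DEMO=y' > "$answer"

set_answer "$answer" CONFIG_NTP_SERVERS 192.168.128.33
set_answer "$answer" CONFIG_HORIZON_SSL y
set_answer "$answer" CONFIG_PROVISION_DEMO n
cat "$answer"
```

Against the real file the calls would take `answer.txt` as the first argument, followed by `packstack --answer-file=answer.txt` as in step 3.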

[root@controller ~]# ll总用量 32-rw-------. 1 root root 1312 5月 20 17:50 anaconda-ks.cfg-rw-------. 1 root root 24239 5月 20 21:52 answer.txt-rw-------. 1 root root 202 5月 20 22:03 keystonerc_admin[root@controller ~]#// 查看用户名与密码[root@controller ~]# cat keystonerc_adminexport OS_USERNAME=adminexport OS_TENANT_NAME=adminexport OS_PASSWORD=redhatexport OS_AUTH_URL=http://192.168.128.11:5000/v2.0/export OS_REGION_NAME=WuHanexport PS1='[\u@\h \W(keystone_admin)]\$ '[root@controller ~]#
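Packstack notes that `keystonerc_admin` has to be sourced before the command line tools can be used. The sketch below shows the mechanism on a scratch copy of the file: `show_credentials` is a hypothetical helper that sources the file in a subshell, so the calling shell's environment stays untouched.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print selected OS_* variables from a keystonerc file.
# The file is sourced inside a subshell, so nothing leaks into the caller.
show_credentials() {
    ( . "$1" && printf '%s@%s region=%s\n' "$OS_USERNAME" "$OS_AUTH_URL" "$OS_REGION_NAME" )
}

# Scratch copy of the exports shown above (PS1 line left out).
rc=$(mktemp)
cat > "$rc" <<'EOF'
export OS_USERNAME=admin
export OS_TENANT_NAME=admin
export OS_PASSWORD=redhat
export OS_AUTH_URL=http://192.168.128.11:5000/v2.0/
export OS_REGION_NAME=WuHan
EOF

show_credentials "$rc"
```

For interactive work the file is sourced directly (`source ~/keystonerc_admin`), which also switches the prompt to the `(keystone_admin)` form defined by its `PS1` line.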

Configuring OpenStack

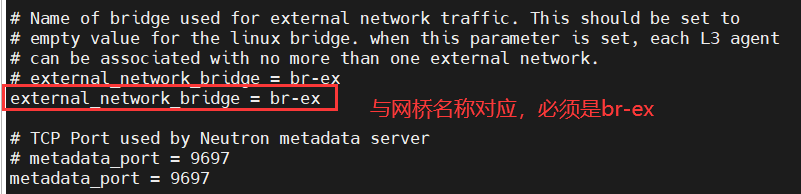

1. Configure the OVS bridge

[root@controller ~]# vim answer.txtCONFIG_NETWORK_HOSTS=192.168.128.11 // 网络组件配置到的节点// 在网络节点上配置OVS桥[root@controller network-scripts]# cd /etc/sysconfig/network-scripts[root@controller network-scripts]#[root@controller network-scripts]# cat ifcfg-eno16777736TYPE=EthernetBOOTPROTO=noneDEFROUTE=yesIPV4_FAILURE_FATAL=noIPV6INIT=yesIPV6_AUTOCONF=yesIPV6_DEFROUTE=yesIPV6_FAILURE_FATAL=noNAME=eno16777736UUID=a2b5d887-44e8-4077-a582-5c97ff02b9d6DEVICE=eno16777736ONBOOT=yesIPADDR=192.168.128.11PREFIX=24GATEWAY=192.168.128.2DNS1=192.168.128.1IPV6_PEERDNS=yesIPV6_PEERROUTES=yesIPV6_PRIVACY=no# 文件名必须是ifcfg-br-ex[root@controller network-scripts]# vim ifcfg-br-ex[root@controller network-scripts]#[root@controller network-scripts]# cat ifcfg-br-exTYPE=OVSBridgeBOOTPROTO=none# NAME必须等于br-ex,在/etc/neutron/l3_agent.ini文件中的external_network_bridge = br-ex对应NAME=br-ex# 网卡名称DEVICE=br-ex# 开机启动ONBOOT=yes# 将ip地址配置在桥上IPADDR=192.168.128.11PREFIX=24GATEWAY=192.168.128.2DNS1=192.168.128.1DEVICETYPE=ovs[root@controller network-scripts]#

2. Configure the physical NIC

[root@controller network-scripts]# cd /etc/sysconfig/network-scripts[root@controller network-scripts]#// 原物理网卡数据[root@controller network-scripts]# cat ifcfg-eno16777736TYPE=EthernetBOOTPROTO=noneDEFROUTE=yesIPV4_FAILURE_FATAL=noIPV6INIT=yesIPV6_AUTOCONF=yesIPV6_DEFROUTE=yesIPV6_FAILURE_FATAL=noNAME=eno16777736UUID=a2b5d887-44e8-4077-a582-5c97ff02b9d6DEVICE=eno16777736ONBOOT=yesIPADDR=192.168.128.11PREFIX=24GATEWAY=192.168.128.2DNS1=192.168.128.1IPV6_PEERDNS=yesIPV6_PEERROUTES=yesIPV6_PRIVACY=no[root@controller openvswitch]# cd /etc/sysconfig/network-scripts[root@controller network-scripts]#[root@controller network-scripts]# vim ifcfg-eno16777736[root@controller network-scripts]#// 新物理网卡数据,也要连接到OVS桥上(br-ex)[root@controller network-scripts]# cat ifcfg-eno16777736TYPE=OVSPortNAME=eno16777736DEVICE=eno16777736ONBOOT=yesDEVICETYPE=ovsOVS_BRIDGE=br-ex// 重启网卡[root@controller network-scripts]# systemctl restart network[root@controller network-scripts]#// 若重启网卡失败,则需要关闭NetworkManager服务[root@controller ~]# systemctl status NetworkManagerNetworkManager.service - Network ManagerLoaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled)Active: inactive (dead) since 六 2023-05-20 23:10:19 CST; 9min agoMain PID: 1105 (code=exited, status=0/SUCCESS)CGroup: /system.slice/NetworkManager.service5月 20 23:10:18 controller NetworkManager[1105]: <info> caught signal 15, shutting down normally.5月 20 23:10:18 controller NetworkManager[1105]: <info> (eno16777736): device state change: disconnected -> unmanaged (reason 'removed') [30 10 36]5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno16777736): enslaved to non-master-type device ovs-system; ignoring5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno16777736): link disconnected5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno16777736): enslaved to non-master-type device ovs-system; ignoring5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno16777736): enslaved to non-master-type device 
ovs-system; ignoring5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno33554960): device state change: disconnected -> unmanaged (reason 'removed') [30 10 36]5月 20 23:10:19 controller NetworkManager[1105]: <info> (eno33554960): link disconnected5月 20 23:10:19 controller NetworkManager[1105]: <info> exiting (success)5月 20 23:10:19 controller systemd[1]: Stopped Network Manager.[root@controller ~]#[root@controller ~]# systemctl disable NetworkManager[root@controller ~]# systemctl stop NetworkManager注意点:关闭NetworkManager后,需要再次重启网卡systemctl restart network
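The two ifcfg files from steps 1 and 2 belong together: the IP settings move to the bridge, and the physical NIC becomes a plain OVS port on it. As a sketch under that reading, both files can be produced in one go; `write_ovs_ifcfg` is a hypothetical helper, and the demo writes into a scratch directory, not `/etc/sysconfig/network-scripts`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the OVS bridge file and the matching port file.
# Arguments: dir, bridge, NIC, IP address, prefix, gateway, DNS server.
write_ovs_ifcfg() {
    local dir=$1 bridge=$2 nic=$3 ip=$4 prefix=$5 gw=$6 dns=$7
    # The bridge carries the IP configuration.
    cat > "${dir}/ifcfg-${bridge}" <<EOF
TYPE=OVSBridge
BOOTPROTO=none
DEVICE=${bridge}
ONBOOT=yes
IPADDR=${ip}
PREFIX=${prefix}
GATEWAY=${gw}
DNS1=${dns}
DEVICETYPE=ovs
EOF
    # The physical NIC keeps no address and is attached to the bridge.
    cat > "${dir}/ifcfg-${nic}" <<EOF
TYPE=OVSPort
NAME=${nic}
DEVICE=${nic}
ONBOOT=yes
DEVICETYPE=ovs
OVS_BRIDGE=${bridge}
EOF
}

out=$(mktemp -d)
write_ovs_ifcfg "$out" br-ex eno16777736 192.168.128.11 24 192.168.128.2 192.168.128.1
grep -H . "${out}"/ifcfg-*
```

After copying the files into place, `systemctl restart network` applies them, with NetworkManager stopped and disabled as described above.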

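A bridge built on a physical NIC behaves like a virtual switch: one physical NIC can be turned into a virtual switch with virtual NICs attached to it.

OVS offers more features for centralized management and control, and is widely used on cloud platforms.

Linux Bridge is known for its stability and is suitable for large-scale network deployments.

br-ex: the external bridge, that is, the one facing the Internet.

br-ex is an OVS-type switch.

Instances reach the external network through br-ex.

Path of outbound traffic:

VM1 → DVS → uplink → physical NIC → physical switch → server port on the network node → uplink → virtual switch → vrouter → br-ex → external network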

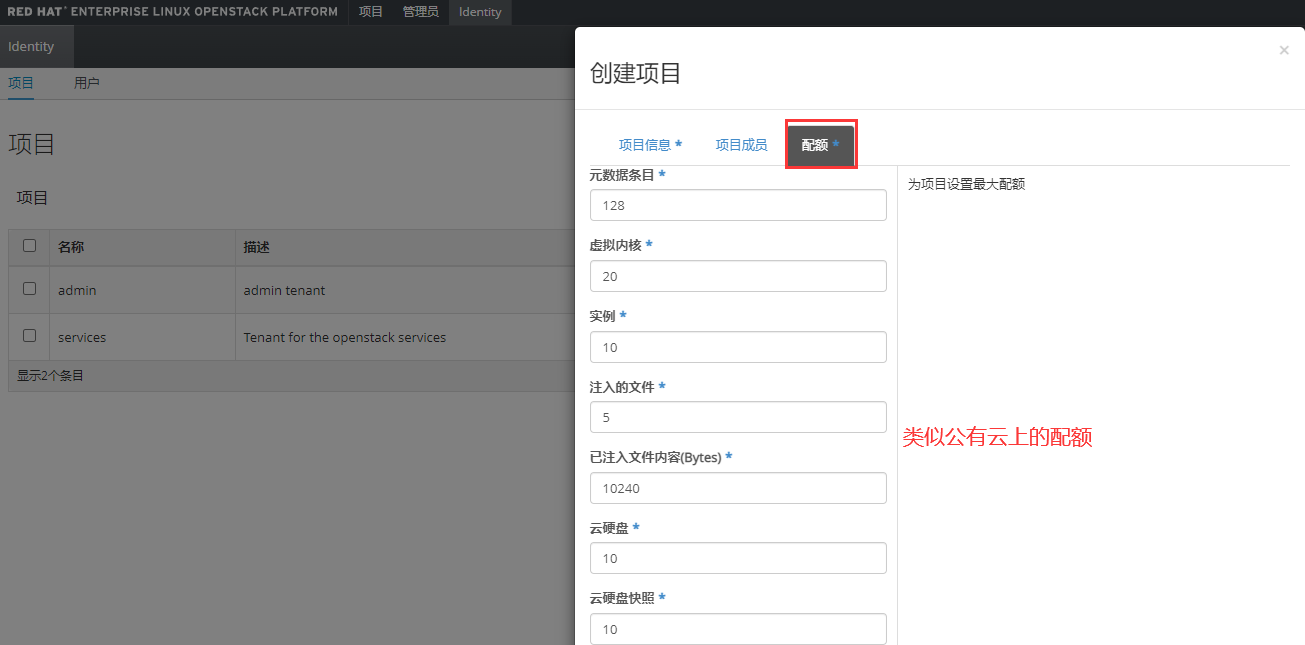

Operating OpenStack through the dashboard

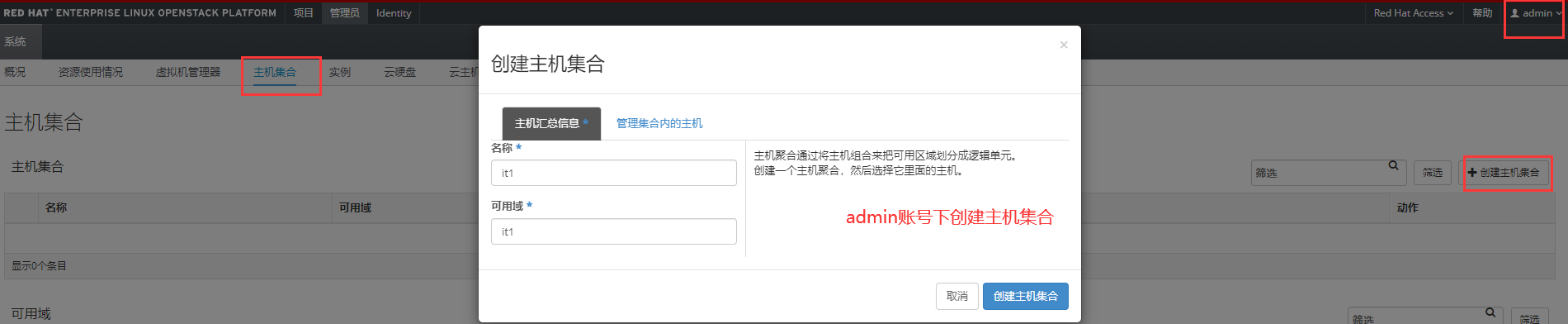

Steps performed as the admin user:

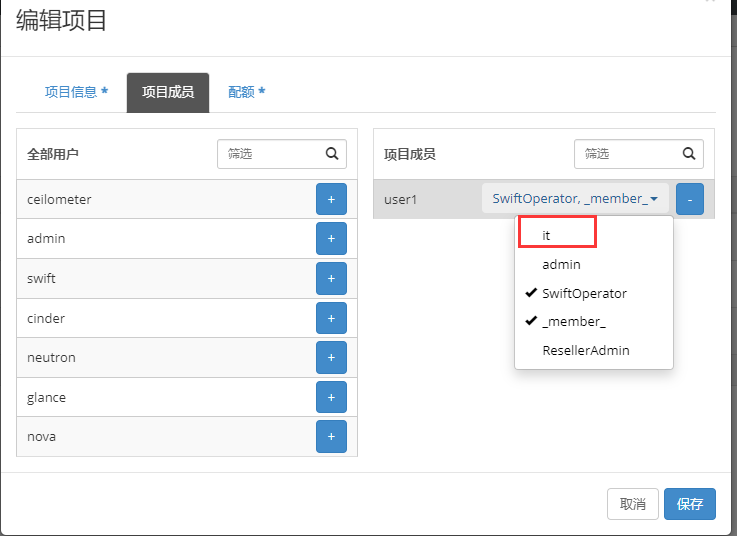

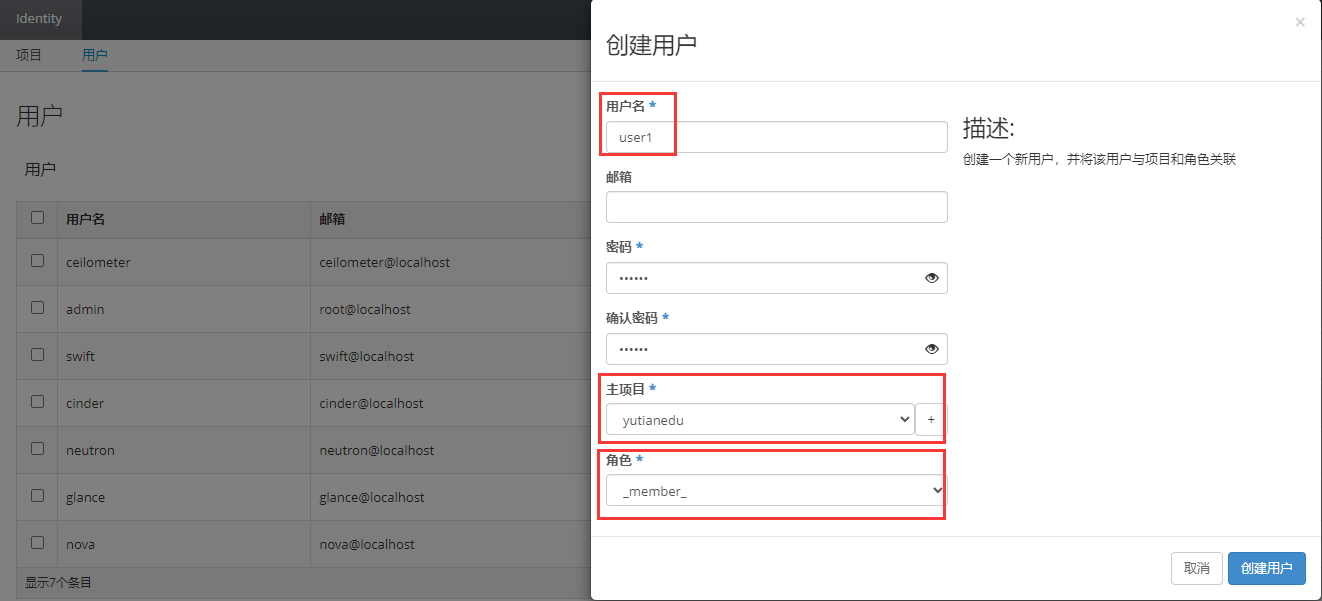

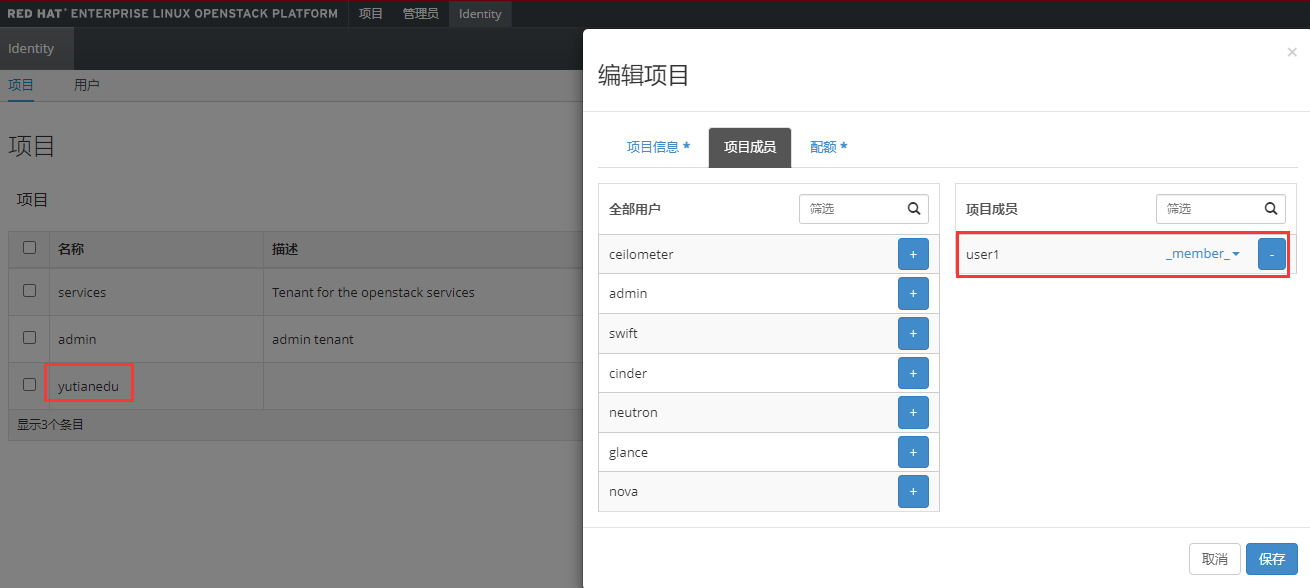

- Create a tenant

- Create a user, then associate the user with the tenant

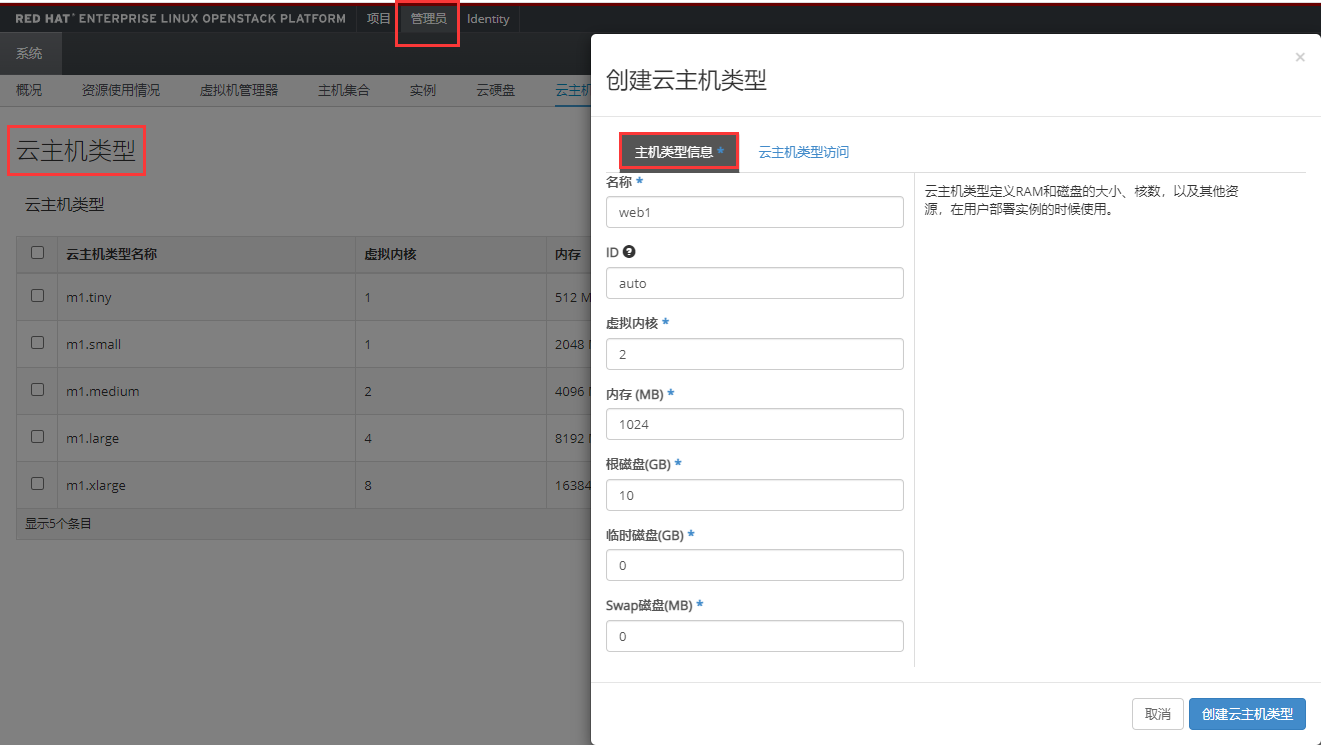

- Create a flavor: 2 vCPUs, 1024 MB RAM, 10 GB root disk

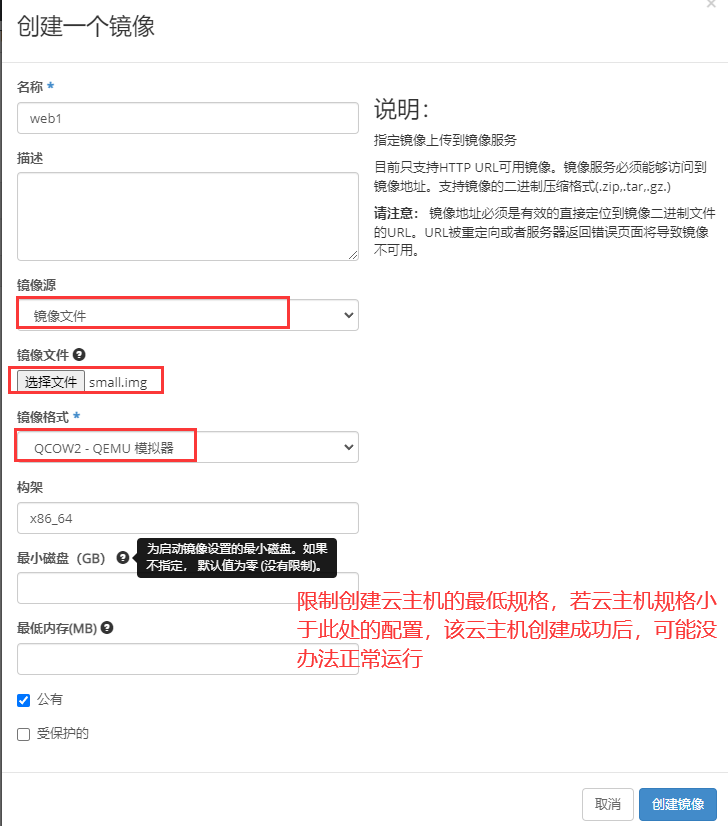

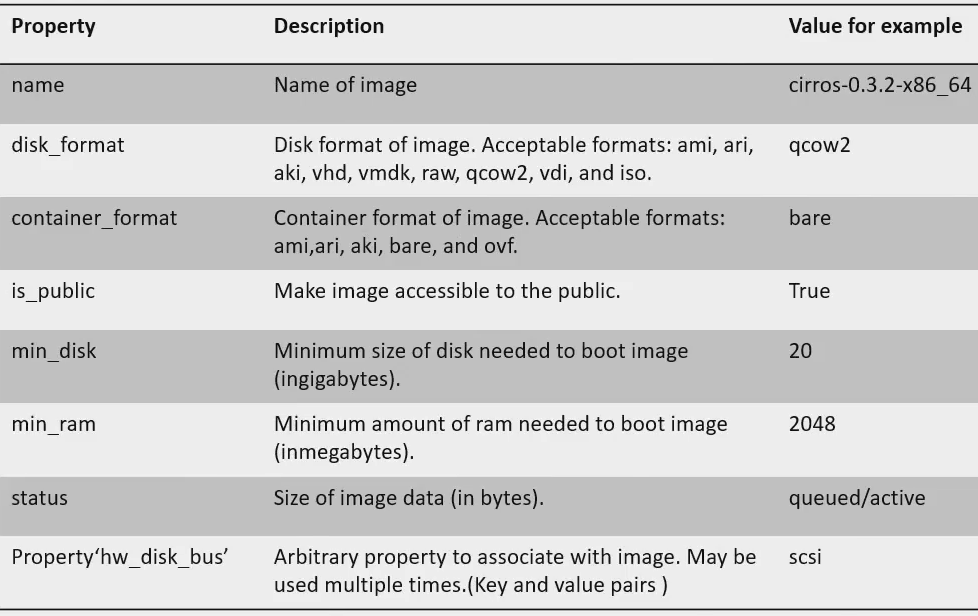

- Upload an image and mark it as public. Either upload a local image file or use a CirrOS image from http://download.cirros-cloud.net/

- Create the external network (public). It plays the role of the elastic IP (floating IP) pool on a public cloud, so that instances can reach the Internet.

```shell
[root@controller ~]# cd /etc/neutron/plugins/openvswitch/
[root@controller openvswitch]# cat ovs_neutron_plugin.ini

[root@controller ml2]# cd /etc/neutron/plugins/ml2
[root@controller ml2]# cat ml2_conf.ini
[ml2]
# (ListOpt) List of network type driver entrypoints to be loaded from
# the neutron.ml2.type_drivers namespace.
#
# type_drivers = local,flat,vlan,gre,vxlan
// Several drivers may be listed, separated by commas
type_drivers = vxlan
# Example: type_drivers = flat,vlan,gre,vxlan

# (ListOpt) Ordered list of network_types to allocate as tenant
# networks. The default value 'local' is useful for single-box testing
# but provides no connectivity between hosts.
#
# tenant_network_types = local
// Tenant network type; this deployment defaults to vxlan
tenant_network_types = vxlan
# Example: tenant_network_types = vlan,gre,vxlan

# (ListOpt) Ordered list of networking mechanism driver entrypoints
# to be loaded from the neutron.ml2.mechanism_drivers namespace.
# mechanism_drivers =
mechanism_drivers =openvswitch
# Example: mechanism_drivers = openvswitch,mlnx
# Example: mechanism_drivers = arista
# Example: mechanism_drivers = cisco,logger
# Example: mechanism_drivers = openvswitch,brocade
# Example: mechanism_drivers = linuxbridge,brocade

# (ListOpt) Ordered list of extension driver entrypoints
# to be loaded from the neutron.ml2.extension_drivers namespace.
# extension_drivers =
# Example: extension_drivers = anewextensiondriver

[ml2_type_flat]
# (ListOpt) List of physical_network names with which flat networks
# can be created. Use * to allow flat networks with arbitrary
# physical_network names.
#
# flat_networks =
# Example:flat_networks = physnet1,physnet2
# Example:flat_networks = *

[ml2_type_vlan]
# (ListOpt) List of <physical_network>[:<vlan_min>:<vlan_max>] tuples
# specifying physical_network names usable for VLAN provider and
# tenant networks, as well as ranges of VLAN tags on each
# physical_network available for allocation as tenant networks.
#
# network_vlan_ranges =
# Example: network_vlan_ranges = physnet1:1000:2999,physnet2

[ml2_type_gre]
# (ListOpt) Comma-separated list of <tun_min>:<tun_max> tuples enumerating ranges of GRE tunnel IDs that are available for tenant network allocation
# tunnel_id_ranges =

[ml2_type_vxlan]
# (ListOpt) Comma-separated list of <vni_min>:<vni_max> tuples enumerating
# ranges of VXLAN VNI IDs that are available for tenant network allocation.
#
# vni_ranges =
vni_ranges =10:100

# (StrOpt) Multicast group for the VXLAN interface. When configured, will
# enable sending all broadcast traffic to this multicast group. When left
# unconfigured, will disable multicast VXLAN mode.
#
# vxlan_group =
vxlan_group =224.0.0.1
# Example: vxlan_group = 239.1.1.1

[securitygroup]
# Controls if neutron security group is enabled or not.
# It should be false when you use nova security group.
# enable_security_group = True
enable_security_group = True

# Use ipset to speed-up the iptables security groups. Enabling ipset support
# requires that ipset is installed on L2 agent node.
enable_ipset = True
[root@controller ml2]#
```
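Because the file mixes commented-out defaults with the active settings, it is easy to misread which value is in effect. The sketch below pulls out only the active value of a key; `ini_get` is a hypothetical helper, and the scratch file is a short excerpt of the settings shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the active value of KEY in [SECTION] of an ini
# file, skipping commented-out defaults. Usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="[$2]" -v key="$3" '
        /^\[/ { in_section = ($0 == section); next }
        in_section && /^[^#]/ {
            split($0, kv, "=")
            name = kv[1]; gsub(/[ \t]/, "", name)
            if (name == key) {
                value = substr($0, index($0, "=") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", value)
                print value
            }
        }' "$1"
}

# Scratch excerpt of ml2_conf.ini with the values shown above.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[ml2]
# type_drivers = local,flat,vlan,gre,vxlan
type_drivers = vxlan
tenant_network_types = vxlan
mechanism_drivers =openvswitch
[ml2_type_vxlan]
vni_ranges =10:100
vxlan_group =224.0.0.1
EOF

ini_get "$conf" ml2 tenant_network_types
ini_get "$conf" ml2_type_vxlan vni_ranges
```

On the controller the first argument would be `/etc/neutron/plugins/ml2/ml2_conf.ini`.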

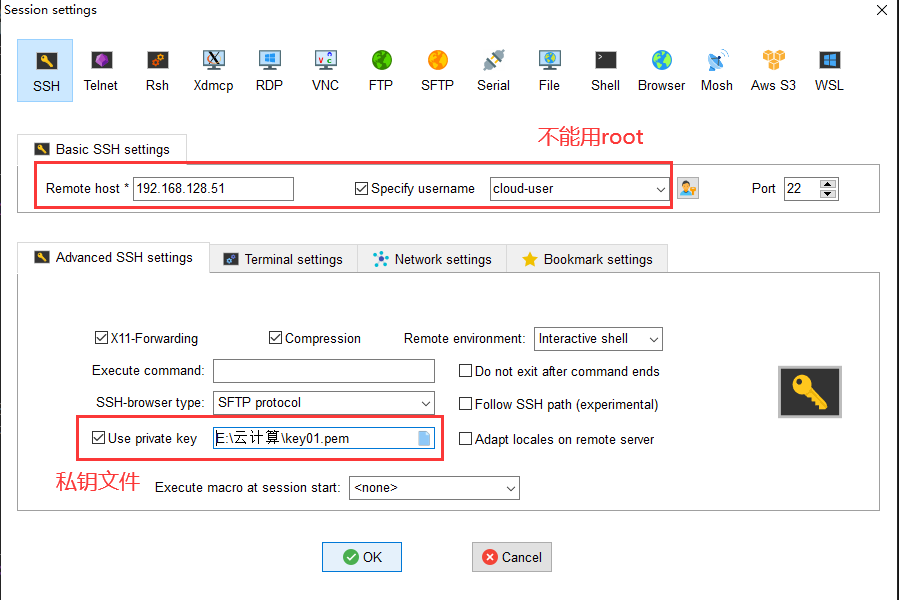

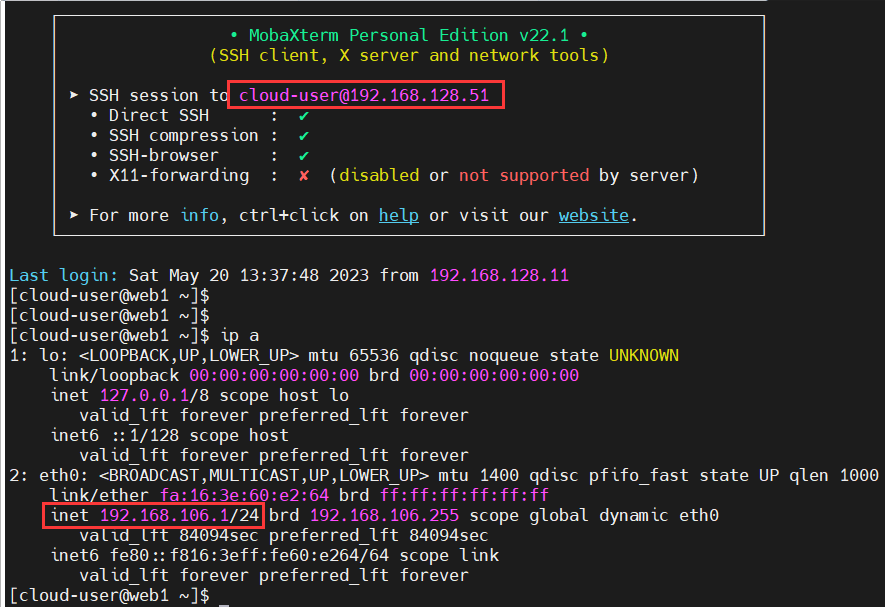

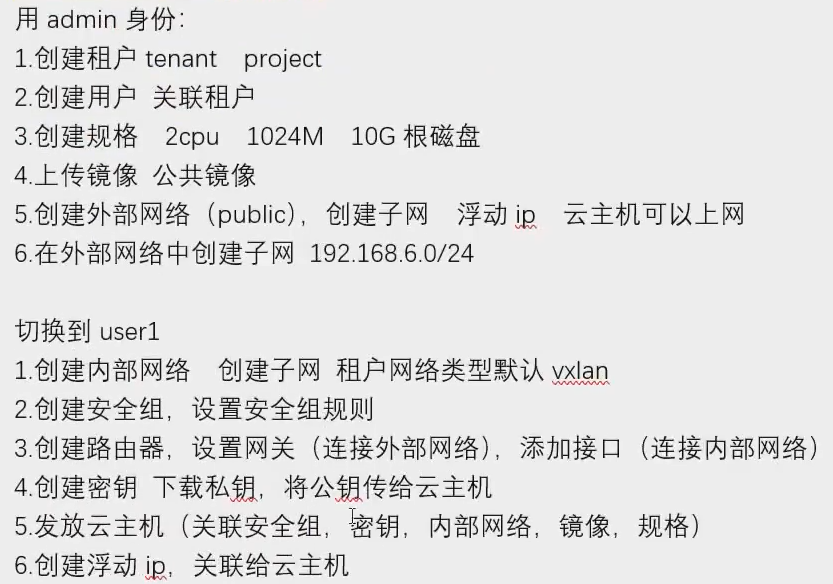

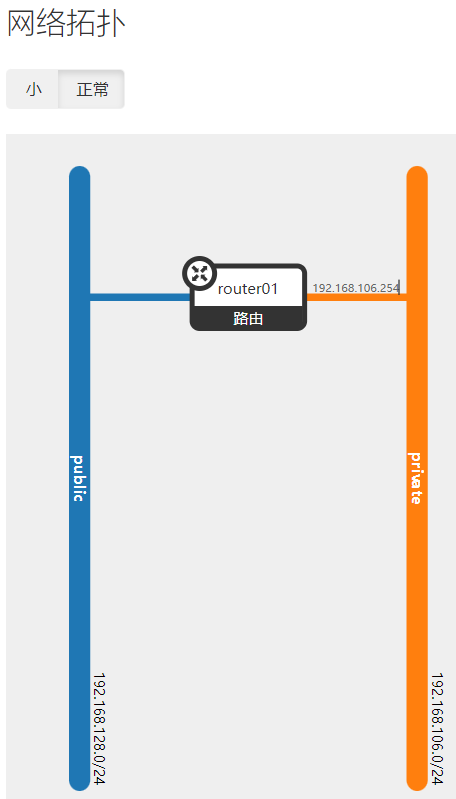

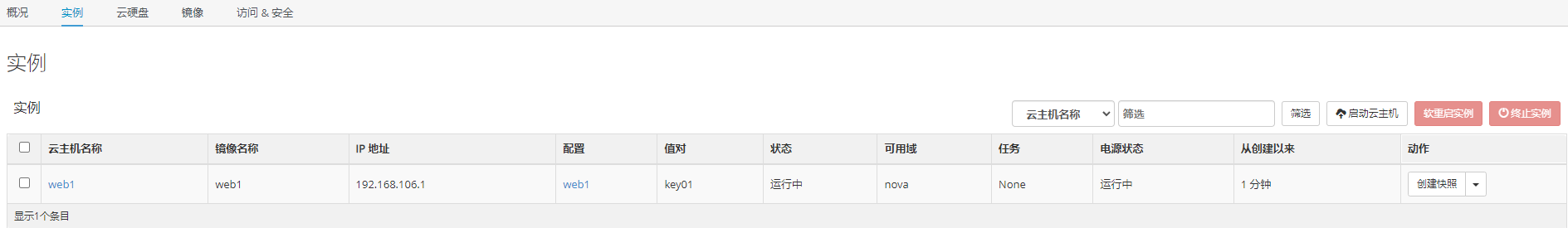

- The 192.168.128.0/24 segment is used to simulate elastic IPs.

- Create a subnet on the external network above: 192.168.128.0/24

Steps performed as the regular user user1 (password 123456):

1. Create an internal network. The tenant network type defaults to vxlan and cannot be chosen here.
2. Create a router, set its gateway (connects to the external network), and add an interface (connects to the internal network).
3. Create a key pair and download the private key. The private key stays on the client; the public key is passed to the instance.
4. Create a security group and define its rules.
5. Allocate a floating IP. This is comparable to buying an elastic IP on a public cloud and binding it to an instance.
6. Launch an instance, similar to creating an Elastic Cloud Server (ECS) on a public cloud.
7. Test logging in to the instance:

```shell
// Note 1: when no key is given explicitly, ssh uses the key in .ssh/id_rsa
[root@controller ~]# ssh root@192.168.128.51
The authenticity of host '192.168.128.51 (192.168.128.51)' can't be established.
ECDSA key fingerprint is 44:bb:59:5b:53:3e:f3:e5:aa:e1:ce:58:9e:f9:b3:ca.
Are you sure you want to continue connecting (yes/no)? yes
Warning: Permanently added '192.168.128.51' (ECDSA) to the list of known hosts.
Permission denied (publickey,gssapi-keyex,gssapi-with-mic).
[root@controller ~]# ls .ssh/id_rsa
.ssh/id_rsa
[root@controller ~]# ls
anaconda-ks.cfg  answer.txt  key01.pem  keystonerc_admin

// Note 2: the permissions on key01.pem must not be too open
[root@controller ~]# ssh -i key01.pem root@192.168.128.51
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
WARNING: UNPROTECTED PRIVATE KEY FILE!
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
Permissions 0644 for 'key01.pem' are too open.
It is required that your private key files are NOT accessible by others.
This private key will be ignored.
bad permissions: ignore key: key01.pem
Permission denied (publickey,gssapi-keyex,gssapi-with-mic).
[root@controller ~]# chmod 400 key01.pem

// Note 3: direct root login is refused; connect as cloud-user instead
[root@controller ~]# ssh -i key01.pem root@192.168.128.51
Please login as the user "cloud-user" rather than the user "root".
^CConnection to 192.168.128.51 closed.
[root@controller ~]# ssh -i key01.pem cloud-user@192.168.128.51
[cloud-user@web1 ~]$

// Once logged in, switch to root; its password is redhat
[cloud-user@web1 ~]$ su - root
密码:
su: 鉴定故障
[cloud-user@web1 ~]$ su - root
密码:
上一次登录:六 5月 20 13:37:26 EDT 2023从 192.168.128.11pts/0 上
最后一次失败的登录:六 5月 20 13:38:25 EDT 2023pts/0 上
最有一次成功登录后有 1 次失败的登录尝试。
[root@web1 ~]# exit
logout
[cloud-user@web1 ~]$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc pfifo_fast state UP qlen 1000
    link/ether fa:16:3e:60:e2:64 brd ff:ff:ff:ff:ff:ff
    inet 192.168.106.1/24 brd 192.168.106.255 scope global dynamic eth0
       valid_lft 84872sec preferred_lft 84872sec
    inet6 fe80::f816:3eff:fe60:e264/64 scope link
       valid_lft forever preferred_lft forever
[cloud-user@web1 ~]$
```
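The second pitfall above (a private key with mode 0644 is ignored by ssh) can be caught before connecting. A minimal sketch, assuming GNU `stat` as shipped with RHEL; `secure_key` is a hypothetical helper and the demo uses a scratch file in place of key01.pem.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure a private key is accessible by its owner only.
# Relies on GNU `stat -c %a` to read the octal mode.
secure_key() {
    local key=$1 mode
    mode=$(stat -c %a "$key")
    if [ "$mode" != "400" ] && [ "$mode" != "600" ]; then
        echo "fixing mode ${mode} on ${key}"
        chmod 400 "$key"
    fi
}

# Scratch file standing in for key01.pem, deliberately too open.
key=$(mktemp)
chmod 644 "$key"
secure_key "$key"
stat -c %a "$key"
```

With the real key this would be `secure_key key01.pem` followed by `ssh -i key01.pem cloud-user@192.168.128.51`.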

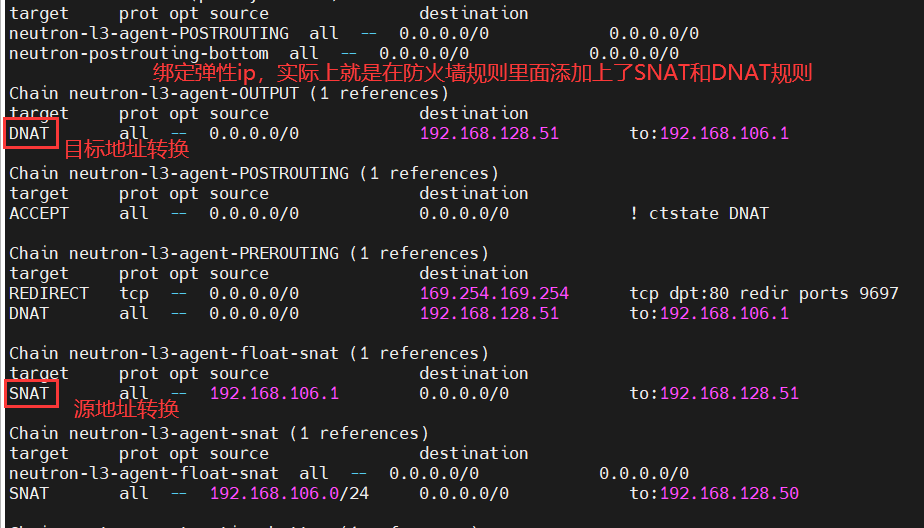

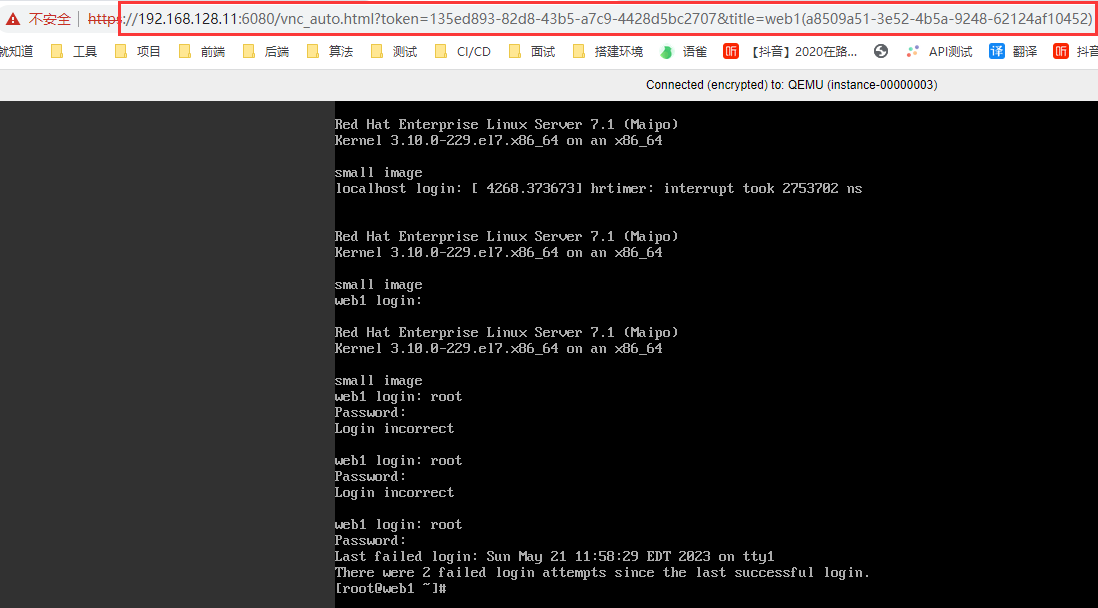

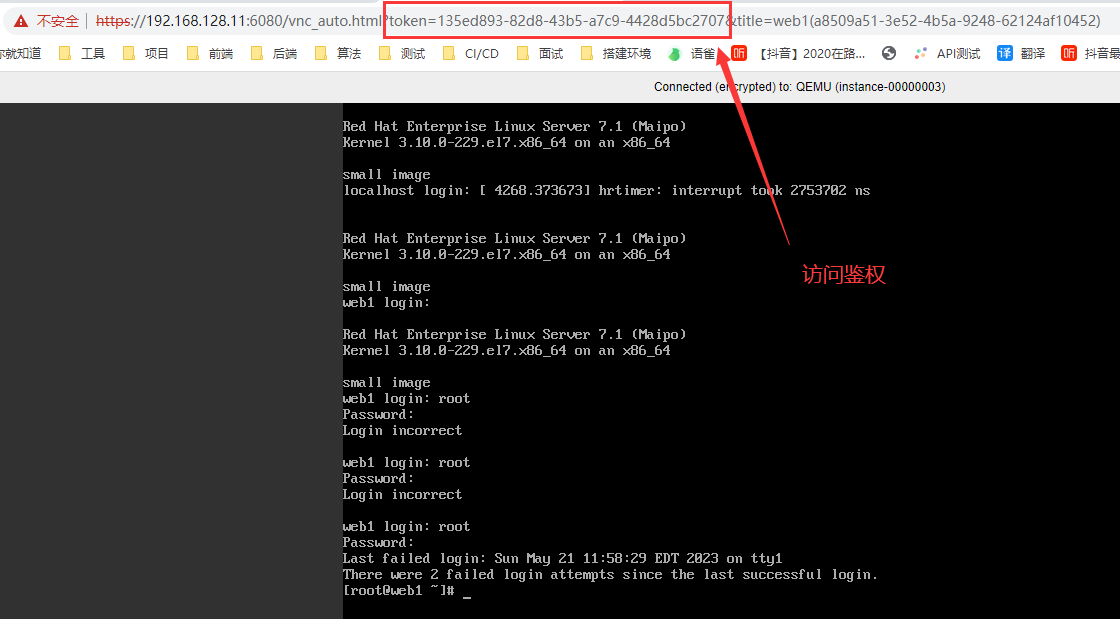

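Logging in to the instance from the local machine:

Because a floating IP (elastic IP) is bound to it, the instance is reachable from outside.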

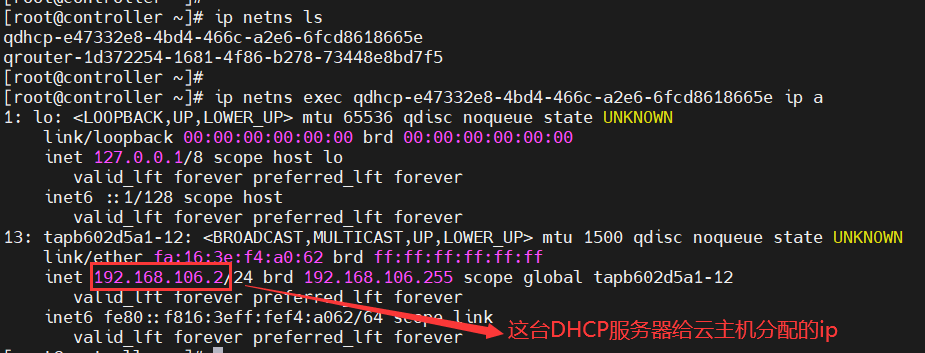

- Inspect the virtual router's rules and IP addresses

# 查看路由器配置[root@controller ~]# ip netns lsqdhcp-e47332e8-4bd4-466c-a2e6-6fcd8618665e# 虚拟路由器qrouter-1d372254-1681-4f86-b278-73448e8bd7f5[root@controller ~]## 查看路由器配置,在尾部加上执行命令,比如ip a[root@controller ~]# ip netns exec qrouter-1d372254-1681-4f86-b278-73448e8bd7f5 ip a1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWNlink/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00inet 127.0.0.1/8 scope host lovalid_lft forever preferred_lft foreverinet6 ::1/128 scope hostvalid_lft forever preferred_lft forever11: qg-60a5a596-86: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWNlink/ether fa:16:3e:4e:b0:98 brd ff:ff:ff:ff:ff:ffinet 192.168.128.50/24 brd 192.168.128.255 scope global qg-60a5a596-86valid_lft forever preferred_lft foreverinet 192.168.128.51/32 brd 192.168.128.51 scope global qg-60a5a596-86valid_lft forever preferred_lft foreverinet6 fe80::f816:3eff:fe4e:b098/64 scope linkvalid_lft forever preferred_lft forever12: qr-934ee00e-fb: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWNlink/ether fa:16:3e:d4:85:db brd ff:ff:ff:ff:ff:ffinet 192.168.106.254/24 brd 192.168.106.255 scope global qr-934ee00e-fbvalid_lft forever preferred_lft foreverinet6 fe80::f816:3eff:fed4:85db/64 scope linkvalid_lft forever preferred_lft forever[root@controller ~]## 绑定弹性ip,实际上就是在防火墙规则里面添加上了SNAT和DNAT规则# 查看路由器上设置的规则[root@controller ~]# ip netns exec qrouter-1d372254-1681-4f86-b278-73448e8bd7f5 iptables -t nat -nLChain PREROUTING (policy ACCEPT)target prot opt source destinationneutron-l3-agent-PREROUTING all -- 0.0.0.0/0 0.0.0.0/0Chain INPUT (policy ACCEPT)target prot opt source destinationChain OUTPUT (policy ACCEPT)target prot opt source destinationneutron-l3-agent-OUTPUT all -- 0.0.0.0/0 0.0.0.0/0Chain POSTROUTING (policy ACCEPT)target prot opt source destinationneutron-l3-agent-POSTROUTING all -- 0.0.0.0/0 0.0.0.0/0neutron-postrouting-bottom all -- 0.0.0.0/0 0.0.0.0/0Chain neutron-l3-agent-OUTPUT (1 references)target prot opt source 
destination# DNAT:目标地址转换:访问公网的ip:192.168.128.51,转换到访问云主机的内网地址192.168.106.1DNAT all -- 0.0.0.0/0 192.168.128.51 to:192.168.106.1Chain neutron-l3-agent-POSTROUTING (1 references)target prot opt source destinationACCEPT all -- 0.0.0.0/0 0.0.0.0/0 ! ctstate DNATChain neutron-l3-agent-PREROUTING (1 references)target prot opt source destinationREDIRECT tcp -- 0.0.0.0/0 169.254.169.254 tcp dpt:80 redir ports 9697DNAT all -- 0.0.0.0/0 192.168.128.51 to:192.168.106.1Chain neutron-l3-agent-float-snat (1 references)target prot opt source destination# SNAT:源地址转换(内网主机上网):源地址是内网的ip:192.168.106.1,转换到公网的ip:192.168.128.51SNAT all -- 192.168.106.1 0.0.0.0/0 to:192.168.128.51Chain neutron-l3-agent-snat (1 references)target prot opt source destinationneutron-l3-agent-float-snat all -- 0.0.0.0/0 0.0.0.0/0SNAT all -- 192.168.106.0/24 0.0.0.0/0 to:192.168.128.50Chain neutron-postrouting-bottom (1 references)target prot opt source destinationneutron-l3-agent-snat all -- 0.0.0.0/0 0.0.0.0/0[root@controller ~]# ip netns lsqdhcp-e47332e8-4bd4-466c-a2e6-6fcd8618665eqrouter-1d372254-1681-4f86-b278-73448e8bd7f5[root@controller ~]#[root@controller ~]# ip netns exec qdhcp-e47332e8-4bd4-466c-a2e6-6fcd8618665e ip a1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWNlink/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00inet 127.0.0.1/8 scope host lovalid_lft forever preferred_lft foreverinet6 ::1/128 scope hostvalid_lft forever preferred_lft forever13: tapb602d5a1-12: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWNlink/ether fa:16:3e:f4:a0:62 brd ff:ff:ff:ff:ff:ffinet 192.168.106.2/24 brd 192.168.106.255 scope global tapb602d5a1-12valid_lft forever preferred_lft foreverinet6 fe80::f816:3eff:fef4:a062/64 scope linkvalid_lft forever preferred_lft forever[root@controller ~]#
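As the rules show, binding a floating IP comes down to a DNAT rule (floating to fixed address) paired with an SNAT rule in the router namespace. The sketch below extracts the floating-to-fixed mapping from such a listing; `floating_ip_map` is a hypothetical helper, and the sample lines are taken from the output above with column spacing restored.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `iptables -t nat -nL` output on stdin and print
# "floating -> fixed" for every DNAT rule, once per distinct pair.
floating_ip_map() {
    awk '$1 == "DNAT" { sub(/^to:/, "", $NF); print $5 " -> " $NF }' | sort -u
}

# Sample rules from the router namespace shown above.
sample='Chain neutron-l3-agent-OUTPUT (1 references)
target     prot opt source               destination
DNAT       all  --  0.0.0.0/0            192.168.128.51       to:192.168.106.1
Chain neutron-l3-agent-PREROUTING (1 references)
target     prot opt source               destination
REDIRECT   tcp  --  0.0.0.0/0            169.254.169.254      tcp dpt:80 redir ports 9697
DNAT       all  --  0.0.0.0/0            192.168.128.51       to:192.168.106.1'

printf '%s\n' "$sample" | floating_ip_map
```

On the network node the input would come from `ip netns exec <router namespace> iptables -t nat -nL`, using the qrouter namespace name reported by `ip netns ls`.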

Services running on the controller node

[root@controller ~]# openstack-service statusneutron-dhcp-agent (pid 12858) is activeneutron-l3-agent (pid 12887) is activeneutron-metadata-agent (pid 12976) is activeneutron-openvswitch-agent (pid 12613) is activeneutron-server (pid 12916) is activeopenstack-ceilometer-alarm-evaluator (pid 18655) is activeopenstack-ceilometer-alarm-notifier (pid 18579) is activeopenstack-ceilometer-api (pid 18613) is activeopenstack-ceilometer-central (pid 18545) is activeopenstack-ceilometer-collector (pid 18513) is activeopenstack-ceilometer-notification (pid 18484) is activeopenstack-cinder-api (pid 10294) is activeopenstack-cinder-backup (pid 10373) is activeopenstack-cinder-scheduler (pid 10517) is activeopenstack-cinder-volume (pid 10492) is activeopenstack-glance-api (pid 9535) is activeopenstack-glance-registry (pid 9500) is activeopenstack-keystone (pid 8174) is activeopenstack-losetup (pid 0) is activeopenstack-nova-api (pid 11029) is activeopenstack-nova-cert (pid 11701) is activeopenstack-nova-conductor (pid 11617) is activeopenstack-nova-consoleauth (pid 11551) is activeopenstack-nova-novncproxy (pid 14094) is activeopenstack-nova-scheduler (pid 11585) is activeopenstack-swift-account-auditor (pid 16024) is activeopenstack-swift-account-reaper (pid 15995) is activeopenstack-swift-account-replicator (pid 16586) is activeopenstack-swift-account (pid 16621) is activeopenstack-swift-container-auditor (pid 16152) is activeopenstack-swift-container-replicator (pid 16123) is activeopenstack-swift-container-updater (pid 16777) is activeopenstack-swift-container (pid 16318) is activeopenstack-swift-object-auditor (pid 16251) is activeopenstack-swift-object-replicator (pid 16739) is activeopenstack-swift-object-updater (pid 16286) is activeopenstack-swift-object (pid 16704) is activeopenstack-swift-proxy (pid 16521) is active[root@controller ~]#
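With close to forty services in the list, a filter that prints only the ones not reported as active is easier to scan. This is a rough sketch: `inactive_services` is a hypothetical helper, and the `is inactive` line in the sample is invented for illustration, since the transcript only shows the `is active` form.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `openstack-service status` output on stdin and
# print the name of every service whose line does not end in "active".
inactive_services() {
    awk 'NF && $NF != "active" { print $1 }'
}

# Two lines from the output above plus one invented non-active line.
sample='neutron-server (pid 12916) is active
openstack-nova-cert (pid 0) is inactive
openstack-keystone (pid 8174) is active'

printf '%s\n' "$sample" | inactive_services
```

On a node it would be used as `openstack-service status | inactive_services`; an empty result means every listed service is active.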

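Services running on the compute node:

```shell
[root@compute ~]# openstack-service status
# When several VMs run on the same compute node they reach each other locally,
# without being forwarded through the physical NIC: Neutron creates a virtual
# switch inside the node.
neutron-openvswitch-agent (pid 38867) is active
# Collects usage information for the compute node
openstack-ceilometer-compute (pid 38150) is active
# Any node that runs the nova-compute service is a compute node
openstack-nova-compute (pid 38193) is active
[root@compute ~]#
# List the provisioned instances
[root@compute ~]# virsh list --all
 Id    名称                         状态
----------------------------------------------------
 2     instance-00000001              running

[root@compute ~]#
```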

11. View each component's configuration files and manage the OpenStack services

```shell
[root@controller /]# cd /etc/nova
[root@controller nova]# ls
api-paste.ini  nova.conf  nova.crt  nova.key  policy.json  release  rootwrap.conf
[root@controller nova]# cd /etc/cinder/
[root@controller cinder]# ls
api-paste.ini  cinder.conf  policy.json  rootwrap.conf  volumes
[root@controller cinder]# cd /etc/neutron/
[root@controller neutron]# ls
api-paste.ini   dnsmasq-neutron.conf  l3_agent.ini     metadata_agent.ini  plugin.ini  policy.json
dhcp_agent.ini  fwaas_driver.ini      lbaas_agent.ini  neutron.conf        plugins     rootwrap.conf

# After changing a configuration file, restart the corresponding service.
# The list below appears to be Tab completion output for the openstack-* units.
[root@controller neutron]# systemctl restart openstack-
openstack-ceilometer-alarm-evaluator.service  openstack-keystone.service               openstack-swift-account.service
openstack-ceilometer-alarm-notifier.service   openstack-losetup.service                openstack-swift-container-auditor.service
openstack-ceilometer-api.service              openstack-nova-api.service               openstack-swift-container-replicator.service
openstack-ceilometer-central.service          openstack-nova-cert.service              openstack-swift-container.service
openstack-ceilometer-collector.service        openstack-nova-compute.service           openstack-swift-container-updater.service
openstack-ceilometer-notification.service     openstack-nova-conductor.service         openstack-swift-object-auditor.service
openstack-cinder-api.service                  openstack-nova-consoleauth.service       openstack-swift-object-replicator.service
openstack-cinder-backup.service               openstack-nova-novncproxy.service        openstack-swift-object.service
openstack-cinder-scheduler.service            openstack-nova-scheduler.service         openstack-swift-object-updater.service
openstack-cinder-volume.service               openstack-swift-account-auditor.service  openstack-swift-proxy.service
openstack-glance-api.service                  openstack-swift-account-reaper.service
openstack-glance-registry.service             openstack-swift-account-replicator.service
[root@controller neutron]# systemctl restart openstack-

# Restart every service that belongs to the neutron component
[root@controller ~]# openstack-service restart neutron
```
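The rule above (edit a file under `/etc/<component>/`, then restart that component) can be sketched as a small helper. This is only a minimal sketch: the function names `component_for_config` and `restart_command_for` are hypothetical, and the script prints the `openstack-service restart` command instead of running it.

```shell
# Hypothetical helper: derive the component name from a config path such as
# /etc/neutron/neutron.conf (the directory directly under /etc).
component_for_config() {
    local rest
    case "$1" in
        /etc/*/*)
            rest="${1#/etc/}"            # strip the leading /etc/
            printf '%s\n' "${rest%%/*}"  # keep only the first path element
            ;;
        *)
            echo "not a file under /etc/<component>/: $1" >&2
            return 1
            ;;
    esac
}

# Print (do not run) the restart command for the component that owns the file.
restart_command_for() {
    local component
    component="$(component_for_config "$1")" || return 1
    printf 'openstack-service restart %s\n' "$component"
}

restart_command_for /etc/neutron/l3_agent.ini
```

Printing the command rather than executing it keeps the sketch safe to try on any machine; on a real controller the printed line could be reviewed and then run by hand.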

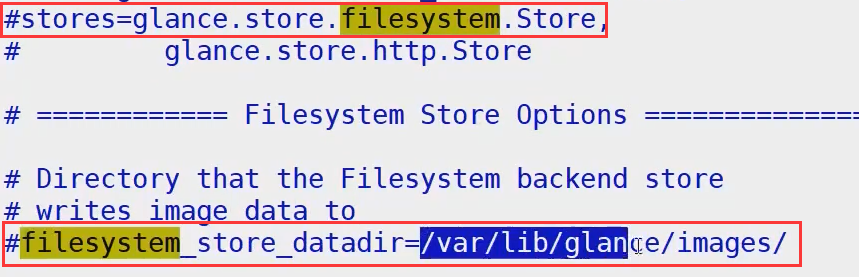

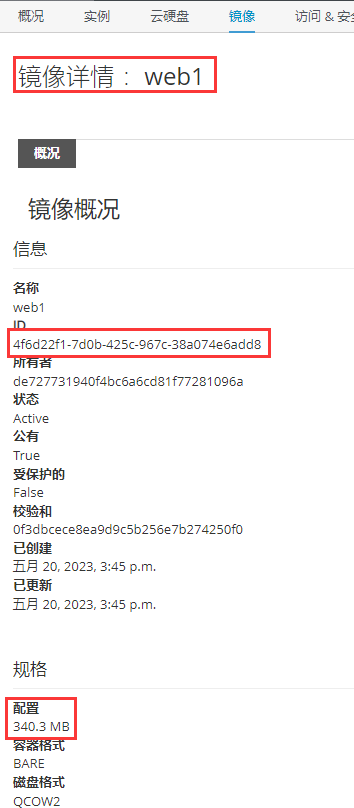

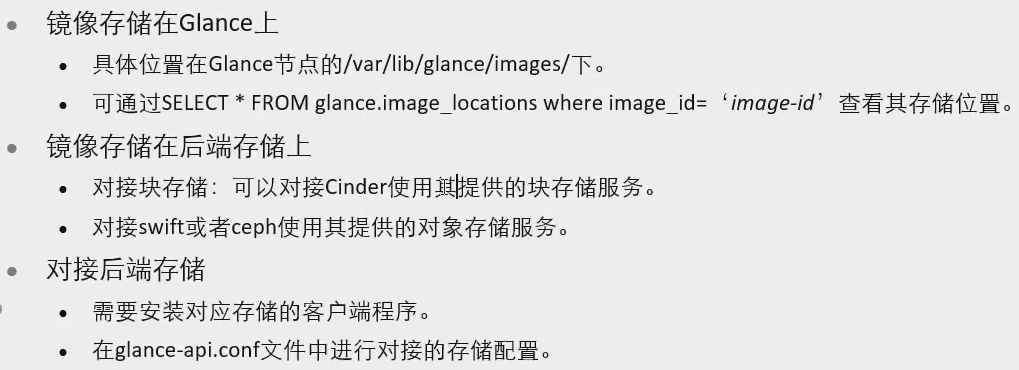

View images

```shell
[root@controller ~]# cat /etc/glance/glance-api.conf
[root@controller ~]# cd /var/lib/glance/images
[root@controller images]# ll -h
total 341M
-rw-r-----. 1 glance glance 341M May 20 23:45 4f6d22f1-7d0b-425c-967c-38a074e6add8
```
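The transcript above reads `glance-api.conf` and then goes straight to `/var/lib/glance/images`. As a hedged sketch, assuming the filesystem store is in use and the directory is set through the `filesystem_store_datadir` option, the path can be read from the configuration instead of being typed by hand. The helper name `glance_store_dir` is hypothetical, and the demo uses a throwaway file rather than a real Glance configuration.

```shell
# Hypothetical helper: print the value of filesystem_store_datadir from a
# glance-api.conf style INI file.
glance_store_dir() {
    awk -F= '
        # skip commented-out lines
        /^[ \t]*#/ { next }
        $1 ~ /^[ \t]*filesystem_store_datadir[ \t]*$/ {
            value = $2
            gsub(/^[ \t]+|[ \t]+$/, "", value)   # trim surrounding whitespace
            print value
            exit
        }
    ' "$1"
}

# Demo on a temporary file so the sketch runs without a Glance installation.
demo_conf="$(mktemp)"
cat > "$demo_conf" <<'EOF'
[glance_store]
#filesystem_store_datadir = /ignored
filesystem_store_datadir = /var/lib/glance/images/
EOF
glance_store_dir "$demo_conf"
rm -f "$demo_conf"
```

On a controller the call would be `glance_store_dir /etc/glance/glance-api.conf`; if the option is not set in the file, the function prints nothing.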

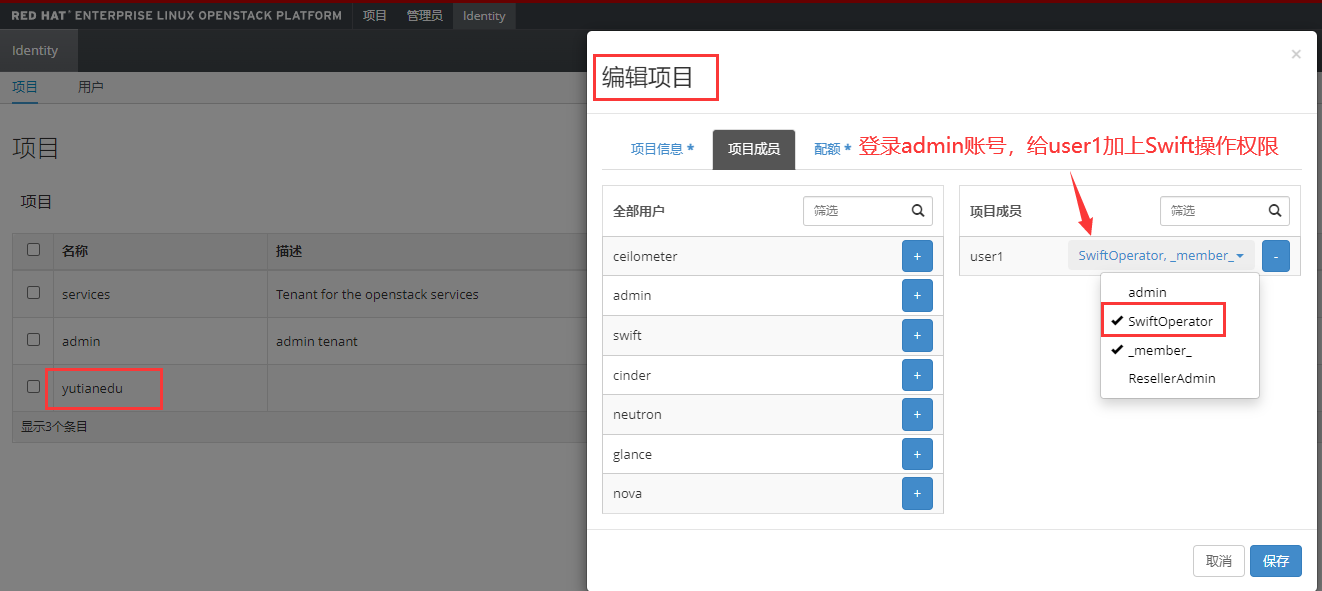

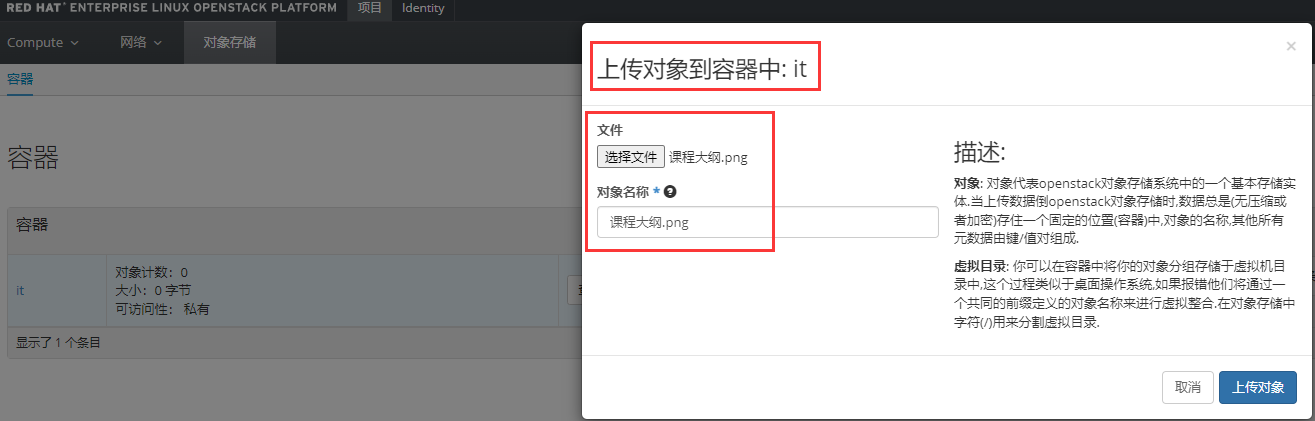

Using object storage

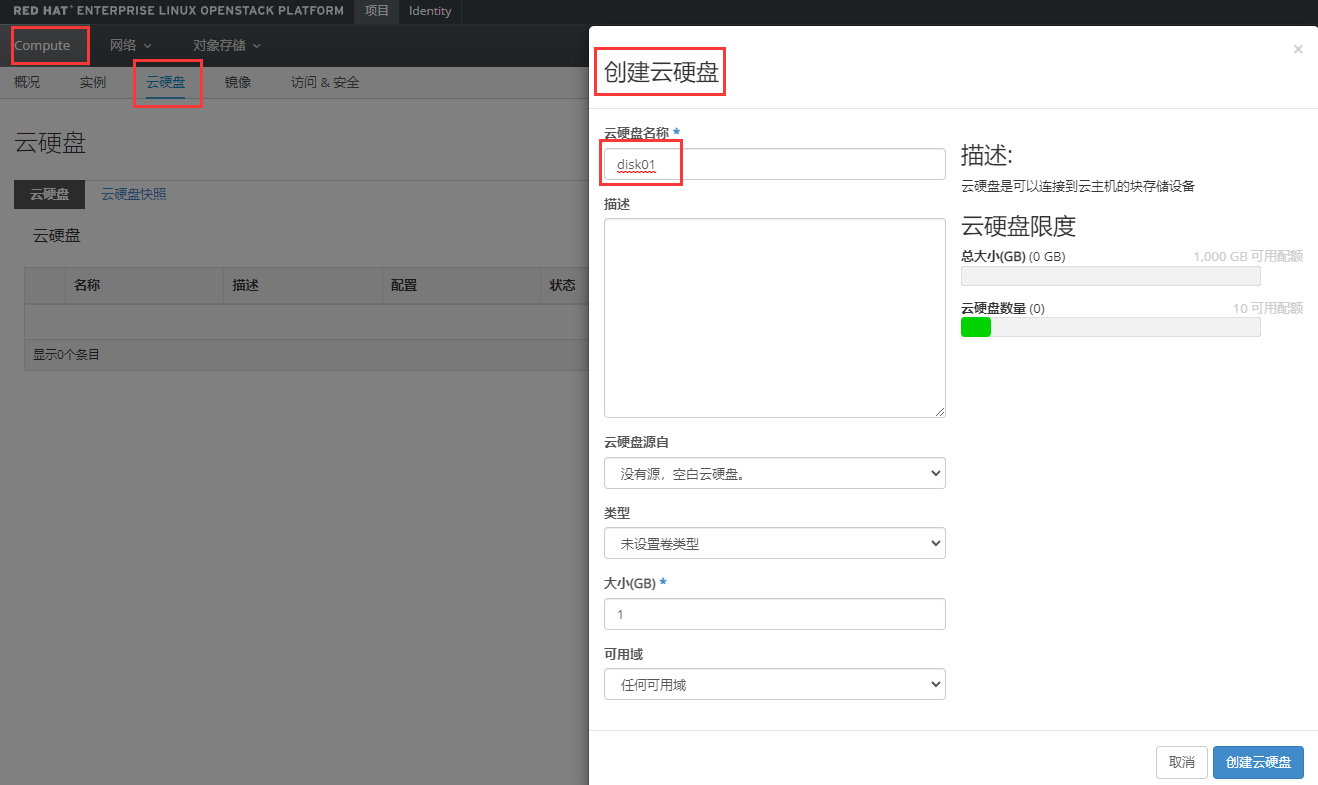

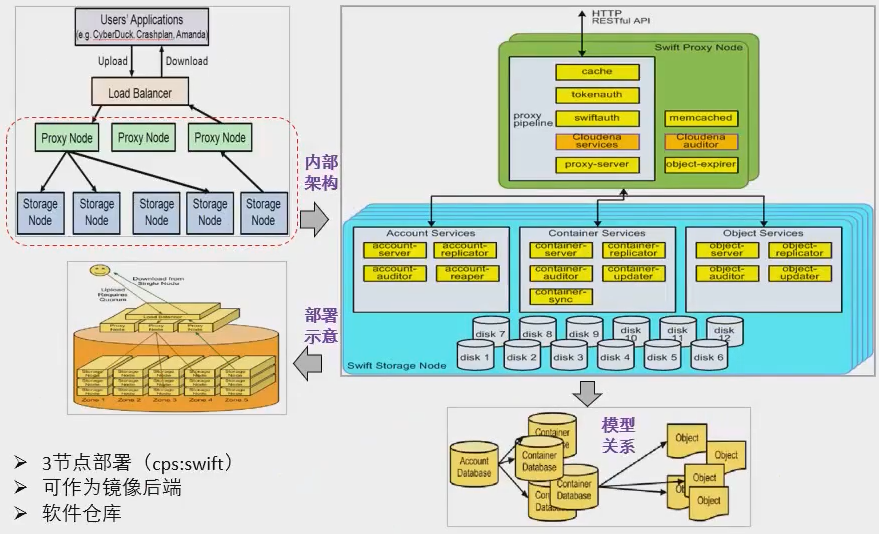

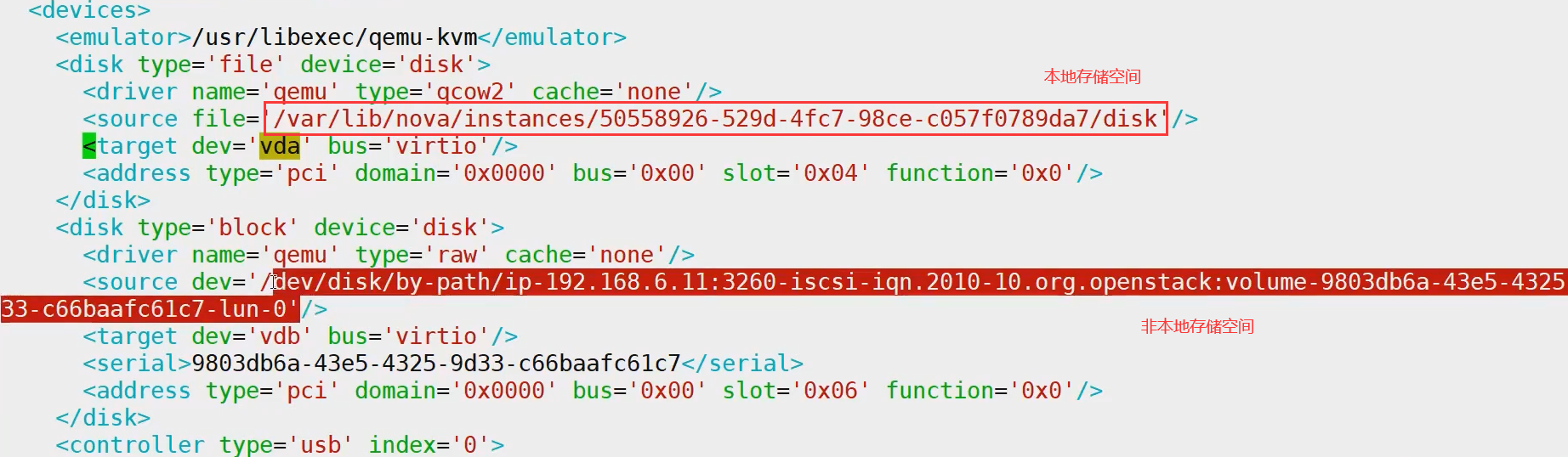

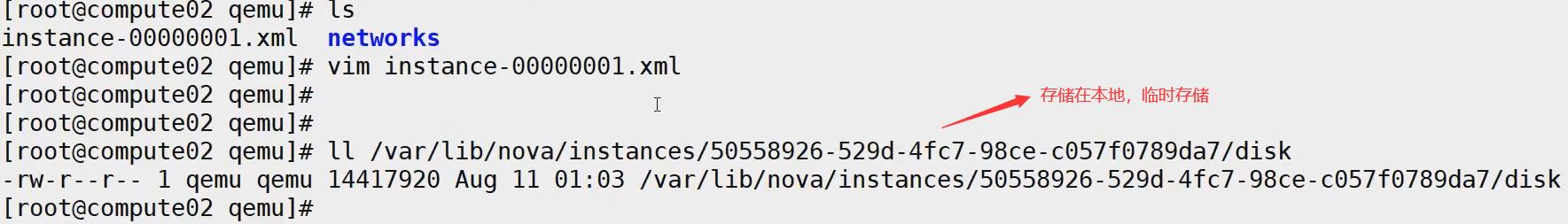

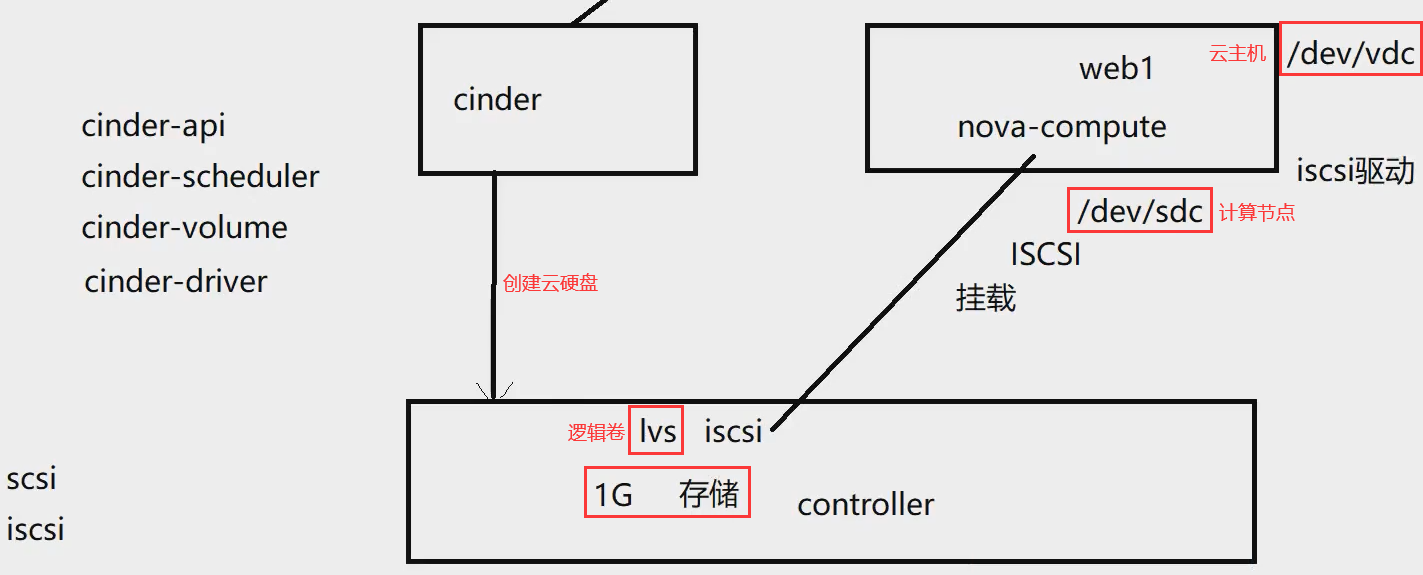

- Using Cinder (cloud disks), which can be attached to cloud instances

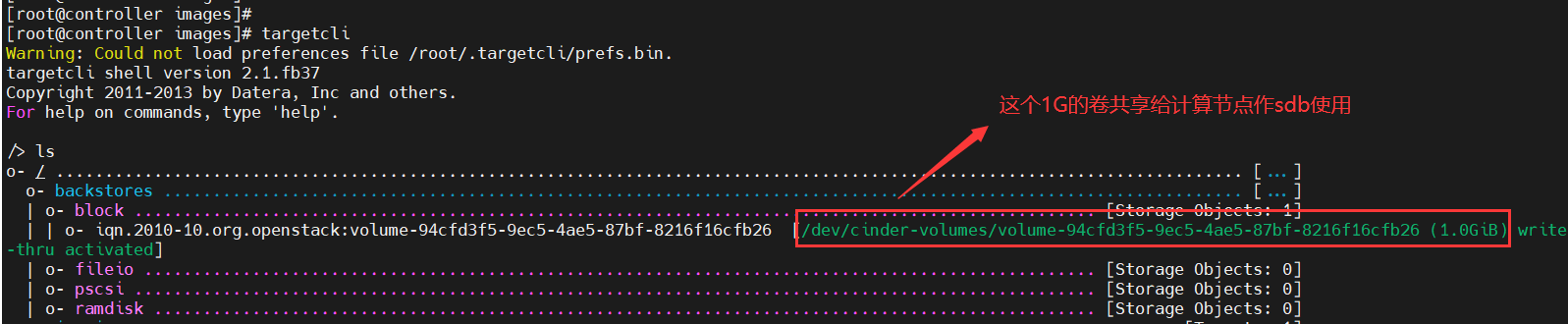

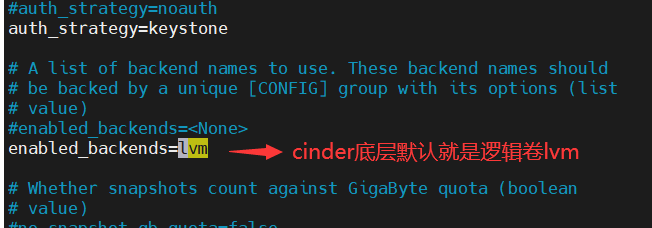

```shell
# Cloud instance web1 before the cloud disk is attached
[cloud-user@web1 ~]$ lsblk
NAME   MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
vda    253:0    0  10G  0 disk
└─vda1 253:1    0  10G  0 part /

# Cloud instance web1 after the cloud disk is attached. vdb is provided by the
# Cinder component; where its 1G of space comes from is traced in the steps that follow.
[cloud-user@web1 ~]$ lsblk
NAME   MAJ:MIN RM SIZE RO TYPE MOUNTPOINT
vda    253:0    0  10G  0 disk
└─vda1 253:1    0  10G  0 part /
vdb    253:16   0   1G  0 disk

# The virtual machine's files (the cloud instance runs on the compute node)
[root@compute ~]# virsh list --all
 Id    Name                           State
----------------------------------------------------
 2     instance-00000001              running

[root@compute ~]# cd /etc/libvirt/qemu/
# vdb is backed by /dev/disk/by-path/ip-192.168.128.11:3260-iscsi-iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26-lun-0,
# which is shared over iSCSI
[root@compute qemu]# cat instance-00000001.xml
<!--
WARNING: THIS IS AN AUTO-GENERATED FILE. CHANGES TO IT ARE LIKELY TO BE
OVERWRITTEN AND LOST. Changes to this xml configuration should be made using:
  virsh edit instance-00000001
or other application using the libvirt API.
-->
******
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2' cache='none'/>
      <source file='/var/lib/nova/instances/1988d4cb-19ba-47ab-95ff-1393f91c0876/disk'/>
      <target dev='vda' bus='virtio'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='none'/>
      <source dev='/dev/disk/by-path/ip-192.168.128.11:3260-iscsi-iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26-lun-0'/>
      <target dev='vdb' bus='virtio'/>
      <serial>94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26</serial>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </disk>
******

# Inspect the vda disk (the file named "disk" is vda). Deleting the cloud instance
# also deletes vda, and it cannot be recovered: this disk is not managed by Cinder,
# it is ephemeral storage.
[root@compute qemu]# cd /var/lib/nova/instances/1988d4cb-19ba-47ab-95ff-1393f91c0876
[root@compute 1988d4cb-19ba-47ab-95ff-1393f91c0876]# ll
total 15872
-rw-rw----. 1 nova qemu    46174 May 21 03:17 console.log
-rw-r--r--. 1 qemu qemu 16252928 May 21 03:25 disk
-rw-r--r--. 1 nova nova       79 May 21 01:11 disk.info
-rw-r--r--. 1 nova nova     2455 May 21 01:11 libvirt.xml

# vda is a differencing disk that sits on top of a base (parent) image. Changes are
# written only to the differencing disk (redirect-on-write, ROW).
[root@compute _base]# ll -h
total 803M
-rw-r--r--. 1 qemu qemu 10G May 21 01:11 1a0473c6c138356a537be1a3ed36b6f3f910698d
# This is the base image; it acts as if it were mounted
[root@compute _base]# du -sh 1a0473c6c138356a537be1a3ed36b6f3f910698d
803M    1a0473c6c138356a537be1a3ed36b6f3f910698d
[root@compute _base]# pwd
/var/lib/nova/instances/_base

# sdb is mapped into the cloud instance as a disk; deleting the instance does not delete sdb
[root@compute qemu]# ll /dev/disk/by-path/ip-192.168.128.11:3260-iscsi-iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26-lun-0
lrwxrwxrwx. 1 root root 9 May 21 03:17 /dev/disk/by-path/ip-192.168.128.11:3260-iscsi-iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26-lun-0 -> ../../sdb
[root@compute qemu]# lsblk
NAME          MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda             8:0    0  100G  0 disk
├─sda1          8:1    0  500M  0 part /boot
└─sda2          8:2    0 99.5G  0 part
  ├─rhel-swap 253:0    0  3.9G  0 lvm  [SWAP]
  ├─rhel-root 253:1    0   50G  0 lvm  /
  └─rhel-home 253:2    0 45.6G  0 lvm  /home
sdb             8:16   0    1G  0 disk
sr0            11:0    1 1024M  0 rom

# The controller node (192.168.128.11) shares this 1G of space to the compute node
# over iSCSI, where it shows up as sdb
[root@compute qemu]# iscsiadm -m session
tcp: [1] 192.168.128.11:3260,1 iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 (non-flash)

# On the controller node
[root@controller images]# targetcli
Warning: Could not load preferences file /root/.targetcli/prefs.bin.
targetcli shell version 2.1.fb37
Copyright 2011-2013 by Datera, Inc and others.
For help on commands, type 'help'.

/> ls
o- / ................................................................ [...]
  o- backstores ..................................................... [...]
  | o- block ......................................... [Storage Objects: 1]
  | | o- iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 [/dev/cinder-volumes/volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 (1.0GiB) write-thru activated]
  | o- fileio ........................................ [Storage Objects: 0]
  | o- pscsi ......................................... [Storage Objects: 0]
  | o- ramdisk ....................................... [Storage Objects: 0]
  o- iscsi ................................................... [Targets: 1]
  | o- iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 ... [TPGs: 1]
  |   o- tpg1 ................................. [no-gen-acls, auth per-acl]
  |     o- acls ................................................. [ACLs: 1]
  |     | o- iqn.1994-05.com.redhat:bd3b23228545 ... [1-way auth, Mapped LUNs: 1]
  |     |   o- mapped_lun0 ... [lun0 block/iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 (rw)]
  |     o- luns ................................................. [LUNs: 1]
  |     | o- lun0 [block/iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 (/dev/cinder-volumes/volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26)]
  |     o- portals ........................................... [Portals: 1]
  |       o- 0.0.0.0:3260 ............................................ [OK]
  o- loopback ................................................ [Targets: 0]
/> exit

# On the controller node this file is simply a logical volume (dm-3)
[root@controller images]# ll /dev/cinder-volumes/volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26
lrwxrwxrwx. 1 root root 7 May 21 03:15 /dev/cinder-volumes/volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 -> ../dm-3
[root@controller images]# lvs
  LV                                          VG             Attr       LSize  Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
  volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26 cinder-volumes -wi-ao----  1.00g
  home                                        rhel           -wi-ao---- 41.57g
  root                                        rhel           -wi-ao---- 50.00g
  swap                                        rhel           -wi-ao----  7.88g

# cinder-volumes is a volume group. Every cloud disk that is created gets its own logical volume.
[root@controller images]# vgs
  VG             #PV #LV #SN Attr   VSize  VFree
  cinder-volumes   1   1   0 wz--n- 20.60g 19.60g
  rhel             1   3   0 wz--n- 99.51g 64.00m
[root@controller images]# lsblk
NAME                                                                 MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda                                                                    8:0    0  100G  0 disk
├─sda1                                                                 8:1    0  500M  0 part /boot
└─sda2                                                                 8:2    0 99.5G  0 part
  ├─rhel-swap                                                        253:0    0  7.9G  0 lvm  [SWAP]
  ├─rhel-root                                                        253:1    0   50G  0 lvm  /
  └─rhel-home                                                        253:2    0 41.6G  0 lvm  /home
sr0                                                                   11:0    1 1024M  0 rom
loop0                                                                  7:0    0    2G  0 loop /srv/node/swiftloopback
loop2                                                                  7:2    0 20.6G  0 loop
└─cinder--volumes-volume--94cfd3f5--9ec5--4ae5--87bf--8216f16cfb26   253:3    0    1G  0 lvm

[root@controller images]# cat /etc/cinder/cinder.conf
# Cinder's default backend is LVM logical volumes
enabled_backends=lvm
```

Full chain: the controller node shares the 1G of block storage (the volume) with the compute node over iSCSI, where it is used as sdb; the compute node then maps sdb into the cloud instance, where it is used as a disk (vdb).
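The chain traced above (instance disk vdb, compute-node device sdb, iSCSI target, logical volume on the controller) hangs together through one identifier: the volume UUID that appears in every name. The following is a minimal sketch, assuming the naming pattern seen in this transcript (`...:volume-<uuid>-lun-0` on the compute node and `/dev/cinder-volumes/volume-<uuid>` on the controller). The function names are hypothetical.

```shell
# Hypothetical helper: pull the Cinder volume UUID out of an iSCSI by-path name.
volume_uuid_from_by_path() {
    local uuid
    uuid="$(printf '%s\n' "$1" |
        sed -n -E 's/.*volume-([0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12})-lun-[0-9]+$/\1/p')"
    if [ -z "$uuid" ]; then
        echo "no volume UUID found in: $1" >&2
        return 1
    fi
    printf '%s\n' "$uuid"
}

# Build the logical volume path used on the controller in this walkthrough
# (volume group cinder-volumes, logical volume named volume-<uuid>).
lv_path_for_volume() {
    printf '/dev/cinder-volumes/volume-%s\n' "$1"
}

by_path='/dev/disk/by-path/ip-192.168.128.11:3260-iscsi-iqn.2010-10.org.openstack:volume-94cfd3f5-9ec5-4ae5-87bf-8216f16cfb26-lun-0'
uuid="$(volume_uuid_from_by_path "$by_path")"
echo "volume UUID: $uuid"
echo "backing LV:  $(lv_path_for_volume "$uuid")"
```

The same UUID is also what the `<serial>` element in the libvirt XML carries, so it is a convenient key when matching a disk inside an instance to its logical volume.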

- Troubleshooting errors

```shell
# Error log files
[root@controller images]# cd /var/log
[root@controller log]# ls keystone/
keystone.log
[root@controller log]# ls nova/
nova-api.log  nova-cert.log  nova-conductor.log  nova-consoleauth.log  nova-manage.log  nova-novncproxy.log  nova-scheduler.log
[root@controller log]# ls cinder/
api.log  backup.log  cinder-manage.log  scheduler.log  volume.log
[root@controller log]# ls neutron/
dhcp-agent.log  metadata-agent.log                                                  openvswitch-agent.log  server.log
l3-agent.log    neutron-ns-metadata-proxy-1d372254-1681-4f86-b278-73448e8bd7f5.log  ovs-cleanup.log
[root@controller log]# cd cinder/
[root@controller cinder]# ls
api.log  backup.log  cinder-manage.log  scheduler.log  volume.log
[root@controller cinder]# tail -f * | grep -Ei 'err|warn'
```
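A case-insensitive `err|warn` match also catches INFO lines whose payload merely contains the word "error" (for example a `'status': u'error'` field). As a hedged alternative, the sketch below filters on the log level field instead. It assumes the line layout `<date> <time> <pid> <LEVEL> <module> ...`; the function name `only_warnings_and_errors` is hypothetical, and the sample lines are abbreviated stand-ins rather than real log output.

```shell
# Hypothetical helper: keep only lines whose fourth field (the log level)
# is WARNING or ERROR.
only_warnings_and_errors() {
    awk '$4 == "WARNING" || $4 == "ERROR"'
}

# Demo on abbreviated sample lines.
only_warnings_and_errors <<'EOF'
2023-05-21 04:07:53.381 10517 WARNING cinder.scheduler.filters.capacity_filter [req-...] Insufficient free space
2023-05-21 04:07:53.406 10517 ERROR cinder.scheduler.flows.create_volume [req-...] Failed to run task
2023-05-21 04:07:53.438 10327 INFO cinder.api.v1.volumes [req-...] vol={'status': u'error'}
EOF

# Against live logs the filter could be fed from tail, for example:
#   tail -f /var/log/cinder/*.log | only_warnings_and_errors
```

The broader `grep -Ei` form is still useful when the INFO payload itself is of interest; the level-based filter simply gives a shorter list to start from.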

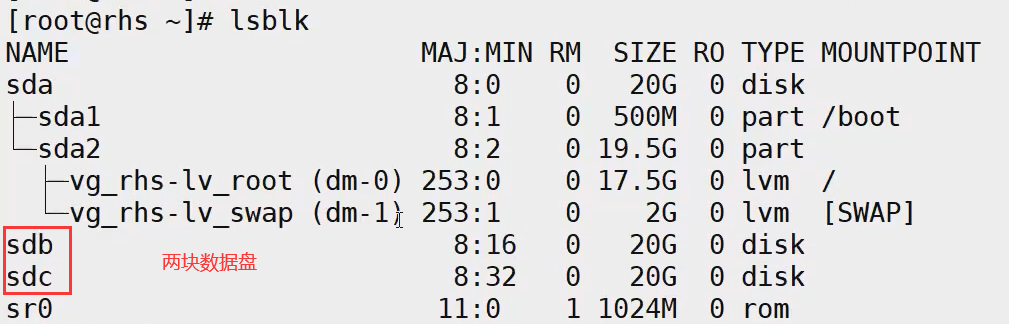

Only 19G of free space remains, but the request asks for 201G.

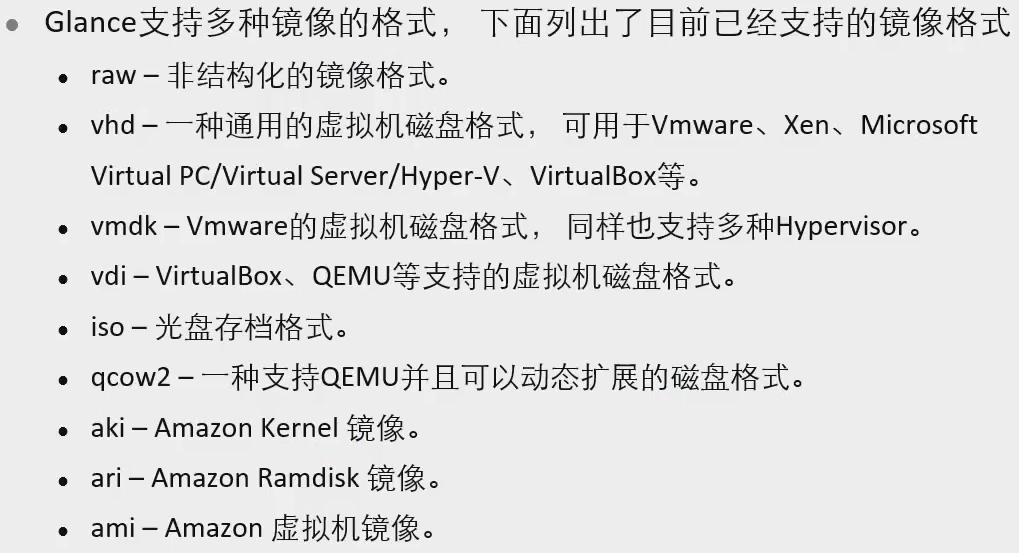

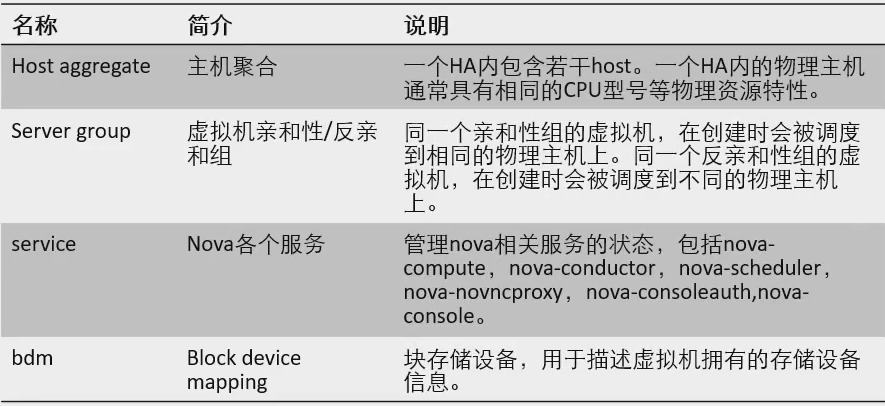

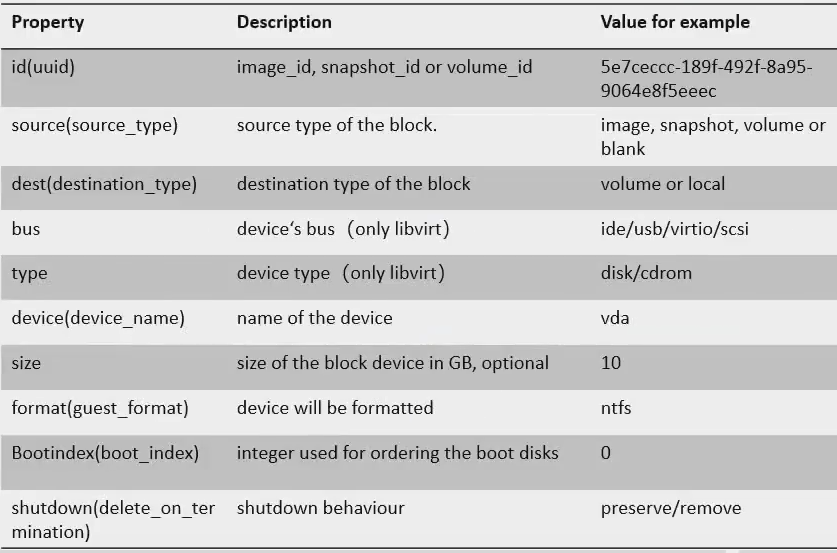

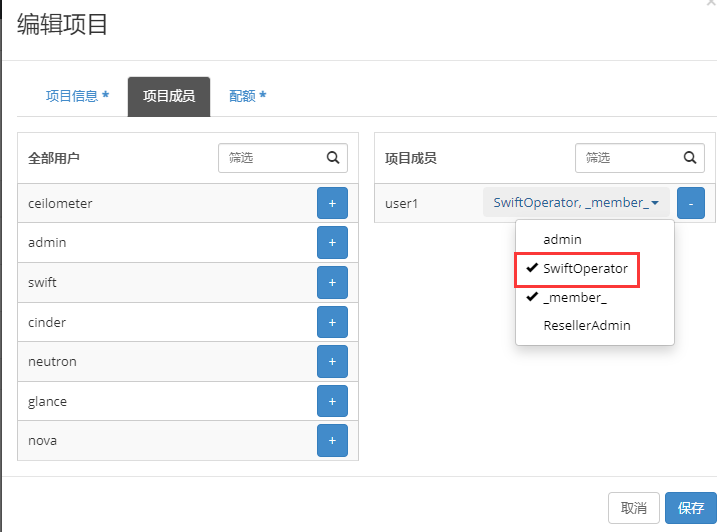

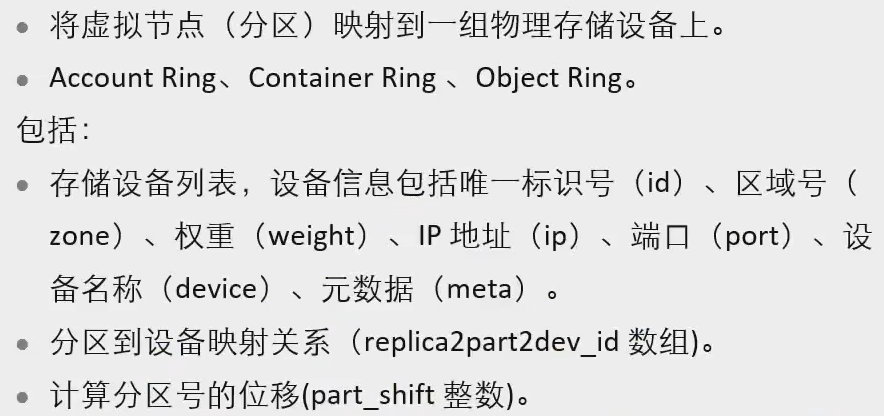

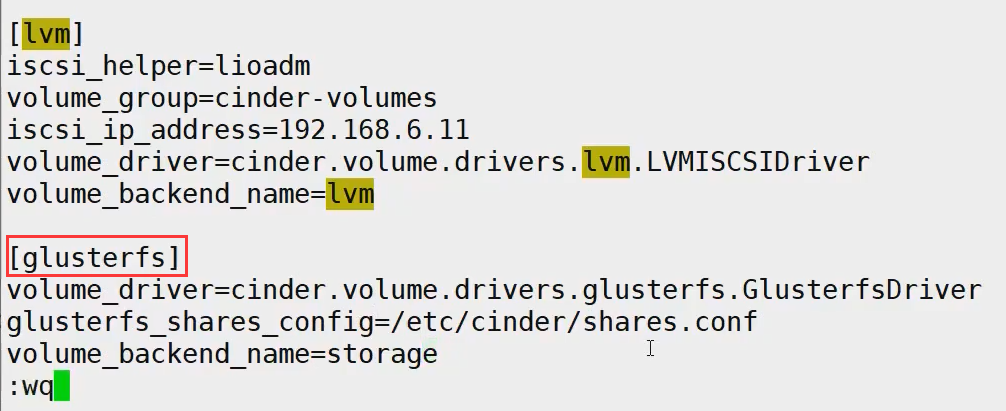

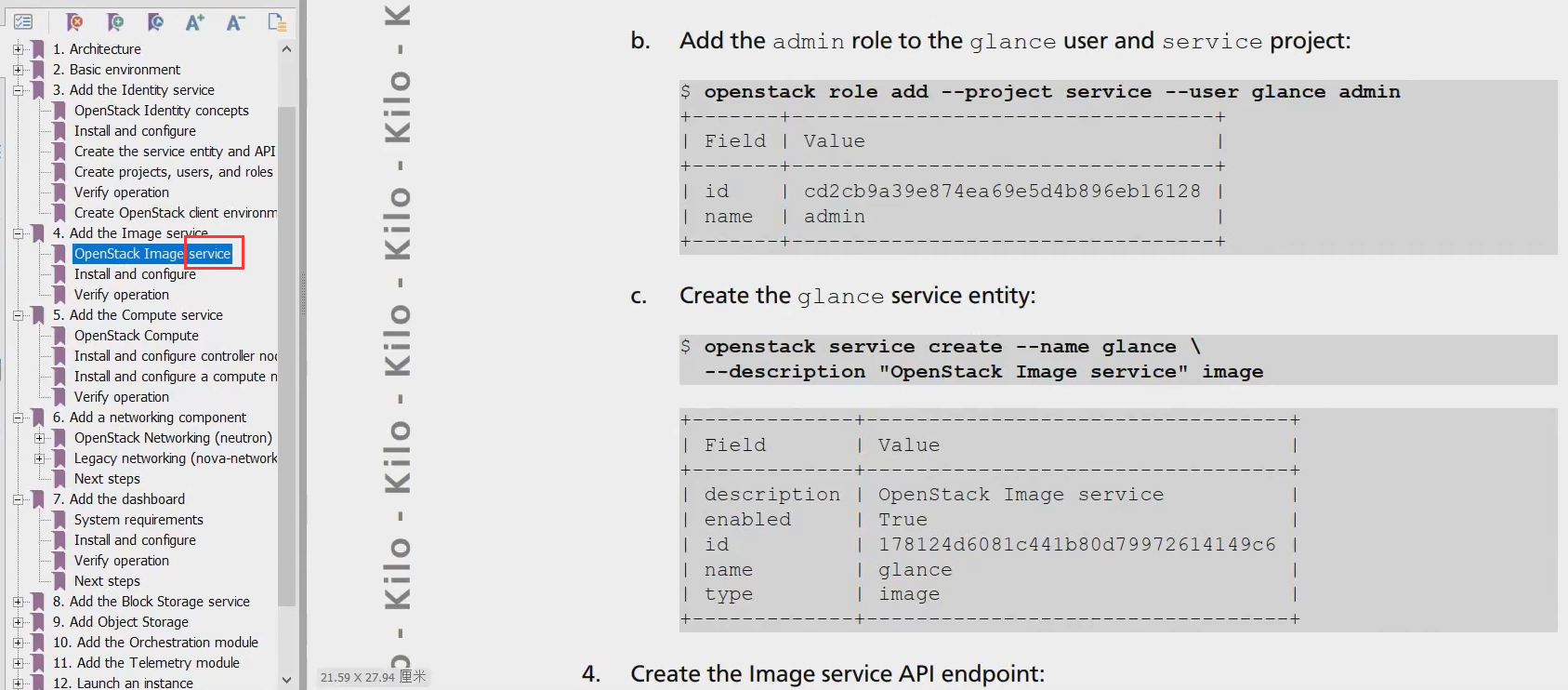

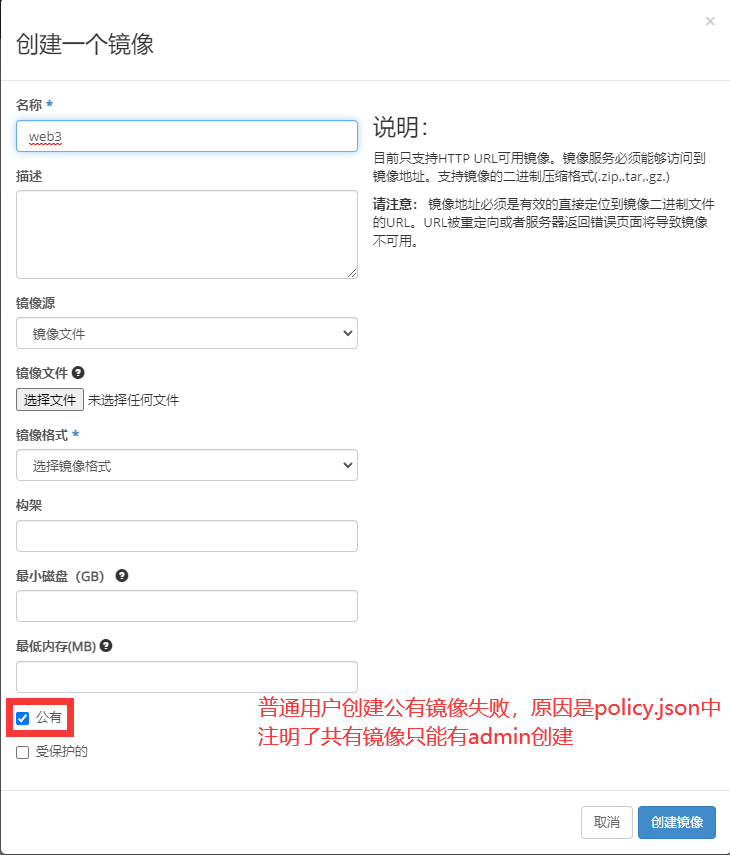

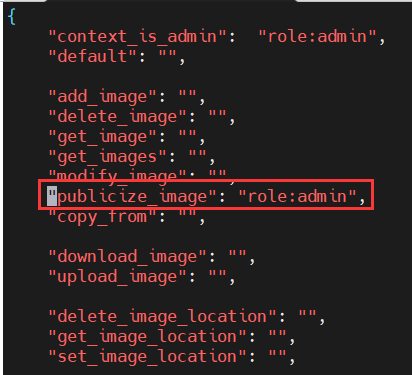

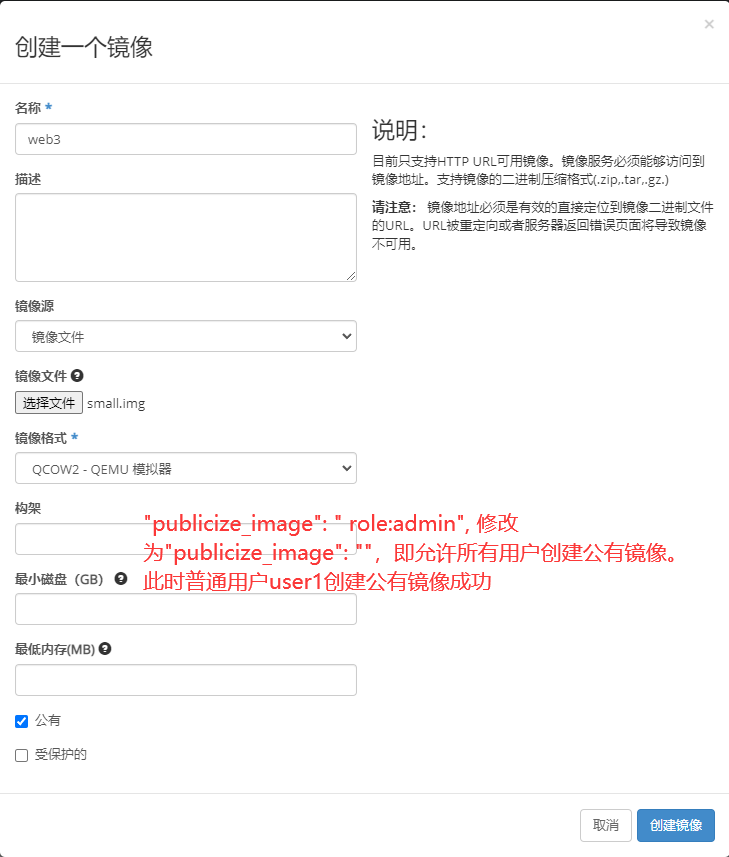

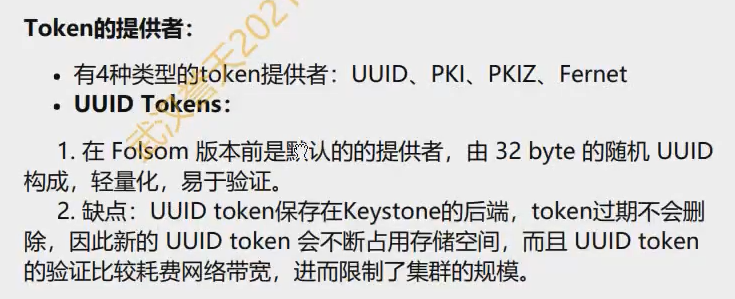

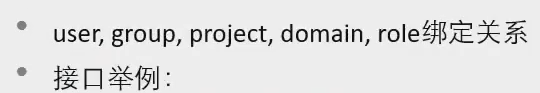

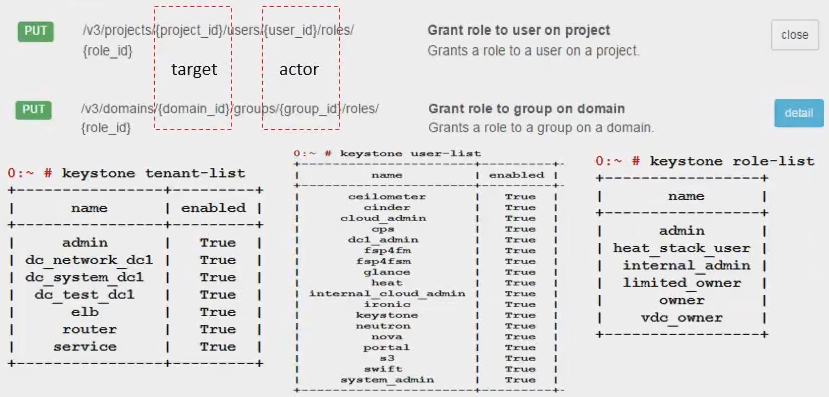

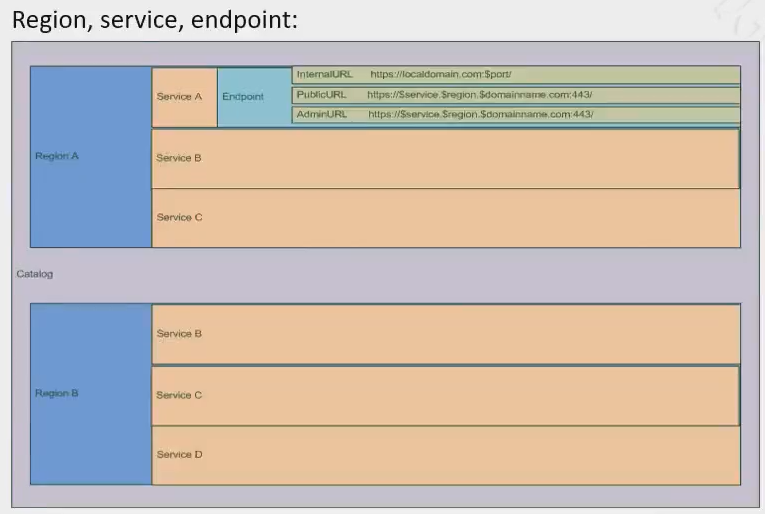

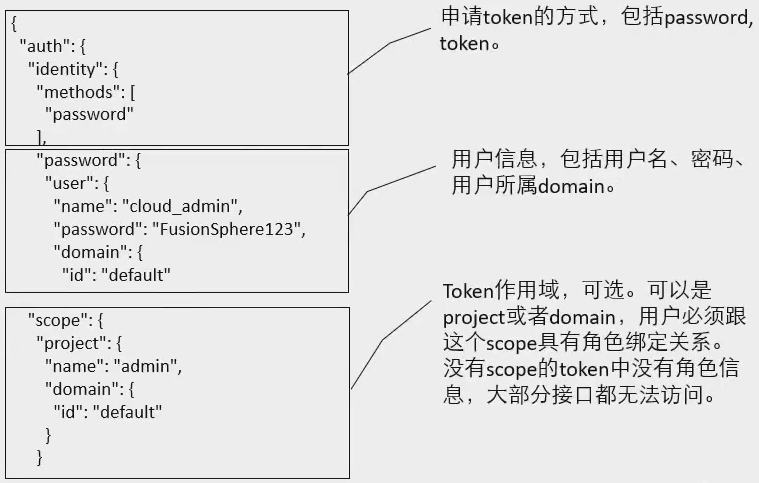

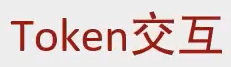

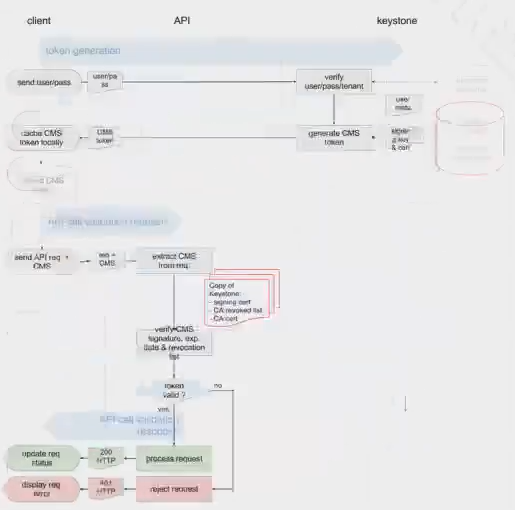

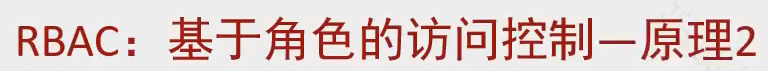

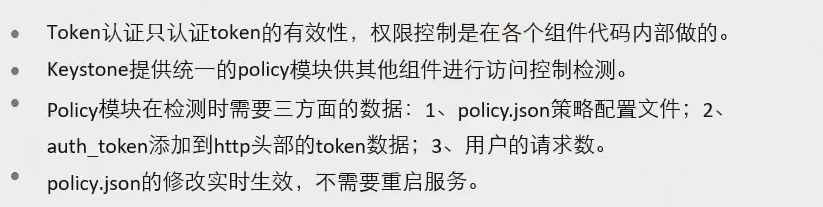

```shell
2023-05-21 04:07:53.381 10517 WARNING cinder.scheduler.filters.capacity_filter [req-741ea749-8cd8-4c4a-a572-3d237f1f219f 6afb01319fed4145a6ac15dc653c4f4f e84a0d07650242b5a43a0aa53831be1c - - -] Insufficient free space for volume creation on host controller@lvm#lvm (requested / avail): 201/19.0
2023-05-21 04:07:53.387 10517 WARNING cinder.scheduler.filter_scheduler [req-741ea749-8cd8-4c4a-a572-3d237f1f219f 6afb01319fed4145a6ac15dc653c4f4f e84a0d07650242b5a43a0aa53831be1c - - -] No weighed hosts found for volume with properties: {}
2023-05-21 04:07:53.406 10517 ERROR cinder.scheduler.flows.create_volume [req-741ea749-8cd8-4c4a-a572-3d237f1f219f 6afb01319fed4145a6ac15dc653c4f4f e84a0d07650242b5a43a0aa53831be1c - - -] Failed to run task cinder.scheduler.flows.create_volume.ScheduleCreateVolumeTask;volume:create: No valid host was found. No weighed hosts available
2023-05-21 04:07:53.438 10327 INFO cinder.api.v1.volumes [req-c6baa289-1f35-4253-901d-266cadb61d45 6afb01319fed4145a6ac15dc653c4f4f e84a0d07650242b5a43a0aa53831be1c - - -] vol={'migration_status': None, 'availability_zone': u'nova', 'terminated_at': None, 'updated_at': datetime.datetime(2023, 5, 20, 20, 7, 53), 'provider_geometry': None, 'replication_extended_status': None, 'replication_status': u'disabled', 'snapshot_id': None, 'ec2_id': None, 'mountpoint': None, 'deleted_at': None, 'id': u'4380db5e-ff3f-4816-a8cd-3593d235a488', 'size': 201L, 'user_id': u'6afb01319fed4145a6ac15dc653c4f4f', 'attach_time': None, 'attached_host': None, 'display_description': u'', 'volume_admin_metadata': [], 'project_id': u'e84a0d07650242b5a43a0aa53831be1c', 'launched_at': None, 'scheduled_at': None, 'status': u'error', 'volume_type_id': None, 'deleted': False, 'provider_location': None, 'host': None, 'consistencygroup_id': None, 'source_volid': None, 'provider_auth': None, 'display_name': u'disk02', 'instance_uuid': None, 'bootable': False, 'created_at': datetime.datetime(2023, 5, 20, 20, 7, 53), 'attach_status': u'detached', 'volume_type': None, 'consistencygroup': None, 'volume_metadata': [], '_name_id': None, 'encryption_key_id': None, 'replication_driver_data': None}
```
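The scheduler warning in this log carries the two numbers that explain the failure: `(requested / avail): 201/19.0`. The following is a minimal sketch that extracts them from such a line and reports the shortfall. The function name `capacity_shortfall` is hypothetical, and the parsing assumes the exact message wording shown in this log.

```shell
# Hypothetical helper: read log lines on stdin and, for each capacity_filter
# warning, print the requested size, the available size and the difference.
capacity_shortfall() {
    sed -n -E 's|.*\(requested / avail\): ([0-9.]+)/([0-9.]+).*|\1 \2|p' |
        awk '{ printf "requested=%s available=%s short_by=%.1f\n", $1, $2, $1 - $2 }'
}

echo 'WARNING cinder.scheduler.filters.capacity_filter [...] Insufficient free space for volume creation on host controller@lvm#lvm (requested / avail): 201/19.0' |
    capacity_shortfall
# prints: requested=201 available=19.0 short_by=182.0
```

Lines that do not contain the `(requested / avail)` marker are ignored, so the function can be fed a whole scheduler log.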