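Transfer Learning for Computer Vision Tutorial

Original: https://pytorch.org/tutorials/beginner/transfer_learning_tutorial.html

In this tutorial, you will learn how to train a convolutional neural network for image classification using transfer learning. You can read more about transfer learning in the cs231n notes.

Quoting these notes:

In practice, very few people train an entire convolutional network from scratch (with random initialization), because it is relatively rare to have a dataset of sufficient size. Instead, it is common to pretrain a ConvNet on a very large dataset (e.g. ImageNet, which contains 1.2 million images with 1000 categories), and then use the ConvNet either as an initialization or as a fixed feature extractor for the task of interest.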

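The two major transfer learning scenarios look as follows:

- Finetuning the ConvNet: instead of random initialization, we initialize the network with a pretrained network, such as one trained on the ImageNet 1000-class dataset. The rest of the training proceeds as usual.

- ConvNet as fixed feature extractor: here, we freeze the weights of the entire network except for the final fully connected layer. That last fully connected layer is replaced with a new one with random weights, and only this layer is trained.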
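The difference between the two scenarios comes down to which parameters the optimizer is allowed to update. The sketch below illustrates this on a tiny stand-in network rather than a real pretrained ConvNet; `make_backbone`, `count_trainable`, and the layer sizes are hypothetical names and values chosen only for illustration.

```python
import torch.nn as nn


def make_backbone():
    # Hypothetical stand-in for a pretrained ConvNet: a small feature
    # extractor followed by a final fully connected layer.
    return nn.Sequential(
        nn.Conv2d(3, 8, kernel_size=3, padding=1),
        nn.ReLU(),
        nn.AdaptiveAvgPool2d(1),
        nn.Flatten(),
        nn.Linear(8, 1000),  # original 1000-class head
    )


def count_trainable(model):
    # Number of scalar parameters that will receive gradients.
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


# Scenario 1, finetuning: swap the head, keep every parameter trainable.
finetune = make_backbone()
finetune[-1] = nn.Linear(8, 2)

# Scenario 2, fixed feature extractor: freeze everything, then swap the head.
# The new layer is trainable because new modules default to requires_grad=True.
frozen = make_backbone()
for param in frozen.parameters():
    param.requires_grad = False
frozen[-1] = nn.Linear(8, 2)

print(count_trainable(finetune))  # convolution plus new head
print(count_trainable(frozen))    # new head only
```

In the first case the whole network adapts to the new task; in the second, only the small new head does.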

    # License: BSD
    # Author: Sasank Chilamkurthy

    from __future__ import print_function, division

    import torch
    import torch.nn as nn
    import torch.optim as optim
    from torch.optim import lr_scheduler
    import numpy as np
    import torchvision
    from torchvision import datasets, models, transforms
    import matplotlib.pyplot as plt
    import time
    import os
    import copy

    plt.ion()   # interactive mode

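Load Data

We will use the torchvision and torch.utils.data packages to load the data.

The problem we're going to solve today is to train a model to classify ants and bees. We have about 120 training images each for ants and bees, and 75 validation images for each class. Usually, this is a very small dataset to generalize from if training from scratch. Since we are using transfer learning, we should be able to generalize reasonably well.

This dataset is a very small subset of ImageNet.

Note

Download the data and extract it to the current directory. The loading code below reads it from data/hymenoptera_data.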
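`datasets.ImageFolder`, which the loading code below uses, infers labels from the directory layout: each class lives in its own subdirectory, and class indices follow the sorted subdirectory names. The following sketch mimics that convention with the standard library only. The folder names `ants` and `bees`, the file name, and the helper `find_classes_sketch` are assumptions for illustration, not taken from the dataset archive.

```python
import os
import tempfile


def find_classes_sketch(root):
    # Mimics the ImageFolder convention: one subdirectory per class,
    # with class indices assigned in sorted name order.
    classes = sorted(
        entry.name for entry in os.scandir(root) if entry.is_dir()
    )
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    return classes, class_to_idx


with tempfile.TemporaryDirectory() as root:
    # Hypothetical layout: <root>/train/<class name>/<image file>
    for class_name in ("bees", "ants"):
        class_dir = os.path.join(root, "train", class_name)
        os.makedirs(class_dir)
        # Empty placeholder file standing in for an image.
        open(os.path.join(class_dir, "example.jpg"), "w").close()

    classes, class_to_idx = find_classes_sketch(os.path.join(root, "train"))

print(classes)
print(class_to_idx)
```

Because the order comes from sorting, the index assigned to each class does not depend on the order in which the folders were created.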

    # Data augmentation and normalization for training
    # Just normalization for validation
    data_transforms = {
        'train': transforms.Compose([
            transforms.RandomResizedCrop(224),
            transforms.RandomHorizontalFlip(),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
        'val': transforms.Compose([
            transforms.Resize(256),
            transforms.CenterCrop(224),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])
        ]),
    }

    data_dir = 'data/hymenoptera_data'
    image_datasets = {x: datasets.ImageFolder(os.path.join(data_dir, x),
                                              data_transforms[x])
                      for x in ['train', 'val']}
    dataloaders = {x: torch.utils.data.DataLoader(image_datasets[x], batch_size=4,
                                                  shuffle=True, num_workers=4)
                   for x in ['train', 'val']}
    dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'val']}
    class_names = image_datasets['train'].classes

    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

Visualize a few images

Let's visualize a few training images to get a feel for the data augmentations.

    def imshow(inp, title=None):
        """Imshow for Tensor."""
        inp = inp.numpy().transpose((1, 2, 0))
        mean = np.array([0.485, 0.456, 0.406])
        std = np.array([0.229, 0.224, 0.225])
        inp = std * inp + mean
        inp = np.clip(inp, 0, 1)
        plt.imshow(inp)
        if title is not None:
            plt.title(title)
        plt.pause(0.001)  # pause a bit so that plots are updated


    # Get a batch of training data
    inputs, classes = next(iter(dataloaders['train']))

    # Make a grid from batch
    out = torchvision.utils.make_grid(inputs)

    imshow(out, title=[class_names[x] for x in classes])
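The `imshow` helper multiplies by the standard deviation and adds the mean because `transforms.Normalize` computed `(x - mean) / std` per channel; displaying the image requires undoing that step. A minimal pure-Python sketch of the round trip for a single RGB pixel, where the pixel values and helper names are made up for illustration:

```python
MEAN = [0.485, 0.456, 0.406]
STD = [0.229, 0.224, 0.225]


def normalize(pixel):
    # Per-channel (x - mean) / std, as Normalize does for each channel.
    return [(x - m) / s for x, m, s in zip(pixel, MEAN, STD)]


def denormalize(pixel):
    # Inverse step used before plotting: std * x + mean.
    return [s * x + m for x, m, s in zip(pixel, MEAN, STD)]


def clip01(pixel):
    # Keep values in the [0, 1] range expected for float images.
    return [min(max(x, 0.0), 1.0) for x in pixel]


original = [0.2, 0.5, 0.9]  # hypothetical RGB pixel in [0, 1]
restored = clip01(denormalize(normalize(original)))
print(restored)
```

Up to floating-point rounding, `restored` matches `original`, which is why the plotted images look natural again.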

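Training the model

Now, let's write a general function to train a model. Here, we will illustrate:

- Scheduling the learning rate

- Saving the best model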
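Saving the best model means keeping a snapshot of the weights from the epoch with the highest validation accuracy. The snapshot should be a deep copy, since a plain reference would keep changing as training updates the weights in place. The sketch below shows the idea with an ordinary dictionary in place of a real `state_dict`; the weights and accuracy values are invented for illustration.

```python
import copy

# Stand-in for model.state_dict(): a mutable mapping of parameter values.
weights = {"fc.weight": [0.0, 0.0]}

best_acc = 0.0
best_weights = copy.deepcopy(weights)

# Hypothetical per-epoch validation accuracies.
val_accuracies = [0.80, 0.93, 0.91]

for epoch, acc in enumerate(val_accuracies):
    # Pretend a training step changed the weights in place.
    weights["fc.weight"][0] = float(epoch + 1)

    if acc > best_acc:
        best_acc = acc
        # Deep copy so later in-place updates do not alter the snapshot.
        best_weights = copy.deepcopy(weights)

print(best_acc, best_weights)
```

The snapshot keeps the values from the second epoch even though the live weights moved on afterwards.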

In the following, the parameter scheduler is an LR scheduler object from torch.optim.lr_scheduler.
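To make the schedule concrete: a step scheduler such as `lr_scheduler.StepLR` multiplies the learning rate by `gamma` once every `step_size` epochs, which corresponds to the closed form `lr = base_lr * gamma ** (epoch // step_size)`. The sketch below evaluates that formula in plain Python, assuming the values used later in this tutorial (`lr=0.001`, `step_size=7`, `gamma=0.1`); `step_lr` is a hypothetical helper, not part of PyTorch.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    # Closed form of a step decay schedule.
    return base_lr * gamma ** (epoch // step_size)


for epoch in (0, 6, 7, 13, 14, 21):
    lr = step_lr(base_lr=0.001, epoch=epoch, step_size=7, gamma=0.1)
    print(f"epoch {epoch:2d}: lr = {lr:.0e}")
```

With 25 epochs, the rate therefore drops at epochs 7, 14, and 21.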

    def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
        since = time.time()

        best_model_wts = copy.deepcopy(model.state_dict())
        best_acc = 0.0

        for epoch in range(num_epochs):
            print('Epoch {}/{}'.format(epoch, num_epochs - 1))
            print('-' * 10)

            # Each epoch has a training and validation phase
            for phase in ['train', 'val']:
                if phase == 'train':
                    model.train()  # Set model to training mode
                else:
                    model.eval()   # Set model to evaluate mode

                running_loss = 0.0
                running_corrects = 0

                # Iterate over data.
                for inputs, labels in dataloaders[phase]:
                    inputs = inputs.to(device)
                    labels = labels.to(device)

                    # zero the parameter gradients
                    optimizer.zero_grad()

                    # forward
                    # track history if only in train
                    with torch.set_grad_enabled(phase == 'train'):
                        outputs = model(inputs)
                        _, preds = torch.max(outputs, 1)
                        loss = criterion(outputs, labels)

                        # backward + optimize only if in training phase
                        if phase == 'train':
                            loss.backward()
                            optimizer.step()

                    # statistics
                    running_loss += loss.item() * inputs.size(0)
                    running_corrects += torch.sum(preds == labels.data)
                if phase == 'train':
                    scheduler.step()

                epoch_loss = running_loss / dataset_sizes[phase]
                epoch_acc = running_corrects.double() / dataset_sizes[phase]

                print('{} Loss: {:.4f} Acc: {:.4f}'.format(
                    phase, epoch_loss, epoch_acc))

                # deep copy the model
                if phase == 'val' and epoch_acc > best_acc:
                    best_acc = epoch_acc
                    best_model_wts = copy.deepcopy(model.state_dict())

            print()

        time_elapsed = time.time() - since
        print('Training complete in {:.0f}m {:.0f}s'.format(
            time_elapsed // 60, time_elapsed % 60))
        print('Best val Acc: {:4f}'.format(best_acc))

        # load best model weights
        model.load_state_dict(best_model_wts)
        return model
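One detail in `train_model` is worth spelling out: `nn.CrossEntropyLoss` returns the mean loss over a batch by default, so the loop multiplies it by the batch size before accumulating and divides by the dataset size at the end. This gives the per-sample average even when the last batch is smaller than the others. A small numeric sketch with made-up per-sample losses:

```python
# Hypothetical per-sample losses for a dataset of 6 samples, batch size 4.
sample_losses = [0.9, 0.7, 0.5, 0.3, 0.2, 0.4]
batch_size = 4

batches = [
    sample_losses[i:i + batch_size]
    for i in range(0, len(sample_losses), batch_size)
]

running_loss = 0.0
naive_sum = 0.0
for batch in batches:
    batch_mean = sum(batch) / len(batch)     # what a mean-reduced loss returns
    running_loss += batch_mean * len(batch)  # weighting used in train_model
    naive_sum += batch_mean                  # unweighted alternative

epoch_loss = running_loss / len(sample_losses)
naive_loss = naive_sum / len(batches)

print(f"weighted: {epoch_loss:.4f}, mean of batch means: {naive_loss:.4f}")
```

The weighted value equals the true average over all six samples, while the plain mean of batch means gives the short final batch too much influence.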

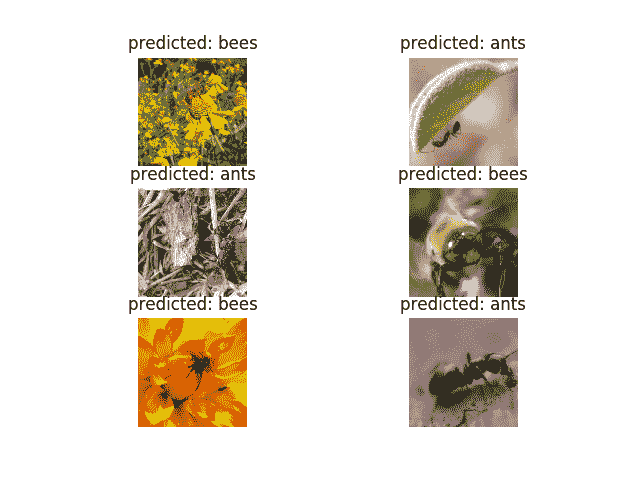

Visualizing the model predictions

A generic function to display predictions for a few images.

    def visualize_model(model, num_images=6):
        was_training = model.training
        model.eval()
        images_so_far = 0
        fig = plt.figure()

        with torch.no_grad():
            for i, (inputs, labels) in enumerate(dataloaders['val']):
                inputs = inputs.to(device)
                labels = labels.to(device)

                outputs = model(inputs)
                _, preds = torch.max(outputs, 1)

                for j in range(inputs.size()[0]):
                    images_so_far += 1
                    ax = plt.subplot(num_images//2, 2, images_so_far)
                    ax.axis('off')
                    ax.set_title(f'predicted: {class_names[preds[j]]}')
                    imshow(inputs.cpu().data[j])

                    if images_so_far == num_images:
                        model.train(mode=was_training)
                        return
            model.train(mode=was_training)

Finetuning the ConvNet

Load a pretrained model and reset the final fully connected layer.
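As a minimal sketch of what resetting the final layer involves, the example below uses a tiny stand-in module with an `fc` attribute instead of downloading ResNet-18; `TinyNet` and its layer sizes are hypothetical. It sizes the new layer from `len(class_names)` rather than hard-coding 2.

```python
import torch
import torch.nn as nn


class TinyNet(nn.Module):
    # Hypothetical stand-in for a pretrained network whose classifier
    # is stored in an attribute named `fc`, as in torchvision's ResNets.
    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
        )
        self.fc = nn.Linear(16, 1000)

    def forward(self, x):
        return self.fc(self.features(x))


class_names = ["ants", "bees"]

model = TinyNet()
num_ftrs = model.fc.in_features                   # input width of the old head
model.fc = nn.Linear(num_ftrs, len(class_names))  # new, randomly initialized head

with torch.no_grad():
    logits = model(torch.randn(4, 3, 224, 224))

print(logits.shape)
```

After the swap, the network produces one score per class for each image in the batch.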

    model_ft = models.resnet18(pretrained=True)
    num_ftrs = model_ft.fc.in_features
    # Here the size of each output sample is set to 2.
    # Alternatively, it can be generalized to nn.Linear(num_ftrs, len(class_names)).
    model_ft.fc = nn.Linear(num_ftrs, 2)

    model_ft = model_ft.to(device)

    criterion = nn.CrossEntropyLoss()

    # Observe that all parameters are being optimized
    optimizer_ft = optim.SGD(model_ft.parameters(), lr=0.001, momentum=0.9)

    # Decay LR by a factor of 0.1 every 7 epochs
    exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)

Train and evaluate

It should take around 15-25 minutes on a CPU. On a GPU, though, it takes about a minute.

    model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler,
                           num_epochs=25)

Out:

    Epoch 0/24
    ----------
    train Loss: 0.6303 Acc: 0.6926
    val Loss: 0.1492 Acc: 0.9346

    Epoch 1/24
    ----------
    train Loss: 0.5511 Acc: 0.7869
    val Loss: 0.2577 Acc: 0.8889

    Epoch 2/24
    ----------
    train Loss: 0.4885 Acc: 0.8115
    val Loss: 0.3390 Acc: 0.8758

    Epoch 3/24
    ----------
    train Loss: 0.5158 Acc: 0.7992
    val Loss: 0.5070 Acc: 0.8366

    Epoch 4/24
    ----------
    train Loss: 0.5878 Acc: 0.7992
    val Loss: 0.2706 Acc: 0.8758

    Epoch 5/24
    ----------
    train Loss: 0.4396 Acc: 0.8279
    val Loss: 0.2870 Acc: 0.8954

    Epoch 6/24
    ----------
    train Loss: 0.4612 Acc: 0.8238
    val Loss: 0.2809 Acc: 0.9150

    Epoch 7/24
    ----------
    train Loss: 0.4387 Acc: 0.8402
    val Loss: 0.1853 Acc: 0.9281

    Epoch 8/24
    ----------
    train Loss: 0.2998 Acc: 0.8648
    val Loss: 0.1926 Acc: 0.9085

    Epoch 9/24
    ----------
    train Loss: 0.3383 Acc: 0.9016
    val Loss: 0.1762 Acc: 0.9281

    Epoch 10/24
    ----------
    train Loss: 0.2969 Acc: 0.8730
    val Loss: 0.1872 Acc: 0.8954

    Epoch 11/24
    ----------
    train Loss: 0.3117 Acc: 0.8811
    val Loss: 0.1807 Acc: 0.9150

    Epoch 12/24
    ----------
    train Loss: 0.3005 Acc: 0.8770
    val Loss: 0.1930 Acc: 0.9085

    Epoch 13/24
    ----------
    train Loss: 0.3129 Acc: 0.8689
    val Loss: 0.2184 Acc: 0.9150

    Epoch 14/24
    ----------
    train Loss: 0.3776 Acc: 0.8607
    val Loss: 0.1869 Acc: 0.9216

    Epoch 15/24
    ----------
    train Loss: 0.2245 Acc: 0.9016
    val Loss: 0.1742 Acc: 0.9346

    Epoch 16/24
    ----------
    train Loss: 0.3105 Acc: 0.8607
    val Loss: 0.2056 Acc: 0.9216

    Epoch 17/24
    ----------
    train Loss: 0.2729 Acc: 0.8893
    val Loss: 0.1722 Acc: 0.9085

    Epoch 18/24
    ----------
    train Loss: 0.3210 Acc: 0.8730
    val Loss: 0.1977 Acc: 0.9281

    Epoch 19/24
    ----------
    train Loss: 0.3231 Acc: 0.8566
    val Loss: 0.1811 Acc: 0.9216

    Epoch 20/24
    ----------
    train Loss: 0.3206 Acc: 0.8648
    val Loss: 0.2033 Acc: 0.9150

    Epoch 21/24
    ----------
    train Loss: 0.2917 Acc: 0.8648
    val Loss: 0.1694 Acc: 0.9150

    Epoch 22/24
    ----------
    train Loss: 0.2412 Acc: 0.8852
    val Loss: 0.1757 Acc: 0.9216

    Epoch 23/24
    ----------
    train Loss: 0.2508 Acc: 0.8975
    val Loss: 0.1662 Acc: 0.9281

    Epoch 24/24
    ----------
    train Loss: 0.3283 Acc: 0.8566
    val Loss: 0.1761 Acc: 0.9281

    Training complete in 1m 10s
    Best val Acc: 0.934641

    visualize_model(model_ft)

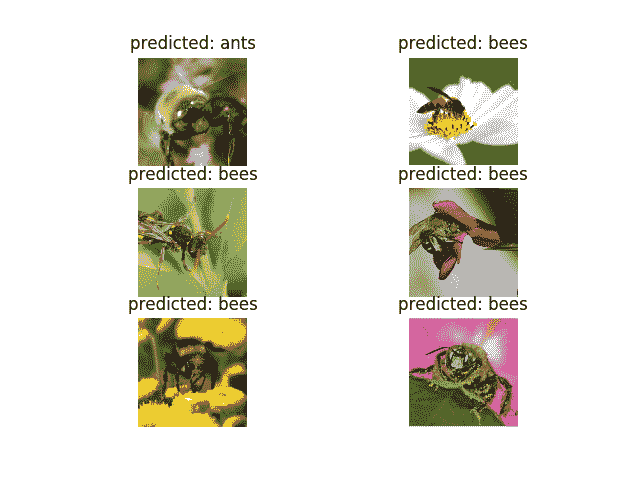

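ConvNet as fixed feature extractor

Here, we need to freeze all of the network except the final layer. We set requires_grad = False on the parameters to freeze them, so that their gradients are not computed in backward().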
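A quick way to convince yourself that freezing works is to run one backward pass and inspect the `.grad` attributes. The sketch below does this on a small stand-in model (two linear layers, chosen only for illustration): the frozen layer ends up with no gradient, while the new final layer does.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Hypothetical stand-in: `body` plays the pretrained part, `head` the new layer.
body = nn.Linear(10, 5)
head = nn.Linear(5, 2)
model = nn.Sequential(body, nn.ReLU(), head)

# Freeze the "pretrained" part only.
for param in body.parameters():
    param.requires_grad = False

inputs = torch.randn(4, 10)
labels = torch.tensor([0, 1, 0, 1])

loss = nn.CrossEntropyLoss()(model(inputs), labels)
loss.backward()

print(body.weight.grad)              # None: no gradient was computed
print(head.weight.grad is not None)  # True: the head is still trainable
```

Passing only the head's parameters to the optimizer, as the tutorial code does, then ensures that nothing else is updated.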

    model_conv = torchvision.models.resnet18(pretrained=True)
    for param in model_conv.parameters():
        param.requires_grad = False

    # Parameters of newly constructed modules have requires_grad=True by default
    num_ftrs = model_conv.fc.in_features
    model_conv.fc = nn.Linear(num_ftrs, 2)

    model_conv = model_conv.to(device)

    criterion = nn.CrossEntropyLoss()

    # Observe that only parameters of final layer are being optimized as
    # opposed to before.
    optimizer_conv = optim.SGD(model_conv.fc.parameters(), lr=0.001, momentum=0.9)

    # Decay LR by a factor of 0.1 every 7 epochs
    exp_lr_scheduler = lr_scheduler.StepLR(optimizer_conv, step_size=7, gamma=0.1)

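Train and evaluate

On a CPU this will take about half the time of the previous scenario. This is expected, as gradients don't need to be computed for most of the network. The forward pass, however, still has to be computed.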
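A rough count shows how little is being trained in this scenario. The figures below are assumptions rather than values from this tutorial: torchvision's ResNet-18 is taken to have 11,689,512 parameters with its original 1000-class head, and its final layer is taken to have 512 input features.

```python
# Assumed figures for torchvision's ResNet-18 (not stated in this tutorial).
TOTAL_PARAMS_1000_CLASSES = 11_689_512
FC_IN_FEATURES = 512


def linear_params(in_features, out_features):
    # Weights plus biases of a fully connected layer.
    return in_features * out_features + out_features


backbone_params = TOTAL_PARAMS_1000_CLASSES - linear_params(FC_IN_FEATURES, 1000)
new_head_params = linear_params(FC_IN_FEATURES, 2)
total_params = backbone_params + new_head_params

print(f"trainable: {new_head_params} of {total_params} "
      f"({100 * new_head_params / total_params:.4f}%)")
```

Under these assumptions only about a thousand parameters need gradients, although every layer still takes part in the forward pass.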

    model_conv = train_model(model_conv, criterion, optimizer_conv,
                             exp_lr_scheduler, num_epochs=25)

Out:

    Epoch 0/24
    ----------
    train Loss: 0.7258 Acc: 0.6148
    val Loss: 0.2690 Acc: 0.9020

    Epoch 1/24
    ----------
    train Loss: 0.5342 Acc: 0.7500
    val Loss: 0.1905 Acc: 0.9412

    Epoch 2/24
    ----------
    train Loss: 0.4262 Acc: 0.8320
    val Loss: 0.1903 Acc: 0.9412

    Epoch 3/24
    ----------
    train Loss: 0.4103 Acc: 0.8197
    val Loss: 0.2658 Acc: 0.8954

    Epoch 4/24
    ----------
    train Loss: 0.3938 Acc: 0.8115
    val Loss: 0.2871 Acc: 0.8954

    Epoch 5/24
    ----------
    train Loss: 0.4623 Acc: 0.8361
    val Loss: 0.1651 Acc: 0.9346

    Epoch 6/24
    ----------
    train Loss: 0.5348 Acc: 0.7869
    val Loss: 0.1944 Acc: 0.9477

    Epoch 7/24
    ----------
    train Loss: 0.3827 Acc: 0.8402
    val Loss: 0.1846 Acc: 0.9412

    Epoch 8/24
    ----------
    train Loss: 0.3655 Acc: 0.8443
    val Loss: 0.1873 Acc: 0.9412

    Epoch 9/24
    ----------
    train Loss: 0.3275 Acc: 0.8525
    val Loss: 0.2091 Acc: 0.9412

    Epoch 10/24
    ----------
    train Loss: 0.3375 Acc: 0.8320
    val Loss: 0.1798 Acc: 0.9412

    Epoch 11/24
    ----------
    train Loss: 0.3077 Acc: 0.8648
    val Loss: 0.1942 Acc: 0.9346

    Epoch 12/24
    ----------
    train Loss: 0.4336 Acc: 0.7787
    val Loss: 0.1934 Acc: 0.9346

    Epoch 13/24
    ----------
    train Loss: 0.3149 Acc: 0.8566
    val Loss: 0.2062 Acc: 0.9281

    Epoch 14/24
    ----------
    train Loss: 0.3617 Acc: 0.8320
    val Loss: 0.1761 Acc: 0.9412

    Epoch 15/24
    ----------
    train Loss: 0.3066 Acc: 0.8361
    val Loss: 0.1799 Acc: 0.9281

    Epoch 16/24
    ----------
    train Loss: 0.3952 Acc: 0.8443
    val Loss: 0.1666 Acc: 0.9346

    Epoch 17/24
    ----------
    train Loss: 0.3552 Acc: 0.8443
    val Loss: 0.1928 Acc: 0.9412

    Epoch 18/24
    ----------
    train Loss: 0.3106 Acc: 0.8648
    val Loss: 0.1964 Acc: 0.9346

    Epoch 19/24
    ----------
    train Loss: 0.3675 Acc: 0.8566
    val Loss: 0.1813 Acc: 0.9346

    Epoch 20/24
    ----------
    train Loss: 0.3565 Acc: 0.8320
    val Loss: 0.1758 Acc: 0.9346

    Epoch 21/24
    ----------
    train Loss: 0.2922 Acc: 0.8566
    val Loss: 0.2295 Acc: 0.9216

    Epoch 22/24
    ----------
    train Loss: 0.3283 Acc: 0.8402
    val Loss: 0.2267 Acc: 0.9281

    Epoch 23/24
    ----------
    train Loss: 0.2875 Acc: 0.8770
    val Loss: 0.1878 Acc: 0.9346

    Epoch 24/24
    ----------
    train Loss: 0.3172 Acc: 0.8689
    val Loss: 0.1849 Acc: 0.9412

    Training complete in 0m 34s
    Best val Acc: 0.947712

    visualize_model(model_conv)

    plt.ioff()
    plt.show()

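Further Learning

If you would like to learn more about the applications of transfer learning, check out our Quantized Transfer Learning for Computer Vision Tutorial.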

Total running time of the script: 1 minute 56.157 seconds
