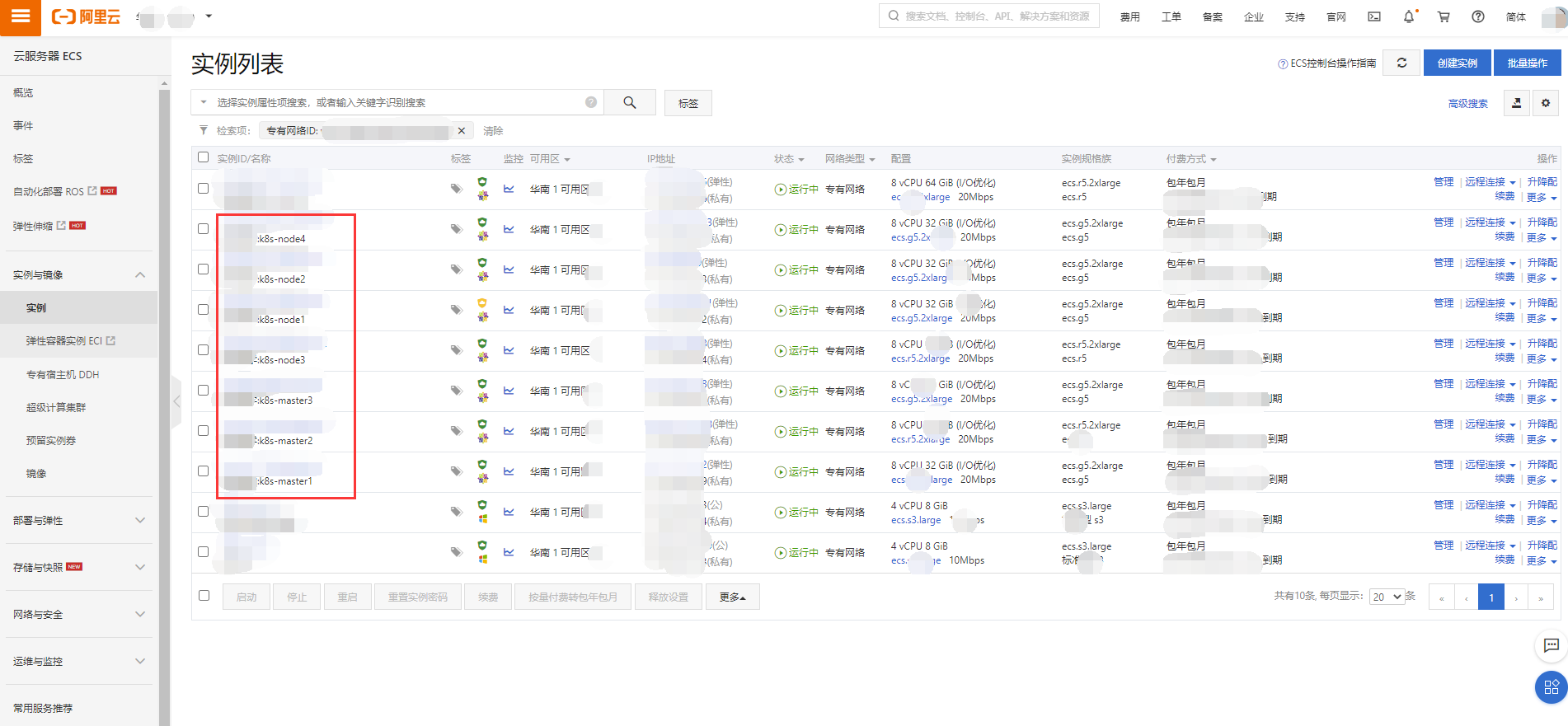

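In the previous article we used kubeadm to build an experimental Kubernetes cluster inside virtual machines. Production environments are usually far more demanding, so this time we build the cluster on real physical machines (I used 8 Alibaba Cloud servers).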

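I. Preparation

- Enable VT-x in the BIOS (for virtual machines, enable it in the VM settings)

- Unrestricted internet access, for example via a proxy (because of the GFW)

Provision 8 CentOS machines on Alibaba Cloud: 3 masters, 4 nodes, and 1 lb. The IPs below are made up for this article; substitute the IPs of your own machines: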

- 192.168.1.140 k8s-master-lb (VIP)

- 192.168.1.128 k8s-master1 (4 cores, 8 GB)

- 192.168.1.129 k8s-master2 (4 cores, 8 GB)

- 192.168.1.130 k8s-master3 (4 cores, 8 GB)

- 192.168.1.131 k8s-node1 (4 cores, 4 GB)

- 192.168.1.132 k8s-node2 (4 cores, 4 GB)

- 192.168.1.133 k8s-node3 (4 cores, 4 GB)

- 192.168.1.134 k8s-node4 (4 cores, 4 GB)

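Also add 192.168.1.140 k8s-master-lb to the hosts file on your local client machine so that you can reach the cluster.

Host trust

Configure hosts on every node so that all machines can reach each other by name: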

    $ cat <<EOF >> /etc/hosts
    192.168.1.128 k8s-master1
    192.168.1.129 k8s-master2
    192.168.1.130 k8s-master3
    192.168.1.131 k8s-node1
    192.168.1.132 k8s-node2
    192.168.1.133 k8s-node3
    192.168.1.134 k8s-node4
    EOF
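Appending with a heredoc adds the same lines again every time it is re-run. As a minimal sketch, the hypothetical helper below (add_host_entry is my own name, not part of any tool used in this article) appends an entry only when the hostname is not already mapped in the file:

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless an uncommented line already maps NAME.
# Hypothetical helper for illustration only.
add_host_entry() {
  hosts_file=$1
  host_ip=$2
  host_name=$3
  # Look for NAME as a whole field after the address column, skipping comments.
  if [ -f "$hosts_file" ] && awk -v n="$host_name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$hosts_file"; then
    return 0
  fi
  printf '%s %s\n' "$host_ip" "$host_name" >> "$hosts_file"
}
```

For example, add_host_entry /etc/hosts 192.168.1.128 k8s-master1 can be run repeatedly and leaves a single entry for k8s-master1.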

SSH key distribution

    ssh-keygen -t rsa
    for i in k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2 k8s-node3 k8s-node4; do ssh-copy-id -i ~/.ssh/id_rsa.pub "$i"; done

Test connectivity:

    ping k8s-master1
    # or
    ssh k8s-master1
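Checking one host by hand does not show whether every machine is reachable. The sketch below loops over a list of hosts with whatever probe command you pass in; check_hosts is a hypothetical helper name, and the probe (ping, ssh, or anything else) is supplied by the caller:

```shell
# check_hosts PROBE HOST...
# Runs "PROBE HOST" for each host, prints ok/FAIL per host, and
# returns non-zero if any probe failed. Hypothetical helper.
check_hosts() {
  probe=$1
  shift
  failed=0
  for host in "$@"; do
    # $probe is deliberately unquoted so "ping -c 1" splits into words.
    if $probe "$host" >/dev/null 2>&1; then
      echo "ok   $host"
    else
      echo "FAIL $host"
      failed=1
    fi
  done
  return "$failed"
}
```

A possible invocation is check_hosts "ping -c 1" k8s-master1 k8s-master2 k8s-master3 k8s-node1 k8s-node2 k8s-node3 k8s-node4.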

II. Deployment

Using k8s-ha-install

An experienced community member has packaged the highly available k8s deployment into an automation script: k8s-ha-install

git clone https://github.com/dotbalo/k8s-ha-install.git -b v1.13.x

All of the following steps are run inside the k8s-ha-install directory.

Edit create-config.sh and set the following variables to your own values. Mine, for example:

    #!/bin/bash

    #######################################
    # set variables below to create the config files, all files will create at ./config directory
    #######################################

    # master keepalived virtual ip address
    export K8SHA_VIP=192.168.1.140

    # master1 ip address
    export K8SHA_IP1=192.168.1.128

    # master2 ip address
    export K8SHA_IP2=192.168.1.129

    # master3 ip address
    export K8SHA_IP3=192.168.1.130

    # master keepalived virtual ip hostname
    export K8SHA_VHOST=k8s-master-lb

    # master1 hostname
    export K8SHA_HOST1=k8s-master1

    # master2 hostname
    export K8SHA_HOST2=k8s-master2

    # master3 hostname
    export K8SHA_HOST3=k8s-master3

    # master1 network interface name
    export K8SHA_NETINF1=ens33

    # master2 network interface name
    export K8SHA_NETINF2=ens33

    # master3 network interface name
    export K8SHA_NETINF3=ens33

    # keepalived auth_pass config
    export K8SHA_KEEPALIVED_AUTH=412f7dc3bfed32194d1600c483e10ad1d

    # calico reachable ip address (the server's gateway address)
    export K8SHA_CALICO_REACHABLE_IP=192.168.1.1

    # kubernetes CIDR pod subnet, if CIDR pod subnet is "172.168.0.0/16" please set to "172.168.0.0"
    export K8SHA_CIDR=172.168.0.0
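A typo in one of these addresses only surfaces much later, so a quick sanity check before generating the configs may help. The sketch below is my own addition, not part of k8s-ha-install: is_ipv4 and check_k8sha_ips are hypothetical helpers, and the check assumes the K8SHA_* variables are already set in the current shell.

```shell
# is_ipv4 ADDR: succeeds if ADDR is a dotted quad with four octets in 0-255.
is_ipv4() {
  case $1 in
    '' | *[!0-9.]* | .* | *. | *..*) return 1 ;;
  esac
  old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into positional parameters
  IFS=$old_ifs
  [ "$#" -eq 4 ] || return 1
  for octet in "$@"; do
    [ "${#octet}" -le 3 ] && [ "$octet" -le 255 ] || return 1
  done
  return 0
}

# check_k8sha_ips: reports every address variable that is unset or malformed.
# K8SHA_CIDR is included because the script expects it without the /16 suffix.
check_k8sha_ips() {
  rc=0
  for var in K8SHA_VIP K8SHA_IP1 K8SHA_IP2 K8SHA_IP3 \
             K8SHA_CALICO_REACHABLE_IP K8SHA_CIDR; do
    eval "value=\${$var:-}"
    if ! is_ipv4 "$value"; then
      echo "invalid or unset: $var='$value'" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Running check_k8sha_ips after setting the variables returns non-zero and names the offending variable if, say, K8SHA_CIDR was left as 172.168.0.0/16.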

Change the k8s version to your own. It appears in several places, and all of them must be changed. Mine, for example, is v1.14.2:

kubernetesVersion: v1.13.2

# change to

kubernetesVersion: v1.14.2
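Since the version string appears in several files, a search-and-replace is less error-prone than editing by hand. The following is a sketch under the assumption that every occurrence has exactly the form shown above (kubernetesVersion: followed by the version); set_k8s_version is a hypothetical helper, and any occurrence written differently would still need a manual edit.

```shell
# set_k8s_version DIR OLD NEW
# Rewrites "kubernetesVersion: OLD" to "kubernetesVersion: NEW" in every
# file under DIR that contains it. Hypothetical helper.
set_k8s_version() {
  search_dir=$1
  old_version=$2
  new_version=$3
  # Escape dots so "v1.13.2" is matched literally, not as "any character".
  old_re=$(printf '%s' "$old_version" | sed 's/\./\\./g')
  grep -rl --exclude-dir=.git "kubernetesVersion: $old_re" "$search_dir" |
    while IFS= read -r file; do
      # -i.bak works with both GNU and BSD sed; the backup is removed on success.
      sed -i.bak "s/kubernetesVersion: $old_re/kubernetesVersion: $new_version/" "$file" &&
        rm -f "$file.bak"
    done
}
```

From the k8s-ha-install directory this could be called as set_k8s_version . v1.13.2 v1.14.2, followed by grep -rn kubernetesVersion . to confirm nothing was missed.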

After making the changes, run:

$ ./create-config.sh

create kubeadm-config.yaml files success. config/k8s-master1/kubeadm-config.yaml

create kubeadm-config.yaml files success. config/k8s-master2/kubeadm-config.yaml

create kubeadm-config.yaml files success. config/k8s-master3/kubeadm-config.yaml

create keepalived files success. config/k8s-master1/keepalived/

create keepalived files success. config/k8s-master2/keepalived/

create keepalived files success. config/k8s-master3/keepalived/

create nginx-lb files success. config/k8s-master1/nginx-lb/

create nginx-lb files success. config/k8s-master2/nginx-lb/

create nginx-lb files success. config/k8s-master3/nginx-lb/

create calico.yaml file success. calico/calico.yaml

As you can see, a number of files were generated automatically. Next, distribute them:

# Set the hostname variables

export HOST1=k8s-master1

export HOST2=k8s-master2

export HOST3=k8s-master3

# Copy the kubeadm config file to /root/ on each master node

scp -r config/$HOST1/kubeadm-config.yaml $HOST1:/root/

scp -r config/$HOST2/kubeadm-config.yaml $HOST2:/root/

scp -r config/$HOST3/kubeadm-config.yaml $HOST3:/root/

# Copy the keepalived config files to /etc/keepalived/ on each master node

scp -r config/$HOST1/keepalived/* $HOST1:/etc/keepalived/

scp -r config/$HOST2/keepalived/* $HOST2:/etc/keepalived/

scp -r config/$HOST3/keepalived/* $HOST3:/etc/keepalived/

# Copy the nginx load balancer config to /root/ on each master node

scp -r config/$HOST1/nginx-lb $HOST1:/root/

scp -r config/$HOST2/nginx-lb $HOST2:/root/

scp -r config/$HOST3/nginx-lb $HOST3:/root/
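The nine scp commands above follow one pattern per host, so they can also be written as a loop. This is only a sketch: distribute_configs is a hypothetical function name, the master hostnames are the ones used in this article, and the optional argument (for example echo) is my own addition for previewing the commands without copying anything.

```shell
# distribute_configs [RUNNER]
# Copies the generated files to each master. Pass "echo" as RUNNER to
# print the scp commands instead of executing them. Hypothetical helper.
distribute_configs() {
  run=${1:-}
  for host in k8s-master1 k8s-master2 k8s-master3; do
    # $run is unquoted on purpose: when empty it disappears and scp runs directly.
    $run scp -r "config/$host/kubeadm-config.yaml" "$host:/root/" || return 1
    $run scp -r "config/$host/keepalived/"* "$host:/etc/keepalived/" || return 1
    $run scp -r "config/$host/nginx-lb" "$host:/root/" || return 1
  done
}
```

Calling distribute_configs echo first shows exactly what would be copied; distribute_configs with no argument performs the copy and stops at the first failure.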

nginx-lb configuration

Run on all master nodes:

cd

docker-compose --file=/root/nginx-lb/docker-compose.yaml up -d

docker-compose --file=/root/nginx-lb/docker-compose.yaml ps

keepalived configuration

On all master nodes, edit the following file:

$ vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

#vrrp_script chk_apiserver {

# script "/etc/keepalived/check_apiserver.sh"

# interval 2

# weight -5

# fall 3

# rise 2

#}

vrrp_instance VI_1 {

state MASTER

interface ens160

mcast_src_ip 192.168.1.128

virtual_router_id 51

priority 102

advert_int 2

authentication {

auth_type PASS

auth_pass 412f7dc3bfed32194d1600c483e10ad1d

}

virtual_ipaddress {

192.168.1.140

}

track_script {

chk_apiserver

}

}

What was changed:

- The vrrp_script section is commented out

- mcast_src_ip is set to each master node's own IP

- virtual_ipaddress is set to the VIP
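Because the file is edited by hand on three machines, it can be worth reading the values back before restarting the service. The sketch below uses two hypothetical awk-based helpers of my own (keepalived_value and keepalived_vips); they assume the simple one-setting-per-line layout shown above and are not a general keepalived.conf parser.

```shell
# keepalived_value FILE KEY
# Prints the value of the first uncommented "KEY value" line in FILE.
keepalived_value() {
  awk -v k="$2" '$1 == k { print $2; exit }' "$1"
}

# keepalived_vips FILE
# Prints each address listed inside the virtual_ipaddress { ... } block.
keepalived_vips() {
  awk '
    $1 == "virtual_ipaddress" { inside = 1; next }
    inside && $1 == "}"       { exit }
    inside && NF              { print $1 }
  ' "$1"
}
```

On each master, keepalived_value /etc/keepalived/keepalived.conf mcast_src_ip should print that node's own address, while keepalived_vips /etc/keepalived/keepalived.conf should print the same VIP on all three.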

Then restart the service:

systemctl restart keepalived

Test whether the VIP is reachable. It must respond before you move on to the next step:

ping 192.168.1.140 -c 4

Mind the order: first complete the nginx-lb steps, then comment out the relevant part of keepalived.conf, and only then restart keepalived!
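The VIP may take a few seconds to come up after keepalived restarts, so a single ping right away can fail even when the setup is correct. As a sketch, the hypothetical wait_for helper below retries any command a fixed number of times; the retry count and delay are arbitrary values you would choose yourself.

```shell
# wait_for TRIES DELAY CMD...
# Re-runs CMD until it succeeds, at most TRIES times, sleeping DELAY
# seconds between attempts. Returns non-zero if it never succeeded.
wait_for() {
  tries=$1
  delay=$2
  shift 2
  attempt=1
  while [ "$attempt" -le "$tries" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    # Skip the pause after the final failed attempt.
    [ "$attempt" -lt "$tries" ] && sleep "$delay"
    attempt=$((attempt + 1))
  done
  return 1
}
```

For instance, wait_for 10 3 ping -c 1 192.168.1.140 gives the VIP up to roughly half a minute to start answering before reporting failure.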

Start the master node

Once everything is configured, pull the images in advance (run on all nodes):

kubeadm config images pull --config /root/kubeadm-config.yaml

Then initialize k8s-master1:

kubeadm init --config /root/kubeadm-config.yaml

List the nodes that are currently up:

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady master 2m11s v1.14.2