I. Set the hostnames

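**Set a new hostname on all three nodes (run the matching command on each node):**

```shell
# on master
hostnamectl set-hostname master
# on slave1
hostnamectl set-hostname slave1
# on slave2
hostnamectl set-hostname slave2
```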

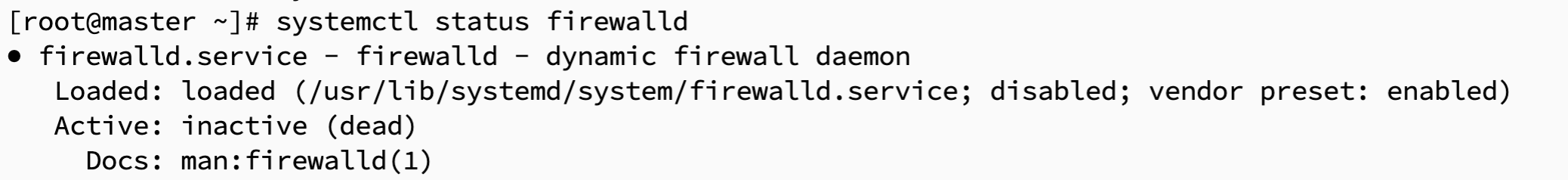

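II. Disable the firewall

Run these steps on all three nodes.

2.1 Stop the firewall

```shell
systemctl stop firewalld
```

2.2 Prevent the firewall from starting at boot

```shell
systemctl disable firewalld
```

2.3 Check that the firewall is stopped

```shell
systemctl status firewalld
```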
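`systemctl status` prints a long report. For a quick one-line check on each node, a small read-only sketch like the following can be used; it only queries the unit with `systemctl is-active` and `systemctl is-enabled`. The function name `firewall_report` is hypothetical.

```shell
#!/usr/bin/env bash
# firewall_report (hypothetical helper): print whether firewalld is running
# now and whether it is enabled at boot. Read-only; changes nothing.
firewall_report() {
  local active enabled
  # is-active prints "active" or "inactive"; it exits non-zero when stopped,
  # so "|| true" keeps the function safe under "set -e"
  active=$(systemctl is-active firewalld 2>/dev/null || true)
  # is-enabled prints "enabled" or "disabled" (nothing if the unit file is missing)
  enabled=$(systemctl is-enabled firewalld 2>/dev/null || true)
  echo "firewalld active=${active:-unknown} enabled=${enabled:-unknown}"
}

firewall_report
```

After steps 2.1 and 2.2 the line should read `firewalld active=inactive enabled=disabled`.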

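III. Configure IP address mapping

3.1 Edit the hosts file on the master node

```shell
vim /etc/hosts
```

3.2 Add the IP address and hostname of all three nodes

```text
192.168.31.164 master
192.168.31.66 slave1
192.168.31.186 slave2
```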
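Instead of editing /etc/hosts in vim, the entries can also be appended from the shell. The sketch below skips any hostname that already appears on a non-comment line, so running it twice does not create duplicates. The helper name `add_host` is hypothetical, and the IP addresses in the usage comments are the ones from step 3.2; replace them with your own.

```shell
#!/usr/bin/env bash
# add_host FILE IP NAME (hypothetical helper): append "IP NAME" to FILE
# unless a non-comment line already lists NAME as a hostname field.
add_host() {
  local file=$1 ip=$2 name=$3
  # awk exits 0 if NAME is found in field 2 or later of any non-comment line
  if awk -v n="$name" '!/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' "$file" 2>/dev/null; then
    echo "skip: $name already present in $file"
  else
    echo "$ip $name" >> "$file"
  fi
}

# Example for this cluster (run as root on master):
# add_host /etc/hosts 192.168.31.164 master
# add_host /etc/hosts 192.168.31.66 slave1
# add_host /etc/hosts 192.168.31.186 slave2
```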

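3.3 Copy the master's hosts file to the other nodes

`sync` only flushes file system buffers to disk; it does not copy files to other machines. Passwordless login is not set up yet at this point, so copy the file with `scp` and enter the root password when prompted:

```shell
scp /etc/hosts slave1:/etc/hosts
scp /etc/hosts slave2:/etc/hosts
```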

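IV. Configure passwordless SSH login

4.1 On every node, generate a key pair: a public key (id_rsa.pub) and a private key (id_rsa). Press Enter at each prompt to accept the defaults.

```shell
ssh-keygen -t rsa
```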

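4.2 Copy each node's public key to the master node

**Note: run this on every node, including master itself.**

```shell
ssh-copy-id master
```

Then, on master, distribute authorized_keys to slave1 and slave2. (xsync is a user-written distribution script, not a system command; it must already exist on master.)

```shell
xsync ~/.ssh/authorized_keys
```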
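The `xsync` command used in this guide is not part of Linux or Hadoop. Below is a minimal sketch of such a distribution helper, written as a bash function built on rsync over ssh. It assumes rsync is installed on every node and that the targets are slave1 and slave2 (overridable through the hypothetical `XSYNC_HOSTS` variable); it is an illustration, not the author's original script.

```shell
#!/usr/bin/env bash
# xsync (sketch, not a standard command): copy files or directories to the
# same absolute path on the other nodes with rsync over ssh.
# ASSUMPTIONS: rsync is installed on every node; targets default to
# slave1 and slave2 and can be overridden with XSYNC_HOSTS="h1 h2".
xsync() {
  if [ $# -lt 1 ]; then
    echo "usage: xsync FILE_OR_DIR..." >&2
    return 1
  fi
  local host path dir name
  for host in ${XSYNC_HOSTS:-slave1 slave2}; do
    echo "==== $host ===="
    for path in "$@"; do
      if [ ! -e "$path" ]; then
        echo "skip: $path does not exist" >&2
        continue
      fi
      # Absolute parent directory, so the copy lands at the same path remotely
      dir=$(cd -P "$(dirname "$path")" && pwd)
      name=$(basename "$path")
      # Create the parent directory remotely, then copy with permissions kept (-a)
      ssh "$host" "mkdir -p '$dir'" && rsync -a "$dir/$name" "$host:$dir/"
    done
  done
}
```

To use it, add the function to root's ~/.bashrc on master and run `source ~/.bashrc`; `xsync ~/.ssh/authorized_keys` then copies the file to /root/.ssh/ on slave1 and slave2.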

4.3 Test passwordless login to the other nodes

```shell
ssh master   # type exit to return to the previous shell
ssh slave1
ssh slave2
```

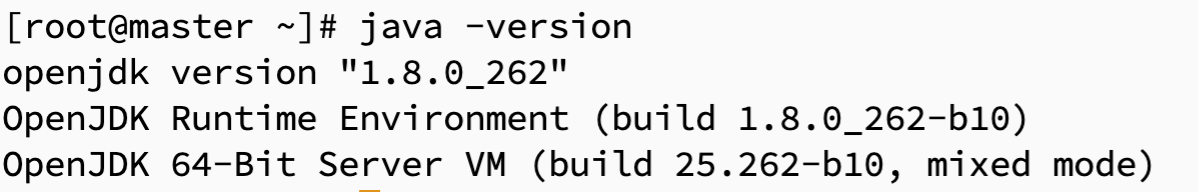
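To check all nodes without opening interactive shells, a sketch like the following can help. `BatchMode=yes` makes ssh fail instead of asking for a password, so any host that still needs a password is reported as FAIL. It assumes each host key has already been accepted once (the manual logins in 4.3 do that); otherwise batch mode also fails. The helper name `check_ssh` is hypothetical.

```shell
#!/usr/bin/env bash
# check_ssh HOST... (hypothetical helper): report, for each host, whether a
# non-interactive ssh login works.
check_ssh() {
  local h
  for h in "$@"; do
    # -n: read stdin from /dev/null; BatchMode: never prompt for a password
    if ssh -n -o BatchMode=yes -o ConnectTimeout=5 "$h" true 2>/dev/null; then
      echo "OK   $h"
    else
      echo "FAIL $h"
    fi
  done
}

# Run on each node:
# check_ssh master slave1 slave2
```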

V. Install the JDK

5.1 Download the JDK package

```shell
wget https://download.java.net/openjdk/jdk8u41/ri/openjdk-8u41-b04-linux-x64-14_jan_2020.tar.gz
```

5.2 Extract the JDK package

```shell
tar -zxvf openjdk-8u41-b04-linux-x64-14_jan_2020.tar.gz -C /usr/local/src/
```

5.3 Rename the extracted JDK directory

```shell
mv /usr/local/src/java-se-8u41-ri /usr/local/src/java
```

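5.4 Configure the Java environment variables

Open /etc/profile:

```shell
vim /etc/profile
```

Append the following lines:

```shell
# JAVA_HOME
export JAVA_HOME=/usr/local/src/java
export PATH=$PATH:$JAVA_HOME/bin
export JRE_HOME=/usr/local/src/java/jre
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib
```

Reload the profile so the variables take effect in the current shell:

```shell
source /etc/profile
```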
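As a non-interactive alternative to editing /etc/profile in vim, the same block can be appended with a heredoc. The sketch below (the function name `append_java_env` is hypothetical) first looks for the `# JAVA_HOME` marker line, so running it twice does not add the lines twice.

```shell
#!/usr/bin/env bash
# append_java_env FILE (hypothetical helper): append the JAVA_HOME block
# from step 5.4 to FILE once; a second run finds the marker and skips.
append_java_env() {
  local file=${1:-/etc/profile}
  if grep -q '^# JAVA_HOME$' "$file" 2>/dev/null; then
    echo "skip: JAVA_HOME block already in $file"
    return 0
  fi
  # Quoted 'EOF' keeps $PATH etc. literal, so they expand at login, not now
  cat >> "$file" <<'EOF'
# JAVA_HOME
export JAVA_HOME=/usr/local/src/java
export PATH=$PATH:$JAVA_HOME/bin
export JRE_HOME=/usr/local/src/java/jre
export CLASSPATH=.:$CLASSPATH:$JAVA_HOME/lib:$JRE_HOME/lib
EOF
}

# Usage (as root): append_java_env /etc/profile && source /etc/profile
```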

5.5 Check that Java is installed

```shell
java -version
```

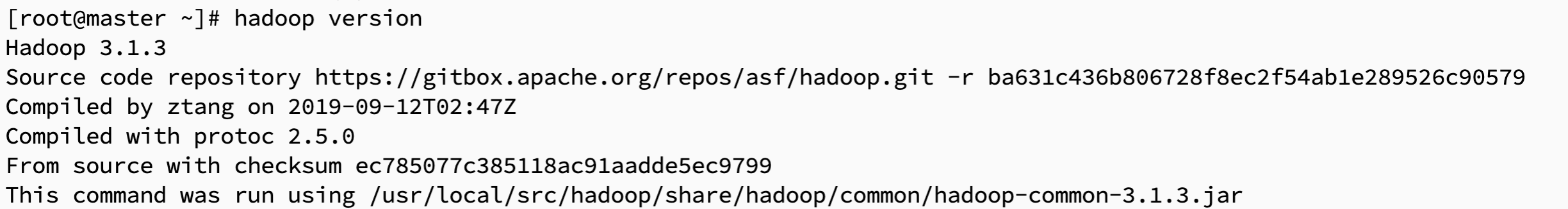

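VI. Install Hadoop

6.1 Download the Hadoop package

```shell
wget https://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.1.3/hadoop-3.1.3.tar.gz
```

Mirrors usually keep only current releases. If this link no longer works, the same file is available from the Apache archive: https://archive.apache.org/dist/hadoop/common/hadoop-3.1.3/hadoop-3.1.3.tar.gz

6.2 Extract the Hadoop package and rename the directory

```shell
tar -zxvf hadoop-3.1.3.tar.gz -C /usr/local/src/
mv /usr/local/src/hadoop-3.1.3 /usr/local/src/hadoop
```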

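6.3 Configure the Hadoop environment variables

Open /etc/profile:

```shell
vim /etc/profile
```

Append the following lines:

```shell
# HADOOP_HOME
export HADOOP_HOME=/usr/local/src/hadoop
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
```

Reload the profile:

```shell
source /etc/profile
```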

6.4 Edit yarn-env.sh and hadoop-env.sh

Set JAVA_HOME for Hadoop and YARN. Because this guide runs every daemon as root, the Hadoop 3 start scripts also require the `*_USER` variables; without them, start-dfs.sh and start-yarn.sh abort with an error about operating as root.

```shell
echo "export JAVA_HOME=/usr/local/src/java" >> /usr/local/src/hadoop/etc/hadoop/yarn-env.sh
echo "export JAVA_HOME=/usr/local/src/java" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HDFS_NAMENODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HDFS_DATANODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HDFS_SECONDARYNAMENODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export YARN_RESOURCEMANAGER_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export YARN_NODEMANAGER_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HDFS_JOURNALNODE_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HDFS_ZKFC_USER=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
echo "export HADOOP_SHELL_EXECNAME=root" >> /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh
```
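Each `echo ... >>` line in 6.4 appends again every time it runs, so repeating the step leaves duplicate exports in the env files. A sketch of an idempotent variant, using a hypothetical helper `set_env_lines` that appends `export VAR=VALUE` only when no line in the file already starts with `export VAR=`:

```shell
#!/usr/bin/env bash
# set_env_lines FILE VAR=VALUE... (hypothetical helper): append
# "export VAR=VALUE" to FILE for each pair whose VAR is not exported yet.
set_env_lines() {
  local file=$1; shift
  local pair var
  for pair in "$@"; do
    var=${pair%%=*}   # text before the first "=" is the variable name
    if ! grep -q "^export ${var}=" "$file" 2>/dev/null; then
      echo "export ${pair}" >> "$file"
    fi
  done
}

# Usage with the values from step 6.4:
# set_env_lines /usr/local/src/hadoop/etc/hadoop/yarn-env.sh JAVA_HOME=/usr/local/src/java
# set_env_lines /usr/local/src/hadoop/etc/hadoop/hadoop-env.sh \
#   JAVA_HOME=/usr/local/src/java HDFS_NAMENODE_USER=root HDFS_DATANODE_USER=root \
#   HDFS_SECONDARYNAMENODE_USER=root YARN_RESOURCEMANAGER_USER=root \
#   YARN_NODEMANAGER_USER=root HDFS_JOURNALNODE_USER=root HDFS_ZKFC_USER=root \
#   HADOOP_SHELL_EXECNAME=root
```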

6.5 Check that Hadoop is installed

```shell
hadoop version
```

VII. Configure Hadoop

7.1 Edit core-site.xml

```shell
vim /usr/local/src/hadoop/etc/hadoop/core-site.xml
```

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/src/hadoop/tmp</value>
  </property>
</configuration>
```

7.2 Edit hdfs-site.xml

```shell
vim /usr/local/src/hadoop/etc/hadoop/hdfs-site.xml
```

```xml
<configuration>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>/usr/local/src/hadoop/tmp/hdfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>/usr/local/src/hadoop/tmp/hdfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>slave2:50090</value>
  </property>
</configuration>
```

Note: YARN settings such as `yarn.resourcemanager.address` or `yarn.nodemanager.aux-services` belong in yarn-site.xml, not hdfs-site.xml. They are not needed here: 7.3 already sets the aux service, the ShuffleHandler class and the web UI address, and with `yarn.resourcemanager.hostname` set to master the other ResourceManager addresses default to master:8032 (client), master:8030 (scheduler), master:8031 (resource tracker) and master:8033 (admin).

7.3 Edit yarn-site.xml

```shell
vim /usr/local/src/hadoop/etc/hadoop/yarn-site.xml
```

```xml
<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
  <!-- The property name embeds the service name, so it is mapreduce_shuffle, not mapreduce.shuffle -->
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.log-aggregation-enable</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>106800</value>
  </property>
  <property>
    <name>yarn.nodemanager.remote-app-log-dir</name>
    <value>/usr/local/src/hadoop/logs</value>
  </property>
</configuration>
```

7.4 Edit mapred-site.xml

```shell
vim /usr/local/src/hadoop/etc/hadoop/mapred-site.xml
```

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>slave2:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>slave2:19888</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.intermediate-done-dir</name>
    <value>/usr/local/src/hadoop/tmp/mr-history/tmp</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.done-dir</name>
    <value>/usr/local/src/hadoop/tmp/mr-history/done</value>
  </property>
</configuration>
```
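A missing closing tag in any of these files stops the daemons from starting, so it can be worth checking the four files before distributing them. The sketch below assumes `xmllint` (from libxml2) is installed; the function name `check_site_xml` is hypothetical.

```shell
#!/usr/bin/env bash
# check_site_xml DIR (hypothetical helper): verify that the four *-site.xml
# files edited in section VII exist and are well-formed XML.
# ASSUMPTION: xmllint (from libxml2) is installed on the node.
check_site_xml() {
  local dir=${1:-/usr/local/src/hadoop/etc/hadoop}
  local f rc=0
  for f in core-site.xml hdfs-site.xml yarn-site.xml mapred-site.xml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f"
      rc=1
    elif xmllint --noout "$dir/$f"; then
      echo "ok:      $f"
    else
      # xmllint has already printed the line and column of the parse error
      echo "broken:  $f"
      rc=1
    fi
  done
  return $rc
}

# Usage on master: check_site_xml /usr/local/src/hadoop/etc/hadoop
```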

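7.5 Edit workers

```shell
vim /usr/local/src/hadoop/etc/hadoop/workers
```

List one hostname per line, replacing the default localhost entry:

```text
master
slave1
slave2
```

Note: Hadoop 3.0 and later read the workers file; versions before 3.0 use the slaves file instead.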

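VIII. Synchronize the nodes

On master, copy the Hadoop and JDK directories and /etc/profile to slave1 and slave2:

```shell
xsync /usr/local/src/hadoop
xsync /usr/local/src/java
xsync /etc/profile
```

Then reload the environment on every node (or log in again):

```shell
source /etc/profile
```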

IX. Format and start Hadoop

9.1 Format the NameNode

Run this on master only, and only once:

```shell
hdfs namenode -format
```
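Formatting again generates a new clusterID. DataNodes that still hold the old ID in their data directories then refuse to register, typically with an "Incompatible clusterIDs" error in the DataNode log. A guard like the following sketch (the function name `format_namenode_once` is hypothetical) only formats when the name directory from 7.2 has no `current/VERSION` file yet, which is the file a completed format writes.

```shell
#!/usr/bin/env bash
# format_namenode_once DIR (hypothetical helper): run "hdfs namenode -format"
# only if the NameNode metadata directory has not been formatted yet.
# DIR defaults to dfs.namenode.name.dir from hdfs-site.xml (step 7.2).
format_namenode_once() {
  local name_dir=${1:-/usr/local/src/hadoop/tmp/hdfs/name}
  # A formatted name directory contains current/VERSION (it holds the clusterID)
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "already formatted: $name_dir"
    return 0
  fi
  hdfs namenode -format
}

# Usage on master: format_namenode_once
```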

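9.2 Start the cluster and check the processes with jps

Run start-all.sh on master, then run jps on each node to list the running Hadoop daemons:

```shell
start-all.sh
jps
```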
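With the configuration above (master is also listed in workers, and the SecondaryNameNode runs on slave2), `jps` is expected to show roughly: on master, NameNode, DataNode, ResourceManager and NodeManager; on slave1, DataNode and NodeManager; on slave2, DataNode, NodeManager and SecondaryNameNode. start-all.sh does not start the JobHistoryServer configured in 7.4; it can be started on slave2 with `mapred --daemon start historyserver`. The sketch below uses a hypothetical helper `check_daemons` that reads jps output on stdin and reports any expected daemon that is missing.

```shell
#!/usr/bin/env bash
# check_daemons NAME... (hypothetical helper): read `jps` output on stdin and
# print every expected daemon NAME that does not appear in it.
# grep -w matches whole words, so "NameNode" does not match "SecondaryNameNode".
check_daemons() {
  local out name rc=0
  out=$(cat)
  for name in "$@"; do
    if ! grep -qw "$name" <<< "$out"; then
      echo "missing: $name"
      rc=1
    fi
  done
  return $rc
}

# Usage, one line per node:
# master: jps | check_daemons NameNode DataNode ResourceManager NodeManager
# slave1: jps | check_daemons DataNode NodeManager
# slave2: jps | check_daemons DataNode NodeManager SecondaryNameNode
```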