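Introduction

`torchvision.transforms.CenterCrop()` is a center-crop transform: it crops an image to the specified `size`, keeping the region around the center of the image.

Official API description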

_Crops the given image at the center. If the image is torch Tensor, it is expected to have […, H, W] shape, where … means an arbitrary number of leading dimensions. If image size is smaller than output size along any edge, image is padded with 0 and then center cropped._

_Args:_

_size (sequence or int): Desired output size of the crop. If size is an int instead of sequence like (h, w), a square crop (size, size) is made. If provided a sequence of length 1, it will be interpreted as (size[0], size[0])._

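Prerequisites

Demo code

Import libraries

    # Import libraries
    import torchvision.transforms as transforms
    import torchvision as tv
    import matplotlib.pyplot as plt
    import matplotlib

    # Font settings: these two lines must be set manually.
    # They are only needed to render Chinese text in plot titles.
    matplotlib.rcParams['font.sans-serif'] = ['SimHei']
    matplotlib.rcParams['axes.unicode_minus'] = False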

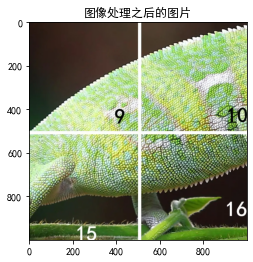

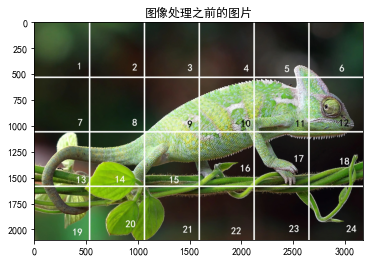

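Center-crop the image to 1000 × 1000 pixels

    # Center crop to a 1000 x 1000 square
    transform = transforms.Compose([transforms.CenterCrop(1000)])

    # Read the image as a uint8 tensor of shape [C, H, W]
    picTensor = tv.io.read_image('testpic.png')

    # Apply the transform
    picTransformed = transform(picTensor)

    # Print the shapes before and after cropping
    print('Image size before cropping:', picTensor.shape)
    print('Image size after cropping:', picTransformed.shape)

    # Display the original image (matplotlib expects [H, W, C])
    picNumpy = picTensor.permute(1, 2, 0).numpy()
    plt.imshow(picNumpy)
    plt.title('Image before cropping')
    plt.show()

    # Display the cropped image
    picNumpy = picTransformed.permute(1, 2, 0).numpy()
    plt.imshow(picNumpy)
    plt.title('Image after cropping')
    plt.show()

Output:

    Image size before cropping: torch.Size([3, 2100, 3174])
    Image size after cropping: torch.Size([3, 1000, 1000])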

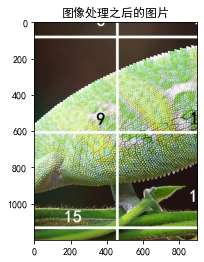

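Crop the image to a specified size=(1200, 900)

Here `size` is given as (height, width), so the result is 1200 pixels high and 900 pixels wide.

    # Center crop to (height, width) = (1200, 900)
    transform = transforms.Compose([transforms.CenterCrop(size=(1200, 900))])

    # Read the image as a uint8 tensor of shape [C, H, W]
    picTensor = tv.io.read_image('testpic.png')

    # Apply the transform
    picTransformed = transform(picTensor)

    # Print the shapes before and after cropping
    print('Image size before cropping:', picTensor.shape)
    print('Image size after cropping:', picTransformed.shape)

    # Display the original image (matplotlib expects [H, W, C])
    picNumpy = picTensor.permute(1, 2, 0).numpy()
    plt.imshow(picNumpy)
    plt.show()

    # Display the cropped image
    picNumpy = picTransformed.permute(1, 2, 0).numpy()
    plt.imshow(picNumpy)
    plt.show()

Output:

    Image size before cropping: torch.Size([3, 2100, 3174])
    Image size after cropping: torch.Size([3, 1200, 900])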