鸢尾花种类预测

1.获取数据集

from sklearn.datasets import load_irisiris = load_iris()print("鸢尾花数据集的返回值:\n", iris)# 返回值是一个继承自字典的Bench print("鸢尾花的特征值:\n", iris["data"]) print("鸢尾花的目标值:\n", iris.target) print("鸢尾花特征的名字:\n", iris.feature_names) print("鸢尾花目标值的名字:\n", iris.target_names) print("鸢尾花的描述:\n", iris.DESCR)

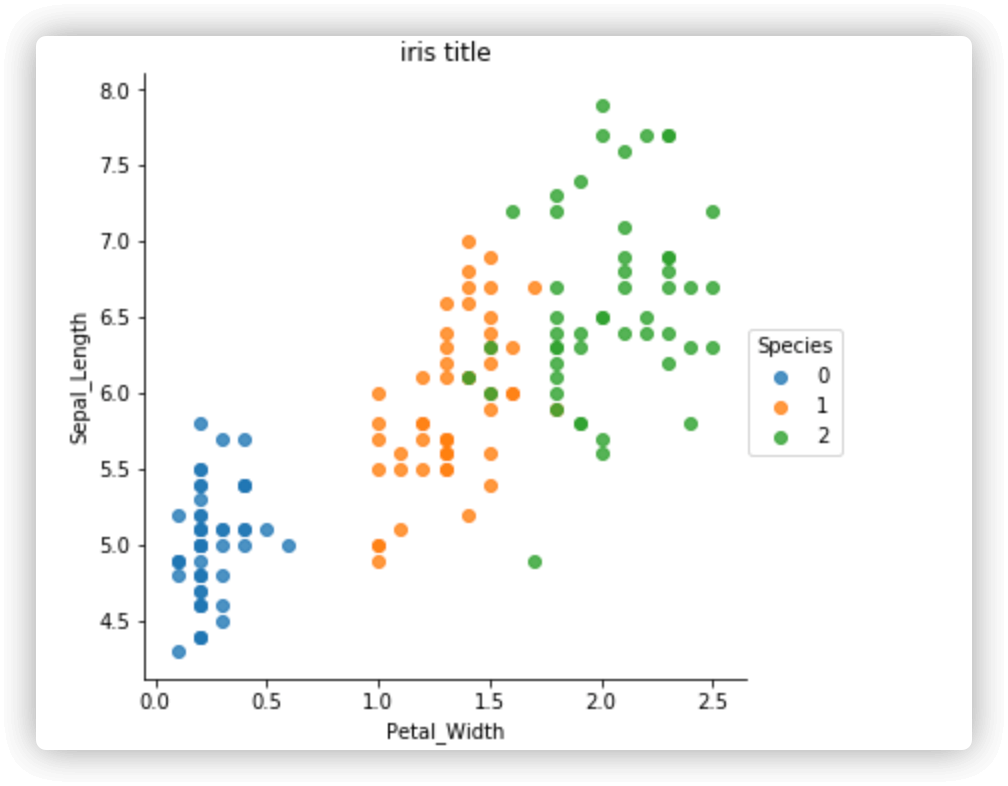

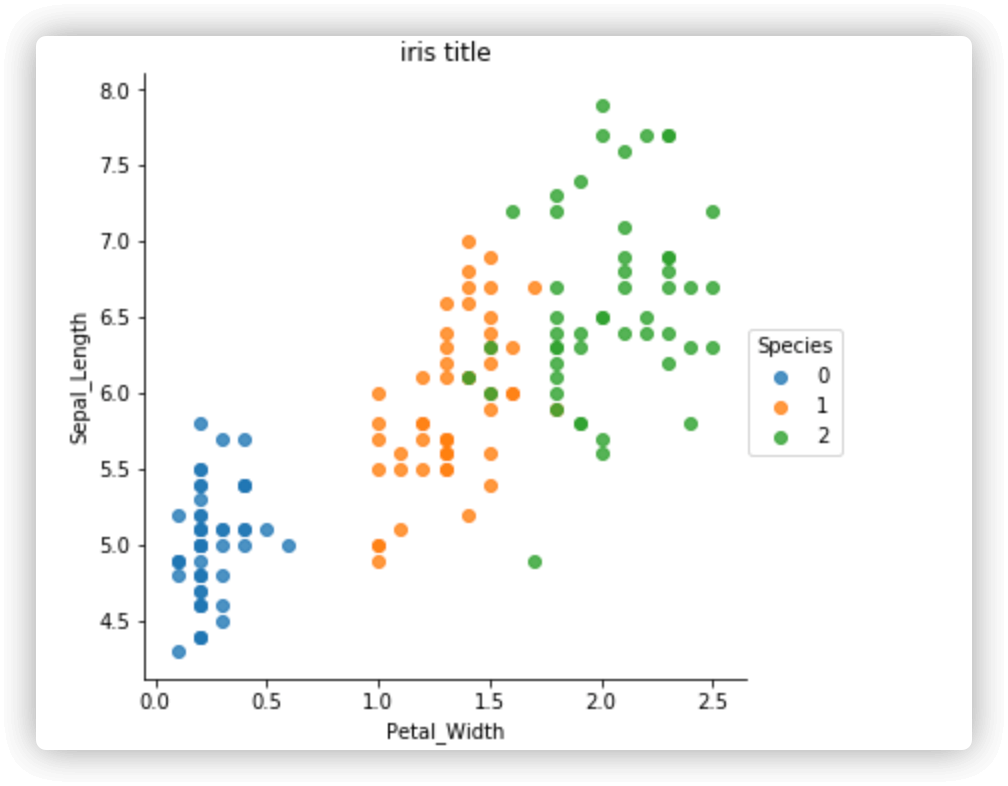

2.基本数据处理

import seaborn as snsimport matplotlib.pyplot as pltimport pandas as pdiris_d = pd.DataFrame(iris['data'], columns = ['Sepal_Length', 'Sepal_Width', 'Petal_Length', 'Petal_Width']) iris_d['Species'] = iris.targetdef plot_iris(iris, col1, col2): sns.lmplot(x = col1, y = col2, data = iris, hue = "Species", fit_reg = False) plt.xlabel(col1) plt.ylabel(col2) plt.title('鸢尾花种类分布图') plt.show()plot_iris(iris_d, 'Petal_Width', 'Sepal_Length')

from sklearn.model_selection import train_test_splitx_train, x_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size = 0.2and, random_state=22)

3.特征工程

from sklearn.preprocessing import StandardScalerfrom sklearn.neighbors import KNeighborsClassifiertransfer = StandardScaler()transfer.fit_transform(x_train)transfer.transform(x_test)

4.机器学习

estimator = KNeighborsClassifier(n_neighbors=9)estimator.fit(x_train, y_train)

5.模型评估

# 方法1:比对真实值和预测值y_predict = estimator.predict(x_test) print("预测结果为:\n", y_predict) print("比对真实值和预测值:\n", y_predict == y_test) # 方法2:直接计算准确率score = estimator.score(x_test, y_test) print("准确率为:\n", score)# Output:预测结果为: [0 2 1 2 1 1 1 2 1 0 2 1 2 2 0 2 1 1 1 1 0 2 0 1 2 0 2 2 2 2]比对真实值和预测值: [ True True True True True True True True True True True True True True True True True True False True True True True True True True True True True True]准确率为: 0.9666666666666667

6.鸢尾花案例增加K值调优

# 4.2 模型选择与调优——网格搜索和交叉验证# 准备要调的超参数from sklearn.model_selection import GridSearchCVestimator = KNeighborsClassifier()param_dict = {"n_neighbors": [1, 3, 5]}estimator = GridSearchCV(estimator, param_grid=param_dict, cv=3)estimator.fit(x_train, y_train)# 方法a:比对预测结果和真实值y_predict = estimator.predict(x_test)print("比对预测结果和真实值:\n", y_predict == y_test) # 方法b:直接计算准确率score = estimator.score(x_test, y_test) print("直接计算准确率:\n", score)print("在交叉验证中验证的最好结果:\n", estimator.best_score_) print("最好的参数模型:\n", estimator.best_estimator_) print("每次交叉验证后的准确率结果:\n", estimator.cv_results_)# Output:比对预测结果和真实值: [ True True True True True True True True True True True True True True True True True True False True True True True True True True True True True True]直接计算准确率: 0.9666666666666667在交叉验证中验证的最好结果: 0.9666666666666667最好的参数模型: KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski', metric_params=None, n_jobs=1, n_neighbors=3, p=2, weights='uniform')每次交叉验证后的准确率结果: {'mean_fit_time': array([0.00049504, 0.00028332, 0.00028261]), 'std_fit_time': array([1.04830516e-04, 4.61627189e-06, 2.72766786e-06]), 'mean_score_time': array([0.00073298, 0.00054653, 0.00069141]), 'std_score_time': array([5.69916273e-05, 9.40939864e-06, 9.60944202e-05]), 'param_n_neighbors': masked_array(data=[1, 3, 5], mask=[False, False, False], fill_value='?', dtype=object), 'params': [{'n_neighbors': 1}, {'n_neighbors': 3}, {'n_neighbors': 5}], 'split0_test_score': array([1. , 0.97560976, 0.97560976]), 'split1_test_score': array([0.9 , 0.975, 0.975]), 'split2_test_score': array([0.94871795, 0.94871795, 0.94871795]), 'mean_test_score': array([0.95 , 0.96666667, 0.96666667]), 'std_test_score': array([0.04108569, 0.01245693, 0.01245693]), 'rank_test_score': array([3, 1, 1], dtype=int32), 'split0_train_score': array([1. , 0.93670886, 0.97468354]), 'split1_train_score': array([1. , 0.9875, 0.9625]), 'split2_train_score': array([1. , 0.96296296, 0.97530864]), 'mean_train_score': array([1. , 0.96239061, 0.97083073]), 'std_train_score': array([0. , 0.02073935, 0.00589624])}