### I. Three AlertManager concepts

#### 1. Grouping

Grouping is how AlertManager bundles alerts of the same kind together, merging multiple alerts into a single notification.
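As a rough illustration of what grouping does, the sketch below buckets alerts by the values of a chosen set of labels, which is conceptually what `group_by` asks for. It is not AlertManager's implementation; the `group_alerts` helper, the alert dictionaries, and the label values are all made up for this example.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Bucket alerts by the values of the group_by labels (hypothetical helper)."""
    groups = defaultdict(list)
    for alert in alerts:
        # Alerts that share the same values for every group_by label land in one bucket.
        key = tuple(alert.get(label, "") for label in group_by)
        groups[key].append(alert)
    return dict(groups)


# Illustrative alerts; the names and hosts are assumptions.
alerts = [
    {"alertname": "HighMemory", "cluster": "c1", "instance": "host-a"},
    {"alertname": "HighMemory", "cluster": "c1", "instance": "host-b"},
    {"alertname": "DiskFull", "cluster": "c1", "instance": "host-a"},
]

groups = group_alerts(alerts, ["alertname", "cluster"])
for key, members in groups.items():
    print(key, "->", len(members), "alert(s) in one notification")
```

Here the two HighMemory alerts end up in one bucket and would go out as a single notification, while DiskFull gets its own.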

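#### 2. Inhibition

Once an alert has been sent, inhibition suppresses the follow-on alerts that it causes.

For example: if a host-level monitoring alert has fired, the alerts about services being down on that host should be suppressed.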

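#### 3. Silences

Silences provide a simple mechanism for muting alerts based on labels. Alerts are checked against the silence's label matchers, and if they match, no notification is sent.

Silences are configured in the web UI, whereas grouping and inhibition are configured in the configuration file.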
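The label matching behind a silence can be sketched as follows. This is a simplification under stated assumptions: it only handles equality matchers and ignores the start and end time that a real silence carries. The `is_silenced` helper and the sample labels are hypothetical.

```python
def is_silenced(labels, silences):
    """Return True if at least one silence has all of its matchers satisfied."""
    for matchers in silences:
        # A silence applies only when every one of its matchers equals the alert's label.
        if matchers and all(labels.get(name) == value for name, value in matchers.items()):
            return True
    return False


# One illustrative silence: mute warning-level alerts from host-a.
silences = [{"instance": "host-a", "severity": "warning"}]

print(is_silenced({"alertname": "HighMemory", "instance": "host-a", "severity": "warning"}, silences))
print(is_silenced({"alertname": "HighMemory", "instance": "host-b", "severity": "warning"}, silences))
```

The first alert matches both matchers and is muted; the second differs in `instance`, so its notification would still be sent.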

### II. Route matching rules

#### 1. Route example

```
route:
  group_by: ['alertname', 'cluster', 'service']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 1m
  receiver: 'mail'  # default route: alerts that match none of the labels below go to mail
  routes:
  - receiver: 'test-1'  # regex match on a label: {test1="mysql1"} or {test1="db"} goes to test-1
    group_wait: 10s
    match_re:
      test1: mysql1|db
  - receiver: 'test-2'  # alerts labelled {severity="warning"} go to test-2
    match:
      severity: warning
```
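To make the matching order in this example concrete, here is a small sketch that walks the same route tree. It assumes the default behaviour in which the first matching child route wins (no `continue: true`) and in which `match_re` patterns must match the whole label value. The `ROUTE` dictionary mirrors the configuration above; `route_matches` and `pick_receiver` are hypothetical helpers, not part of AlertManager.

```python
import re

# The example route tree, reduced to the fields that affect matching.
ROUTE = {
    "receiver": "mail",
    "routes": [
        {"receiver": "test-1", "match_re": {"test1": "mysql1|db"}},
        {"receiver": "test-2", "match": {"severity": "warning"}},
    ],
}


def route_matches(route, labels):
    """Check a route's equality and regex matchers against an alert's labels."""
    exact_ok = all(labels.get(k) == v for k, v in route.get("match", {}).items())
    regex_ok = all(
        re.fullmatch(pattern, labels.get(k, "")) is not None
        for k, pattern in route.get("match_re", {}).items()
    )
    return exact_ok and regex_ok


def pick_receiver(route, labels):
    """Descend into the first matching child; fall back to this node's receiver."""
    for child in route.get("routes", []):
        if route_matches(child, labels):
            return pick_receiver(child, labels)
    return route["receiver"]


print(pick_receiver(ROUTE, {"test1": "db"}))
print(pick_receiver(ROUTE, {"severity": "warning"}))
print(pick_receiver(ROUTE, {"alertname": "other"}))
```

An alert carrying both `test1="mysql1"` and `severity="warning"` would go to test-1 in this sketch, because that route is listed first.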

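With only a few matchers, routing rules are easy to write by hand; with many, they quickly become confusing. You can check the final result by testing how alerts match in the official routing tree editor.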

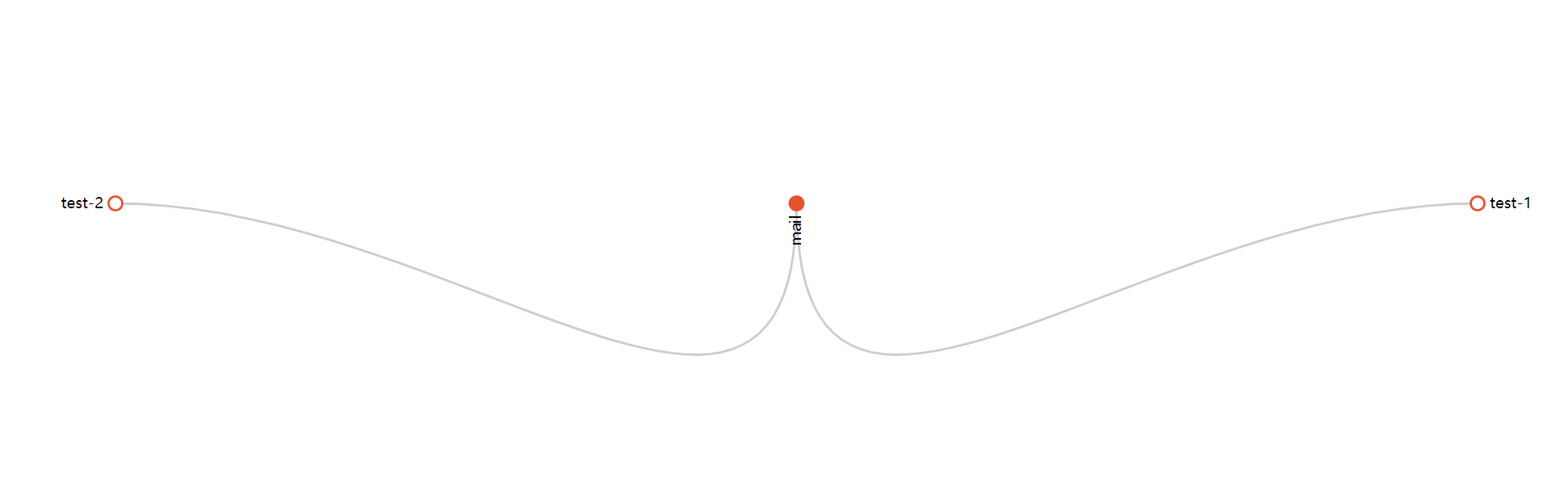

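- Paste the contents of alertmanager.yml into the editor and click Draw Routing Tree to draw the routing tree. To see how an alert is routed by its labels, enter the labels carried by the alert you want to test, e.g. {test1="db"}, and click Match Label Set to see which receiver it is sent to.

- Routing tree editor: https://www.prometheus.io/webtools/alerting/routing-tree-editor/

- The routing tree for the example above looks like this: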

#### 2. Multi-branch routes

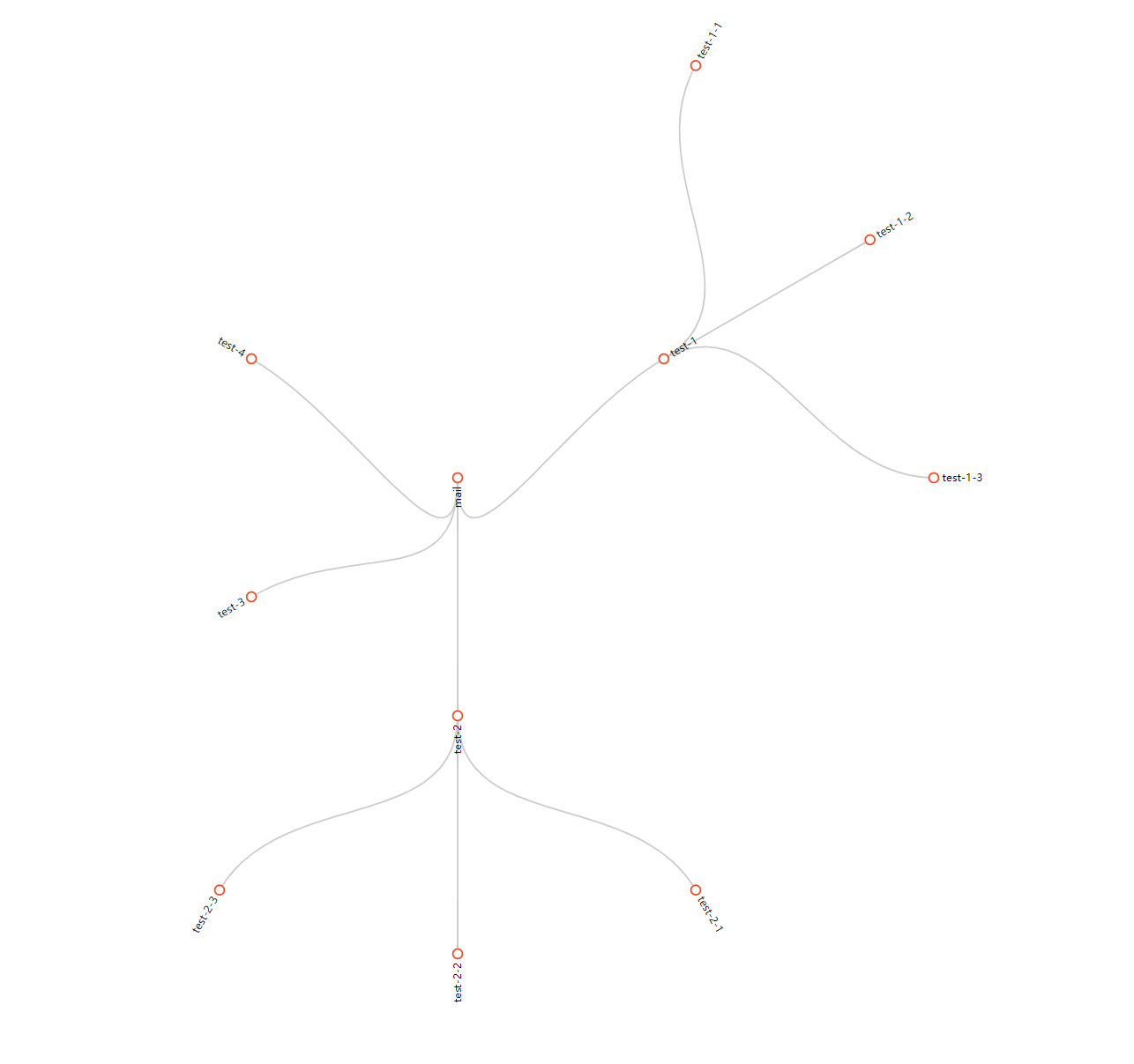

- Routes can also be made more elaborate by subdividing them further.

The routes above are essentially single-branch, so they can still be worked out without the official routing tree editor. With routing at a larger scale that becomes much harder, for example:

```
route:
  group_by: ['alertname', 'cluster', 'service']
  group_wait: 10s
  group_interval: 10s
  repeat_interval: 1m
  receiver: 'mail'
  routes:
  - receiver: 'test-1'
    group_wait: 10s
    match_re:
      test1: mysql1
    routes:
    - receiver: 'test-1-1'
      match:
        status: prod
    - receiver: 'test-1-2'
      match:
        status: dev
    - receiver: 'test-1-3'
      match:
        status: test
  - receiver: 'test-2'
    match:
      severity: warning
    routes:
    - receiver: 'test-2-1'
      match:
        status: prod
    - receiver: 'test-2-2'
      match:
        status: dev
    - receiver: 'test-2-3'
      match:
        status: test
  - receiver: 'test-3'
    group_wait: 10s
    match_re:
      test3: mysql3
  - receiver: 'test-4'
    group_wait: 10s
    match_re:
      test4: mysql4
```

- Routing tree

### III. Receivers

#### 1. Receiver configuration example

```
templates:
- '/data/server/alertmanage/email.tmpl'

receivers:
- name: 'mail'
  email_configs:
  - to: 'all@test.com'
    send_resolved: true
    html: '{{ template "email.html" . }}'
- name: 'test-1'
  email_configs:
  - to: 'ops@test.com'
    send_resolved: true
    html: '{{ template "email.html" . }}'
- name: 'test-2'
  email_configs:
  - to: 'web@test.com,web1@test.com'  # separate multiple recipients with commas
    send_resolved: true  # also send a notification when the alert is resolved
    html: '{{ template "email.html" . }}'  # template used for the message body
```

Whichever receiver the routing rules above match is the one used to send the notification.

#### 2. Template configuration example

```
{{ define "email.html" }}
{{ range .Alerts }}
=========start==========<br>
Alert source: prometheus_alert <br>
Severity level: {{ .Labels.severity }} <br>
Alert type: {{ .Labels.alertname }} <br>
Affected host: {{ .Labels.instance }} <br>
Summary: {{ .Annotations.summary }} <br>
Details: {{ .Annotations.description }} <br>
Triggered at: {{ .StartsAt.Format "2006-01-02 15:04:05" }} <br>
=========end==========<br>
{{ end }}
{{ end }}
```

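Two things to note:

1. In {{ .StartsAt.Format "2006-01-02 15:04:05" }}, the layout string must be written with exactly the values 2006-01-02 15:04:05; with any other date or time the output will be wrong. This is Go's reference time: the time package treats these specific values (year 2006, month 01, day 02, hour 15, minute 04, second 05) as format placeholders. Prometheus is mostly written in Go and the templates are Go templates, so every time format conversion has to be expressed with this reference time.

2. The variable values are taken from the alerting rule. Take the following Prometheus rule: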

```
- alert: "Memory usage too high a"
  expr: node_memory_MemAvailable_bytes / node_memory_MemTotal_bytes < 0.9
  for: 1m
  labels:
    severity: warning
    test1: mysql1
  annotations:
    summary: High memory usage
    description: "Memory usage is high"
```

Here {{ .Labels.severity }} evaluates to warning, and {{ .Annotations.summary }} evaluates to High memory usage.
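The flow from rule to rendered notification can be imitated in a few lines. The sketch below is only an analogy: plain Python string formatting stands in for the Go template engine, and `strftime` with `"%Y-%m-%d %H:%M:%S"` plays the role of the Go layout `"2006-01-02 15:04:05"`. The `instance` value and the start time are assumptions, since Prometheus attaches them at runtime, and `render` is a hypothetical helper.

```python
from datetime import datetime

# Labels and annotations taken from the rule above; instance and starts_at are assumed.
alert = {
    "labels": {"severity": "warning", "test1": "mysql1", "instance": "10.0.0.1:9100"},
    "annotations": {"summary": "High memory usage"},
    "starts_at": datetime(2024, 5, 1, 8, 30, 0),
}


def render(alert):
    """Imitate a few lines of the email.html template with plain string formatting."""
    lines = [
        "Severity level: {}".format(alert["labels"]["severity"]),
        "Affected host: {}".format(alert["labels"]["instance"]),
        "Summary: {}".format(alert["annotations"]["summary"]),
        # Same output shape as Go's .Format "2006-01-02 15:04:05".
        "Triggered at: {}".format(alert["starts_at"].strftime("%Y-%m-%d %H:%M:%S")),
    ]
    return "<br>\n".join(lines)


print(render(alert))
```

The point is only that every placeholder in the template resolves to a label or annotation defined on the rule, plus metadata such as the start time.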

### IV. Inhibition

#### 1. Inhibit configuration example

```
# node-exporter: 1 > 2
- source_match:
    job: 'node-exporter'
    level: '1'
  target_match:
    job: 'node-exporter'
    level: '2'
  equal: ['metric', 'instance']
```

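In this example, within the same job, level 1 alerts inhibit level 2 alerts. The equal field adds the precondition that the metric and instance labels have the same values on both alerts.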
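The decision this rule encodes can be sketched as below. It is a simplified model, assuming equality matchers only and treating a missing label as an empty value; `RULE` mirrors the configuration above, while `label_match`, `is_inhibited`, and the sample alerts are hypothetical.

```python
RULE = {
    "source_match": {"job": "node-exporter", "level": "1"},
    "target_match": {"job": "node-exporter", "level": "2"},
    "equal": ["metric", "instance"],
}


def label_match(labels, wanted):
    """True if every wanted label is present with exactly that value."""
    return all(labels.get(name) == value for name, value in wanted.items())


def is_inhibited(target, firing, rule=RULE):
    """A target is muted when a firing source alert agrees on all 'equal' labels."""
    if not label_match(target, rule["target_match"]):
        return False
    return any(
        label_match(source, rule["source_match"])
        and all(source.get(name, "") == target.get(name, "") for name in rule["equal"])
        for source in firing
    )


# Illustrative alerts: one level 1 source, two level 2 candidates.
source = {"job": "node-exporter", "level": "1", "metric": "cpu", "instance": "host-a"}
same_host = {"job": "node-exporter", "level": "2", "metric": "cpu", "instance": "host-a"}
other_host = {"job": "node-exporter", "level": "2", "metric": "cpu", "instance": "host-b"}

print(is_inhibited(same_host, [source]))
print(is_inhibited(other_host, [source]))
```

The level 2 alert on host-a is suppressed because a level 1 alert with the same metric and instance is firing; the one on host-b is not, because its instance differs.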