    cd /home/hadoop/app/hadoop/etc/hadoop
    vi core-site.xml

Edit core-site.xml and insert the following properties inside the <configuration> element:

    <!-- Set the static web user -->
    <property>
        <name>hadoop.http.staticuser.user</name>
        <value>root</value>
    </property>
    <!-- Disable permission checking -->
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
    </property>

After this change, files can be uploaded through the web UI.

    vi hdfs-site.xml

Insert the following property (dfs.permissions is the older name of dfs.permissions.enabled):

    <property>
        <name>dfs.permissions</name>
        <value>false</value>
    </property>

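With the preparation done, start the Hadoop services.

First finish the required part:

实验三必完成环节.pdf (Lab 3, required part)

Send the screenshots to the teacher.

Next comes the optional part:

实验三选做环节.docx (Lab 3, optional part)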

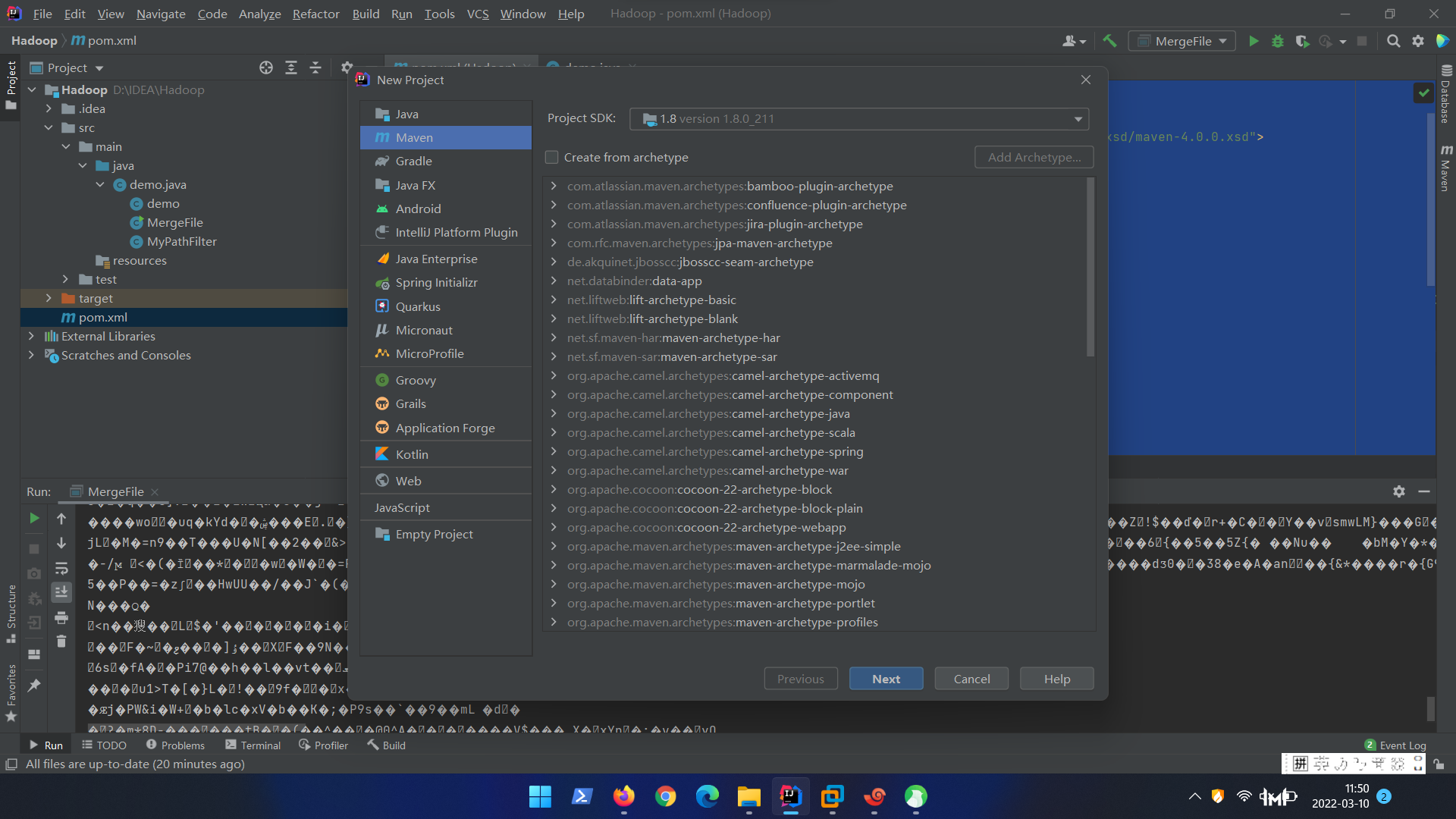

Configure IDEA following the second method.

Create a Maven project.

Just click Next.

The pom.xml file:

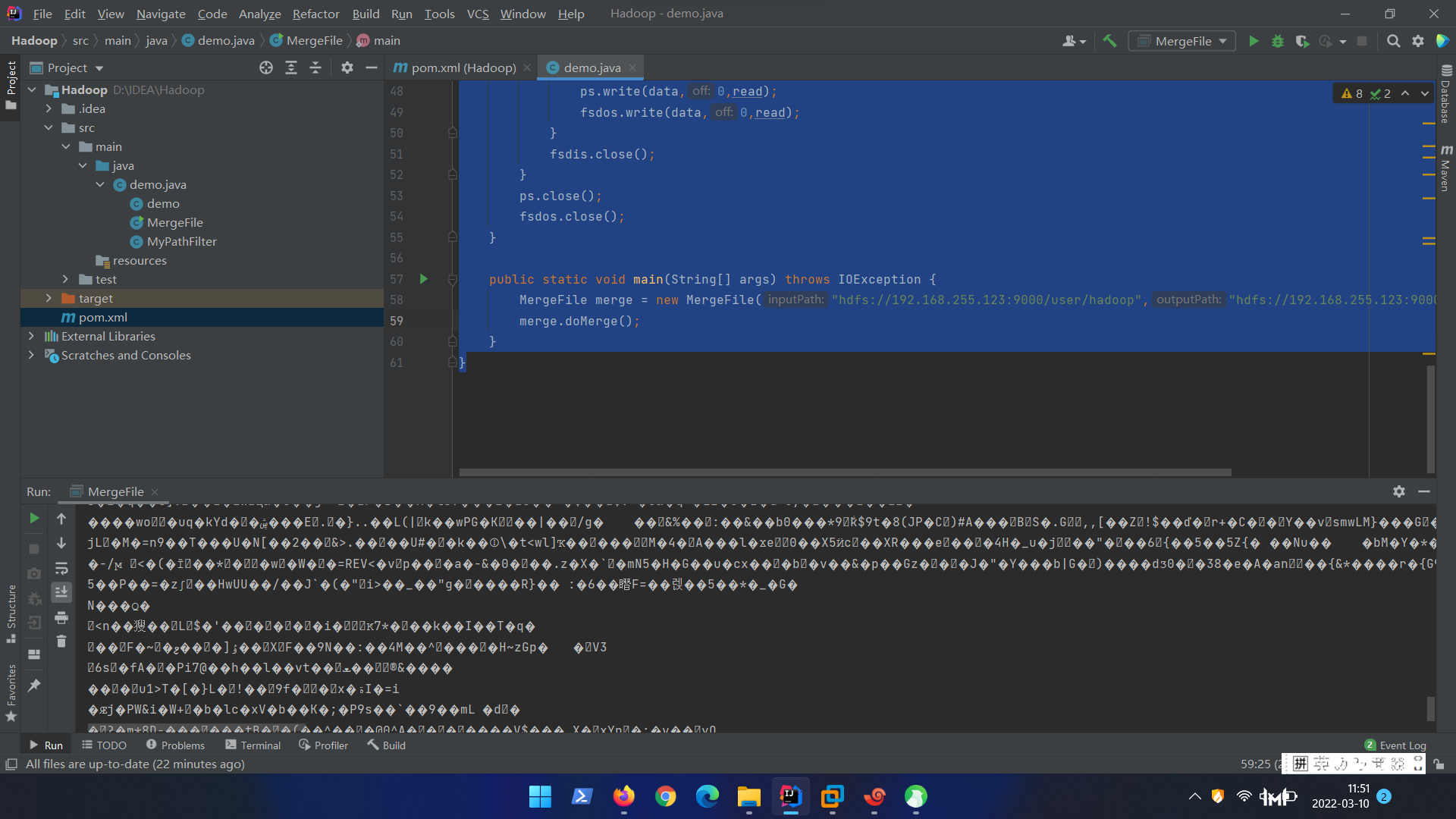

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.*;

    import java.io.IOException;
    import java.io.PrintStream;
    import java.net.URI;

    public class demo {
    }

    // Accepts every path that does NOT match the given regular expression.
    class MyPathFilter implements PathFilter {
        String reg = null;

        MyPathFilter(String reg) {
            this.reg = reg;
        }

        @Override
        public boolean accept(Path path) {
            return !path.toString().matches(reg);
        }
    }

    // Merges the files under inputPath into the single file outputPath.
    class MergeFile {
        Path inputPath = null;
        Path outputPath = null;

        public MergeFile(String inputPath, String outputPath) {
            this.inputPath = new Path(inputPath);
            this.outputPath = new Path(outputPath);
        }

        public void doMerge() throws IOException {
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://192.168.255.123:9000");
            conf.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
            FileSystem fsSource = FileSystem.get(URI.create(inputPath.toString()), conf);
            FileSystem fsDst = FileSystem.get(URI.create(outputPath.toString()), conf);
            // List the input directory, leaving out files whose names end in .abc
            FileStatus[] sourceStatus = fsSource.listStatus(inputPath, new MyPathFilter(".*\\.abc"));
            FSDataOutputStream fsdos = fsDst.create(outputPath);
            PrintStream ps = new PrintStream(System.out);
            for (FileStatus sta : sourceStatus) {
                // Subdirectories cannot be opened as files, so skip them
                if (sta.isDirectory()) {
                    continue;
                }
                // Print path, file size and permission, followed by the content
                System.out.println("路径:" + sta.getPath() + "文件大小:" + sta.getLen()
                        + "权限:" + sta.getPermission() + "内容:");
                FSDataInputStream fsdis = fsSource.open(sta.getPath());
                byte[] data = new byte[1024];
                int read = -1;
                // Copy each chunk both to the console and to the merged file
                while ((read = fsdis.read(data)) > 0) {
                    ps.write(data, 0, read);
                    fsdos.write(data, 0, read);
                }
                fsdis.close();
            }
            ps.close();
            fsdos.close();
        }

        public static void main(String[] args) throws IOException {
            MergeFile merge = new MergeFile(
                    "hdfs://192.168.255.123:9000/user/hadoop",
                    "hdfs://192.168.255.123:9000/user/hadoop/merge.txt");
            merge.doMerge();
        }
    }
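To try the filter-and-merge logic without a running cluster, here is a rough local-filesystem sketch using only the JDK. It is not part of the lab code: the class name `LocalMergeSketch`, its `accept` and `merge` methods, and the temp-directory file names are all made up for illustration. It assumes the same rule as `MyPathFilter` (a path is kept only if it does not match the regex) and the same 1024-byte copy loop as `doMerge`.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical local analogue of MergeFile; uses java.nio instead of the Hadoop API.
class LocalMergeSketch {

    // Same rule as MyPathFilter.accept: keep a path only if it does NOT match the regex.
    static boolean accept(String path, String reg) {
        return !path.matches(reg);
    }

    // Concatenates every accepted regular file in inputDir into output, in sorted order,
    // and returns the names of the files that were merged.
    static List<String> merge(Path inputDir, Path output, String reg) throws IOException {
        List<Path> sources = new ArrayList<>();
        try (DirectoryStream<Path> entries = Files.newDirectoryStream(inputDir)) {
            for (Path p : entries) {
                // Skip directories, the output file itself, and filtered-out paths.
                if (Files.isRegularFile(p) && !p.equals(output) && accept(p.toString(), reg)) {
                    sources.add(p);
                }
            }
        }
        Collections.sort(sources);

        List<String> merged = new ArrayList<>();
        try (OutputStream out = Files.newOutputStream(output)) {
            byte[] buf = new byte[1024];
            for (Path src : sources) {
                try (InputStream in = Files.newInputStream(src)) {
                    int read;
                    // Copy the file chunk by chunk, as doMerge does with the HDFS streams.
                    while ((read = in.read(buf)) > 0) {
                        out.write(buf, 0, read);
                    }
                }
                merged.add(src.getFileName().toString());
            }
        }
        return merged;
    }

    public static void main(String[] args) throws IOException {
        // Build a throwaway directory with two text files and one .abc file.
        Path dir = Files.createTempDirectory("merge-sketch");
        Files.write(dir.resolve("file1.txt"), "one\n".getBytes(StandardCharsets.UTF_8));
        Files.write(dir.resolve("file2.txt"), "two\n".getBytes(StandardCharsets.UTF_8));
        Files.write(dir.resolve("skip.abc"), "ignored\n".getBytes(StandardCharsets.UTF_8));

        Path out = dir.resolve("merge.txt");
        System.out.println("merged: " + merge(dir, out, ".*\\.abc"));
        System.out.print(new String(Files.readAllBytes(out), StandardCharsets.UTF_8));
    }
}
```

Running `main` should list file1.txt and file2.txt as merged and leave skip.abc out of merge.txt. Unlike the HDFS version, this sketch also skips the output file itself, which matters when merge.txt lives in the same directory as the inputs and the program is run a second time.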

Then just run it.

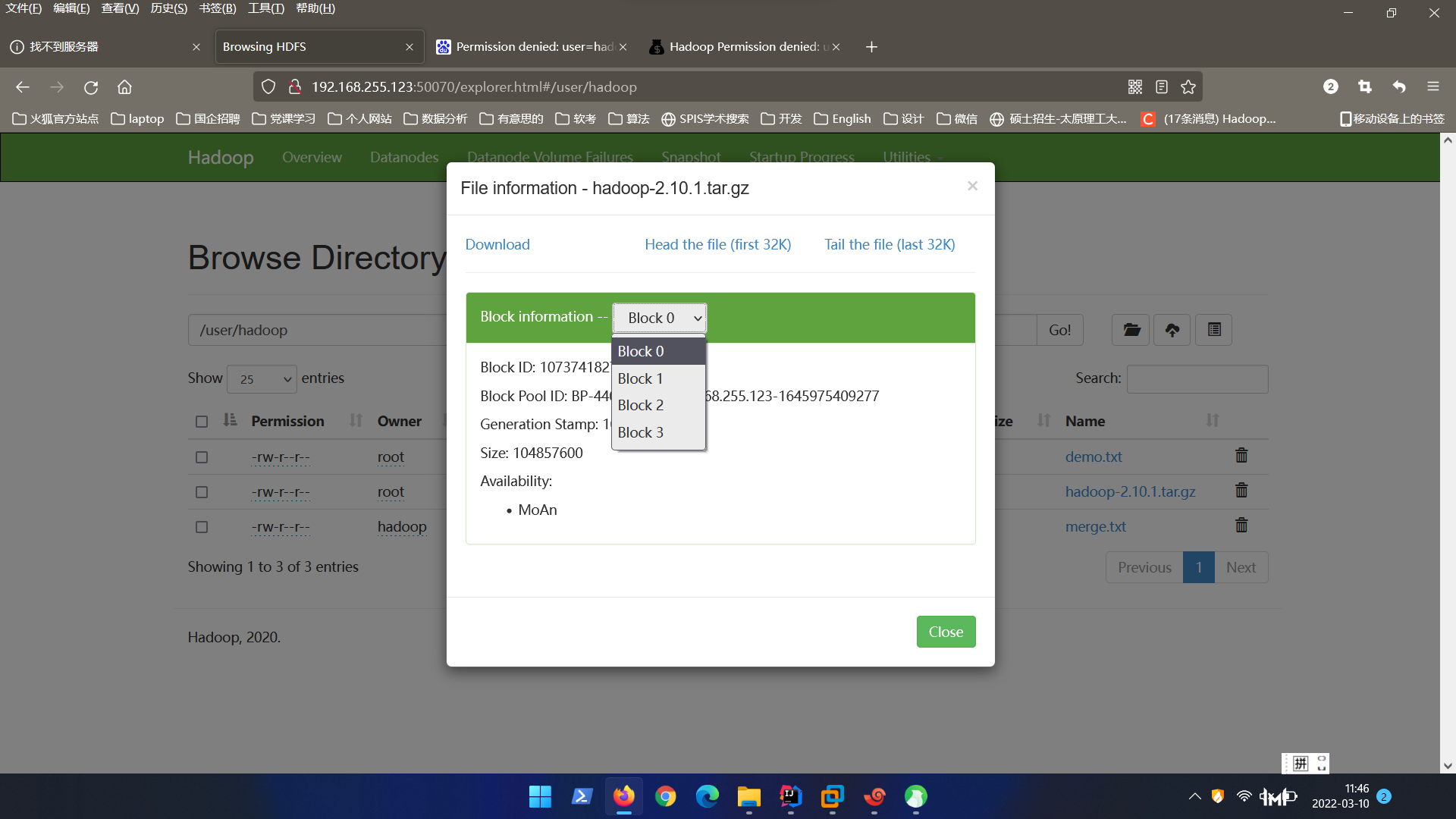

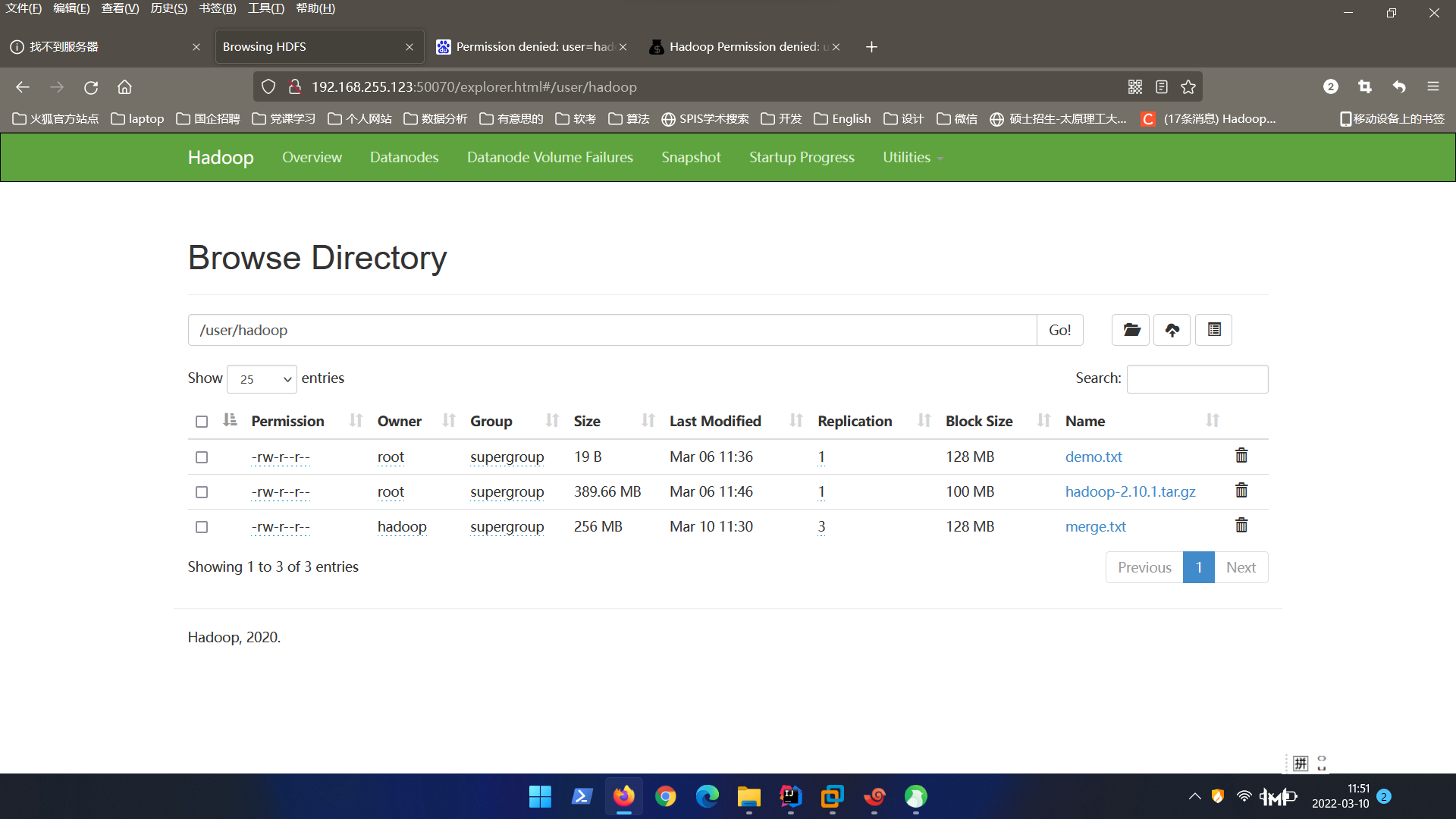

The merge.txt file now shows up.