在 linux 系统上,一切都可以看作是一个文件。每个进程都有很多文件描述符分别指向 文件、sockets、驱动设备、== 。Linux提供select/poll,进程通过将一个或多个fd传递给 select 或 poll 系统调用,阻塞在 select;这样 select/poll 可以帮我们侦测许多 fd 是否就绪。select 有个比较大的缺陷就是一个进程所打开的 FD 是有 一定限制的,由 FD_SETSIZE 设置,默认值是 2048。 select/poll 是顺序扫描 fd 是否就绪,而且支持 的 fd 数量有限。epoll 是基于事件驱 动方式,而不是顺序扫描,当有 fd 就绪时,立即回调函数 rollback。

目前支持 I/O 复用的系统调用有 select、poll、epoll,在 Linux 网络编程过程中,很长一段时间都使用 select 做事件触发,然而 select 逐渐 暴露出了一些缺陷,使得 linux 不得不在新的内核中寻找出替代方案,那就是 epoll。其实,epoll 与 select 原理类似,只不过,epoll 作出了一些重大改进, 具体如下:

- 支持一个进程打开的socket描述符(FD)不受限制(仅受限于操作系统的最

大文件句柄数):select 有个比较大的缺陷就是一个进程所打开的 FD 是有

一定限制的,由 FD_SETSIZE 设置,默认值是 2048。对于那些需要支持的上

万连接数目的大型服务器来说显然太少了。这时候你可以选择修改这个宏然

后重新编译内核,不过资料也同时指出这样会带来网络效率的下降,二是可

以选择多进程的解决方案(传统的 Apache 方案),不过虽然 linux 上面创建

进程的代价比较小,但仍旧是不可忽视的,加上进程间数据同步远比不上线

程间同步的高效,所以也不是一种完美的方案。不过 epoll 则没有这个限制,

它所支持的 FD 上限是最大可以打开文件的数目,这个数字一般远大于 2048,

举个例子,在 1GB 内存的机器上大约是 10 万左右,具体数目可以 cat /proc/sys/fs/file-max 察看,一般来说这个数目和系统内存关系很大;

2.IO效率可能随FD数目增加而线性下降:传统的select/poll另一个致命弱 点就是当你拥有一个很大的 socket 集合,由于网络延时,任一时间只有部 分的 socket 是”活跃”的,但是 select/poll 每次调用都会线性扫描全部的 集合,导致效率呈现线性下降。但是 epoll 不存在这个问题,它只会对”活 跃”的 socket 进行操作—-这是因为在内核实现中 epoll 是根据每个 fd 上面 的 callback 函数实现的。那么,只有”活跃”的 socket 才会主动的去调用 callback 函数,其他 idle 状态 socket 则不会,在这点上,epoll 实现了一 个”伪”AIO,因为这时候推动力在 os 内核。在一些 benchmark 中,如果所有 的 socket 基本上都是活跃的—-比如一个高速 LAN 环境,epoll 并不比 select/poll 效率高太多,相反,如果过多使用 epoll_ctl,效率相比还有稍 微的下降。但是一旦使用 idle connections 模拟 WAN 环境,epoll 的效率就 远在 select/poll 之上了;

使用 mmap 加速内核与用户空间的消息传递:无论是select,poll还是epoll 都需要内核把 FD 消息通知给用户空间,如何避免不必要的内存拷贝就很重 要,在这点上,epoll 是通过内核于用户空间 mmap 同一块内存实现的;

epoll的API更加简单:包括创建一个epoll描述符,添加监听事件,阻塞等 待所监听的事件发生,关闭 epoll 描述符。

<br />****

**

**

One basic concept of Linux (actually Unix) is the rule that everything in Unix/Linux is a file. Each process has a table of file descriptors that point to files, sockets, devices and other operating system objects.

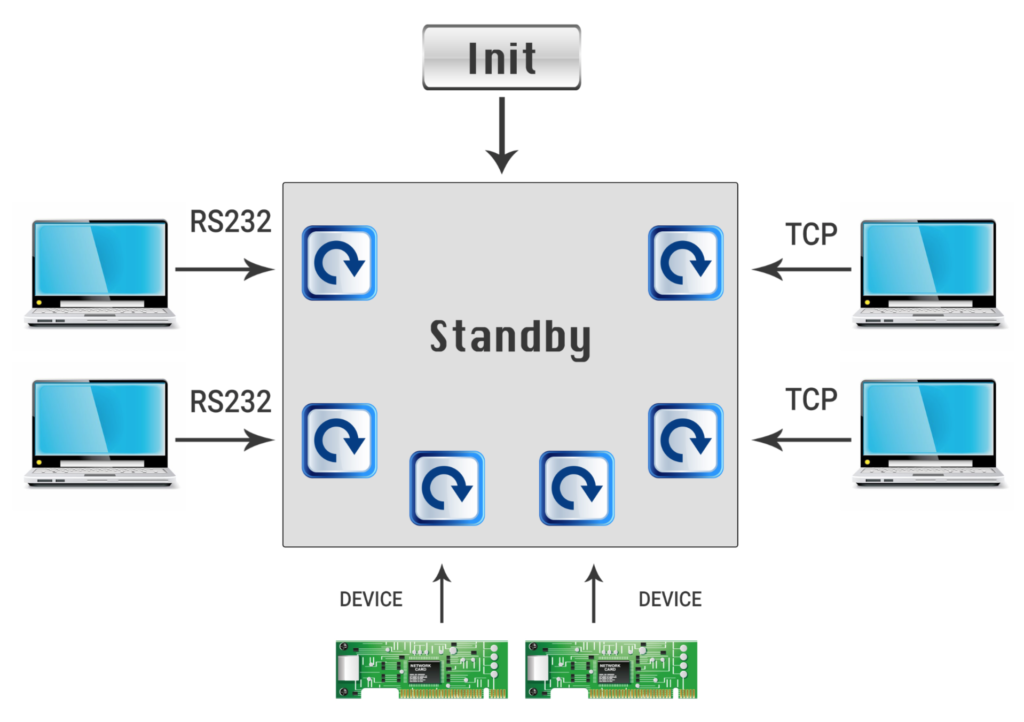

Typical system that works with many IO sources has an initializaion phase and then enter some kind of standby mode – wait for any client to send request and response it。

Simple solution is to create a thread (or process) for each client , block on read until a request is sent and write a response. This is working ok with a small amount of clients but if we want to scale it to hundred of clients, creating a thread for each client is a bad idea。

IO Multiplexing

The solution is to use a kernel mechanism for polling over a set of file descriptors. There are 3 options you can use in Linux:

- select(2)

- poll(2)

- epoll

All the above methods serve the same idea, create a set of file descriptors , tell the kernel what would you like to do with each file descriptor (read, write, ..) and use one thread to block on one function call until at least one file descriptor requested operation available

Select System Call

The select( ) system call provides a mechanism for implementing synchronous multiplexing I/O

| 1 | int select(int nfds, fd_set readfds, fd_set writefds, fd_set exceptfds, struct timeval timeout); |

|---|---|

A call to select( ) will block until the given file descriptors are ready to perform I/O, or until an optionally specified timeout has elapsed

The watched file descriptors are broken into three sets

- File descriptors listed in the readfds set are watched to see if data is available for reading.

- File descriptors listed in the writefds set are watched to see if a write operation will complete without blocking.

- File descriptors in the exceptfds set are watched to see if an exception has occurred, or if out-of-band data is available (these states apply only to sockets).

A given set may be NULL, in which case select( ) does not watch for that event.

On successful return, each set is modified such that it contains only the file descriptors that are ready for I/O of the type delineated by that set

Example:

#include <stdio.h>

#include <sys/types.h>

#include <sys/socket.h>

#include <netinet/in.h>

#include <wait.h>

#include <signal.h>

#include <errno.h>

#include <sys/select.h>

#include <sys/time.h>

#include <unistd.h>

#define MAXBUF 256

void child_process(void)

{

sleep(2);

char msg[MAXBUF];

struct sockaddr_in addr = {0};

int n, sockfd,num=1;

srandom(getpid());

/* Create socket and connect to server */

sockfd = socket(AF_INET, SOCK_STREAM, 0);

addr.sin_family = AF_INET;

addr.sin_port = htons(2000);

addr.sin_addr.s_addr = inet_addr("127.0.0.1");

connect(sockfd, (struct sockaddr*)&addr, sizeof(addr));

printf("child {%d} connected \n", getpid());

while(1){

int sl = (random() % 10 ) + 1;

num++;

sleep(sl);

sprintf (msg, "Test message %d from client %d", num, getpid());

n = write(sockfd, msg, strlen(msg)); /* Send message */

}

}

int main()

{

char buffer[MAXBUF];

int fds[5];

struct sockaddr_in addr;

struct sockaddr_in client;

int addrlen, n,i,max=0;;

int sockfd, commfd;

fd_set rset;

for(i=0;i<5;i++)

{

if(fork() == 0)

{

child_process();

exit(0);

}

}

sockfd = socket(AF_INET, SOCK_STREAM, 0);

memset(&addr, 0, sizeof (addr));

addr.sin_family = AF_INET;

addr.sin_port = htons(2000);

addr.sin_addr.s_addr = INADDR_ANY;

bind(sockfd,(struct sockaddr*)&addr ,sizeof(addr));

listen (sockfd, 5);

for (i=0;i<5;i++)

{

memset(&client, 0, sizeof (client));

addrlen = sizeof(client);

fds[i] = accept(sockfd,(struct sockaddr*)&client, &addrlen);

if(fds[i] > max)

max = fds[i];

}

while(1){

FD_ZERO(&rset);

for (i = 0; i< 5; i++ ) {

FD_SET(fds[i],&rset);

}

puts("round again");

select(max+1, &rset, NULL, NULL, NULL);

for(i=0;i<5;i++) {

if (FD_ISSET(fds[i], &rset)){

memset(buffer,0,MAXBUF);

read(fds[i], buffer, MAXBUF);

puts(buffer);

}

}

}

return 0;

}

We start with creating 5 child processes , each process connect to the server and send messages to the server. The server process uses accept(2) to create a different file descriptor for each client. The first parameter in select(2) should be the highest-numbered file descriptor in any of the three sets, plus 1 so we check the max fd num

The main infinite loop creates a set of all file descriptors, call select and on return check which file descriptor is ready for read. For simplicity I didn’t add error checking

On return , select changes the set to contains only the file descriptors that is ready so we need to build the set again every iteration.

The reason we need to tell select what is the highest-numbered file descriptor is the inner implementation of fd_set. Each fd is declared by a bit so fd_set is an array of 32 integers (32 32bit = 1024 bits). The function check any bit to see if its set until it reach the maximum. This means that if we have 5 file descriptors but the highest number is 900 , the function will check any bit from 0 to 900 to find the file descriptors to watch. There is a posix alternative to select – pselect which add a signal mask while waiting

*Select – summary:

- We need to build each set before each call

- The function check any bit up to the higher number – O(n) 需要轮询所有的文件描述符,导致 算法时间复杂度高,达到 O(n)

- We need to iterate over the file descriptors to check if it exists on the set returned from select

- The main advantage of select is the fact that it is very portable – every unix like OS has it

Poll System call

Unlike select(), with its inefficient three bitmask-based sets of file descriptors, poll( ) employs a single array of nfds pollfd structures. the prototype is simpler:

| 1 | int poll (struct pollfd *fds, unsigned int nfds, int timeout); |

|---|---|

The structure pollfd has a different fields for the events and the returning events so we don’t need to build it each time:

| 1 2 3 4 5 |

struct pollfd { int fd; short events; short revents; }; |

|---|---|

For each file descriptor build an object of type pollfd and fill the required events. after poll returns check the revents field

To change the above example to use poll:

for (i=0;i<5;i++)

{

memset(&client, 0, sizeof (client));

addrlen = sizeof(client);

pollfds[i].fd = accept(sockfd,(struct sockaddr*)&client, &addrlen);

pollfds[i].events = POLLIN;

}

sleep(1);

while(1){

puts("round again");

poll(pollfds, 5, 50000);

for(i=0;i<5;i++) {

if (pollfds[i].revents & POLLIN){

pollfds[i].revents = 0;

memset(buffer,0,MAXBUF);

read(pollfds[i].fd, buffer, MAXBUF);

puts(buffer);

}

}

}

Like we did with select , we need to check each pollfd object to see if its file descriptor is ready but we don’t need to build the set each iteration

Poll vs Select

- poll( ) does not require that the user calculate the value of the highest- numbered file descriptor +1

- poll( ) is more efficient for large-valued file descriptors. Imagine watching a single file descriptor with the value 900 via select()—the kernel would have to check each bit of each passed-in set, up to the 900th bit.

- select( )’s file descriptor sets are statically sized.

- With select( ), the file descriptor sets are reconstructed on return, so each subsequent call must reinitialize them. The poll( ) system call separates the input (events field) from the output (revents field), allowing the array to be reused without change.

- The timeout parameter to select( ) is undefined on return. Portable code needs to reinitialize it. This is not an issue with pselect( )

- select( ) is more portable, as some Unix systems do not support poll( )

Epoll* System Calls

While working with select and poll we manage everything on user space and we send the sets on each call to wait. To add another socket we need to add it to the set and call select/poll again.

Epoll* system calls help us to create and manage the context in the kernel. We divide the task to 3 steps:

- create a context in the kernel using epoll_create

- add and remove file descriptors to/from the context using epoll_ctl

- wait for events in the context using epoll_wait

Lets change the above example to use epoll:

struct epoll_event events[5];

int epfd = epoll_create(10);

...

...

for (i=0;i<5;i++)

{

static struct epoll_event ev;

memset(&client, 0, sizeof (client));

addrlen = sizeof(client);

ev.data.fd = accept(sockfd,(struct sockaddr*)&client, &addrlen);

ev.events = EPOLLIN;

epoll_ctl(epfd, EPOLL_CTL_ADD, ev.data.fd, &ev);

}

while(1){

puts("round again");

nfds = epoll_wait(epfd, events, 5, 10000);

for(i=0;i<nfds;i++) {

memset(buffer,0,MAXBUF);

read(events[i].data.fd, buffer, MAXBUF);

puts(buffer);

}

}

We first create a context (the parameter is ignored but has to be positive). When a client connect we create an epoll_event object and add it to the context and on the infinite loop we are only waiting on the context.

Epoll vs Select/Poll

- We can add and remove file descriptor while waiting

- epoll_wait returns only the objects with ready file descriptors

- epoll has better performance – O(1) instead of O(n) Epoll 最大的优点就在于它只管你“活跃”的连接 ,而跟连接总数无关,因此在实际的网络环境中, Epoll 的效率就会远远高于 select 和 poll 。算法时间复杂度只有 O(1)

- epoll can behave as level triggered or edge triggered

- epoll is Linux specific so non portable