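Chapter 1. Introduction to the DWM Layer

The DWM layer (Data Warehouse Middle), usually called the data middle layer, builds on the DWD layer: it lightly aggregates the data and produces wide tables.

The DWM layer mainly serves the DWS layer. Some requirements need a fair amount of computation between DWD and DWS, and the results of that computation are likely to be reused by several DWS subjects, so part of the DWD data is turned into a DWM layer.

Main business topics covered

- UV (visitor) calculation

- Bounce detail calculation

- Order wide table

- Payment wide table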

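Chapter 2. DWM Layer: UV Calculation

1. Requirement analysis

UV stands for Unique Visitor. In real-time computing it is also called DAU (Daily Active User), and UV in a real-time context usually means the number of visitors on the current day.

Question: how do we identify today's visitors from the user behavior log?

1. Identify the first page the visitor opens, which marks the visitor entering our application.

2. A visitor can enter the application many times in one day, so we have to deduplicate within the day.

3. Decide how the same user gets counted again on the following day.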

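Implementation approach

- Use event-time semantics (to account for out-of-order data)

- Key the stream by mid

- Add a window

- Keep only the first visit record of the day (deduplication)

- Use Flink state; the state only needs to be kept for one day

- When to clear the state: when the current date differs from the date stored in the state

- Write the day's first-visit records to the DWM layer (Kafka)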
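The day-scoped deduplication described in the list above can be sketched without Flink. The class below is a hypothetical illustration, not part of the project: `DailyFirstVisitFilter` and its method names are invented here, a plain `HashMap` stands in for Flink keyed state, and events for one mid are assumed to arrive in timestamp order (in the real job the event-time window and watermark take care of ordering).

```java
import java.time.Instant;
import java.time.LocalDate;
import java.time.ZoneId;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical helper: remembers, per device id (mid), the day of the last counted visit. */
class DailyFirstVisitFilter {
    // Stand-in for Flink keyed state: mid -> calendar day that was already counted
    private final Map<String, LocalDate> lastCountedDay = new HashMap<>();
    private final ZoneId zone;

    DailyFirstVisitFilter(ZoneId zone) {
        this.zone = zone;
    }

    /** Returns true only for the first event of a given mid on a given calendar day. */
    boolean isFirstVisitOfDay(String mid, long tsMillis) {
        LocalDate day = Instant.ofEpochMilli(tsMillis).atZone(zone).toLocalDate();
        LocalDate previous = lastCountedDay.get(mid);
        if (day.equals(previous)) {
            return false; // this mid was already counted today
        }
        // New day (or first visit ever): overwriting the stored date plays the role
        // of "clear the state when the date changes"
        lastCountedDay.put(mid, day);
        return true;
    }

    public static void main(String[] args) {
        DailyFirstVisitFilter filter = new DailyFirstVisitFilter(ZoneId.of("UTC"));
        long day1 = 1_600_000_000_000L;           // some instant on day 1
        long day2 = day1 + 24L * 60 * 60 * 1000;  // the same time one day later
        System.out.println(filter.isFirstVisitOfDay("mid_1", day1));
        System.out.println(filter.isFirstVisitOfDay("mid_1", day1 + 1000));
        System.out.println(filter.isFirstVisitOfDay("mid_1", day2));
    }
}
```

Storing the date (or a timestamp from which the date can be derived) is what makes the day rollover cheap: no timer is needed, because the comparison on the next event detects that the stored value is stale.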

2. Implementation

```java
package com.atguigu.gmall.app.dwm;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.gmall.app.BaseAppV1;
import com.atguigu.gmall.common.Constant;
import com.atguigu.gmall.util.FlinkSinkUtil;
import com.atguigu.gmall.util.IteratorToListUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.windowing.ProcessWindowFunction;
import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;

import java.time.Duration;
import java.util.Collections;
import java.util.Comparator;
import java.util.List;

public class DwmUvApp extends BaseAppV1 {
    public static void main(String[] args) {
        new DwmUvApp().init(3001, 1, "DwmUvApp", "DwmUvApp", Constant.TOPIC_DWD_PAGE);
    }

    @Override
    public void run(StreamExecutionEnvironment env, DataStreamSource<String> stream) {
        stream
            .map(new MapFunction<String, JSONObject>() {
                @Override
                public JSONObject map(String value) throws Exception {
                    return JSON.parseObject(value);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<JSONObject>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((obj, ts) -> obj.getLong("ts"))
            )
            .keyBy(obj -> obj.getJSONObject("common").getString("mid"))
            .window(TumblingEventTimeWindows.of(Time.seconds(5)))
            .process(new ProcessWindowFunction<JSONObject, JSONObject, String, TimeWindow>() {
                // Timestamp of the visit that was last counted for this mid
                private ValueState<Long> firstVisitState;

                @Override
                public void open(Configuration parameters) throws Exception {
                    firstVisitState = getRuntimeContext()
                        .getState(new ValueStateDescriptor<Long>("firstVisitState", Long.class));
                }

                @Override
                public void process(String key,
                                    Context context,
                                    Iterable<JSONObject> elements,
                                    Collector<JSONObject> out) throws Exception {
                    // Detect a change of day and clear the state.
                    // Date of the current window
                    String today = IteratorToListUtil.toDateString(context.window().getStart());
                    // Date held in state (the day of the last counted visit), or null if there is none
                    String yesterday = firstVisitState.value() == null
                        ? null
                        : IteratorToListUtil.toDateString(firstVisitState.value());
                    if (!today.equals(yesterday)) {
                        firstVisitState.clear();
                    }
                    // Empty state means this mid has not been counted today: emit its earliest record
                    if (firstVisitState.value() == null) {
                        List<JSONObject> list = IteratorToListUtil.toList(elements);
                        JSONObject min = Collections.min(list, Comparator.comparing(ele -> ele.getLong("ts")));
                        out.collect(min);
                        firstVisitState.update(min.getLong("ts"));
                    }
                }
            })
            .map(new MapFunction<JSONObject, String>() {
                @Override
                public String map(JSONObject value) throws Exception {
                    return value.toJSONString();
                }
            })
            .addSink(FlinkSinkUtil.getKafkaSink(Constant.TOPIC_DWM_UV));
    }
}
```

3.测试

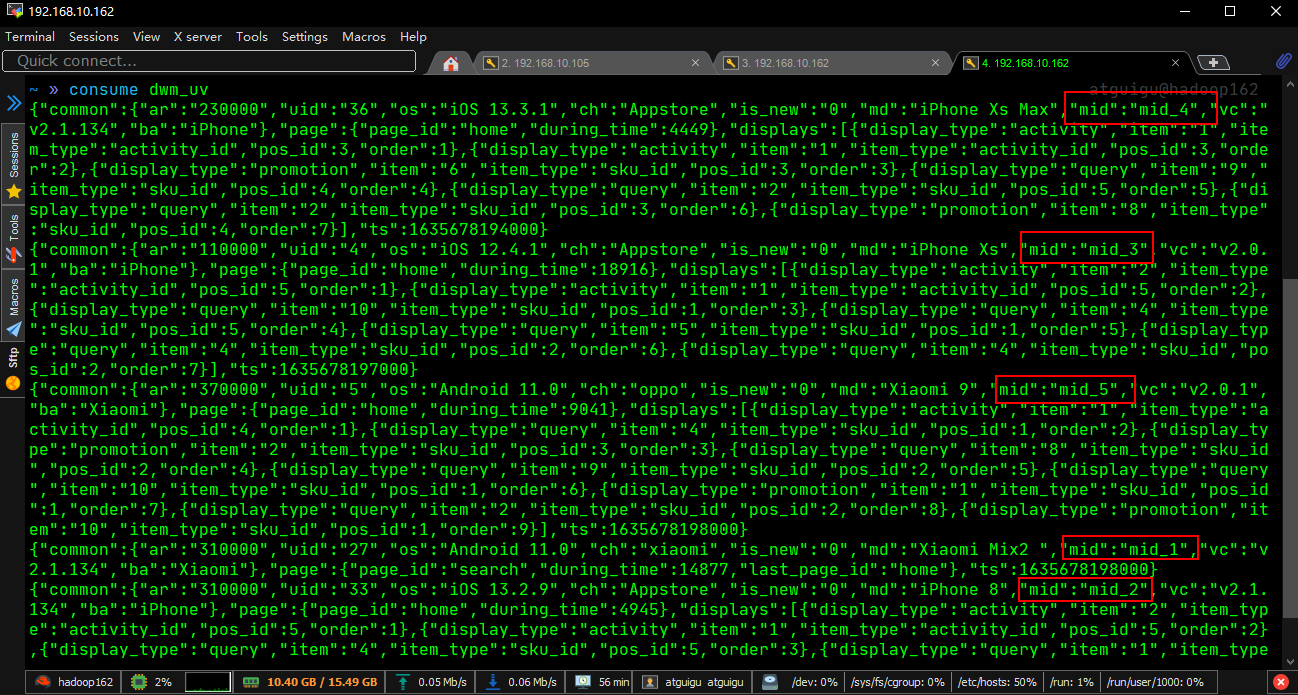

分别启动hadoop,zookeeper,kafka,gmall-logger,flink-yarn,dwdLogAPP,dwmUvApp

然后在终端起一个消费者消费 dwm_uv

可以看出,消费到的mid没有重复

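Chapter 3. DWM Layer: Bounce Details

1. Requirement analysis

1. What is a bounce

A bounce occurs when a user successfully visits an entry page of the site (for example the home page) and then leaves without visiting any other page.

Bounce rate formula: bounce rate = number of visits that leave after viewing one page / total number of entry visits

The bounce rate of a keyword shows how well users accept the site's content, in other words whether the site is attractive to them. Whether the content helps users and keeps them on the site can also be read directly from the bounce rate, so it is an important measure of content quality.

2. How to compute the bounce rate

First identify which behaviors are bounces, that is, find the last page visited by each bouncing visitor. Two characteristics matter:

- The page is the first page the user visited recently. This can be determined from whether the page has a previous page (last_page_id): if that field is empty, this is the first page the visitor opened.

- For a long time after that first visit, the user visits no other page. This can be implemented with Flink's CEP library: a bounce is essentially the combination of a condition event and a timeout event.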
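To make the two characteristics concrete, here is a minimal plain-Java sketch that classifies entry pages as bounces for a single visitor. It is not the CEP job itself: `BounceSketch`, `PageView`, `findBounces` and `bounceRate` are names invented for this illustration, and the input list is assumed to be sorted by timestamp. An entry page counts as a bounce unless it is followed, within the timeout, by a page that is not itself an entry page.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class BounceSketch {
    /** Hypothetical minimal page-view record; an empty lastPageId marks an entry page. */
    static final class PageView {
        final long ts;
        final String lastPageId;

        PageView(long ts, String lastPageId) {
            this.ts = ts;
            this.lastPageId = lastPageId;
        }

        boolean isEntry() {
            return lastPageId == null || lastPageId.isEmpty();
        }
    }

    /** Returns the entry pages that are bounces; views must be one visitor's pages sorted by ts. */
    static List<PageView> findBounces(List<PageView> views, long timeoutMillis) {
        List<PageView> bounces = new ArrayList<>();
        for (int i = 0; i < views.size(); i++) {
            PageView current = views.get(i);
            if (!current.isEntry()) {
                continue;
            }
            boolean continued = false;
            if (i + 1 < views.size()) {
                PageView next = views.get(i + 1);
                // The visit continued only if a non-entry page followed within the timeout
                continued = !next.isEntry() && next.ts - current.ts < timeoutMillis;
            }
            if (!continued) {
                bounces.add(current);
            }
        }
        return bounces;
    }

    /** bounce rate = bounces / entry visits (0 when there are no entries). */
    static double bounceRate(long bounceCount, long entryCount) {
        return entryCount == 0 ? 0.0 : (double) bounceCount / entryCount;
    }

    public static void main(String[] args) {
        List<PageView> views = Arrays.asList(
            new PageView(0L, null),        // entry, followed by a detail page
            new PageView(1_000L, "home"),
            new PageView(10_000L, null),   // entry, followed by another entry
            new PageView(11_000L, null),   // entry, followed by nothing for a long time
            new PageView(20_000L, null));  // entry, last event
        List<PageView> bounces = findBounces(views, 5_000L);
        System.out.println(bounces.size() + " bounces, rate " + bounceRate(bounces.size(), 4));
    }
}
```

The batch loop can look ahead in the list; a streaming job cannot, which is why the timeout branch needs something like CEP's `within` to decide that nothing more is coming.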

2. Implementation

```java
package com.atguigu.gmall.app.dwm;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.gmall.app.BaseAppV1;
import com.atguigu.gmall.common.Constant;
import com.atguigu.gmall.util.FlinkSinkUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.cep.CEP;
import org.apache.flink.cep.PatternSelectFunction;
import org.apache.flink.cep.PatternStream;
import org.apache.flink.cep.PatternTimeoutFunction;
import org.apache.flink.cep.pattern.Pattern;
import org.apache.flink.cep.pattern.conditions.SimpleCondition;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.OutputTag;

import java.time.Duration;
import java.util.List;
import java.util.Map;

public class DwmUserJumpDetailApp extends BaseAppV1 {
    public static void main(String[] args) {
        new DwmUserJumpDetailApp().init(3002, 1, "DwmUserJumpDetailApp", "DwmUserJumpDetailApp", Constant.TOPIC_DWD_PAGE);
    }

    @Override
    public void run(StreamExecutionEnvironment env, DataStreamSource<String> stream) {
        // 1. Build the keyed stream
        KeyedStream<JSONObject, String> keyedStream = stream
            .map(new MapFunction<String, JSONObject>() {
                @Override
                public JSONObject map(String value) throws Exception {
                    return JSON.parseObject(value);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<JSONObject>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((obj, ts) -> obj.getLong("ts"))
            )
            .keyBy(obj -> obj.getJSONObject("common").getString("mid"));

        // 2. Define the pattern
        Pattern<JSONObject, JSONObject> pattern = Pattern
            .<JSONObject>begin("entry1")
            .where(new SimpleCondition<JSONObject>() {
                @Override
                public boolean filter(JSONObject value) throws Exception {
                    // Entry page: last_page_id is null or empty
                    String lastPageId = value.getJSONObject("page").getString("last_page_id");
                    return lastPageId == null || lastPageId.length() == 0;
                }
            })
            .next("entry2")
            .where(new SimpleCondition<JSONObject>() {
                @Override
                public boolean filter(JSONObject value) throws Exception {
                    // Also an entry page: a second entry directly after the first means
                    // the first visit ended without viewing any other page
                    String lastPageId = value.getJSONObject("page").getString("last_page_id");
                    return lastPageId == null || lastPageId.length() == 0;
                }
            })
            .within(Time.seconds(5));

        // 3. Apply the pattern to the stream
        PatternStream<JSONObject> ps = CEP.pattern(keyedStream, pattern);

        // 4. Collect the results
        SingleOutputStreamOperator<JSONObject> normal = ps.select(
            new OutputTag<JSONObject>("jump") {},
            // Timed out: nothing followed entry1 within 5 seconds, so entry1 is a bounce
            new PatternTimeoutFunction<JSONObject, JSONObject>() {
                @Override
                public JSONObject timeout(Map<String, List<JSONObject>> map, long l) throws Exception {
                    return map.get("entry1").get(0);
                }
            },
            // Matched: entry1 was directly followed by another entry page, so entry1 is a bounce too
            new PatternSelectFunction<JSONObject, JSONObject>() {
                @Override
                public JSONObject select(Map<String, List<JSONObject>> map) throws Exception {
                    return map.get("entry1").get(0);
                }
            }
        );

        // Both the matched stream and the timeout side output carry bounce records
        normal
            .union(normal.getSideOutput(new OutputTag<JSONObject>("jump") {}))
            .map(new MapFunction<JSONObject, String>() {
                @Override
                public String map(JSONObject value) throws Exception {
                    return value.toJSONString();
                }
            })
            //.print();
            .addSink(FlinkSinkUtil.getKafkaSink(Constant.TOPIC_DWM_UJ_DETAIL));
    }
}
```

3.测试

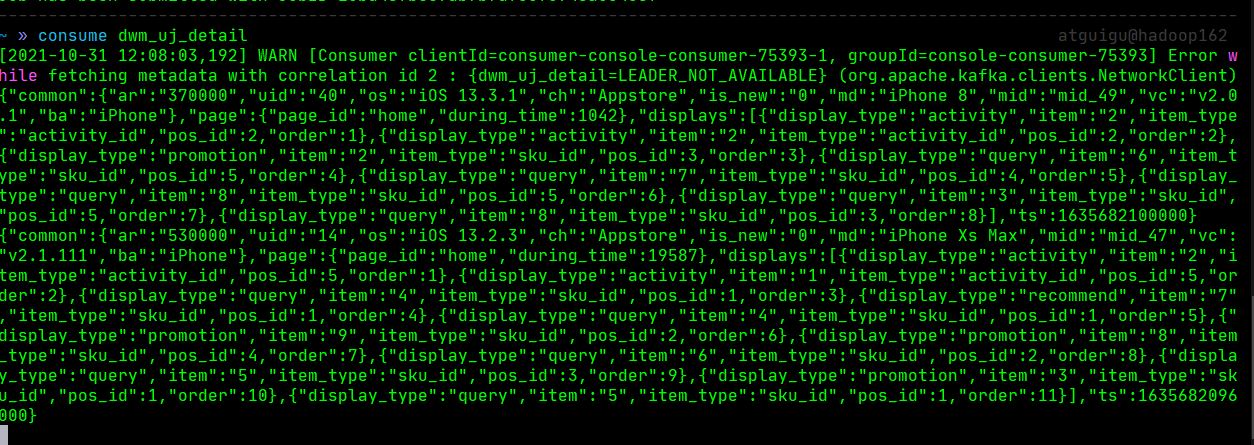

分别启动hadoop,zookeeper,kafka,gmall-logger,flink-yarn,dwdLogAPP,dwmUvApp,DwmUserJumpDetailApp

然后在终端起一个消费者消费 dwm_uj_detail

可以看到消费到的数据都是没有last_page_id的数据,即跳出数据

第四章.DWM层:订单宽表

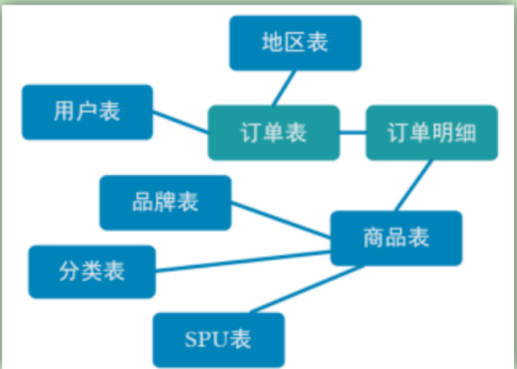

订单是统计分析的重要对象,围绕订单有很多的维度统计需求,比如用户,地区,商品,品类,品牌等

为了之后统计计算更加方便,减少大表之间的关联,所以在实时计算中将围绕订单的相关数据整合成为一张订单的宽表

如上图,由于在之前的操作我们已经把数据分拆成了事实数据和维度数据,事实数据(订单表,订单明细)进入kafka数据流(DWD层中),维度数据进入Hbase中长期保存,那么我们在DWM层中需要把实时和维度数据进行整合关联在一起,形成宽表,那么这里就要处理有两种关联:事实数据与事实数据关联,事实数据与维度数据关联1.事实数据与事实数据关联,其实就是流与流之间的关联2.事实数据与维度数据关联,其实就是流计算中查询外部数据源

1. Joining orders with order details

(1) Wrap a BaseApp that can consume several topics at once

```java
package com.atguigu.gmall.app;

import com.atguigu.gmall.util.FlinkSourceUtil;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.runtime.state.hashmap.HashMapStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.environment.CheckpointConfig;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;

import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;

public abstract class BaseAppV2 {
    public abstract void run(StreamExecutionEnvironment env,
                             HashMap<String, DataStreamSource<String>> stream);

    public void init(int port, int p, String ck, String groupId, String topic, String... otherTopics) {
        System.setProperty("HADOOP_USER_NAME", "atguigu");
        Configuration conf = new Configuration();
        conf.setInteger("rest.port", port); // port of the Flink web UI
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment(conf);
        env.setParallelism(p);

        // Checkpoint configuration
        env.enableCheckpointing(3000, CheckpointingMode.EXACTLY_ONCE);
        env.setStateBackend(new HashMapStateBackend());
        env.getCheckpointConfig().setCheckpointStorage("hdfs://hadoop162:8020/gmall/" + ck);
        env.getCheckpointConfig().setCheckpointTimeout(10 * 1000);
        env.getCheckpointConfig()
            .enableExternalizedCheckpoints(CheckpointConfig.ExternalizedCheckpointCleanup.RETAIN_ON_CANCELLATION);

        // Collect the mandatory topic and any additional topics
        ArrayList<String> topics = new ArrayList<>();
        topics.add(topic);
        topics.addAll(Arrays.asList(otherTopics));

        // One Kafka source stream per topic, keyed by topic name
        HashMap<String, DataStreamSource<String>> topicAndStreamMap = new HashMap<>();
        for (String t : topics) {
            DataStreamSource<String> stream = env.addSource(FlinkSourceUtil.getKafkaSource(groupId, t));
            topicAndStreamMap.put(t, stream);
        }

        // Each app supplies its own business logic
        run(env, topicAndStreamMap);

        try {
            env.execute(ck);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```

(2) Joining the fact tables

To make the data easier to work with, define a few POJO classes.

- OrderInfo.java

```java
package com.atguigu.gmall.pojo;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.math.BigDecimal;
import java.text.ParseException;
import java.text.SimpleDateFormat;

@Data
@AllArgsConstructor
@NoArgsConstructor
public class OrderInfo {
    private Long id; // order id
    private Long province_id;
    private String order_status;
    private Long user_id;
    private BigDecimal total_amount; // amount paid
    private BigDecimal activity_reduce_amount;
    private BigDecimal coupon_reduce_amount;
    private BigDecimal original_total_amount; // amount before discounts and shipping
    private BigDecimal feight_fee;
    private String expire_time;
    private String create_time; // yyyy-MM-dd HH:mm:ss
    private String operate_time;
    private String create_date; // derived from create_time
    private String create_hour;
    private Long create_ts;

    // Written by hand (instead of the Lombok setter) so that create_date,
    // create_hour and create_ts are filled in whenever create_time is set
    public void setCreate_time(String create_time) throws ParseException {
        this.create_time = create_time;

        this.create_date = this.create_time.substring(0, 10);  // yyyy-MM-dd
        this.create_hour = this.create_time.substring(11, 13); // HH

        final SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        this.create_ts = sdf.parse(create_time).getTime();
    }
}
```

- OrderDetail.java

```java
package com.atguigu.gmall.pojo;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.math.BigDecimal;
import java.text.ParseException;
import java.text.SimpleDateFormat;

@Data
@AllArgsConstructor
@NoArgsConstructor
public class OrderDetail {
    private Long id;
    private Long order_id;
    private Long sku_id;
    private BigDecimal order_price;
    private Long sku_num;
    private String sku_name;
    private String create_time;
    private BigDecimal split_total_amount;
    private BigDecimal split_activity_amount;
    private BigDecimal split_coupon_amount;
    private Long create_ts;

    // Written by hand (instead of the Lombok setter) so that create_ts
    // is filled in whenever create_time is set
    public void setCreate_time(String create_time) throws ParseException {
        this.create_time = create_time;
        final SimpleDateFormat sdf = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
        this.create_ts = sdf.parse(create_time).getTime();
    }
}
```

- OrderWide.java

```java
package com.atguigu.gmall.pojo;

import lombok.AllArgsConstructor;
import lombok.Data;
import lombok.NoArgsConstructor;

import java.math.BigDecimal;
import java.text.ParseException;
import java.text.SimpleDateFormat;

@Data
@AllArgsConstructor
@NoArgsConstructor
public class OrderWide {
    private Long detail_id;
    private Long order_id;
    private Long sku_id;
    private BigDecimal order_price;
    private Long sku_num;
    private String sku_name;
    private Long province_id;
    private String order_status;
    private Long user_id;

    private BigDecimal total_amount;
    private BigDecimal activity_reduce_amount;
    private BigDecimal coupon_reduce_amount;
    private BigDecimal original_total_amount;
    private BigDecimal feight_fee;
    private BigDecimal split_feight_fee;
    private BigDecimal split_activity_amount;
    private BigDecimal split_coupon_amount;
    private BigDecimal split_total_amount;

    private String expire_time;
    private String create_time;
    private String operate_time;
    private String create_date; // derived from create_time
    private String create_hour;

    private String province_name; // filled in from the dimension tables
    private String province_area_code;
    private String province_iso_code;
    private String province_3166_2_code;

    private Integer user_age;
    private String user_gender;

    private Long spu_id; // dimension data that has to be joined in
    private Long tm_id;
    private Long category3_id;
    private String spu_name;
    private String tm_name;
    private String category3_name;

    public OrderWide(OrderInfo orderInfo, OrderDetail orderDetail) {
        mergeOrderInfo(orderInfo);
        mergeOrderDetail(orderDetail);
    }

    public void mergeOrderInfo(OrderInfo orderInfo) {
        if (orderInfo != null) {
            this.order_id = orderInfo.getId();
            this.order_status = orderInfo.getOrder_status();
            this.create_time = orderInfo.getCreate_time();
            this.create_date = orderInfo.getCreate_date();
            this.create_hour = orderInfo.getCreate_hour();
            this.activity_reduce_amount = orderInfo.getActivity_reduce_amount();
            this.coupon_reduce_amount = orderInfo.getCoupon_reduce_amount();
            this.original_total_amount = orderInfo.getOriginal_total_amount();
            this.feight_fee = orderInfo.getFeight_fee();
            this.total_amount = orderInfo.getTotal_amount();
            this.province_id = orderInfo.getProvince_id();
            this.user_id = orderInfo.getUser_id();
        }
    }

    public void mergeOrderDetail(OrderDetail orderDetail) {
        if (orderDetail != null) {
            this.detail_id = orderDetail.getId();
            this.sku_id = orderDetail.getSku_id();
            this.sku_name = orderDetail.getSku_name();
            this.order_price = orderDetail.getOrder_price();
            this.sku_num = orderDetail.getSku_num();
            this.split_activity_amount = orderDetail.getSplit_activity_amount();
            this.split_coupon_amount = orderDetail.getSplit_coupon_amount();
            this.split_total_amount = orderDetail.getSplit_total_amount();
        }
    }

    // Approximate age in years: elapsed days divided by 365 (leap days are ignored)
    public void calcUserAge(String birthday) throws ParseException {
        long now = System.currentTimeMillis();
        long birth = new SimpleDateFormat("yyyy-MM-dd").parse(birthday).getTime();
        this.user_age = (int) ((now - birth) / 1000 / 60 / 60 / 24 / 365);
    }
}
```
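The `calcUserAge` method in OrderWide divides elapsed days by 365, which ignores leap days and can therefore report a birthday a few days early. If exact ages matter, one possible alternative is `java.time.Period`. The sketch below is only an illustration under that assumption: `AgeSketch` and `ageInYears` are hypothetical names, and the birthday is assumed to be in `yyyy-MM-dd` format as in the original method.

```java
import java.time.LocalDate;
import java.time.Period;

class AgeSketch {
    /** Whole years between the birthday (ISO yyyy-MM-dd) and the given date. */
    static int ageInYears(String birthday, LocalDate today) {
        LocalDate birth = LocalDate.parse(birthday);
        return Period.between(birth, today).getYears();
    }

    /** The divide-by-365 approximation, reproduced here for comparison. */
    static int approximateAgeInYears(String birthday, LocalDate today) {
        long days = today.toEpochDay() - LocalDate.parse(birthday).toEpochDay();
        return (int) (days / 365);
    }

    public static void main(String[] args) {
        LocalDate dayBeforeBirthday = LocalDate.of(2020, 2, 29);
        System.out.println(ageInYears("2000-03-01", dayBeforeBirthday));
        System.out.println(approximateAgeInYears("2000-03-01", dayBeforeBirthday));
    }
}
```

Passing the reference date in as a parameter, rather than reading the system clock inside the method, also makes the calculation easy to test.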

- Fact table join

```java
/**
 * Join of the fact tables (orderDetail and orderInfo)
 * @param streams source streams keyed by topic name
 */
private SingleOutputStreamOperator<OrderWide> joinFacts(HashMap<String, DataStreamSource<String>> streams) {
    KeyedStream<OrderInfo, Long> orderInfoStream = streams
        .get(Constant.TOPIC_DWD_ORDER_INFO)
        .map(new MapFunction<String, OrderInfo>() {
            @Override
            public OrderInfo map(String value) throws Exception {
                return JSON.parseObject(value, OrderInfo.class);
            }
        })
        .assignTimestampsAndWatermarks(
            WatermarkStrategy
                .<OrderInfo>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                .withTimestampAssigner((info, ts) -> info.getCreate_ts())
        )
        .keyBy(orderInfo -> orderInfo.getId());

    KeyedStream<OrderDetail, Long> orderDetailLongKeyedStream = streams
        .get(Constant.TOPIC_DWD_ORDER_DETAIL)
        .map(new MapFunction<String, OrderDetail>() {
            @Override
            public OrderDetail map(String value) throws Exception {
                return JSON.parseObject(value, OrderDetail.class);
            }
        })
        .assignTimestampsAndWatermarks(
            WatermarkStrategy
                .<OrderDetail>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                .withTimestampAssigner((info, ts) -> info.getCreate_ts())
        )
        .keyBy(orderDetail -> orderDetail.getOrder_id());

    // Pair each order with the details whose timestamp lies within 10 seconds of it
    return orderInfoStream
        .intervalJoin(orderDetailLongKeyedStream)
        .between(Time.seconds(-10), Time.seconds(10))
        .process(new ProcessJoinFunction<OrderInfo, OrderDetail, OrderWide>() {
            @Override
            public void processElement(OrderInfo left,
                                       OrderDetail right,
                                       Context ctx,
                                       Collector<OrderWide> out) throws Exception {
                out.collect(new OrderWide(left, right));
            }
        });
}
```
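The `between(Time.seconds(-10), Time.seconds(10))` call in the join above means that a detail record is paired with an order when both share the key and the detail's timestamp lies between 10 seconds before and 10 seconds after the order's timestamp, with both bounds inclusive by default. The plain-Java sketch below restates that condition as a nested loop over two lists; `IntervalJoinSketch`, `Event` and the method names are hypothetical and only illustrate the semantics, not how Flink evaluates the join internally.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

class IntervalJoinSketch {
    /** Hypothetical minimal event: a join key plus an event-time timestamp in milliseconds. */
    static final class Event {
        final long orderId;
        final long ts;

        Event(long orderId, long ts) {
            this.orderId = orderId;
            this.ts = ts;
        }
    }

    /** True when rightTs lies in [leftTs + lowerMillis, leftTs + upperMillis], bounds inclusive. */
    static boolean withinInterval(long leftTs, long rightTs, long lowerMillis, long upperMillis) {
        return rightTs >= leftTs + lowerMillis && rightTs <= leftTs + upperMillis;
    }

    /** Nested-loop version of the join; each result is the pair {order, detail}. */
    static List<Event[]> join(List<Event> orders, List<Event> details, long lowerMillis, long upperMillis) {
        List<Event[]> pairs = new ArrayList<>();
        for (Event order : orders) {
            for (Event detail : details) {
                if (order.orderId == detail.orderId
                        && withinInterval(order.ts, detail.ts, lowerMillis, upperMillis)) {
                    pairs.add(new Event[]{order, detail});
                }
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        List<Event> orders = Arrays.asList(new Event(1L, 100_000L));
        List<Event> details = Arrays.asList(
            new Event(1L, 105_000L),   // same order, 5 s later: joins
            new Event(1L, 120_000L),   // same order, 20 s later: outside the interval
            new Event(2L, 100_000L));  // different order: never joins
        System.out.println(join(orders, details, -10_000L, 10_000L).size() + " joined pair(s)");
    }
}
```

A symmetric interval is a reasonable choice here because an order and its details are written at almost the same moment, so either side may reach Kafka first.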

2. Joining the dimension tables

A dimension join is in effect a lookup, from within the stream, against tables stored in HBase.

Even when querying by primary key, an HBase lookup is slower than a join between streams. Queries against external data sources are often the performance bottleneck of stream processing, so there is room for optimization on top of this basic approach.

(1) Initialize the dimension data into HBase

HBase, Maxwell and DwdDbApp must be running.

Six dimension tables are used: user_info, base_province, sku_info, spu_info, base_category3, base_trademark

```shell
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table user_info --client_id maxwell_1
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table base_province --client_id maxwell_1
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table sku_info --client_id maxwell_1
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table spu_info --client_id maxwell_1
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table base_category3 --client_id maxwell_1
bin/maxwell-bootstrap --user root --password aaaaaa --host hadoop162 --database gmall2021 --table base_trademark --client_id maxwell_1
```

(2) Update the JdbcUtil utility class

Add support for querying data over JDBC.

```java
package com.atguigu.gmall.util;

import com.alibaba.fastjson.JSONObject;
import com.atguigu.gmall.common.Constant;
import org.apache.commons.beanutils.BeanUtils;

import java.sql.*;
import java.util.ArrayList;
import java.util.List;

public class JdbcUtil {
    public static Connection getPhoenixConnection(String phoenixUrl) throws SQLException, ClassNotFoundException {
        String phoenixDriver = Constant.PHOENIX_DRIVER;
        return getJdbcConnection(phoenixDriver, phoenixUrl);
    }

    private static Connection getJdbcConnection(String driver, String url) throws ClassNotFoundException, SQLException {
        Class.forName(driver);
        return DriverManager.getConnection(url);
    }

    public static void main(String[] args) throws SQLException, ClassNotFoundException {
        List<JSONObject> list = queryList(
            getPhoenixConnection(Constant.PHOENIX_URL),
            "select * from dim_user_info where id=?",
            new String[]{"1"},
            JSONObject.class);
        for (JSONObject jsonObject : list) {
            System.out.println(jsonObject);
        }
    }

    public static <T> List<T> queryList(Connection conn, String sql, Object[] args, Class<T> tClass) {
        ArrayList<T> result = new ArrayList<>();
        // Query the database
        try {
            PreparedStatement ps = conn.prepareStatement(sql);
            // Bind the placeholders in the SQL statement
            for (int i = 0; args != null && i < args.length; i++) {
                ps.setObject(i + 1, args[i]);
            }
            ResultSet resultSet = ps.executeQuery();
            ResultSetMetaData metaData = resultSet.getMetaData();
            while (resultSet.next()) {
                // Create a T and copy the value of every column into it
                T t = tClass.newInstance();
                // JDBC column indexes are 1-based, so the last index equals getColumnCount()
                for (int i = 1; i <= metaData.getColumnCount(); i++) {
                    String columnName = metaData.getColumnName(i);
                    Object value = resultSet.getObject(columnName);
                    BeanUtils.setProperty(t, columnName, value);
                }
                result.add(t);
            }
        } catch (Exception e) {
            e.printStackTrace();
        }
        return result;
    }
}
```

(3) Wrap a DimUtil class to simplify fetching data from Phoenix

```java
package com.atguigu.gmall.util;

import com.alibaba.fastjson.JSONObject;

import java.sql.Connection;
import java.util.List;

public class DimUtil {
    // Look up one dimension row by id; returns null unless exactly one row matches
    public static JSONObject getDimFromPhoenix(Connection phoenixConn, String table, Long id) {
        String sql = "select * from " + table + " where id=?";
        Object[] args = {id.toString()};
        List<JSONObject> result = JdbcUtil.queryList(phoenixConn, sql, args, JSONObject.class);
        return result.size() == 1 ? result.get(0) : null;
    }
}
```

(4) Dimension join code

```java
package com.atguigu.gmall.app.dwm;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.gmall.app.BaseAppV2;
import com.atguigu.gmall.common.Constant;
import com.atguigu.gmall.pojo.OrderDetail;
import com.atguigu.gmall.pojo.OrderInfo;
import com.atguigu.gmall.pojo.OrderWide;
import com.atguigu.gmall.util.DimUtil;
import com.atguigu.gmall.util.JdbcUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.functions.RichMapFunction;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;

import java.sql.Connection;
import java.time.Duration;
import java.util.HashMap;

public class DwmOrderWriteApp extends BaseAppV2 {
    public static void main(String[] args) {
        new DwmOrderWriteApp().init(3003, 1, "DwmOrderWriteApp", "DwmOrderWriteApp",
            Constant.TOPIC_DWD_ORDER_INFO, Constant.TOPIC_DWD_ORDER_DETAIL);
    }

    @Override
    public void run(StreamExecutionEnvironment env, HashMap<String, DataStreamSource<String>> streams) {
        //streams.get(Constant.TOPIC_DWD_ORDER_INFO).print(Constant.TOPIC_DWD_ORDER_INFO);
        //streams.get(Constant.TOPIC_DWD_ORDER_DETAIL).print(Constant.TOPIC_DWD_ORDER_DETAIL);

        // 1. Join the fact tables: interval join
        SingleOutputStreamOperator<OrderWide> orderWideStreamWithoutDims = joinFacts(streams);
        // 2. Join the dimension data
        joinDims(orderWideStreamWithoutDims);
        // 3. Write the result to Kafka to prepare data for the DWS layer
    }

    private void joinDims(SingleOutputStreamOperator<OrderWide> orderWideStreamWithoutDims) {
        // Join the 6 dimension tables:
        // use an id carried by the record to look up the matching row in each table
        orderWideStreamWithoutDims
            .map(new RichMapFunction<OrderWide, OrderWide>() {
                private Connection phoenixConnection;

                @Override
                public void open(Configuration parameters) throws Exception {
                    phoenixConnection = JdbcUtil.getPhoenixConnection(Constant.PHOENIX_URL);
                }

                @Override
                public OrderWide map(OrderWide orderWide) throws Exception {
                    // 1. user
                    JSONObject userInfo = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_user_info", orderWide.getUser_id());
                    orderWide.setUser_gender(userInfo.getString("GENDER"));
                    orderWide.calcUserAge(userInfo.getString("BIRTHDAY"));

                    // 2. province
                    JSONObject provinceInfo = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_base_province", orderWide.getProvince_id());
                    orderWide.setProvince_name(provinceInfo.getString("NAME"));
                    orderWide.setProvince_iso_code(provinceInfo.getString("ISO_CODE"));
                    orderWide.setProvince_area_code(provinceInfo.getString("AREA_CODE"));
                    orderWide.setProvince_3166_2_code(provinceInfo.getString("ISO_3166_2"));

                    // 3. sku
                    JSONObject skuInfo = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_sku_info", orderWide.getSku_id());
                    orderWide.setSku_name(skuInfo.getString("SKU_NAME"));
                    orderWide.setSpu_id(skuInfo.getLong("SPU_ID"));
                    orderWide.setTm_id(skuInfo.getLong("TM_ID"));
                    orderWide.setCategory3_id(skuInfo.getLong("CATEGORY3_ID"));

                    // 4. spu
                    JSONObject spuInfo = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_spu_info", orderWide.getSpu_id());
                    orderWide.setSpu_name(spuInfo.getString("SPU_NAME"));

                    // 5. trademark
                    JSONObject tmInfo = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_base_trademark", orderWide.getTm_id());
                    orderWide.setTm_name(tmInfo.getString("TM_NAME"));

                    // 6. category3
                    JSONObject c3Info = DimUtil.getDimFromPhoenix(phoenixConnection, "dim_base_category3", orderWide.getCategory3_id());
                    orderWide.setCategory3_name(c3Info.getString("NAME"));

                    return orderWide;
                }
            })
            .print();
    }

    /**
     * Join of the fact tables (orderDetail and orderInfo)
     * @param streams source streams keyed by topic name
     */
    private SingleOutputStreamOperator<OrderWide> joinFacts(HashMap<String, DataStreamSource<String>> streams) {
        KeyedStream<OrderInfo, Long> orderInfoStream = streams
            .get(Constant.TOPIC_DWD_ORDER_INFO)
            .map(new MapFunction<String, OrderInfo>() {
                @Override
                public OrderInfo map(String value) throws Exception {
                    return JSON.parseObject(value, OrderInfo.class);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<OrderInfo>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((info, ts) -> info.getCreate_ts())
            )
            .keyBy(orderInfo -> orderInfo.getId());

        KeyedStream<OrderDetail, Long> orderDetailLongKeyedStream = streams
            .get(Constant.TOPIC_DWD_ORDER_DETAIL)
            .map(new MapFunction<String, OrderDetail>() {
                @Override
                public OrderDetail map(String value) throws Exception {
                    return JSON.parseObject(value, OrderDetail.class);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<OrderDetail>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((info, ts) -> info.getCreate_ts())
            )
            .keyBy(orderDetail -> orderDetail.getOrder_id());

        return orderInfoStream
            .intervalJoin(orderDetailLongKeyedStream)
            .between(Time.seconds(-10), Time.seconds(10))
            .process(new ProcessJoinFunction<OrderInfo, OrderDetail, OrderWide>() {
                @Override
                public void processElement(OrderInfo left,
                                           OrderDetail right,
                                           Context ctx,
                                           Collector<OrderWide> out) throws Exception {
                    out.collect(new OrderWide(left, right));
                }
            });
    }
}
```

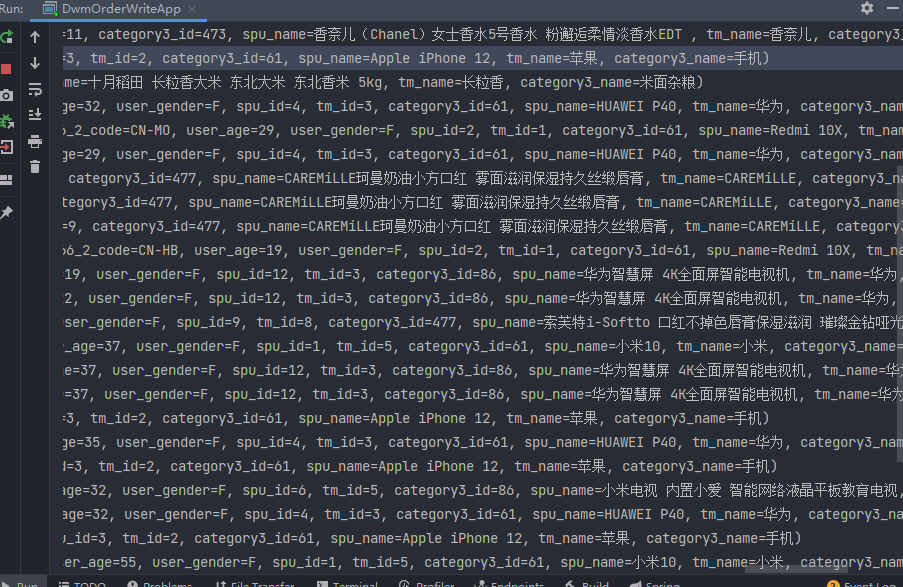

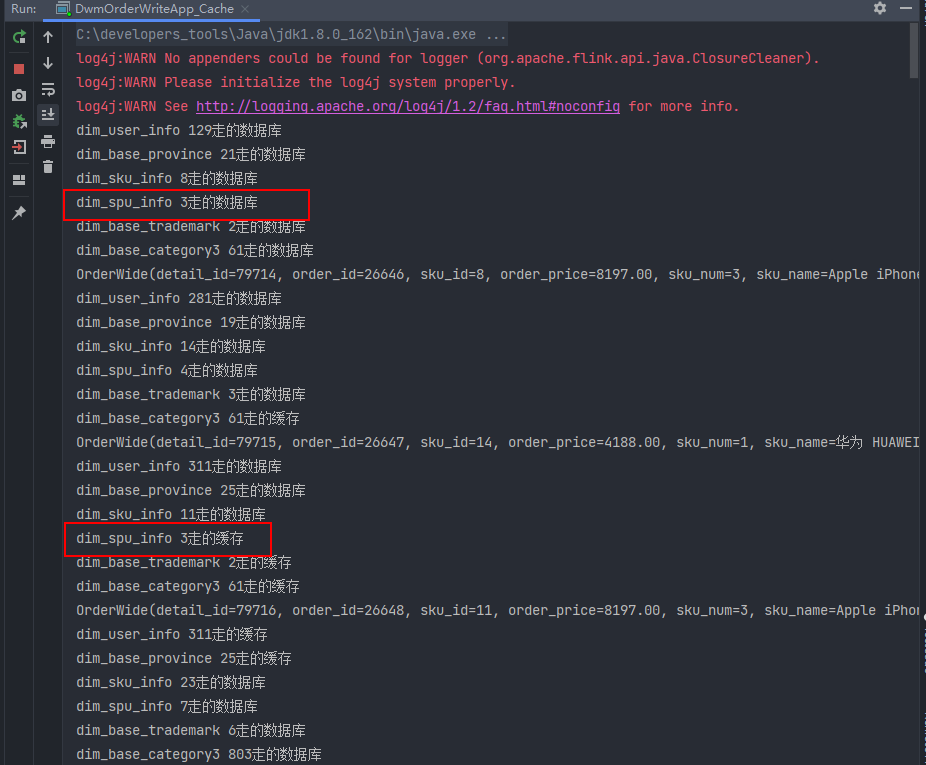

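(5) Start the app and test; the dimension fields are now populated.

3. Dimension lookup optimization 1: adding a cache

The previous section queried HBase directly. Because queries against external data sources are often the performance bottleneck of stream processing, we optimize that implementation by adding a Redis cache.

(1) Why use a Redis cache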

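Goal: read a record from the database the first time, and from the cache (memory) from then on.

1. Cache the dimension data in Flink state

Advantages: local memory, fast, no network access, and fairly rich data structures.

Drawbacks: (a) it puts pressure on Flink's memory; (b) when a dimension changes, the cached data has no way of seeing the change, because the cache lives in the DWM-layer application while the dimension data is written to HBase by DwdDbApp, so only that app knows about the change.

2. Cache the dimension data in a dedicated external cache: Redis

Advantages: a dedicated cache with large capacity and good speed; when a dimension changes, DwdDbApp can access Redis directly and update the cache.

Drawbacks: every access goes over the network.

3. On balance, the Redis cache is the better choice.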
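The read path described above is the usual cache-aside pattern, and the update path is a write from the owner of the dimension data. A minimal in-memory sketch follows; it is an illustration only, with a `HashMap` standing in for Redis, a `Function` standing in for the Phoenix lookup, and `CacheAsideSketch` and its members being hypothetical names.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

class CacheAsideSketch {
    private final Map<String, String> cache = new HashMap<>(); // stands in for Redis
    private final Function<String, String> database;           // stands in for the Phoenix lookup
    int databaseHits = 0;                                       // counts how often the database was queried

    CacheAsideSketch(Function<String, String> database) {
        this.database = database;
    }

    /** Read path: cache first, database on a miss, then populate the cache. */
    String get(String table, long id) {
        String key = table + ":" + id;
        String value = cache.get(key);
        if (value == null) {
            databaseHits++;
            value = database.apply(key);
            if (value != null) {
                cache.put(key, value); // only existing rows are cached
            }
        }
        return value;
    }

    /** Update path: the writer of the dimension refreshes an entry that is already cached. */
    void onDimensionUpdated(String table, long id, String newValue) {
        String key = table + ":" + id;
        if (cache.containsKey(key)) {
            cache.put(key, newValue);
        }
    }

    public static void main(String[] args) {
        CacheAsideSketch dims = new CacheAsideSketch(key -> "row-for-" + key);
        dims.get("dim_user_info", 1L); // miss: goes to the database
        dims.get("dim_user_info", 1L); // hit: served from the cache
        System.out.println("database hits: " + dims.databaseHits);
    }
}
```

Refreshing only keys that are already cached keeps the cache limited to records the stream has actually asked for.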

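(2) Choosing the Redis data structure

| Data structure | key | value | Drawbacks | Advantages |
|---|---|---|---|---|
| String | table name plus id (dim_user_info:1) | JSON string | Many keys, harder to manage | 1. Easy to read and write 2. Each key can have its own expiry time |
| list | table name (dim_user_info) | one JSON string per record | Reading a single record is hard | One key per table, easy to write |
| set | table name (dim_user_info) | one JSON string per record | Reading a single record is hard | One key per table, easy to write |
| hash | table name | field + value | No way to set an expiry time per record | One key per table, easy to read and write |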

On balance, the String structure is the most suitable.
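The deciding advantage of the String layout is that every `table:id` key carries its own expiry. The toy store below imitates that behavior in memory so the effect can be seen without a Redis server. It is a sketch under stated assumptions: `TtlStringStore` is a hypothetical class, its `setex` only mirrors the idea of the Redis command of the same name, and the current time is passed in explicitly to keep the example deterministic.

```java
import java.util.HashMap;
import java.util.Map;

class TtlStringStore {
    private static final class Entry {
        final String value;
        final long expiresAtMillis;

        Entry(String value, long expiresAtMillis) {
            this.value = value;
            this.expiresAtMillis = expiresAtMillis;
        }
    }

    private final Map<String, Entry> entries = new HashMap<>();

    /** Key layout from the table above: table name, a colon, then the record id. */
    static String key(String table, long id) {
        return table + ":" + id;
    }

    /** Stores a value that expires ttlSeconds after nowMillis. */
    void setex(String key, long ttlSeconds, String value, long nowMillis) {
        entries.put(key, new Entry(value, nowMillis + ttlSeconds * 1000));
    }

    /** Returns the value, or null when the key is missing or has expired. */
    String get(String key, long nowMillis) {
        Entry entry = entries.get(key);
        if (entry == null) {
            return null;
        }
        if (nowMillis >= entry.expiresAtMillis) {
            entries.remove(key); // drop the expired record
            return null;
        }
        return entry.value;
    }

    public static void main(String[] args) {
        TtlStringStore store = new TtlStringStore();
        store.setex(key("dim_user_info", 1L), 10, "{\"id\":1}", 0L);
        store.setex(key("dim_user_info", 2L), 60, "{\"id\":2}", 0L);
        // After 30 seconds the first record has expired while the second is still available
        System.out.println(store.get(key("dim_user_info", 1L), 30_000L));
        System.out.println(store.get(key("dim_user_info", 2L), 30_000L));
    }
}
```

With a hash, the two records would live under one key and share a single expiry, which is the drawback listed in the table.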

(3) Wrap a Redis client utility class

- Add the Jedis dependency

```xml
<dependency>
    <groupId>redis.clients</groupId>
    <artifactId>jedis</artifactId>
    <version>3.2.0</version>
</dependency>
```

- Redis utility class

```java
package com.atguigu.gmall.util;

import redis.clients.jedis.Jedis;
import redis.clients.jedis.JedisPool;
import redis.clients.jedis.JedisPoolConfig;

public class RedisUtil {
    private static JedisPool pool;

    static {
        JedisPoolConfig conf = new JedisPoolConfig();
        conf.setMaxTotal(300);
        conf.setMaxIdle(10);
        conf.setMaxWaitMillis(10 * 1000);
        conf.setMinIdle(5);
        conf.setTestOnBorrow(true);
        conf.setTestOnCreate(true);
        conf.setTestOnReturn(true);
        pool = new JedisPool(conf, "hadoop162", 6379);
    }

    // Borrow a client from the pool; calling close() on it returns it to the pool
    public static Jedis getRedisClient() {
        return pool.getResource();
    }
}
```

(4) Read dimension data through the cache

- DimUtil.java

```java
package com.atguigu.gmall.util;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import redis.clients.jedis.Jedis;

import java.sql.Connection;
import java.util.List;

public class DimUtil {
    public static JSONObject getDimFromPhoenix(Connection phoenixConn, String table, Long id) {
        String sql = "select * from " + table + " where id=?";
        Object[] args = {id.toString()};
        List<JSONObject> result = JdbcUtil.queryList(phoenixConn, sql, args, JSONObject.class);
        return result.size() == 1 ? result.get(0) : null;
    }

    public static JSONObject getDim(Connection phoenixConnection, Jedis redisClient, String table, Long id) {
        // 1. Try Redis first
        JSONObject dim = getDimFromRedis(redisClient, table, id);
        if (dim == null) {
            // 2. Cache miss: read from Phoenix and write the result back to Redis
            System.out.println(table + " " + id + " read from the database");
            dim = getDimFromPhoenix(phoenixConnection, table, id);
            if (dim != null) { // nothing to cache when the row does not exist
                writeToRedis(redisClient, table, id, dim);
            }
        } else {
            System.out.println(table + " " + id + " read from the cache");
        }
        // 3. Return the dimension record
        return dim;
    }

    private static void writeToRedis(Jedis redisClient, String table, Long id, JSONObject dim) {
        String key = table + ":" + id;
        String value = dim.toJSONString();
        // Keep each cached record for 3 days
        redisClient.setex(key, 3 * 24 * 60 * 60, value);
    }

    private static JSONObject getDimFromRedis(Jedis redisClient, String table, Long id) {
        String key = table + ":" + id;
        String dim = redisClient.get(key); // null when the key is not cached
        return dim == null ? null : JSON.parseObject(dim);
    }
}
```

- DwmOrderWriteApp_Cache.java

```java
package com.atguigu.gmall.app.dwm;

import com.alibaba.fastjson.JSON;
import com.alibaba.fastjson.JSONObject;
import com.atguigu.gmall.app.BaseAppV2;
import com.atguigu.gmall.common.Constant;
import com.atguigu.gmall.pojo.OrderDetail;
import com.atguigu.gmall.pojo.OrderInfo;
import com.atguigu.gmall.pojo.OrderWide;
import com.atguigu.gmall.util.DimUtil;
import com.atguigu.gmall.util.JdbcUtil;
import com.atguigu.gmall.util.RedisUtil;
import org.apache.flink.api.common.eventtime.WatermarkStrategy;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.functions.RichMapFunction;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.datastream.DataStreamSource;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.datastream.SingleOutputStreamOperator;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.util.Collector;
import redis.clients.jedis.Jedis;

import java.sql.Connection;
import java.time.Duration;
import java.util.HashMap;

public class DwmOrderWriteApp_Cache extends BaseAppV2 {
    public static void main(String[] args) {
        new DwmOrderWriteApp_Cache().init(3004, 1, "DwmOrderWriteApp_Cache", "DwmOrderWriteApp_Cache",
            Constant.TOPIC_DWD_ORDER_INFO, Constant.TOPIC_DWD_ORDER_DETAIL);
    }

    @Override
    public void run(StreamExecutionEnvironment env, HashMap<String, DataStreamSource<String>> streams) {
        //streams.get(Constant.TOPIC_DWD_ORDER_INFO).print(Constant.TOPIC_DWD_ORDER_INFO);
        //streams.get(Constant.TOPIC_DWD_ORDER_DETAIL).print(Constant.TOPIC_DWD_ORDER_DETAIL);

        // 1. Join the fact tables: interval join
        SingleOutputStreamOperator<OrderWide> orderWideStreamWithoutDims = joinFacts(streams);
        // 2. Join the dimension data
        joinDims(orderWideStreamWithoutDims);
        // 3. Write the result to Kafka to prepare data for the DWS layer
    }

    private void joinDims(SingleOutputStreamOperator<OrderWide> orderWideStreamWithoutDims) {
        // Join the 6 dimension tables:
        // use an id carried by the record to look up the matching row in each table
        orderWideStreamWithoutDims
            .map(new RichMapFunction<OrderWide, OrderWide>() {
                private Jedis redisClient;
                private Connection phoenixConnection;

                @Override
                public void open(Configuration parameters) throws Exception {
                    phoenixConnection = JdbcUtil.getPhoenixConnection(Constant.PHOENIX_URL);
                    // Obtain a Redis client
                    redisClient = RedisUtil.getRedisClient();
                }

                @Override
                public void close() throws Exception {
                    if (phoenixConnection != null) {
                        phoenixConnection.close();
                    }
                    if (redisClient != null) {
                        // A client created with new Jedis() is closed here;
                        // a client borrowed from a pool is returned to the pool instead
                        redisClient.close();
                    }
                }

                @Override
                public OrderWide map(OrderWide orderWide) throws Exception {
                    // 1. user
                    JSONObject userInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_user_info", orderWide.getUser_id());
                    orderWide.setUser_gender(userInfo.getString("GENDER"));
                    orderWide.calcUserAge(userInfo.getString("BIRTHDAY"));

                    // 2. province
                    JSONObject provinceInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_province", orderWide.getProvince_id());
                    orderWide.setProvince_name(provinceInfo.getString("NAME"));
                    orderWide.setProvince_iso_code(provinceInfo.getString("ISO_CODE"));
                    orderWide.setProvince_area_code(provinceInfo.getString("AREA_CODE"));
                    orderWide.setProvince_3166_2_code(provinceInfo.getString("ISO_3166_2"));

                    // 3. sku
                    JSONObject skuInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_sku_info", orderWide.getSku_id());
                    orderWide.setSku_name(skuInfo.getString("SKU_NAME"));
                    orderWide.setSpu_id(skuInfo.getLong("SPU_ID"));
                    orderWide.setTm_id(skuInfo.getLong("TM_ID"));
                    orderWide.setCategory3_id(skuInfo.getLong("CATEGORY3_ID"));

                    // 4. spu
                    JSONObject spuInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_spu_info", orderWide.getSpu_id());
                    orderWide.setSpu_name(spuInfo.getString("SPU_NAME"));

                    // 5. trademark
                    JSONObject tmInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_trademark", orderWide.getTm_id());
                    orderWide.setTm_name(tmInfo.getString("TM_NAME"));

                    // 6. category3
                    JSONObject c3Info = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_category3", orderWide.getCategory3_id());
                    orderWide.setCategory3_name(c3Info.getString("NAME"));

                    return orderWide;
                }
            })
            .print();
    }

    /**
     * Join of the fact tables (orderDetail and orderInfo)
     * @param streams source streams keyed by topic name
     */
    private SingleOutputStreamOperator<OrderWide> joinFacts(HashMap<String, DataStreamSource<String>> streams) {
        KeyedStream<OrderInfo, Long> orderInfoStream = streams
            .get(Constant.TOPIC_DWD_ORDER_INFO)
            .map(new MapFunction<String, OrderInfo>() {
                @Override
                public OrderInfo map(String value) throws Exception {
                    return JSON.parseObject(value, OrderInfo.class);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<OrderInfo>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((info, ts) -> info.getCreate_ts())
            )
            .keyBy(orderInfo -> orderInfo.getId());

        KeyedStream<OrderDetail, Long> orderDetailLongKeyedStream = streams
            .get(Constant.TOPIC_DWD_ORDER_DETAIL)
            .map(new MapFunction<String, OrderDetail>() {
                @Override
                public OrderDetail map(String value) throws Exception {
                    return JSON.parseObject(value, OrderDetail.class);
                }
            })
            .assignTimestampsAndWatermarks(
                WatermarkStrategy
                    .<OrderDetail>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                    .withTimestampAssigner((info, ts) -> info.getCreate_ts())
            )
            .keyBy(orderDetail -> orderDetail.getOrder_id());

        return orderInfoStream
            .intervalJoin(orderDetailLongKeyedStream)
            .between(Time.seconds(-10), Time.seconds(10))
            .process(new ProcessJoinFunction<OrderInfo, OrderDetail, OrderWide>() {
                @Override
                public void processElement(OrderInfo left,
                                           OrderDetail right,
                                           Context ctx,
                                           Collector<OrderWide> out) throws Exception {
                    out.collect(new OrderWide(left, right));
                }
            });
    }
}
```

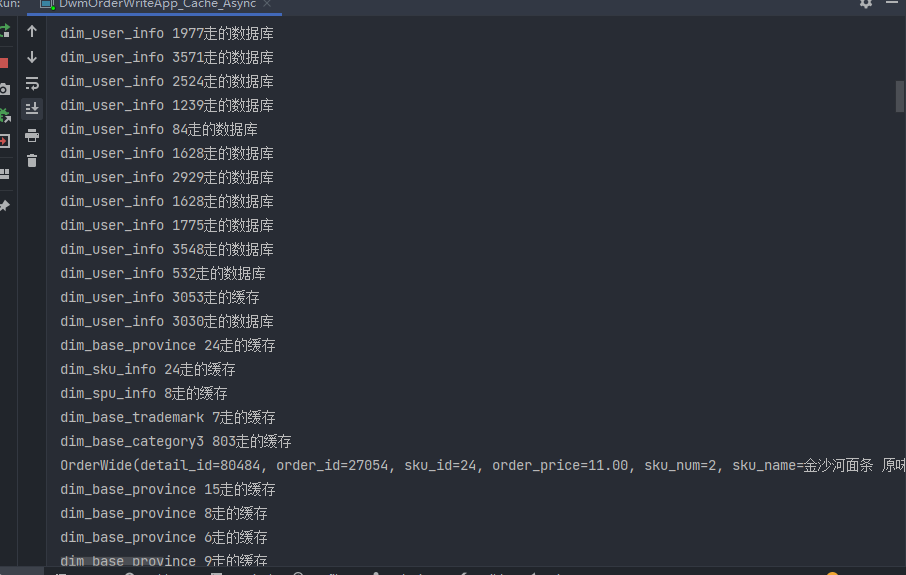

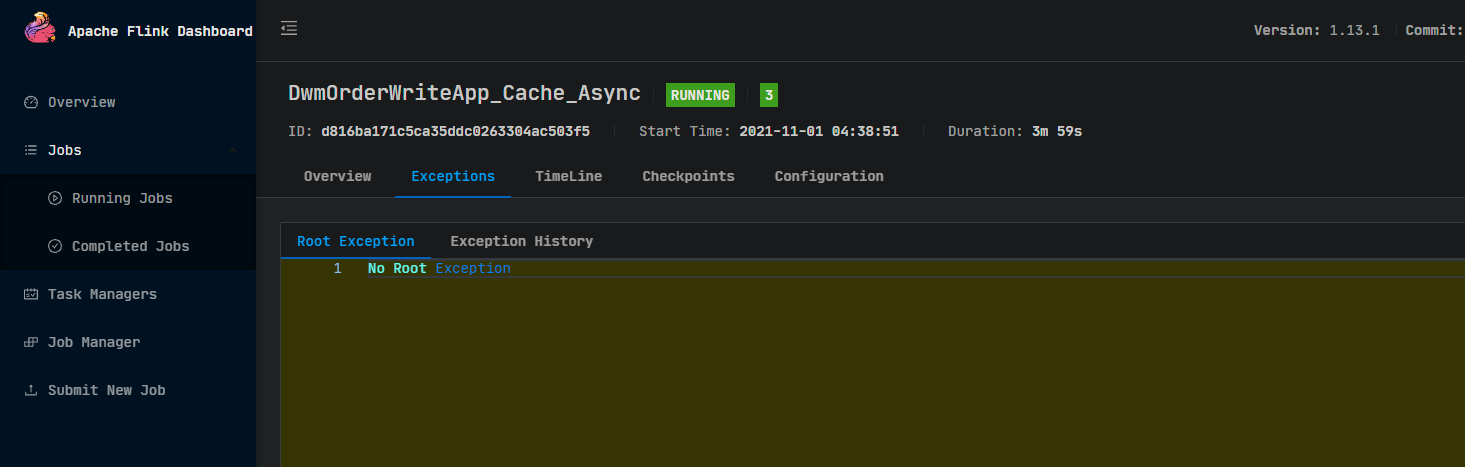

- Start the app and test

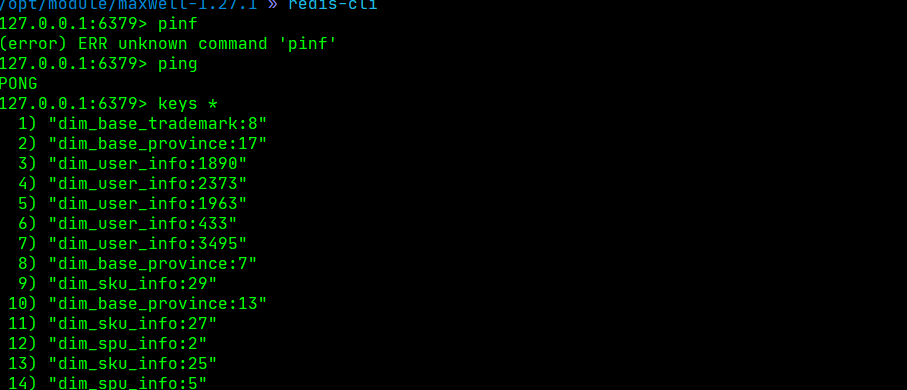

As the output shows, dimension data can now be read from Redis.
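The read path tested here follows the usual cache-aside pattern: check the cache first, fall back to the slower store only on a miss, then populate the cache. `DimUtil.getDim` is not reproduced in this section, so the following is only a minimal sketch of the idea, not the project's implementation. The class `CacheAsideSketch`, the in-memory `HashMap` standing in for Redis, and the `Function` standing in for the Phoenix query are all hypothetical; the `table:id` key format mirrors the one used in `PhoenixSink.updateCache`.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

public class CacheAsideSketch {
    // Stand-in for Redis: key -> cached dimension row (hypothetical, in-memory only)
    private final Map<String, String> cache = new HashMap<>();
    // Stand-in for the Phoenix query: key -> dimension row (hypothetical)
    private final Function<String, String> slowStore;
    // Counts how often the slow store was actually queried
    private int storeReads = 0;

    public CacheAsideSketch(Function<String, String> slowStore) {
        this.slowStore = slowStore;
    }

    public String getDim(String table, Object id) {
        String key = table + ":" + id;
        String cached = cache.get(key);
        if (cached != null) {
            return cached; // cache hit: no query against the slow store
        }
        storeReads++;
        String row = slowStore.apply(key); // cache miss: query the slow store
        if (row != null) {
            cache.put(key, row); // populate the cache for the next lookup
        }
        return row;
    }

    public int getStoreReads() {
        return storeReads;
    }

    public static void main(String[] args) {
        CacheAsideSketch dims = new CacheAsideSketch(key -> "row-for-" + key);
        dims.getDim("dim_user_info", 1L);
        dims.getDim("dim_user_info", 1L);
        System.out.println("store reads: " + dims.getStoreReads()); // prints "store reads: 1"
    }
}
```

The second lookup for the same key is served from the cache, which is the effect the Redis test above demonstrates.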

V. Updating the cache in DwdDbApp

- PhoenixSink.java

    package com.atguigu.gmall.sink;

    import com.alibaba.fastjson.JSONObject;
    import com.atguigu.gmall.common.Constant;
    import com.atguigu.gmall.pojo.TableProcess;
    import com.atguigu.gmall.util.JdbcUtil;
    import com.atguigu.gmall.util.RedisUtil;
    import org.apache.flink.api.common.state.ValueState;
    import org.apache.flink.api.common.state.ValueStateDescriptor;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.sink.RichSinkFunction;
    import org.apache.flink.streaming.api.functions.sink.SinkFunction;
    import redis.clients.jedis.Jedis;

    import java.io.IOException;
    import java.sql.Connection;
    import java.sql.PreparedStatement;
    import java.sql.SQLException;
    import java.util.Map;

    public class PhoenixSink extends RichSinkFunction<Tuple2<JSONObject, TableProcess>> {
        private Connection conn;
        // Keyed state: this sink must therefore be applied to a keyed stream
        private ValueState<Boolean> isFirst;
        private Jedis redisClient;

        /**
         * Opens the connections to Phoenix and Redis.
         */
        @Override
        public void open(Configuration parameters) throws Exception {
            String url = Constant.PHOENIX_URL;
            conn = JdbcUtil.getPhoenixConnection(url);
            isFirst = getRuntimeContext().getState(new ValueStateDescriptor<Boolean>("isFirst", Boolean.class));
            redisClient = RedisUtil.getRedisClient();
        }

        /**
         * Closes the connections.
         */
        @Override
        public void close() throws Exception {
            if (conn != null) {
                conn.close();
            }
            // The original listing left the Redis client open; release it as well
            if (redisClient != null) {
                redisClient.close();
            }
        }

        @Override
        public void invoke(Tuple2<JSONObject, TableProcess> value, Context context) throws Exception {
            // 1. Create the table if necessary
            checkTable(value);
            // 2. Write the data
            writeData(value);
            // 3. Update the cache
            updateCache(value);
        }

        private void updateCache(Tuple2<JSONObject, TableProcess> value) {
            JSONObject data = value.f0;
            TableProcess tp = value.f1;
            String key = tp.getSink_table() + ":" + data.getLong("id");
            // Refresh the cache only when this is an update to a dimension row that is already cached
            if ("update".equals(tp.getOperate_type()) && redisClient.exists(key)) {
                // Field names must be upper case to match the keys read on the query side (e.g. "GENDER")
                JSONObject upperDim = new JSONObject();
                for (Map.Entry<String, Object> entry : data.entrySet()) {
                    upperDim.put(entry.getKey().toUpperCase(), entry.getValue());
                }
                redisClient.set(key, upperDim.toString());
            }
        }

        private void writeData(Tuple2<JSONObject, TableProcess> value) throws SQLException {
            JSONObject data = value.f0;
            TableProcess tp = value.f1;
            // Build the statement: upsert into t(a,b,c) values(?,?,?)
            StringBuilder sql = new StringBuilder();
            sql.append("upsert into ")
                    .append(tp.getSink_table())
                    .append("(")
                    .append(tp.getSink_columns())
                    .append(")values(")
                    .append(tp.getSink_columns().replaceAll("[^,]+", "?"))
                    .append(")");
            PreparedStatement ps = conn.prepareStatement(sql.toString());
            // Bind the placeholders: look up each column name in data
            String[] cs = tp.getSink_columns().split(",");
            for (int i = 0; i < cs.length; i++) {
                String columnName = cs[i];
                Object v = data.get(columnName);
                ps.setString(i + 1, v == null ? null : v.toString());
            }
            // Execute the SQL
            ps.execute();
            conn.commit();
            ps.close();
        }

        /**
         * Creates the table in Phoenix by executing a create-table statement.
         * Runs once per key, guarded by the isFirst state.
         */
        private void checkTable(Tuple2<JSONObject, TableProcess> value) throws IOException, SQLException {
            if (isFirst.value() == null) {
                TableProcess tp = value.f1;
                StringBuilder sql = new StringBuilder();
                sql.append("create table if not exists ")
                        .append(tp.getSink_table())
                        .append("(")
                        .append(tp.getSink_columns().replaceAll(",", " varchar, "))
                        .append(" varchar, constraint pk primary key(")
                        .append(tp.getSink_pk() == null ? "id" : tp.getSink_pk())
                        .append("))")
                        .append(tp.getSink_extend() == null ? "" : tp.getSink_extend());
                System.out.println(sql.toString());
                PreparedStatement ps = conn.prepareStatement(sql.toString());
                ps.execute();
                conn.commit();
                ps.close();
                isFirst.update(true);
            }
        }
    }
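The least obvious step in `writeData` is how the `?` placeholders are produced from the column list: `replaceAll("[^,]+", "?")` replaces every run of non-comma characters, that is every column name, with a single `?`. A minimal standalone sketch of just that string building follows; the class name `UpsertSqlSketch` and the example table and columns are made up for illustration.

```java
public class UpsertSqlSketch {
    // Builds "upsert into t(a,b,c)values(?,?,?)" from a table name and a comma-separated column list
    static String buildUpsert(String table, String columns) {
        // Each run of non-comma characters (one column name) becomes one "?"
        String placeholders = columns.replaceAll("[^,]+", "?");
        return "upsert into " + table + "(" + columns + ")values(" + placeholders + ")";
    }

    public static void main(String[] args) {
        // prints: upsert into dim_user_info(id,name,gender)values(?,?,?)
        System.out.println(buildUpsert("dim_user_info", "id,name,gender"));
    }
}
```

Because the number of placeholders always equals the number of column names, the binding loop over `split(",")` lines up with them one to one.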

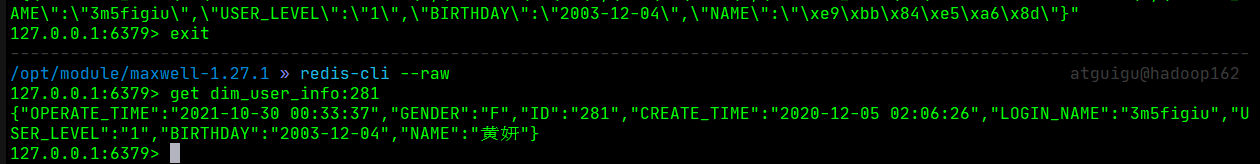

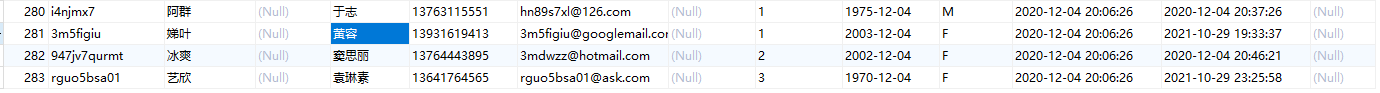

- Test (repackage the program, upload it to the Linux host, and start it)

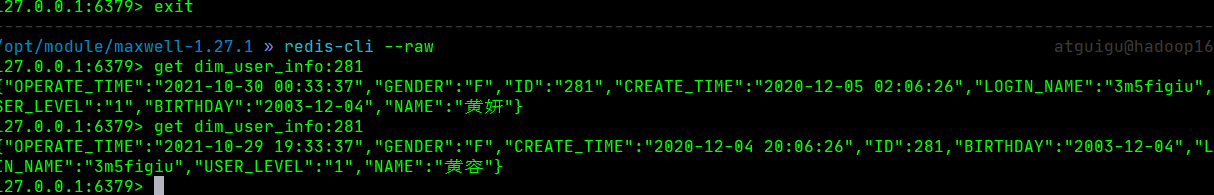

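The user info entry in Redis before the business data is changed

Change the corresponding name in the database to 黄蓉

Check Redis again: the name has been updated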
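The behaviour verified by this test can be sketched in isolation: a cached row is overwritten only when the operation is an `update` and the key is already cached, and the field names are upper-cased before storing so they match the keys read on the query side. The class `CacheUpdateSketch` and the `HashMap` standing in for Redis are hypothetical; only the condition and the key format are taken from `updateCache`.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

public class CacheUpdateSketch {
    // Stand-in for Redis: key -> cached row (hypothetical, in-memory only)
    static final Map<String, Map<String, Object>> CACHE = new HashMap<>();

    // Copies the row with all field names converted to upper case
    static Map<String, Object> upperCaseKeys(Map<String, Object> row) {
        Map<String, Object> upper = new LinkedHashMap<>();
        for (Map.Entry<String, Object> e : row.entrySet()) {
            upper.put(e.getKey().toUpperCase(), e.getValue());
        }
        return upper;
    }

    // Returns true if the cached entry was refreshed
    static boolean updateCache(String table, String operateType, Map<String, Object> row) {
        String key = table + ":" + row.get("id");
        if ("update".equals(operateType) && CACHE.containsKey(key)) {
            CACHE.put(key, upperCaseKeys(row));
            return true;
        }
        return false; // inserts and uncached rows are left alone
    }

    public static void main(String[] args) {
        Map<String, Object> row = new HashMap<>();
        row.put("id", 1L);
        row.put("name", "new-name");
        System.out.println(updateCache("dim_user_info", "update", row)); // false: not cached yet
        CACHE.put("dim_user_info:1", new HashMap<>());
        System.out.println(updateCache("dim_user_info", "update", row)); // true: entry refreshed
        System.out.println(CACHE.get("dim_user_info:1").get("NAME"));    // new-name
    }
}
```

Rows that were never cached are skipped on purpose: they will be loaded from the store on their first lookup anyway.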

4. Dimension management optimization 2: asynchronous queries

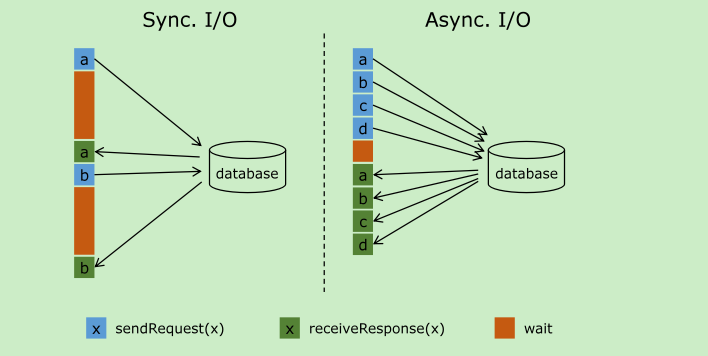

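I. Introduction to async I/O

In Flink stream processing, a job often has to interact with external systems to fill in the fields of a fact record from dimension tables. In e-commerce, for example, a sku id is used to look up product attributes such as the product's industry, its manufacturer, and details about that manufacturer. In logistics, a parcel id is used to look up the parcel's industry attributes, shipping information, delivery information, and so on.

By default, inside a Flink MapFunction each parallel instance can only interact synchronously: it sends a request to the external store, blocks on I/O until the response returns, and only then sends the next request. With this approach a large share of the time is spent waiting on the network. Raising the parallelism of the MapFunction improves throughput, but more parallelism means more resources, so it is not a very good solution.

Flink 1.2 introduced Async I/O. In asynchronous mode the I/O operations are made asynchronous: a single parallel instance can send many requests in a row and handle whichever response returns first, so consecutive requests no longer wait on each other and stream processing throughput improves considerably. Async I/O was a highly requested feature contributed to the community by Alibaba. It addresses the case where network latency to an external system becomes the bottleneck.

In practice, the asynchronous query hands the dimension lookups over to a separate thread pool. No single query can then block the operator, and one parallel instance can keep many requests in flight, which improves concurrency. The approach targets operations that involve network I/O and reduces the cost of waiting for responses.

Prerequisites for using the async API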

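- Implementing asynchronous I/O against a database (or key/value store) properly requires a database client that supports asynchronous requests. Many mainstream databases provide such a client.

- If no such client exists, a synchronous client can be turned into one with limited concurrency by creating several clients and handling the synchronous calls in a thread pool. This is usually less efficient than a true asynchronous client.

- Phoenix does not currently provide an asynchronous client, so the only option here is the second one: several clients plus a thread pool that handles the synchronous calls.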
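The thread pool workaround described in the last two points can be sketched with plain JDK classes, independent of Flink. Here `blockingLookup` is a hypothetical stand-in for a synchronous Phoenix/JDBC query (simulated with a short sleep), and `SyncToAsyncSketch` is an illustrative name; the point is only that all requests are issued before any result is awaited, with concurrency capped by the pool size.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class SyncToAsyncSketch {
    // Hypothetical blocking client call, standing in for a synchronous dimension query
    static String blockingLookup(long id) {
        try {
            Thread.sleep(50); // simulated network round trip
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "dim-" + id;
    }

    // Issues every lookup without waiting in between; at most poolSize run at the same time
    static List<String> lookupAll(List<Long> ids, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<CompletableFuture<String>> futures = new ArrayList<>();
            for (Long id : ids) {
                futures.add(CompletableFuture.supplyAsync(() -> blockingLookup(id), pool));
            }
            List<String> results = new ArrayList<>();
            for (CompletableFuture<String> future : futures) {
                results.add(future.join()); // collect in input order once each call has finished
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        long start = System.currentTimeMillis();
        System.out.println(lookupAll(Arrays.asList(1L, 2L, 3L, 4L), 4));
        // With 4 threads the four 50 ms calls overlap instead of running back to back
        System.out.println("elapsed ms: " + (System.currentTimeMillis() - start));
    }
}
```

Each thread still blocks while its call is in flight, which is why this is less efficient than a true asynchronous client: concurrency is bought with threads rather than with non-blocking I/O.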

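Flink's async I/O API

Flink's async I/O API lets users work with asynchronous request clients inside a streaming job. The API takes care of the integration with data streams, including ordering, event time, and fault tolerance.

Given an asynchronous database client, implementing a stream transformation with asynchronous I/O against the database takes three parts:

- An AsyncFunction implementation that dispatches the requests

- A callback that takes the result of the database interaction and hands it to the ResultFuture

- Applying the async I/O operation to a DataStream as a transformation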

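II. Wrapping a thread pool utility class

    package com.atguigu.gmall.util;

    import java.util.concurrent.LinkedBlockingDeque;
    import java.util.concurrent.ThreadPoolExecutor;
    import java.util.concurrent.TimeUnit;

    /**
     * Thread pool utility class used for the asynchronous lookups.
     */
    public class ThreadPoolUtil {
        // Note: every call creates a new pool, so callers should create it once (e.g. in open())
        public static ThreadPoolExecutor getThreadPool() {
            return new ThreadPoolExecutor(
                    300,                            // core pool size
                    500,                            // maximum pool size
                    30,                             // keep-alive time for idle threads above the core size
                    TimeUnit.SECONDS,
                    new LinkedBlockingDeque<>(50)); // bounded queue for tasks waiting for a thread
        }
    }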
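How the three sizes interact is easy to get wrong: a `ThreadPoolExecutor` starts threads up to the core size, then queues tasks, and only creates threads beyond the core size once the queue is full. When both the maximum pool size and the queue are exhausted, the default policy rejects the task with `RejectedExecutionException`. The sketch below demonstrates this with deliberately small numbers; `PoolSizingSketch` and `acceptedBeforeRejection` are illustrative names, not part of the project.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingDeque;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class PoolSizingSketch {
    // Submits tasks that never finish until the pool rejects one; returns how many were accepted
    static int acceptedBeforeRejection(int core, int max, int queueCapacity) {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                core, max, 30, TimeUnit.SECONDS, new LinkedBlockingDeque<>(queueCapacity));
        CountDownLatch release = new CountDownLatch(1);
        int accepted = 0;
        try {
            while (true) {
                pool.execute(() -> {
                    try {
                        release.await(); // keep the task busy so no thread becomes free
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                    }
                });
                accepted++;
            }
        } catch (RejectedExecutionException e) {
            // Default AbortPolicy: all threads are busy and the queue is full
        } finally {
            release.countDown(); // let the blocked tasks finish
            pool.shutdown();
        }
        return accepted;
    }

    public static void main(String[] args) {
        // 1 core thread + 1 queued task + 1 extra thread = 3 accepted tasks
        System.out.println(acceptedBeforeRejection(1, 2, 1)); // prints 3
    }
}
```

By the same rule, the pool configured above (300 core, 500 maximum, queue of 50) can hold at most 550 lookups at once, so a caller that submits faster than lookups complete should be prepared for rejections.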

III. Implementing the async code

- DimAsyncFunction.java (handles the asynchronous logic)

    package com.atguigu.gmall.function;

    import com.atguigu.gmall.common.Constant;
    import com.atguigu.gmall.util.JdbcUtil;
    import com.atguigu.gmall.util.RedisUtil;
    import com.atguigu.gmall.util.ThreadPoolUtil;
    import org.apache.flink.configuration.Configuration;
    import org.apache.flink.streaming.api.functions.async.ResultFuture;
    import org.apache.flink.streaming.api.functions.async.RichAsyncFunction;
    import redis.clients.jedis.Jedis;

    import java.sql.Connection;
    import java.sql.SQLException;
    import java.util.concurrent.ThreadPoolExecutor;

    public abstract class DimAsyncFunction<T> extends RichAsyncFunction<T, T> {
        private ThreadPoolExecutor threadPool;

        @Override
        public void open(Configuration parameters) throws Exception {
            threadPool = ThreadPoolUtil.getThreadPool();
        }

        @Override
        public void close() throws Exception {
            // Release the pool's threads when the operator shuts down
            if (threadPool != null) {
                threadPool.shutdown();
            }
        }

        @Override
        public void asyncInvoke(T input, ResultFuture<T> resultFuture) throws Exception {
            // Read the dimension data with the clients on a pool thread
            threadPool.submit(new Runnable() {
                @Override
                public void run() {
                    Connection phoenixConnection = null;
                    Jedis redisClient = null;
                    try {
                        phoenixConnection = JdbcUtil.getPhoenixConnection(Constant.PHOENIX_URL);
                        redisClient = RedisUtil.getRedisClient();
                        addDim(phoenixConnection, redisClient, input, resultFuture);
                    } catch (Exception e) {
                        e.printStackTrace();
                        // Report the failure right away; if the exception is only printed,
                        // the future is never completed and the record ends in an async timeout
                        resultFuture.completeExceptionally(e);
                    } finally {
                        if (phoenixConnection != null) {
                            try {
                                phoenixConnection.close();
                            } catch (SQLException throwables) {
                                throwables.printStackTrace();
                            }
                        }
                        if (redisClient != null) {
                            redisClient.close();
                        }
                    }
                }
            });
        }

        /**
         * Fills in the dimension fields of input and completes resultFuture with the result.
         */
        protected abstract void addDim(Connection phoenixConnection,
                                       Jedis redisClient,
                                       T input,
                                       ResultFuture<T> resultFuture);
    }

- Implementation code

    private SingleOutputStreamOperator<OrderWide> joinDims(SingleOutputStreamOperator<OrderWide> orderWideStreamWithoutDims) {
        // Join 6 dimension tables: look up the single matching row in each table by id
        return AsyncDataStream.unorderedWait(
                orderWideStreamWithoutDims,
                new DimAsyncFunction<OrderWide>() {
                    @Override
                    protected void addDim(Connection phoenixConnection,
                                          Jedis redisClient,
                                          OrderWide orderWide,
                                          ResultFuture<OrderWide> resultFuture) {
                        // 1. user
                        JSONObject userInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_user_info", orderWide.getUser_id());
                        orderWide.setUser_gender(userInfo.getString("GENDER"));
                        try {
                            orderWide.calcUserAge(userInfo.getString("BIRTHDAY"));
                        } catch (ParseException e) {
                            e.printStackTrace();
                        }

                        // 2. province
                        JSONObject provinceInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_province", orderWide.getProvince_id());
                        orderWide.setProvince_name(provinceInfo.getString("NAME"));
                        orderWide.setProvince_iso_code(provinceInfo.getString("ISO_CODE"));
                        orderWide.setProvince_area_code(provinceInfo.getString("AREA_CODE"));
                        orderWide.setProvince_3166_2_code(provinceInfo.getString("ISO_3166_2"));

                        // 3. sku
                        JSONObject skuInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_sku_info", orderWide.getSku_id());
                        orderWide.setSku_name(skuInfo.getString("SKU_NAME"));
                        orderWide.setSpu_id(skuInfo.getLong("SPU_ID"));
                        orderWide.setTm_id(skuInfo.getLong("TM_ID"));
                        orderWide.setCategory3_id(skuInfo.getLong("CATEGORY3_ID"));

                        // 4. spu (needs the spu_id filled in by step 3)
                        JSONObject spuInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_spu_info", orderWide.getSpu_id());
                        orderWide.setSpu_name(spuInfo.getString("SPU_NAME"));

                        // 5. trademark
                        JSONObject tmInfo = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_trademark", orderWide.getTm_id());
                        orderWide.setTm_name(tmInfo.getString("TM_NAME"));

                        // 6. category3
                        JSONObject c3Info = DimUtil.getDim(phoenixConnection, redisClient, "dim_base_category3", orderWide.getCategory3_id());
                        orderWide.setCategory3_name(c3Info.getString("NAME"));

                        // Hand the enriched record back to Flink
                        resultFuture.complete(Collections.singletonList(orderWide));
                    }
                },
                60,                 // timeout for each asynchronous request
                TimeUnit.SECONDS);
    }

- Start the app and test: the data is now being processed asynchronously

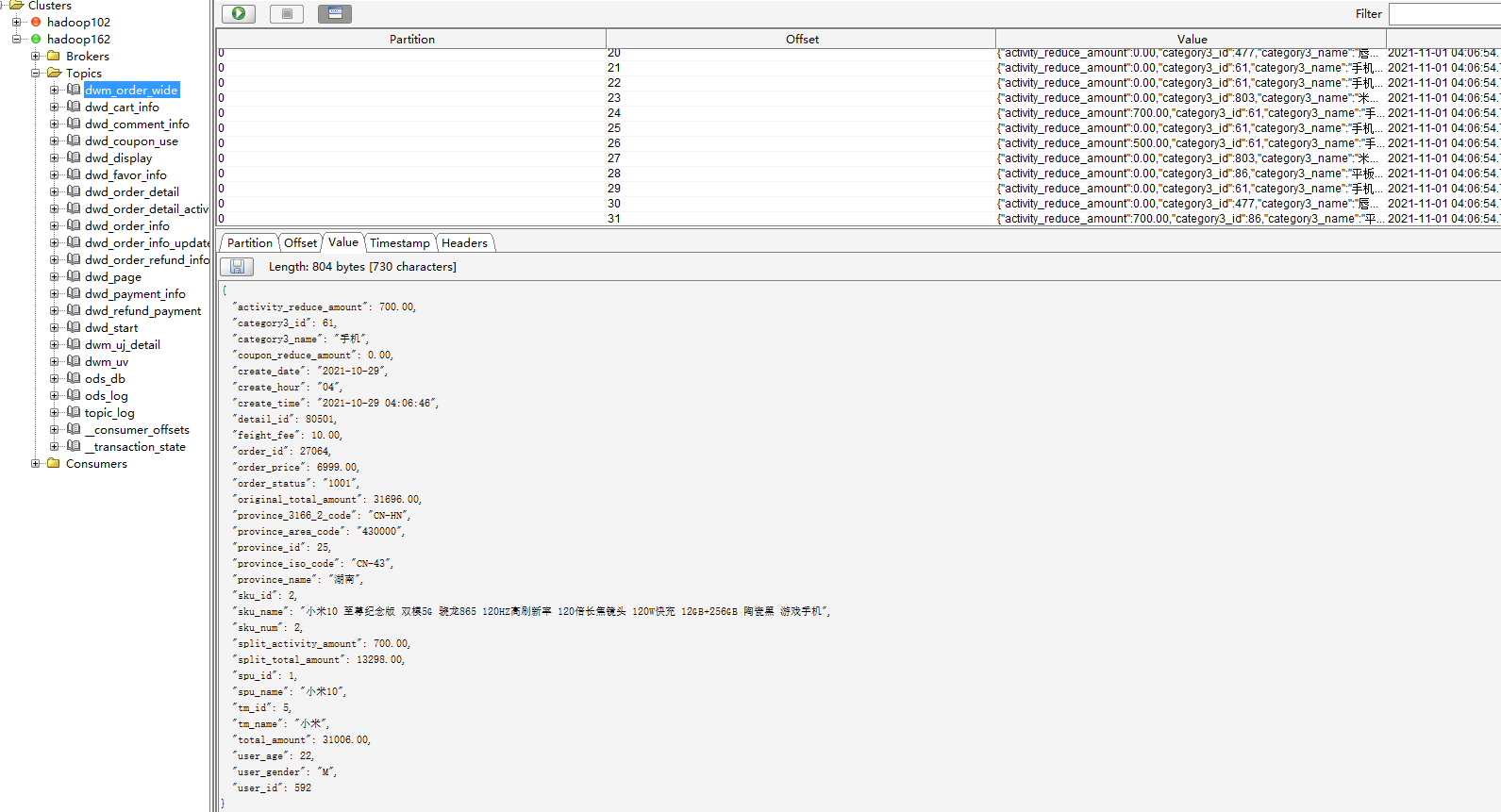

IV. Writing the data to Kafka

    private void write2Kafka(SingleOutputStreamOperator<OrderWide> stream) {
        stream
                .map(new MapFunction<OrderWide, String>() {
                    @Override
                    public String map(OrderWide value) throws Exception {
                        // Serialize the wide record to a JSON string
                        return JSON.toJSONString(value);
                    }
                })
                .addSink(FlinkSinkUtil.getKafkaSink(Constant.TOPIC_DWM_ORDER_WIDE));
    }

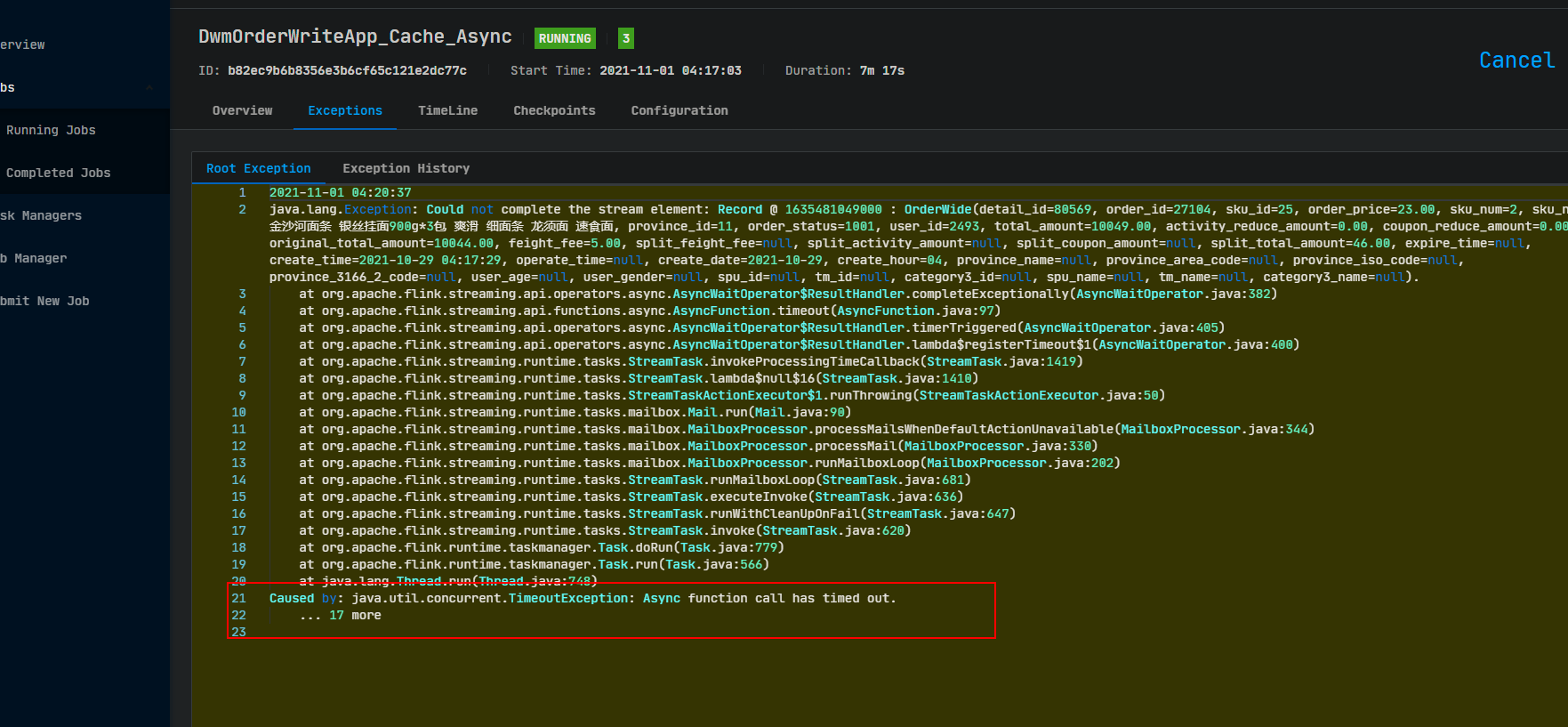

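V. Packaging the project and testing on the cluster

The async requests time out. The cause is a dependency problem with Phoenix when it is packaged into the job jar.

The fix is to leave the Phoenix dependency out of the package and add it to the lib directory of flink-yarn on the cluster instead: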

/opt/module/phoenix-5.0.0 » cp phoenix-5.0.0-HBase-2.0-client.jar /opt/module/flink-yarn/lib

Restart the flink-yarn session:

/opt/module/flink-yarn » bin/yarn-session.sh -d

Restart the application.

Everything now runs normally: no async timeout is reported, and the data can be consumed from Kafka.

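Chapter 5. DWM layer: payment wide table

1. Requirements analysis

The main reason for building a payment wide table is that the payment table carries no order details. The payment amount is not broken down by product, so payments cannot be analyzed at product level. The core of this wide table is therefore to associate the payment records with the order details. There are three possible solutions: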

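- Write the order detail table to HBase and query HBase when computing the payment wide table. This effectively manages the order details as a dimension.

- Receive the order details as a stream and merge the two streams with a join. Because a payment arrives some time after its order, intervalJoin must be used to bound how long stream state is kept, so that the order detail is still held in state when the payment arrives.

- Join the payment stream with the order wide table stream, which removes the need to query dimension tables. The implementation in this chapter takes this approach.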
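The time condition behind an interval join can be written as a plain predicate: a right-side record joins a left-side record with the same key when `leftTs + lowerBound <= rightTs <= leftTs + upperBound`, with both bounds inclusive by default. The sketch below is not Flink code; `IntervalJoinBoundsSketch` and `joins` are illustrative names. It uses the bounds from this chapter's implementation, `between(Time.minutes(-45), Time.minutes(10))`, with the payment as the left side and the order wide record as the right side.

```java
import java.util.concurrent.TimeUnit;

public class IntervalJoinBoundsSketch {
    // True if a right-side record at rightTs falls inside the join interval of a left-side record at leftTs
    static boolean joins(long leftTs, long rightTs, long lowerBoundMs, long upperBoundMs) {
        return rightTs >= leftTs + lowerBoundMs && rightTs <= leftTs + upperBoundMs;
    }

    public static void main(String[] args) {
        long lower = -TimeUnit.MINUTES.toMillis(45); // order may be up to 45 min older than the payment
        long upper = TimeUnit.MINUTES.toMillis(10);  // or up to 10 min newer
        long payTs = TimeUnit.HOURS.toMillis(10);    // arbitrary payment timestamp

        // Order created 30 min before the payment: inside the interval
        System.out.println(joins(payTs, payTs - TimeUnit.MINUTES.toMillis(30), lower, upper)); // true
        // Order created 60 min before the payment: outside the interval, no joined output
        System.out.println(joins(payTs, payTs - TimeUnit.MINUTES.toMillis(60), lower, upper)); // false
    }
}
```

The lower bound is therefore the longest delay between order and payment that still yields a joined record; payments that arrive later than that find no matching order in state.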

2. Implementation

POJO classes used

- PaymentInfo.java

    package com.atguigu.gmall.pojo;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;

    import java.math.BigDecimal;

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class PaymentInfo {
        private Long id;
        private Long order_id;
        private Long user_id;
        private BigDecimal total_amount;
        private String subject;
        private String payment_type;
        private String create_time;
        private String callback_time;
    }

- PaymentWide.java

    package com.atguigu.gmall.pojo;

    import lombok.AllArgsConstructor;
    import lombok.Data;
    import lombok.NoArgsConstructor;
    import org.apache.commons.beanutils.BeanUtils;

    import java.lang.reflect.InvocationTargetException;
    import java.math.BigDecimal;

    @Data
    @AllArgsConstructor
    @NoArgsConstructor
    public class PaymentWide {
        private Long payment_id;
        private String subject;
        private String payment_type;
        private String payment_create_time;
        private String callback_time;
        private Long detail_id;
        private Long order_id;
        private Long sku_id;
        private BigDecimal order_price;
        private Long sku_num;
        private String sku_name;
        private Long province_id;
        private String order_status;
        private Long user_id;
        private BigDecimal total_amount;
        private BigDecimal activity_reduce_amount;
        private BigDecimal coupon_reduce_amount;
        private BigDecimal original_total_amount;
        private BigDecimal feight_fee;
        private BigDecimal split_feight_fee;
        private BigDecimal split_activity_amount;
        private BigDecimal split_coupon_amount;
        private BigDecimal split_total_amount;
        private String order_create_time;

        private String province_name; // obtained from the dimension table lookup
        private String province_area_code;
        private String province_iso_code;
        private String province_3166_2_code;
        private Integer user_age;
        private String user_gender;

        private Long spu_id; // dimension data that has to be joined in
        private Long tm_id;
        private Long category3_id;
        private String spu_name;
        private String tm_name;
        private String category3_name;

        public PaymentWide(PaymentInfo paymentInfo, OrderWide orderWide) {
            mergeOrderWide(orderWide);
            mergePaymentInfo(paymentInfo);
        }

        public void mergePaymentInfo(PaymentInfo paymentInfo) {
            if (paymentInfo != null) {
                try {
                    // Copy all same-named properties, then set the renamed ones explicitly
                    BeanUtils.copyProperties(this, paymentInfo);
                    payment_create_time = paymentInfo.getCreate_time();
                    payment_id = paymentInfo.getId();
                } catch (IllegalAccessException | InvocationTargetException e) {
                    e.printStackTrace();
                }
            }
        }

        public void mergeOrderWide(OrderWide orderWide) {
            if (orderWide != null) {
                try {
                    BeanUtils.copyProperties(this, orderWide);
                    order_create_time = orderWide.getCreate_time();
                } catch (IllegalAccessException | InvocationTargetException e) {
                    e.printStackTrace();
                }
            }
        }
    }

Implementation code

    package com.atguigu.gmall.app.dwm;

    import com.alibaba.fastjson.JSON;
    import com.atguigu.gmall.app.BaseAppV2;
    import com.atguigu.gmall.common.Constant;
    import com.atguigu.gmall.pojo.OrderWide;
    import com.atguigu.gmall.pojo.PaymentInfo;
    import com.atguigu.gmall.pojo.PaymentWide;
    import com.atguigu.gmall.util.FlinkSinkUtil;
    import com.atguigu.gmall.util.IteratorToListUtil;
    import org.apache.flink.api.common.eventtime.WatermarkStrategy;
    import org.apache.flink.api.common.functions.MapFunction;
    import org.apache.flink.streaming.api.datastream.DataStreamSource;
    import org.apache.flink.streaming.api.datastream.KeyedStream;
    import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
    import org.apache.flink.streaming.api.functions.co.ProcessJoinFunction;
    import org.apache.flink.streaming.api.windowing.time.Time;
    import org.apache.flink.util.Collector;

    import java.time.Duration;
    import java.util.HashMap;

    public class DwmPaymentWideApp extends BaseAppV2 {
        public static void main(String[] args) {
            new DwmPaymentWideApp().init(3006, 1, "DwmPaymentWideApp", "DwmPaymentWideApp",
                    Constant.TOPIC_DWD_PAYMENT_INFO, Constant.TOPIC_DWM_ORDER_WIDE);
        }

        @Override
        public void run(StreamExecutionEnvironment env, HashMap<String, DataStreamSource<String>> streams) {
            // streams.get(Constant.TOPIC_DWD_PAYMENT_INFO).print(Constant.TOPIC_DWD_PAYMENT_INFO);
            // streams.get(Constant.TOPIC_DWM_ORDER_WIDE).print(Constant.TOPIC_DWM_ORDER_WIDE);

            // Payment stream, keyed by order id
            KeyedStream<PaymentInfo, Long> paymentInfoStream = streams
                    .get(Constant.TOPIC_DWD_PAYMENT_INFO)
                    .map(new MapFunction<String, PaymentInfo>() {
                        @Override
                        public PaymentInfo map(String value) throws Exception {
                            return JSON.parseObject(value, PaymentInfo.class);
                        }
                    })
                    .assignTimestampsAndWatermarks(
                            WatermarkStrategy
                                    .<PaymentInfo>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                                    .withTimestampAssigner((info, ts) -> IteratorToListUtil.toTs(info.getCreate_time())))
                    .keyBy(info -> info.getOrder_id());

            // Order wide stream, keyed by order id
            KeyedStream<OrderWide, Long> orderWideStream = streams
                    .get(Constant.TOPIC_DWM_ORDER_WIDE)
                    .map(new MapFunction<String, OrderWide>() {
                        @Override
                        public OrderWide map(String value) throws Exception {
                            return JSON.parseObject(value, OrderWide.class);
                        }
                    })
                    .assignTimestampsAndWatermarks(
                            WatermarkStrategy
                                    .<OrderWide>forBoundedOutOfOrderness(Duration.ofSeconds(3))
                                    .withTimestampAssigner((wide, ts) -> IteratorToListUtil.toTs(wide.getCreate_time())))
                    .keyBy(wide -> wide.getOrder_id());

            // The order may precede the payment by up to 45 minutes or follow it by up to 10 minutes
            paymentInfoStream
                    .intervalJoin(orderWideStream)
                    .between(Time.minutes(-45), Time.minutes(10))
                    .process(new ProcessJoinFunction<PaymentInfo, OrderWide, PaymentWide>() {
                        @Override
                        public void processElement(PaymentInfo left, OrderWide right, Context ctx, Collector<PaymentWide> out) throws Exception {
                            out.collect(new PaymentWide(left, right));
                        }
                    })
                    .map(new MapFunction<PaymentWide, String>() {
                        @Override
                        public String map(PaymentWide value) throws Exception {
                            return JSON.toJSONString(value);
                        }
                    })
                    .addSink(FlinkSinkUtil.getKafkaSink(Constant.TOPIC_DWM_PAYMENT_WIDE));
            // .print();
        }
    }

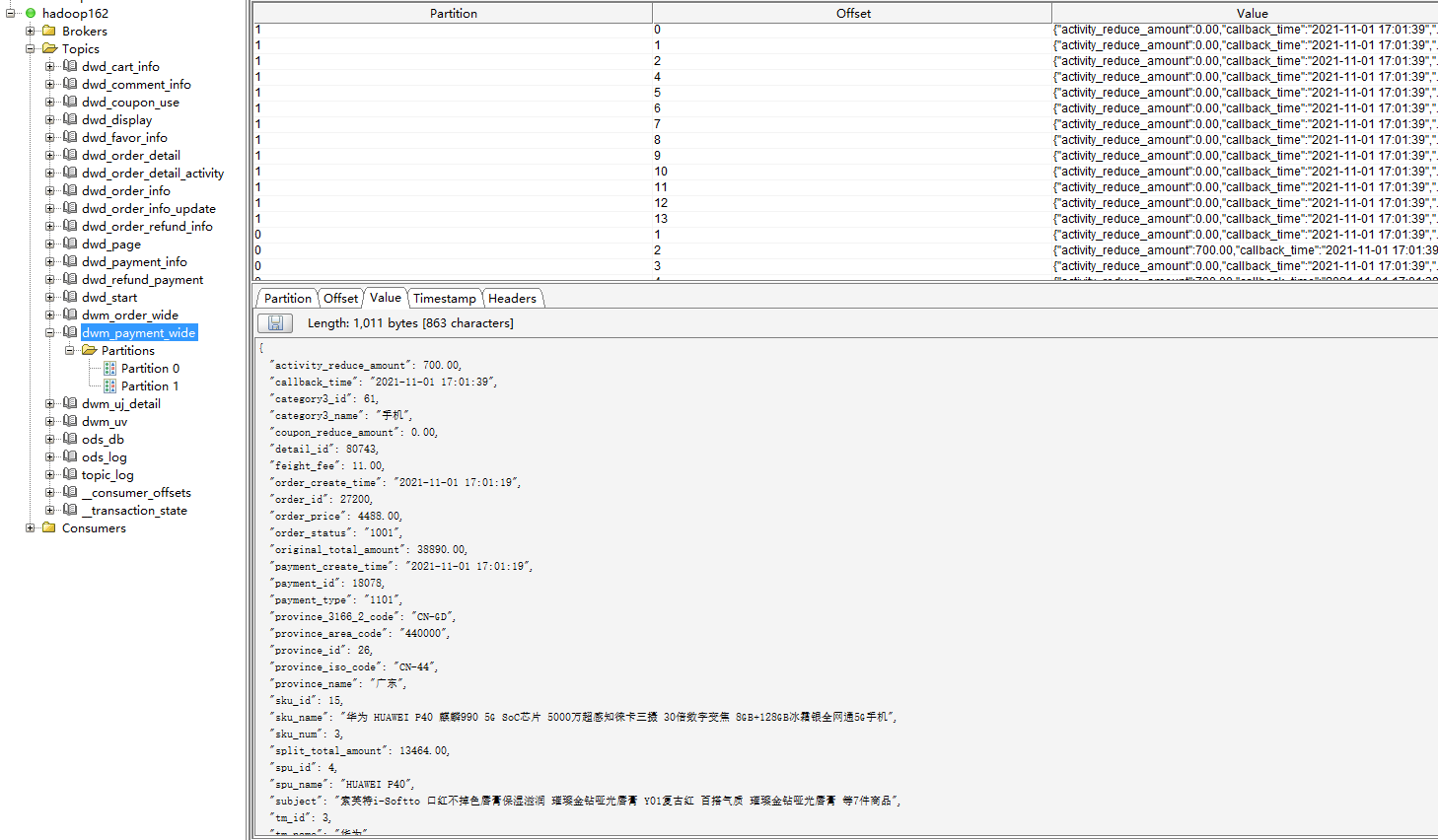

3. Packaging the project and testing on the cluster

The data is written to Kafka successfully.