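Ideally we would install Hadoop 3+ directly, but some of our clusters still run Hadoop 2.6, and this cluster also has to serve as their pre-release environment, so 2.6 was the only option.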

## 1. Extract the package

    [hadoop@bigdata1 package]$ tar -xzvf hadoop-2.6.0.tar.gz
    [hadoop@bigdata1 package]$ mv hadoop-2.6.0 ../hadoop260
    [hadoop@bigdata1 package]$ cd ../hadoop260

### Create the HDFS directories

    [hadoop@bigdata1 hadoop260]$ mkdir /home/hadoop/hadoop260/hdfs
    [hadoop@bigdata1 hadoop260]$ mkdir /home/hadoop/hadoop260/hdfs/name
    [hadoop@bigdata1 hadoop260]$ mkdir /home/hadoop/hadoop260/hdfs/data
    [hadoop@bigdata1 hadoop260]$ ls -lrt
    total 56
    drwxr-xr-x 4 hadoop hadoop  4096 Nov 14  2014 share
    drwxr-xr-x 2 hadoop hadoop  4096 Nov 14  2014 sbin
    -rw-r--r-- 1 hadoop hadoop  1366 Nov 14  2014 README.txt
    -rw-r--r-- 1 hadoop hadoop   101 Nov 14  2014 NOTICE.txt
    -rw-r--r-- 1 hadoop hadoop 15429 Nov 14  2014 LICENSE.txt
    drwxr-xr-x 2 hadoop hadoop  4096 Nov 14  2014 libexec
    drwxr-xr-x 3 hadoop hadoop  4096 Nov 14  2014 lib
    drwxr-xr-x 2 hadoop hadoop  4096 Nov 14  2014 include
    drwxr-xr-x 3 hadoop hadoop  4096 Nov 14  2014 etc
    drwxr-xr-x 2 hadoop hadoop  4096 Nov 14  2014 bin
    drwxrwxr-x 4 hadoop hadoop  4096 Sep  6 18:33 hdfs
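The three `mkdir` calls above can be collapsed into one idempotent step. The following is a minimal sketch; `make_hdfs_dirs` is a hypothetical helper name introduced here for illustration, not something shipped with Hadoop.

```shell
# make_hdfs_dirs is a hypothetical helper (not part of Hadoop).
# It creates the NameNode and DataNode directories under an install root.
make_hdfs_dirs() {
  local hadoop_root="$1"
  # -p creates missing parents and does not fail if the directories exist.
  mkdir -p "$hadoop_root/hdfs/name" "$hadoop_root/hdfs/data"
}

# Usage on the cluster described here:
#   make_hdfs_dirs /home/hadoop/hadoop260
```

Because of `-p`, the helper can be re-run safely, for example on every node after the directory tree has been copied over.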

## 2. Edit the configuration files

core-site.xml

    fs.defaultFS           hdfs://bigdata1:8020    true
    io.file.buffer.size    131072
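The listing above has lost its XML markup. As a rough sketch of what the file presumably looked like, the helper below writes a `core-site.xml` with the same two properties. `write_core_site` is a hypothetical name, and treating the bare `true` as a `<final>` flag is an assumption, since the original tags are not recoverable from the text.

```shell
# write_core_site is a hypothetical helper (not part of Hadoop).
# It writes a core-site.xml with the two properties listed in this post.
write_core_site() {
  local conf_dir="$1"
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://bigdata1:8020</value>
    <!-- Assumption: the bare "true" in the listing was a <final> flag. -->
    <final>true</final>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
</configuration>
EOF
}

# Usage (would overwrite an existing file):
#   write_core_site /home/hadoop/hadoop260/etc/hadoop
```

The other `*-site.xml` files follow the same `<property>` / `<name>` / `<value>` pattern.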

hdfs-site.xml

    dfs.namenode.name.dir                  /home/hadoop/hadoop260/hdfs/name/
    dfs.blocksize                          268435456
    dfs.namenode.handler.count             100
    dfs.datanode.data.dir                  /home/hadoop/hadoop260/hdfs/data/
    dfs.replication                        1
    dfs.http.address                       bigdata1:50070
    dfs.namenode.secondary.http-address    bigdata1:50090
    dfs.https.enable                       false
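The size values in these files are given in raw bytes, which is easy to misread. A small sketch for converting them, using hypothetical helper names `bytes_to_mib` and `bytes_to_kib`:

```shell
# Hypothetical helpers: convert a byte count using integer division.
bytes_to_mib() { echo $(( $1 / 1024 / 1024 )); }
bytes_to_kib() { echo $(( $1 / 1024 )); }

bytes_to_mib 268435456   # dfs.blocksize: prints 256 (MiB)
bytes_to_kib 131072      # io.file.buffer.size: prints 128 (KiB)
```

So the block size configured here is 256 MiB and the I/O buffer is 128 KiB.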

yarn-site.xml

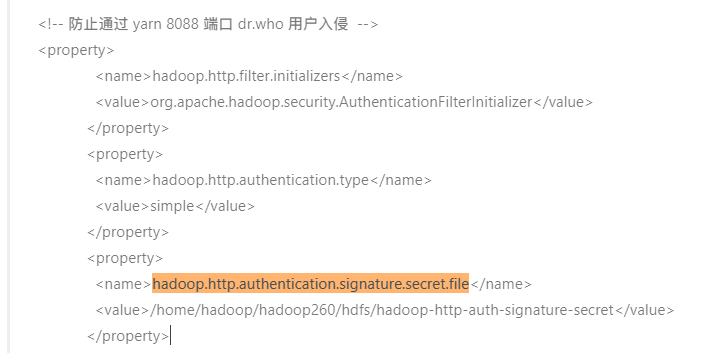

    yarn.application.classpath                              /home/hadoop/hadoop260/etc/hadoop:/home/hadoop/hadoop260/share/hadoop/common/lib/*:/home/hadoop/hadoop260/share/hadoop/common/*:/home/hadoop/hadoop260/share/hadoop/hdfs:/home/hadoop/hadoop260/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop260/share/hadoop/hdfs/*:/home/hadoop/hadoop260/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop260/share/hadoop/mapreduce/*:/home/hadoop/hadoop260/share/hadoop/yarn:/home/hadoop/hadoop260/share/hadoop/yarn/lib/*:/home/hadoop/hadoop260/share/hadoop/yarn/*
    hadoop.http.filter.initializers                         org.apache.hadoop.security.AuthenticationFilterInitializer
    hadoop.http.authentication.type                         simple
    hadoop.http.authentication.signature.secret.file        /home/hadoop/hadoop260/hdfs/hadoop-http-auth-signature-secret
    yarn.resourcemanager.hostname                           bigdata1
    yarn.resourcemanager.webapp.address                     bigdata1:49966
    yarn.nodemanager.aux-services                           mapreduce_shuffle
    yarn.nodemanager.resource.cpu-vcores                    8
    yarn.scheduler.maximum-allocation-vcores                8
    yarn.scheduler.minimum-allocation-vcores                1
    yarn.nodemanager.resource.memory-mb                     15360
    yarn.nodemanager.vmem-pmem-ratio                        3.1
    yarn.scheduler.minimum-allocation-mb                    2048
    yarn.scheduler.maximum-allocation-mb                    15360
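To get a feel for what the YARN resource settings above allow, the arithmetic can be worked through in the shell. This is only a back-of-the-envelope sketch: it assumes every container is requested at the minimum allocation size, and the variable names are made up for the example.

```shell
# Values copied from the yarn-site.xml settings above.
node_mem_mb=15360        # yarn.nodemanager.resource.memory-mb
min_alloc_mb=2048        # yarn.scheduler.minimum-allocation-mb
node_vcores=8            # yarn.nodemanager.resource.cpu-vcores
min_alloc_vcores=1       # yarn.scheduler.minimum-allocation-vcores

# Upper bound on minimum-size containers per NodeManager.
containers_by_mem=$(( node_mem_mb / min_alloc_mb ))          # 7, with 1024 MB left over
containers_by_cpu=$(( node_vcores / min_alloc_vcores ))      # 8

# Virtual memory ceiling for a 2048 MB container.
# The ratio 3.1 is written as 31/10 because the shell only does integer math.
vmem_limit_mb=$(( min_alloc_mb * 31 / 10 ))                  # 6348 (truncated from 6348.8)

echo "by memory: $containers_by_mem, by vcores: $containers_by_cpu, vmem limit: ${vmem_limit_mb} MB"
```

With these numbers memory is the tighter limit: at most 7 minimum-size containers fit on a node, and one of them is typically taken by an ApplicationMaster.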

    mapreduce.jobhistory.address                  bigdata1:10020
    mapreduce.jobhistory.webapp.address           bigdata1:19888
    mapreduce.jobhistory.done-dir                 /user/history/done
    mapreduce.jobhistory.intermediate-done-dir    /user/history/done_intermediate

slaves

    [hadoop@bigdata1 hadoop]$ cat slaves
    bigdata1
    bigdata2

## 3. Set the environment variables (as root, on every node)

Edit /etc/profile, then reload it:

    vi /etc/profile
    source /etc/profile

Lines added to /etc/profile:

    export HADOOP_HOME=/home/hadoop/hadoop260
    export PATH=$HADOOP_HOME/bin:$PATH
    export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
    export HADOOP_CLASSPATH=`hadoop classpath`
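After reloading the profile it is worth confirming that the variables actually reached the current shell. A minimal sketch, where `check_hadoop_env` is a hypothetical helper name:

```shell
# check_hadoop_env is a hypothetical helper (not part of Hadoop).
# It prints one line per unset or empty variable and returns non-zero
# if any of them is missing.
check_hadoop_env() {
  local missing=0 name value
  for name in HADOOP_HOME HADOOP_CONF_DIR HADOOP_CLASSPATH; do
    # Look up the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}

# Usage:
#   source /etc/profile && check_hadoop_env && echo "environment looks complete"
```

An empty `HADOOP_CLASSPATH` usually means `hadoop` was not yet on `PATH` when the profile line was evaluated.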

## 4. Distribute the files to all nodes and start up

    [hadoop@bigdata1 hadoop260]$ scp -r /home/hadoop/hadoop260 hadoop@bigdata2:/home/hadoop/
    [hadoop@bigdata1 hadoop260]$ source /etc/profile
    [hadoop@bigdata1 hadoop260]$ $HADOOP_HOME/bin/hdfs namenode -format
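With more than two nodes, the `scp` step can be driven from the `slaves` file instead of being typed per host. The sketch below only prints the commands (a dry run) so they can be reviewed first; `print_distribute_cmds` is a hypothetical helper name, and it assumes the same `hadoop` user and the same parent directory on every node.

```shell
# print_distribute_cmds is a hypothetical helper (not part of Hadoop).
# It prints, without running, one scp command per host in the slaves file,
# skipping blank lines and the local host.
print_distribute_cmds() {
  local slaves_file="$1" src_dir="$2" local_host="$3" host
  while IFS= read -r host || [ -n "$host" ]; do
    [ -z "$host" ] && continue
    [ "$host" = "$local_host" ] && continue
    echo "scp -r $src_dir hadoop@$host:$(dirname "$src_dir")/"
  done < "$slaves_file"
}

# Usage: review the output, then pipe it to sh to execute.
#   print_distribute_cmds "$HADOOP_CONF_DIR/slaves" /home/hadoop/hadoop260 bigdata1
```

For the two-node `slaves` file shown earlier this yields the single `scp` command to `bigdata2` used above.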

## 5. Common commands

    ${HADOOP_HOME}/sbin/start-all.sh
    ${HADOOP_HOME}/sbin/stop-all.sh

    ${HADOOP_HOME}/sbin/start-dfs.sh
    ${HADOOP_HOME}/sbin/start-yarn.sh

    $HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver
    $HADOOP_HOME/sbin/mr-jobhistory-daemon.sh stop historyserver

YARN ResourceManager UI

http://bigdata1:49966/cluster

HDFS UI

http://bigdata1:50070/dfshealth.html#tab-overview

MapReduce JobHistory Server

http://bigdata1:19888/

To put HDFS into safe mode manually during a cluster upgrade or maintenance:

    hadoop dfsadmin -safemode enter

To leave safe mode:

    hadoop dfsadmin -safemode leave
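It is easy to forget to leave safe mode after maintenance. One way to guard against that is a small wrapper that enters safe mode, runs a command, and always leaves again. This is a sketch only; `run_in_safemode` is a hypothetical helper, and since HDFS is read-only while in safe mode, the wrapped command must not write to HDFS.

```shell
# run_in_safemode is a hypothetical helper (not part of Hadoop).
# It enters safe mode, runs the given command, then leaves safe mode,
# returning the exit status of the wrapped command.
run_in_safemode() {
  local status=0
  hadoop dfsadmin -safemode enter || return 1
  "$@" || status=$?
  hadoop dfsadmin -safemode leave
  return "$status"
}

# Usage (fsck only reads metadata, so it works in safe mode):
#   run_in_safemode hdfs fsck /
```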

Copying data between clusters and hosts, and running the balancer in the background:

    hadoop distcp hdfs://47.110.158.65:8020/dpi hdfs://bigdata1:8020/
    scp -r hadoop@47.110.158.65:/home/hadoop/imei/dpi /home/hadoop/dpi/
    sshpass -p "vCS1ZVic&1XocyFGTYLoKN" scp -o StrictHostKeyChecking=no hadoop@47.110.158.65:/srv/dpi ./
    nohup $HADOOP_HOME/bin/hdfs balancer > balancer.log 2>&1 &

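Deleting files and emptying the trash in HDFS

Delete a file and move it to the trash:

    hdfs dfs -rm -f /path

Delete a file without moving it to the trash:

    hdfs dfs -rm -f -skipTrash /path

Empty the trash:

    hdfs dfs -expunge

(Running this only creates a checkpoint; the trash is not emptied immediately but is cleaned up a little later.)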

## 6. Problems

Error: JAVA_HOME is not set and could not be found.

    [hadoop@bigdata1 root]$ ${HADOOP_HOME}/sbin/start-dfs.sh
    Starting namenodes on [bigdata1]
    bigdata1: Error: JAVA_HOME is not set and could not be found.
    bigdata1: Error: JAVA_HOME is not set and could not be found.
    bigdata2: Error: JAVA_HOME is not set and could not be found.
    Starting secondary namenodes [bigdata1]
    bigdata1: Error: JAVA_HOME is not set and could not be found.

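However, the JDK was installed correctly and the JAVA_HOME environment variable was set correctly as well. The likely cause is that start-dfs.sh launches the daemons over ssh, and those non-interactive shells do not read /etc/profile, so the variable is empty by the time hadoop-env.sh runs.

Solution:

In hadoop-env.sh, explicitly declare JAVA_HOME again. The line

    export JAVA_HOME=${JAVA_HOME}

should be changed to

    export JAVA_HOME=/usr/java/jdk1.8.0_191-amd64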
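Since hadoop-env.sh has to be changed on every node, the edit can be scripted. Below is a minimal sketch; `pin_java_home` is a hypothetical helper name, and it assumes the JDK path contains no `|` or `&` characters, which would confuse the `sed` expression.

```shell
# pin_java_home is a hypothetical helper (not part of Hadoop).
# It rewrites every uncommented "export JAVA_HOME=..." line in the given
# hadoop-env.sh so that it points at a fixed JDK directory.
pin_java_home() {
  local env_file="$1" java_home="$2" tmp status
  tmp="$(mktemp)" || return 1
  # Write to a temp file first, then copy back so the original file's
  # permissions are kept.
  sed "s|^export JAVA_HOME=.*|export JAVA_HOME=$java_home|" "$env_file" > "$tmp" &&
    cat "$tmp" > "$env_file"
  status=$?
  rm -f "$tmp"
  return "$status"
}

# Usage:
#   pin_java_home "$HADOOP_CONF_DIR/hadoop-env.sh" /usr/java/jdk1.8.0_191-amd64
```

Other lines in the file, including commented-out JAVA_HOME examples, are left untouched.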

Terminal session from before the fix. JAVA_HOME is visible in the login shell, yet start-dfs.sh still fails (the first java command fails only because the dash typed before "version" is an en dash rather than a hyphen):

    [hadoop@bigdata1 root]$ java –version
    Error: Could not find or load main class –version
    [hadoop@bigdata1 root]$ ${HADOOP_HOME}/sbin/start-dfs.sh
    Starting namenodes on [bigdata1]
    bigdata1: Error: JAVA_HOME is not set and could not be found.
    bigdata1: Error: JAVA_HOME is not set and could not be found.
    bigdata2: Error: JAVA_HOME is not set and could not be found.
    Starting secondary namenodes [bigdata1]
    bigdata1: Error: JAVA_HOME is not set and could not be found.
    [hadoop@bigdata1 root]$ java -version
    java version "1.8.0_191"
    Java(TM) SE Runtime Environment (build 1.8.0_191-b12)
    Java HotSpot(TM) 64-Bit Server VM (build 25.191-b12, mixed mode)
    [hadoop@bigdata1 root]$ export
    declare -x CLASSPATH="/usr/java/jdk1.8.0_191-amd64/lib/"
    declare -x HADOOP_CLASSPATH="/home/hadoop/hadoop260/etc/hadoop:/home/hadoop/hadoop260/share/hadoop/common/lib/*:/home/hadoop/hadoop260/share/hadoop/common/*:/home/hadoop/hadoop260/share/hadoop/hdfs:/home/hadoop/hadoop260/share/hadoop/hdfs/lib/*:/home/hadoop/hadoop260/share/hadoop/hdfs/*:/home/hadoop/hadoop260/share/hadoop/yarn/lib/*:/home/hadoop/hadoop260/share/hadoop/yarn/*:/home/hadoop/hadoop260/share/hadoop/mapreduce/lib/*:/home/hadoop/hadoop260/share/hadoop/mapreduce/*:/home/hadoop/hadoop260/contrib/capacity-scheduler/*.jar"
    declare -x HADOOP_CONF_DIR="/home/hadoop/hadoop260/etc/hadoop"
    declare -x HADOOP_HOME="/home/hadoop/hadoop260"
    declare -x HISTCONTROL="ignoredups"
    declare -x HISTSIZE="1000"
    declare -x HOME="/home/hadoop"
    declare -x HOSTNAME="bigdata1"
    declare -x JAVA_HOME="/usr/java/jdk1.8.0_191-amd64"
    declare -x LANG="en_US.UTF-8"
    declare -x LESSOPEN="||/usr/bin/lesspipe.sh %s"
    declare -x LOGNAME="hadoop"
    declare -x LS_COLORS="rs=0:di=01;34:ln=01;36:mh=00:pi=40;33:so=01;35:do=01;35:bd=40;33;01:cd=40;33;01:or=40;31;01:mi=01;05;37;41:su=37;41:sg=30;43:ca=30;41:tw=30;42:ow=34;42:st=37;44:ex=01;32:*.tar=01;31:*.tgz=01;31:*.arc=01;31:*.arj=01;31:*.taz=01;31:*.lha=01;31:*.lz4=01;31:*.lzh=01;31:*.lzma=01;31:*.tlz=01;31:*.txz=01;31:*.tzo=01;31:*.t7z=01;31:*.zip=01;31:*.z=01;31:*.Z=01;31:*.dz=01;31:*.gz=01;31:*.lrz=01;31:*.lz=01;31:*.lzo=01;31:*.xz=01;31:*.bz2=01;31:*.bz=01;31:*.tbz=01;31:*.tbz2=01;31:*.tz=01;31:*.deb=01;31:*.rpm=01;31:*.jar=01;31:*.war=01;31:*.ear=01;31:*.sar=01;31:*.rar=01;31:*.alz=01;31:*.ace=01;31:*.zoo=01;31:*.cpio=01;31:*.7z=01;31:*.rz=01;31:*.cab=01;31:*.jpg=01;35:*.jpeg=01;35:*.gif=01;35:*.bmp=01;35:*.pbm=01;35:*.pgm=01;35:*.ppm=01;35:*.tga=01;35:*.xbm=01;35:*.xpm=01;35:*.tif=01;35:*.tiff=01;35:*.png=01;35:*.svg=01;35:*.svgz=01;35:*.mng=01;35:*.pcx=01;35:*.mov=01;35:*.mpg=01;35:*.mpeg=01;35:*.m2v=01;35:*.mkv=01;35:*.webm=01;35:*.ogm=01;35:*.mp4=01;35:*.m4v=01;35:*.mp4v=01;35:*.vob=01;35:*.qt=01;35:*.nuv=01;35:*.wmv=01;35:*.asf=01;35:*.rm=01;35:*.rmvb=01;35:*.flc=01;35:*.avi=01;35:*.fli=01;35:*.flv=01;35:*.gl=01;35:*.dl=01;35:*.xcf=01;35:*.xwd=01;35:*.yuv=01;35:*.cgm=01;35:*.emf=01;35:*.axv=01;35:*.anx=01;35:*.ogv=01;35:*.ogx=01;35:*.aac=01;36:*.au=01;36:*.flac=01;36:*.mid=01;36:*.midi=01;36:*.mka=01;36:*.mp3=01;36:*.mpc=01;36:*.ogg=01;36:*.ra=01;36:*.wav=01;36:*.axa=01;36:*.oga=01;36:*.spx=01;36:*.xspf=01;36:"
    declare -x MAIL="/var/spool/mail/root"
    declare -x OLDPWD
    declare -x PATH="/home/hadoop/hadoop260/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/usr/java/jdk1.8.0_191-amd64/bin:/root/bin"
    declare -x PWD="/root"
    declare -x SHELL="/bin/bash"
    declare -x SHLVL="2"
    declare -x SSH_CLIENT="115.192.37.227 61227 22"
    declare -x SSH_CONNECTION="115.192.37.227 61227 172.16.134.135 22"
    declare -x SSH_TTY="/dev/pts/0"
    declare -x TERM="linux"
    declare -x USER="hadoop"
    declare -x XDG_RUNTIME_DIR="/run/user/0"
    declare -x XDG_SESSION_ID="34"
    [hadoop@bigdata1 root]$

Could not read HTTP signature secret file

This is caused by the HTTP authentication settings in yarn-site.xml: the signature secret file has to be created separately on every node.

    [hadoop@bigdata1 .ssh]$ cat /home/hadoop/hadoop260/hdfs/hadoop-http-auth-signature-secret
    1qaz2wsx!@#

    [hadoop@bigdata1 .ssh]$ ${HADOOP_HOME}/sbin/start-dfs.sh
    Starting namenodes on [bigdata1]
    bigdata1: starting namenode, logging to /home/hadoop/hadoop260/logs/hadoop-hadoop-namenode-bigdata1.out
    bigdata1: starting datanode, logging to /home/hadoop/hadoop260/logs/hadoop-hadoop-datanode-bigdata1.out
    bigdata2: starting datanode, logging to /home/hadoop/hadoop260/logs/hadoop-hadoop-datanode-bigdata2.out
    Starting secondary namenodes [bigdata1]
    bigdata1: starting secondarynamenode, logging to /home/hadoop/hadoop260/logs/hadoop-hadoop-secondarynamenode-bigdata1.out
    bigdata1: Exception in thread "main" java.lang.RuntimeException: Could not read HTTP signature secret file: /home/hadoop/hadoop260/hdfs/hadoop-http-auth-signature-secret
    bigdata1:     at org.apache.hadoop.security.AuthenticationFilterInitializer.initFilter(AuthenticationFilterInitializer.java:93)
    bigdata1:     at org.apache.hadoop.http.HttpServer2.initializeWebServer(HttpServer2.java:394)
    bigdata1:     at org.apache.hadoop.http.HttpServer2.<init>(HttpServer2.java:345)
    bigdata1:     at org.apache.hadoop.http.HttpServer2.<init>(HttpServer2.java:105)
    bigdata1:     at org.apache.hadoop.http.HttpServer2$Builder.build(HttpServer2.java:293)
    bigdata1:     at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.initialize(SecondaryNameNode.java:269)
    bigdata1:     at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.<init>(SecondaryNameNode.java:192)
    bigdata1:     at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.main(SecondaryNameNode.java:671)
    [hadoop@bigdata1 .ssh]$ vi /home/hadoop/hadoop260/hdfs/hadoop-http-auth-signature-secret
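Instead of typing the secret into `vi` on each node, the file can be created by a small script. The sketch below uses a hypothetical helper named `create_secret_file`; restricting the file to mode 600 is a precaution added here, not something the original setup did, and it assumes the Hadoop daemons run as the same user that creates the file.

```shell
# create_secret_file is a hypothetical helper (not part of Hadoop).
# It writes the signature secret to the given path, readable and writable
# by the owner only.
create_secret_file() {
  local path="$1" secret="$2"
  # The subshell keeps the umask change from leaking into the caller.
  ( umask 077; printf '%s\n' "$secret" > "$path" )
  # chmod also covers the case where the file already existed.
  chmod 600 "$path"
}

# Usage on each node (replace the placeholder with your own secret):
#   create_secret_file /home/hadoop/hadoop260/hdfs/hadoop-http-auth-signature-secret 'your-secret-here'
```

The path must match `hadoop.http.authentication.signature.secret.file` on every node, otherwise the daemons on that node fail with the same exception.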