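Downloading images

First you have to download images, download images, download images... I recommend using a plugin for this.

For example, to find pictures of wisteria, search Baidu for: 紫藤 (wisteria)

You can load a few more pages of results and download them all in one go.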


I downloaded 1000 wisteria images and 1000 rose images and put them in folders named 0 and 1 respectively.

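Cleaning up and processing the images

After downloading, the images need to be filtered by hand. The results include all sorts of junk, as well as pictures whose main subject is not the target flower. After two or three passes you may be left with only a few hundred usable images. Collecting 60000 images, like the ready-made datasets used earlier, would be a lot of work, so you can multiply what you have instead: change the brightness or contrast, flip the image left to right, and so on.

Let's look at the image processing first. Write a few functions that can be called later. They use PIL's ImageEnhance module. The argument to enhance can be read as a percentage: 1.2 means 120%, which turns the property up, and 0.8 means 80%, which turns it down.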

```python
import os
from PIL import Image, ImageEnhance

# Flip left to right
def turn_left_right(img):
    return img.transpose(Image.FLIP_LEFT_RIGHT)

# Change the brightness
def brighten_darken(img, val):
    return ImageEnhance.Brightness(img).enhance(val)

# Change the color saturation
def saturation_up_down(img, val):
    return ImageEnhance.Color(img).enhance(val)

# Change the contrast
def contrast_up_down(img, val):
    return ImageEnhance.Contrast(img).enhance(val)

# Change the sharpness
def sharpness_up_down(img, val):
    return ImageEnhance.Sharpness(img).enhance(val)
```
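To make the "percentage" reading of the enhance factor concrete, here is a minimal pure-Python sketch of the per-pixel arithmetic for brightness and contrast. It assumes the blending scheme Pillow documents for ImageEnhance (blend between a reference image and the original, then clip to 0..255); the helper names `blend`, `brightness` and `contrast` are made up for illustration, and Pillow's own rounding may differ by one level.

```python
def blend(degenerate, pixel, factor):
    """Move from the reference value toward (or past) the pixel, clipped to 0..255."""
    value = degenerate + (pixel - degenerate) * factor
    return max(0, min(255, round(value)))

def brightness(pixel, factor):
    # For brightness the reference image is pure black (0),
    # so the result is simply pixel * factor.
    return blend(0, pixel, factor)

def contrast(pixel, factor, mean_gray):
    # For contrast the reference image is a flat gray at the image's mean level,
    # so values are pushed away from (or pulled toward) that mean.
    return blend(mean_gray, pixel, factor)

print(brightness(100, 1.2))      # 120
print(brightness(100, 0.8))      # 80
print(brightness(250, 1.2))      # 255 (clipped)
print(contrast(200, 1.2, 128))   # 214
```

A factor of 1.0 leaves every pixel unchanged, which is why the factors used in this post sit on either side of 1.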

With the adjustment functions written, let's write one more function that applies them to every image in a folder.

```python
def picture_enhance(dir_path):
    # Take the directory to process and get its file list with os.listdir
    img_list = os.listdir(dir_path)
    # (function, file name prefix for factors > 1, prefix for factors < 1)
    enhancers = [
        (brighten_darken, 'brighten', 'darken'),
        (saturation_up_down, 'saturation_up', 'saturation_down'),
        (contrast_up_down, 'contrast_up', 'contrast_down'),
        (sharpness_up_down, 'sharpness_up', 'sharpness_down'),
    ]
    for i, name in enumerate(img_list):
        try:
            img = Image.open(os.path.join(dir_path, name))  # open them one by one

            def save(result, stem):
                # convert('RGB') drops transparency and other unneeded information
                result.convert('RGB').save(os.path.join(dir_path, stem + '.jpg'))

            save(turn_left_right(img), 'turn_left_right' + str(i))
            for func, up, down in enhancers:
                save(func(img, 1.2), f'{up}{i}_1')
                save(func(img, 1.4), f'{up}{i}_2')
                save(func(img, 0.8), f'{down}{i}_1')
                save(func(img, 0.6), f'{down}{i}_2')
        except Exception as e:
            # Skip files that cannot be opened or converted
            print('skipped', name, e)

picture_enhance('D:\\anquan\\deeplearn\\my_flower\\0')
picture_enhance('D:\\anquan\\deeplearn\\my_flower\\1')
```

This function saves 17 extra images for every original, so once this step is finished each kind of flower has 18000 images.
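The arithmetic behind those numbers can be checked in a few lines. This is only a sketch; it assumes all 1000 downloaded images per flower are kept and that the folder contains nothing but images.

```python
enhancers = ['brightness', 'saturation', 'contrast', 'sharpness']
factors = [1.2, 1.4, 0.8, 0.6]   # the four factors applied per enhancer
flips = 1                         # one left-right flip

new_per_image = flips + len(enhancers) * len(factors)   # extra files per original
total_per_image = 1 + new_per_image                     # original plus its variants
originals = 1000

print(new_per_image)                 # 17
print(total_per_image)               # 18
print(originals * total_per_image)   # 18000
```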

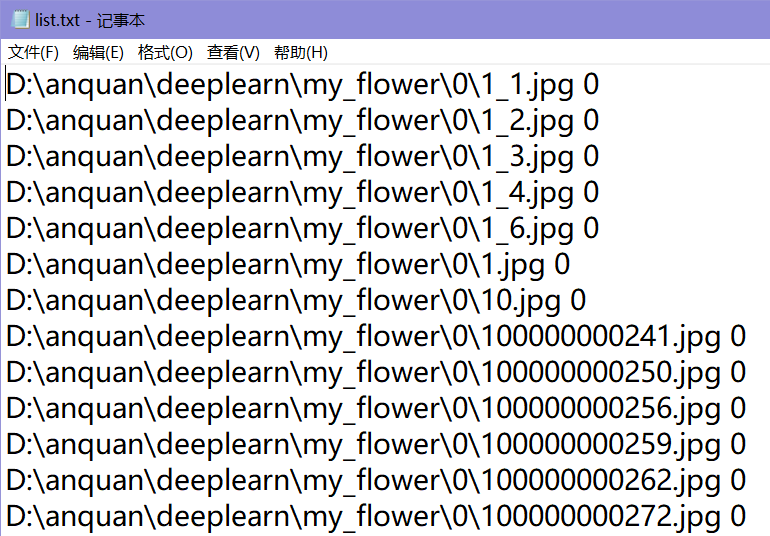

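Labeling the images

First write a function that generates a list.txt in each folder. Each line holds an image path followed by its label, so for the folder of wisteria images a line looks like: image-path 0. The per-folder lists are then merged into a single file.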

```python
def generate_list(dir_path, label):
    files = os.listdir(dir_path)
    with open(os.path.join(dir_path, 'list.txt'), 'w') as list_text:
        for file in files:
            if file == 'list.txt':
                continue  # do not list the list file itself when re-running
            # "path\filename label" is the line we want to write to list.txt
            name_label = os.path.join(dir_path, file) + ' ' + str(int(label)) + '\n'
            list_text.write(name_label)

generate_list('D:\\anquan\\deeplearn\\my_flower\\0', 0)
generate_list('D:\\anquan\\deeplearn\\my_flower\\1', 1)

# Merge everything into list.txt in the parent folder
root = 'D:\\anquan\\deeplearn\\my_flower'
with open(os.path.join(root, 'list.txt'), 'w') as merged:
    for sub in ('0', '1'):
        with open(os.path.join(root, sub, 'list.txt'), 'r') as part:
            merged.write(part.read())
```
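The same "path label" list can also be built in one pass over the parent folder. The sketch below is an alternative under stated assumptions, not the method used in this post: the helper name `build_label_lines` and the set of accepted file extensions are made up for illustration, and it runs against a throwaway temporary folder rather than the real dataset.

```python
import tempfile
from pathlib import Path

IMAGE_SUFFIXES = {'.jpg', '.jpeg', '.png'}   # assumed set of image extensions

def build_label_lines(root):
    """Return 'path label' lines for every image under root/<label>/."""
    lines = []
    for class_dir in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        label = int(class_dir.name)          # the folder name is the label
        for img in sorted(class_dir.iterdir()):
            if img.suffix.lower() in IMAGE_SUFFIXES:   # skips list.txt and the like
                lines.append(f'{img} {label}')
    return lines

# Demo on a temporary folder with empty placeholder files
with tempfile.TemporaryDirectory() as root:
    for label, names in {'0': ['a.jpg', 'b.png'], '1': ['c.jpg', 'list.txt']}.items():
        folder = Path(root) / label
        folder.mkdir()
        for name in names:
            (folder / name).touch()
    demo_lines = build_label_lines(root)
    (Path(root) / 'list.txt').write_text('\n'.join(demo_lines) + '\n')

for line in demo_lines:
    print(line)
```

Filtering by extension keeps stray files such as a leftover list.txt out of the list, so they never reach the image-loading step.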

Building the dataset

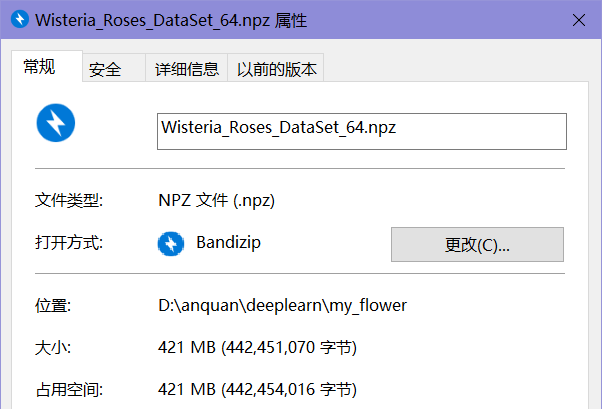

Read the images listed in list.txt as the data, use the value after each path as the label, and save the result as an npz dataset.

```python
from PIL import Image
import numpy as np

# Read list.txt and return the image data and the labels
def readData(txt_path):
    with open(txt_path, 'r') as list_file:
        content = list_file.readlines()  # read every line of list.txt
    image = []  # data
    label = []  # labels
    for line in content:
        try:
            # Split on the last run of whitespace: the left part is the image
            # path, the right part is the label
            path, lab = line.rsplit(maxsplit=1)
            # Build the dataset from 64*64 images. Image.LANCZOS (called
            # Image.ANTIALIAS before Pillow 10) keeps quality when downscaling
            img = Image.open(path).convert('RGB').resize((64, 64), Image.LANCZOS)
            image.append(np.array(img))
            # The label is stored as the string '0' or '1'
            label.append(lab)
        except Exception:
            pass  # emmmm, don't use full-width (Chinese) parentheses in image names
    image_np_array = np.array(image)
    label_np_array = np.array(label)
    return (image_np_array, label_np_array)

(data_image, data_label) = readData('D:\\anquan\\deeplearn\\my_flower\\list.txt')
np.savez('Wisteria_Roses_DataSet_64.npz', train_image=data_image, train_label=data_label)
```

The resulting dataset is a little over 400 MB.
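That size is about what the raw pixel data alone would take, since np.savez stores arrays uncompressed. A quick estimate, assuming all 2 * 18000 images were read successfully and stored as 8-bit RGB:

```python
images = 2 * 18000               # two classes, 18000 images each
bytes_per_image = 64 * 64 * 3    # 64x64 pixels, 3 channels, 1 byte per channel
image_bytes = images * bytes_per_image

print(image_bytes)                      # 442368000
print(round(image_bytes / 2**20, 1))    # 421.9 (MiB)
```

The label array adds only a small amount on top of this.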

Preparing the data

The dataset saved earlier can be loaded like this:

```python
dataset = np.load('Wisteria_Roses_DataSet_64.npz')
image = dataset['train_image']
label = dataset['train_label']
```

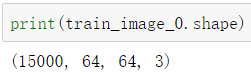

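First we need to split the data into training data and test data. We now have 18000 * 2 images, so we can take 15000 of each class as training data and keep the remaining 3000 of each as test data.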

```python
train_image_0, train_label_0 = [], []
train_image_1, train_label_1 = [], []
test_image, test_label = [], []

for i in range(len(image)):
    # The first 15000 images with label 0 become training data
    if int(label[i]) == 0 and len(train_label_0) < 15000:
        train_image_0.append(image[i])
        train_label_0.append(label[i])
        continue
    # The first 15000 images with label 1 become training data
    if int(label[i]) == 1 and len(train_label_1) < 15000:
        train_image_1.append(image[i])
        train_label_1.append(label[i])
        continue
    # The remaining 3000 + 3000 = 6000 become test data
    test_image.append(image[i])
    test_label.append(label[i])

# Convert the Python lists to numpy arrays
train_image_0 = np.array(train_image_0)
train_label_0 = np.array(train_label_0)
train_image_1 = np.array(train_image_1)
train_label_1 = np.array(train_label_1)
test_image = np.array(test_image)
test_label = np.array(test_label)
```
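The normalization step that follows uses train_image, train_label, valid_image and valid_label, but the post does not show how those are built. One possible way to get a training, validation and test split per class is sketched below. Everything in it is an assumption: the function name `stratified_split`, the shuffling, and the split sizes are illustrative and are not taken from the original code.

```python
import random

def stratified_split(labels, n_train, n_valid, seed=0):
    """Return (train, valid, test) index lists with per-class quotas."""
    rng = random.Random(seed)
    by_class = {}
    for idx, lab in enumerate(labels):
        by_class.setdefault(int(lab), []).append(idx)   # group indices by label
    train, valid, test = [], [], []
    for lab in sorted(by_class):
        idxs = by_class[lab]
        rng.shuffle(idxs)                               # avoid taking files in listing order
        train += idxs[:n_train]
        valid += idxs[n_train:n_train + n_valid]
        test += idxs[n_train + n_valid:]                # whatever is left over
    return train, valid, test

# Tiny demo: 10 samples per class, labels stored as strings like in the dataset
labels = ['0'] * 10 + ['1'] * 10
train_idx, valid_idx, test_idx = stratified_split(labels, n_train=6, n_valid=2)
print(len(train_idx), len(valid_idx), len(test_idx))    # 12 4 4
```

With the numpy arrays loaded earlier, the index lists can be applied directly, for example `train_image = image[train_idx]` and `train_label = label[train_idx]`.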

Normalizing the data and one-hot encoding the labels

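```python
from keras.utils import to_categorical

# train_image / train_label and valid_image / valid_label must already exist
# at this point; the split code shown earlier only produces train_image_0,
# train_image_1 and the test_* arrays.
train_image_normalize = train_image.astype(float) / 255
train_label_onehotencoding = to_categorical(train_label)

valid_image_normalize = valid_image.astype(float) / 255
valid_label_onehotencoding = to_categorical(valid_label)

test_image_normalize = test_image.astype(float) / 255
test_label_onehotencoding = to_categorical(test_label)
```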
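For readers who have not seen these two steps before, here is what they compute, written in plain Python on toy values. It is an illustration only; the helper names `normalize` and `one_hot` are made up, and the real code above works on whole numpy arrays.

```python
def normalize(pixels):
    # Scale 8-bit channel values from 0..255 down to 0..1
    return [p / 255 for p in pixels]

def one_hot(labels, num_classes):
    # Turn each class index into a vector with a single 1.0 at that index
    rows = []
    for lab in labels:
        row = [0.0] * num_classes
        row[int(lab)] = 1.0
        rows.append(row)
    return rows

print(normalize([0, 51, 255]))        # [0.0, 0.2, 1.0]
print(one_hot(['0', '1', '1'], 2))    # [[1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
```

The one-hot rows have the same width as the two-unit softmax output of the model, which is what categorical cross-entropy expects.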

Building and training the model

```python
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D, Dropout, Flatten, Dense

model = Sequential()
model.add(Conv2D(filters=32, kernel_size=(3, 3), padding='same',
                 input_shape=(64, 64, 3), activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Conv2D(filters=16, kernel_size=(3, 3), padding='same', activation='relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))
model.add(Dropout(0.5))
model.add(Flatten())
model.add(Dropout(0.25))
model.add(Dense(units=2, kernel_initializer='normal', activation='softmax'))

model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

# shuffle=True picks training samples in random order, so that no single
# class is trained on for too long in a row
train_history = model.fit(train_image_normalize, train_label_onehotencoding,
                          validation_data=(valid_image_normalize, valid_label_onehotencoding),
                          shuffle=True, epochs=20, batch_size=200, verbose=2)

# Check the model's accuracy on the test data
scores = model.evaluate(test_image_normalize, test_label_onehotencoding)
print(scores)

# Save the model under the file name that the prediction script loads
model.save('Wisteria_Roses.h5')
```
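It can help to trace the tensor shape and the number of trainable parameters through this network by hand. The sketch below does the arithmetic for the layers listed above, assuming 'same' padding keeps the spatial size and each 2x2 pooling halves it; the helper name `conv_params` is made up for illustration. The totals should line up with what `model.summary()` reports.

```python
def conv_params(kernel, in_channels, out_channels):
    # One kernel x kernel x in_channels filter per output channel, plus one bias each
    return kernel * kernel * in_channels * out_channels + out_channels

h, w, c = 64, 64, 3                   # input image: 64x64 RGB

p1 = conv_params(3, c, 32)            # first Conv2D, 32 filters
h, w, c = h // 2, w // 2, 32          # after 2x2 max pooling: 32x32x32

p2 = conv_params(3, c, 16)            # second Conv2D, 16 filters
h, w, c = h // 2, w // 2, 16          # after 2x2 max pooling: 16x16x16

flat = h * w * c                      # Flatten: 4096 values
p3 = flat * 2 + 2                     # Dense with 2 units: weights plus biases

total = p1 + p2 + p3
print(p1, p2, p3, total)              # 896 4624 8194 13714
```

Dropout, pooling and Flatten have no trainable parameters, so they do not appear in the total.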

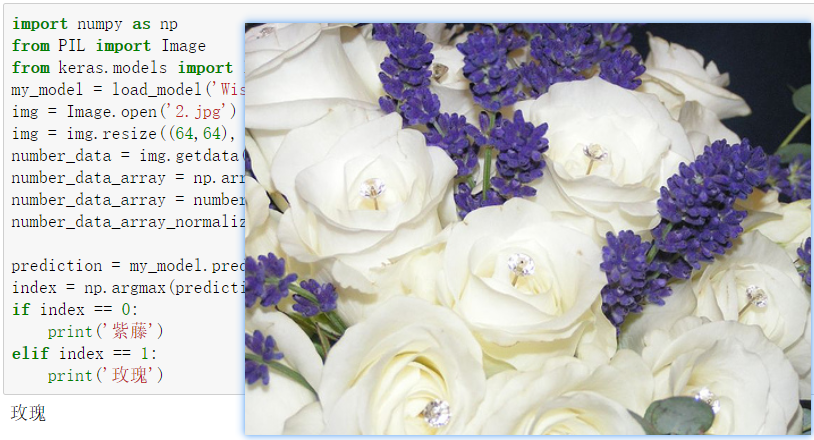

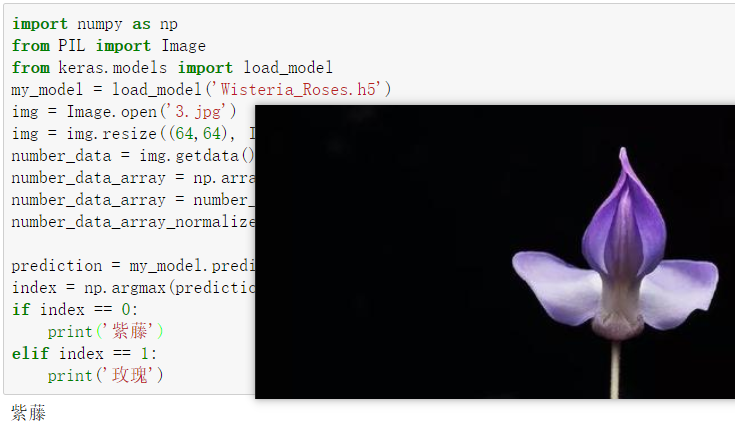

Predicting on images of your own choosing

```python
import numpy as np
from PIL import Image
from keras.models import load_model

my_model = load_model('Wisteria_Roses.h5')

# convert('RGB') guarantees exactly 3 channels, matching the training data
img = Image.open('3.jpg').convert('RGB')
# Image.LANCZOS was called Image.ANTIALIAS before Pillow 10
img = img.resize((64, 64), Image.LANCZOS)

number_data_array = np.array(img).reshape(1, 64, 64, 3).astype(float)
number_data_array_normalize = number_data_array / 255

prediction = my_model.predict(number_data_array_normalize)
index = np.argmax(prediction)
if index == 0:
    print('wisteria')
elif index == 1:
    print('rose')
```

The purple flowers in this picture are probably grape hyacinth (as identified by Alipay).
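A two-class softmax model always answers with one of its two classes, even for a flower it was never trained on, so it can be useful to print the probability next to the class name. The sketch below shows one way to do that on a plain list of probabilities; the names `CLASS_NAMES` and `describe` and the example values are hypothetical, not output from the trained model.

```python
CLASS_NAMES = ['wisteria', 'rose']    # index 0 and index 1, as in the folders

def describe(probabilities, names=CLASS_NAMES):
    """Return the most likely class name and its probability."""
    index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return names[index], probabilities[index]

# Made-up softmax output for a single image
name, confidence = describe([0.93, 0.07])
print(f'{name} ({confidence:.0%})')   # wisteria (93%)
```

With the real model, the list would come from `prediction[0]` in the prediction script.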