ansible部署脚本及安装包下载

轻量包:基本涵盖用到了的中间件

https://hz-package.hzins.com/ansible_light.tar.gz

全量包:除了中间件,还包含5.16和6.3版本的cloudera manager和CDH

https://hz-package.hzins.com/ansible_full.tar.gz

目录说明

.

├── code

│ ├── 01.server-init.yaml

│ ├── ……

│ └── roles

├── hosts

│ ├── global.yaml

│ └── hosts

├── package

│ ├── ansible

│ ├── apollo

│ ├── azkaban

│ ├── ……

│ ├── redis

│ ├── spark

│ └── sql

└── script

├── config.sh

├── middware_setup.sh

└── tmp

code: ansible剧本

hosts:ansible inventory配置目录

package:离线安装包

script:一键部署脚本(middware_setup.sh )目录

一、系统参数初始化

参考第一课 环境配置

ansible命令

ansible-playbook 01.server-init.yaml -e "hosts=172.19.0.191,172.19.0.192,172.19.0.193 systeminit=yes"

二、Jdk安装

ansible命令

ansible-playbook 02.jdk.yaml -e "jdk_host=172.19.0.191,172.19.0.192,172.19.0.193 Download_Url=http://172.19.0.191"

三、Mysql安装

1、下载安装包

(1)服务器执行命令下载:

mkdir -p /usr/local/srccd /usr/local/srcwget https://hz-package.hzins.com/mysql-5.7.33-linux-glibc2.12-x86_64.tar.gz

2、部署前系统环境设置

(1)关闭 SELinux

vim /etc/selinux/config

SELINUX=disabled

(2)关闭 firewalld防火墙

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld ##查看状态

(3)设置swap分区,默认为1

vim /proc/sys/vm/swappiness ##设置为1

cat /proc/sys/vm/swappiness ##查看是否为1

(4)文件系统建议使用xfs

(5)修改操作系统限制

vim /etc/security/limits.conf ##在末尾添加

* soft nofile 65535

* hard nofile 65535

* soft nproc 65535

* hard nproc 65535

检查命令ulimit -a

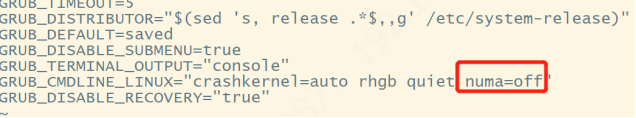

(6)关闭NUMA

vi /etc/default/grub ##在 GRUB_CMDLINE_LINUX 参数的末尾增加 : numa=off

以上操作完成后,重启操作系统使配置生效。

3、部署MYSQL

(1)建立mysql用户

groupadd mysql

useradd -g mysql mysql -s /sbin/nologin

(2)解压、软链接

cd /usr/local/src

tar -xvzf mysql-5.7.33-linux-glibc2.12-x86_64.tar.gz

ln -s /usr/local/src/mysql-5.7.33-linux-glibc2.12-x86_64 /usr/local/mysql

(3)建立数据和日志目录

mkdir -p /data/mysql3306/

mkdir -p /data/tmp/mysql3306

mkdir -p /data/dblog/mysql3306/{binlog,relaylog}

touch /data/dblog/mysql3306/error.log

chown -R mysql:mysql /data/mysql3306/

chown -R mysql:mysql /data/tmp/mysql3306

chown -R mysql:mysql /data/dblog/

(4)编辑配置文件

vim /etc/my3306.cnf

[client]

port = 3306

socket = /tmp/mysql.sock

[mysql]

no-auto-rehash

prompt = "\\u@\\d \\R:\\m> "

default-character-set = utf8

[mysqld]

########basic settings########

server-id = 120

port = 3306

user = mysql

basedir = /usr/local/mysql

datadir = /data/mysql3306

tmpdir = /data/tmp/mysql3306

socket = /tmp/mysql.sock

skip-external-locking

skip-name-resolve

back_log = 600

connect_timeout = 20

character_set_server = utf8

collation-server = utf8_general_ci

skip-character-set-client-handshake=1

default-storage-engine = InnoDB

character-set-client-handshake = FALSE

init_connect ='set names utf8'

skip_name_resolve = 1

max_connections = 1000

max_connect_errors = 5000

interactive_timeout = 400

connect_timeout = 20

wait_timeout = 400

max_allowed_packet = 512M

group_concat_max_len = 10240

transaction_isolation = READ-COMMITTED

log_bin = /data/dblog/mysql3306/binlog/mysql-bin

explicit_defaults_for_timestamp = 0

#transaction_write_set_extraction=MURMUR32

#sql_mode = "STRICT_TRANS_TABLES,NO_ENGINE_SUBSTITUTION,NO_ZERO_DATE,NO_ZERO_IN_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER"

sql_mode = "NO_ENGINE_SUBSTITUTION"

optimizer_switch="index_merge=on,index_merge_union=on,index_merge_sort_union=on,index_merge_intersection=on,engine_condition_pushdown=on,index_condition_pushdown=on,mrr=on,mrr_cost_based=on,block_nested_loop=on,batched_key_access=off,materialization=on,semijoin=on,loosescan=on,firstmatch=on,duplicateweedout=on,subquery_materialization_cost_based=on,use_index_extensions=on,condition_fanout_filter=on,derived_merge=off"

max_execution_time=60000

log_timestamps=SYSTEM

#######per_thread_buffers############

join_buffer_size = 134217728

tmp_table_size = 67108864

read_buffer_size = 16777216

read_rnd_buffer_size = 33554432

sort_buffer_size = 33554432

table_open_cache = 8000

table_definition_cache = 4096

########slow log settings########

slow_query_log = 1

slow_query_log_file = /data/dblog/mysql3306/mysql.slow

long_query_time = 1

#log_queries_not_using_indexes = 1

#log_slow_admin_statements = 1

#log_slow_slave_statements = 1

#log_throttle_queries_not_using_indexes = 10

#min_examined_row_limit = 100

#####error log settings########

log_error = /data/dblog/mysql3306/error.log

####binlog settings####

log_bin = /data/dblog/mysql3306/binlog/mysql-bin

expire_logs_days = 20

binlog_format = row

max_binlog_size = 1024M

binlog_cache_size = 4M

binlog_stmt_cache_size = 1M

binlog_rows_query_log_events=ON

########replication settings########

skip-slave-start = 1

slave-net-timeout = 10

#######mysql5.7###########

slave_parallel-type = LOGICAL_CLOCK

slave_parallel_workers = 8

#########################

master_info_repository = TABLE

relay_log_info_repository = TABLE

log_slave_updates = 1

relay_log_recovery = 1

relay_log_purge = 0

relay-log = /data/dblog/mysql3306/relaylog/relay-bin

sync_master_info = 1

sync_relay_log_info = 1

sync_relay_log = 1

#read_only = 1

#super_read_only = 1

########innodb settings########

innodb_data_home_dir = /data/mysql3306

innodb_data_file_path = ibdata1:1024M:autoextend:max:10G

#innodb_data_file_path = ibdata1:1024M:autoextend

innodb_page_size = 16384

innodb_buffer_pool_size = 48G

innodb_buffer_pool_instances = 16

innodb_lru_scan_depth = 2000

innodb_lock_wait_timeout = 10

innodb_read_io_threads = 16

innodb_write_io_threads = 16

innodb_io_capacity = 4000

innodb_io_capacity_max = 10000

innodb_flush_method = O_DIRECT

innodb_file_per_table = 1

innodb_file_format = Barracuda

innodb_file_format_max = Barracuda

innodb_log_file_size = 4G

innodb_log_buffer_size = 16777216

innodb_log_files_in_group = 3

innodb_undo_logs = 128

innodb_undo_tablespaces = 0

innodb_sort_buffer_size = 67108864

innodb_autoinc_lock_mode = 2

innodb_flush_neighbors = 0

innodb_large_prefix = 1

innodb_purge_threads = 8

innodb_purge_batch_size = 300

innodb_thread_concurrency = 64

innodb_print_all_deadlocks = 1

innodb_strict_mode = 1

innodb_max_dirty_pages_pct = 75

innodb_old_blocks_pct = 37

innodb_old_blocks_time = 1000

innodb_stats_on_metadata = off

innodb_buffer_pool_load_at_startup = 1

innodb_buffer_pool_dump_at_shutdown = 1

#mysql5.7 new

#innodb_buffer_pool_dump_pct = 40

#innodb_page_cleaners = 4

#innodb_undo_log_truncate = 1

#innodb_max_undo_log_size = 2G

#innodb_purge_rseg_truncate_frequency = 128

#双1参数

innodb_flush_log_at_trx_commit = 1

sync_binlog = 1

innodb_support_xa = 1

########gtid settings########

gtid_mode = on

enforce_gtid_consistency = 1

#binlog_gtid_simple_recovery = 1

########semi sync replication settings########

#plugin_dir = /usr/lib64/mysql/plugin/

#plugin_load = "rpl_semi_sync_master=semisync_master.so;rpl_semi_sync_slave=semisync_slave.so"

#loose_rpl_semi_sync_master_enabled = 1

#loose_rpl_semi_sync_slave_enabled = 1

#loose_rpl_semi_sync_master_timeout = 5000

[mysqldump]

quick

max_allowed_packet = 512M

[myisamchk]

key_buffer_size = 20M

sort_buffer_size = 20M

read_buffer = 16M

write_buffer = 16M

[mysqlhotcopy]

interactive-timeout

[mysqld_safe]

###numa####

#flush_caches = 1

#numa_interleave = 1

open-files-limit = 65535

#malloc-lib = /usr/local/lib/libtcmalloc.so

#malloc-lib= /usr/local/mysql/lib/mysql/libjemalloc.so

注意:server-id在同一主从环境中,每个节点server-id 需要不一样。

(5)启动MySQL

cd /usr/local/mysql/bin

##初始化mysql

./mysqld --defaults-file=/etc/my3306.cnf --basedir=/usr/local/mysql --datadir=/data/mysql3306 --user=mysql --initialize

###查看root初始密码

vim /data/dblog/mysql3306/error.log

##可以看到如下信息

[Note] A temporary password is generated for root@localhost: /#T#mZhZQ7gp ##此即root初始密码

##启动mysql

/usr/local/mysql/bin/mysqld_safe --defaults-file=/etc/my3306.cnf &

##查看mysql是否启动成功

ps -ef|grep mysql

##复制二进制文件

cp mysql /usr/bin

##用root初始密码登录

mysql -uroot -p

#需要先修改密码才能进行其他操作

mysql>alter user 'root'@'localhost' identified by "1qaz@2wsx";

4、ansible安装命令

ansible-playbook 03.mysql.yaml -e " mysql_host=172.19.0.191 Download_Url=http://172.19.0.191 max_mem=80 mysql_port=3306"

四、Apollo安装

1、 上传安装包至服务器

/data/software/apollo/

2. 解压至/data/目录

tar -zxvf apollo.tar.gz -C /data

3. 初始化数据库

CREATE DATABASE IF NOT EXISTS dss_dms_apolloconfigdb DEFAULT CHARACTER SET utf8mb4;

CREATE DATABASE IF NOT EXISTS dss_dms_apolloportaldb DEFAULT CHARACTER SET utf8mb4;

#创建数据库账号

create user apollo@'%' identified by '******';

#授权

grant all privileges on dss_dms_apolloconfigdb.* to apollo@'%' ;

grant all privileges on dss_dms_apolloportaldb.* to apollo@'%' ;

4. 修改配置文件

vim /data/apollo/apollo-adminservice/config/application-github.properties

# DataSource

spring.datasource.url = jdbc:mysql://middware:3307/dss_dms_apolloconfigdb?characterEncoding=utf8

spring.datasource.username = apollo

spring.datasource.password = Hzyw123

vim /data/apollo/apollo-configservice/config/application-github.properties

# DataSource

spring.datasource.url = jdbc:mysql://middware:3307/dss_dms_apolloconfigdb?characterEncoding=utf8

spring.datasource.username = apollo

spring.datasource.password = Hzyw123

vim /data/apollo/apollo-portal/config/application-github.properties

# DataSource

spring.datasource.url = jdbc:mysql://middware:3307/dss_dms_apolloportaldb?characterEncoding=utf8

spring.datasource.username = apollo

spring.datasource.password = Hzyw123

vim /data/apollo/apollo-portal/config/apollo-env.properties

# 修改为configservice地址

prod.meta=http://172.19.0.191:8080

5. 启动

sh /data/apollo/apollo-adminservice/scripts/startup.sh

sh /data/apollo/apollo-configservice/scripts/startup.sh

sh /data/apollo/apollo-portal/scripts/startup.sh

6. 验证

登录web页面

http://apollo-portal-server:8070

apollo/admin

7.ansible安装命令

ansible-playbook 04.apollo.yaml -e "config_admin_hosts=172.19.0.192,172.19.0.193 portal_hosts=172.19.0.191 Download_Url=http://192.168.56.102 mysql_host=192.168.56.102"

五、Azkaban安装

1. 上传安装包至azkaban服务器

/data/software/azkaban.tar.gz

2. 解压至安装目录

tar -zxvf azkaban.tar.gz

cd azkaban

mkdir -p /data/azkaban

#executor可部署多台服务器

tar -zxvf azkaban-exec.tar.gz -C /data/azkaban

#web部署到单台服务器

tar -zxvf azkaban-web.tar.gz -C /data/azkaban

cp azkaban-exec.service /usr/lib/systemd/system/

cp azkaban-web.service /usr/lib/systemd/system/

3. 初始化数据库

#创建数据库

CREATE DATABASE IF NOT EXISTS `dss_dms_azkaban` default character set utf8mb4;

#创建账号

create user azkaban@'%' identified by '******';

#账号授权

grant all privileges on dss_dms_azkaban.* to azkaban@'%';

#初始化表

在dss_dms_azkaban库下,执行sql创建表结构

create-all-sql-3.84.4.sql

4. 修改配置文件

修改azkaban-exec 数据库配置

database.type=mysql

mysql.port=3307

mysql.host=10.100.16.20

mysql.database=azkaban

mysql.user=azkaban

mysql.password=******

mysql.numconnections=100

# Azkaban Executor settings

executor.maxThreads=10

# 任务并行度

executor.flow.threads=10

# job并行度

flow.num.job.threads=3

修改azkaban-web配置

database.type=mysql

mysql.port=3307

mysql.host=middware

mysql.database=azkaban

mysql.user=azkaban

mysql.password=******

mysql.numconnections=100

5. 启动executor

systemctl start azkaban-exec

6. 激活executor,如果有多台executor,则都需激活

cd /data/azkaban/azkaban-exec/

curl -G "localhost:$(<./executor.port)/executor?action=activate" && echo

7. 启动azkaban-web

systemctl start azkaban-web

8. 登录web

http://azkaban-web:8081

azkaban/azkaban

9. ansible命令安装

ansible-playbook 05.azkaban.yaml -e "azkaban_exec_hosts=172.19.0.192,172.19.0.193 azkaban_web_hosts=172.19.0.191 Download_Url=http://172.19.0.191 azkaban_web_port=18081 mysql_host=172.19.0.191" -v

六、Eureka安装

1. 上传安装包至服务器

/data/software/eureka1.tar.gz

/data/software/eureka2.tar.gz

/data/software/eureka3.tar.gz

2. 解压至/data/目录

tar -zxvf /data/software/eureka1.tar.gz -C /data

tar -zxvf /data/software/eureka2.tar.gz -C /data

tar -zxvf /data/software/eureka3.tar.gz -C /data

3. 启动

sh /data/eureka/eureka1/bin/bin/server.sh start

sh /data/eureka/eureka2/bin/bin/server.sh start

sh /data/eureka/eureka3/bin/bin/server.sh start

4.ansible命令安装

ansible-playbook 06.eureka.yaml -e "eureka1=172.19.0.191 eureka2='172.19.0.192' eureka3='172.19.0.193' Download_Url=http://172.19.0.191" -v

七、Zookeeper安装

1、下载zookeeper安装包

mkdir -p /usr/local/src

cd /usr/local/src

wget https://hz-package.hzins.com/zookeeper-3.4.14.tar.gz

2、解压zookeeper安装包

cd /usr/local/src

tar -zxvf zookeeper-3.4.14.tar.gz -C /data/

3、创建数据目录和日志目录

mkdir -p /data/zookeeper-3.4.14/logs

mkdir -p /data/zookeeper-3.4.14/data

4、创建zookeeper用户

#创建用户

useradd zookeeper

#更改目录权限

chown -R zookeeper:zookeeper /data/zookeeper-3.4.14

#建立软连接

ln -s /data/zookeeper-3.4.14 /data/zookeeper

5、配置zoo.cfg文件

vim /data/zookeeper/conf/zoo.cfg

tickTime=2000 #心跳基本时间单位,毫秒级,ZK基本上所有的时间都是这个时间的整数倍

initLimit=10 #tickTime的个数

syncLimit=5

dataDir=/data/zookeeper/data #内存数据库快照存放地址,如果没有指定事务日志存放地址(dataLogDir)

dataLogDir=/data/zookeeper/logs #事务日志存储在该路径下,比较重要,这个日志存储的设备效率会影响ZK的写吞吐量。

clientPort=2181 #ZK监听客户端连接的端口

autopurge.snapRetainCount=30 #自动清理snapshot和事务日志,保留30个文件

autopurge.purgeInterval=24 #自动清理snapshot和事务日志,24小时清理1次

globalOutstandingLimit=100000 #所有连接到服务器上但是还没有返回响应的请求个数

server.135=172.19.2.135:2888:3888 #zk服务器节点地址,端口号2888表示该服务器与集群中leader交换信息的端口,默认为2888, 3888表示选举时服务器相互通信的端口

server.136=172.19.2.136:2888:3888

server.137=172.19.2.137:2888:3888

6、配置myid文件

myid文件内容对应zoo.conf的server.id配置,分别配置3台机器

cd /data/zookeeper/data

touch myid

echo "135">>myid #在172.19.2.135这台机器上配置的id是135,对应172.19.2.136就是136,根据zoo.conf的配置来

7、配置service服务

vim /usr/lib/systemd/system/zookeeper.service

#zookeeper.service文件内容

[Unit]

Description=Zookeeper server manager

[Service]

Type=forking

User=zookeeper

Group=zookeeper

Environment=ZOO_LOG_DIR=/data/zookeeper/logs

Environment=JAVA_HOME=/usr/local/jdk1.8.0_201

ExecStart=/data/zookeeper/bin/zkServer.sh start /data/zookeeper/conf/zoo.cfg

ExecStop=/data/zookeeper/bin/zkServer.sh stop /data/zookeeper/conf/zoo.cfg

ExecReload=/data/zookeeper/bin/zkServer.sh restart /data/zookeeper/conf/zoo.cfg

Restart=on-failure

TimeoutSec=300

LimitNOFILE=655350

LimitNPROC=655350

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

8、启动zookeeper服务

systemctl start zookeeper

9、ansible安装

ansible-playbook 07.zookeeper.yaml -e "zk_hosts=192.168.56.102 zk_client_port=2181 jvm_mem=1024 zk_ver=3.4.14"

八、Kafka安装

1、下载kafka安装包

mkdir -p /usr/local/src

cd /usr/local/src

wget https://hz-package.hzins.com/kafka_2.12-2.2.0.tgz

2、解压kafka安装包

cd /usr/local/src

tar -zxvf kafka_2.12-2.2.0.tgz -C /data/

3、创建数据目录和日志目录

mkdir -p /data/kafka_2.12-2.2.0/logs

mkdir -p /data/kafka_2.12-2.2.0/data

4、创建kafka用户

#创建用户

useradd kafka

#更改目录权限

chown -R kafka:kafka /data/kafka_2.12-2.2.0

#建立软连接

ln -s /data/kafka_2.12-2.2.04 /data/kafka

5、修改主要配置文件

cd /data/kafka/config/

vim server.properties

broker.id=135 #每一个broker在集群中的唯一表示,要求是正数,kafka及其根据id来识别broker机器。当该服务器的IP地址发生改变时,broker.id没有变化,则不会影响consumers的消息情况。(此处直接保持与ZooKeeper的myid值一致)

port=9092 #broker server服务端口

listeners=PLAINTEXT://172.19.2.135:9092 #监听列表(以逗号分隔 不同的协议(如plaintext,trace,ssl、不同的IP和端口)),hostname如果设置为0.0.0.0则绑定所有的网卡地址;如果hostname为空则绑定默认的网卡。

num.network.threads=3 #broker处理消息的最大线程数,一般情况下不需要去修改

num.io.threads=8 #broker处理磁盘IO的线程数,数值应该大于硬盘数

socket.send.buffer.bytes=102400 #socket的发送缓冲区,socket的调优参数SO_SNDBUFF

socket.receive.buffer.bytes=102400 #socket的接受缓冲区,socket的调优参数SO_RCVBUFF

socket.request.max.bytes=104857600 #socket请求的最大数值,防止serverOOM,message.max.bytes必然要小于socket.request.max.bytes,会被topic创建时的指定参数覆盖

log.dirs=/data/kafka/data #kafka数据的存放地址

num.partitions=1 #每个topic的分区个数

num.recovery.threads.per.data.dir=1 #每个数据目录用来日志恢复的线程数目

log.cleanup.policy=delete #日志清理策略

log.retention.hours=168 #每个日志文件删除之前保存的时间

log.segment.bytes=1073741824 #topic的分区是以一堆segment文件存储的,这个控制每个segment的大小,会被topic创建时的指定参数覆盖

log.retention.check.interval.ms=300000 #检查日志分段文件的间隔时间,以确定是否文件属性是否到达删除要求。

zookeeper.connect=172.19.2.135:2181,172.19.2.136:2181,172.19.2.137:2181 #zookeeper集群的地址,多个之间用逗号分割

zookeeper.connection.timeout.ms=6000 #客户端在建立通zookeeper连接中的最大等待时间

offsets.topic.replication.factor=2 #topic的offset的备份份数

kafka.logs.dir=/data/kafka/logs #日志存放目录

6、配置service服务

vim /usr/lib/systemd/system/kafka.service

#kafka.service文件内容

[Unit]

Description=Apache Kafka server (broker)

Documentation=http://kafka.apache.org/documentation.html

After=network.target zookeeper.target

[Service]

Type=simple

User=kafka

Group=kafka

Environment=JAVA_HOME=/usr/local/jdk1.8.0_201

Environment=KAFKA_HEAP_OPTS=-Xmx1024m -Xms1024m

ExecStart=/data/kafka/bin/kafka-server-start.sh /data/kafka/config/server.properties

ExecStop=/data/kafka/bin/kafka-server-stop.sh

Restart=on-failure

LimitNOFILE=655350

LimitNPROC=655350

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

8、启动kafka服务

systemctl start kafka

9、ansible安装命令

ansible-playbook 08.kafka.yaml -e "kafka_hosts=192.168.56.102 zk_on=true remote_zk_hosts=192.168.56.102 jvm_mem=1024 Download_Url=http://192.168.56.102"

九、ES安装

1、下载安装包

(1)服务器执行命令下载:

wget https://hz-package.hzins.com/elasticsearch-7.2.1-linux-x86_64.tar.gz

2、部署前系统环境设置

(1)修改操作系统限制

echo "* soft nofile 65535" >> /etc/security/limits.conf

echo "* hard nofile 65535" >> /etc/security/limits.conf

echo "vm.max_map_count=262144" >> /etc/sysctl.conf

sysctl -p

3、解压(解压目录/data/)

tar xf elasticsearch-7.2.1-linux-x86_64.tar.gz -C /data/

4、创建用户以及修改目录权限

useradd elasticsearch

chown -R elasticsearch:elasticsearch /data/elasticsearch-7.2.1

5、elasticsearch.yml核心配置解释

cluster.name: cluster1

node.name: node1

node.master: true

node.data: true

path.data: /data/elasticsearch-7.2.1/data

path.logs: /data/elasticsearch-7.2.1/logs

network.host: 172.19.0.236

discovery.zen.minimum_master_nodes: 2

gateway.recover_after_nodes: 1

cluster.initial_master_nodes: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

discovery.seed_hosts: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

transport.tcp.port: 9300

http.port: 9200

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

xpack.security.enabled: false

cluster.name: 集群名称,唯一确定一个集群。

node.name:节点名称,一个集群中的节点名称是唯一固定的,不同节点不能同名。

node.master: 主节点属性值

node.data: 数据节点属性值

path.data: es数据存放位置

path.logs: es日志存放位置

network.host: 本节点的ip

discovery.zen.minimum_master_nodes: 2

设置这个参数来保证集群中的节点可以知道其它N个有master资格的节点。默认为1,对于大的集群来说,可以设置大一点的值(2-4)

gateway.recover_after_nodes: 1 设置集群中N个节点启动时进行数据恢复,默认为1。

cluster.initial_master_nodes:指定集群初次选举中用到的具有主节点资格的节点,称为集群引导,只在第一次形成集群时需要。

discovery.seed_hosts:节点发现需要配置一些种子节点,与7.X之前老版本:disvoery.zen.ping.unicast.hosts类似,一般配置集群中的全部节点

transport.port:9300——集群之间通信的端口,若不指定默认:9300

http.port: 本节点的http端口

http.cors.enabled: true 是否开启跨域访问

http.cors.allow-origin: “*” 开启跨域访问后的地址限制,*表示无限制

http.cors.allow-credentials 是否返回设置的跨域Access-Control-Allow-Credentials头,如果设置为true,那么会返回给客户端

xpack.security.enabled: false 是否开启xpack组件

6、所有节点es内存修改

vim /data/elasticsearch-7.2.1/config/jvm.options

推荐为服务器内存的一半

7、172.21.0.236 yml文件配置

rm -rf /data/elasticsearch-7.2.1/config/elasticsearch.yml

vim /data/elasticsearch-7.2.1/config/elasticsearch.yml

cluster.name: cluster1

node.name: node1

node.master: true

node.data: true

path.data: /data/elasticsearch-7.2.1/data

path.logs: /data/elasticsearch-7.2.1/logs

network.host: 172.19.0.236

discovery.zen.minimum_master_nodes: 2

gateway.recover_after_nodes: 1

cluster.initial_master_nodes: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

discovery.seed_hosts: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

transport.tcp.port: 9300

http.port: 9200

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

xpack.security.enabled: false

8、172.21.0.237 yml文件配置

rm -rf /data/elasticsearch-7.2.1/config/elasticsearch.yml

vim /data/elasticsearch-7.2.1/config/elasticsearch.yml

cluster.name: cluster1

node.name: node1

node.master: true

node.data: true

path.data: /data/elasticsearch-7.2.1/data

path.logs: /data/elasticsearch-7.2.1/logs

network.host: 172.19.0.237

discovery.zen.minimum_master_nodes: 2

gateway.recover_after_nodes: 1

cluster.initial_master_nodes: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

discovery.seed_hosts: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

transport.tcp.port: 9300

http.port: 9200

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

xpack.security.enabled: false

9、172.21.0.238 yml文件配置

rm -rf /data/elasticsearch-7.2.1/config/elasticsearch.yml

vim /data/elasticsearch-7.2.1/config/elasticsearch.yml

cluster.name: cluster1

node.name: node1

node.master: true

node.data: true

path.data: /data/elasticsearch-7.2.1/data

path.logs: /data/elasticsearch-7.2.1/logs

network.host: 172.19.0.238

discovery.zen.minimum_master_nodes: 2

gateway.recover_after_nodes: 1

cluster.initial_master_nodes: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

discovery.seed_hosts: ["172.21.0.236:9300","172.21.0.237:9300","172.21.0.238:9300"]

transport.tcp.port: 9300

http.port: 9200

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-credentials: true

xpack.security.enabled: false

10、service文件编写(所有主机相同)

vim /lib/systemd/system/elasticsearch.service

[Unit]

Description=Elasticsearch

Documentation=http://www.elastic.co

Wants=network-online.target

After=network-online.target

[Service]

RuntimeDirectory=elasticsearch

PrivateTmp=true

Environment=JAVA_HOME=/data/elasticsearch-7.2.1/jdk

Environment=ES_HOME=/data/elasticsearch-7.2.1

Environment=ES_PATH_CONF=/data/elasticsearch-7.2.1/config

Environment=PID_DIR=/var/run/elasticsearch

WorkingDirectory=/data/elasticsearch-7.2.1

User=elasticsearch

Group=elasticsearch

ExecStart=/data/elasticsearch-7.2.1/bin/elasticsearch -p ${PID_DIR}/elasticsearch.pid --quiet

# StandardOutput is configured to redirect to journalctl since

# some error messages may be logged in standard output before

# elasticsearch logging system is initialized. Elasticsearch

# stores its logs in /var/log/elasticsearch and does not use

# journalctl by default. If you also want to enable journalctl

# logging, you can simply remove the "quiet" option from ExecStart.

StandardOutput=journal

StandardError=inherit

# Specifies the maximum file descriptor number that can be opened by this process

LimitNOFILE=65535

# Specifies the maximum number of processes

LimitNPROC=4096

# Specifies the maximum size of virtual memory

LimitAS=infinity

# Specifies the maximum file size

LimitFSIZE=infinity

# Disable timeout logic and wait until process is stopped

TimeoutStopSec=0

# SIGTERM signal is used to stop the Java process

KillSignal=SIGTERM

# Send the signal only to the JVM rather than its control group

KillMode=process

# Java process is never killed

SendSIGKILL=no

# When a JVM receives a SIGTERM signal it exits with code 143

SuccessExitStatus=143

[Install]

WantedBy=multi-user.target

# Built for packages-7.2.1 (packages)

11、启动服务

systemctl daemon-reload

systemctl start elasticsearch

12、ansible 安装命令

单机

ansible-playbook 09.elasticsearch.yaml -e "es_hosts=192.168.56.102 cluster_name=cluster-es cluster_on=false Download_Url=http://192.168.56.102 jvm_mem=2"

集群

ansible-playbook 09.elasticsearch.yaml -e 'es_hosts=172.19.10.100,172.19.10.101,172.19.10.102,172.19.10.103 cluster_name=cluster-es master_ip=172.19.10.100,172.19.10.101 cluster_on=true Download_Url=http://192.168.56.102 jvm_mem=2'

十、redis安装

1、下载安装包

(1)服务器执行命令下载:

cd /usr/local/src/

wget https://hz-package.hzins.com/redis-3.2.9.tar.gz

2、解压安装包

tar zxf redis-3.2.9.tar.gz

3、编译安装Redis

cd redis-3.2.9

yum install gcc -y # 安装gcc编译环境

yum install jemalloc # 安装内存分配器,jemalloc在处理内存碎片化上优于Redis默认内存分配器libc

make MALLOC=/usr/lib64/libjemalloc.so.1 # 通过rpm -ql jemalloc可以查看so文件路径

make install # make的时候安装已经完成,make install会把redis命令放在/usr/local/bin下

4、服务器内核参数调整

(1)服务器内存分配策略,减少OOM

echo "vm.overcommit_memory=1" >> /etc/sysctl.conf

sysctl vm.overcommit_memory=1

(2)关闭THP,减少延迟

echo never > /sys/kernel/mm/transparent_hugepage/enabled #该命令执行后还需写入到/etc/rc.local

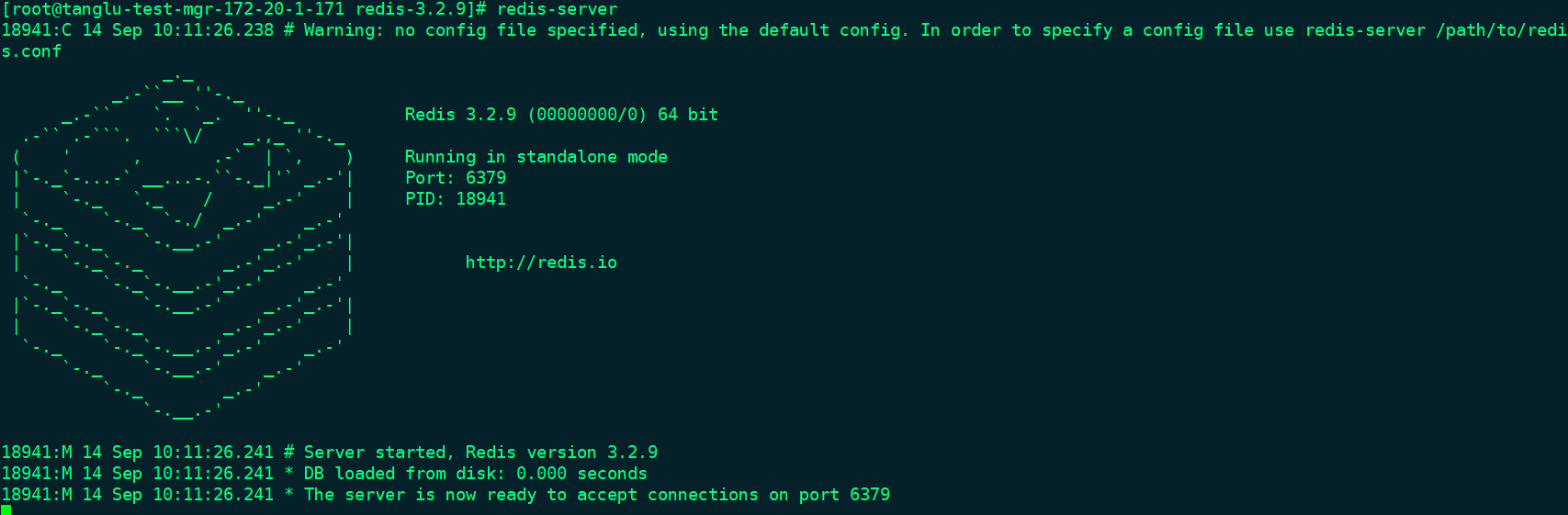

5、启动测试

运行redis-server进行启动测试,观察是否有报错

6、Redis主从配置

※ 创建/etc/redis.conf配置文件

※ 通常情况下只关注和修改带有注释项的即可,正式配置时请删掉注释信息

bind 172.20.1.171 #redis服务需要监听在服务器哪个IP上,每个节点修改为本机IP即可

protected-mode yes

daemonize yes

port 6379 #redis服务端口

tcp-backlog 511

timeout 0

tcp-keepalive 300

supervised no

pidfile /var/run/redis_6379.pid

loglevel notice

logfile "/data/redis/logs/redis.log" #日志存放路径,该目录需要手动创建

databases 16

requirepass 123456 #redis密码

maxclients 10000 #最大客户端连接数

maxmemory 1GB #redis最大占用内存,建议配置为服务器最大内存的60%

maxmemory-policy volatile-lru

# RDB持久化配置

# save 900 1

# save 300 10

# save 60 10000

stop-writes-on-bgsave-error yes #如果快照保存失败,所有节点停止写入

rdbcompression yes

rdbchecksum yes

dbfilename dump.rdb #RDB文件名

dir /data/redis/rdb/ #RDB存放目录,需要手动创建

# 主从配置

slaveof <masterip> <masterport> #该项只在从节点配置,指定主库的ip和端口

masterauth 123456 #主库的密码,所有节点配置一致

slave-serve-stale-data yes #如果从库与主库失联,是否继续响应客户端请求,No则响应SYNC with master in progress

slave-read-only yes #该项只在从节点配置,从库开启只读

repl-ping-slave-period 10 #从库向主库发送ping请求的间隔

repl-timeout 60 #主从超时时间

repl-disable-tcp-nodelay no #是否用nodelay方式传输数据,no可以降低数据传输到从库的延迟,但使用更多的带宽

repl-backlog-size 10mb #从服务离线之后,主服务器会把离线之后的写入命令存储在一个特定大小的队列中,避免短时间断开服务却进行全量同步的问题

repl-backlog-ttl 3600 #当所有从库都与主库断开连接后达到多少秒释放backlog

slave-priority 100 #从库优先级,数字越小优先级越高,0代表不会被哨兵选为主

# min-slaves-to-write 3 #如果从库少于N个,主库就停止写入,需配合min-slaves-max-lag一起使用。比如至少需要3个从库、并且延时小于等于10秒的,主库才能写入

# min-slaves-max-lag 10 #如果从库延迟小于N秒,主库才能写入数据,需配合min-slaves-to-write一起使用。比如至少需要3个从库、并且延时小于等于10秒的,主库才能写入

repl-diskless-sync yes #开启无盘复制,主从全量同步时,主库并不会在本地创建RDB 文件,而是创建一个子进程通过Socket将RDB文件写入到从服务器,节约IO资源

repl-diskless-sync-delay 5

# AOF持久化配置

appendonly no

appendfilename "appendonly.aof"

appendfsync everysec

# appendfsync always

# appendfsync no

no-appendfsync-on-rewrite no #如果正在导出rdb数据则停止aof的写入,aof将保存在一个队列中,等rdb备份完成后再执行队列,不会丢失数据

auto-aof-rewrite-percentage 100 #aof文件体积与上次相比增长率达到100%就进行重写(重写相当于记总账,比如对同一个key做了100次操作,我们只需要最后一次的操作,重写就会把多余的操作给忽略掉,节省内存

auto-aof-rewrite-min-size 64mb #和auto-aof-rewrite-percentage组合使用,aof文件达到64M时进行重写

aof-load-truncated yes

aof-rewrite-incremental-fsync yes #当Redis保存AOF文件时,每生成32MB数据就执行一次fsync操作,这样分批提交可以避免高延迟

#慢日志配置

slowlog-log-slower-than 1000000 #慢查询的评定时间,单位为微妙,这里代表1秒

slowlog-max-len 1000 #慢日志最大记录条数

#客户端输出缓冲区配置,内网环境可以全部为0

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 0 0 0

client-output-buffer-limit pubsub 0 0 0

hz 10 #通常使用默认10即可(可选范围1到500)。该值设置Redis每秒处理后台任务的频率,如清理过期Key、关闭超时连接等。调高该值可以让CPU空闲时间更频繁的去处理后台的任务,但通常不建议调高到100以上

7、启动Redis

redis-server /etc/redis.conf

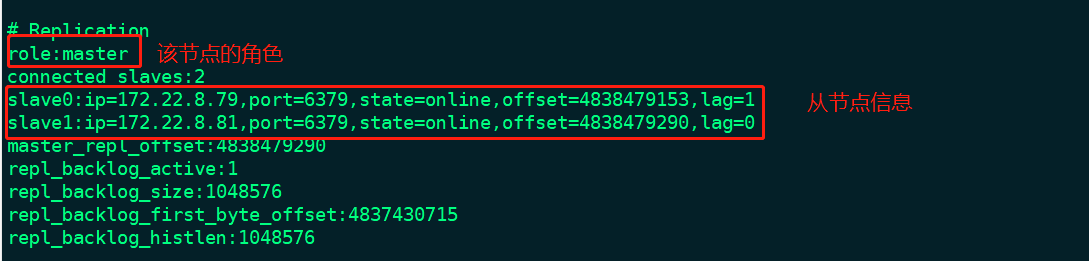

8、连接redis,检查三个节点主从状态

redis-cli -a 123456 -h 172.20.1.171 info

redis-cli -a 123456 -h 172.20.1.172 info

redis-cli -a 123456 -h 172.20.1.173 info

执行info命令后关注Replication字段,确定主从节点正常,即master下能看到两个slave节点

9、哨兵配置

(1)创建/etc/sentinel.conf配置文件

bind 172.20.1.171 #哨兵服务需要监听在服务器哪个IP上,修改为本机IP即可

daemonize yes

protected-mode yes

port 26379 #哨兵服务端口

logfile /usr/local/redis/log/sentinel.log #哨兵日志路径

dir /usr/local/redis/data/

sentinel monitor mymaster 172.20.1.171 6379 2 #配置要监控的主节点名字、IP、端口以及选举票数。哨兵会通过master自动发现其他slave服务器。选举票数建议为节点数/2+1,3节点下配置为2

sentinel down-after-milliseconds mymaster 30000 #判断master节点是否存活,超过这个时长就将其下线。单位是毫秒,默认是30秒

sentinel parallel-syncs mymaster 1 #哨兵发生切换后允许多少台slave服务器对新master进行同时同步,该项用于减轻压力

sentinel failover-timeout mymaster 180000 #主节点出现故障时,故障转移超时时间,超出这个时间范围代表切换失败,默认是3分钟

sentinel auth-pass mymaster 123456 #redis主节点的密码

(2)启动哨兵

redis-sentinel /etc/sentinel.conf

(3)连接哨兵

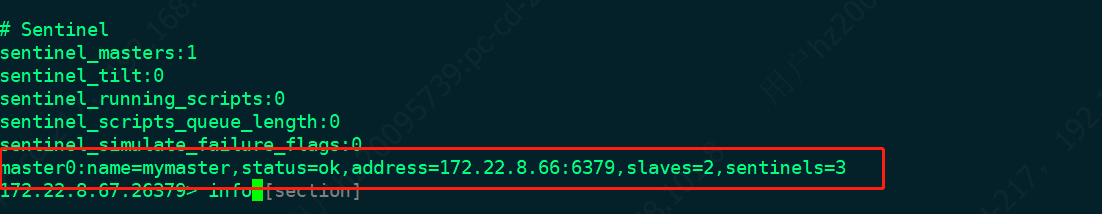

redis-cli -h 172.20.1.171 -a 123456 -p 26379 info

redis-cli -h 172.20.1.172 -a 123456 -p 26379 info

redis-cli -h 172.20.1.173 -a 123456 -p 26379 info

执行info命令后关注Sentinel字段,确定status=ok,并且adress信息和slave数量都是正常的

10、ansible命令安装

ansible-playbook 10.redis.yaml -e "redis_master=192.168.56.102 redis_slave=empty Download_Url=http://192.168.56.102 max_mem=60" -v

十一、Fastdfs安装

1. 上传安装包至服务器

/data/software/fastdfs/V5.05.tar.gz

/data/software/fastdfs/libfastcommon.tar.gz

2. 编译安装fastdfs

#安装编译环境

yum install -y gcc

#解压libfastcommon

tar -zxvf libfastcommon.tar.gz && cd libfastcommon

bash make.sh && bash make.sh install

#解压V5.05.tar.gz

tar -zxvf V5.05.tar.gz && cd fastdfs-5.05

bash make.sh && bash make.sh install

3. 添加至systemctl管理

vim /usr/lib/systemd/system/fdfs_trackerd.service

[Unit]

After=network.target

[Service]

User=root

Type=forking

PIDFile=/data/fastdfs/tracker/data/fdfs_trackerd.pid

ExecStop=/bin/kill -s QUIT $MAINPID

ExecStopPost=/usr/bin/rm -f /data/fastdfs/tracker/data/fdfs_trackerd.pid

PrivateTmp=true

ExecStart=/usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf start

ExecStartPost=/bin/sleep 0.1

LimitNOFILE=65535

LimitNPROC=65535

LimitCORE=infinity

[Install]

WantedBy=multi-user.target

4. 编辑/etc/fdfs/storage_ids.conf

#解压配置文件至/etc目录

tar -zxvf fdfs.tar.gz -C /etc

#修改/etc/fdfs/storage_ids.conf,替换ip地址

#替换其余conf文件的地址

sed -i 's/tracker_server=trackerd2/#tracker_server=trackerd2/g' ./*

sed -i 's/tracker_server=10.100.16.20/#tracker_server=当前主机IP地址/g' ./*

5. 创建目录

mkdir -p /data/fastdfs/client/ /data/fastdfs/file1/ /data/fastdfs/policy1/ /data/fastdfs/privacy1/ /data/fastdfs/storage /data/fastdfs/storage1/ /data/fastdfs/storage2/ /data/fastdfs/tmp1/ /data/fastdfs/tracker/ /data/fastdfs/voice1/

6. 启动

#启动tracker

systemctl daemon-reload

systemctl start fdfs_trackerd

#启动storage

/usr/bin/fdfs_storaged /etc/fdfs/tmp1.conf start &

/usr/bin/fdfs_storaged /etc/fdfs/file1.conf start &

/usr/bin/fdfs_storaged /etc/fdfs/policy1.conf start &

/usr/bin/fdfs_storaged /etc/fdfs/privacy1.conf start &

/usr/bin/fdfs_storaged /etc/fdfs/voice1.conf start &

7. ansible命令安装

ansible-playbook 10.fastdfs.yaml -e "fastdfs_host=192.168.56.102 Download_Url=http://192.168.56.102" -v

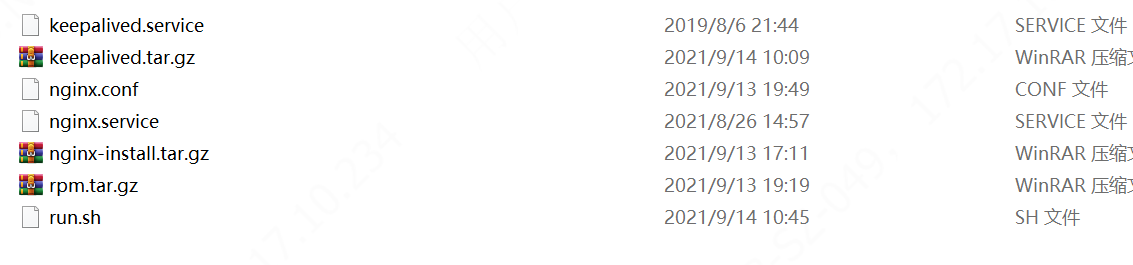

十二、Nginx安装

1、下载解压安装包

cd /usr/local/src

wget https://hz-package.hzins.com/nginx.tar.gz

tar -xvzf nginx.tar.gz

2、执行安装脚本

bash run.sh

3、systemctl管理

将上面2个文件放入/lib/systemd/system下

mv nginx.service /lib/systemd/system/

mv keepalived.service /lib/systemd/system/

启动nginx

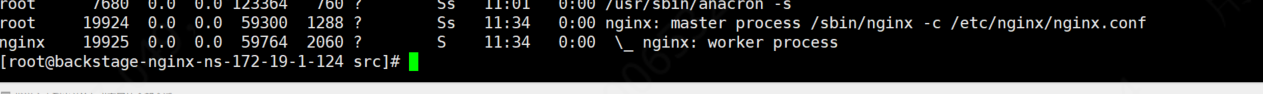

systemctl start nginx

4、测试访问nginx

nginx基础已成功搭建。

5、ansible命令安装

ansible-playbook 12.tengine.yaml -e "tengine_host=192.168.56.102 Download_Url=http://192.168.56.102 nginx_port=81" --tags="insNG2.3"