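Certificates in a cluster built with kubeadm are valid for one year by default. Renewing them is quick, but the real danger is noticing only after something has already broken; as a professional brick-hauling engineer, you are busy every day.

For that reason, monitoring the validity of the cluster certificates is a must. Prometheus is the de facto standard for cloud-native monitoring, so it would be ideal if it could track certificate expiry and alert in time.

ssl_exporter does exactly that. ssl_exporter is a Prometheus exporter that provides several kinds of SSL checks, including metrics for the start and end of validity of HTTPS certificates, the same for certificates stored in files, and OCSP status.

Let's use it to monitor the validity of the cluster certificates.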

Installation

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        name: ssl-exporter
      name: ssl-exporter
    spec:
      ports:
      - name: ssl-exporter
        protocol: TCP
        port: 9219
        targetPort: 9219
      selector:
        app: ssl-exporter
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ssl-exporter
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: ssl-exporter
      template:
        metadata:
          name: ssl-exporter
          labels:
            app: ssl-exporter
        spec:
          initContainers:
          # Install kube ca cert as a root CA
          - name: ca
            image: alpine
            command:
            - sh
            - -c
            - |
              set -e
              apk add --update ca-certificates
              cp /var/run/secrets/kubernetes.io/serviceaccount/ca.crt /usr/local/share/ca-certificates/kube-ca.crt
              update-ca-certificates
              cp /etc/ssl/certs/* /ssl-certs
            volumeMounts:
            - name: ssl-certs
              mountPath: /ssl-certs
          containers:
          - name: ssl-exporter
            image: ribbybibby/ssl-exporter:v0.6.0
            ports:
            - name: tcp
              containerPort: 9219
            volumeMounts:
            - name: ssl-certs
              mountPath: /etc/ssl/certs
          volumes:
          - name: ssl-certs
            emptyDir: {}

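Run kubectl apply -n monitoring -f . to install it. The manifest itself sets no namespace, while the later steps expect the exporter to live in the monitoring namespace.

Wait until the Pod is running normally, as shown below: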

    # kubectl get po -n monitoring -l app=ssl-exporter
    NAME                            READY   STATUS    RESTARTS   AGE
    ssl-exporter-7ff4759679-f4qbs   1/1     Running   0          21m

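Next, configure the Prometheus scrape rule.

My Prometheus is deployed with Prometheus Operator, so I add the scrape job through additional scrape configs.

First create a file named prometheus-additional.yaml with the following content: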

    - job_name: ssl-exporter
      metrics_path: /probe
      static_configs:
      - targets:
        - kubernetes.default.svc:443
      relabel_configs:
      - source_labels: [__address__]
        target_label: __param_target
      - source_labels: [__param_target]
        target_label: instance
      - target_label: __address__
        replacement: ssl-exporter.monitoring:9219
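The relabel_configs section is what turns the listed target into a probe request against the exporter. As a rough illustration, the Python sketch below walks through the three steps by hand. It is a simplified model of the default replace action, not Prometheus's own implementation, and the function names apply_relabeling and scrape_url are made up for this example.

```python
from urllib.parse import urlencode


def apply_relabeling(labels):
    """Apply the three relabel steps of the ssl-exporter job to a label set."""
    labels = dict(labels)  # work on a copy
    # Step 1: copy the original target address into the ?target= URL parameter.
    labels["__param_target"] = labels["__address__"]
    # Step 2: keep the probed endpoint as the instance label of the scraped series.
    labels["instance"] = labels["__param_target"]
    # Step 3: send the actual scrape to the exporter's Service instead.
    labels["__address__"] = "ssl-exporter.monitoring:9219"
    return labels


def scrape_url(labels, metrics_path="/probe", scheme="http"):
    """Build the URL that would be scraped for the relabeled target."""
    params = {
        name[len("__param_"):]: value
        for name, value in labels.items()
        if name.startswith("__param_")
    }
    return f"{scheme}://{labels['__address__']}{metrics_path}?{urlencode(params)}"


relabeled = apply_relabeling({"__address__": "kubernetes.default.svc:443"})
print(relabeled["instance"])
print(scrape_url(relabeled))
```

In other words, Prometheus talks to ssl-exporter.monitoring:9219, the exporter probes kubernetes.default.svc:443, and the resulting series carry the probed endpoint as their instance label.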

Then create the secret with the following commands:

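    # --ignore-not-found keeps the first run from failing when the secret does not exist yet
    kubectl delete secret additional-config -n monitoring --ignore-not-found
    kubectl -n monitoring create secret generic additional-config --from-file=prometheus-additional.yaml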

Then edit prometheus-prometheus.yaml and add the following under spec:

    additionalScrapeConfigs:
      name: additional-config
      key: prometheus-additional.yaml

The complete prometheus-prometheus.yaml looks like this:

    apiVersion: monitoring.coreos.com/v1
    kind: Prometheus
    metadata:
      labels:
        prometheus: k8s
      name: k8s
      namespace: monitoring
    spec:
      alerting:
        alertmanagers:
        - name: alertmanager-main
          namespace: monitoring
          port: web
      baseImage: quay.io/prometheus/prometheus
      nodeSelector:
        kubernetes.io/os: linux
      podMonitorNamespaceSelector: {}
      podMonitorSelector: {}
      replicas: 2
      resources:
        requests:
          memory: 400Mi
      ruleSelector:
        matchLabels:
          prometheus: k8s
          role: alert-rules
      securityContext:
        fsGroup: 2000
        runAsNonRoot: true
        runAsUser: 1000
      additionalScrapeConfigs:
        name: additional-config
        key: prometheus-additional.yaml
      serviceAccountName: prometheus-k8s
      serviceMonitorNamespaceSelector: {}
      serviceMonitorSelector: {}
      version: v2.11.0
      storage:
        volumeClaimTemplate:
          spec:
            storageClassName: managed-nfs-storage
            resources:
              requests:
                storage: 10Gi

Then re-apply prometheus-prometheus.yaml:

    kubectl apply -f prometheus-prometheus.yaml

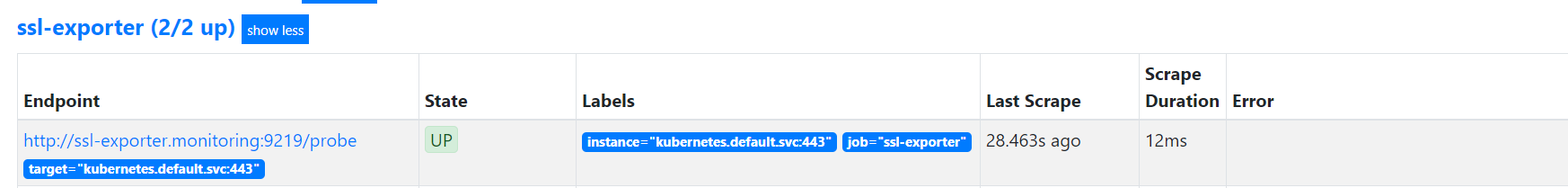

现在可以在prometheus的web界面看到正常的抓取任务了,如下:

The query (ssl_cert_not_after - time()) / 3600 / 24 then shows how many days remain before each certificate expires.

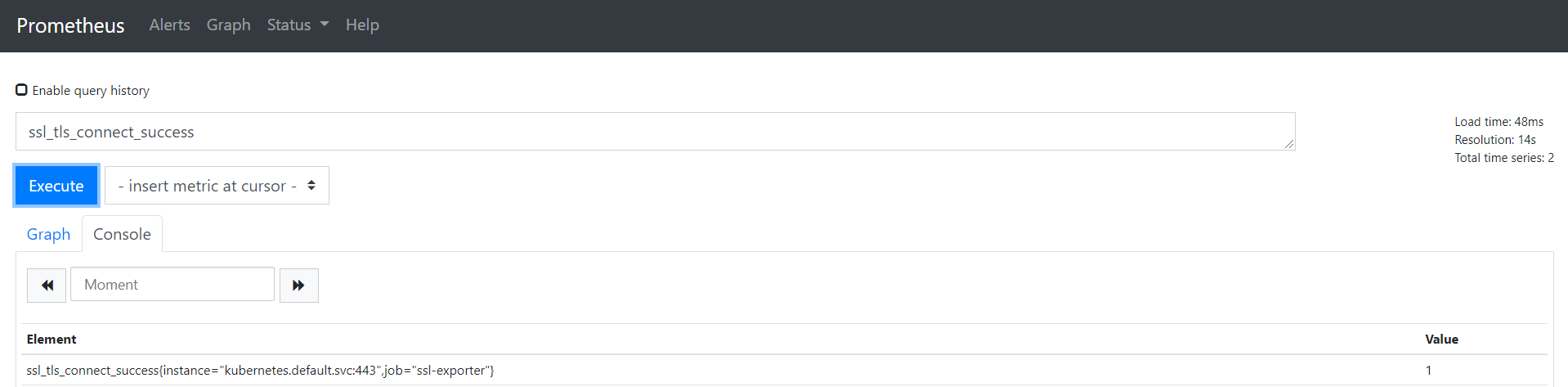
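To make the arithmetic in that query concrete, here is a minimal Python sketch of the same calculation. Both ssl_cert_not_after and time() are Unix timestamps in seconds, so their difference divided by 3600 and then by 24 gives days. The function name days_until_expiry and the sample timestamps are invented for illustration.

```python
import time

SECONDS_PER_DAY = 3600 * 24


def days_until_expiry(not_after, now=None):
    """Mirror (ssl_cert_not_after - time()) / 3600 / 24 from the query.

    not_after: certificate expiry as a Unix timestamp in seconds.
    now: current Unix timestamp; defaults to the wall clock, like time() in PromQL.
    """
    if now is None:
        now = time.time()
    return (not_after - now) / 3600 / 24


# Hypothetical values: a certificate that expires 45.5 days after "now".
now = 1_700_000_000
not_after = now + 45.5 * SECONDS_PER_DAY
print(f"{days_until_expiry(not_after, now):.1f} days left")  # prints "45.5 days left"
```

A negative result means the certificate has already expired.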

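The ssl_tls_connect_success metric shows whether the TLS connection succeeded.

Alerting

ssl_exporter is now installed and collecting data. Next, configure some alert rules so that the operations team learns about problems quickly.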

    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: monitoring-ssl-tls-rules
      namespace: monitoring
      labels:
        prometheus: k8s
        role: alert-rules
    spec:
      groups:
      - name: check_ssl_validity
        rules:
        - alert: "K8S cluster certificate expires within 30 days"
          expr: (ssl_cert_not_after - time()) / 3600 / 24 < 30
          for: 1h
          labels:
            severity: critical
          annotations:
            description: 'The K8S cluster certificate expires in {{ printf "%.1f" $value }} days, please renew it as soon as possible'
            summary: "K8S cluster certificate expiry warning"
      - name: ssl_connect_status
        rules:
        - alert: "K8S cluster certificate availability problem"
          expr: ssl_tls_connect_success == 0
          for: 1m
          labels:
            severity: critical
          annotations:
            summary: "K8S cluster certificate connection failure"
            description: "K8S cluster {{ $labels.instance }} certificate connection failure"

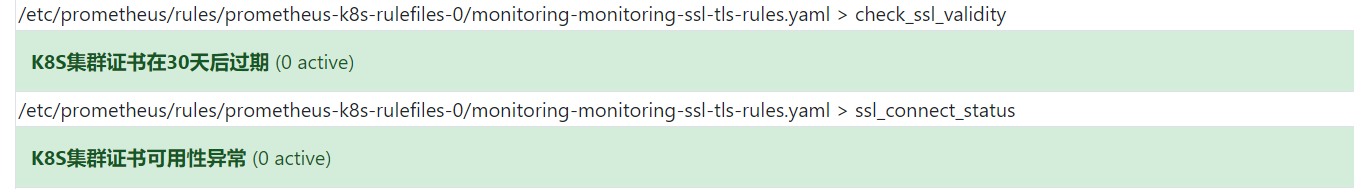

如下展示规则正常,在异常的时候就可以接收到告警了。
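The for: field in the alert rules means an alert fires only after its expression has stayed true for that long; until then it is pending. Below is a rough Python model of that behaviour for the expiry rule (threshold of 30 days, for: 1h). It is a simplified sketch, not the actual Prometheus evaluation code, and the function alert_state and the sample data are hypothetical.

```python
def alert_state(samples, threshold_days=30.0, hold_seconds=3600):
    """Return the alert state after a series of rule evaluations.

    samples: list of (eval_time_seconds, days_remaining) tuples in time order.
    The alert is pending while days_remaining < threshold_days and becomes
    firing once that has held for at least hold_seconds without interruption.
    """
    active_since = None
    state = "inactive"
    for eval_time, days_remaining in samples:
        if days_remaining < threshold_days:
            if active_since is None:
                active_since = eval_time  # condition just became true
            if eval_time - active_since >= hold_seconds:
                state = "firing"
            else:
                state = "pending"
        else:
            # Condition no longer holds: the hold timer starts over.
            active_since = None
            state = "inactive"
    return state


# Hypothetical evaluations every 30 minutes with just under 30 days left.
print(alert_state([(0, 29.9), (1800, 29.9)]))                # pending
print(alert_state([(0, 29.9), (1800, 29.9), (3600, 29.8)]))  # firing
```

The same idea applies to the connection rule, only with a one-minute hold and the condition ssl_tls_connect_success == 0.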