Installation

    export NODE1=192.168.1.21

    docker volume create --name etcd-data
    export DATA_DIR="etcd-data"

    REGISTRY=quay.io/coreos/etcd

    docker run -d \
      -p 2379:2379 \
      -p 2380:2380 \
      --volume=${DATA_DIR}:/etcd-data \
      --name etcd ${REGISTRY}:latest \
      /usr/local/bin/etcd \
      --data-dir=/etcd-data --name node1 \
      --initial-advertise-peer-urls http://${NODE1}:2380 --listen-peer-urls http://0.0.0.0:2380 \
      --advertise-client-urls http://${NODE1}:2379 --listen-client-urls http://0.0.0.0:2379 \
      --initial-cluster node1=http://${NODE1}:2380
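The `--initial-cluster` value is a comma-separated list of `name=peer-url` entries, one per member. As a minimal sketch, the helper below assembles that string from `name=ip` pairs. The function name `build_initial_cluster` and the extra node addresses are hypothetical, and it assumes every member uses plain HTTP on peer port 2380, as the single-node command above does.

```shell
#!/bin/sh
# Build the value for --initial-cluster from "name=ip" arguments.
# Assumption: all members serve peer traffic over http on port 2380.
build_initial_cluster() {
  result=""
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first "="
    ip=${pair#*=}      # text after the first "="
    entry="${name}=http://${ip}:2380"
    if [ -z "$result" ]; then
      result="$entry"
    else
      result="${result},${entry}"
    fi
  done
  printf '%s\n' "$result"
}

# Single node, matching the value used in the docker run command
build_initial_cluster node1=192.168.1.21

# Hypothetical three-node layout (placeholder addresses)
build_initial_cluster node1=192.168.1.21 node2=192.168.1.22 node3=192.168.1.23
```

The result can be passed to each member as `--initial-cluster "$(build_initial_cluster ...)"`.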

Access

    # Use API version 3
    export ETCDCTL_API=3
    ENDPOINTS=127.0.0.1:2379
    etcdctl --endpoints=$ENDPOINTS member list
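Every command in these notes repeats `--endpoints=$ENDPOINTS`. One way to cut the repetition is a small wrapper function. This is only a sketch: `ectl` is a hypothetical name, not something etcd ships, and the default endpoint is the one used above.

```shell
#!/bin/sh
# Default to the local endpoint unless ENDPOINTS is already set.
ENDPOINTS=${ENDPOINTS:-127.0.0.1:2379}

# ectl: hypothetical convenience wrapper around etcdctl.
# It pins the v3 API and the endpoint list, then forwards all arguments.
ectl() {
  ETCDCTL_API=3 etcdctl --endpoints="$ENDPOINTS" "$@"
}

# Usage against a running cluster (not executed here):
#   ectl member list
#   ectl put foo "Hello World!"
```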

Usage

    # Basic usage
    etcdctl --endpoints=$ENDPOINTS put foo "Hello World!"
    etcdctl --endpoints=$ENDPOINTS get foo
    etcdctl --endpoints=$ENDPOINTS --write-out="json" get foo

    # Prefix matching
    etcdctl --endpoints=$ENDPOINTS put web1 value1
    etcdctl --endpoints=$ENDPOINTS put web2 value2
    etcdctl --endpoints=$ENDPOINTS put web3 value3
    etcdctl --endpoints=$ENDPOINTS get web --prefix

    # Delete
    etcdctl --endpoints=$ENDPOINTS put key myvalue
    etcdctl --endpoints=$ENDPOINTS del key
    etcdctl --endpoints=$ENDPOINTS put k1 value1
    etcdctl --endpoints=$ENDPOINTS put k2 value2
    etcdctl --endpoints=$ENDPOINTS del k --prefix

    # Interactive transaction
    etcdctl --endpoints=$ENDPOINTS put user1 bad
    etcdctl --endpoints=$ENDPOINTS txn --interactive

    compares:
    value("user1") = "bad"

    success requests (get, put, delete):
    del user1

    failure requests (get, put, delete):
    put user1 good

    # Leases
    etcdctl --endpoints=$ENDPOINTS lease grant 300
    # lease 2be7547fbc6a5afa granted with TTL(300s)
    etcdctl --endpoints=$ENDPOINTS put sample value --lease=2be7547fbc6a5afa
    etcdctl --endpoints=$ENDPOINTS get sample
    etcdctl --endpoints=$ENDPOINTS lease keep-alive 2be7547fbc6a5afa
    etcdctl --endpoints=$ENDPOINTS lease revoke 2be7547fbc6a5afa
    # or after 300 seconds
    etcdctl --endpoints=$ENDPOINTS get sample

    # Locks
    etcdctl --endpoints=$ENDPOINTS lock mutex1
    # another client with the same name blocks
    etcdctl --endpoints=$ENDPOINTS lock mutex1

    # Leader election
    etcdctl --endpoints=$ENDPOINTS elect one p1
    # another client with the same name blocks
    etcdctl --endpoints=$ENDPOINTS elect one p2

    # etcd status
    etcdctl --write-out=table --endpoints=$ENDPOINTS endpoint status

    # Back up etcd
    ENDPOINTS=$HOST_1:2379
    etcdctl --endpoints=$ENDPOINTS snapshot save my.db
    etcdctl --write-out=table --endpoints=$ENDPOINTS snapshot status my.db
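The lease commands above copy the ID `2be7547fbc6a5afa` by hand from the output of `lease grant`. In a script the ID could instead be captured from that output. The sketch below assumes the output has the form quoted above (`lease <id> granted with TTL(<n>s)`). The helper name `parse_lease_id` is hypothetical, and the demonstration runs offline on the sample line rather than against a cluster.

```shell
#!/bin/sh
# Extract the lease ID from a line such as:
#   lease 2be7547fbc6a5afa granted with TTL(300s)
# Prints nothing if the line does not have that shape.
parse_lease_id() {
  printf '%s\n' "$1" | awk '$1 == "lease" && $3 == "granted" { print $2 }'
}

# Offline demonstration using the sample output from the notes
sample='lease 2be7547fbc6a5afa granted with TTL(300s)'
LEASE_ID=$(parse_lease_id "$sample")
echo "$LEASE_ID"

# Against a live cluster this would become (not executed here):
#   LEASE_ID=$(parse_lease_id "$(etcdctl --endpoints=$ENDPOINTS lease grant 300)")
#   etcdctl --endpoints=$ENDPOINTS put sample value --lease=$LEASE_ID
```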

Kubernetes etcd Backup

https://kubernetes.io/zh/docs/tasks/administer-cluster/configure-upgrade-etcd/

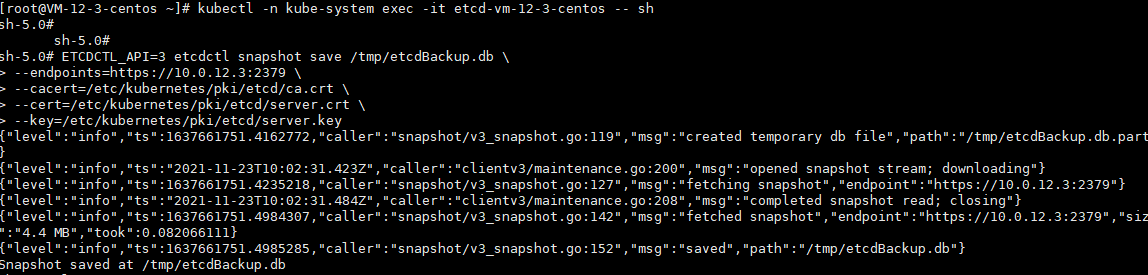

    kubectl -n kube-system get pods etcd-name -o=jsonpath='{.spec.containers[0].command}' | jq

    ETCDCTL_API=3 etcdctl snapshot save <backup-file-location> \
      --endpoints=https://127.0.0.1:2379 \
      --cacert=<trusted-ca-file> \
      --cert=<cert-file> \
      --key=<key-file>

    # Enter the container
    kubectl -n kube-system exec -it etcd-vm-12-3-centos -- sh

    ETCDCTL_API=3 etcdctl snapshot save /tmp/etcdBackup.db \
      --endpoints=https://10.0.12.3:2379 \
      --cacert=/etc/kubernetes/pki/etcd/ca.crt \
      --cert=/etc/kubernetes/pki/etcd/server.crt \
      --key=/etc/kubernetes/pki/etcd/server.key

    # Check the snapshot status
    ETCDCTL_API=3 \
    etcdctl --write-out=table snapshot status /tmp/etcdBackup.db
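The first `kubectl` command prints the etcd server's command line, which is where the `<trusted-ca-file>`, `<cert-file>`, and `<key-file>` placeholders come from: they correspond to the server flags `--trusted-ca-file`, `--cert-file`, and `--key-file`. As a rough sketch, the filter below rewrites those server flags into the matching `etcdctl` flags. The name `etcd_flags_to_etcdctl` is hypothetical, and the sample arguments are illustrative paths of the kind seen on a kubeadm node, not output captured from a real cluster.

```shell
#!/bin/sh
# Read etcd server arguments (one per line) on stdin and print the
# equivalent etcdctl TLS flags. Peer flags such as --peer-cert-file
# do not match the anchored patterns and are dropped.
etcd_flags_to_etcdctl() {
  sed -n \
    -e 's/^--trusted-ca-file=/--cacert=/p' \
    -e 's/^--cert-file=/--cert=/p' \
    -e 's/^--key-file=/--key=/p'
}

# Offline demonstration with illustrative arguments
printf '%s\n' \
  '--cert-file=/etc/kubernetes/pki/etcd/server.crt' \
  '--key-file=/etc/kubernetes/pki/etcd/server.key' \
  '--peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt' \
  '--trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt' |
  etcd_flags_to_etcdctl

# With a live cluster, the kubectl output could be fed in after turning the
# JSON array into lines (not executed here):
#   kubectl -n kube-system get pods etcd-name \
#     -o=jsonpath='{.spec.containers[0].command}' | jq -r '.[]' | etcd_flags_to_etcdctl
```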

Restore

Note: If any API servers are running in the cluster, do not attempt to restore etcd instances. Instead, follow these steps to restore etcd:

- Stop all API server instances

- Restore state in all etcd instances

- Restart all API server instances

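We also recommend restarting all components (for example kube-scheduler, kube-controller-manager, and kubelet) to ensure that they do not rely on stale data. Note that in practice the restore takes some time. During the restore, critical components will lose their leader lock and restart themselves.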

    export ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt
    export ETCDCTL_CERT=/etc/kubernetes/pki/etcd/server.crt
    export ETCDCTL_KEY=/etc/kubernetes/pki/etcd/server.key
    export ETCDCTL_API=3
    etcdctl snapshot restore /tmp/etcdBackup.db
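Run without `--data-dir`, `snapshot restore` writes the restored data to `default.etcd` under the current working directory, which is easy to overlook. The sketch below makes the target explicit and refuses to touch an existing path. The function name `restore_snapshot` and the directory `/var/lib/etcd-restore` are hypothetical. Pointing the etcd member at the restored directory afterwards is a separate step that this sketch does not cover.

```shell
#!/bin/sh
# Restore a snapshot into an explicit, not-yet-existing data directory.
# Returns non-zero without calling etcdctl if the snapshot file is missing
# or the target path already exists.
restore_snapshot() {
  snapshot=$1
  data_dir=$2
  if [ ! -f "$snapshot" ]; then
    echo "snapshot not found: $snapshot" >&2
    return 1
  fi
  if [ -e "$data_dir" ]; then
    echo "refusing to restore into existing path: $data_dir" >&2
    return 1
  fi
  etcdctl snapshot restore "$snapshot" --data-dir "$data_dir"
}

# Usage on the etcd host (not executed here; the target path is a placeholder):
#   export ETCDCTL_API=3
#   restore_snapshot /tmp/etcdBackup.db /var/lib/etcd-restore
```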