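This installation guide is based on a Kubernetes training video series.

Part 1: Basic environment configuration

1. Basic server environment configuration

1.1) Configure hostnames in /etc/hosts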

[root@master01 ~]# cat /etc/hosts
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
127.0.0.1 Aliyun Aliyun
172.17.94.49 master01
172.17.94.50 master02
172.17.94.47 master03
172.17.94.48 worker01
172.17.94.51 worker02
172.17.94.41 k8s-master-slb
172.17.94.52 manager
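If the same entries have to be written on every node, a small idempotent helper avoids duplicate lines when the step is re-run. This is a sketch: `add_host_entry` and `add_cluster_hosts` are hypothetical names, and the address list assumes master01 is 172.17.94.49, the address used by the etcd and kube-apiserver configuration later in this guide.

```shell
#!/bin/bash
# Append "IP hostname" to a hosts file unless that hostname is already mapped.
# The file defaults to /etc/hosts; point it at a scratch copy to try it out.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  # Any non-comment line that already lists the hostname as a whole word counts.
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Cluster entries from section 1.1 (assumption: master01 = 172.17.94.49).
CLUSTER_HOSTS="172.17.94.49:master01 172.17.94.50:master02 172.17.94.47:master03
172.17.94.48:worker01 172.17.94.51:worker02 172.17.94.41:k8s-master-slb
172.17.94.52:manager"

add_cluster_hosts() {
  local file=${1:-/etc/hosts} entry
  for entry in $CLUSTER_HOSTS; do
    add_host_entry "${entry%%:*}" "${entry#*:}" "$file"
  done
}
```

On each node, `add_cluster_hosts` updates /etc/hosts; to preview the result first, run it against a copy, e.g. `cp /etc/hosts /tmp/hosts.test && add_cluster_hosts /tmp/hosts.test`.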

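1.2) Configure the yum repositories and install basic tools

# Configure the yum repositories to use the Aliyun mirrors (skipped in this guide, since the servers are Aliyun ECS instances)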

yum -y install wget vim

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

yum makecache fast

# Install the base packages

yum -y install git wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release openssh-server socat ipvsadm conntrack ntpdate ipset libseccomp chrony

1.3) Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

yum install iptables-services -y

sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

setenforce 0

systemctl disable --now NetworkManager

systemctl disable --now dnsmasq

1.4) Disable swap

swapoff -a

sed -i 's/.*swap.*/#&/' /etc/fstab

sysctl -w vm.swappiness=0

1.5) Configure resource limits

[ $(cat /etc/security/limits.conf|grep '* soft nproc 65535'|wc -l) -eq 0 ] && echo '* soft nproc 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* hard nproc 65535'|wc -l) -eq 0 ] && echo '* hard nproc 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* soft nofile 65535'|wc -l) -eq 0 ] && echo '* soft nofile 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* hard nofile 65535'|wc -l) -eq 0 ] && echo '* hard nofile 65535' >>/etc/security/limits.conf

ulimit -SHn 65535

1.6) Tune kernel parameters

cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

# Apply. The net.bridge.* keys only exist once the br_netfilter module is loaded, so load it first (and on every boot).

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

sysctl --system
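To confirm that `sysctl --system` actually applied every setting, the file can be compared against /proc/sys. A minimal sketch, assuming the `key = value` layout used above; `check_sysctl_file` and `trim` are hypothetical helpers, not part of procps.

```shell
#!/bin/bash
# Compare each "key = value" line of a sysctl.d file with the live kernel
# value under /proc/sys. proc_root is a parameter only so the function can be
# tried against a fake directory tree; on a node, leave it at /proc/sys.
# Values are compared as trimmed strings, so multi-value keys (tab-separated
# in /proc) would need extra normalising.
trim() {
  printf '%s' "$1" | sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//'
}

check_sysctl_file() {
  local file=$1 proc_root=${2:-/proc/sys} rc=0 key value path live
  while IFS='=' read -r key value; do
    key=$(trim "$key")
    value=$(trim "$value")
    case $key in ''|'#'*|';'*) continue ;; esac   # blank lines and comments
    path="$proc_root/$(printf '%s' "$key" | tr . /)"
    if [ ! -e "$path" ]; then
      echo "MISSING $key"
      rc=1
      continue
    fi
    live=$(trim "$(cat "$path")")
    if [ "$live" != "$value" ]; then
      echo "DIFF $key: expected '$value', got '$live'"
      rc=1
    fi
  done < "$file"
  return "$rc"
}
```

`check_sysctl_file /etc/sysctl.d/k8s.conf` prints nothing and returns 0 when everything matches. A MISSING line usually means the key does not exist on the running kernel or the module that provides it (for example nf_conntrack or br_netfilter) is not loaded yet.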

1.7) Set up passwordless SSH from the manager node to the other nodes

ssh-keygen -t rsa

for i in master01 master02 master03 worker01 worker02 haproxy01 haproxy02;do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i;done

1.8) Download the software and deployment files on the manager node

# Download the deployment files

cd /root

git clone https://gitee.com/xhaihua/k8s-ha-install.git

cd k8s-ha-install

git checkout -b k8s-1.19

git pull origin k8s-1.19

# Prepare the Kubernetes 1.19.5 binaries

cd /usr/local/src

wget https://storage.googleapis.com/kubernetes-release/release/v1.19.5/kubernetes-server-linux-amd64.tar.gz

tar xf kubernetes-server-linux-amd64.tar.gz

cp kubernetes/server/bin/kube-apiserver /usr/local/bin/

cp kubernetes/server/bin/kube-controller-manager /usr/local/bin/

cp kubernetes/server/bin/kube-scheduler /usr/local/bin/

cp kubernetes/server/bin/kubelet /usr/local/bin/

cp kubernetes/server/bin/kube-proxy /usr/local/bin/

cp kubernetes/server/bin/kubectl /usr/local/bin/

# Copy the binaries to the other nodes (the heredoc delimiter is quoted so that $MasterNodes, $WorkerNodes and $NODE are written into the script instead of being expanded while it is created)

cat > /usr/local/src/scp01.sh <<-'EOF'
#!/bin/bash
MasterNodes='master01 master02 master03'
WorkerNodes="worker01 worker02"
for NODE in $MasterNodes;do
    echo $NODE
    scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin
done
for NODE in $WorkerNodes;do
    echo $NODE
    scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin
done
EOF

sh /usr/local/src/scp01.sh

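1.9) Upgrade the kernel and packages on all servers

CentOS 7 needs its kernel upgraded to 4.18 or later.

# Download on the manager node, then copy to the other nodes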

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

for i in master01 master02 master03 worker01 worker02;do scp kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm root@$i:/usr/local/src;done

# Install the kernel on all nodes

cd /usr/local/src

yum -y localinstall kernel-ml*

# Make the new kernel the default boot entry (all nodes)

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Reboot and check the running kernel

init 6

[root@worker02 ~]# uname -r

4.19.12-1.el7.elrepo.x86_64
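The kernel check after the reboot can be scripted so that it fails loudly on any node still running the stock kernel. A sketch relying on GNU `sort -V`; `kernel_at_least` is a hypothetical name.

```shell
#!/bin/bash
# Succeed if kernel release $1 is at least version $2.
# The "-1.el7.elrepo.x86_64"-style suffix is stripped before comparing,
# and GNU sort -V decides which version string is older.
kernel_at_least() {
  local current=${1%%-*} required=$2
  [ "$(printf '%s\n%s\n' "$required" "$current" | sort -V | head -n1)" = "$required" ]
}
```

On each node: `kernel_at_least "$(uname -r)" 4.18 || echo "kernel too old"`.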

# Upgrade the remaining packages

yum -y upgrade

1.10) Load the IPVS kernel modules

yum -y install ipvsadm ipset sysstat conntrack libseccomp

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack ipip ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT"

for kernel_module in \${ipvs_modules}; do

/sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1

if [ \$? -eq 0 ];then

/sbin/modprobe \${kernel_module}

fi

done

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs

1.11) Install cfssl (manager node)

# Install cfssl

wget http://download.51yuki.cn/cfssl_linux-amd64

wget http://download.51yuki.cn/cfssljson_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

1.12) Create directories (all nodes)

mkdir -p /opt/cni/bin /etc/kubernetes/pki /etc/etcd/ssl /etc/kubernetes/manifests/

mkdir -p /etc/systemd/system/kubelet.service.d /var/lib/kubelet /var/log/kubernetes

mkdir -p /etc/cni/net.d

2) Install and configure docker-ce

2.1) Install docker-ce

(on all nodes except the manager node)

yum -y install yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list docker-ce.x86_64 --showduplicates | sort -r

yum -y install docker-ce-19.03.9-3.el7

2.2) Configure docker-ce

mkdir /etc/docker /data/docker -p

cat > /etc/docker/daemon.json <<EOF
{
  "registry-mirrors": ["https://ziqva2l2.mirror.aliyuncs.com"],
  "data-root": "/data/docker",
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}
EOF

2.3) Start docker-ce

systemctl enable docker && systemctl restart docker && systemctl status docker

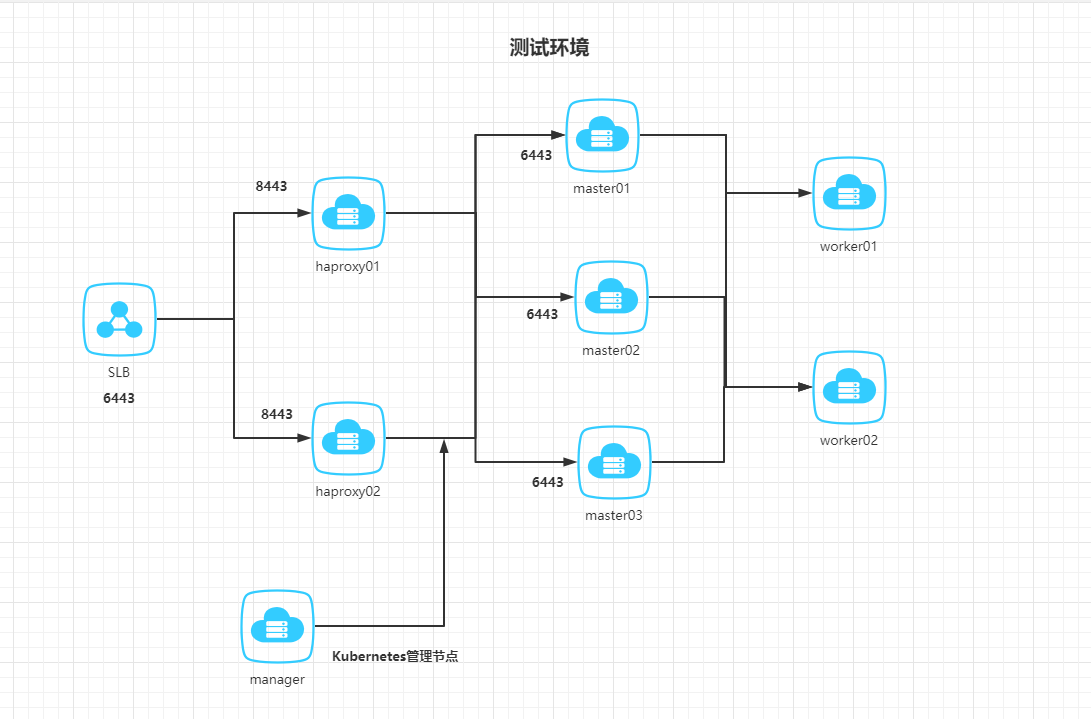

Part 2: Deploy a highly available Kubernetes 1.19.5 cluster

1. Install and deploy the etcd cluster

1.1) Generate the certificates (on the manager node)

mkdir -p /etc/etcd/ssl /etc/kubernetes

cd /root/k8s-ha-install/pki/

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

cfssl gencert -ca=/etc/etcd/ssl/etcd-ca.pem -ca-key=/etc/etcd/ssl/etcd-ca-key.pem -config=ca-config.json --hostname=127.0.0.1,master01,master02,master03,172.17.94.49,172.17.94.50,172.17.94.47 -profile=kubernetes etcd-csr.json |cfssljson -bare /etc/etcd/ssl/etcd
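Before distributing the etcd certificate, it is worth checking that every member hostname and IP ended up in its Subject Alternative Names, since a missing SAN only shows up later as a TLS error between peers. A sketch using `openssl x509 -text`; the helper names are hypothetical, and the parsing assumes OpenSSL's usual `DNS:` / `IP Address:` output format.

```shell
#!/bin/bash
# Print the Subject Alternative Names of a PEM certificate, one per line,
# with the "DNS:" / "IP Address:" prefixes removed.
cert_sans() {
  openssl x509 -in "$1" -noout -text \
    | grep -A1 'Subject Alternative Name' \
    | tail -n1 \
    | tr ',' '\n' \
    | sed -e 's/^ *//' -e 's/^IP Address://' -e 's/^DNS://'
}

# Return non-zero (and name it) if any of the remaining arguments is not a SAN
# of certificate $1.
cert_has_sans() {
  local cert=$1 sans san
  shift
  sans=$(cert_sans "$cert")
  for san in "$@"; do
    printf '%s\n' "$sans" | grep -qxF "$san" || { echo "missing SAN: $san"; return 1; }
  done
}
```

For example `cert_has_sans /etc/etcd/ssl/etcd.pem 127.0.0.1 master01 master02 master03 172.17.94.49 172.17.94.50 172.17.94.47`; the same check applies to /etc/kubernetes/pki/apiserver.pem in 2.1.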

cd /etc/etcd/ssl/

for i in master01 master02 master03;do scp /etc/etcd/ssl/* root@$i:/etc/etcd/ssl/;done

1.2) Download the binaries and push them to the master nodes

Run on the manager node:

cd /usr/local/src

wget https://github.com/etcd-io/etcd/releases/download/v3.4.12/etcd-v3.4.12-linux-amd64.tar.gz

tar xf etcd-v3.4.12-linux-amd64.tar.gz

cd etcd-v3.4.12-linux-amd64

for i in master01 master02 master03;do scp etcd etcdctl root@$i:/usr/local/bin;done

1.3) Configure the etcd service

# Create the directories (on every master node)

mkdir -p /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

mkdir -p /data/etcd/cfg /var/lib/etcd

chmod -R 700 /var/lib/etcd

# Write the config file (the IP addresses and the name differ on each master node)

#master01

cat > /data/etcd/cfg/etcd.config.yml <<-EOF
name: 'master01'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://172.17.94.49:2380'
listen-client-urls: 'https://172.17.94.49:2379,https://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://172.17.94.49:2380'
advertise-client-urls: 'https://172.17.94.49:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-svc:
initial-cluster: 'master01=https://172.17.94.49:2380,master02=https://172.17.94.50:2380,master03=https://172.17.94.47:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1800
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF

#master02

cat > /data/etcd/cfg/etcd.config.yml <<-EOF
name: 'master02'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://172.17.94.50:2380'
listen-client-urls: 'https://172.17.94.50:2379,https://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://172.17.94.50:2380'
advertise-client-urls: 'https://172.17.94.50:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-svc:
initial-cluster: 'master01=https://172.17.94.49:2380,master02=https://172.17.94.50:2380,master03=https://172.17.94.47:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1800
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF

#master03

cat > /data/etcd/cfg/etcd.config.yml <<-EOF
name: 'master03'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://172.17.94.47:2380'
listen-client-urls: 'https://172.17.94.47:2379,https://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://172.17.94.47:2380'
advertise-client-urls: 'https://172.17.94.47:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-svc:
initial-cluster: 'master01=https://172.17.94.49:2380,master02=https://172.17.94.50:2380,master03=https://172.17.94.47:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1800
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
EOF
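The three files above differ only in the member name and IP address, which is exactly where copy-and-paste mistakes creep in (for example a member name in initial-cluster that does not match `name`). A sketch of a generator, assuming the same paths and cluster layout; `render_etcd_config` is a hypothetical helper, and it deliberately leaves out the empty discovery/CORS keys and the proxy-* timeouts, which have no effect with `proxy: 'off'`.

```shell
#!/bin/bash
# Hypothetical helper: print etcd.config.yml for one member.
# Only the member name and its IP vary; everything else is shared.
INITIAL_CLUSTER='master01=https://172.17.94.49:2380,master02=https://172.17.94.50:2380,master03=https://172.17.94.47:2380'

render_etcd_config() {
  local name=$1 ip=$2 pki=/etc/kubernetes/pki/etcd
  cat <<EOF
name: '${name}'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://${ip}:2380'
listen-client-urls: 'https://${ip}:2379,https://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
initial-advertise-peer-urls: 'https://${ip}:2380'
advertise-client-urls: 'https://${ip}:2379'
initial-cluster: '${INITIAL_CLUSTER}'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
client-transport-security:
  cert-file: '${pki}/etcd.pem'
  key-file: '${pki}/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '${pki}/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '${pki}/etcd.pem'
  key-file: '${pki}/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '${pki}/etcd-ca.pem'
  auto-tls: true
debug: false
log-outputs: [default]
force-new-cluster: false
EOF
}
```

From the manager node, for example: `render_etcd_config master02 172.17.94.50 | ssh root@master02 'cat > /data/etcd/cfg/etcd.config.yml'`.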

1.4) Start etcd and verify

# systemd unit file (identical on all master nodes)

cat > /usr/lib/systemd/system/etcd.service <<-EOF

[Unit]

Description=Etcd Server

Documentation=https://coreos.com/etcd/docs/latest/

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/bin/etcd --config-file=/data/etcd/cfg/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start the etcd service

systemctl daemon-reload

systemctl enable --now etcd.service

# Verify

etcdctl --endpoints='https://172.17.94.49:2379,https://172.17.94.50:2379,https://172.17.94.47:2379' --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table

2. Install and deploy kube-apiserver

2.1) Prepare the certificates and push them to the master nodes

cd /root/k8s-ha-install/pki

cfssl gencert -initca ca-csr.json |cfssljson -bare /etc/kubernetes/pki/ca

# Generate the apiserver certificate. The SAN list includes the SLB address 172.17.94.41 and 10.96.0.1, the first address of the service CIDR, which the kubernetes Service uses.

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json \
  -hostname=10.96.0.1,172.17.94.41,172.17.94.49,172.17.94.50,172.17.94.47,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local \
  -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver

# Generate the front-proxy (API aggregation) certificates

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

# Copy the certificates to the master nodes

for i in master01 master02 master03;do scp /etc/kubernetes/pki/*.pem root@$i:/etc/kubernetes/pki/;done

# Generate the ServiceAccount key pair

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

for i in master01 master02 master03;do scp /etc/kubernetes/pki/sa* root@$i:/etc/kubernetes/pki/;done
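A quick consistency check for the ServiceAccount key pair: if sa.pub were regenerated separately from sa.key, tokens signed by kube-controller-manager would fail validation in kube-apiserver. A sketch; `sa_keypair_matches` is a hypothetical name, and it assumes sa.pub was produced by the `openssl rsa -pubout` command above, so both PEM outputs should be byte-identical.

```shell
#!/bin/bash
# Derive the public key from the private key again and compare it byte for
# byte with the public key file on disk.
sa_keypair_matches() {
  local key=${1:-/etc/kubernetes/pki/sa.key} pub=${2:-/etc/kubernetes/pki/sa.pub}
  openssl rsa -in "$key" -pubout 2>/dev/null | cmp -s - "$pub"
}
```

Run `sa_keypair_matches && echo ok` on the manager node, or on a master after the copy.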

2.2) Prepare the kube-apiserver systemd unit

# Run on the manager node

cat >/usr/lib/systemd/system/kube-apiserver.service <<-EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-apiserver \
      --v=2 \
      --logtostderr=true \
      --allow-privileged=true \
      --bind-address=0.0.0.0 \
      --secure-port=6443 \
      --insecure-port=0 \
      --advertise-address=172.17.94.49 \
      --service-cluster-ip-range=10.96.0.0/12 \
      --service-node-port-range=30000-50000 \
      --etcd-servers=https://172.17.94.49:2379,https://172.17.94.50:2379,https://172.17.94.47:2379 \
      --etcd-cafile=/etc/kubernetes/pki/etcd/etcd-ca.pem \
      --etcd-certfile=/etc/kubernetes/pki/etcd/etcd.pem \
      --etcd-keyfile=/etc/kubernetes/pki/etcd/etcd-key.pem \
      --client-ca-file=/etc/kubernetes/pki/ca.pem \
      --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
      --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
      --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
      --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
      --service-account-key-file=/etc/kubernetes/pki/sa.pub \
      --kubelet-preferred-address-types=InternalIP,ExternalIP,HostName \
      --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
      --authorization-mode=Node,RBAC \
      --enable-bootstrap-token-auth=true \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
      --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
      --requestheader-group-headers=X-Remote-Group \
      --requestheader-allowed-names=aggregator \
      --requestheader-extra-headers-prefix=X-Remote-Extra- \
      --requestheader-username-headers=X-Remote-User
Restart=on-failure
RestartSec=10
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF

for i in master01 master02 master03;do scp /usr/lib/systemd/system/kube-apiserver.service root@$i:/usr/lib/systemd/system/;done

# Log in to each master node and set the advertise address to that node's own IP (all three master nodes):

--advertise-address=$IP \
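Rather than editing each copy by hand, the address can be rewritten with `sed`. A sketch assuming GNU sed and an IPv4 address in the flag; `set_advertise_address` is a hypothetical helper. It works whether the flags ended up on one line or are split with backslashes.

```shell
#!/bin/bash
# Replace the IPv4 address after --advertise-address= in a unit file.
set_advertise_address() {
  local unit_file=$1 ip=$2
  sed -i -E "s/--advertise-address=[0-9.]+/--advertise-address=${ip}/" "$unit_file"
}
```

From the manager node, for example: `ssh root@master02 "$(declare -f set_advertise_address); set_advertise_address /usr/lib/systemd/system/kube-apiserver.service 172.17.94.50"`, and likewise with 172.17.94.47 for master03.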

# Start kube-apiserver (all three master nodes)

systemctl daemon-reload && systemctl enable kube-apiserver.service && systemctl start kube-apiserver.service

3) Install HAProxy (on the two HAProxy nodes)

# Install haproxy

yum -y install haproxy

# Configure haproxy

vim /etc/haproxy/haproxy.cfg

global

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

log 127.0.0.1 local0 err

stats timeout 30s


defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy Statistics

stats auth admin:admin123

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 172.17.94.49:6443 check

server k8s-master02 172.17.94.50:6443 check

server k8s-master03 172.17.94.47:6443 check

# Start the service

systemctl enable haproxy && systemctl start haproxy && systemctl status haproxy

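Configure the Aliyun SLB

Create a layer-4 (TCP) listener on the SLB's internal IP (172.17.94.41) that forwards to port 8443 on the HAProxy nodes. The kubeconfig files below connect to https://172.17.94.41:6443, so the listener port is 6443.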

4) Install and configure kube-controller-manager

4.1) Generate the certificate and copy it to the master nodes

cd /root/k8s-ha-install/pki

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes controller-manager-csr.json |cfssljson -bare /etc/kubernetes/pki/controller-manager

[root@manager pki]# for i in master01 master02 master03 ;do scp /etc/kubernetes/pki/controller-manager*.pem root@$i:/etc/kubernetes/pki/;done

4.2) Generate the kubeconfig file

Note: the --server IP below is the SLB address.

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true --server=https://172.17.94.41:6443 \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager \
  --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
  --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
  --embed-certs=true --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager@kubernetes \
  --cluster=kubernetes --user=system:kube-controller-manager \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager@kubernetes \
  --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

[root@manager pki]# for i in master01 master02 master03;do scp /etc/kubernetes/controller-manager.kubeconfig root@$i:/etc/kubernetes/;done
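Sections 4.2, 5.2 and 6.2 run the same four `kubectl config` commands, with only the file name, user and certificate changing. A hypothetical wrapper, `make_cert_kubeconfig`, assuming the cluster name `kubernetes`, the `<user>@kubernetes` context naming used throughout, and the SLB endpoint as the default API server (set `APISERVER` or `CA_FILE` to override).

```shell
#!/bin/bash
# Hypothetical wrapper: build a client-certificate kubeconfig in one call.
# Arguments: output kubeconfig, user name, client certificate, client key.
APISERVER=${APISERVER:-https://172.17.94.41:6443}
CA_FILE=${CA_FILE:-/etc/kubernetes/pki/ca.pem}

make_cert_kubeconfig() {
  local kubeconfig=$1 user=$2 cert=$3 key=$4
  local context="${user}@kubernetes"
  kubectl config set-cluster kubernetes --certificate-authority="$CA_FILE" \
    --embed-certs=true --server="$APISERVER" --kubeconfig="$kubeconfig" &&
    kubectl config set-credentials "$user" --client-certificate="$cert" \
      --client-key="$key" --embed-certs=true --kubeconfig="$kubeconfig" &&
    kubectl config set-context "$context" --cluster=kubernetes \
      --user="$user" --kubeconfig="$kubeconfig" &&
    kubectl config use-context "$context" --kubeconfig="$kubeconfig"
}
```

For example, 5.2 becomes `make_cert_kubeconfig /etc/kubernetes/scheduler.kubeconfig system:kube-scheduler /etc/kubernetes/pki/scheduler.pem /etc/kubernetes/pki/scheduler-key.pem`.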

4.3) Configure the kube-controller-manager service

The unit file kube-controller-manager.service in /root/k8s-ha-install/Service contains:

[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-controller-manager \
      --v=2 \
      --logtostderr=true \
      --address=127.0.0.1 \
      --root-ca-file=/etc/kubernetes/pki/ca.pem \
      --cluster-signing-cert-file=/etc/kubernetes/pki/ca.pem \
      --cluster-signing-key-file=/etc/kubernetes/pki/ca-key.pem \
      --service-account-private-key-file=/etc/kubernetes/pki/sa.key \
      --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig \
      --leader-elect=true \
      --use-service-account-credentials=true \
      --node-monitor-grace-period=40s \
      --node-monitor-period=5s \
      --pod-eviction-timeout=2m0s \
      --controllers=*,bootstrapsigner,tokencleaner \
      --allocate-node-cidrs=true \
      --cluster-cidr=10.244.0.0/16 \
      --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
      --node-cidr-mask-size=24
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

[root@manager Service]# cd /root/k8s-ha-install/Service

[root@manager Service]# for i in master01 master02 master03;do scp kube-controller-manager.service root@$i:/usr/lib/systemd/system/;done

4.4) Start the service and verify

# Start the service

systemctl daemon-reload

systemctl enable kube-controller-manager.service

systemctl start kube-controller-manager.service

systemctl status kube-controller-manager

# View the logs

$ journalctl -f -u kube-controller-manager

# Check which instance holds the leader lock

[root@master01 cfg]# kubectl get endpoints kube-controller-manager --namespace=kube-system -o yaml

apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master01_da6919a8-ed97-48ec-9880-ac345f91543f","leaseDurationSeconds":15,"acquireTime":"2021-05-30T15:11:29Z","renewTime":"2021-05-31T01:48:13Z","leaderTransitions":2}'
  creationTimestamp: "2021-05-30T14:32:26Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:control-plane.alpha.kubernetes.io/leader: {}
    manager: kube-controller-manager
    operation: Update
    time: "2021-05-30T14:32:26Z"
  name: kube-controller-manager
  namespace: kube-system
  resourceVersion: "113635"
  selfLink: /api/v1/namespaces/kube-system/endpoints/kube-controller-manager
  uid: f9966a88-c706-41e0-8bb8-c4f45c3bf104
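Usually only the holderIdentity field of the leader annotation is of interest. A sketch that extracts it with `sed`; the helper names are hypothetical, and the pattern assumes the compact JSON shown above (no spaces around the colons).

```shell
#!/bin/bash
# Print holderIdentity from a control-plane.alpha.kubernetes.io/leader value.
leader_holder() {
  printf '%s\n' "$1" | sed -n 's/.*"holderIdentity":"\([^"]*\)".*/\1/p'
}

# holderIdentity has the form <hostname>_<uuid>; print just the hostname.
leader_node() {
  local holder
  holder=$(leader_holder "$1")
  printf '%s\n' "${holder%%_*}"
}
```

For example: `leader_node "$(kubectl -n kube-system get endpoints kube-controller-manager -o jsonpath='{.metadata.annotations.control-plane\.alpha\.kubernetes\.io/leader}')"`.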

5) Install and configure kube-scheduler

5.1) Generate the certificate

cd /root/k8s-ha-install/pki

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem \
  -config=ca-config.json -profile=kubernetes scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

# Push to the master nodes

for i in master01 master02 master03;do scp /etc/kubernetes/pki/scheduler*.pem root@$i:/etc/kubernetes/pki/;done

5.2) Generate the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true --server=https://172.17.94.41:6443 \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/pki/scheduler.pem \
  --client-key=/etc/kubernetes/pki/scheduler-key.pem --embed-certs=true \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-context system:kube-scheduler@kubernetes --cluster=kubernetes \
  --user=system:kube-scheduler --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config use-context system:kube-scheduler@kubernetes \
  --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

# Push to the master nodes

for i in master01 master02 master03;do scp /etc/kubernetes/scheduler.kubeconfig root@$i:/etc/kubernetes/;done

5.3) Configure the kube-scheduler service

The unit file kube-scheduler.service in /root/k8s-ha-install/Service contains:

[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/bin/kube-scheduler \
      --v=2 \
      --logtostderr=true \
      --address=127.0.0.1 \
      --leader-elect=true \
      --kubeconfig=/etc/kubernetes/scheduler.kubeconfig
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target

# Push to the master nodes

cd /root/k8s-ha-install/Service

for i in master01 master02 master03;do scp kube-scheduler.service root@$i:/usr/lib/systemd/system/;done

5.4) Start kube-scheduler

# Start the service

systemctl daemon-reload && systemctl enable kube-scheduler.service && systemctl restart kube-scheduler.service

# Check the status

systemctl status kube-scheduler

# View the logs

journalctl -f -u kube-scheduler

# Check which instance holds the leader lock

[root@master01 cfg]# kubectl get endpoints kube-scheduler --namespace=kube-system -o yaml

apiVersion: v1
kind: Endpoints
metadata:
  annotations:
    control-plane.alpha.kubernetes.io/leader: '{"holderIdentity":"master02_492e0b37-7d4f-49e8-9920-bef3d8002294","leaseDurationSeconds":15,"acquireTime":"2021-05-30T15:11:30Z","renewTime":"2021-05-31T01:47:27Z","leaderTransitions":2}'
  creationTimestamp: "2021-05-30T14:34:09Z"
  managedFields:
  - apiVersion: v1
    fieldsType: FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .: {}
          f:control-plane.alpha.kubernetes.io/leader: {}
    manager: kube-scheduler
    operation: Update
    time: "2021-05-30T14:34:09Z"
  name: kube-scheduler
  namespace: kube-system
  resourceVersion: "113506"
  selfLink: /api/v1/namespaces/kube-system/endpoints/kube-scheduler
  uid: c8eafbc8-e296-4b48-974f-63ee4878bb54

6) Set up kubectl on the manager node

6.1) Generate the certificate

cd /root/k8s-ha-install/pki

# Generate the admin certificate

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json |cfssljson -bare /etc/kubernetes/pki/admin

6.2) Generate admin.kubeconfig

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true --server=https://172.17.94.41:6443 \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem \
  --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes \
  --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config use-context kubernetes-admin@kubernetes \
  --kubeconfig=/etc/kubernetes/admin.kubeconfig

# Copy it to kubectl's default kubeconfig location

mkdir -p /root/.kube

cp /etc/kubernetes/admin.kubeconfig /root/.kube/config

6.3) Verify

[root@manager Service]# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME                 STATUS    MESSAGE             ERROR
scheduler            Healthy   ok
controller-manager   Healthy   ok
etcd-1               Healthy   {"health":"true"}
etcd-2               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}

7) Install and configure kubelet

7.1) Configure TLS bootstrapping

Purpose: let the kubelets obtain their client certificates automatically.

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem \
  --embed-certs=true --server=https://172.17.94.41:6443 \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-credentials tls-bootstrap-token-user --token=c8adef.2e4d610cf3e7426e \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config set-context tls-bootstrap-token-user@kubernetes --cluster=kubernetes \
  --user=tls-bootstrap-token-user --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

kubectl config use-context tls-bootstrap-token-user@kubernetes \
  --kubeconfig=/etc/kubernetes/bootstrap-kubelet.kubeconfig

for i in master01 master02 master03 worker01 worker02;do scp /etc/kubernetes/bootstrap-kubelet.kubeconfig root@$i:/etc/kubernetes/;done

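# Run on the manager node

# Note: token-id and token-secret below must match the token in the bootstrap-kubelet.kubeconfig generated above (c8adef.2e4d610cf3e7426e), and the Secret must be named bootstrap-token-<token-id>.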

cat > bootstrap.secret.yaml <<-EOF
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8adef
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8adef
  token-secret: 2e4d610cf3e7426e
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver
EOF

# Apply

kubectl create -f bootstrap.secret.yaml
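The token c8adef.2e4d610cf3e7426e is a fixed example, and a fresh one is preferable on a real cluster. Bootstrap tokens must match `[a-z0-9]{6}.[a-z0-9]{16}`, and lowercase hex satisfies that character set. A sketch with hypothetical helper names; a new value would have to be substituted into the `set-credentials` command in 7.1, the Secret name, and the token-id/token-secret fields before applying.

```shell
#!/bin/bash
# Generate "<6 hex chars>.<16 hex chars>"; openssl rand -hex N prints 2N
# lowercase hex digits.
new_bootstrap_token() {
  printf '%s.%s\n' "$(openssl rand -hex 3)" "$(openssl rand -hex 8)"
}

# Format check against the bootstrap token pattern (C locale so [a-z] is ASCII).
is_bootstrap_token() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eqx '[a-z0-9]{6}\.[a-z0-9]{16}'
}
```

For example, `TOKEN=$(new_bootstrap_token)`; the token ID is then `${TOKEN%%.*}` and the secret `${TOKEN#*.}`.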

7.2) Copy the certificates to the nodes

#!/bin/bash

for NODE in master01 master02 master03 worker01 worker02;do

ssh $NODE mkdir -p /etc/kubernetes/pki /etc/etcd/ssl

for FILE in etcd-ca.pem etcd.pem etcd-key.pem;do

scp /etc/etcd/ssl/$FILE $NODE:/etc/etcd/ssl/

done

for FILE in pki/ca.pem pki/ca-key.pem pki/front-proxy-ca.pem bootstrap-kubelet.kubeconfig;do

scp /etc/kubernetes/$FILE $NODE:/etc/kubernetes/${FILE};

done

done

7.3) Install and deploy kubelet

(on both master and worker nodes)

# Create the directories on all nodes

mkdir -p /var/lib/kubelet /var/log/kubernetes /etc/systemd/system/kubelet.service.d /etc/kubernetes/manifests/

# Prepare kubelet.service on the manager node, then push it to the other nodes

cd /root/k8s-ha-install/Service

for i in master01 master02 master03 worker01 worker02;do scp kubelet.service root@$i:/usr/lib/systemd/system/;done

# Copy the kubelet service drop-in file

cd /root/k8s-ha-install/config

for i in master01 master02 master03 worker01 worker02;do scp 10-kubelet.conf root@$i:/etc/systemd/system/kubelet.service.d/;done

# Copy the kubelet configuration file (kubelet-conf.yml)

cd /root/k8s-ha-install/config

for i in master01 master02 master03 worker01 worker02;do scp kubelet-conf.yml root@$i:/etc/kubernetes/;done

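7.4) Start kubelet on all nodes

systemctl daemon-reload && systemctl enable kubelet.service && systemctl restart kubelet.service

# Approve any pending bootstrap CSRs (from a host with kubectl access, such as the manager node)

kubectl get csr

kubectl certificate approve <name>

# Check the service status

systemctl status kubelet.service

# View the logs

journalctl -f -u kubelet

# Copy the certificates (from the manager node)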

[root@manager pki]# for i in master01 master02 master03 worker01 worker02;do scp /etc/kubernetes/pki/* root@$i:/etc/kubernetes/pki/;done
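When several kubelets bootstrap at once, approving CSRs one by one is tedious. A sketch that filters pending requests from `kubectl get csr --no-headers`; `pending_csrs` is a hypothetical name, and it assumes CONDITION is the last column, as in kubectl 1.19 output.

```shell
#!/bin/bash
# Read "kubectl get csr --no-headers" output on stdin and print the names of
# requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
  awk '$NF == "Pending" { print $1 }'
}
```

For example: `kubectl get csr --no-headers | pending_csrs | xargs -r kubectl certificate approve`.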

7.5) Verify

[root@manager config]# kubectl get node

NAME       STATUS     ROLES    AGE     VERSION
master01   NotReady   <none>   4m51s   v1.19.5
master02   NotReady   <none>   4s      v1.19.5
master03   NotReady   <none>   4m50s   v1.19.5
worker01   NotReady   <none>   5m16s   v1.19.5
worker02   NotReady   <none>   4m29s   v1.19.5

The nodes stay NotReady until a CNI network plugin (Calico, Part 3) is installed.

8) Install and configure kube-proxy

8.1) Generate the certificate

cd /root/k8s-ha-install/pki/

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare /etc/kubernetes/pki/kube-proxy

for i in master01 master02 master03 worker01 worker02;do scp /etc/kubernetes/pki/kube-proxy*.pem root@$i:/etc/kubernetes/pki/;done

8.2)生成kube-proxy.kubeconfig文件

kubectl -n kube-system create serviceaccount kube-proxy

kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy

SECRET=$(kubectl -n kube-system get sa/kube-proxy --output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET --output=jsonpath='{.data.token}'|base64 -d)

PKI_DIR=/etc/kubernetes/pki

K8S_DIR=/etc/kubernetes

# 172.17.94.41 is the k8s-master-slb address from /etc/hosts
kubectl config set-cluster kubernetes --certificate-authority=${PKI_DIR}/ca.pem \
  --embed-certs=true --server=https://172.17.94.41:6443 \
  --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

kubectl config set-credentials kubernetes --token=${JWT_TOKEN} \
  --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

kubectl config set-context kubernetes --cluster=kubernetes --user=kubernetes \
  --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig

kubectl config use-context kubernetes --kubeconfig=${K8S_DIR}/kube-proxy.kubeconfig
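Before pushing the kubeconfig to every node, it can help to confirm that `JWT_TOKEN` really belongs to the kube-proxy ServiceAccount. ServiceAccount tokens are JWTs whose payload carries a `sub` claim of the form `system:serviceaccount:<namespace>:<name>`. Below is a minimal decoder sketch. The function name `jwt_payload` is hypothetical. It only base64url-decodes the middle segment and does not verify the signature.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the decoded payload (second segment) of a JWT.
# No signature verification is done; this is only for inspecting claims.
jwt_payload() {
  local p
  # base64url -> base64: '_' becomes '/', '-' becomes '+'
  p=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url strips '=' padding; restore it so base64 -d accepts the input
  while [ $(( ${#p} % 4 )) -ne 0 ]; do p="${p}="; done
  printf '%s' "$p" | base64 -d
}
```

Running `jwt_payload "$JWT_TOKEN"; echo` should show `"sub":"system:serviceaccount:kube-system:kube-proxy"` among the claims.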

# Push to all nodes

for i in master01 master02 master03 worker01 worker02;do scp /etc/kubernetes/kube-proxy.kubeconfig root@$i:/etc/kubernetes/;done

8.3) Prepare the kube-proxy files

cd /root/k8s-ha-install/config

# Configuration file

for i in master01 master02 master03 worker01 worker02;do scp kube-proxy.conf root@$i:/etc/kubernetes/;done

# systemd unit file

for i in master01 master02 master03 worker01 worker02;do scp kube-proxy.service root@$i:/usr/lib/systemd/system/;done

8.4) Start the service

# Reload systemd, enable kube-proxy at boot and (re)start it

systemctl daemon-reload && systemctl enable kube-proxy.service && systemctl restart kube-proxy.service

# Check the status

systemctl status kube-proxy.service

# View the logs

journalctl -f -u kube-proxy
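A running kube-proxy reports its active proxy mode over HTTP at `/proxyMode` on its metrics address, which defaults to 127.0.0.1:10249. Below is a quick check sketch. It assumes `kube-proxy.conf` selects IPVS mode and keeps the default metrics address. Part 1 installs ipvsadm and ipset, but the config file itself is not shown here, so adjust both arguments to match it. The function name `check_proxy_mode` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare kube-proxy's reported mode with an expected one.
# Assumption: metrics endpoint at 127.0.0.1:10249 (kube-proxy's default).
check_proxy_mode() {
  local want="${1:-ipvs}" addr="${2:-127.0.0.1:10249}" got
  got=$(curl -s --max-time 5 "http://${addr}/proxyMode") || return 2   # 2: endpoint unreachable
  if [ "$got" = "$want" ]; then
    echo "kube-proxy mode: ${got}"
  else
    echo "expected ${want}, got '${got}'" >&2
    return 1
  fi
}
```

Run `check_proxy_mode ipvs` on each node. In IPVS mode, `ipvsadm -Ln` should also list a virtual server for the `kubernetes` Service ClusterIP.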

Part 3: Install add-ons

1) Install Calico

[root@manager calico]# pwd
/root/k8s-ha-install/calico
[root@manager calico]# kubectl apply -f calico.yaml
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created

2) Install CoreDNS

[root@master01 calico]# cd ../coredns/
[root@master01 coredns]# kubectl apply -f coredns.yaml
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created

3) Install metrics-server

[root@master01 coredns]# cd ../metrics-server/
[root@master01 metrics-server]# kubectl apply -f metrics-server.yaml
clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created
clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
serviceaccount/metrics-server created
deployment.apps/metrics-server created
service/metrics-server created
clusterrole.rbac.authorization.k8s.io/system:metrics-server created
clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

4) Install the Dashboard

kubectl create ns kubernetes-dashboard

cd /etc/kubernetes/pki/

openssl genrsa -out dashboard.xhaihua.cn.key 2048

# -days has no effect on "req -new" without -x509; set the validity on the x509 signing step instead (its default is 30 days)
openssl req -new -key dashboard.xhaihua.cn.key -out xhaihua.cn.csr -subj "/C=CN/ST=Shanghai/L=Shanghai/O=Smokelee/OU=xxxxx/CN=dashboard.xhaihua.cn"

openssl x509 -req -days 3650 -in xhaihua.cn.csr -signkey dashboard.xhaihua.cn.key -out dashboard.xhaihua.cn.crt

kubectl -n kubernetes-dashboard create secret tls kubernetes-dashboard-certs --key dashboard.xhaihua.cn.key --cert dashboard.xhaihua.cn.crt
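As an alternative to the separate key, CSR, and signing steps above, `openssl req -x509` can create the private key and a self-signed certificate in one command. Passing `-days` sets the validity explicitly. The sketch below wraps this in a hypothetical function `make_self_signed` and uses only a CN in the subject. Extend `-subj` with the C/ST/L/O/OU fields above if you need them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: generate <cn>.key and a self-signed <cn>.crt in one step.
# Arguments: common name, output directory (default: .), validity in days (default: 3650)
make_self_signed() {
  local cn="$1" dir="${2:-.}" days="${3:-3650}"
  openssl req -x509 -newkey rsa:2048 -nodes -sha256 \
    -days "$days" -subj "/CN=${cn}" \
    -keyout "${dir}/${cn}.key" -out "${dir}/${cn}.crt"
}
```

Running `make_self_signed dashboard.xhaihua.cn /etc/kubernetes/pki` produces the same two file names that the `kubectl create secret tls` command above expects. You can confirm the validity period with `openssl x509 -in dashboard.xhaihua.cn.crt -noout -enddate`.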

[root@master01 kubernetes]# cd /root/k8s-ha-install/dashboard-2.0.4/
[root@master01 dashboard-2.0.4]# kubectl apply -f recommended.yaml
Warning: kubectl apply should be used on resource created by either kubectl create --save-config or kubectl apply
namespace/kubernetes-dashboard configured
serviceaccount/kubernetes-dashboard created
service/kubernetes-dashboard created
secret/kubernetes-dashboard-csrf created
secret/kubernetes-dashboard-key-holder created
configmap/kubernetes-dashboard-settings created
role.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
deployment.apps/kubernetes-dashboard created
service/dashboard-metrics-scraper created
deployment.apps/dashboard-metrics-scraper created

The warning is harmless. The namespace was created earlier with kubectl create, so it lacks the last-applied-configuration annotation, and kubectl apply adds it automatically.

[root@master01 dashboard-2.0.4]# kubectl get pods -n kube-system
NAME                                      READY   STATUS    RESTARTS   AGE
calico-kube-controllers-97769f7c7-55gg5   1/1     Running   0          9m42s
calico-node-5bssl                         1/1     Running   0          9m42s
calico-node-gk7hz                         1/1     Running   0          9m42s
calico-node-hvx7v                         1/1     Running   0          9m42s
calico-node-mzzqz                         1/1     Running   3          9m42s
calico-node-tf2h6                         1/1     Running   3          9m42s
coredns-7bf4bd64bd-tt25q                  1/1     Running   0          4m24s
coredns-7bf4bd64bd-v67fs                  1/1     Running   0          4m24s
metrics-server-589847c86f-jmjfr           1/1     Running   0          3m30s

[root@master01 dashboard-2.0.4]# kubectl get pods -n kubernetes-dashboard
NAME                                         READY   STATUS    RESTARTS   AGE
dashboard-metrics-scraper-7b59f7d4df-xvjqk   1/1     Running   0          82s
kubernetes-dashboard-665f4c5ff-nwhdf         1/1     Running   0          82s

[root@master01 cfg]# kubectl top node
NAME       CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master01   131m         3%     1697Mi          22%
master02   121m         3%     1661Mi          21%
master03   105m         2%     1563Mi          20%
worker01   63m          1%     1079Mi          14%
worker02   70m          1%     1142Mi          15%

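Create an administrator token: bind the kubernetes-dashboard ServiceAccount to the cluster-admin role so that its token has full access to every namespace.

kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin \
  --serviceaccount=kubernetes-dashboard:kubernetes-dashboard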

[root@master01 dashboard-2.0.4]# kubectl get secret -n kubernetes-dashboard
NAME                               TYPE                                  DATA   AGE
default-token-b4m9j                kubernetes.io/service-account-token   3      4m4s
kubernetes-dashboard-certs         kubernetes.io/tls                     2      4m3s
kubernetes-dashboard-csrf          Opaque                                1      2m45s
kubernetes-dashboard-key-holder    Opaque                                2      2m45s
kubernetes-dashboard-token-5sw48   kubernetes.io/service-account-token   3      2m45s

[root@master01 dashboard-2.0.4]# kubectl describe secret kubernetes-dashboard-token-5sw48 -n kubernetes-dashboard
Name:         kubernetes-dashboard-token-5sw48
Namespace:    kubernetes-dashboard
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
              kubernetes.io/service-account.uid: 7b25b4f5-67fb-42b4-a498-2d23e693f2da

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1411 bytes
namespace:  20 bytes
token: eyJhbGciOiJSUzI1NiIsImtpZCI6Inl3ZG1TWjNkZFhJNlkyZUtrMk1HUG82UzhpeEMyUW9lam0tOHpfWGpvQXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi01c3c0OCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjdiMjViNGY1LTY3ZmItNDJiNC1hNDk4LTJkMjNlNjkzZjJkYSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.TfZfAwvE4skR-vE72BPSHlr6WT5m_I6GP3-rACNtvAU8A7DO7VL7r746N74BuC87USUrTiA6qYlTbGQ0CiZwmZD3NdkOw8w2HMHdZtap8p2W-BGB1IYWHcPaq8Av97fUnHKDuGbKzmpISK7vIcgcGXNfK2Xx_dcVVER2CmN73x78xGvsUJKQE7PH2f7vzyEe8SXXf1ZsdjIKw4JhVd1ammt4UnCDhwqlEWMXcJISuWaEklJHmndGUrboehO2lXf6VX-7OoxCGvsUMVXnFbjnMao0kq8R5TyxOLYtlDuBlcs905_08ht018UDIcszGVUiRAhRhNh1X4lOAfa_M7625Q
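Instead of listing the secrets and copying the token out of `kubectl describe`, the token can be read directly. This uses the same jsonpath approach used for kube-proxy in 8.2. It is a sketch, and the function name `sa_token` is hypothetical. It relies on the ServiceAccount having an auto-created token Secret, which holds for this v1.19 cluster. Kubernetes 1.24 and later no longer create these Secrets automatically.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the bearer token of a ServiceAccount from the
# token Secret referenced in its .secrets list.
# Arguments: namespace, ServiceAccount name
sa_token() {
  local ns="$1" sa="$2" secret
  secret=$(kubectl -n "$ns" get sa "$sa" -o jsonpath='{.secrets[0].name}') || return 1
  # The token is stored base64-encoded in the Secret's data
  kubectl -n "$ns" get secret "$secret" -o jsonpath='{.data.token}' | base64 -d
}
```

Usage: `sa_token kubernetes-dashboard kubernetes-dashboard; echo` prints the token to paste into the Dashboard login page.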

5) Deploy the Ingress service

cd /usr/local/src

wget http://download.51yuki.cn/helm-v3.3.4-linux-amd64.tar.gz

tar xf helm-v3.3.4-linux-amd64.tar.gz

cd linux-amd64/

cp helm /usr/bin/

# Download the ingress-nginx chart

cd /usr/local/src

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm pull ingress-nginx/ingress-nginx

# The downloaded chart version may differ each time; this guide used 3.31.0

tar xf ingress-nginx-3.31.0.tgz

cd ingress-nginx

# Label the nodes that will run the ingress controller

kubectl label node worker01 role="ingress-nginx"

kubectl label node worker02 role="ingress-nginx"

# Edit values.yaml (only the changed keys are shown, with their nesting in the chart)

controller:
  image:
    repository: registry.cn-shanghai.aliyuncs.com/xhaihua/controller
    #digest: sha256:46ba23c3fbaafd9e5bd01ea85b2f921d9f2217be082580edc22e6c704a83f02f
    tag: v0.46.0
  dnsPolicy: ClusterFirstWithHostNet
  hostNetwork: true
  nodeSelector:
    kubernetes.io/os: linux
    role: "ingress-nginx"
  kind: DaemonSet
  resources:    # adjust to your needs
    # limits:
    #   cpu: 100m
    #   memory: 90Mi
    requests:
      cpu: 500m
      memory: 500Mi
  service:
    type: ClusterIP
  admissionWebhooks:
    patch:
      enabled: true
      image:
        repository: registry.cn-shanghai.aliyuncs.com/xhaihua/kube-webhook-certgen
        tag: v1.5.1
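Instead of editing the chart's own values.yaml, the same changes can be kept in a small override file and passed to `helm install -f`. Pulling a newer chart then does not wipe out the edits. This is a sketch that assumes the value layout of chart 3.31.0. The function name `write_ingress_overrides` and the file name `values-override.yaml` are hypothetical. An override file cannot remove a key, so commenting out the digest does not work here. Setting `digest` to an empty string is assumed to disable the chart's default digest pin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write only the changed ingress-nginx values to a file.
# Assumes chart 3.31.0 key names; digest "" is assumed to disable the default digest pin.
write_ingress_overrides() {
  cat > "${1:-values-override.yaml}" <<'EOF'
controller:
  image:
    repository: registry.cn-shanghai.aliyuncs.com/xhaihua/controller
    tag: v0.46.0
    digest: ""
  dnsPolicy: ClusterFirstWithHostNet
  hostNetwork: true
  nodeSelector:
    kubernetes.io/os: linux
    role: "ingress-nginx"
  kind: DaemonSet
  resources:
    requests:
      cpu: 500m
      memory: 500Mi
  service:
    type: ClusterIP
  admissionWebhooks:
    patch:
      enabled: true
      image:
        repository: registry.cn-shanghai.aliyuncs.com/xhaihua/kube-webhook-certgen
        tag: v1.5.1
EOF
}
```

Usage, from /usr/local/src/ingress-nginx: `write_ingress_overrides && helm install ingress-nginx -n ingress-nginx -f values-override.yaml .`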

# Deploy ingress-nginx

cd /usr/local/src/ingress-nginx

kubectl create ns ingress-nginx

helm install ingress-nginx -n ingress-nginx .

# Check