Installing and Deploying Kubernetes 1.20.5 on CentOS 8.3 (Single Master Node)

1) Environment overview

- Operating system: CentOS 8.3

http://mirrors.aliyun.com/centos/8.3.2011/isos/x86_64/CentOS-8.3.2011-x86_64-dvd1.iso

Node names:

master01 192.168.31.51 master node

devops02 192.168.31.52 worker node

devops03 192.168.31.53 worker node

devops04 192.168.31.54 worker node

devops05 192.168.31.55 worker node, NFS node

Operating system version:

CentOS Linux release 8.3.201

2) System initialization (all nodes)

Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

Disable swap and comment out the swap partition in /etc/fstab

[root@master ~]# swapoff -a

[root@master ~]# cat /etc/fstab

#

# /etc/fstab

# Created by anaconda on Fri Dec 25 03:30:01 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk/'.

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info.

#

# After editing this file, run 'systemctl daemon-reload' to update systemd

# units generated from this file.

#

/dev/mapper/cl-root / xfs defaults 0 0

UUID=75c36a78-fe92-49b4-a2ff-3a3a7c1aff76 /boot ext4 defaults 1 2

# /dev/mapper/cl-swap swap swap defaults 0 0
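
Editing /etc/fstab by hand works, but the same change can be scripted. The following is a minimal sketch, assuming GNU sed; `comment_out_swap` is a hypothetical helper name, not part of any tool. It prefixes every uncommented line that contains a standalone `swap` field with `# ` and keeps a `.bak` copy of the file.

```shell
#!/usr/bin/env bash
# comment_out_swap is a hypothetical helper (assumption, not from the original guide).
# It comments out uncommented fstab lines that contain a standalone "swap" field.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Skip lines that are already comments; prefix matching lines with "# ".
  sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/# \1/' "$fstab"
}
```

A possible invocation on a node would be `comment_out_swap /etc/fstab && swapoff -a`.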

Add the Aliyun repository

rm -rfv /etc/yum.repos.d/CentOS-Base.repo

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-8.repo

Install base packages

yum -y install vim wget lrzsz bash-completion net-tools gcc nfs-utils

Add hostnames

[root@master01 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.31.51 master01.xhaihua.cn master01

192.168.31.52 devops02.xhaihua.cn devops02

192.168.31.53 devops03.xhaihua.cn devops03

192.168.31.54 devops04.xhaihua.cn devops04

192.168.31.55 devops05.xhaihua.cn devops05

Kernel and related parameter tuning

[ $(cat /etc/security/limits.conf|grep '* soft nproc 65535'|wc -l) -eq 0 ] && echo '* soft nproc 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* hard nproc 65535'|wc -l) -eq 0 ] && echo '* hard nproc 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* soft nofile 65535'|wc -l) -eq 0 ] && echo '* soft nofile 65535' >>/etc/security/limits.conf

[ $(cat /etc/security/limits.conf|grep '* hard nofile 65535'|wc -l) -eq 0 ] && echo '* hard nofile 65535' >>/etc/security/limits.conf

ulimit -SHn 65535
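
The "append only if missing" checks above can be condensed into one small function. This is a sketch under the assumption that an exact whole-line match is what is wanted; `ensure_line` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# ensure_line LINE FILE: append LINE to FILE unless an identical line already exists.
# (hypothetical helper; -x matches whole lines, -F disables regex interpretation of '*')
ensure_line() {
  local line="$1" file="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `ensure_line '* soft nofile 65535' /etc/security/limits.conf` can be run repeatedly without producing duplicate entries.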

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.netfilter.nf_conntrack_max = 2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
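
`sysctl --system` only prints an error for a key it cannot find and then carries on, so a misspelled or unavailable key is easy to miss. The sketch below checks each key in a sysctl file against the `/proc/sys` tree before applying it; `check_sysctl_keys` is a hypothetical helper name, and the optional second argument (an alternative root directory) exists only to make the function easy to try out. Note that the `net.bridge.*` keys typically appear only after the `br_netfilter` module has been loaded (`modprobe br_netfilter`).

```shell
#!/usr/bin/env bash
# check_sysctl_keys CONF [ROOT]: report keys in CONF that have no entry under ROOT
# (default /proc/sys). Returns 1 if any key is unknown. Hypothetical helper.
check_sysctl_keys() {
  local conf="$1" root="${2:-/proc/sys}" line key status=0
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line//[[:space:]]/}"        # drop all whitespace
    case "$line" in
      ''|'#'*|';'*) continue ;;         # skip blank lines and comments
    esac
    key="${line%%=*}"                   # text before the first '='
    if [ ! -e "$root/${key//.//}" ]; then   # a.b.c -> a/b/c
      echo "unknown sysctl key: $key"
      status=1
    fi
  done < "$conf"
  return "$status"
}
```

Running `check_sysctl_keys /etc/sysctl.d/k8s.conf` before `sysctl --system` lists any key the running kernel does not expose.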

Enable IPVS

cat > /etc/sysconfig/modules/ipvs.modules <<'EOF'

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack ipip ip_tables ip_set xt_set ipt_set ipt_rpfilter ipt_REJECT"

for kernel_module in ${ipvs_modules}; do

/sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1

if [ $? -eq 0 ];then

/sbin/modprobe ${kernel_module}

fi

done

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
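
On systemd-based releases, modules listed in `/etc/modules-load.d/*.conf` are loaded at boot by `systemd-modules-load.service`. Whether scripts under `/etc/sysconfig/modules/` are still executed at boot on CentOS 8 is worth verifying on your own system; as a hedge, the module list can also be written in the modules-load.d format. A minimal sketch, where `write_modules_load_conf` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# write_modules_load_conf FILE MODULE...: write one module name per line,
# which is the format expected under /etc/modules-load.d/. Hypothetical helper.
write_modules_load_conf() {
  local out="$1"
  shift
  printf '%s\n' "$@" > "$out"
}
```

For example: `write_modules_load_conf /etc/modules-load.d/ipvs.conf ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`. Only list modules that exist for the running kernel.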

3) Install docker-ce (all nodes)

cd /etc/yum.repos.d/

wget https://mirrors.tuna.tsinghua.edu.cn/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce

Prepare storage for Docker (a dedicated disk)

fdisk /dev/sdb (create a partition: n, p, 1, Enter, Enter, w)

mkfs.xfs /dev/sdb1

blkid (look up the UUID of /dev/sdb1)

mkdir -p /data/docker

vim /etc/fstab (add the mount entry: UUID="" /data/docker xfs defaults 0 0)

mount -a
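
The fstab entry can also be generated instead of typed by hand. This sketch assumes the partition is `/dev/sdb1` as above and uses `blkid -s UUID -o value` to read the UUID; `fstab_line_for` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# fstab_line_for DEVICE MOUNTPOINT [FSTYPE]: print an fstab line that mounts
# DEVICE by UUID. Hypothetical helper; FSTYPE defaults to xfs.
fstab_line_for() {
  local dev="$1" mnt="$2" fstype="${3:-xfs}" uuid
  uuid="$(blkid -s UUID -o value "$dev")" || return 1
  [ -n "$uuid" ] || return 1
  printf 'UUID=%s %s %s defaults 0 0\n' "$uuid" "$mnt" "$fstype"
}
```

A possible use is `fstab_line_for /dev/sdb1 /data/docker >> /etc/fstab && mount -a`.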

mkdir -p /etc/docker

cat >/etc/docker/daemon.json <<-EOF

{

"registry-mirrors": ["https://ziqva2l2.mirror.aliyuncs.com"],

"data-root": "/data/docker",

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "300m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF

systemctl daemon-reload

systemctl enable docker

systemctl start docker
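
The kubelet and Docker should use the same cgroup driver, so it is worth confirming that the `native.cgroupdriver=systemd` setting took effect after Docker starts. A minimal sketch using `docker info --format`; `check_cgroup_driver` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_cgroup_driver [EXPECTED]: compare Docker's active cgroup driver with
# EXPECTED (default: systemd). Hypothetical helper.
check_cgroup_driver() {
  local want="${1:-systemd}" got
  got="$(docker info --format '{{.CgroupDriver}}')" || return 2
  if [ "$got" = "$want" ]; then
    echo "cgroup driver OK: $got"
  else
    echo "cgroup driver is '$got', expected '$want'" >&2
    return 1
  fi
}
```

Calling `check_cgroup_driver` on each node after `systemctl start docker` gives a quick pass/fail answer.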

4) Install kubectl, kubelet, and kubeadm (all nodes)

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum -y install kubectl-1.20.5 kubelet-1.20.5 kubeadm-1.20.5

systemctl enable kubelet

5) Initialize the Kubernetes cluster (on the master node)

[root@master ~]# kubeadm init --kubernetes-version=1.20.5 \
> --apiserver-advertise-address=192.168.31.51 \
> --image-repository registry.aliyuncs.com/google_containers \
> --service-cidr=10.10.0.0/16 --pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join command for worker nodes (printed at the end of the kubeadm init output)

kubeadm join 192.168.31.51:6443 --token qbok6t.sjzybzfbxn3cgrrt --discovery-token-ca-cert-hash sha256:151b85df6273d770dbbbe830a99ba3780c3b87f18d5b6bb006248affea6be3de
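
The bootstrap token in the join command expires after 24 hours by default, so the command shown above will stop working for nodes added later. A fresh one can be printed on the master with `kubeadm token create --print-join-command`. The wrapper below is a small sketch; `print_join_command` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# print_join_command: create a new bootstrap token and print the matching
# "kubeadm join ..." command. Run on the master node. Hypothetical helper.
print_join_command() {
  if ! command -v kubeadm >/dev/null 2>&1; then
    echo "kubeadm not found in PATH" >&2
    return 127
  fi
  kubeadm token create --print-join-command
}
```

The printed line can then be pasted on each worker node.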

kubectl command completion

source <(kubectl completion bash) && echo 'source <(kubectl completion bash)' >> ~/.bashrc

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

6) Install the Calico network (on the master node)

git clone https://gitee.com/xhaihua/xhaihua-devops.git

kubectl apply -f xhaihua-devops/calico.yaml

7) Join the worker nodes (run on the other nodes)

kubeadm join 192.168.31.51:6443 --token qbok6t.sjzybzfbxn3cgrrt --discovery-token-ca-cert-hash sha256:151b85df6273d770dbbbe830a99ba3780c3b87f18d5b6bb006248affea6be3de

To use kubectl on a worker node, do the following

Configure Kubernetes access on the node

Copy /etc/kubernetes/admin.conf from the master node to the /etc/kubernetes/ directory on the worker node

[root@node1 ~]# scp root@master01:/etc/kubernetes/admin.conf /etc/kubernetes/

[root@node1 ~]# mkdir -p $HOME/.kube

[root@node1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@node1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

8) Check the cluster status (on the master node)

[root@master01 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

devops02 Ready <none> 59m v1.20.5

devops03 Ready <none> 59m v1.20.5

devops04 Ready <none> 59m v1.20.5

devops05 Ready <none> 59m v1.20.5

master01 Ready control-plane,master 61m v1.20.5

[root@master01 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-69496d8b75-lg8xj 1/1 Running 0 49m

calico-node-6k7df 1/1 Running 0 49m

calico-node-7l78l 1/1 Running 0 49m

calico-node-7wxf8 1/1 Running 0 49m

calico-node-8r8wj 1/1 Running 0 49m

calico-node-mm9rm 1/1 Running 0 49m

coredns-7f89b7bc75-v6k9v 1/1 Running 0 57m

coredns-7f89b7bc75-vj2fr 1/1 Running 0 57m

etcd-master01 1/1 Running 0 61m

kube-apiserver-master01 1/1 Running 1 61m

kube-controller-manager-master01 1/1 Running 0 61m

kube-proxy-9q8qx 1/1 Running 0 59m

kube-proxy-fkljx 1/1 Running 0 59m

kube-proxy-j7pt7 1/1 Running 0 59m

kube-proxy-qpl5v 1/1 Running 0 59m

kube-proxy-xdjhn 1/1 Running 0 60m

kube-scheduler-master01 1/1 Running 0 61m

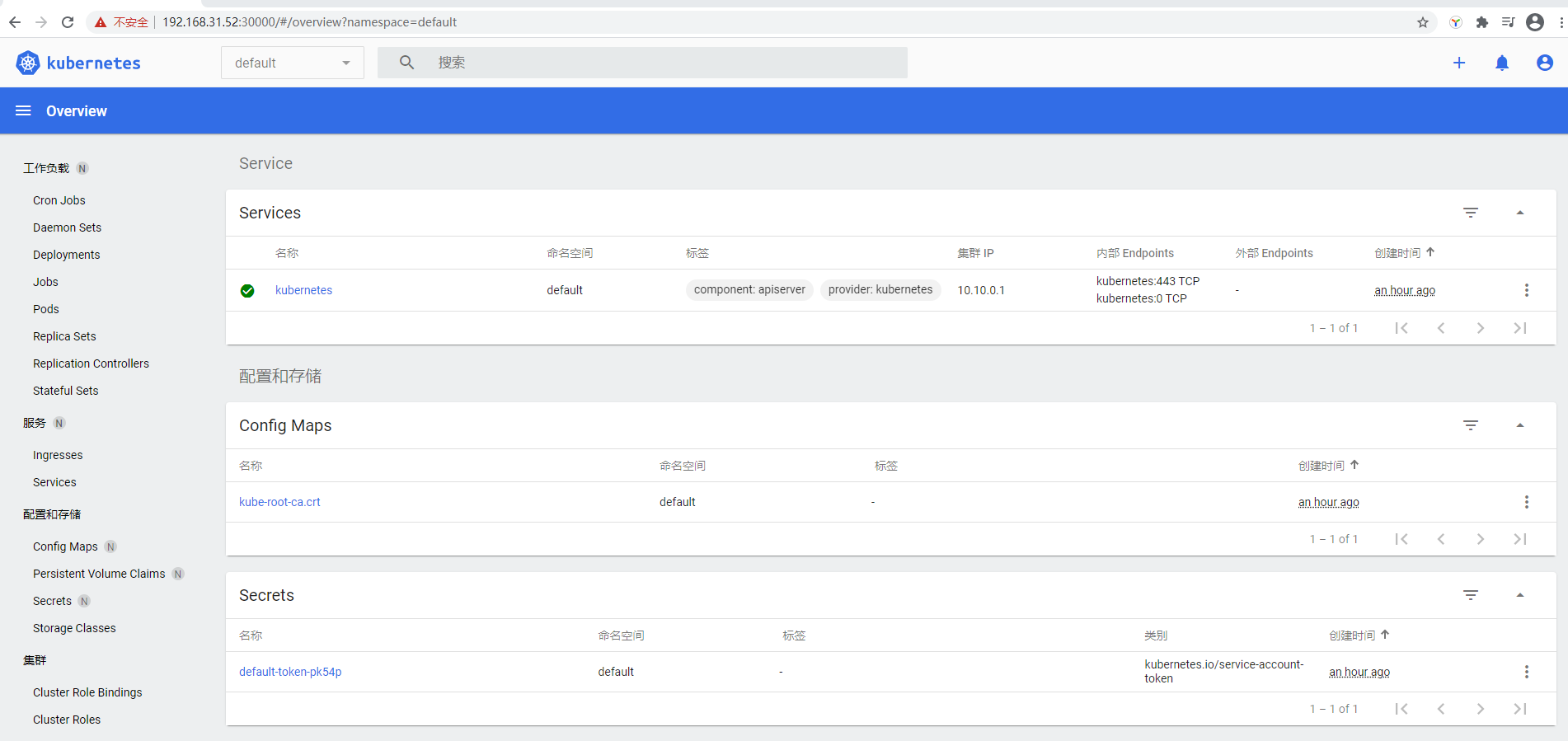

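9) Install kubernetes-dashboard (on the master node)

The official dashboard manifest does not expose the Service through a NodePort. Download the YAML file and add a nodePort to the Service; the copy used here has already been modified and uses NodePort 30000.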

kubectl apply -f https://gitee.com/xhaihua/xhaihua-devops/raw/master/dashboard.yaml

[root@master01 ~]# kubectl get pods -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

dashboard-metrics-scraper-79c5968bdc-7xvbb 1/1 Running 0 51m

kubernetes-dashboard-7448ffc97b-p2szd 1/1 Running 0 51m

[root@master01 ~]# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.10.39.194 <none> 8000/TCP 51m

kubernetes-dashboard NodePort 10.10.121.249 <none> 443:30000/TCP 51m

Open the page in a browser. Firefox is recommended (the HTTPS endpoint has no trusted certificate, so Chrome may refuse to open it)

https://192.168.31.52:30000

Dashboard supports two authentication methods, Kubeconfig and Token; here we log in with a Token

kubectl apply -f https://gitee.com/xhaihua/xhaihua-devops/raw/master/admin-user.yaml

kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

10) Install Helm 3 (on the master node)

wget https://get.helm.sh/helm-v3.2.0-rc.1-linux-amd64.tar.gz

tar xf helm-v3.2.0-rc.1-linux-amd64.tar.gz

cp linux-amd64/helm /usr/bin/

helm version

version.BuildInfo{Version:"v3.2.0-rc.1", GitCommit:"7bffac813db894e06d17bac91d14ea819b5c2310", GitTreeState:"clean", GoVersion:"go1.13.10"}

# Add repos

helm repo add bitnami https://charts.bitnami.com/bitnami

helm repo add elastic https://helm.elastic.co

helm repo add gitlab https://charts.gitlab.io

helm repo add harbor https://helm.goharbor.io

helm repo add incubator https://charts.helm.sh/incubator

# Add mirrors hosted in China

helm repo add stable http://mirror.azure.cn/kubernetes/charts

helm repo add aliyun https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

helm repo update

helm repo list

11) Deploy the NGINX ingress controller with Helm (on the master node)

https://kubernetes.github.io/ingress-nginx/deploy/#using-helm

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

helm repo update

[root@master01 manifests]# helm search repo ingress-nginx

NAME CHART VERSION APP VERSION DESCRIPTION

ingress-nginx/ingress-nginx 3.25.0 0.44.0 Ingress controller for Kubernetes using NGINX a...

# Download the chart package

helm pull ingress-nginx/ingress-nginx

tar xf ingress-nginx-3.25.0.tgz

# Edit values.yaml

controller:
  name: controller
  image:
    repository: registry.cn-beijing.aliyuncs.com/dotbalo/controller
    tag: "v0.40.2"
    #digest: sha256:3dd0fac48073beaca2d67a78c746c7593f9c575168a17139a9955a82c63c4b9a
    pullPolicy: IfNotPresent
    # www-data -> uid 101
    runAsUser: 101
    allowPrivilegeEscalation: true
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet
  kind: DaemonSet
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"
  resources:
    # limits:
    #   cpu: 100m
    #   memory: 90Mi
    requests:
      cpu: 200m
      memory: 290Mi
  service:  # parent key as in the chart's default values.yaml
    type: ClusterIP
  admissionWebhooks:  # parent key as in the chart's default values.yaml
    patch:
      enabled: true
      image:
        repository: registry.cn-beijing.aliyuncs.com/dotbalo/kube-webhook-certgen
        tag: v1.3.0
        pullPolicy: IfNotPresent

# Label the nodes that should run the ingress controller

[root@master01 ingress-nginx]# kubectl label node devops02 ingress=true

node/devops02 labeled

[root@master01 ingress-nginx]# kubectl label node devops03 ingress=true

node/devops03 labeled

[root@master01 ingress-nginx]# kubectl create ns ingress-nginx

namespace/ingress-nginx created

[root@master01 ingress-nginx]# helm install ingress-nginx -n ingress-nginx .

NAME: ingress-nginx

LAST DEPLOYED: Fri Mar 26 17:58:26 2021

NAMESPACE: ingress-nginx

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

The ingress-nginx controller has been installed.

Get the application URL by running these commands:

export POD_NAME=$(kubectl --namespace ingress-nginx get pods -o jsonpath="{.items[0].metadata.name}" -l "app=ingress-nginx,component=controller,release=ingress-nginx")

kubectl --namespace ingress-nginx port-forward $POD_NAME 8080:80

echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:

apiVersion: networking.k8s.io/v1beta1

kind: Ingress

metadata:

annotations:

kubernetes.io/ingress.class: nginx

name: example

namespace: foo

spec:

rules:

- host: www.example.com

http:

paths:

- backend:

serviceName: exampleService

servicePort: 80

path: /

# This section is only required if TLS is to be enabled for the Ingress

tls:

- hosts:

- www.example.com

secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

apiVersion: v1

kind: Secret

metadata:

name: example-tls

namespace: foo

data:

tls.crt: <base64 encoded cert>

tls.key: <base64 encoded key>

type: kubernetes.io/tls

[root@master01 ingress-nginx]# kubectl get all -n ingress-nginx

NAME READY STATUS RESTARTS AGE

pod/ingress-nginx-controller-2mr4r 1/1 Running 0 43s

pod/ingress-nginx-controller-fgg2n 1/1 Running 0 43s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/ingress-nginx-controller ClusterIP 10.10.219.177 <none> 80/TCP,443/TCP 43s

service/ingress-nginx-controller-admission ClusterIP 10.10.213.170 <none> 443/TCP 43s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/ingress-nginx-controller 2 2 2 2 2 ingress=true,kubernetes.io/os=linux 43s

12) Deploy the NFS StorageClass with Helm (on the master node)

[root@master01 ingress-nginx]# helm search repo nfs

NAME CHART VERSION APP VERSION DESCRIPTION

stable/nfs-client-provisioner 1.2.11 3.1.0 DEPRECATED - nfs-client is an automatic provisi...

stable/nfs-server-provisioner 1.1.3 2.3.0 DEPRECATED - nfs-server-provisioner is an out-o...

The NFS server is already deployed, so only nfs-client needs to be installed

[root@master01 ingress-nginx]# helm pull stable/nfs-client-provisioner

[root@master01 ingress-nginx]# tar xf nfs-client-provisioner-1.2.11.tgz

[root@master01 ingress-nginx]# cd nfs-client-provisioner/

[root@master01 nfs-client-provisioner]# ls

Chart.yaml ci README.md templates values.yaml

# Edit values.yaml

nfs:
  server: 192.168.31.55
  path: /k8s
  mountOptions:

storageClass:
  create: true

  # Set a provisioner name. If unset, a name will be generated.
  # provisionerName:

  # Set StorageClass as the default StorageClass
  # Ignored if storageClass.create is false
  defaultClass: true

  # Set a StorageClass name
  # Ignored if storageClass.create is false
  name: nfs-provisioner

[root@master01 nfs-client-provisioner]# kubectl create namespace nfs-client-provisioner

namespace/nfs-client-provisioner created

[root@master01 nfs-client-provisioner]# helm install nfs-client-provisioner . -n nfs-client-provisioner

WARNING: This chart is deprecated

NAME: nfs-client-provisioner

LAST DEPLOYED: Fri Mar 26 18:11:41 2021

NAMESPACE: nfs-client-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None

[root@master01 nfs-client-provisioner]# kubectl get sc -n nfs-client-provisioner

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-provisioner (default) cluster.local/nfs-client-provisioner Delete Immediate true 54s

[root@master01 nfs-client-provisioner]# kubectl -n nfs-client-provisioner get all

NAME READY STATUS RESTARTS AGE

pod/nfs-client-provisioner-57974884d5-mc2nv 1/1 Running 0 76s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/nfs-client-provisioner 1/1 1 1 15h

NAME DESIRED CURRENT READY AGE

replicaset.apps/nfs-client-provisioner-57974884d5 1 1 1 15h
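
To confirm that dynamic provisioning works, a small test claim against the new StorageClass can be created. The sketch below only prints a PersistentVolumeClaim manifest; `pvc_manifest` and the claim name `nfs-test` are hypothetical, while `nfs-provisioner` is the StorageClass name set in values.yaml above.

```shell
#!/usr/bin/env bash
# pvc_manifest NAME STORAGECLASS [SIZE]: print a minimal PVC manifest.
# Hypothetical helper; SIZE defaults to 1Gi.
pvc_manifest() {
  local name="$1" class="$2" size="${3:-1Gi}"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: ${class}
  resources:
    requests:
      storage: ${size}
EOF
}
```

For example, `pvc_manifest nfs-test nfs-provisioner 1Gi | kubectl apply -f -` followed by `kubectl get pvc nfs-test` should show the claim as Bound once the provisioner is healthy.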

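Known issue on Kubernetes 1.20: dynamic provisioning fails because the selfLink field is no longer populated by default (the RemoveSelfLink feature gate is enabled). The provisioner log shows: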

I0218 10:05:03.223179 1 leaderelection.go:194] successfully acquired lease kube-system/shisuyun-nfs

I0218 10:05:03.223336 1 controller.go:631] Starting provisioner controller shisuyun/nfs_nfs-client-provisioner-6db9db9dcf-c6phr_ba4b22b4-71d0-11eb-82ab-12d5aefc2432!

I0218 10:05:03.324107 1 controller.go:680] Started provisioner controller shisuyun/nfs_nfs-client-provisioner-6db9db9dcf-c6phr_ba4b22b4-71d0-11eb-82ab-12d5aefc2432!

I0218 10:05:03.324440 1 controller.go:987] provision "mysql/nfs-test" class "nfs": started

E0218 10:05:03.332013 1 controller.go:1004] provision "mysql/nfs-test" class "nfs": unexpected error getting claim reference: selfLink was empty, can't make reference

Add the following flag to the kube-apiserver command in /etc/kubernetes/manifests/kube-apiserver.yaml

- --feature-gates=RemoveSelfLink=false

[root@master01 ~]# kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml
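
The kubelet watches the static Pod manifest directory, so the API server is recreated on its own once the file is saved. A quick check that the flag actually made it into the manifest can be sketched as follows; `has_selflink_gate` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# has_selflink_gate [MANIFEST]: succeed if the manifest sets RemoveSelfLink=false
# in a --feature-gates flag (alone or after other comma-separated gates).
# Hypothetical helper; MANIFEST defaults to the kubeadm static Pod path.
has_selflink_gate() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  grep -Eq -- '--feature-gates=([^[:space:]]*,)?RemoveSelfLink=false' "$manifest"
}
```

For example: `has_selflink_gate && echo "flag present"`. The running Pod can be inspected as well with `kubectl -n kube-system get pod kube-apiserver-master01 -o yaml | grep feature-gates`.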

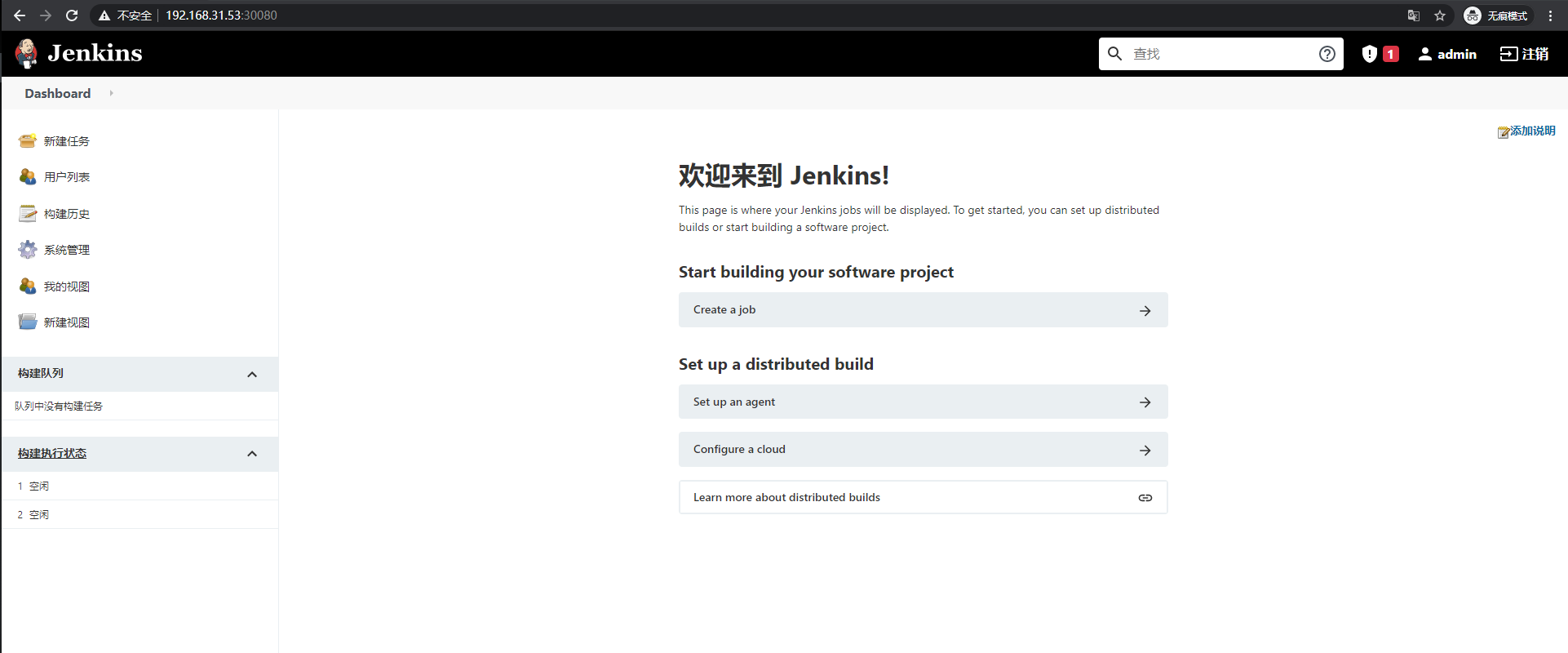

13) Deploy Jenkins with Helm

[root@master01 ~]# helm install jenkins \

> --namespace devops \

> --set master.image=jenkins/jenkins \

> --set master.tag=latest \

> --set master.adminUser=admin \

> --set master.adminPassword="admin@123" \

> --set master.serviceType=NodePort \

> --set master.nodePort=30080 \

> --set master.initContainerEnv[0].name=JENKINS_UC \

> --set master.initContainerEnv[0].value="https://updates.jenkins-zh.cn/update-center.json" \

> --set master.initContainerEnv[1].name=JENKINS_UC_DOWNLOAD \

> --set master.initContainerEnv[1].value="https://mirrors.tuna.tsinghua.edu.cn/jenkins" \

> --set persistence.storageClass=nfs-provisioner \

> --set persistence.size=30Gi \

> stable/jenkins

WARNING: This chart is deprecated

NAME: jenkins

LAST DEPLOYED: Sat Mar 27 09:20:23 2021

NAMESPACE: devops

STATUS: deployed

REVISION: 1

NOTES:

*******************

****DEPRECATED*****

*******************

* The Jenkins chart is deprecated. Future development has been moved to https://github.com/jenkinsci/helm-charts

1. Get your 'admin' user password by running:

printf $(kubectl get secret --namespace devops jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode);echo

2. Get the Jenkins URL to visit by running these commands in the same shell:

export NODE_PORT=$(kubectl get --namespace devops -o jsonpath="{.spec.ports[0].nodePort}" services jenkins)

export NODE_IP=$(kubectl get nodes --namespace devops -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP:$NODE_PORT/login

3. Login with the password from step 1 and the username: admin

4. Use Jenkins Configuration as Code by specifying configScripts in your values.yaml file, see documentation: http:///configuration-as-code and examples: https://github.com/jenkinsci/configuration-as-code-plugin/tree/master/demos

For more information on running Jenkins on Kubernetes, visit:

https://cloud.google.com/solutions/jenkins-on-container-engine

For more information about Jenkins Configuration as Code, visit:

https://jenkins.io/projects/jcasc/

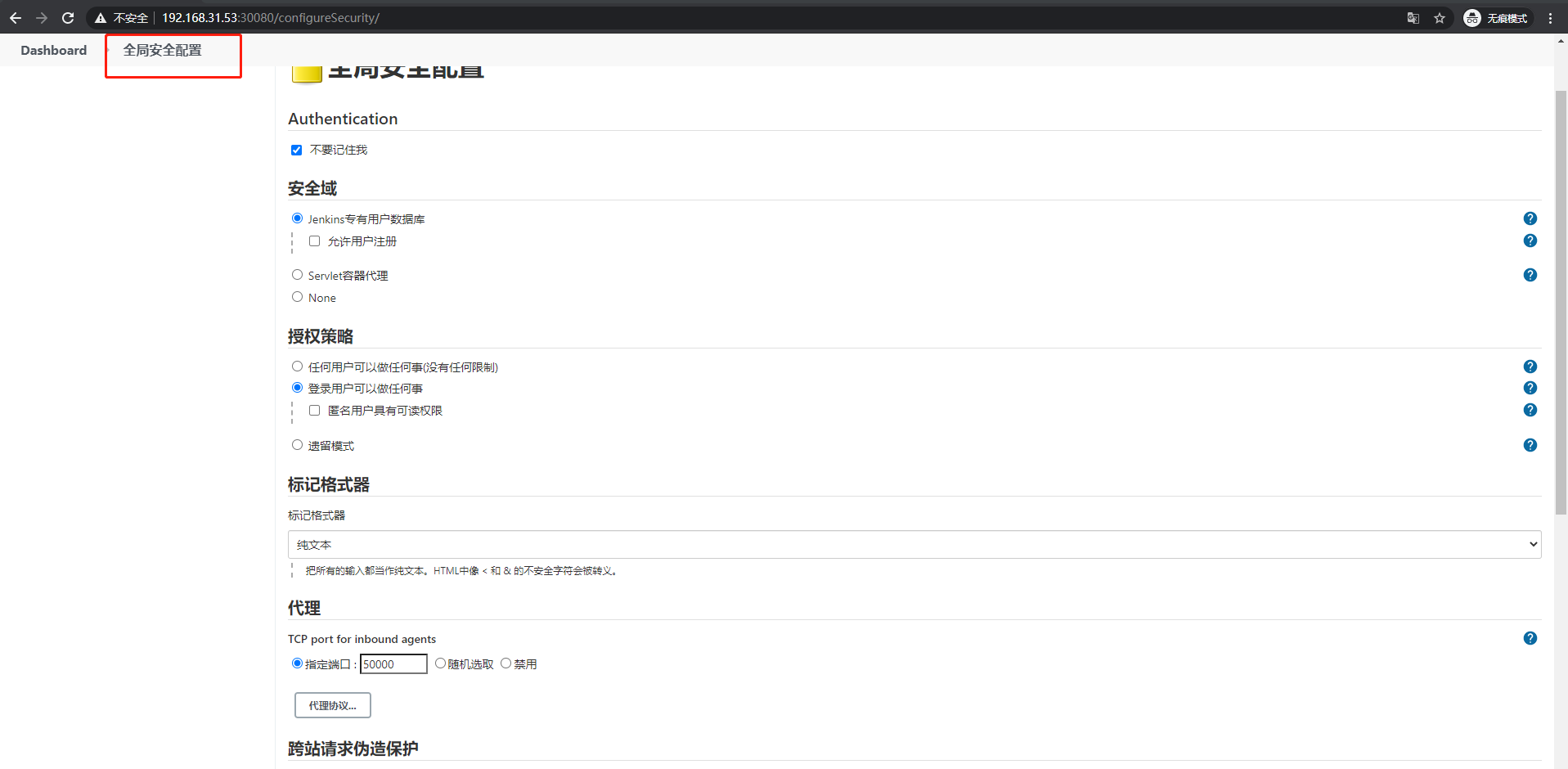

Switch the plugin update source to a mirror in China

[root@master01 ~]# kubectl exec -it jenkins-6b4cc54659-txkgt -n devops -- sh

$ echo $JENKINS_HOME

/var/jenkins_home

$ mkdir $JENKINS_HOME/update-center-rootCAs

cat >$JENKINS_HOME/update-center-rootCAs/jenkins-update-center-cn-root-ca.crt <<EOF

-----BEGIN CERTIFICATE-----

MIICcTCCAdoCCQD/jZ7AgrzJKTANBgkqhkiG9w0BAQsFADB9MQswCQYDVQQGEwJD

TjELMAkGA1UECAwCR0QxCzAJBgNVBAcMAlNaMQ4wDAYDVQQKDAV2aWhvbzEMMAoG

A1UECwwDZGV2MREwDwYDVQQDDAhkZW1vLmNvbTEjMCEGCSqGSIb3DQEJARYUYWRt

aW5AamVua2lucy16aC5jb20wHhcNMTkxMTA5MTA0MDA5WhcNMjIxMTA4MTA0MDA5

WjB9MQswCQYDVQQGEwJDTjELMAkGA1UECAwCR0QxCzAJBgNVBAcMAlNaMQ4wDAYD

VQQKDAV2aWhvbzEMMAoGA1UECwwDZGV2MREwDwYDVQQDDAhkZW1vLmNvbTEjMCEG

CSqGSIb3DQEJARYUYWRtaW5AamVua2lucy16aC5jb20wgZ8wDQYJKoZIhvcNAQEB

BQADgY0AMIGJAoGBAN+6jN8rCIjVkQ0Q7ZbJLk4IdcHor2WdskOQMhlbR0gOyb4g

RX+CorjDRjDm6mj2OohqlrtRxLGYxBnXFeQGU7wWjQHyfKDghtP51G/672lXFtzB

KXukHByHjtzrDxAutKTdolyBCuIDDGJmRk+LavIBY3/Lxh6f0ZQSeCSJYiyxAgMB

AAEwDQYJKoZIhvcNAQELBQADgYEAD92l26PoJcbl9GojK2L3pyOQjeeDm/vV9e3R

EgwGmoIQzlubM0mjxpCz1J73nesoAcuplTEps/46L7yoMjptCA3TU9FZAHNQ8dbz

a0vm4CF9841/FIk8tsLtwCT6ivkAi0lXGwhX0FK7FaAyU0nNeo/EPvDwzTim4XDK

9j1WGpE=

-----END CERTIFICATE-----

EOF

cp /var/jenkins_home/hudson.model.UpdateCenter.xml /var/jenkins_home/hudson.model.UpdateCenter.xml.bak

sed -i 's#https://updates.jenkins.io/update-center.json#https://mirrors.huaweicloud.com/jenkins/updates/update-center.json#g' $JENKINS_HOME/hudson.model.UpdateCenter.xml

Access Jenkins

http://192.168.31.52:30080

# Install the commonly used plugins

14) Deploy GitLab with Helm

git clone https://gitee.com/xhaihua/xhaihua-devops.git

cd gitlab-ce/

# Edit values.yaml to match your actual requirements

[root@master01 gitlab-ce]# ls

charts Chart.yaml README.md requirements.lock requirements.yaml templates values.yaml

[root@master01 gitlab-ce]# helm install gitlab-ce -n devops -f values.yaml .

WARNING: This chart is deprecated

NAME: gitlab-ce

LAST DEPLOYED: Sat Mar 27 10:51:35 2021

NAMESPACE: devops

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

##############################################################################

This chart has been deprecated in favor of the official GitLab chart:

http://docs.gitlab.com/ce/install/kubernetes/gitlab_omnibus.html

##############################################################################

1. Get your GitLab URL by running:

export NODE_IP=$(kubectl get nodes --namespace devops -o jsonpath="{.items[0].status.addresses[0].address}")

echo http://$NODE_IP/

2. Login as the root user:

Username: root

Password: admin@123

3. Point a DNS entry at your install to ensure that your specified

external URL is reachable:

http://gitlab.xhaihua.com/

[root@master01 gitlab-ce]# kubectl get pods -n devops

NAME READY STATUS RESTARTS AGE

gitlab-ce-gitlab-ce-6788667775-2s8wr 1/1 Running 0 6m20s

gitlab-ce-postgresql-86c64dcbbd-49ch5 1/1 Running 0 6m20s

gitlab-ce-redis-5474c7444-vqlqs 1/1 Running 0 6m20s

jenkins-6b4d45d947-vfkdd 2/2 Running 0 67m

[root@master01 gitlab-ce]# kubectl get svc -n devops

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

gitlab-ce-gitlab-ce NodePort 10.10.147.198 <none> 22:31739/TCP,80:30511/TCP,443:31419/TCP 6m23s

gitlab-ce-postgresql ClusterIP 10.10.171.57 <none> 5432/TCP 6m23s

gitlab-ce-redis ClusterIP 10.10.15.213 <none> 6379/TCP 6m23s

jenkins NodePort 10.10.192.104 <none> 8080:30080/TCP 67m

jenkins-agent ClusterIP 10.10.2.205 <none> 50000/TCP 67m

[root@master01 gitlab-ce]# kubectl get pvc -n devops

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

gitlab-ce-gitlab-ce-data Bound pvc-7e443360-6602-4824-a2ec-081f916b8d31 10Gi RWO nfs-provisioner 6m28s

gitlab-ce-gitlab-ce-etc Bound pvc-9caf856e-855a-4f0d-b581-ccd57fc4c861 1Gi RWO nfs-provisioner 6m28s

gitlab-ce-postgresql Bound pvc-068ff479-4ab7-4af8-9082-8a499c0f8a4b 10Gi RWO nfs-provisioner 6m28s

gitlab-ce-redis Bound pvc-c9bc9c5b-39e2-4be4-aeb9-233b16dbc2cf 10Gi RWO nfs-provisioner 6m28s

jenkins Bound pvc-2adb0d20-6999-4024-ad52-ba99122fb5e1 30Gi RWO nfs-provisioner 67m