Kubernetes Notes: Cluster Setup

References:

权威指南.pdf (Kubernetes: The Definitive Guide)

kubernetes(k8s)课程.pdf (Kubernetes course slides)

Cloud platform setup document

On Docker Desktop

1. Enable and configure Kubernetes in Docker Desktop

Reference links: https://github.com/AliyunContainerService/k8s-for-docker-desktop/tree/v1.18.6

https://github.com/AliyunContainerService/k8s-for-docker-desktop/tree/v1.19.3

Configure Kubernetes

After downloading and extracting the repository, run load_images.ps1 in PowerShell. To verify:

docker images

Optional: switch the Kubernetes context to docker-desktop (in earlier versions the context was named docker-for-desktop):

kubectl config use-context docker-desktop

Verify the Kubernetes cluster status

kubectl cluster-info

kubectl get nodes

Configure the Kubernetes dashboard

kubectl create -f kubernetes-dashboard.yaml

or

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.0-rc5/aio/deploy/recommended.yaml

Check the status of the kubernetes-dashboard application:

kubectl get pod -n kubernetes-dashboard

Start the API Server access proxy:

kubectl proxy

Open the Kubernetes dashboard at the following URL:

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/

Configure the dashboard access token

On Windows, run the following commands together in PowerShell:

$TOKEN=((kubectl -n kube-system describe secret default | Select-String "token:") -split " +")[1]

kubectl config set-credentials docker-for-desktop --token="${TOKEN}"

echo $TOKEN

Copy the printed token and paste it into the token field of the dashboard login page.

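Problem 1: on a new computer, Kubernetes stayed in the "starting" state and never came up. Suspected cause: a version mismatch.

In the files downloaded from the Alibaba Cloud team's GitHub repository, the Kubernetes parameters in the .properties file correspond to 1.18.8, while the Docker Desktop on the new computer ships Kubernetes 1.19.3. The parameters in the .properties file therefore need to be changed. See the figure below for details:

Change 1.18.8 (or whatever version is listed) to 1.19.3.

Change the coredns version to 1.7.0.

Change the etcd version to 3.4.13.

After the change, run .\load_images.ps1 again.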

Minikube approach

Alibaba Cloud

https://github.com/AliyunContainerService/minikube

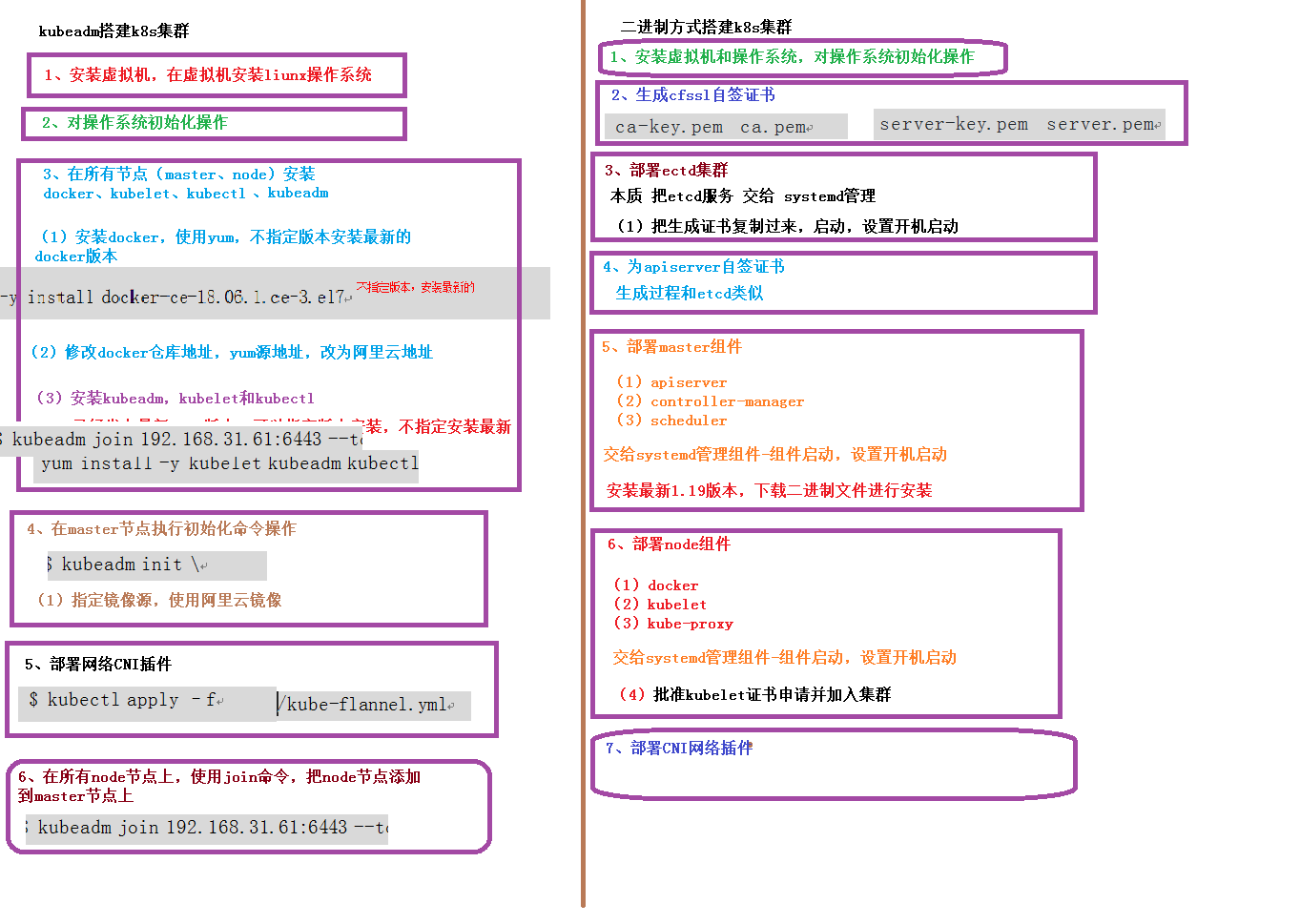

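Setting up with kubeadm

Overview:

The kubelet runs directly on the host rather than in a container; the other components run as containers.

# Create a Master node
$ kubeadm init
# Join a Node to the current cluster
$ kubeadm join <Master node IP and port>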

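Basic installation requirements

- Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more disk

- Network connectivity between all machines in the cluster

Installation goals

(1) Install Docker and kubeadm on all nodes

(2) Deploy the Kubernetes Master

(3) Deploy the container network plugin

(4) Deploy the Kubernetes Nodes and join them to the cluster

(5) Deploy the Dashboard web UI to view Kubernetes resources visually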

System initialization

# Disable the firewall
$ systemctl stop firewalld
$ systemctl disable firewalld
# Disable SELinux
$ sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
$ setenforce 0  # temporary
# Disable swap temporarily
$ swapoff -a
# Disable swap permanently
$ sed -ri 's/.*swap.*/#&/' /etc/fstab
# Or comment out the swap entry by hand
$ vim /etc/fstab

# Set the hostname
$ hostnamectl set-hostname <hostname>
# Add hosts entries on the master
$ cat >> /etc/hosts << EOF
192.168.31.61 k8s-master
192.168.31.62 k8s-node1
192.168.31.63 k8s-node2
EOF
# Pass bridged IPv4 traffic to the iptables chains
$ cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
$ sysctl --system  # apply the settings
# Time synchronization
$ yum install ntpdate -y
$ ntpdate time.windows.com
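The sed command for disabling swap permanently prefixes every line containing "swap" with `#`, including lines that are already commented out. A minimal sketch of a more conservative variant follows; the function name `comment_swap_entries` is hypothetical, and it prints the result instead of editing the file in place so the output can be reviewed first.

```shell
# Sketch: comment out active swap entries in an fstab-style file.
# Reads the file named by $1 and prints the result to stdout;
# the input file itself is not modified.
comment_swap_entries() {
  # Leave lines that are already comments untouched; prefix every other
  # line that mentions "swap" with '#'.
  sed -E '/^[[:space:]]*#/!s/^.*swap.*$/#&/' "$1"
}
```

One possible use is `comment_swap_entries /etc/fstab > /tmp/fstab.new`, followed by a manual comparison before replacing /etc/fstab.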

Install Docker/kubeadm/kubelet on all nodes

Install Docker

$ wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
$ yum -y install docker-ce-18.06.1.ce-3.el7
$ systemctl enable docker && systemctl start docker
$ docker --version

Registry mirror

# The exec-opts entry prevents the kubelet health-check error on port 10248.
# (JSON does not allow comments, so the note is kept outside the file.)
$ cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://tcy950ho.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
$ systemctl daemon-reload
$ systemctl restart docker

Add the yum repository

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

Install kubeadm, kubelet and kubectl

$ yum install -y kubelet kubeadm kubectl
$ systemctl enable kubelet

Deploy the Kubernetes Master

Run on the Master:

$ kubeadm init \
--apiserver-advertise-address=192.168.31.61 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.17.0 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

Install the network plugin (CNI)

(all nodes)

$ wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
$ kubectl apply -f kube-flannel.yml

For a single-node cluster, the setup ends here.

加入 Node

在 (Node)上执行。

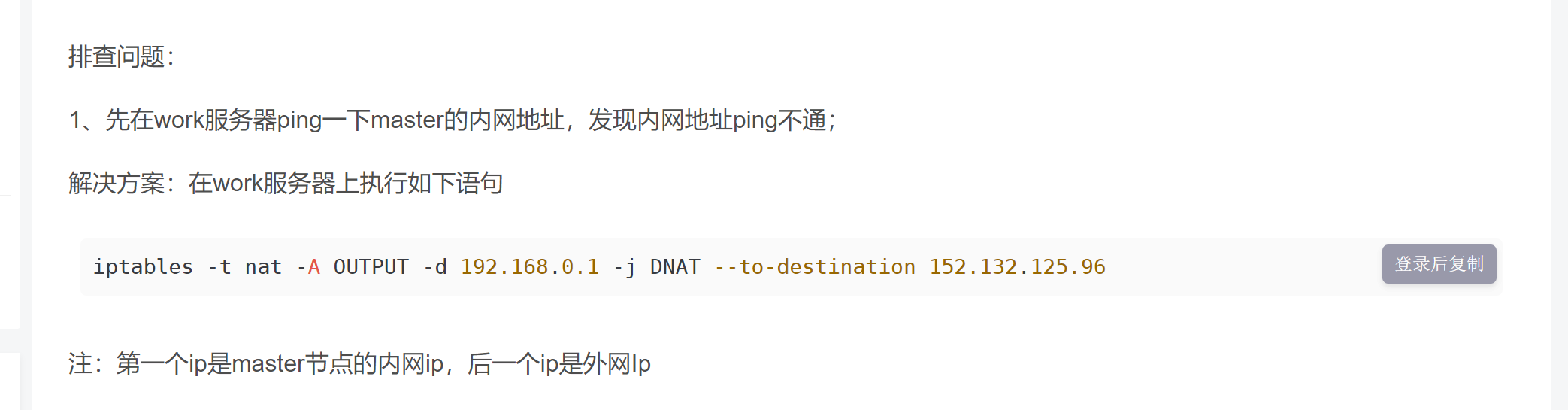

首先确保从Worker节点能ping通 Master节点

例如云服务器中,Worker节点与Master节点不是一个运营商,则需要先在Worker节点执行以下命令iptables -t nat -A OUTPUT -d <master_内网IP> -j DNAT --to-destination <mater_外网IP>

向集群添加新节点,执行上文 kubeadm init 命令中输出的 kubeadm join 命令

$ kubeadm join 192.168.31.61:6443 --token esce21.q6hetwm8si29qxwn \--discovery-token-ca-cert-hashsha256:00603a05805807501d7181c3d60b478788408cfe6cedefedb1f97569708be9c5#如果token过期,可以生成一个$ kubeadm token create

mkdir -p $HOME/.kube#将master节点的配置文件 复制到worker节点scp <master_ip>:/etc/kubernetes/admin.conf /etc/kubernetes/admin.confsudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/configsudo chown $(id -u):$(id -g) $HOME/.kube/config$ kubectl get nodes

Command completion

yum install bash-completion -y
source /usr/share/bash-completion/bash_completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

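kubeadm approach: summary and additional notes

kubeadm generates Pod manifests for the Master components. Kubernetes has a special way of starting containers called a "Static Pod": you place the YAML file of the Pod you want to deploy in a designated directory, and when the kubelet on that machine starts, it automatically scans the directory, loads every Pod YAML file in it, and starts those Pods on the machine.

This also shows how central the kubelet is to the Kubernetes project: by design it is a fully independent component, whereas the other Master components are more like auxiliary system containers.

[root@zm manifests]# cd /etc/kubernetes/manifests/
[root@zm manifests]# ls
etcd.yaml kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml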
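To make the Static Pod mechanism concrete, here is a minimal sketch that writes a Pod manifest into a manifest directory. The function name `write_static_pod`, the Pod name, and the image are hypothetical; the sketch assumes the kubelet watches /etc/kubernetes/manifests, the directory listed above on a kubeadm-built node.

```shell
# Sketch: write a minimal Pod manifest into a static Pod directory.
# $1: manifest directory, $2: Pod name, $3: container image.
write_static_pod() {
  dir=$1
  name=$2
  image=$3
  mkdir -p "$dir"
  # The kubelet picks up any Pod manifest placed in its static Pod path.
  cat > "$dir/$name.yaml" <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: $name
spec:
  containers:
  - name: $name
    image: $image
EOF
}
```

For example, `write_static_pod /etc/kubernetes/manifests static-web nginx` would ask the local kubelet to run that Pod without going through the scheduler; deleting the file stops it again.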

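kubeadm init can also take a configuration file

This is used to customize parameters such as the image repository address.

$ kubeadm init --config kubeadm.yaml

Limitations of kubeadm

At the time the source material was written, kubeadm was not considered production-ready because it lacked high availability. (Is that still true in 2022?)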

Practice: cloud servers

| Hostname | Role | Internal IP | External IP |
|---|---|---|---|
| zm | master | 172.23.178.70 | 47.94.156.242 |
| zm-tencent | worker | 10.0.16.3 | 43.138.23.201 |

Overall, follow the kubeadm setup above.

kubeadm init --apiserver-advertise-address=172.23.178.70 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version 1.23.0 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

Problem:

Cause: a cgroup driver issue. Add the following line to /etc/docker/daemon.json: "exec-opts": ["native.cgroupdriver=systemd"]

Reference: https://www.codetd.com/article/13610139

systemctl daemon-reload
systemctl restart docker
systemctl restart kubelet
kubeadm reset

Then run the kubeadm init command again.

将Worker节点加入集群中

首先确认已经打开安全策略。Worker访问Master的6443端口。

执行

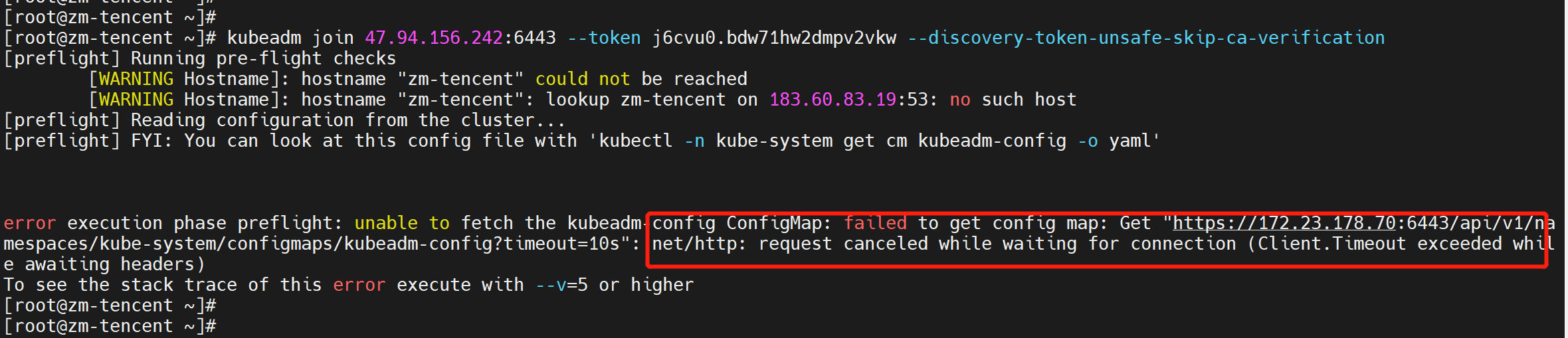

kubeadm join 47.94.156.242:6443 \--token j6cvu0.bdw71hw2dmpv2vkw \--discovery-token-unsafe-skip-ca-verification#当时没保存hash码,,尝试跳过

Error

"/proc/sys/net/ipv4/ip_forward contents are not set to 1"

Cause: IP forwarding is not enabled. (Perhaps Tencent Cloud servers leave it off by default?)

Fix

Reference: https://blog.csdn.net/qq_39346534/article/details/107629830
# The output should be 0
cat /proc/sys/net/ipv4/ip_forward
# Enable IP forwarding
echo "1" > /proc/sys/net/ipv4/ip_forward
# Restart
service network restart
reboot now
# The output should be 1
cat /proc/sys/net/ipv4/ip_forward
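A value written directly to /proc/sys is a runtime setting and is not stored on disk. A persistent alternative is to put `net.ipv4.ip_forward = 1` in a file under /etc/sysctl.d and run `sysctl --system`, the same mechanism used for the bridge settings earlier in these notes. The following sketch only checks the current state; the function name `ip_forward_enabled` is hypothetical, and the optional file argument exists purely so the check can be exercised against a test file.

```shell
# Sketch: succeed (exit status 0) when IP forwarding is enabled.
# $1: file to read; defaults to the live kernel setting.
ip_forward_enabled() {
  file=${1:-/proc/sys/net/ipv4/ip_forward}
  [ "$(cat "$file" 2>/dev/null)" = "1" ]
}
```

A preflight step on each node could then be `ip_forward_enabled || echo "IP forwarding is off"` before running kubeadm join.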

After fixing this, run the join again.

Error

error execution phase preflight: unable to fetch the kubeadm-config ConfigMap: failed to get config map: Get "https://172.23.178.70:6443/api/v1/namespaces/kube-system/configmaps/kubeadm-config?timeout=10s": net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Try joining with a token and a hash instead. Look up the token and generate the hash.

https://www.hangge.com/blog/cache/detail_2418.html
# List tokens
$ kubeadm token list
# If the token has expired, generate a new one
$ kubeadm token create
# Certificate hash
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
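Since the join command is assembled by hand here, a typo in the token or hash is easy to make. Below is a minimal sketch that checks both values before printing the command; the function name `build_join_command` is hypothetical. It relies on the bootstrap token format (six characters, a dot, sixteen characters, all lowercase letters or digits) and on the hash being `sha256:` followed by 64 hex digits. Alternatively, `kubeadm token create --print-join-command` on the master prints a complete join command.

```shell
# Sketch: validate the pieces of a join command and print it.
# $1: API server endpoint (host:port), $2: bootstrap token, $3: CA cert hash.
build_join_command() {
  endpoint=$1
  token=$2
  hash=$3
  # Bootstrap tokens look like abcdef.0123456789abcdef.
  printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
    echo "invalid token format" >&2
    return 1
  }
  # The discovery hash is "sha256:" followed by 64 lowercase hex digits.
  printf '%s\n' "$hash" | grep -Eq '^sha256:[0-9a-f]{64}$' || {
    echo "invalid CA cert hash format" >&2
    return 1
  }
  printf 'kubeadm join %s --token %s --discovery-token-ca-cert-hash %s\n' \
    "$endpoint" "$token" "$hash"
}
```

Passing the bare hex string printed by the openssl pipeline fails the check, which is a reminder to add the `sha256:` prefix.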

执行

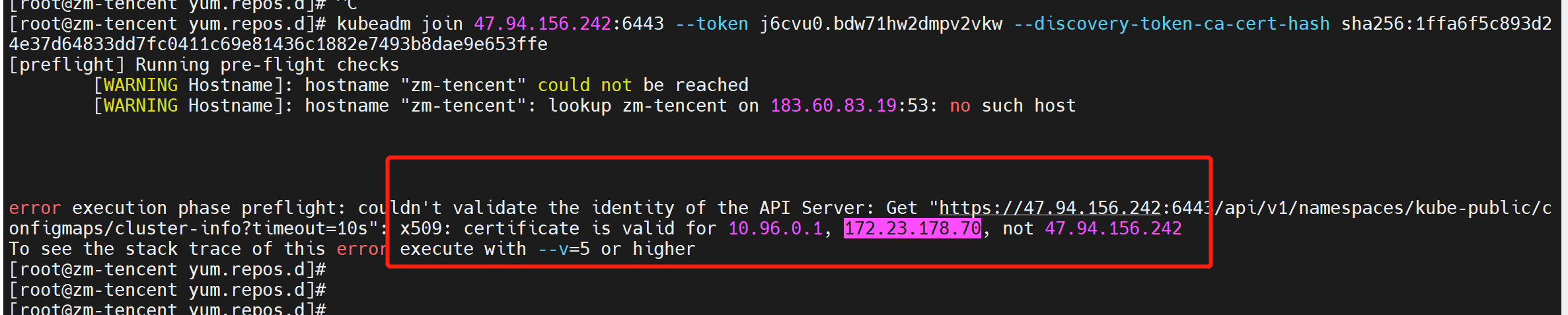

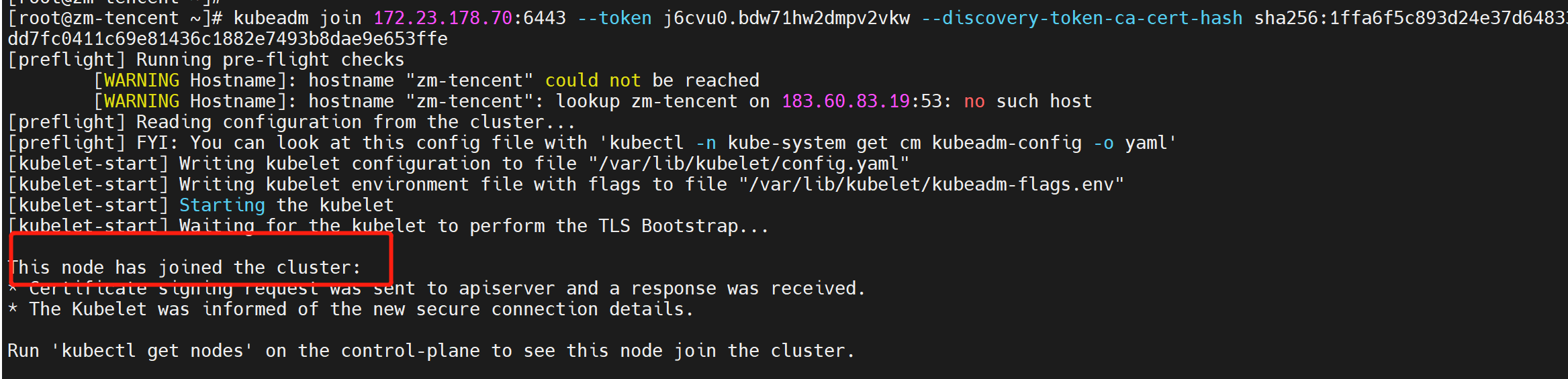

$ kubeadm join 47.94.156.242:6443 \--token j6cvu0.bdw71hw2dmpv2vkw \--discovery-token-ca-cert-hash sha256:1ffa6f5c893d24e37d64833dd7fc0411c69e81436c1882e7493b8dae9e653ffe

Another error:

As the message suggests, switch to the master's internal IP.

$ kubeadm join 172.23.178.70:6443 \
--token j6cvu0.bdw71hw2dmpv2vkw \
--discovery-token-ca-cert-hash sha256:1ffa6f5c893d24e37d64833dd7fc0411c69e81436c1882e7493b8dae9e653ffe

Error: the request timed out.

Analysis: the Tencent Cloud server (worker) cannot reach the internal IP address of the Alibaba Cloud server (master).

Solution:

https://blog.csdn.net/qq_33996921/article/details/103529312

# Master node internal IP: 172.23.178.70, external IP: 47.94.156.242
$ iptables -t nat -A OUTPUT -d 172.23.178.70 -j DNAT --to-destination 47.94.156.242
# A reset is needed first
$ kubeadm reset
# Run the join again
$ kubeadm join 172.23.178.70:6443 \
--token j6cvu0.bdw71hw2dmpv2vkw \
--discovery-token-ca-cert-hash sha256:1ffa6f5c893d24e37d64833dd7fc0411c69e81436c1882e7493b8dae9e653ffe

Success!

The connection to the server localhost:8080 was refused

https://blog.csdn.net/leenhem/article/details/119736586

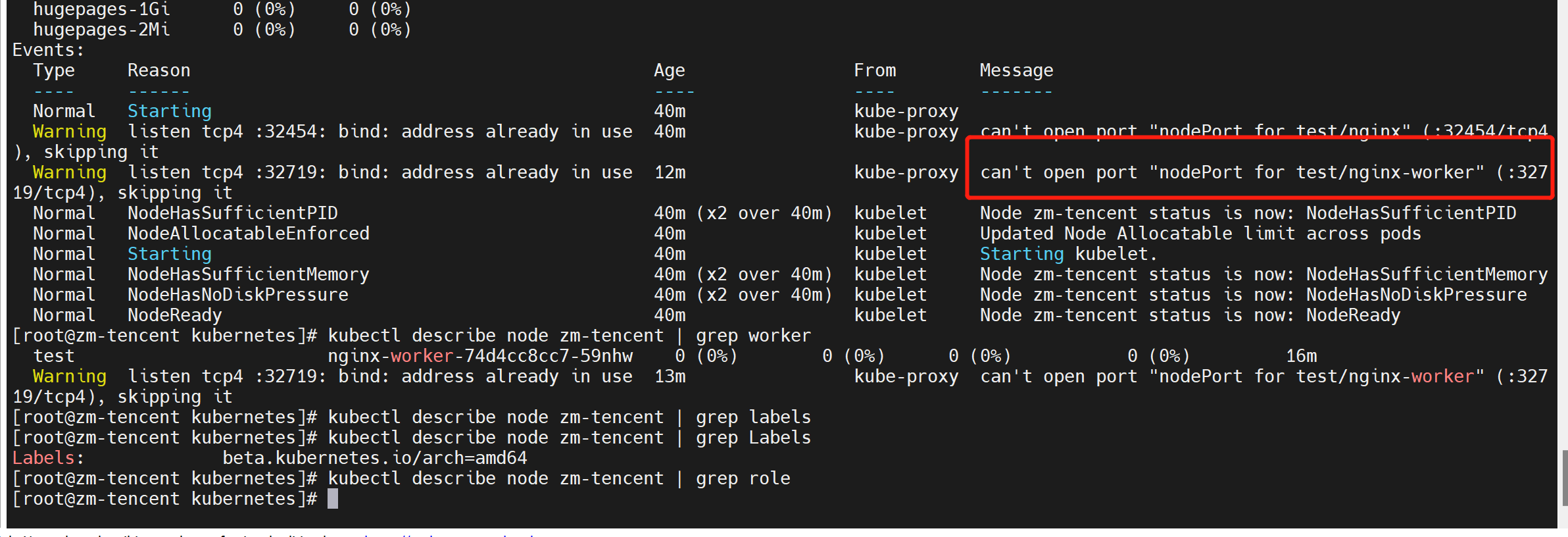

遗留问题:

腾讯云服务器,访问nodeport服务报错连接拒绝。怀疑是防火墙、安全组问题

网络问题??

无法访问

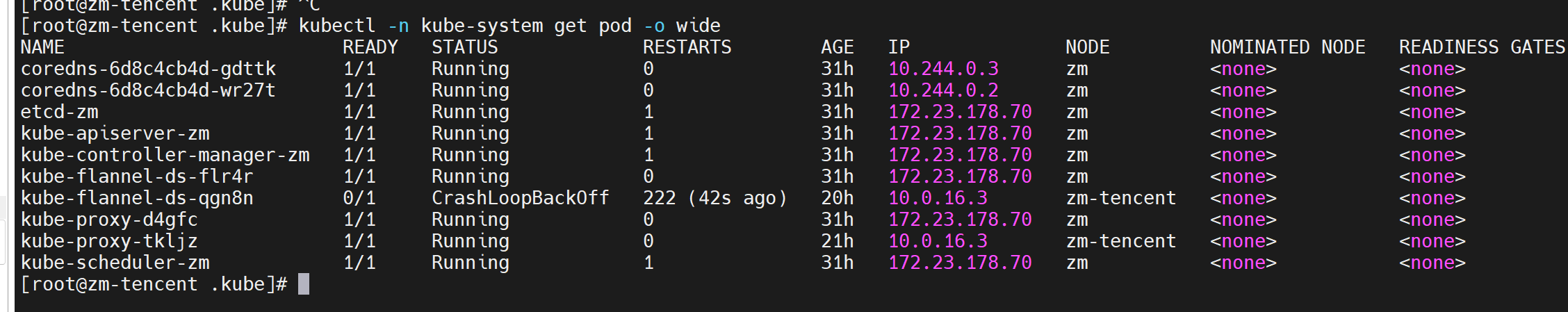

节点上的pod启动不起来。

腾讯云节点上的flannel一直在重启。

腾讯云节点上的flannel的日志:

[root@zm ~]# kubectl -n kube-system logs kube-flannel-ds-qgn8n -f
Error from server: Get "https://10.0.16.3:10250/containerLogs/kube-system/kube-flannel-ds-qgn8n/kube-flannel?follow=true": dial tcp 10.0.16.3:10250: i/o timeout

Adding a security group rule resolved the error on port 10250.

The relief did not last: flannel immediately hit another error.

[root@zm ~]# kubectl -n kube-system logs kube-flannel-ds-ljfcz -f
I0416 16:11:48.865888 1 main.go:205] CLI flags config: {etcdEndpoints:http://127.0.0.1:4001,http://127.0.0.1:2379 etcdPrefix:/coreos.com/network etcdKeyfile: etcdCertfile: etcdCAFile: etcdUsername: etcdPassword: version:false kubeSubnetMgr:true kubeApiUrl: kubeAnnotationPrefix:flannel.alpha.coreos.com kubeConfigFile: iface:[] ifaceRegex:[] ipMasq:true subnetFile:/run/flannel/subnet.env publicIP: publicIPv6: subnetLeaseRenewMargin:60 healthzIP:0.0.0.0 healthzPort:0 iptablesResyncSeconds:5 iptablesForwardRules:true netConfPath:/etc/kube-flannel/net-conf.json setNodeNetworkUnavailable:true}
W0416 16:11:48.866035 1 client_config.go:614] Neither --kubeconfig nor --master was specified. Using the inClusterConfig. This might not work.
E0416 16:12:18.868370 1 main.go:222] Failed to create SubnetManager: error retrieving pod spec for 'kube-system/kube-flannel-ds-ljfcz': Get "https://10.96.0.1:443/api/v1/namespaces/kube-system/pods/kube-flannel-ds-ljfcz": dial tcp 10.96.0.1:443: i/o timeout

Question:

What is 10.96.0.1? Do some IP ranges overlap?
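On the first question: 10.96.0.1 is the first address of the service CIDR passed to kubeadm init (`--service-cidr=10.96.0.0/12`). Kubernetes assigns it to the `kubernetes` Service in the default namespace, which is the virtual IP Pods use to reach the API server. The /12 range spans 10.96.0.0 to 10.111.255.255, so it overlaps neither the Pod CIDR 10.244.0.0/16 nor the node addresses in the table above; the timeout therefore more likely points to traffic from the worker not reaching the master. The sketch below computes the first address of a CIDR with plain shell arithmetic; the function name `first_service_ip` is hypothetical.

```shell
# Sketch: print the first usable address of an IPv4 CIDR,
# e.g. the ClusterIP that the "kubernetes" Service receives.
# The body runs in a subshell so IFS and the positional parameters stay local.
first_service_ip() (
  ip=${1%/*}
  prefix=${1#*/}
  IFS=.
  set -- $ip
  # Pack the four octets into one 32-bit number.
  n=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  # Clear the host bits, then add one to get the first usable address.
  mask=$(( (4294967295 << (32 - prefix)) & 4294967295 ))
  first=$(( (n & mask) + 1 ))
  printf '%d.%d.%d.%d\n' \
    $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
    $(( (first >> 8) & 255 )) $(( first & 255 ))
)
```

With the values used in these notes, `first_service_ip 10.96.0.0/12` prints 10.96.0.1, and `first_service_ip 10.0.0.0/24` prints 10.0.0.1, which is why 10.0.0.1 appears in the API server certificate hosts in the binary installation.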

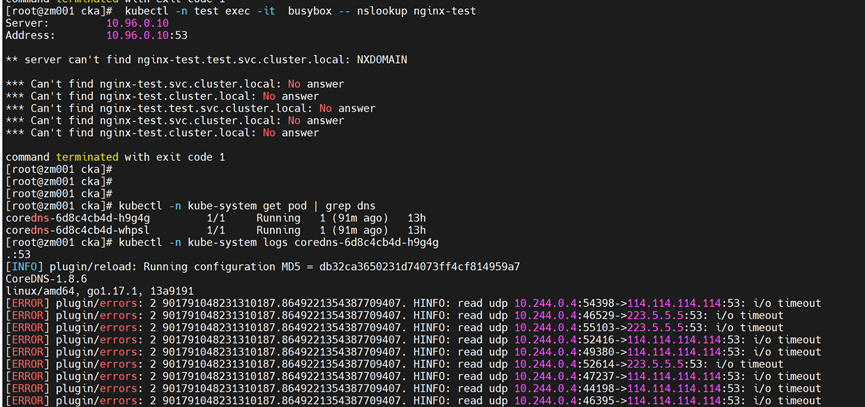

DNS has problems too

[root@zm001 cka]# kubectl -n test exec -it busybox -- nslookup nginx-test
Server: 10.96.0.10
Address: 10.96.0.10:53
** server can't find nginx-test.test.svc.cluster.local: NXDOMAIN
*** Can't find nginx-test.svc.cluster.local: No answer
*** Can't find nginx-test.cluster.local: No answer
*** Can't find nginx-test.test.svc.cluster.local: No answer
*** Can't find nginx-test.svc.cluster.local: No answer
*** Can't find nginx-test.cluster.local: No answer
command terminated with exit code 1

[root@zm001 cka]# kubectl -n kube-system get pod | grep dns
coredns-6d8c4cb4d-h9g4g 1/1 Running 1 (91m ago) 13h
coredns-6d8c4cb4d-whpsl 1/1 Running 1 (91m ago) 13h
[root@zm001 cka]# kubectl -n kube-system logs coredns-6d8c4cb4d-h9g4g
.:53
[INFO] plugin/reload: Running configuration MD5 = db32ca3650231d74073ff4cf814959a7
CoreDNS-1.8.6
linux/amd64, go1.17.1, 13a9191
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:54398->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:46529->223.5.5.5:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:55103->223.5.5.5:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:52416->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:49380->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:52614->223.5.5.5:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:47237->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:44198->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:46395->114.114.114.114:53: i/o timeout
[ERROR] plugin/errors: 2 901791048231310187.8649221354387709407. HINFO: read udp 10.244.0.4:47019->223.5.5.5:53: i/o timeout

Practice: VMware virtual machines

Overall, follow the kubeadm setup above.

| Hostname | Role | Internal IP |
|---|---|---|
| zm001 | master | 192.168.78.100 |
| zm002 | worker | 192.168.78.101 |

kubeadm init --apiserver-advertise-address=192.168.78.100 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version 1.23.0 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

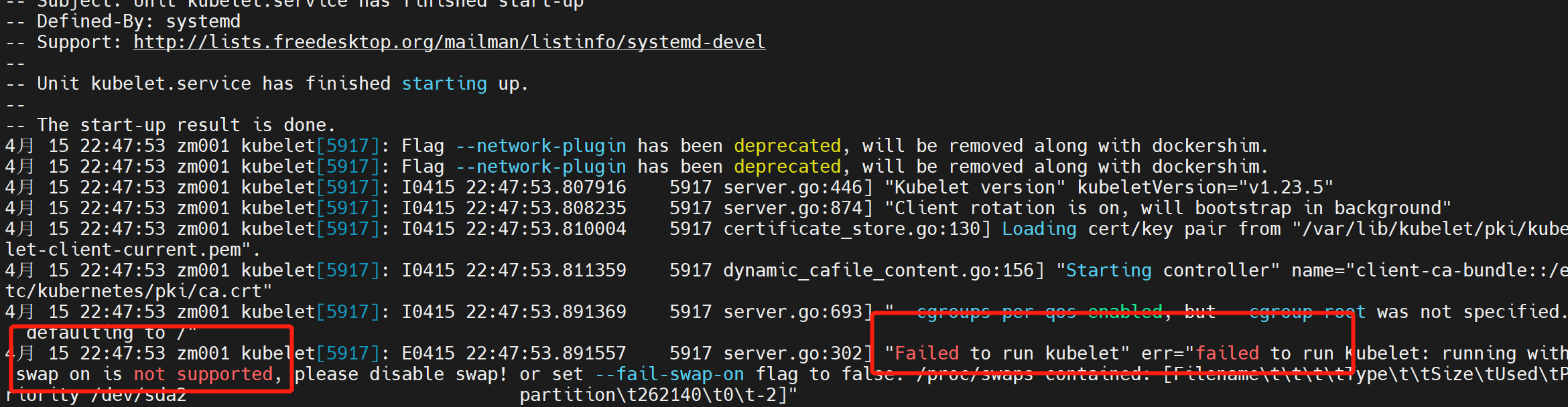

问题:

报错。查看kubelet日志journalctl -xefu kubelet发现报错swap分区的问题

关闭swap分区

https://blog.csdn.net/u013288190/article/details/109028126swapoff -a$ sed -ri 's/.*swap.*/#&/' /etc/fstab

查看kubelet日志,继续报错。

可能需要重启机器,于是执行reboot now

重启后,kubelet状态变为active

kubeadm reset

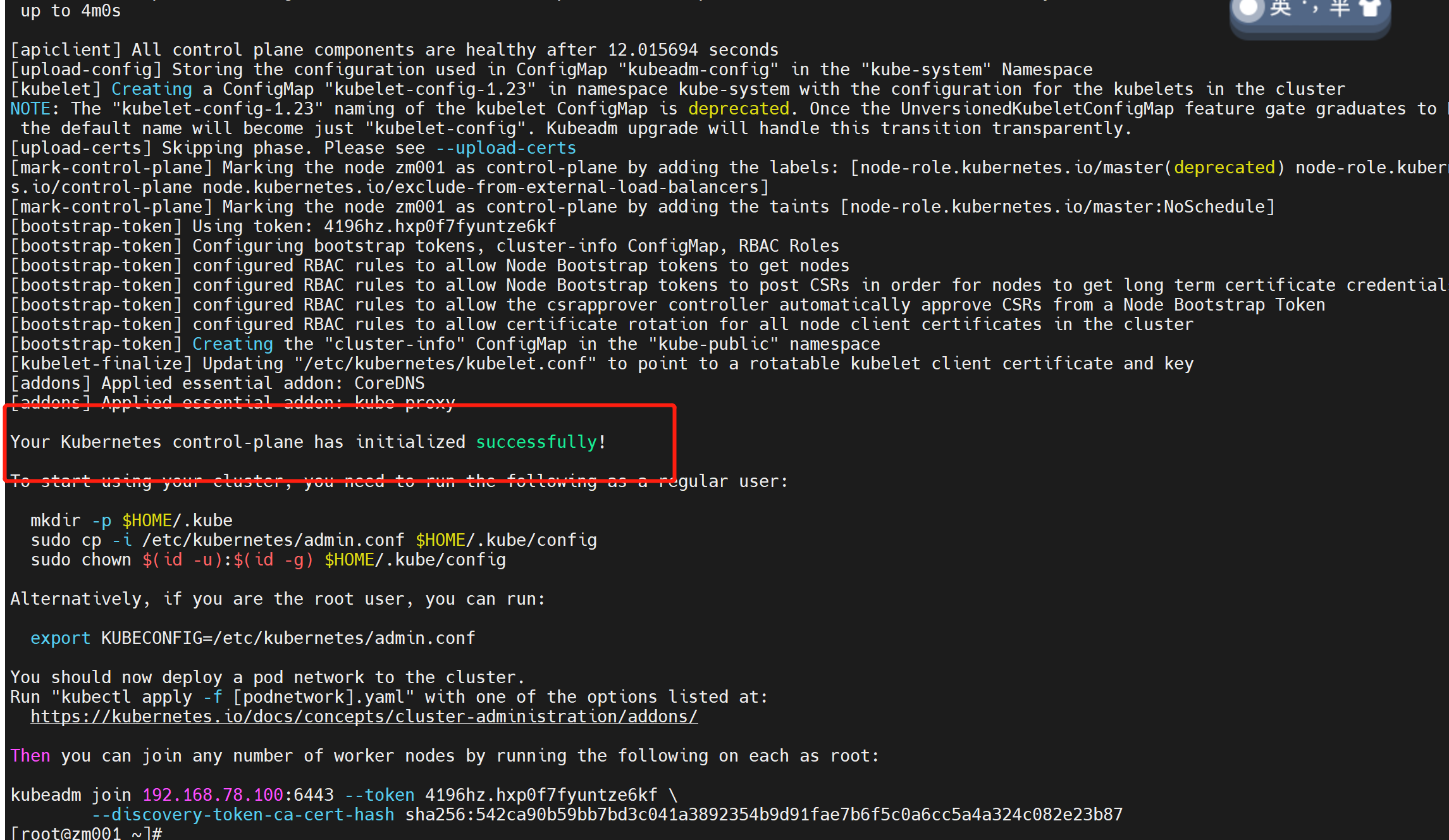

再重新执行kubeadm init命令

成功!!

Intermediate steps omitted.

kubeadm join 192.168.78.100:6443 --token 4196hz.hxp0f7fyuntze6kf \
--discovery-token-ca-cert-hash sha256:542ca90b59bb7bd3c041a3892354b9d91fae7b6f5c0a6cc5a4a324c082e23b87

This failed as well. The cause is that the worker node also has the swap partition problem.

Handle it as described above, then run kubeadm reset:

[root@zm002 ~]# kubeadm reset
[reset] WARNING: Changes made to this host by 'kubeadm init' or 'kubeadm join' will be reverted.
[reset] Are you sure you want to proceed? [y/N]: y
[preflight] Running pre-flight checks
W0415 23:12:23.645534 8310 removeetcdmember.go:80] [reset] No kubeadm config, using etcd pod spec to get data directory
[reset] No etcd config found. Assuming external etcd
[reset] Please, manually reset etcd to prevent further issues
[reset] Stopping the kubelet service
[reset] Unmounting mounted directories in "/var/lib/kubelet"
[reset] Deleting contents of config directories: [/etc/kubernetes/manifests /etc/kubernetes/pki]
[reset] Deleting files: [/etc/kubernetes/admin.conf /etc/kubernetes/kubelet.conf /etc/kubernetes/bootstrap-kubelet.conf /etc/kubernetes/controller-manager.conf /etc/kubernetes/scheduler.conf]
[reset] Deleting contents of stateful directories: [/var/lib/kubelet /var/lib/dockershim /var/run/kubernetes /var/lib/cni]
The reset process does not clean CNI configuration. To do so, you must remove /etc/cni/net.d
The reset process does not reset or clean up iptables rules or IPVS tables.
If you wish to reset iptables, you must do so manually by using the "iptables" command.
If your cluster was setup to utilize IPVS, run ipvsadm --clear (or similar)
to reset your system's IPVS tables.
The reset process does not clean your kubeconfig files and you must remove them manually.
Please, check the contents of the $HOME/.kube/config file.

Run kubeadm join again. This time it succeeds.

[root@zm002 ~]# kubeadm join 192.168.78.100:6443 --token 4196hz.hxp0f7fyuntze6kf --discovery-token-ca-cert-hash sha256:542ca90b59bb7bd3c041a3892354b9d91fae7b6f5c0a6cc5a4a324c082e23b87
[preflight] Running pre-flight checks
[WARNING Hostname]: hostname "zm002" could not be reached
[WARNING Hostname]: hostname "zm002": lookup zm002 on 114.114.114.114:53: no such host
[preflight] Reading configuration from the cluster...
[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'
[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
[kubelet-start] Starting the kubelet
[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...
This node has joined the cluster:
* Certificate signing request was sent to apiserver and a response was received.
* The Kubelet was informed of the new secure connection details.
Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

8080 refused

# Run on the master
scp /etc/kubernetes/admin.conf 192.168.78.101:/etc/kubernetes/admin.conf
# Run on the worker
export KUBECONFIG=/etc/kubernetes/admin.conf
echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> ~/.bash_profile
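The "localhost:8080 was refused" message appears when kubectl finds no kubeconfig and falls back to its default address. kubectl reads the file named by the KUBECONFIG environment variable if it is set, and $HOME/.kube/config otherwise. The following sketch shows that lookup order; the function names are hypothetical, and it assumes KUBECONFIG holds a single path rather than a colon-separated list.

```shell
# Sketch: print the kubeconfig path kubectl would consult.
# Assumes KUBECONFIG, when set, names exactly one file.
effective_kubeconfig() {
  if [ -n "${KUBECONFIG:-}" ]; then
    printf '%s\n' "$KUBECONFIG"
  else
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Succeeds when that file is missing or unreadable, which is the
# situation that leads to the localhost:8080 fallback.
kubeconfig_missing() {
  [ ! -r "$(effective_kubeconfig)" ]
}
```

Running `kubeconfig_missing && echo "no kubeconfig at $(effective_kubeconfig)"` on the worker is a quick way to confirm that this is the cause before copying admin.conf over.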

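Binary installation

Installation requirements

Before starting, the machines for the Kubernetes cluster must meet the following conditions:

(1) One or more machines running CentOS 7.x x86_64

(2) Hardware: 2 GB or more RAM, 2 or more CPUs, 30 GB or more disk

(3) Network connectivity between all machines in the cluster

(4) Internet access for pulling images; if a server cannot reach the Internet, download the images in advance and import them on the node

(5) Swap disabled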

Environment plan

| Software | Version |
|---|---|
| Operating system | CentOS7.8_x64 (mini) |
| Docker | 19-ce |
| Kubernetes | 1.19 |

| Role | IP | Components |
|---|---|---|
| k8s-master | | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| k8s-node1 | | kubelet, kube-proxy, docker, etcd |
| k8s-node2 | | kubelet, kube-proxy, docker, etcd |

Operating system initialization

# Disable the firewall
systemctl stop firewalld
systemctl disable firewalld
# Disable SELinux
sed -i 's/enforcing/disabled/' /etc/selinux/config  # permanent
setenforce 0  # temporary
# Disable swap
swapoff -a  # temporary
sed -ri 's/.*swap.*/#&/' /etc/fstab  # permanent
# Set the hostname according to the plan
hostnamectl set-hostname <hostname>
# Add hosts entries on the master
cat >> /etc/hosts << EOF
192.168.44.147 m1
192.168.44.148 n1
EOF
# Pass bridged IPv4 traffic to the iptables chains
cat > /etc/sysctl.d/k8s.conf << EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system  # apply the settings
# Time synchronization
yum install ntpdate -y
ntpdate time.windows.com

Deploy the etcd cluster

Prepare the cfssl certificate tool

cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than openssl.

Any server will do for this step; the Master node is used here.

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/bin/cfssl-certinfo

Generate the etcd certificates

Self-signed certificate authority (CA)

mkdir -p ~/TLS/{etcd,k8s}
cd ~/TLS/etcd
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
cat > ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

Generate the certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
ls *pem
# Output:
ca-key.pem ca.pem

Issue the etcd HTTPS certificate with the self-signed CA

Create the certificate signing request file:

cat > server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "192.168.31.71",
    "192.168.31.72",
    "192.168.31.73"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}
EOF

Generate the certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=www server-csr.json | cfssljson -bare server
ls server*pem
# Output:
server-key.pem server.pem

Deploy the etcd cluster

Download the binaries from GitHub

Download URL: https://github.com/etcd-io/etcd/releases/download/v3.4.9/etcd-v3.4.9-linux-amd64.tar.gz

The following steps run on node 1. To keep things simple, all files generated on node 1 are copied to node 2 and node 3 afterwards.

# Create the working directories and extract the binary package
mkdir -p /opt/etcd/{bin,cfg,ssl}
tar zxvf etcd-v3.4.9-linux-amd64.tar.gz
mv etcd-v3.4.9-linux-amd64/{etcd,etcdctl} /opt/etcd/bin/
# Create the etcd configuration file
cat > /opt/etcd/cfg/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.31.71:2380"
ETCD_LISTEN_CLIENT_URLS="https://192.168.31.71:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.31.71:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.31.71:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.31.71:2380,etcd-2=https://192.168.31.72:2380,etcd-3=https://192.168.31.73:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF

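ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for cluster (peer) communication

ETCD_LISTEN_CLIENT_URLS: listen address for client access

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of the cluster nodes

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining the cluster; new means a new cluster, existing means joining an existing cluster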

# Manage etcd with systemd
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/cfg/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
--cert-file=/opt/etcd/ssl/server.pem \
--key-file=/opt/etcd/ssl/server-key.pem \
--peer-cert-file=/opt/etcd/ssl/server.pem \
--peer-key-file=/opt/etcd/ssl/server-key.pem \
--trusted-ca-file=/opt/etcd/ssl/ca.pem \
--peer-trusted-ca-file=/opt/etcd/ssl/ca.pem \
--logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF

# Copy the certificates generated earlier
cp ~/TLS/etcd/ca*pem ~/TLS/etcd/server*pem /opt/etcd/ssl/
# Start etcd and enable it at boot
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
# Copy all files generated on node 1 to node 2 and node 3
scp -r /opt/etcd/ root@192.168.31.72:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.31.72:/usr/lib/systemd/system/
scp -r /opt/etcd/ root@192.168.31.73:/opt/
scp /usr/lib/systemd/system/etcd.service root@192.168.31.73:/usr/lib/systemd/system/

# Then, on node 2 and node 3, change the node name and the current server IP in etcd.conf:
vi /opt/etcd/cfg/etcd.conf
#[Member]
ETCD_NAME="etcd-1"  # change this: etcd-2 on node 2, etcd-3 on node 3
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://192.168.31.71:2380"  # change to the current server IP
ETCD_LISTEN_CLIENT_URLS="https://192.168.31.71:2379"  # change to the current server IP
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.31.71:2380"  # change to the current server IP
ETCD_ADVERTISE_CLIENT_URLS="https://192.168.31.71:2379"  # change to the current server IP
ETCD_INITIAL_CLUSTER="etcd-1=https://192.168.31.71:2380,etcd-2=https://192.168.31.72:2380,etcd-3=https://192.168.31.73:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
# Finally, start etcd and enable it at boot, as above.
systemctl daemon-reload
systemctl start etcd
systemctl enable etcd
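Editing etcd.conf by hand on each node is easy to get wrong, since the node name and four URLs must all change together. As an alternative, here is a minimal sketch that renders the file from three parameters; the function name `render_etcd_conf` is hypothetical, and the variable names and ports are the ones used in the configuration above.

```shell
# Sketch: print an etcd.conf for one cluster member.
# $1: member name, $2: this node's IP, $3: full ETCD_INITIAL_CLUSTER value.
render_etcd_conf() {
  name=$1
  ip=$2
  cluster=$3
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="${cluster}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

On node 2, for instance, the output of `render_etcd_conf etcd-2 192.168.31.72 "etcd-1=https://192.168.31.71:2380,etcd-2=https://192.168.31.72:2380,etcd-3=https://192.168.31.73:2380"` could be redirected to /opt/etcd/cfg/etcd.conf.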

Install Docker

Download URL: https://download.docker.com/linux/static/stable/x86_64/docker-19.03.9.tgz

Run the following on all nodes. The binary installation is used here; installing with yum works just as well.

# Extract the binary package
tar zxvf docker-19.03.9.tgz
mv docker/* /usr/bin
# Manage docker with systemd
# (\$MAINPID is escaped so the shell does not expand it inside the heredoc)
cat > /usr/lib/systemd/system/docker.service << EOF
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target firewalld.service
Wants=network-online.target

[Service]
Type=notify
ExecStart=/usr/bin/dockerd
ExecReload=/bin/kill -s HUP \$MAINPID
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TimeoutStartSec=0
Delegate=yes
KillMode=process
Restart=on-failure
StartLimitBurst=3
StartLimitInterval=60s

[Install]
WantedBy=multi-user.target
EOF
# Create the configuration file
mkdir /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "registry-mirrors": ["https://b9pmyelo.mirror.aliyuncs.com"]
}
EOF
# Start docker and enable it at boot
systemctl daemon-reload
systemctl start docker
systemctl enable docker

Deploy the Master Node

Generate the kube-apiserver certificate

# Self-signed certificate authority (CA); work in the k8s directory so the etcd CA is not overwritten
cd ~/TLS/k8s
cat > ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF
cat > ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -initca ca-csr.json | cfssljson -bare ca -
ls *pem
# Output:
ca-key.pem ca.pem

# Issue the kube-apiserver HTTPS certificate with the self-signed CA
cat > server-csr.json << EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "192.168.31.71",
    "192.168.31.72",
    "192.168.31.73",
    "192.168.31.74",
    "192.168.31.81",
    "192.168.31.82",
    "192.168.31.88",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server
ls server*pem
# Output:
server-key.pem server.pem

Deploy kube-apiserver

Download the files

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG/CHANGELOG-1.18.md#v1183

# Extract the binary package
mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}
tar zxvf kubernetes-server-linux-amd64.tar.gz
cd kubernetes/server/bin
cp kube-apiserver kube-scheduler kube-controller-manager /opt/kubernetes/bin
cp kubectl /usr/bin/
# Deploy kube-apiserver
cat > /opt/kubernetes/cfg/kube-apiserver.conf << EOF
KUBE_APISERVER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--etcd-servers=https://192.168.31.71:2379,https://192.168.31.72:2379,https://192.168.31.73:2379 \\
--bind-address=192.168.31.71 \\
--secure-port=6443 \\
--advertise-address=192.168.31.71 \\
--allow-privileged=true \\
--service-cluster-ip-range=10.0.0.0/24 \\
--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \\
--authorization-mode=RBAC,Node \\
--enable-bootstrap-token-auth=true \\
--token-auth-file=/opt/kubernetes/cfg/token.csv \\
--service-node-port-range=30000-32767 \\
--kubelet-client-certificate=/opt/kubernetes/ssl/server.pem \\
--kubelet-client-key=/opt/kubernetes/ssl/server-key.pem \\
--tls-cert-file=/opt/kubernetes/ssl/server.pem \\
--tls-private-key-file=/opt/kubernetes/ssl/server-key.pem \\
--client-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--etcd-cafile=/opt/etcd/ssl/ca.pem \\
--etcd-certfile=/opt/etcd/ssl/server.pem \\
--etcd-keyfile=/opt/etcd/ssl/server-key.pem \\
--audit-log-maxage=30 \\
--audit-log-maxbackup=3 \\
--audit-log-maxsize=100 \\
--audit-log-path=/opt/kubernetes/logs/k8s-audit.log"
EOF
# Copy the certificates
cp ~/TLS/k8s/ca*pem ~/TLS/k8s/server*pem /opt/kubernetes/ssl/

Enable TLS Bootstrapping

# Create the token file referenced in the configuration above
cat > /opt/kubernetes/cfg/token.csv << EOF
c47ffb939f5ca36231d9e3121a252940,kubelet-bootstrap,10001,"system:node-bootstrapper"
EOF
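The token above is copied from the source material, so a cluster that is meant to last should use its own value. A minimal sketch for generating one follows; the function names are hypothetical. Each line of the static token file has the form token, user name, user ID, and a quoted group list, as in the entry above.

```shell
# Sketch: generate a random 32-character hex token (16 random bytes).
new_bootstrap_token() {
  head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print a token.csv line for the kubelet-bootstrap user, using the
# same user name, UID and group as the entry in these notes.
token_csv_line() {
  printf '%s,kubelet-bootstrap,10001,"system:node-bootstrapper"\n' "$1"
}
```

For example, `token_csv_line "$(new_bootstrap_token)" > /opt/kubernetes/cfg/token.csv` writes a fresh entry; the same token must then be used when generating bootstrap.kubeconfig for the kubelet.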

# Manage kube-apiserver with systemd
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-apiserver.conf
ExecStart=/opt/kubernetes/bin/kube-apiserver \$KUBE_APISERVER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF

# Start kube-apiserver and enable it at boot
systemctl daemon-reload
systemctl start kube-apiserver
systemctl enable kube-apiserver

# Authorize the kubelet-bootstrap user to request certificates
kubectl create clusterrolebinding kubelet-bootstrap \
--clusterrole=system:node-bootstrapper \
--user=kubelet-bootstrap

Deploy kube-controller-manager

# Create the configuration file
cat > /opt/kubernetes/cfg/kube-controller-manager.conf << EOF
KUBE_CONTROLLER_MANAGER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect=true \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1 \\
--allocate-node-cidrs=true \\
--cluster-cidr=10.244.0.0/16 \\
--service-cluster-ip-range=10.0.0.0/24 \\
--cluster-signing-cert-file=/opt/kubernetes/ssl/ca.pem \\
--cluster-signing-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--root-ca-file=/opt/kubernetes/ssl/ca.pem \\
--service-account-private-key-file=/opt/kubernetes/ssl/ca-key.pem \\
--experimental-cluster-signing-duration=87600h0m0s"
EOF
# Manage kube-controller-manager with systemd
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-controller-manager.conf
ExecStart=/opt/kubernetes/bin/kube-controller-manager \$KUBE_CONTROLLER_MANAGER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
# Start kube-controller-manager and enable it at boot
systemctl daemon-reload
systemctl start kube-controller-manager
systemctl enable kube-controller-manager

Deploy kube-scheduler

# Create the configuration file
cat > /opt/kubernetes/cfg/kube-scheduler.conf << EOF
KUBE_SCHEDULER_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--leader-elect \\
--master=127.0.0.1:8080 \\
--bind-address=127.0.0.1"
EOF
# Manage kube-scheduler with systemd
cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-scheduler.conf
ExecStart=/opt/kubernetes/bin/kube-scheduler \$KUBE_SCHEDULER_OPTS
Restart=on-failure

[Install]
WantedBy=multi-user.target
EOF
# Start kube-scheduler and enable it at boot
systemctl daemon-reload
systemctl start kube-scheduler
systemctl enable kube-scheduler
# Check the cluster status
kubectl get cs

Deploy the Worker Node

Create the working directories on every worker node:

mkdir -p /opt/kubernetes/{bin,cfg,ssl,logs}

Copy the binaries from the master node:

cd kubernetes/server/bin
cp kubelet kube-proxy /opt/kubernetes/bin  # local copy

Deploy kubelet

# Create the configuration file
cat > /opt/kubernetes/cfg/kubelet.conf << EOF
KUBELET_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--hostname-override=k8s-master \\
--network-plugin=cni \\
--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \\
--bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \\
--config=/opt/kubernetes/cfg/kubelet-config.yml \\
--cert-dir=/opt/kubernetes/ssl \\
--pod-infra-container-image=lizhenliang/pause-amd64:3.0"
EOF
# Parameter file
cat > /opt/kubernetes/cfg/kubelet-config.yml << EOF
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
cgroupDriver: cgroupfs
clusterDNS:
- 10.0.0.2
clusterDomain: cluster.local
failSwapOn: false
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
maxOpenFiles: 1000000
maxPods: 110
EOF
# Generate the bootstrap.kubeconfig file
KUBE_APISERVER="https://192.168.31.71:6443"  # apiserver IP:PORT
TOKEN="c47ffb939f5ca36231d9e3121a252940"  # must match token.csv
# Generate the kubelet bootstrap kubeconfig
kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=bootstrap.kubeconfig
kubectl config set-credentials "kubelet-bootstrap" \
--token=${TOKEN} \
--kubeconfig=bootstrap.kubeconfig
kubectl config set-context default \
--cluster=kubernetes \
--user="kubelet-bootstrap" \
--kubeconfig=bootstrap.kubeconfig
kubectl config use-context default --kubeconfig=bootstrap.kubeconfig
# Copy it to the configuration directory
cp bootstrap.kubeconfig /opt/kubernetes/cfg
# Manage kubelet with systemd
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
After=docker.service

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kubelet.conf
ExecStart=/opt/kubernetes/bin/kubelet \$KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
# Start kubelet and enable it at boot
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet

Approve the kubelet certificate request and join the cluster

# List kubelet certificate requests
kubectl get csr
# Approve the request
kubectl certificate approve node-csr-uCEGPOIiDdlLODKts8J658HrFq9CZ--K6M4G7bjhk8A
# List the nodes
kubectl get node

Deploy kube-proxy

# Create the configuration file
cat > /opt/kubernetes/cfg/kube-proxy.conf << EOF
KUBE_PROXY_OPTS="--logtostderr=false \\
--v=2 \\
--log-dir=/opt/kubernetes/logs \\
--config=/opt/kubernetes/cfg/kube-proxy-config.yml"
EOF
# Parameter file
cat > /opt/kubernetes/cfg/kube-proxy-config.yml << EOF
kind: KubeProxyConfiguration
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
metricsBindAddress: 0.0.0.0:10249
clientConnection:
  kubeconfig: /opt/kubernetes/cfg/kube-proxy.kubeconfig
hostnameOverride: k8s-master
clusterCIDR: 10.0.0.0/24
EOF
# Generate the kube-proxy.kubeconfig file
# Switch to the working directory
cd ~/TLS/k8s
# Create the certificate signing request file
cat > kube-proxy-csr.json << EOF
{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy
ls kube-proxy*pem
# Output:
kube-proxy-key.pem kube-proxy.pem
# Generate the kubeconfig file
KUBE_APISERVER="https://192.168.31.71:6443"
kubectl config set-cluster kubernetes \
--certificate-authority=/opt/kubernetes/ssl/ca.pem \
--embed-certs=true \
--server=${KUBE_APISERVER} \
--kubeconfig=kube-proxy.kubeconfig
kubectl config set-credentials kube-proxy \
--client-certificate=./kube-proxy.pem \
--client-key=./kube-proxy-key.pem \
--embed-certs=true \
--kubeconfig=kube-proxy.kubeconfig
kubectl config set-context default \
--cluster=kubernetes \
--user=kube-proxy \
--kubeconfig=kube-proxy.kubeconfig
kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig
# Copy it to the path named in the configuration file
cp kube-proxy.kubeconfig /opt/kubernetes/cfg/
# Manage kube-proxy with systemd
cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
After=network.target

[Service]
EnvironmentFile=/opt/kubernetes/cfg/kube-proxy.conf
ExecStart=/opt/kubernetes/bin/kube-proxy \$KUBE_PROXY_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
# Start kube-proxy and enable it at boot
systemctl daemon-reload
systemctl start kube-proxy
systemctl enable kube-proxy

Deploy the CNI network

# Extract the binary package into the default working directory
mkdir -p /opt/cni/bin
tar zxvf cni-plugins-linux-amd64-v0.8.6.tgz -C /opt/cni/bin
# Deploy the CNI network
wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
# The default image address is unreachable, so switch to a Docker Hub image
sed -i -r "s#quay.io/coreos/flannel:.*-amd64#lizhenliang/flannel:v0.12.0-amd64#g" kube-flannel.yml
kubectl apply -f kube-flannel.yml
kubectl get pods -n kube-system
kubectl get node
# Once the network plugin is deployed, the Node becomes Ready

Authorize the apiserver to access the kubelet

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

Add a new Worker Node

# On the master node, copy the files a Worker Node needs to the new node (192.168.31.72/73)
scp -r /opt/kubernetes root@192.168.31.72:/opt/
scp -r /usr/lib/systemd/system/{kubelet,kube-proxy}.service root@192.168.31.72:/usr/lib/systemd/system
scp -r /opt/cni/ root@192.168.31.72:/opt/
scp /opt/kubernetes/ssl/ca.pem root@192.168.31.72:/opt/kubernetes/ssl

# On the new node, delete the copied kubelet certificate and kubeconfig file
rm /opt/kubernetes/cfg/kubelet.kubeconfig
rm -f /opt/kubernetes/ssl/kubelet*

# Change the hostname
vi /opt/kubernetes/cfg/kubelet.conf
# set: --hostname-override=k8s-node1
vi /opt/kubernetes/cfg/kube-proxy-config.yml
# set: hostnameOverride: k8s-node1

# Start the services and enable them at boot
systemctl daemon-reload
systemctl start kubelet
systemctl enable kubelet
systemctl start kube-proxy
systemctl enable kube-proxy

# On the Master, approve the new Node's kubelet certificate request
kubectl get csr
kubectl certificate approve node-csr-4zTjsaVSrhuyhIGqsefxzVoZDCNKei-aE2jyTP81Uro
kubectl get node
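The CSR name in the approve step is different for every node, so it has to be copied from the kubectl get csr output each time. As a rough sketch, the pending names can be filtered out automatically. This assumes the CONDITION value is the last column of kubectl get csr --no-headers output, which may differ between kubectl versions. The helper name pending_csrs is hypothetical.

```shell
# Read `kubectl get csr --no-headers` output on stdin and print the names of
# requests whose last column (CONDITION) is "Pending".
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Usage on the Master: list the pending names first, review them, then approve each one.
# kubectl get csr --no-headers | pending_csrs
# kubectl certificate approve <name printed above>
```

Listing before approving is deliberate: approving a CSR grants the requesting kubelet a client certificate, so it is worth checking that each pending request really comes from the node just added.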

Rancher deployment

胡珊

https://www.yuque.com/qinghou/bobplatform/vs5wfu

https://www.jianshu.com/p/870ef7ba8723

Install Docker

One-command install; suitable for hosts that can reach the Internet.

# https://developer.aliyun.com/article/110806
curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

Configure a registry mirror, then restart Docker

sudo mkdir -p /etc/docker
sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://tcy950ho.mirror.aliyuncs.com"]
}
EOF
sudo systemctl daemon-reload
sudo systemctl restart docker
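After the restart, one way to confirm that Docker picked up the mirror is to look for it in the docker info output. The sketch below assumes docker info prints a "Registry Mirrors:" heading followed by one indented URL per line; that layout is an assumption about the human-readable output and may vary by Docker version. The helper name registry_mirrors is hypothetical.

```shell
# Read `docker info` output on stdin and print the URLs listed under
# the "Registry Mirrors:" heading (assumed layout: heading, then indented URLs).
registry_mirrors() {
    awk '
        /^ *Registry Mirrors:/ { in_list = 1; next }
        in_list && /^ *https?:\/\// { print $1; next }
        { in_list = 0 }
    '
}

# Usage:
# docker info 2>/dev/null | registry_mirrors
```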

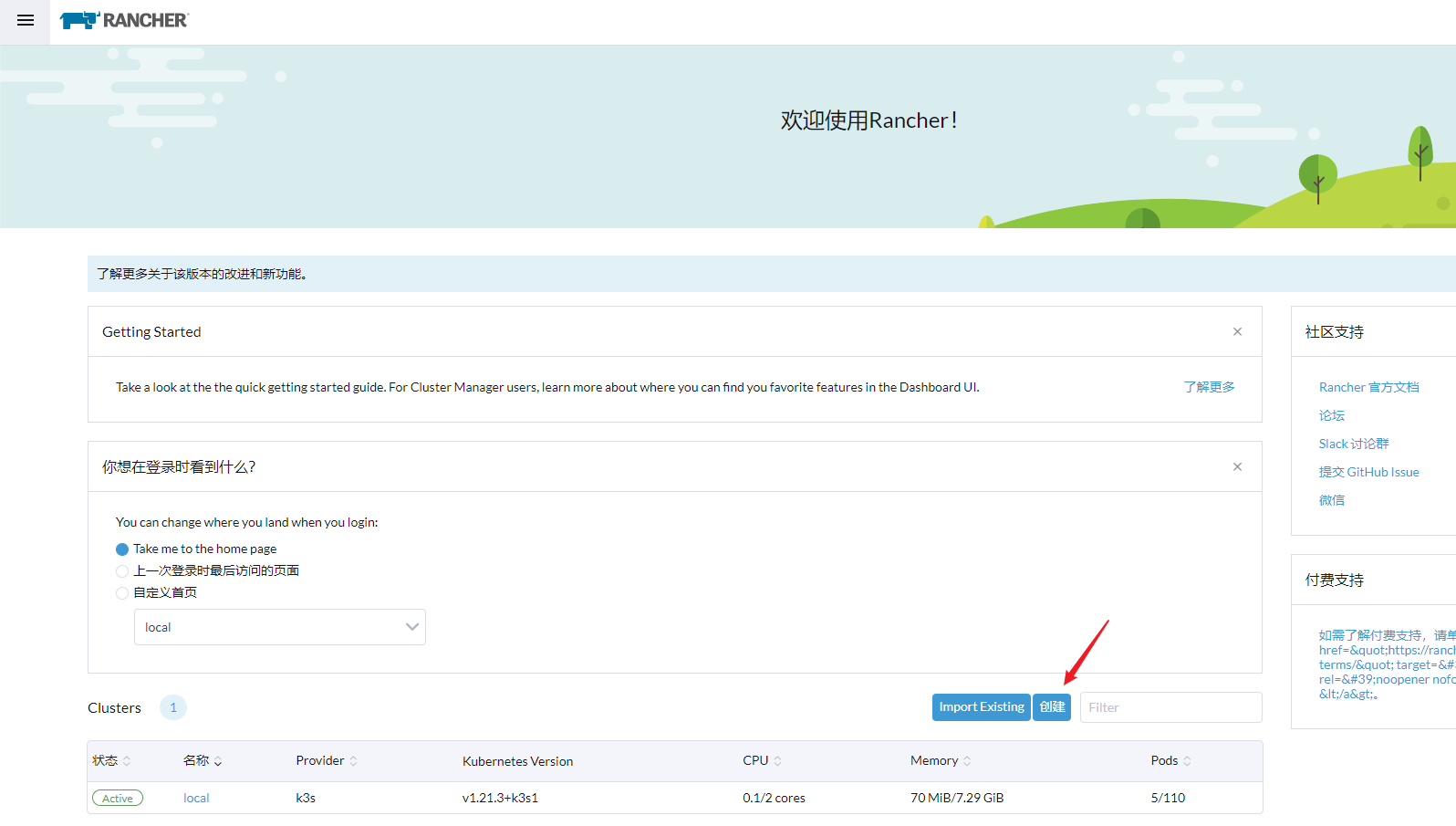

Create the cluster

Start the Rancher container

# Tencent Cloud
docker run -d --restart=unless-stopped --privileged --name rancher -p 81:80 -p 444:443 rancher/rancher
# Not reachable, even though the firewall is off and the security group allows the ports

# Alibaba Cloud
docker run -d --restart=unless-stopped --privileged --name rancher -p 82:80 -p 445:443 rancher/rancher
docker logs container-id 2>&1 | grep "Bootstrap Password:"
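The grep above prints the whole log line. To get only the password itself, the text before it can be stripped. This is a minimal sketch that assumes the log line contains the literal text "Bootstrap Password: " followed by the password, as the grep pattern suggests. The helper name bootstrap_password is hypothetical.

```shell
# Read container logs on stdin and print only the bootstrap password
# (the text after "Bootstrap Password: "), using the last match if there are several.
bootstrap_password() {
    sed -n 's/^.*Bootstrap Password: *//p' | tail -n 1
}

# Usage (the container was started with --name rancher above):
# docker logs rancher 2>&1 | bootstrap_password
```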

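Open the Rancher address in a browser (the host IP with the HTTPS port mapped above).

Change the password as prompted.

Create the cluster

Follow the prompts. The cluster is ready once its state changes to "active".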

Install kubectl

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl
chmod +x ./kubectl
mv ./kubectl /usr/local/bin/kubectl
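Since the binary is downloaded straight into /usr/local/bin, it may be worth comparing its checksum before installing it. This sketch assumes a kubectl.sha256 file is published next to the binary, as the upstream install guide describes. The helper name verify_sha256 is hypothetical, and sha256sum is assumed to be available on the host.

```shell
# Succeed only if the SHA-256 digest of file $1 equals the expected hex digest $2.
verify_sha256() {
    file="$1"
    expected="$2"
    actual=$(sha256sum "$file" | awk '{ print $1 }')
    [ "$actual" = "$expected" ]
}

# Usage (assumption: kubectl.sha256 was downloaded from the same directory as kubectl):
# verify_sha256 ./kubectl "$(cat kubectl.sha256)" && echo "checksum OK"
```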

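Running kubectl get ns fails with a connection refused error on port 8080. Fix:

In the Rancher UI, download the kubeconfig file;

Create the file /root/.kube/config and write the contents of the kubeconfig file into it.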

mkdir -p $HOME/.kube
vi $HOME/.kube/config
# file contents:
apiVersion: v1
kind: Config
clusters:
- name: "local"
  cluster:
    server: "https://47.94.156.242:445/k8s/clusters/local"
    certificate-authority-data: "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUJwekNDQ\
      VUyZ0F3SUJBZ0lCQURBS0JnZ3Foa2pPUFFRREFqQTdNUnd3R2dZRFZRUUtFeE5rZVc1aGJXbGoKY\
      kdsemRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR1Z1WlhJdFkyRXdIa\
      GNOTWpJdwpOREV4TURFek9EVXdXaGNOTXpJd05EQTRNREV6T0RVd1dqQTdNUnd3R2dZRFZRUUtFe\
      E5rZVc1aGJXbGpiR2x6CmRHVnVaWEl0YjNKbk1Sc3dHUVlEVlFRREV4SmtlVzVoYldsamJHbHpkR\
      1Z1WlhJdFkyRXdXVEFUQmdjcWhrak8KUFFJQkJnZ3Foa2pPUFFNQkJ3TkNBQVQzOEhLdGlYQ00wN\
      jJuVXRadlkyR1JQTG9WYU5EWjlrSDZFbFFYUTRwWQpnTHRTdFRHcTFaUEg3K0MvaWhTamNJNkZON\
      GlVU25ic3JyaFY3RTlxZ0VwU28wSXdRREFPQmdOVkhROEJBZjhFCkJBTUNBcVF3RHdZRFZSMFRBU\
      UgvQkFVd0F3RUIvekFkQmdOVkhRNEVGZ1FVd2lESmt2cnAzS2JWTGlxRjRNaHoKN1hkb25xQXdDZ\
      1lJS29aSXpqMEVBd0lEU0FBd1JRSWdEWHgvY3ZaQUUxSy9HU3AxNExhYmk2akR4ZHlsb0w4QQpiO\
      DIwYTAwOUhhZ0NJUURVWVVIbndIVC9IT0Q3ZFVLVm9Jc0g5aUlWK3NIZzgrL2NjTkw4QWR3Y2FnP\
      T0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQ=="

users:
- name: "local"
  user:
    token: "kubeconfig-user-vndmjcbbvq:4c5d2ktkngzlpjwtm6gk64qrq76sqpzw2sr8894lsb25llfbrh2kqf"

contexts:
- name: "local"
  context:
    user: "local"
    cluster: "local"

current-context: "local"
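The refused connection on port 8080 typically means that kubectl found no kubeconfig and fell back to its local default address. The sketch below reports which kubeconfig paths kubectl would consult, assuming the usual lookup order: the colon-separated KUBECONFIG variable if set, otherwise $HOME/.kube/config. Both helper names are hypothetical.

```shell
# Print the kubeconfig paths kubectl is expected to consult, one per line.
kubeconfig_candidates() {
    if [ -n "${KUBECONFIG:-}" ]; then
        printf '%s\n' "$KUBECONFIG" | tr ':' '\n'
    else
        printf '%s\n' "$HOME/.kube/config"
    fi
}

# Report for each candidate path whether the file exists.
report_kubeconfig() {
    kubeconfig_candidates | while IFS= read -r path; do
        if [ -f "$path" ]; then
            echo "found: $path"
        else
            echo "missing: $path"
        fi
    done
}

# Usage:
# report_kubeconfig
```

If every path is reported missing, creating $HOME/.kube/config as described above should resolve the error. Because the file holds an access token, restricting it with chmod 600 is a sensible extra step.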

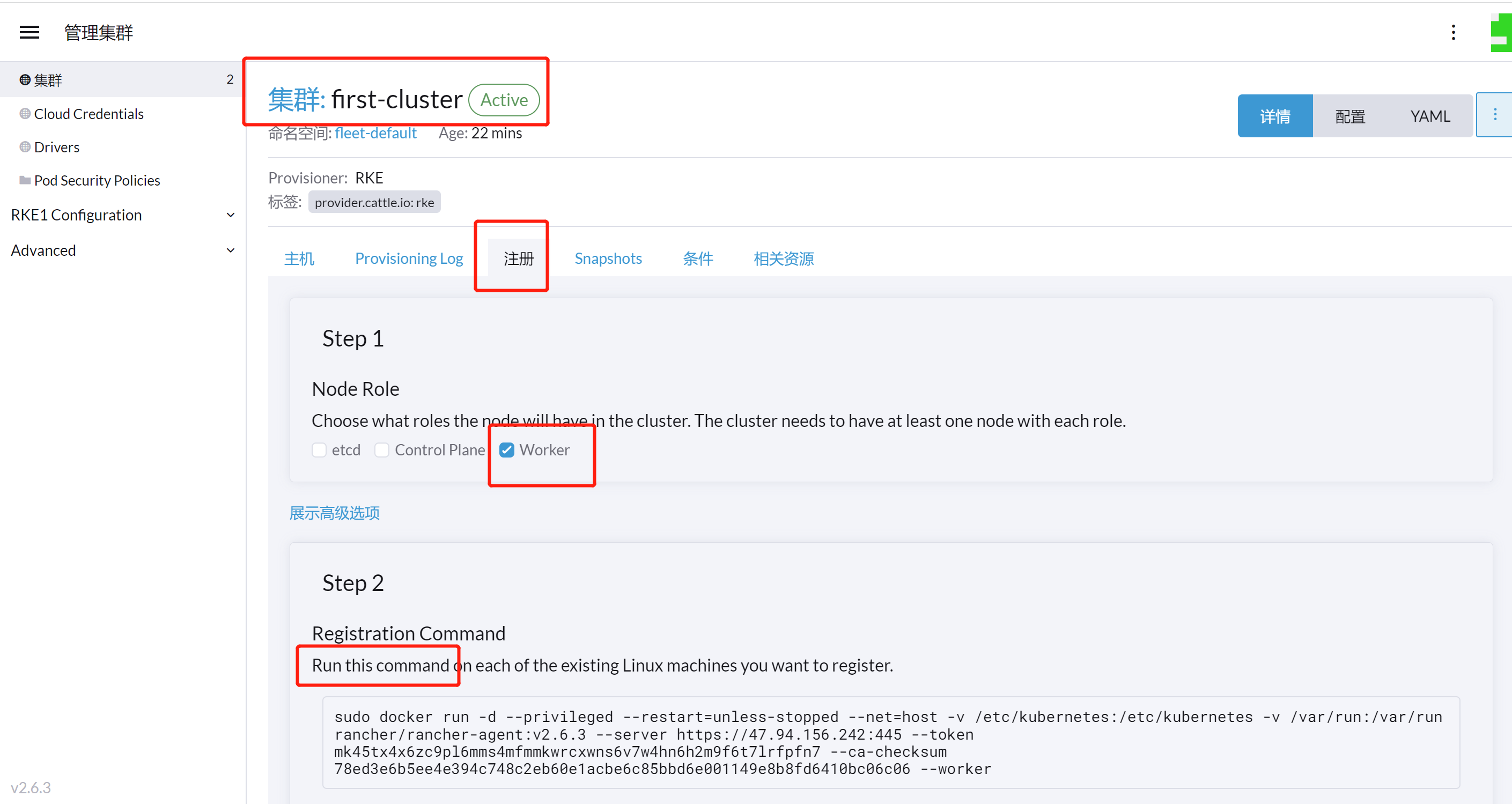

Add a Worker node

Click the cluster name, click Register, select the Worker node role, and run the generated command on the Worker node.

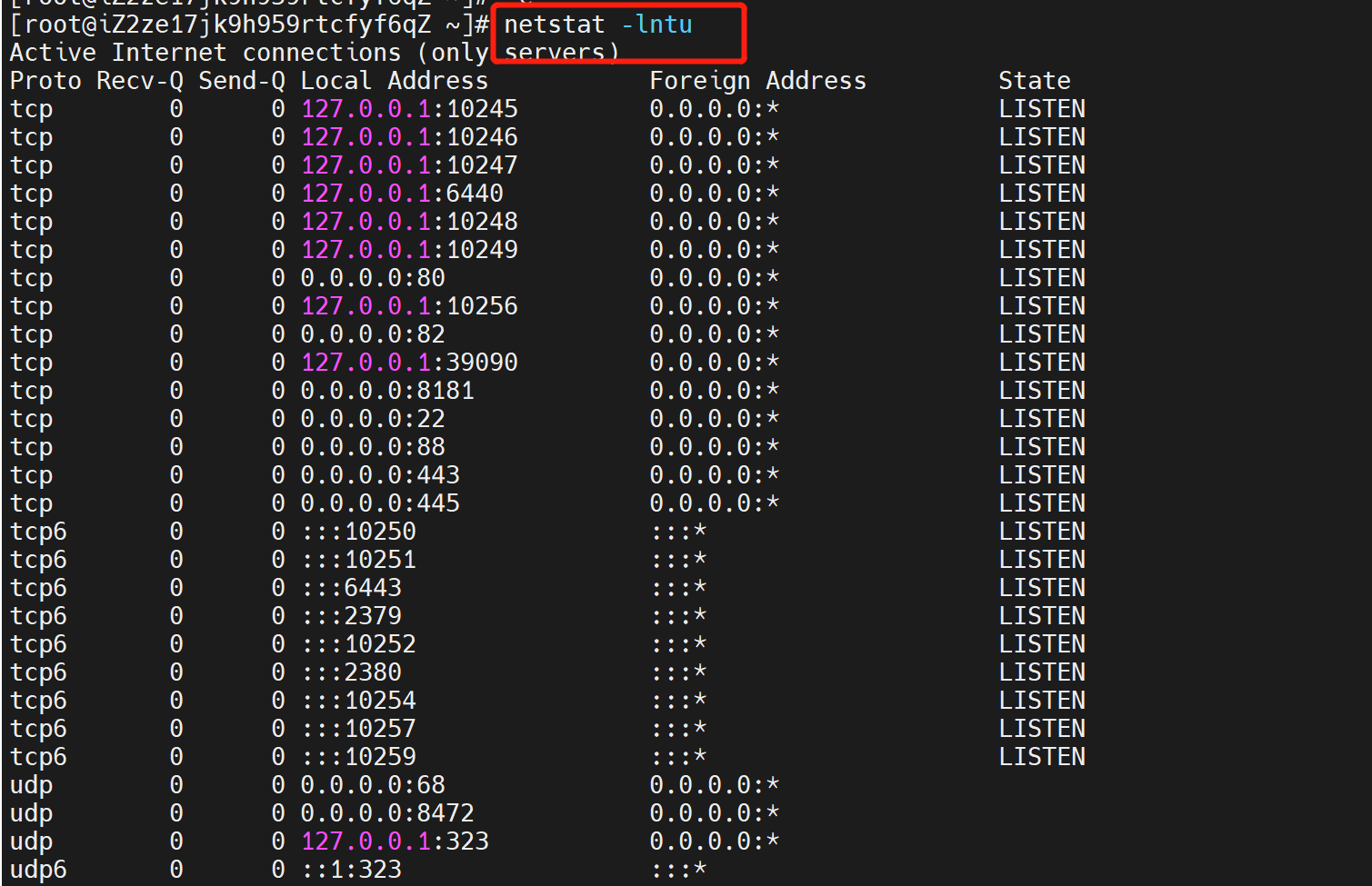

Problems

1. The NodePort is not reachable: http://47.94.156.242:31080/

Running curl on the cloud server itself gives:

[root@iZ2ze17jk9h959rtcfyf6qZ ~]# curl localhost:31080
curl: (7) Failed to connect to ::1: No route to host

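Possible cause: the firewall? Does the port need to be opened?

However, the Alibaba Cloud security group rules already allow all external hosts on all ports.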

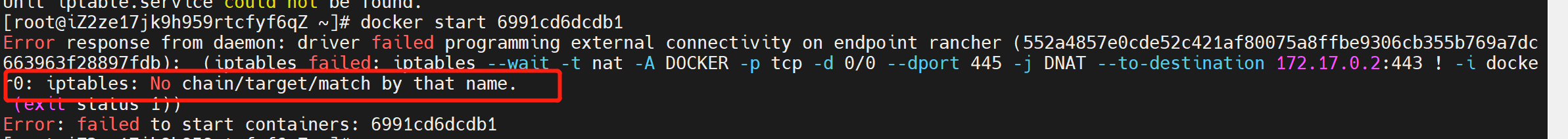

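2. docker0: iptables: No chain/target/match by that name.

The Rancher page became unreachable; docker ps -a showed that the rancher container had stopped with exit(1).

Running **docker start <container_id>** fails with the error above.

Cause: after firewalld was started, iptables was activated without the Docker chains; Docker has to be restarted so that it adds them to iptables again.

Run systemctl restart docker

Then run docker start <container_id> again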
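To see whether the Docker chain really is missing before restarting anything, the nat table can be inspected. This is a small sketch, assuming that iptables -t nat -S lists each user-defined chain as a line of the form "-N NAME". The helper name has_docker_chain is hypothetical.

```shell
# Read `iptables -t nat -S` output on stdin; succeed if a DOCKER chain is defined.
has_docker_chain() {
    grep -q -x -e '-N DOCKER'
}

# Usage (as root): restart Docker only when the chain is missing.
# iptables -t nat -S | has_docker_chain || systemctl restart docker
```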

3. When adding a worker in Rancher, the node stays in the registering state.

The rancher-agent container log on the worker node shows:

WARNING: bridge-nf-call-ip6tables is disabled

Fix:

vi /etc/sysctl.conf
# add the following two lines:
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
# apply the settings
sysctl -p
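Editing the file by hand works, but repeating it on several workers is easy to get wrong. The sketch below shows one way to make the edit idempotent, so running it twice does not duplicate a line. The function name ensure_sysctl and its argument order are hypothetical.

```shell
# Ensure that file $1 contains exactly one active line "key = value" for key $2.
# Any existing assignment of that key is replaced; commented-out lines are left alone.
ensure_sysctl() {
    file="$1"
    key="$2"
    value="$3"
    # Escape the dots in the key so they match literally in the regular expression
    esc=$(printf '%s' "$key" | sed 's/\./\\./g')
    touch "$file"
    grep -v -E "^[[:space:]]*${esc}[[:space:]]*=" "$file" > "${file}.tmp" || true
    printf '%s = %s\n' "$key" "$value" >> "${file}.tmp"
    # Write back in place so the original file keeps its owner and permissions
    cat "${file}.tmp" > "$file"
    rm -f "${file}.tmp"
}

# Usage (as root):
# ensure_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-ip6tables 1
# ensure_sysctl /etc/sysctl.conf net.bridge.bridge-nf-call-iptables 1
# sysctl -p
```

If sysctl -p reports that these keys do not exist, the br_netfilter kernel module is probably not loaded; modprobe br_netfilter loads it.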

Container runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized

Fix:

sudo mkdir -p /etc/cni/net.d
# sudo does not apply to a shell redirection, so write the file through sudo tee
sudo tee /etc/cni/net.d/10-flannel.conflist > /dev/null <<EOF
{
  "name": "cbr0",
  "plugins": [
    {
      "type": "flannel",
      "delegate": {
        "hairpinMode": true,
        "isDefaultGateway": true
      }
    },
    {
      "type": "portmap",
      "capabilities": {
        "portMappings": true
      }
    }
  ]
}
EOF

Unable to register node “vm-16-3-centos” with API server: Post https://127.0.0.1:6443/api/v1/nodes: read tcp 127.0.0.1:57898->127.0.0.1:6443: read: connection reset by peer

Offline deployment

Offline deployment of a Kubernetes cluster: 离线安装Kubernetes v1.17.1 - 离线部署 - 简书 (jianshu.com)