Environment: Visual Studio 2015

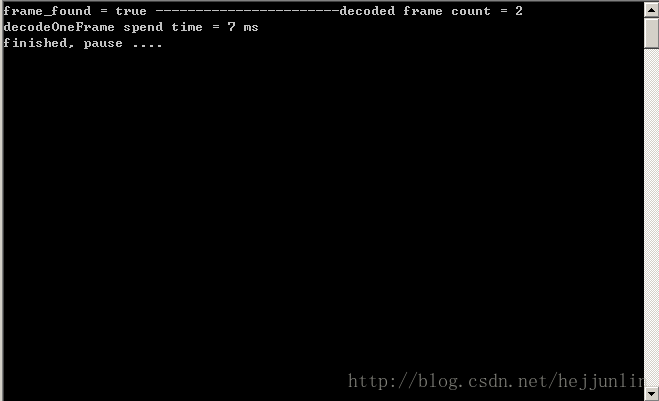

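Run the program. It decodes a key frame from the input video, scales it to 640×480 and writes it to `D:\thumb.rgb` as raw RGB565 image data: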

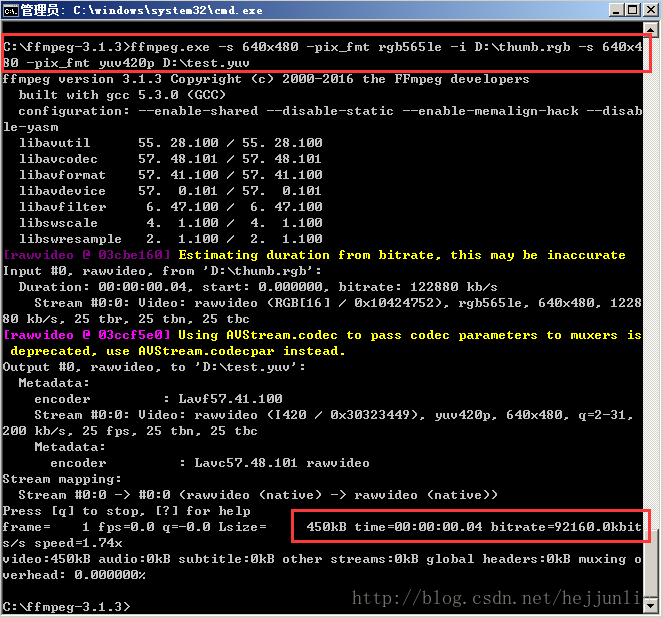

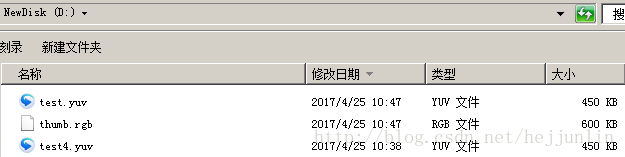
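The thumbnail file has no header. It is raw `AV_PIX_FMT_RGB565` data, 2 bytes per pixel, so one 640×480 frame is 614,400 bytes. Each 16-bit value holds 5 bits of red, 6 of green and 5 of blue, and FFmpeg's `AV_PIX_FMT_RGB565` uses native byte order, which is little-endian on an x86 Windows PC. As a minimal sketch, assuming little-endian byte order and rows without padding, one pixel of such a file can be expanded to 8-bit RGB like this (`Rgb888`, `unpackRgb565` and `pixelAt` are illustrative names, not FFmpeg APIs):

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// One pixel with 8 bits per channel.
struct Rgb888
{
    uint8_t r, g, b;
};

// Expand a 16-bit RGB565 value: bits 15..11 = R, 10..5 = G, 4..0 = B.
// The top bits are replicated into the low bits so full intensity maps to 255.
Rgb888 unpackRgb565(uint16_t p)
{
    const uint8_t r5 = (p >> 11) & 0x1F;
    const uint8_t g6 = (p >> 5) & 0x3F;
    const uint8_t b5 = p & 0x1F;
    Rgb888 c;
    c.r = static_cast<uint8_t>((r5 << 3) | (r5 >> 2));
    c.g = static_cast<uint8_t>((g6 << 2) | (g6 >> 4));
    c.b = static_cast<uint8_t>((b5 << 3) | (b5 >> 2));
    return c;
}

// Read pixel (x, y) from a tightly packed little-endian RGB565 buffer.
// Assumption: every row is exactly width * 2 bytes, as in thumb.rgb.
Rgb888 pixelAt(const uint8_t* data, int width, int height, int x, int y)
{
    assert(data != nullptr && x >= 0 && x < width && y >= 0 && y < height);
    const size_t offset = (static_cast<size_t>(y) * width + x) * 2;
    const uint16_t p = static_cast<uint16_t>(data[offset] | (data[offset + 1] << 8));
    return unpackRgb565(p);
}
```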

Then convert the RGB image data to YUV format with the following ffmpeg command:

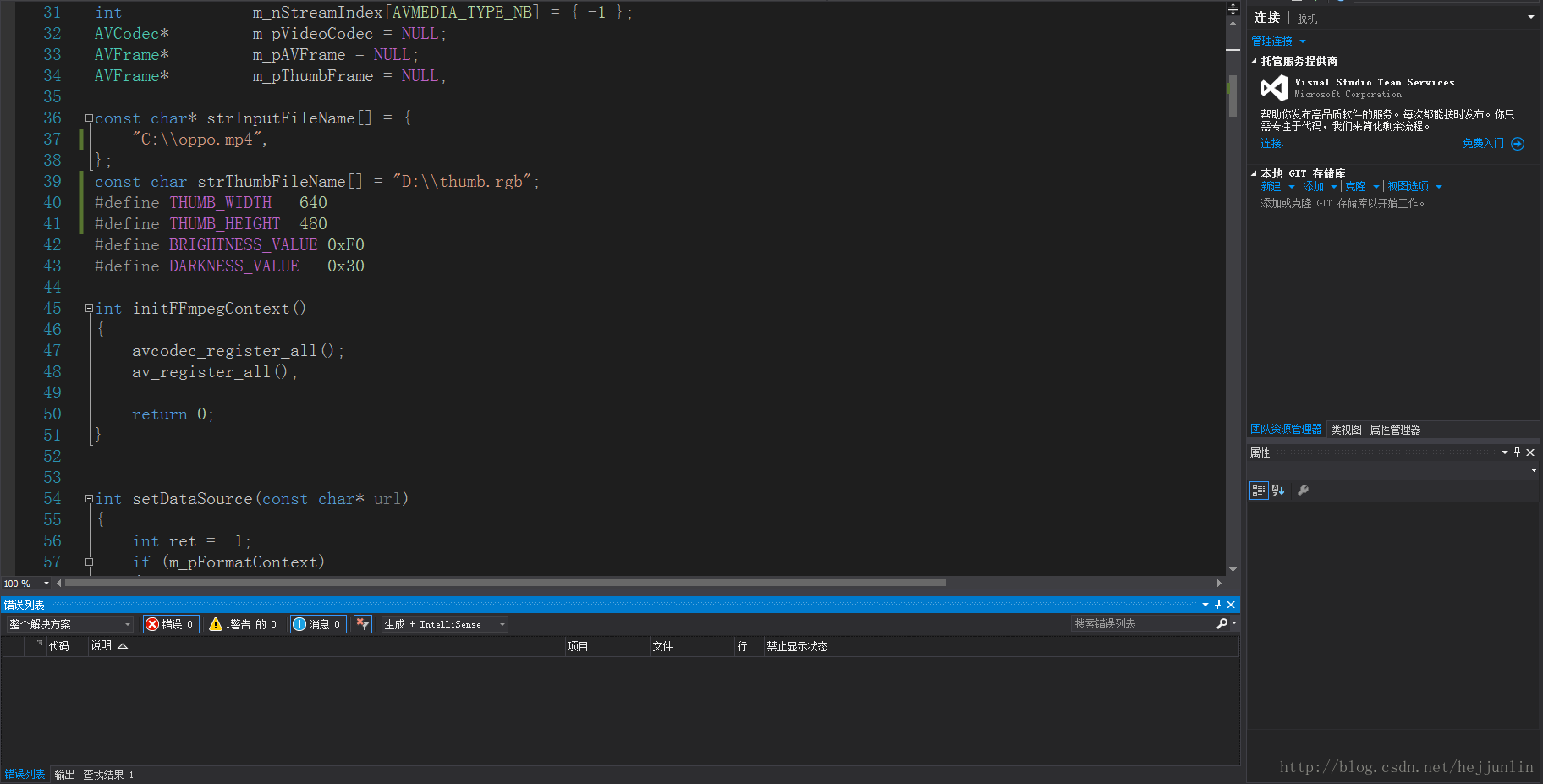
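The ffmpeg command performs this color conversion through libswscale. To show roughly what happens per pixel, here is a minimal sketch that converts 8-bit RGB to planar YUV 4:2:0 (I420, ffmpeg's `yuv420p`). It assumes the widely used BT.601 limited-range integer approximation and plain 2×2 averaging for chroma; swscale's exact coefficients, rounding and chroma siting may differ, so the result is not expected to be byte-identical to ffmpeg's. RGB565 input would first have to be expanded to 8 bits per channel. `rgbToI420` is a hypothetical helper name.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Clamp an int to the 0..255 byte range.
static uint8_t clampByte(int v)
{
    return static_cast<uint8_t>(v < 0 ? 0 : (v > 255 ? 255 : v));
}

// Convert packed RGB888 (3 bytes per pixel, rows without padding) to I420:
// a width*height Y plane followed by (width/2)*(height/2) U and V planes.
// Assumption: BT.601 limited-range integer coefficients; width and height even.
std::vector<uint8_t> rgbToI420(const uint8_t* rgb, int width, int height)
{
    assert(rgb != nullptr && width > 0 && height > 0);
    assert(width % 2 == 0 && height % 2 == 0);
    const size_t ySize = static_cast<size_t>(width) * height;
    std::vector<uint8_t> out(ySize + ySize / 2);
    uint8_t* yPlane = out.data();
    uint8_t* uPlane = yPlane + ySize;
    uint8_t* vPlane = uPlane + ySize / 4;

    // Luma for every pixel: Y = ((66R + 129G + 25B + 128) >> 8) + 16.
    for (size_t i = 0; i < ySize; ++i)
    {
        const int r = rgb[i * 3], g = rgb[i * 3 + 1], b = rgb[i * 3 + 2];
        yPlane[i] = clampByte(((66 * r + 129 * g + 25 * b + 128) >> 8) + 16);
    }

    // Chroma once per 2x2 block, computed from the block's average color.
    // 32896 = 128 (rounding) + 128 * 256 (the +128 offset applied before the
    // shift), which keeps the sum non-negative so the right shift is well defined.
    for (int y = 0; y < height; y += 2)
    {
        for (int x = 0; x < width; x += 2)
        {
            int r = 0, g = 0, b = 0;
            for (int dy = 0; dy < 2; ++dy)
            {
                for (int dx = 0; dx < 2; ++dx)
                {
                    const uint8_t* p = rgb + (static_cast<size_t>(y + dy) * width + (x + dx)) * 3;
                    r += p[0];
                    g += p[1];
                    b += p[2];
                }
            }
            r = (r + 2) / 4;
            g = (g + 2) / 4;
            b = (b + 2) / 4;
            const size_t c = static_cast<size_t>(y / 2) * (width / 2) + x / 2;
            uPlane[c] = clampByte((-38 * r - 74 * g + 112 * b + 32896) >> 8);
            vPlane[c] = clampByte((112 * r - 94 * g - 18 * b + 32896) >> 8);
        }
    }
    return out;
}
```

With this layout the Y plane of a 640×480 frame takes 307,200 bytes and each chroma plane 76,800 bytes, 460,800 bytes in total, i.e. 1.5 bytes per pixel instead of the 2 bytes of RGB565.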

Full code:

```cpp
#include "stdafx.h"
#include <cstdio>
#include <cstdint>

extern "C"
{
#include "libavformat/avformat.h"
#include "libswscale/swscale.h"
}

#define DEBUG_SPEND_TIME 1
#ifdef DEBUG_SPEND_TIME
#ifdef _WIN32
#include "windows.h"
#include "mmsystem.h"
#pragma comment(lib, "winmm.lib")
#else
#include <sys/time.h>
#endif
#endif

AVFormatContext* m_pFormatContext = NULL;
AVCodecContext* m_pCodecContext = NULL;
int m_nStreamIndex[AVMEDIA_TYPE_NB];   // reset to -1 in setDataSource()
AVCodec* m_pVideoCodec = NULL;
AVFrame* m_pThumbFrame = NULL;

const char* strInputFileName[] = {
    "C:\\oppo.mp4",
};
const char strThumbFileName[] = "D:\\thumb.rgb";

#define THUMB_WIDTH 640
#define THUMB_HEIGHT 480
#define BRIGHTNESS_VALUE 0xF0   // average luma must stay below this
#define DARKNESS_VALUE 0x30     // average luma must stay above this

int initFFmpegContext()
{
    // Required by the FFmpeg 3.x API used here; deprecated since FFmpeg 4.0.
    avcodec_register_all();
    av_register_all();
    return 0;
}

int setDataSource(const char* url)
{
    int ret = -1;
    // Release the previously opened input, if any.
    if (m_pFormatContext)
    {
        avformat_close_input(&m_pFormatContext);
    }
    for (int i = 0; i < AVMEDIA_TYPE_NB; i++)
    {
        m_nStreamIndex[i] = -1;
    }
    m_pFormatContext = avformat_alloc_context();
    if (!m_pFormatContext)
    {
        return -1;
    }
    // On failure avformat_open_input() frees the context and sets it to NULL.
    ret = avformat_open_input(&m_pFormatContext, url, NULL, NULL);
    if (ret != 0)
    {
        return ret;
    }
    ret = avformat_find_stream_info(m_pFormatContext, NULL);
    if (ret < 0)
    {
        avformat_close_input(&m_pFormatContext);
        return ret;
    }
    m_nStreamIndex[AVMEDIA_TYPE_VIDEO] = av_find_best_stream(m_pFormatContext, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0);
    m_nStreamIndex[AVMEDIA_TYPE_AUDIO] = av_find_best_stream(m_pFormatContext, AVMEDIA_TYPE_AUDIO, -1, -1, NULL, 0);
    // A thumbnail needs a video stream.
    return m_nStreamIndex[AVMEDIA_TYPE_VIDEO] < 0 ? m_nStreamIndex[AVMEDIA_TYPE_VIDEO] : 0;
}

int openDecoder()
{
    int videoIndex = m_nStreamIndex[AVMEDIA_TYPE_VIDEO];
    if (!m_pFormatContext || videoIndex < 0)
    {
        return -1;
    }
    // AVStream::codec belongs to the FFmpeg 3.x API (later replaced by codecpar).
    m_pCodecContext = m_pFormatContext->streams[videoIndex]->codec;
    m_pVideoCodec = avcodec_find_decoder(m_pCodecContext->codec_id);
    if (!m_pVideoCodec)
    {
        return AVERROR_DECODER_NOT_FOUND;
    }
    int ret = avcodec_open2(m_pCodecContext, m_pVideoCodec, NULL);
    if (ret != 0)
    {
        return ret;
    }
    avcodec_flush_buffers(m_pCodecContext);
    return 0;
}

void closeDecoder()
{
    if (m_pCodecContext)
    {
        avcodec_close(m_pCodecContext);
        m_pCodecContext = NULL;
    }
    m_pVideoCodec = NULL;
}

// Decode key frames until one is neither flat, too dark nor too bright.
int decodeOneFrame(AVFrame* pFrame)
{
    int ret = 0;
    bool frame_found = false;
    int decoded_frame_count = 0;
    AVPacket pkt;
    av_init_packet(&pkt);
    pkt.data = NULL;
    pkt.size = 0;
    do
    {
        int got_frame = 0;
        ret = av_read_frame(m_pFormatContext, &pkt);
        if (ret < 0)
        {
            break;   // end of file or read error
        }
        // Only key frames of the video stream are decoded (flags is a bit mask).
        if (pkt.stream_index != m_nStreamIndex[AVMEDIA_TYPE_VIDEO] || !(pkt.flags & AV_PKT_FLAG_KEY))
        {
            av_free_packet(&pkt);
            continue;
        }
        ret = avcodec_decode_video2(m_pCodecContext, pFrame, &got_frame, &pkt);
        av_free_packet(&pkt);   // the decoded frame does not reference the packet
        if (ret < 0 || !got_frame)
        {
            continue;   // ret < 0 also ends the loop via the while condition
        }
        decoded_frame_count++;
        // The picture size changed: adopt it and try the next key frame.
        if (pFrame->width != m_pCodecContext->width || pFrame->height != m_pCodecContext->height)
        {
            m_pCodecContext->width = pFrame->width;
            m_pCodecContext->height = pFrame->height;
            continue;
        }
        // Skip black, white and flat pictures. Assumes a planar YUV format
        // (e.g. yuv420p), so data[0] is the Y plane; rows are linesize[0] bytes apart.
        uint32_t y_value = 0;   // sum of the sampled luma, later its average
        uint32_t y_half = 0;    // average luma of roughly the first sixth of samples
        uint32_t y_count = 0;
        int pixel_count = pFrame->width * pFrame->height;
        bool bHalf = false;
        for (int i = 0; i < pixel_count; i += 3)
        {
            int row = i / pFrame->width;
            int col = i % pFrame->width;
            y_value += pFrame->data[0][row * pFrame->linesize[0] + col];
            y_count++;
            if (!bHalf && i > pixel_count / 6)
            {
                y_half = y_value / y_count;
                bHalf = true;
            }
        }
        if (y_count == 0)
        {
            continue;
        }
        y_value /= y_count;
        if (y_half == y_value)
        {
            printf("decoded frame count = %d y_half=%u == y_value=%u, skip this frame!\n", decoded_frame_count, y_half, y_value);
            continue;
        }
        if (y_value < BRIGHTNESS_VALUE && y_value > DARKNESS_VALUE)
        {
            frame_found = true;
            printf("frame_found = true -----------------------decoded frame count = %d\n", decoded_frame_count);
        }
#ifdef SAVE_YUV_FRAME
        // Debug dump, assuming pFrame->format == AV_PIX_FMT_YUV420P.
        char szName[128];
        sprintf(szName, "D:\\test_%d.yuv", decoded_frame_count);
        FILE* pYuvFile = fopen(szName, "ab");
        if (pYuvFile)
        {
            for (int y = 0; y < pFrame->height; y++)
                fwrite(pFrame->data[0] + y * pFrame->linesize[0], 1, pFrame->width, pYuvFile);
            for (int p = 1; p <= 2; p++)
                for (int y = 0; y < pFrame->height / 2; y++)
                    fwrite(pFrame->data[p] + y * pFrame->linesize[p], 1, pFrame->width / 2, pYuvFile);
            fclose(pYuvFile);
        }
#endif
    } while (!frame_found && ret >= 0);
    av_free_packet(&pkt);   // no-op if the packet was already released
    return ret;
}

// Scale pInputFrame to desW x desH RGB565 into pOutputFrame.
int getThumbnail(AVFrame* pInputFrame, AVFrame* pOutputFrame, int desW, int desH)
{
    if (pInputFrame == NULL || pOutputFrame == NULL)
    {
        return -1;
    }
    SwsContext* pSwsContext = sws_getCachedContext(NULL, pInputFrame->width, pInputFrame->height,
        (AVPixelFormat)pInputFrame->format, desW, desH, AV_PIX_FMT_RGB565, SWS_BICUBIC, NULL, NULL, NULL);
    if (pSwsContext == NULL)
    {
        return -1;
    }
    // AV_PIX_FMT_RGB565 is native-endian: little-endian (rgb565le) on x86.
    pOutputFrame->width = desW;
    pOutputFrame->height = desH;
    pOutputFrame->format = AV_PIX_FMT_RGB565;
    int ret = av_frame_get_buffer(pOutputFrame, 16);
    if (ret < 0)
    {
        sws_freeContext(pSwsContext);
        return ret;
    }
    sws_scale(pSwsContext, pInputFrame->data, pInputFrame->linesize, 0, pInputFrame->height,
        pOutputFrame->data, pOutputFrame->linesize);
    sws_freeContext(pSwsContext);
    return 0;
}

int getFrameAt(int64_t timeUs, int width, int height)
{
    int ret = AVERROR(ENOMEM);
    // stream_index -1: timestamps are in AV_TIME_BASE (microsecond) units.
    // Any target window is accepted; a failed seek is not fatal.
    avformat_seek_file(m_pFormatContext, -1, INT64_MIN, timeUs, INT64_MAX, 0);
    AVFrame* pFrame = av_frame_alloc();
    m_pThumbFrame = av_frame_alloc();
    if (pFrame && m_pThumbFrame)
    {
        ret = openDecoder();
    }
    if (ret == 0)
    {
#ifdef DEBUG_SPEND_TIME
#ifdef _WIN32
        DWORD start_time = timeGetTime();
#else
        struct timeval start, end;
        gettimeofday(&start, NULL);
#endif
#endif
        ret = decodeOneFrame(pFrame);
#ifdef DEBUG_SPEND_TIME
#ifdef _WIN32
        DWORD end_time = timeGetTime();
        printf("decodeOneFrame spend time = %lu ms\n", (unsigned long)(end_time - start_time));
#else
        gettimeofday(&end, NULL);
        int spend_time = (end.tv_sec - start.tv_sec) * 1000 + (end.tv_usec - start.tv_usec) / 1000;
        printf("spend_time = %d ms\n", spend_time);
#endif
#endif
    }
    if (ret >= 0)
    {
        ret = getThumbnail(pFrame, m_pThumbFrame, width, height);
    }
    if (ret >= 0)
    {
        // Save the RGB565 data row by row: linesize may include padding.
        // "ab" appends, so thumbnails of several input files end up in one file.
        FILE* pFile = fopen(strThumbFileName, "ab");
        if (pFile)
        {
            for (int y = 0; y < m_pThumbFrame->height; y++)
                fwrite(m_pThumbFrame->data[0] + y * m_pThumbFrame->linesize[0], 1, m_pThumbFrame->width * 2, pFile);
            fclose(pFile);
        }
    }
    // Common cleanup for success and every failure path.
    av_frame_free(&pFrame);
    av_frame_free(&m_pThumbFrame);
    closeDecoder();
    return ret;
}

int _tmain(int argc, _TCHAR* argv[])
{
    initFFmpegContext();
    int file_count = sizeof(strInputFileName) / sizeof(strInputFileName[0]);
    for (int i = 0; i < file_count; i++)
    {
        const char* pFileName = strInputFileName[i];
        int ret = setDataSource(pFileName);
        if (ret < 0)
        {
            printf("cannot open %s (error %d)\n", pFileName, ret);
            continue;
        }
        // timeUs = -1: seek to (about) the start of the file.
        getFrameAt(-1, THUMB_WIDTH, THUMB_HEIGHT);
    }
    avformat_close_input(&m_pFormatContext);
    // pause
    printf("finished, pause ....\n");
    getchar();
    return 0;
}
```
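The frame-selection logic in `decodeOneFrame` deserves a closer look. Only key frames are decoded. For each one the program samples every third pixel of the Y (luma) plane, rejects the frame if the average of roughly the first sixth of the samples equals the overall average (a crude test for flat, single-color pictures), and otherwise accepts it only when the overall average lies strictly between `DARKNESS_VALUE` (0x30) and `BRIGHTNESS_VALUE` (0xF0), so near-black and near-white frames are skipped. Pulled out into a standalone function, the heuristic might look like the sketch below. It assumes an 8-bit luma plane (as in yuv420p) whose rows are `linesize` bytes apart, which may be more than the width; `isUsableThumbnailFrame` is an illustrative name, not part of the original program.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Thresholds taken from the program above.
const uint32_t kBrightnessValue = 0xF0;
const uint32_t kDarknessValue = 0x30;

// Returns true if the luma plane looks usable as a thumbnail: the average of
// roughly the first sixth of the samples differs from the overall average, and
// the overall average lies strictly between the dark and bright thresholds.
// `linesize` is the byte stride of one row (>= width), like AVFrame::linesize[0].
bool isUsableThumbnailFrame(const uint8_t* luma, int width, int height, int linesize)
{
    assert(luma != nullptr && width > 0 && height > 0 && linesize >= width);
    const int pixelCount = width * height;
    uint32_t sum = 0, count = 0, firstPartAvg = 0;
    bool haveFirstPart = false;
    for (int i = 0; i < pixelCount; i += 3)   // sample every 3rd pixel
    {
        const int row = i / width;
        const int col = i % width;
        sum += luma[static_cast<size_t>(row) * linesize + col];
        ++count;
        if (!haveFirstPart && i > pixelCount / 6)
        {
            firstPartAvg = sum / count;
            haveFirstPart = true;
        }
    }
    const uint32_t avg = sum / count;
    if (haveFirstPart && firstPartAvg == avg)
    {
        return false;   // looks flat, e.g. a solid-color frame
    }
    return avg > kDarknessValue && avg < kBrightnessValue;
}
```

Sampling every third pixel keeps the cost low: a 1920×1080 frame needs 691,200 reads, and a 32-bit sum cannot overflow at that size.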