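Vertical table splitting: split a wide table (one with many columns) into several tables according to how frequently each column is accessed. In other words, the split follows the table's structure.

Vertical database splitting: group tables by business domain and move each group into its own database. These databases can be deployed on different servers to spread the access load.

Horizontal database splitting: distribute the rows of one table across several databases. The table structure is identical in every database.

Horizontal table splitting: distribute the rows of one table across several tables in the same database, so that each table holds only part of the data.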
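To make the two split directions concrete, here is a minimal in-memory sketch. It is only an illustration: the class `SplitDirections`, the column names `id`, `name` and `description`, and the modulo rule are all assumptions made for this example and are not part of any library. A vertical split keeps all rows but divides the columns; a horizontal split keeps all columns but divides the rows.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Toy illustration of vertical vs. horizontal splitting (hypothetical names throughout). */
class SplitDirections {

    /** Vertical split: copy the key column plus the chosen columns of one row. */
    static Map<String, Object> pickColumns(Map<String, Object> row, String keyColumn, List<String> columns) {
        Map<String, Object> part = new LinkedHashMap<>();
        part.put(keyColumn, row.get(keyColumn)); // every part keeps the key so the parts can be joined again
        for (String column : columns) {
            part.put(column, row.get(column));
        }
        return part;
    }

    /** Horizontal split: distribute whole rows over `shards` buckets by key modulo. */
    static List<List<Map<String, Object>>> splitRows(List<Map<String, Object>> rows, String keyColumn, int shards) {
        List<List<Map<String, Object>>> buckets = new ArrayList<>();
        for (int i = 0; i < shards; i++) {
            buckets.add(new ArrayList<>());
        }
        for (Map<String, Object> row : rows) {
            long key = ((Number) row.get(keyColumn)).longValue();
            int bucket = (int) Math.floorMod(key, (long) shards);
            buckets.get(bucket).add(row);
        }
        return buckets;
    }

    public static void main(String[] args) {
        List<Map<String, Object>> rows = new ArrayList<>();
        for (long id = 1; id <= 4; id++) {
            Map<String, Object> row = new LinkedHashMap<>();
            row.put("id", id);
            row.put("name", "item-" + id);
            row.put("description", "long text " + id);
            rows.add(row);
        }
        // Vertical: frequently read columns vs. rarely read columns of the first row.
        System.out.println(pickColumns(rows.get(0), "id", Arrays.asList("name")));
        System.out.println(pickColumns(rows.get(0), "id", Arrays.asList("description")));
        // Horizontal: same columns everywhere, rows spread over two buckets.
        System.out.println(splitRows(rows, "id", 2));
    }
}
```

Whether the buckets are tables in one database or tables in different databases is what separates horizontal table splitting from horizontal database splitting; the row-distribution idea is the same.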

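Next, A Fen will walk through a hands-on implementation of database and table sharding with Spring Boot and MySQL. We start with Sharding-JDBC because it ships as a jar and is comparatively simple to set up.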

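Setting up a Sharding-JDBC environment for database and table sharding

We begin our use of Sharding-JDBC with table sharding.

Table sharding with Sharding-JDBC

Step 1: create the database and two tables with identical structure

First, create a database on MySQL and name it order. (order is a reserved word in MySQL, so quote it with backticks in the CREATE DATABASE statement.)

Then create two tables, order_1 and order_2.

These two tables are the shards of the logical order table. We insert rows into the order table through Sharding-JDBC, which applies the sharding rule: rows whose primary key is even go to order_1, and rows whose primary key is odd go to order_2. We then query the data back through Sharding-JDBC.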
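The rule "even key goes to order_1, odd key goes to order_2" boils down to one modulo operation. The following is a minimal sketch of that arithmetic only; the class and method names (`OrderTableRouter`, `tableFor`) are made up for this example and do not belong to Sharding-JDBC, which applies the rule internally from its configuration.

```java
/** Sketch of the rule "even key -> order_1, odd key -> order_2" (hypothetical helper). */
class OrderTableRouter {

    /** Number of physical tables; 2 matches order_1 and order_2 from step 1. */
    static final long TABLE_COUNT = 2;

    /** Returns the physical table name for a given order_id. */
    static String tableFor(long orderId) {
        // floorMod equals % for non-negative keys and keeps the suffix in 1..TABLE_COUNT for negative ones.
        long suffix = Math.floorMod(orderId, TABLE_COUNT) + 1;
        return "order_" + suffix;
    }

    public static void main(String[] args) {
        for (long id = 10; id < 14; id++) {
            System.out.println(id + " -> " + tableFor(id));
        }
    }
}
```

Adding 1 to the remainder is what maps the remainders 0 and 1 onto the table suffixes 1 and 2.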

    DROP TABLE IF EXISTS order_1;
    CREATE TABLE order_1 (
        order_id     BIGINT(20) PRIMARY KEY AUTO_INCREMENT,
        user_id      INT(11),
        product_name VARCHAR(128),
        COUNT        INT(11)
    );

    DROP TABLE IF EXISTS order_2;
    CREATE TABLE order_2 (
        order_id     BIGINT(20) PRIMARY KEY AUTO_INCREMENT,
        user_id      INT(11),
        product_name VARCHAR(128),
        COUNT        INT(11)
    );

第二步

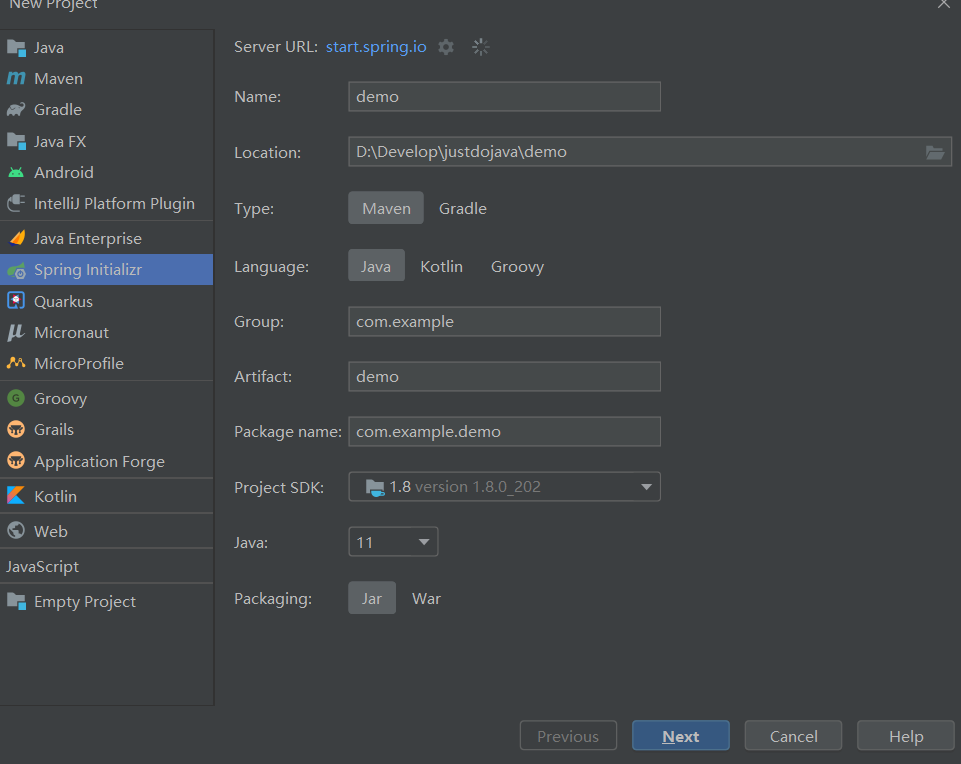

创建一个SpringBoot的项目,然后配置Sharding的依赖

依赖如下:

    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
    </dependency>
    <dependency>
        <groupId>org.mybatis.spring.boot</groupId>
        <artifactId>mybatis-spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid-spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.apache.shardingsphere</groupId>
        <artifactId>sharding-jdbc-spring-boot-starter</artifactId>
    </dependency>
    <dependency>
        <groupId>org.mybatis</groupId>
        <artifactId>mybatis-typehandlers-jsr310</artifactId>
    </dependency>
    <dependency>
        <groupId>junit</groupId>
        <artifactId>junit</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
    </dependency>
    <!-- https://mvnrepository.com/artifact/javax.xml.bind/jaxb-api -->
    <dependency>
        <groupId>javax.xml.bind</groupId>
        <artifactId>jaxb-api</artifactId>
        <version>2.3.0-b170201.1204</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/javax.activation/activation -->
    <dependency>
        <groupId>javax.activation</groupId>
        <artifactId>activation</artifactId>
        <version>1.1</version>
    </dependency>
    <!-- https://mvnrepository.com/artifact/org.glassfish.jaxb/jaxb-runtime -->
    <dependency>
        <groupId>org.glassfish.jaxb</groupId>
        <artifactId>jaxb-runtime</artifactId>
        <version>2.3.0-b170127.1453</version>
    </dependency>

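Step 3

This step is the most important one: configuring the sharding rule. The data is split by rows into two tables, so this is horizontal table splitting. The configuration is as follows:

- Basic configuration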

    spring:
      application:
        name: sharding-jdbc-simple
      http:
        encoding:
          enabled: true
          charset: UTF-8
          force: true
      main:
        allow-bean-definition-overriding: true

- Data source configuration

    spring:   # same top-level key as in the basic configuration; merge both parts in one file
      shardingsphere:
        datasource:
          names: db1
          db1:
            type: com.alibaba.druid.pool.DruidDataSource
            driver-class-name: com.mysql.jdbc.Driver
            url: jdbc:mysql://127.0.0.1:3306/order?characterEncoding=UTF-8&useSSL=false
            username: root
            password: 123456
        sharding:
          tables:
            order:
              # physical tables order_1 and order_2 created in step 1
              actual-data-nodes: db1.order_$->{1..2}
              key-generator:
                column: order_id
                type: SNOWFLAKE
              table-strategy:
                inline:
                  sharding-column: order_id
                  # even order_id -> order_1, odd order_id -> order_2
                  algorithm-expression: order_$->{order_id % 2 + 1}
        props:
          sql:
            show: true
    server:
      servlet:
        context-path: /sharding-jdbc
    mybatis:
      configuration:
        map-underscore-to-camel-case: true

That is the complete Sharding-JDBC configuration. It also includes a MyBatis setting and enables SQL logging.
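The actual-data-nodes value is written as an inline expression, for example db1.order_$->{1..2}. The sketch below is a hypothetical, greatly simplified expander for a single numeric range of that form. It is not how ShardingSphere evaluates the expression (inline expressions there use Groovy syntax and support far more than this); the class `InlineRangeExpander` exists only to show which list of physical tables such a value stands for.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Simplified, hypothetical expander for one "$->{from..to}" range in a data-node string. */
class InlineRangeExpander {

    // Matches "$->{1..2}" and captures the two integer bounds.
    private static final Pattern RANGE = Pattern.compile("\\$->\\{(\\d+)\\.\\.(\\d+)\\}");

    /** Expands the first numeric range found; returns the input unchanged if there is none. */
    static List<String> expand(String expression) {
        List<String> nodes = new ArrayList<>();
        Matcher matcher = RANGE.matcher(expression);
        if (!matcher.find()) {
            nodes.add(expression);
            return nodes;
        }
        int from = Integer.parseInt(matcher.group(1));
        int to = Integer.parseInt(matcher.group(2));
        String prefix = expression.substring(0, matcher.start());
        String suffix = expression.substring(matcher.end());
        for (int i = from; i <= to; i++) {
            nodes.add(prefix + i + suffix);
        }
        return nodes;
    }

    public static void main(String[] args) {
        System.out.println(expand("db1.order_$->{1..2}"));
    }
}
```

The expanded names must match tables that really exist in the database; if the prefix in the expression differs from the table names created in step 1, the rewritten SQL would target tables that are not there.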

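Next, we write a JUnit test that inserts rows and then check the database: orders with an even order_id should be in table 1, and orders with an odd order_id in table 2.

JUnit test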

    @Mapper
    @Component
    public interface OrderDao {

        /**
         * Insert a new order.
         */
        @Insert("INSERT INTO order(user_id,product_name,COUNT) VALUES(#{user_id},#{product_name},#{count})")
        int insertOrder(@Param("user_id") int user_id,
                        @Param("product_name") String product_name,
                        @Param("count") int count);
    }

    // Test (JUnit 4; the runner and @SpringBootTest are needed so that OrderDao is injected)
    @RunWith(SpringRunner.class)
    @SpringBootTest
    public class OrderTest {

        @Autowired
        OrderDao orderDao;

        @Test
        public void testInsertOrder() {
            for (int i = 0; i < 10; i++) {
                orderDao.insertOrder(100 + i, "大冰箱" + i, 10);
            }
        }
    }

After the test finishes, we check whether the rows were stored in the two tables. Before that, let's look at the SQL log.

    SQLStatement: InsertStatement(super=DMLStatement(super=AbstractSQLStatement(type=DML, tables=Tables(tables=[Table(name=order, alias=Optional.absent())]), routeConditions=Conditions(orCondition=OrCondition(andConditions=[AndCondition(conditions=[])])), encryptConditions=Conditions(orCondition=OrCondition(andConditions=[])), sqlTokens=[TableToken(tableName=order, quoteCharacter=NONE, schemaNameLength=0), SQLToken(startIndex=17)], parametersIndex=3, logicSQL=INSERT INTO order(user_id,product_name,COUNT) VALUES(?,?,?)), deleteStatement=false, updateTableAlias={}, updateColumnValues={}, whereStartIndex=0, whereStopIndex=0, whereParameterStartIndex=0, whereParameterEndIndex=0), columnNames=[user_id, product_name, COUNT], values=[InsertValue(columnValues=[org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@d611f1c, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@4f2d014a, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@51fc862e])])
    2022-06-13 13:47:59.923 INFO 7384 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: INSERT INTO order_1 (user_id, product_name, COUNT, order_id) VALUES (?, ?, ?, ?) ::: [107, 大冰箱7, 10, 743103497175564288]
    2022-06-13 13:47:59.976 INFO 7384 --- [ main] ShardingSphere-SQL : Rule Type: sharding
    2022-06-13 13:47:59.976 INFO 7384 --- [ main] ShardingSphere-SQL : Logic SQL: INSERT INTO order(user_id,product_name,COUNT) VALUES(?,?,?)
    2022-06-13 13:47:59.976 INFO 7384 --- [ main] ShardingSphere-SQL : SQLStatement: InsertStatement(super=DMLStatement(super=AbstractSQLStatement(type=DML, tables=Tables(tables=[Table(name=order, alias=Optional.absent())]), routeConditions=Conditions(orCondition=OrCondition(andConditions=[AndCondition(conditions=[])])), encryptConditions=Conditions(orCondition=OrCondition(andConditions=[])), sqlTokens=[TableToken(tableName=order, quoteCharacter=NONE, schemaNameLength=0), SQLToken(startIndex=17)], parametersIndex=3, logicSQL=INSERT INTO order(user_id,product_name,COUNT) VALUES(?,?,?)), deleteStatement=false, updateTableAlias={}, updateColumnValues={}, whereStartIndex=0, whereStopIndex=0, whereParameterStartIndex=0, whereParameterEndIndex=0), columnNames=[user_id, product_name, COUNT], values=[InsertValue(columnValues=[org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@d611f1c, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@4f2d014a, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@51fc862e])])
    2022-06-13 13:47:59.977 INFO 7384 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: INSERT INTO order_2 (user_id, product_name, COUNT, order_id) VALUES (?, ?, ?, ?) ::: [108, 大冰箱8, 10, 743103497402056705]
    2022-06-13 13:48:00.036 INFO 7384 --- [ main] ShardingSphere-SQL : Rule Type: sharding
    2022-06-13 13:48:00.036 INFO 7384 --- [ main] ShardingSphere-SQL : Logic SQL: INSERT INTO order(user_id,product_name,COUNT) VALUES(?,?,?)
    2022-06-13 13:48:00.036 INFO 7384 --- [ main] ShardingSphere-SQL : SQLStatement: InsertStatement(super=DMLStatement(super=AbstractSQLStatement(type=DML, tables=Tables(tables=[Table(name=order, alias=Optional.absent())]), routeConditions=Conditions(orCondition=OrCondition(andConditions=[AndCondition(conditions=[])])), encryptConditions=Conditions(orCondition=OrCondition(andConditions=[])), sqlTokens=[TableToken(tableName=order, quoteCharacter=NONE, schemaNameLength=0), SQLToken(startIndex=17)], parametersIndex=3, logicSQL=INSERT INTO order(user_id,product_name,COUNT) VALUES(?,?,?)), deleteStatement=false, updateTableAlias={}, updateColumnValues={}, whereStartIndex=0, whereStopIndex=0, whereParameterStartIndex=0, whereParameterEndIndex=0), columnNames=[user_id, product_name, COUNT], values=[InsertValue(columnValues=[org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@d611f1c, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@4f2d014a, org.apache.shardingsphere.core.parse.old.parser.expression.SQLPlaceholderExpression@51fc862e])])
    2022-06-13 13:48:00.036 INFO 7384 --- [ main] ShardingSphere-SQL : Actual SQL: db1 ::: INSERT INTO order_1 (user_id, product_name, COUNT, order_id) VALUES (?, ?, ?, ?) ::: [109, 大冰箱9, 10, 743103497649520640]
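The order_id values in the log were not supplied by the test; they come from the SNOWFLAKE key generator named in the configuration. The sketch below takes such an ID apart, assuming the common Snowflake layout of a timestamp offset in the high bits, a 10-bit worker ID and a 12-bit sequence in the low bits. That layout is an assumption of this example (check the ShardingSphere documentation for the version you use), and `SnowflakeIdParts` is a hypothetical helper, not a library class.

```java
/** Splits a Snowflake-style ID into its parts, assuming a 10-bit worker ID and 12-bit sequence. */
class SnowflakeIdParts {

    static final int SEQUENCE_BITS = 12; // assumed width of the per-millisecond sequence
    static final int WORKER_BITS = 10;   // assumed width of the worker ID

    /** Milliseconds since the generator's own epoch (the epoch itself is not decoded here). */
    static long timestampOffset(long id) {
        return id >>> (SEQUENCE_BITS + WORKER_BITS);
    }

    static long workerId(long id) {
        return (id >>> SEQUENCE_BITS) & ((1L << WORKER_BITS) - 1);
    }

    static long sequence(long id) {
        return id & ((1L << SEQUENCE_BITS) - 1);
    }

    /** Inverse of the three accessors above, used to check the bit arithmetic. */
    static long compose(long timestampOffset, long workerId, long sequence) {
        return (timestampOffset << (SEQUENCE_BITS + WORKER_BITS)) | (workerId << SEQUENCE_BITS) | sequence;
    }

    public static void main(String[] args) {
        long[] idsFromLog = {743103497175564288L, 743103497402056705L, 743103497649520640L};
        for (long id : idsFromLog) {
            System.out.println(id + " -> offset=" + timestampOffset(id)
                    + ", worker=" + workerId(id) + ", sequence=" + sequence(id));
        }
    }
}
```

Under this layout, the lowest bit of the ID is the lowest bit of the sequence, so a rule such as order_id % 2 depends only on the sequence part. That is worth keeping in mind when judging how evenly generated keys spread over the two tables.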

Now let's look at the database:

order_2:

order_1:

It worked on the first try. Next, we run a query against these tables.

Here is another test:

    @Test
    public void testFindOrderByIds() {
        List<Long> ids = new ArrayList<>();
        ids.add(743103495833387008L);
        ids.add(743103495321681921L);

        List<Map> list = orderDao.findOrderByIds(ids);
        System.out.println(list);
    }

As before, we take one order_id from table 1 and one from table 2 and run an IN query to see whether the expected rows come back. The mapper method is:

    /**
     * Query orders by ID.
     */
    @Select({"<script>" +
            "select * from order p where p.order_id in " +
            "<foreach collection='orderIds' item='id' open='(' separator=',' close=')'>#{id}</foreach>" +
            "</script>"})
    List<Map> findOrderByIds(@Param("orderIds") List<Long> orderIds);

Here is the result:

[{user_id=101, COUNT=10, order_id=743103495833387008, product_name=大冰箱1}, {user_id=100, COUNT=10, order_id=743103495321681921, product_name=大冰箱0}]
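The two rows in the result live in different physical tables, so the IN query has to touch both of them. The sketch below only works out which table each ID in the list belongs to, using the even/odd rule from this article. `InQueryRouting` is a hypothetical helper; how Sharding-JDBC actually rewrites and merges the statements is not reproduced here and is best read from its SQL log.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Groups the IDs of an IN list by the physical table that holds them (illustration only). */
class InQueryRouting {

    static Map<String, List<Long>> groupByTable(List<Long> orderIds) {
        Map<String, List<Long>> byTable = new TreeMap<>();
        for (long id : orderIds) {
            // Same rule as the insert path: even -> order_1, odd -> order_2.
            String table = "order_" + (Math.floorMod(id, 2L) + 1);
            byTable.computeIfAbsent(table, key -> new ArrayList<>()).add(id);
        }
        return byTable;
    }

    public static void main(String[] args) {
        List<Long> ids = Arrays.asList(743103495833387008L, 743103495321681921L);
        System.out.println(groupByTable(ids));
    }
}
```

With the two IDs from the test, each table receives exactly one ID, which matches the result above: one row from each shard.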

It works: single-database horizontal table sharding with Sharding-JDBC is complete.