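1. Concepts

1. The suggester APIs fall into four main categories

- Term Suggester (spelling correction: given a misspelled word, suggests the correct one)

- Phrase Suggester (phrase-level suggestion: given part of a phrase, suggests the whole phrase)

- Completion Suggester (autocompletion: type the first part of a word and it completes the rest)

- Context Suggester (context-aware completion)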

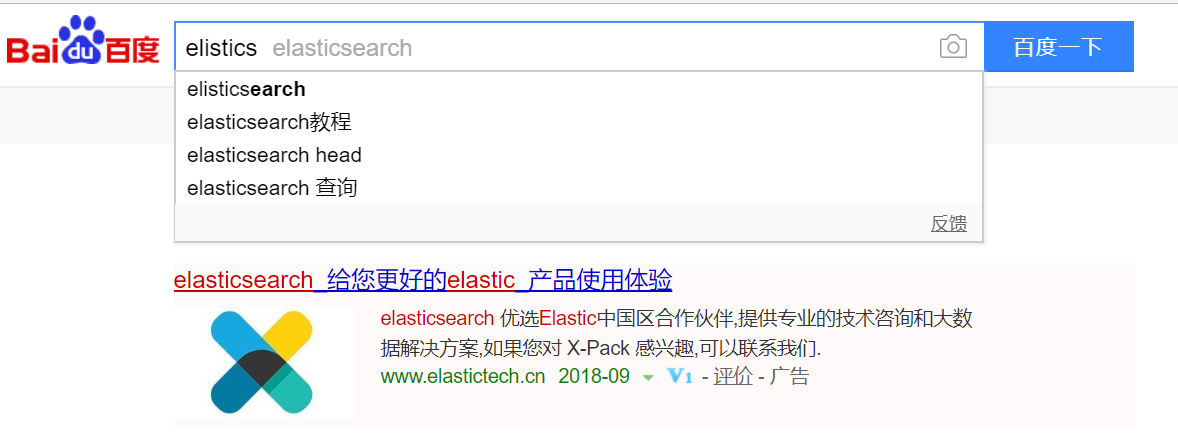

整体效果类似百度搜索,如图:

2. Term Suggester (spelling correction)

2.1. API

1. Create the index

PUT /book4
{"mappings": {"english": {"properties": {"passage": {"type": "text"}}}}}

2.2. Insert data

curl -H "Content-Type: application/json" -XPOST 'http://localhost:9200/_bulk' -d'
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "Lucene is cool"}
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "Elasticsearch builds on top of lucene"}
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "Elasticsearch rocks"}
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "Elastic is the company behind ELK stack"}
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "elk rocks"}
{ "index" : { "_index" : "book4", "_type" : "english" } }
{ "passage": "elasticsearch is rock solid"}
'
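The bulk body is newline-delimited JSON: one action line followed by one document line per record, ending with a newline. As a rough sketch, the same body could be generated in Python like this. The helper name build_bulk_body is hypothetical, the index and type names are the ones used above, and nothing is sent to a cluster:

```python
import json

PASSAGES = [
    "Lucene is cool",
    "Elasticsearch builds on top of lucene",
    "Elasticsearch rocks",
    "Elastic is the company behind ELK stack",
    "elk rocks",
    "elasticsearch is rock solid",
]


def build_bulk_body(passages, index="book4", doc_type="english"):
    """Return an NDJSON string: an action line, then a source line, per document."""
    lines = []
    for passage in passages:
        lines.append(json.dumps({"index": {"_index": index, "_type": doc_type}}))
        lines.append(json.dumps({"passage": passage}))
    # The bulk endpoint expects the body to end with a newline.
    return "\n".join(lines) + "\n"


bulk_body = build_bulk_body(PASSAGES)
```

The resulting string can be posted to /_bulk with any HTTP client.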

2.3. Inspect the tokens that get indexed

POST /_analyze
{"text": ["Lucene is cool","Elasticsearch builds on top of lucene","Elasticsearch rocks","Elastic is the company behind ELK stack","elk rocks","elasticsearch is rock solid"]}

Result:

{"tokens": [{"token": "lucene","start_offset": 0,"end_offset": 6,"type": "<ALPHANUM>","position": 0},{"token": "is","start_offset": 7,"end_offset": 9,"type": "<ALPHANUM>","position": 1},{"token": "cool","start_offset": 10,"end_offset": 14,"type": "<ALPHANUM>","position": 2},{"token": "elasticsearch","start_offset": 15,"end_offset": 28,"type": "<ALPHANUM>","position": 103},{"token": "builds","start_offset": 29,"end_offset": 35,"type": "<ALPHANUM>","position": 104},{"token": "on","start_offset": 36,"end_offset": 38,"type": "<ALPHANUM>","position": 105},{"token": "top","start_offset": 39,"end_offset": 42,"type": "<ALPHANUM>","position": 106},{"token": "of","start_offset": 43,"end_offset": 45,"type": "<ALPHANUM>","position": 107},{"token": "lucene","start_offset": 46,"end_offset": 52,"type": "<ALPHANUM>","position": 108},{"token": "elasticsearch","start_offset": 53,"end_offset": 66,"type": "<ALPHANUM>","position": 209},{"token": "rocks","start_offset": 67,"end_offset": 72,"type": "<ALPHANUM>","position": 210},{"token": "elastic","start_offset": 73,"end_offset": 80,"type": "<ALPHANUM>","position": 311},{"token": "is","start_offset": 81,"end_offset": 83,"type": "<ALPHANUM>","position": 312},{"token": "the","start_offset": 84,"end_offset": 87,"type": "<ALPHANUM>","position": 313},{"token": "company","start_offset": 88,"end_offset": 95,"type": "<ALPHANUM>","position": 314},{"token": "behind","start_offset": 96,"end_offset": 102,"type": "<ALPHANUM>","position": 315},{"token": "elk","start_offset": 103,"end_offset": 106,"type": "<ALPHANUM>","position": 316},{"token": "stack","start_offset": 107,"end_offset": 112,"type": "<ALPHANUM>","position": 317},{"token": "elk","start_offset": 113,"end_offset": 116,"type": "<ALPHANUM>","position": 418},{"token": "rocks","start_offset": 117,"end_offset": 122,"type": "<ALPHANUM>","position": 419},{"token": "elasticsearch","start_offset": 123,"end_offset": 136,"type": "<ALPHANUM>","position": 520},{"token": "is","start_offset": 137,"end_offset": 139,"type": "<ALPHANUM>","position": 521},{"token": "rock","start_offset": 140,"end_offset": 144,"type": "<ALPHANUM>","position": 522},{"token": "solid","start_offset": 145,"end_offset": 150,"type": "<ALPHANUM>","position": 523}]}

2.4. Term suggest API (single field)

Try a search with the misspelled word Elasticsearaach:

POST /book4/_search
{"suggest" : {"my-suggestion" : {"text" : "Elasticsearaach","term" : {"field" : "passage","suggest_mode": "popular"}}}}

Response:

{"took": 26,"timed_out": false,"_shards": {"total": 5,"successful": 5,"skipped": 0,"failed": 0},"hits": {"total": 0,"max_score": 0,"hits": []},"suggest": {"my-suggestion": [{"text": "elasticsearaach","offset": 0,"length": 15,"options": [{"text": "elasticsearch","score": 0.84615386,"freq": 3}]}]}}
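On the client side, the useful part of this response is the options list under each analyzed token. A small Python sketch of pulling the corrections out, assuming the response has already been parsed into a dict; the function name extract_suggestions is made up for this example:

```python
def extract_suggestions(response, name):
    """Map each analyzed input token to its suggested corrections, best first."""
    result = {}
    for entry in response.get("suggest", {}).get(name, []):
        options = sorted(entry["options"], key=lambda o: o["score"], reverse=True)
        result[entry["text"]] = [o["text"] for o in options]
    return result


# The response shown above, trimmed to the part that matters here.
sample_response = {
    "suggest": {
        "my-suggestion": [
            {
                "text": "elasticsearaach",
                "offset": 0,
                "length": 15,
                "options": [
                    {"text": "elasticsearch", "score": 0.84615386, "freq": 3}
                ],
            }
        ]
    }
}

corrections = extract_suggestions(sample_response, "my-suggestion")
print(corrections)  # {'elasticsearaach': ['elasticsearch']}
```

A token with an empty options list maps to an empty list, which a caller can treat as "no correction available".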

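2.5. Suggestions for several fields in one request:

POST _search
{"suggest": {"my-suggest-1" : {"text" : "tring out Elasticsearch","term" : {"field" : "message"}},"my-suggest-2" : {"text" : "kmichy","term" : {"field" : "user"}}}}

The term suggester suggests terms based on edit distance. The supplied suggest text is analyzed before terms are suggested, and suggestions are returned per analyzed token of that text. The term suggester does not take the query part of the request into account.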
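To make "edit distance" concrete, here is a plain Levenshtein distance in Python. This is a simplification for illustration only: by default Elasticsearch uses an optimized Damerau-Levenshtein variant, which this sketch does not reproduce.

```python
def levenshtein(a, b):
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    previous = list(range(len(b) + 1))
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute or match
            ))
        previous = current
    return previous[-1]


distance = levenshtein("elasticsearaach", "elasticsearch")
print(distance)  # 2
```

The misspelling from section 2.4 is two deletions away from the indexed term, which is within the default maximum edit distance of 2.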

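Common suggest options:

| Option | Description |
|---|---|
| text | The suggest text. Required; it must be set either globally or per suggestion. |
| field | The field to fetch candidate suggestions from. Required; it must be set either globally or per suggestion. |
| analyzer | The analyzer used to analyze the suggest text. Defaults to the search analyzer of the suggest field. |
| size | The maximum number of corrections returned per suggest text token. |
| sort | How suggestions are sorted per suggest text term. Two possible values: score (sort by score first, then document frequency, then the term itself) and frequency (sort by document frequency first, then similarity score, then the term itself). |
| suggest_mode | Controls which suggestions are included, or for which suggest text terms suggestions are made. Three possible values: missing (only suggest for suggest text terms that are not in the index; this is the default), popular (only suggest terms that occur in more documents than the original suggest text term), always (suggest any matching term based on the terms in the suggest text). |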

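Other term suggest options:

| Option | Description |
|---|---|
| lowercase_terms | Lowercases the suggest text terms after text analysis. |
| max_edits | The maximum edit distance a candidate may have to be considered a suggestion. Can only be 1 or 2; any other value results in a bad request error. Defaults to 2. |
| prefix_length | The minimum number of prefix characters that must match for a term to be a candidate. Defaults to 1. Increasing this number improves spellcheck performance; misspellings usually do not occur at the beginning of a term. (The old name "prefix_len" is deprecated.) |
| min_word_length | The minimum length a suggest text term must have to be included. Defaults to 4. (The old name "min_word_len" is deprecated.) |
| shard_size | The maximum number of suggestions retrieved from each individual shard. During the reduce phase, only the top N suggestions are returned based on the size option. Defaults to the size option. Setting this higher than size can give more accurate document frequencies for spelling corrections, at the cost of performance. Because terms are partitioned among shards, shard-level document frequencies of spelling corrections may be imprecise; increasing this value makes them more precise. |
| max_inspections | A factor multiplied with shard_size in order to inspect more candidate spelling corrections at the shard level. Can improve accuracy at the cost of performance. Defaults to 5. |
| min_doc_freq | The minimum number of documents a suggestion should appear in. Can be given as an absolute number or as a relative percentage of the number of documents. This can improve quality by suggesting only high-frequency terms. Defaults to 0f, which means it is not enabled. A value greater than 1 cannot be fractional. Shard-level document frequencies are used for this option. |
| max_term_freq | The maximum number of documents a suggest text token may occur in and still be included. Can be a relative percentage (for example 0.4) or an absolute number. A value greater than 1 cannot be fractional. Defaults to 0.01f. This can be used to exclude high-frequency terms from spellchecking; such terms are usually spelled correctly, and excluding them also improves spellcheck performance. Shard-level document frequencies are used for this option. |
| string_distance | The string distance implementation used to compare how similar suggested terms are. Five possible values: internal (the default, based on damerau_levenshtein but highly optimized for comparing string distance for terms inside the index), damerau_levenshtein (based on the Damerau-Levenshtein algorithm), levenshtein (based on the Levenshtein edit distance algorithm), jaro_winkler (based on the Jaro-Winkler algorithm), ngram (based on character n-grams). |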
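To show how a few of these options fit into a request, here is a hedged Python sketch that assembles a term suggest body and rejects a max_edits value outside the allowed 1 to 2 range before anything is sent. The helper build_term_suggest is hypothetical, not part of any Elasticsearch client; the defaults mirror the option descriptions above.

```python
def build_term_suggest(name, text, field, max_edits=2, prefix_length=1,
                       min_word_length=4, suggest_mode="missing"):
    """Build a term-suggest search body, rejecting an out-of-range max_edits."""
    if max_edits not in (1, 2):
        raise ValueError("max_edits must be 1 or 2")
    if suggest_mode not in ("missing", "popular", "always"):
        raise ValueError("unknown suggest_mode: %r" % suggest_mode)
    return {
        "suggest": {
            name: {
                "text": text,
                "term": {
                    "field": field,
                    "suggest_mode": suggest_mode,
                    "max_edits": max_edits,
                    "prefix_length": prefix_length,
                    "min_word_length": min_word_length,
                },
            }
        }
    }


body = build_term_suggest("my-suggestion", "Elasticsearaach", "passage",
                          suggest_mode="popular")
```

Serialized as JSON, body has the same shape as the request in section 2.4 with the extra options spelled out.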

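3. Phrase Suggester: phrase correction

The phrase suggester builds on the term suggester and additionally considers how the terms relate to one another, for example whether they occur together in the indexed source text, how close together they appear, and how frequent they are.

Example 1:

POST book4/_search
{"suggest" : {"myss":{"text": "Elasticsearch rock","phrase": {"field": "passage"}}}}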

{"took": 11,"timed_out": false,"_shards": {"total": 5,"successful": 5,"skipped": 0,"failed": 0},"hits": {"total": 0,"max_score": 0,"hits": []},"suggest": {"myss": [{"text": "Elasticsearch rock","offset": 0,"length": 18,"options": [{"text": "elasticsearch rocks","score": 0.3467123}]}]}}

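4. Completion Suggester: autocompletion

A suggester designed for the autocomplete scenario. Here a query is sent to the backend on every keystroke to look up matches, so when the user types quickly the backend has to respond very fast. For that reason it uses a different data structure from the two suggesters above: instead of going through the inverted index, the analyzed data is encoded into an FST (finite state transducer) that is stored alongside the index. For an open index, ES loads the whole FST into memory, which makes prefix lookups extremely fast. However, an FST can only be used for prefix lookups, and that is the main limitation of the Completion Suggester.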
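The prefix-only behavior can be illustrated with a much simpler structure. The Python sketch below uses a sorted list searched with bisect; it is not an FST and says nothing about how Lucene stores completions, it only shows why a lookup works for a prefix but not for text in the middle of an input. The class name PrefixIndex is invented for this example.

```python
import bisect


class PrefixIndex:
    """Toy in-memory prefix index: a sorted list searched with bisect."""

    def __init__(self, inputs):
        # Lowercase and de-duplicate once, at "index time".
        self._terms = sorted({term.lower() for term in inputs})

    def complete(self, prefix, size=5):
        prefix = prefix.lower()
        # All terms sharing the prefix sit in one contiguous run.
        start = bisect.bisect_left(self._terms, prefix)
        matches = []
        for term in self._terms[start:]:
            if not term.startswith(prefix) or len(matches) == size:
                break
            matches.append(term)
        return matches


index = PrefixIndex(["test", "book", "good", "test english", "book1 english"])
print(index.complete("te"))   # ['test', 'test english']
print(index.complete("ook"))  # [] because infix matches are not found
```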

1. Create the index

PUT /book5
{"mappings": {"music" : {"properties" : {"suggest" : {"type" : "completion"},"title" : {"type": "keyword"}}}}}

Insert data:


input sets the terms to match; weight (optional) sets the ranking weight.

PUT book5/music/5nupmmUBYLvVFwGWH3cu?refresh
{"suggest" : {"input": [ "test", "book" ],"weight" : 34}}

Specify a different weight per input:

PUT book5/music/6Hu2mmUBYLvVFwGWxXef?refresh
{"suggest" : [{"input": "test","weight" : 10},{"input": "good","weight" : 3}]}

Example 1: suggestions by prefix

POST book5/_search?pretty
{"suggest": {"song-suggest" : {"prefix" : "te","completion" : {"field" : "suggest"}}}}

{"took": 8,"timed_out": false,"_shards": {"total": 5,"successful": 5,"skipped": 0,"failed": 0},"hits": {"total": 0,"max_score": 0,"hits": []},"suggest": {"song-suggest": [{"text": "te","offset": 0,"length": 2,"options": [{"text": "test my book1","_index": "book5","_type": "music","_id": "6Xu6mmUBYLvVFwGWpXeL","_score": 1,"_source": {"suggest": "test my book1"}},{"text": "test my book1","_index": "book5","_type": "music","_id": "6nu8mmUBYLvVFwGWSndC","_score": 1,"_source": {"suggest": "test my book1"}},{"text": "test my book1 english","_index": "book5","_type": "music","_id": "63u8mmUBYLvVFwGWZHdC","_score": 1,"_source": {"suggest": "test my book1 english"}}]}]}}

Example 2: de-duplicate the suggestions

POST book5/_search?pretty
{"suggest": {"song-suggest" : {"prefix" : "te","completion" : {"field" : "suggest" ,"skip_duplicates": true}}}}

Example 3: documents whose suggestion inputs are phrases

POST /book5/music/63u8mmUBYLvVFwGWZHdC?refresh
{"suggest" : {"input": [ "book1 english", "test english" ],"weight" : 20}}

Query:

POST book5/_search?pretty
{"suggest": {"song-suggest" : {"prefix" : "test","completion" : {"field" : "suggest" ,"skip_duplicates": true}}}}

Result:

{"took": 7,"timed_out": false,"_shards": {"total": 5,"successful": 5,"skipped": 0,"failed": 0},"hits": {"total": 0,"max_score": 0,"hits": []},"suggest": {"song-suggest": [{"text": "test","offset": 0,"length": 4,"options": [{"text": "test english","_index": "book5","_type": "music","_id": "63u8mmUBYLvVFwGWZHdC","_score": 20,"_source": {"suggest": {"input": ["book1 english","test english"],"weight": 20}}},{"text": "test my book1","_index": "book5","_type": "music","_id": "6Xu6mmUBYLvVFwGWpXeL","_score": 1,"_source": {"suggest": "test my book1"}}]}]}}

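5. Summary and recommendations

Getting good results from the Completion Suggester is not easy. In real application development you need to combine analyzer and mapping parameters to suit the characteristics of the data and the business requirements, and tune repeatedly, before the completions look right.

Back to the completion and correction feature of the search box at the top of this post: how would you implement it with ES? One approach I can think of: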

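- While the user is just starting to type, use the Completion Suggester for keyword prefix matching. At first there will be many matches, and they narrow down as more characters are typed. If the input is accurate, the Completion Suggester results may already be good enough and the user sees the desired candidates.

- If the Completion Suggester returns zero matches, the user has probably made a typo, so try the Phrase Suggester next.

- If the Phrase Suggester finds no options either, fall back to the Term Suggester.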
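The fallback order above can be sketched as a small Python function. The three suggesters are passed in as callables so the logic stays independent of any client library; the stub functions are placeholders for real Elasticsearch calls, and all names here are hypothetical.

```python
def suggest_with_fallback(text, completion, phrase, term):
    """Try each suggester in order and return the first non-empty result.

    Each argument after `text` is a callable taking the user input and
    returning a list of suggestion strings (possibly empty).
    """
    for name, suggester in (("completion", completion),
                            ("phrase", phrase),
                            ("term", term)):
        options = suggester(text)
        if options:
            return name, options
    return None, []


# Stub suggesters standing in for real Elasticsearch calls.
def fake_completion(text):
    return ["test english"] if "test english".startswith(text) else []


def fake_phrase(text):
    return ["elasticsearch rocks"] if text == "Elasticsearch rock" else []


def fake_term(text):
    return ["elasticsearch"] if text == "Elasticsearaach" else []


print(suggest_with_fallback("te", fake_completion, fake_phrase, fake_term))
# ('completion', ['test english'])
print(suggest_with_fallback("Elasticsearaach", fake_completion, fake_phrase, fake_term))
# ('term', ['elasticsearch'])
```

Returning the name of the suggester that answered lets the caller decide how to present the result, for example as a completion list versus a "did you mean" hint.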

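In terms of precision, Completion > Phrase > Term, while recall runs the other way. In terms of performance, the Completion Suggester is the fastest; if it meets the business need, using only the Completion Suggester for prefix matching is the ideal choice. Phrase and Term search the inverted index, so they should be considerably slower by comparison. Keep the amount of data in the indices used by the suggesters as small as possible; ideally, after some warm-up, the whole index can be mapped into memory.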