Environment:

Operating system: Windows 10

Scala: 2.12.14

Spark: 3.1.2

Hadoop: 3.2.0

Installing Spark is simple: just add the dependency to your Maven project.

    <dependencies>
        <!-- https://mvnrepository.com/artifact/org.apache.spark/spark-core -->
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.12</artifactId>
            <version>3.1.2</version>
        </dependency>
    </dependencies>

However, running a program then prints some warnings, for example:

    WARNING: An illegal reflective access operation has occurred
    WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/C:/Users/Administrator/.m2/repository/org/apache/spark/spark-unsafe_2.12/3.1.2/spark-unsafe_2.12-3.1.2.jar) to constructor java.nio.DirectByteBuffer(long,int)
    WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform

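Strictly speaking, this warning comes from the JVM: on Java 9 and later, Spark's reflective access to `java.nio.DirectByteBuffer` is flagged, and the message itself is harmless. The problem that actually matters on Windows is that there are no Hadoop resources available locally.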

So we also need to install Hadoop.

TODO

Reference: Install Hadoop 3.3.0 on Windows 10 Step by Step Guide
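Once Hadoop is unpacked, it helps to check that the JVM can actually see it. The snippet below is a minimal sketch, not part of the referenced guide: it assumes the usual Windows layout in which `HADOOP_HOME` points at the Hadoop directory and `winutils.exe` sits in its `bin` folder. The object name `HadoopHomeCheck` and the `describe` helper are hypothetical names chosen for illustration.

```scala
import java.nio.file.{Files, Paths}

// Hypothetical helper: reports whether a Hadoop home looks usable on Windows.
object HadoopHomeCheck {

  // Returns a short status message for a candidate HADOOP_HOME value.
  // Assumption: winutils.exe is expected under <HADOOP_HOME>\bin.
  def describe(hadoopHome: Option[String]): String = hadoopHome match {
    case None =>
      "HADOOP_HOME is not set"
    case Some(home) =>
      val winutils = Paths.get(home, "bin", "winutils.exe")
      if (Files.exists(winutils)) s"found $winutils"
      else s"HADOOP_HOME is set, but $winutils is missing"
  }

  def main(args: Array[String]): Unit =
    // Read the environment variable of the current process and report on it.
    println(describe(sys.env.get("HADOOP_HOME")))
}
```

If the variable was set only a moment ago, the IDE or terminal probably has to be restarted before the new value is visible to the program.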

================

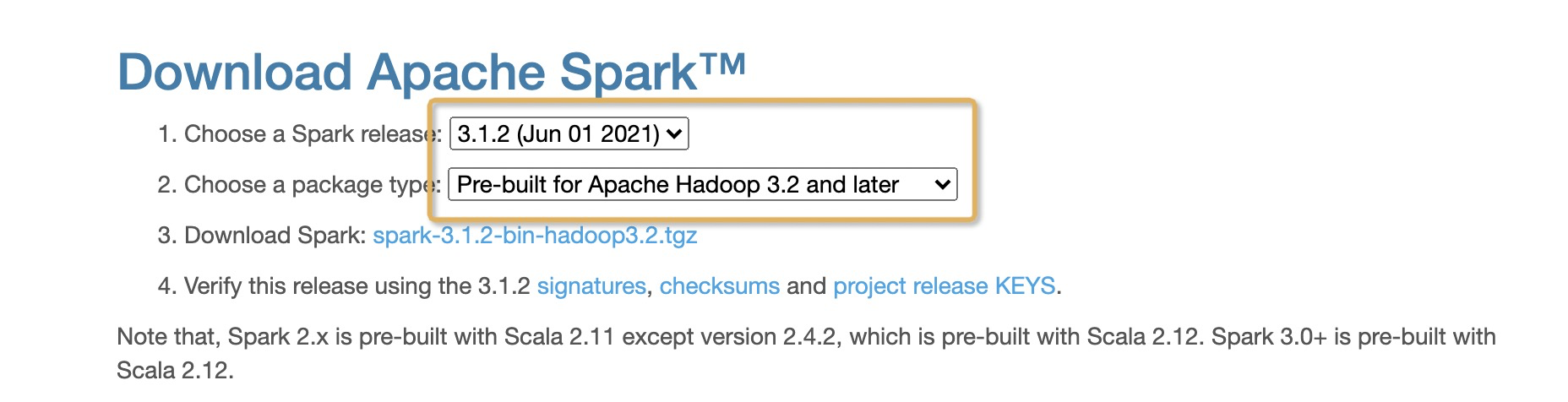

New approach: install the prebuilt Hadoop + Spark package directly.

https://blog.csdn.net/hil2000/article/details/90747665

    import org.apache.spark.{SparkConf, SparkContext}

    object Spark01_WordCount {
      def main(args: Array[String]): Unit = {
        // Application
        // Spark framework
        // TODO: establish the connection to the Spark framework
        // JDBC: Connection
        val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
        val sc = new SparkContext(sparkConf)

        // TODO: run the business logic

        // TODO: close the connection
        sc.stop()
      }
    }
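The skeleton above leaves the business logic as a TODO. One possible way to fill it in is sketched below, assuming a plain RDD word count over a small in-memory list rather than a file. The object name `Spark01_WordCount_Sketch`, the `countWords` helper, and the sample sentences are made up for illustration; only `SparkConf`, `SparkContext`, and the standard RDD operations come from Spark itself.

```scala
import org.apache.spark.{SparkConf, SparkContext}

// Hypothetical variant of the skeleton with the word count filled in.
object Spark01_WordCount_Sketch {

  // Counts how often each whitespace-separated word occurs in the given lines.
  def countWords(lines: Seq[String]): Map[String, Int] = {
    // Same connection setup as the skeleton: local master, app name "WordCount".
    val sparkConf = new SparkConf().setMaster("local").setAppName("WordCount")
    val sc = new SparkContext(sparkConf)
    try {
      sc.parallelize(lines)           // turn the local collection into an RDD
        .flatMap(_.split("\\s+"))     // split each line into words
        .filter(_.nonEmpty)           // drop empty tokens from leading whitespace
        .map(word => (word, 1))       // pair each word with a count of 1
        .reduceByKey(_ + _)           // sum the counts per word
        .collect()                    // bring the result back to the driver
        .toMap
    } finally {
      sc.stop()                       // close the connection even on failure
    }
  }

  def main(args: Array[String]): Unit = {
    // Sample input is an assumption; a real job would likely read from a file.
    val counts = countWords(Seq("hello spark", "hello scala"))
    counts.toSeq.sortBy(_._1).foreach { case (word, n) => println(s"$word: $n") }
  }
}
```

With the sample input, `hello` should come out with a count of 2 and the other two words with 1 each. Wrapping the job in `try`/`finally` is a design choice of this sketch, so that `sc.stop()` still runs if the job throws.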