[root@liabio kubevirt-0.33.0]# export DOCKER_PREFIX=registry.cn-hangzhou.aliyuncs.com/smallsoup

[root@liabio kubevirt-0.33.0]# export DOCKER_TAG=kubeinfo

[root@liabio kubevirt-0.33.0]# make && make push

hack/dockerized "DOCKER_PREFIX=registry.cn-hangzhou.aliyuncs.com/smallsoup DOCKER_TAG=kubeinfo IMAGE_PULL_POLICY= VERBOSITY= ./hack/build-manifests.sh && \

hack/bazel-fmt.sh && hack/bazel-build.sh"

selecting docker as container runtime

go version go1.13.14 linux/amd64

Unable to find image 'imega/jq:1.6' locally

1.6: Pulling from imega/jq

7962620bcd88: Pulling fs layer

7962620bcd88: Verifying Checksum

7962620bcd88: Download complete

7962620bcd88: Pull complete

Digest: sha256:39d079b17c958870d03cac5b7b70300dc2e86fc97f9c31ae9a505fcd97418fae

Status: Downloaded newer image for imega/jq:1.6

go version go1.13.14 linux/amd64

digest files not found: won't use shasums, falling back to tags

INFO: Analyzed target //:gazelle (0 packages loaded, 0 targets configured).

INFO: Found 1 target...

ERROR: Process exited with status 1

hack/print-workspace-status.sh: line 34: KUBEVIRT_GIT_VERSION: unbound variable

Target //:gazelle failed to build

Use --verbose_failures to see the command lines of failed build steps.

INFO: Elapsed time: 0.568s, Critical Path: 0.06s

INFO: 0 processes.

FAILED: Build did NOT complete successfully

FAILED: Build did NOT complete successfully

make: *** [Makefile:9: all] Error 1

export DOCKER_PREFIX=registry.cn-hangzhou.aliyuncs.com/smallsoup

export DOCKER_TAG=kubeinfo

https://github.com/kubevirt/kubevirt/releases/

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/demo-content.yaml

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirt-cr.yaml

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirt-cr.yaml.j2

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirt-operator.yaml.j2

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirt-operatorsource.yaml

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirtoperator.v0.33.0.clusterserviceversion.yaml

wget https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/virtctl-v0.33.0-linux-x86_64

Deploying the demo

https://github.com/kubevirt/demo

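Before deploying KubeVirt, create a small configuration that adapts KubeVirt to your environment: if hardware virtualization is not available, enable software emulation for your VMs. First create the namespace: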

[root@liabio kubevirt]# kubectl create namespace kubevirt

# Use nested virtualization (KVM) if it is available; otherwise configure software emulation:

[root@liabio kubevirt]# minikube ssh -- test -e /dev/kvm || kubectl create configmap -n kubevirt kubevirt-config --from-literal debug.useEmulation=true
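The decision in this step comes down to whether /dev/kvm exists on the machine that will run the VMs. A minimal Python sketch of the same check is shown here. The function name `emulation_args` is made up for illustration, and the sketch assumes it runs directly on the node rather than through `minikube ssh`; the kubectl arguments are the ones from the command above.

```python
import os


def emulation_args(kvm_path="/dev/kvm"):
    """Return the kubectl arguments that enable emulation, or [] if KVM exists.

    Hypothetical helper: it only builds the argument list and runs nothing.
    """
    if os.path.exists(kvm_path):
        # The KVM device is present, so no emulation config is needed.
        return []
    return [
        "kubectl", "create", "configmap", "-n", "kubevirt", "kubevirt-config",
        "--from-literal", "debug.useEmulation=true",
    ]
```

The returned list could be passed to `subprocess.run` once you are happy with the decision it made.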

Now you can finally deploy KubeVirt using the operator, which plays the role of an installer:

[root@liabio kubevirt]# kubectl apply -f https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/kubevirt-operator.yaml

[root@liabio kubevirt]# kubectl apply -f kubevirt-operator.yaml

namespace/kubevirt created

customresourcedefinition.apiextensions.k8s.io/kubevirts.kubevirt.io created

priorityclass.scheduling.k8s.io/kubevirt-cluster-critical created

clusterrole.rbac.authorization.k8s.io/kubevirt.io:operator created

serviceaccount/kubevirt-operator created

role.rbac.authorization.k8s.io/kubevirt-operator created

rolebinding.rbac.authorization.k8s.io/kubevirt-operator-rolebinding created

clusterrole.rbac.authorization.k8s.io/kubevirt-operator created

clusterrolebinding.rbac.authorization.k8s.io/kubevirt-operator created

deployment.apps/virt-operator created

This creates the pods shown below, along with the CRD, the RBAC objects, the PriorityClass, and other resources:

[root@liabio kubevirt]# kubectl get pod -n kubevirt

NAME READY STATUS RESTARTS AGE

virt-operator-7d8998bdc7-f467b 1/1 Running 0 42m

virt-operator-7d8998bdc7-mwz9b 1/1 Running 0 42m

Create the CR:

[root@liabio kubevirt]# kubectl apply -f kubevirt-cr.yaml

kubevirt.kubevirt.io/kubevirt created

[root@liabio kubevirt]# kubectl get pod -n kubevirt

NAME READY STATUS RESTARTS AGE

kubevirt-4f10d8a1712324b71d0ff3612f097563b4912e51-jobph76q98xsj 0/1 Completed 0 8s

virt-operator-7d8998bdc7-jj5j5 1/1 Running 0 119s

virt-operator-7d8998bdc7-vzrh2 1/1 Running 0 119s

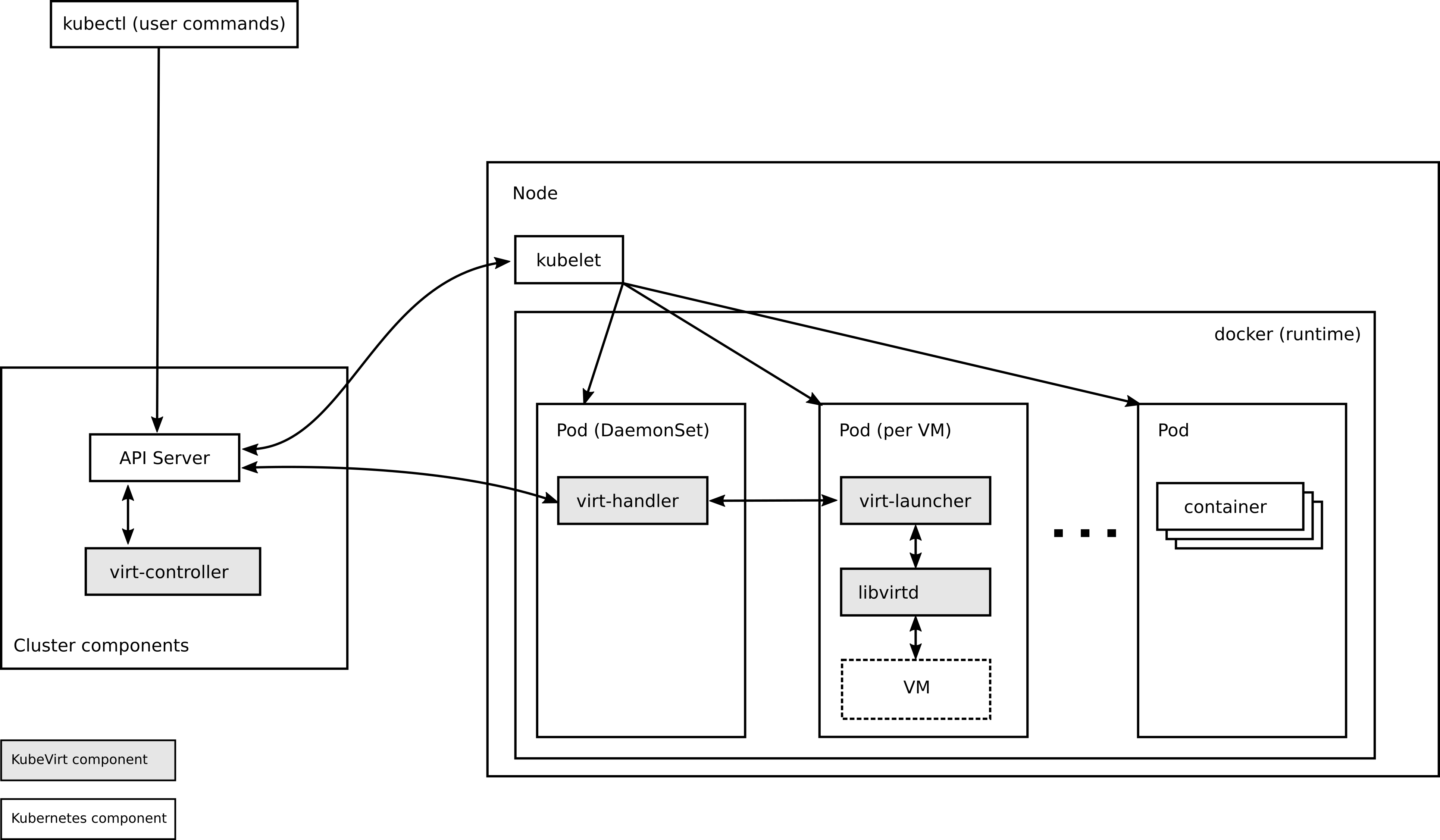

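An extra job pod has appeared. After this job completes, virt-api, virt-controller, and virt-handler are created.

The initial deployment can take a long time because several container images have to be pulled from the internet. We wait on the KubeVirt resource to determine when the deployment is complete: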

[root@liabio kubevirt]# kubectl wait --timeout=180s --for=condition=Available -n kubevirt kv/kubevirt

kubevirt.kubevirt.io/kubevirt condition met
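`kubectl wait --for=condition=Available` succeeds once the resource reports a condition of type Available with status True. The sketch below applies the same test to the JSON that `kubectl get kv kubevirt -n kubevirt -o json` would print. The function name `is_available` is illustrative, and the sketch assumes the conditions are stored under `status.conditions`, as is conventional for Kubernetes resources.

```python
import json


def is_available(kubevirt_json):
    """Return True if the resource has an Available condition set to "True"."""
    obj = json.loads(kubevirt_json)
    conditions = obj.get("status", {}).get("conditions", [])
    return any(
        c.get("type") == "Available" and c.get("status") == "True"
        for c in conditions
    )
```

A polling loop around this function would behave roughly like the `kubectl wait` call, minus the timeout handling.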

After a few minutes (depending on your network speed), the following pods appear:

virt-api-5c549c7c9c-58gnh 1/1 Running 0 8m33s

virt-api-5c549c7c9c-vvvdq 1/1 Running 0 8m33s

virt-controller-c897fcb4c-4bccq 1/1 Running 0 8m8s

virt-controller-c897fcb4c-n9pxr 1/1 Running 0 8m8s

virt-handler-r976k 1/1 Running 0 8m8s

At this point, congratulations: KubeVirt has been deployed successfully.

Uninstalling

Delete the CR first:

[root@liabio kubevirt]# kubectl delete -f kubevirt-cr.yaml

kubevirt.kubevirt.io "kubevirt" deleted

The following pods remain:

[root@liabio kubevirt]# kubectl get pod -n kubevirt

NAME READY STATUS RESTARTS AGE

virt-operator-7d8998bdc7-f467b 1/1 Running 0 87m

virt-operator-7d8998bdc7-mwz9b 1/1 Running 0 87m

Then delete the operator:

[root@liabio kubevirt]# kubectl delete -f kubevirt-operator.yaml

namespace "kubevirt" deleted

customresourcedefinition.apiextensions.k8s.io "kubevirts.kubevirt.io" deleted

priorityclass.scheduling.k8s.io "kubevirt-cluster-critical" deleted

clusterrole.rbac.authorization.k8s.io "kubevirt.io:operator" deleted

serviceaccount "kubevirt-operator" deleted

role.rbac.authorization.k8s.io "kubevirt-operator" deleted

rolebinding.rbac.authorization.k8s.io "kubevirt-operator-rolebinding" deleted

clusterrole.rbac.authorization.k8s.io "kubevirt-operator" deleted

clusterrolebinding.rbac.authorization.k8s.io "kubevirt-operator" deleted

deployment.apps "virt-operator" deleted

The namespace is now empty:

[root@liabio kubevirt]# kubectl get pod -n kubevirt

No resources found in kubevirt namespace.

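Installing virtctl

KubeVirt provides an additional binary that gives quick access to a VM's serial and graphical ports and handles start/stop operations. The tool is called virtctl and can be downloaded from the KubeVirt release page: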

[root@liabio kubevirt]# curl -L -o virtctl https://github.com/kubevirt/kubevirt/releases/download/v0.33.0/virtctl-v0.33.0-linux-x86_64

[root@liabio kubevirt]# chmod +x virtctl

[root@liabio kubevirt]# mv virtctl /usr/local/bin/

Installing with krew

If krew is installed, virtctl can be installed as a kubectl plugin:

$ kubectl krew install virt

Starting and stopping a virtual machine

Once KubeVirt is deployed, you can start a virtual machine.

If virtctl was installed through krew, use kubectl virt … instead of ./virtctl …


Creating a virtual machine

$ wget https://raw.githubusercontent.com/kubevirt/demo/master/manifests/vm.yaml

[root@liabio kubevirt]# kubectl apply -f vm.yaml

virtualmachine.kubevirt.io/testvm created

Once the VM is created, you can manage it with the usual kubectl verbs:

[root@liabio kubevirt]# kubectl describe vm testvm

Name: testvm

Namespace: default

Labels: <none>

Annotations: kubevirt.io/latest-observed-api-version: v1alpha3

kubevirt.io/storage-observed-api-version: v1alpha3

API Version: kubevirt.io/v1alpha3

Kind: VirtualMachine

Metadata:

Creation Timestamp: 2020-09-17T09:23:14Z

Generation: 1

Managed Fields:

API Version: kubevirt.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubectl.kubernetes.io/last-applied-configuration:

f:spec:

.:

f:running:

f:template:

.:

f:metadata:

.:

f:labels:

.:

f:kubevirt.io/domain:

f:kubevirt.io/size:

f:spec:

.:

f:domain:

.:

f:devices:

.:

f:disks:

f:interfaces:

f:resources:

.:

f:requests:

.:

f:memory:

f:networks:

f:volumes:

Manager: kubectl

Operation: Update

Time: 2020-09-17T09:23:14Z

API Version: kubevirt.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

f:kubevirt.io/latest-observed-api-version:

f:kubevirt.io/storage-observed-api-version:

f:status:

Manager: virt-controller

Operation: Update

Time: 2020-09-17T09:23:14Z

Resource Version: 9957134

Self Link: /apis/kubevirt.io/v1alpha3/namespaces/default/virtualmachines/testvm

UID: ffcb1911-4c6a-4abb-8f2b-d1588deb3594

Spec:

Running: false

Template:

Metadata:

Creation Timestamp: <nil>

Labels:

kubevirt.io/domain: testvm

kubevirt.io/size: small

Spec:

Domain:

Devices:

Disks:

Disk:

Bus: virtio

Name: rootfs

Disk:

Bus: virtio

Name: cloudinit

Interfaces:

Masquerade:

Name: default

Machine:

Type: q35

Resources:

Requests:

Memory: 64M

Networks:

Name: default

Pod:

Volumes:

Container Disk:

Image: kubevirt/cirros-registry-disk-demo

Name: rootfs

Cloud Init No Cloud:

userDataBase64: SGkuXG4=

Name: cloudinit

Events: <none>

To start the VM, use the following command; this creates a VM instance (VMI):

[root@liabio kubevirt]# virtctl start testvm

VM testvm was scheduled to start

Interested readers can now optionally inspect the instance:

[root@liabio kubevirt]# kubectl describe vmi testvm

Name: testvm

Namespace: default

Labels: kubevirt.io/domain=testvm

kubevirt.io/size=small

Annotations: kubevirt.io/latest-observed-api-version: v1alpha3

kubevirt.io/storage-observed-api-version: v1alpha3

API Version: kubevirt.io/v1alpha3

Kind: VirtualMachineInstance

Metadata:

Creation Timestamp: 2020-09-17T09:25:33Z

Finalizers:

foregroundDeleteVirtualMachine

Generation: 5

Managed Fields:

API Version: kubevirt.io/v1alpha3

Fields Type: FieldsV1

fieldsV1:

f:metadata:

f:annotations:

.:

f:kubevirt.io/latest-observed-api-version:

f:kubevirt.io/storage-observed-api-version:

f:labels:

.:

f:kubevirt.io/domain:

f:kubevirt.io/size:

f:ownerReferences:

f:spec:

.:

f:domain:

.:

f:devices:

.:

f:disks:

f:interfaces:

f:firmware:

.:

f:uuid:

f:machine:

.:

f:type:

f:resources:

.:

f:requests:

.:

f:memory:

f:networks:

f:volumes:

f:status:

.:

f:activePods:

.:

f:57e980e8-9312-474d-a6dc-945a86056c44:

f:conditions:

f:guestOSInfo:

f:phase:

f:qosClass:

Manager: virt-controller

Operation: Update

Time: 2020-09-17T09:25:33Z

Owner References:

API Version: kubevirt.io/v1alpha3

Block Owner Deletion: true

Controller: true

Kind: VirtualMachine

Name: testvm

UID: ffcb1911-4c6a-4abb-8f2b-d1588deb3594

Resource Version: 9957612

Self Link: /apis/kubevirt.io/v1alpha3/namespaces/default/virtualmachineinstances/testvm

UID: 1a5c5bc5-d15d-4e62-aeb5-c5809f39f04c

Spec:

Domain:

Devices:

Disks:

Disk:

Bus: virtio

Name: rootfs

Disk:

Bus: virtio

Name: cloudinit

Interfaces:

Masquerade:

Name: default

Features:

Acpi:

Enabled: true

Firmware:

Uuid: 5a9fc181-957e-5c32-9e5a-2de5e9673531

Machine:

Type: q35

Resources:

Requests:

Cpu: 100m

Memory: 64M

Networks:

Name: default

Pod:

Volumes:

Container Disk:

Image: kubevirt/cirros-registry-disk-demo

Image Pull Policy: Always

Name: rootfs

Cloud Init No Cloud:

userDataBase64: SGkuXG4=

Name: cloudinit

Status:

Active Pods:

57e980e8-9312-474d-a6dc-945a86056c44:

Conditions:

Last Probe Time: <nil>

Last Transition Time: 2020-09-17T09:25:33Z

Message: 0/1 nodes are available: 1 Insufficient devices.kubevirt.io/kvm.

Reason: Unschedulable

Status: False

Type: PodScheduled

Guest OS Info:

Phase: Scheduling

Qos Class: Burstable

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal SuccessfulCreate 5m9s virtualmachine-controller Created virtual machine pod virt-launcher-testvm-5kjw8

To shut the VM down again:

[root@liabio kubevirt]# virtctl stop testvm

VM testvm was scheduled to stop

At this point the VM object still exists, but its pod has been deleted:

[root@liabio kubevirt]# kubectl get vm

NAME AGE VOLUME

testvm 76m

Afterwards, delete the VM:

[root@liabio kubevirt]# kubectl delete vm testvm

virtualmachine.kubevirt.io "testvm" deleted

You can also create your own VM:

kubectl apply -f $YOUR_VM_SPEC

The VM's pod stays in the Pending state:

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling <unknown> default-scheduler 0/1 nodes are available: 1 Insufficient devices.kubevirt.io/kvm.

[root@liabio kubevirt]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

virt-launcher-testvm-5kjw8 0/2 Pending 0 65m <none> <none> <none> <none>

[root@liabio kubevirt]# kubectl describe pod virt-launcher-testvm-5kjw8

The node's YAML shows that the allocatable devices.kubevirt.io/kvm is 0:

allocatable:

cpu: "2"

devices.kubevirt.io/kvm: "0"

devices.kubevirt.io/tun: "110"

devices.kubevirt.io/vhost-net: "110"

ephemeral-storage: "38644306266"

hugepages-1Gi: "0"

hugepages-2Mi: "0"

memory: 3745392Ki

pods: "110"

capacity:

cpu: "2"

devices.kubevirt.io/kvm: "110"

devices.kubevirt.io/tun: "110"

devices.kubevirt.io/vhost-net: "110"

ephemeral-storage: 41931756Ki

hugepages-1Gi: "0"

hugepages-2Mi: "0"

memory: 3847792Ki

pods: "110"
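The mismatch is easy to miss by eye: the node advertises a capacity of 110 for devices.kubevirt.io/kvm but 0 as allocatable. The sketch below flags such resources given the node's `status` section as a dictionary. The function name `unavailable_devices` is hypothetical, and the sketch assumes the device counts are plain integer strings, as in the output above.

```python
def unavailable_devices(status, prefix="devices.kubevirt.io/"):
    """List device resources that have capacity but nothing allocatable."""
    allocatable = status.get("allocatable", {})
    capacity = status.get("capacity", {})
    return sorted(
        name
        for name, cap in capacity.items()
        if name.startswith(prefix)
        and int(cap) > 0
        and int(allocatable.get(name, "0")) == 0
    )
```

For the node shown here, the function returns only the kvm device, which matches the "Insufficient devices.kubevirt.io/kvm" scheduling message.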

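Before installing, some preparation is needed. Install the libvirt and QEMU packages:

[root@liabio ~]# yum -y install qemu-kvm libvirt virt-install

Check whether the node supports KVM hardware-assisted virtualization:

[root@liabio ~]# ls /dev/kvm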

ls: cannot access '/dev/kvm': No such file or directory

[root@liabio ~]# virt-host-validate qemu

QEMU: Checking for hardware virtualization : FAIL (Only emulated CPUs are available, performance will be significantly limited)

QEMU: Checking if device /dev/vhost-net exists : PASS

QEMU: Checking if device /dev/net/tun exists : PASS

QEMU: Checking for cgroup 'cpu' controller support : PASS

QEMU: Checking for cgroup 'cpuacct' controller support : PASS

QEMU: Checking for cgroup 'cpuset' controller support : PASS

QEMU: Checking for cgroup 'memory' controller support : PASS

QEMU: Checking for cgroup 'devices' controller support : PASS

QEMU: Checking for cgroup 'blkio' controller support : PASS

WARN (Unknown if this platform has IOMMU support)

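The last line only warns that IOMMU support on this platform could not be determined.

The output above shows that the CPU does not support hardware virtualization. You can also check with the following command: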

egrep --color -i "svm|vmx" /proc/cpuinfo

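If there is no output, your system does not support hardware virtualization.

Note that these CPU flags in cpuinfo (vmx on Intel, svm on AMD) indicate that your system supports VT. On some CPU models, VT support may be disabled in the BIOS by default; in that case, check the BIOS settings and enable it.

If it is not supported, first create the configuration that makes KubeVirt use software emulation: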
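The same check can be scripted. The sketch below looks for the vmx and svm flags in the text of /proc/cpuinfo. The function name `virtualization_flags` is illustrative, and the sketch only inspects lines that begin with `flags`, so it is slightly stricter than the case-insensitive egrep above.

```python
def virtualization_flags(cpuinfo_text):
    """Return the set of hardware virtualization flags found in cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Each flags line looks like "flags : fpu vme ... vmx ...".
            _, _, value = line.partition(":")
            found |= {"vmx", "svm"} & set(value.split())
    return found
```

On a real node you would call it as `virtualization_flags(open("/proc/cpuinfo").read())`; an empty set corresponds to egrep printing nothing.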

kubectl create configmap -n kubevirt kubevirt-config --from-literal debug.useEmulation=true

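With this setting, the virt-launcher pod no longer requests devices.kubevirt.io/kvm: "1" in its resource requests, so the scheduler can place the pod.

After being scheduled, virt-launcher ends up in an endless loop of being deleted and recreated: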

virt-launcher-testvm-jctjs 0/2 PodInitializing 0 35s 192.168.155.127 liabio <none> <none>

[root@liabio kubevirt]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

miniprog-56d87d84cf-4p7xl 1/1 Running 0 45d 192.168.155.87 liabio <none> <none>

mysql-79bd6fcfd-chxr4 1/1 Running 1 45d 192.168.155.88 liabio <none> <none>

redis-0 1/1 Running 0 45d 192.168.155.89 liabio <none> <none>

solo-9bf6f7764-knb4r 1/1 Running 0 45d 10.0.9.52 liabio <none> <none>

virt-launcher-testvm-jctjs 2/2 Running 0 36s 192.168.155.127 liabio <none> <none>

[root@liabio kubevirt]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

miniprog-56d87d84cf-4p7xl 1/1 Running 0 45d 192.168.155.87 liabio <none> <none>

mysql-79bd6fcfd-chxr4 1/1 Running 1 45d 192.168.155.88 liabio <none> <none>

redis-0 1/1 Running 0 45d 192.168.155.89 liabio <none> <none>

solo-9bf6f7764-knb4r 1/1 Running 0 45d 10.0.9.52 liabio <none> <none>

virt-launcher-testvm-jctjs 2/2 Terminating 0 38s 192.168.155.127 liabio <none> <none>

[root@liabio kubevirt]# kubectl get no liabio -oyaml | grep kvm

f:devices.kubevirt.io/kvm: {}

f:devices.kubevirt.io/kvm: {}

devices.kubevirt.io/kvm: "0"

devices.kubevirt.io/kvm: "110"

[root@liabio kubevirt]# kubectl get pod -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

miniprog-56d87d84cf-4p7xl 1/1 Running 0 45d 192.168.155.87 liabio <none> <none>

mysql-79bd6fcfd-chxr4 1/1 Running 1 45d 192.168.155.88 liabio <none> <none>

redis-0 1/1 Running 0 45d 192.168.155.89 liabio <none> <none>

solo-9bf6f7764-knb4r 1/1 Running 0 45d 10.0.9.52 liabio <none> <none>

virt-launcher-testvm-kvtz6 0/2 Init:0/1 0 1s <none> liabio <none> <none>

Problems encountered

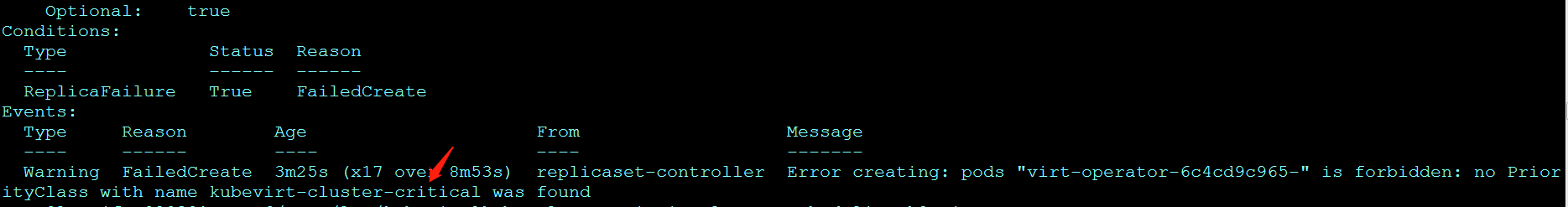

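When the operator was created in a Kubernetes 1.13.2 cluster, the ReplicaSet was created successfully but no pods appeared. Describing the ReplicaSet shows the error, which is caused by the missing kubevirt-cluster-critical PriorityClass object.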

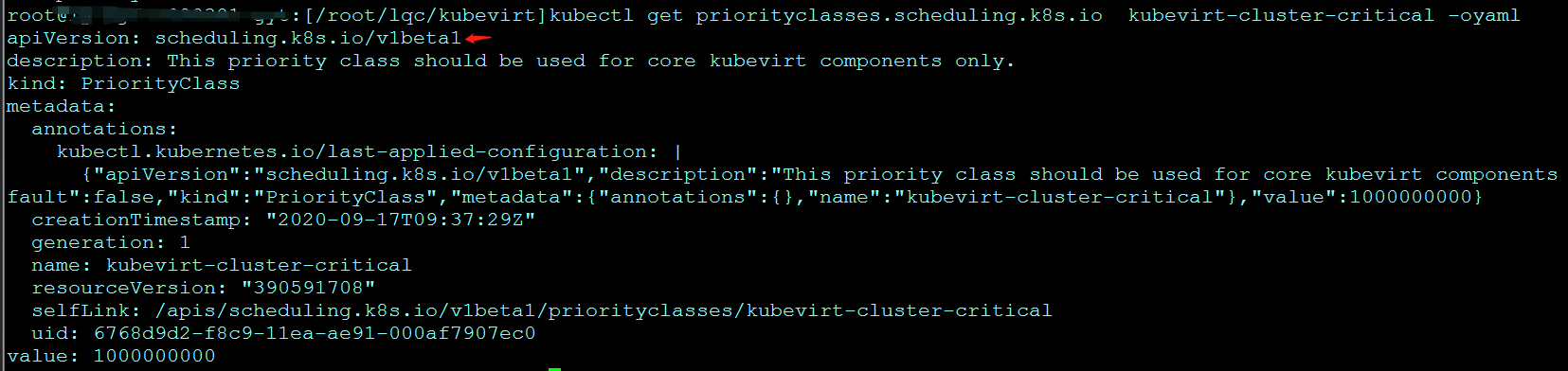

The 1.13.2 cluster only serves scheduling.k8s.io/v1beta1, which you can check with the following command:

[root@liabio kubevirt]# kubectl api-versions

scheduling.k8s.io/v1beta1

The kubevirt-cluster-critical PriorityClass shipped with 0.33.0, however, uses apiVersion scheduling.k8s.io/v1, so you have to change the apiVersion of this object by hand and create it again.

Startup order
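The manual change can also be scripted. The sketch below rewrites the apiVersion only in the PriorityClass document of a multi-document manifest and leaves the other documents untouched. The function name `downgrade_priorityclass` is made up for illustration, and the sketch assumes that documents are separated by a line containing only `---` and that `kind` and `apiVersion` are unindented top-level keys.

```python
def downgrade_priorityclass(manifest,
                            old="scheduling.k8s.io/v1",
                            new="scheduling.k8s.io/v1beta1"):
    """Rewrite the apiVersion of PriorityClass documents in a YAML manifest."""
    fixed = []
    for doc in manifest.split("\n---\n"):
        lines = doc.split("\n")
        if "kind: PriorityClass" in lines:
            # Only touch the exact top-level apiVersion line of this document.
            lines = [
                f"apiVersion: {new}" if line == f"apiVersion: {old}" else line
                for line in lines
            ]
        fixed.append("\n".join(lines))
    return "\n---\n".join(fixed)
```

A line-based rewrite like this avoids re-serializing the YAML, so the rest of the operator manifest stays byte-for-byte identical.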

[root@liabio kubevirt]# kubectl get pod -n kubevirt

NAME READY STATUS RESTARTS AGE

kubevirt-4f10d8a1712324b71d0ff3612f097563b4912e51-jobrnphxpp9qh 0/1 Completed 0 6s

virt-operator-7d8998bdc7-t94jq 1/1 Running 0 11s

virt-operator-7d8998bdc7-z49q7 0/1 Running 0 11s

[root@liabio kubevirt]# kubectl get pod -n kubevirt -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

virt-api-5c549c7c9c-fvcms 0/1 ContainerCreating 0 2s <none> liabio <none> <none>

virt-api-5c549c7c9c-vpqqx 0/1 ContainerCreating 0 2s <none> liabio <none> <none>

virt-operator-7d8998bdc7-t94jq 1/1 Running 0 14s 192.168.155.119 liabio <none> <none>

virt-operator-7d8998bdc7-z49q7 1/1 Running 0 14s 192.168.155.120 liabio <none> <none>

[root@liabio kubevirt]# kubectl get pod -n kubevirt -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

virt-api-5c549c7c9c-fvcms 0/1 Running 0 6s 192.168.155.123 liabio <none> <none>

virt-api-5c549c7c9c-vpqqx 0/1 Running 0 6s 192.168.155.122 liabio <none> <none>

virt-operator-7d8998bdc7-t94jq 1/1 Running 0 18s 192.168.155.119 liabio <none> <none>

virt-operator-7d8998bdc7-z49q7 1/1 Running 0 18s 192.168.155.120 liabio <none> <none>

[root@liabio kubevirt]# kubectl get pod -n kubevirt -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

virt-api-5c549c7c9c-fvcms 1/1 Running 0 45s 192.168.155.123 liabio <none> <none>

virt-api-5c549c7c9c-vpqqx 1/1 Running 0 45s 192.168.155.122 liabio <none> <none>

virt-controller-c897fcb4c-2l98l 1/1 Running 0 26s 192.168.155.124 liabio <none> <none>

virt-controller-c897fcb4c-hlfgj 1/1 Running 0 26s 192.168.155.126 liabio <none> <none>

virt-handler-mm54j 1/1 Running 0 26s 192.168.155.125 liabio <none> <none>

virt-operator-7d8998bdc7-t94jq 1/1 Running 0 57s 192.168.155.119 liabio <none> <none>

virt-operator-7d8998bdc7-z49q7 1/1 Running 0 57s 192.168.155.120 liabio <none> <none>

virt-controller and virt-handler start only after the job has completed and the virt-api pods are Running.

The VirtualMachineInstance object keeps being recreated; this is done by the virtualmachine-controller inside virt-controller.

[root@liabio kubevirt]# kubectl get VirtualMachine

NAME AGE VOLUME

testvm 16m

[root@liabio kubevirt]# kubectl get VirtualMachineInstance

NAME AGE PHASE IP NODENAME

testvm 5s Scheduling

[root@liabio kubevirt]# kubectl get pod

NAME READY STATUS RESTARTS AGE

virt-launcher-testvm-zh44c 2/2 Terminating 0 9s

Describing the VirtualMachine shows:

Normal SuccessfulCreate 14m (x13 over 18m) virtualmachine-controller Started the virtual machine by creating the new virtual machine instance testvm

Normal SuccessfulDelete 8m26s (x25 over 15m) virtualmachine-controller (combined from similar events): Stopped the virtual machine by deleting the virtual machine instance a31f09bc-80ee-43e7-82e0-25d0a1db78bd

Normal SuccessfulDelete 4m13s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance 20a8422a-5a9d-4eb1-933d-8c90aa9a8085

Normal SuccessfulDelete 3m56s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance ed7ab0b1-6292-4b5d-8290-4270cdaf59e9

Normal SuccessfulDelete 3m35s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance 1e45a456-8e22-41ed-a4c8-63fe07399b84

Normal SuccessfulDelete 3m15s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance ff55d73e-deab-4df9-9aa4-b4437da7fb6f

Normal SuccessfulDelete 2m52s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance 2863235a-2e7f-4f76-9569-e206540cc4e9

Normal SuccessfulDelete 2m35s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance c620eb03-a08c-4441-aeec-bd9ee8dbd1f9

Normal SuccessfulDelete 2m16s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance 901f23b6-bbe4-4bc2-bd8a-1ae370e555d4

Normal SuccessfulDelete 113s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance 5b84b4e7-da3b-43ce-97e3-4c90a419a0f7

Normal SuccessfulDelete 95s virtualmachine-controller Stopped the virtual machine by deleting the virtual machine instance c207c61b-3c4d-4935-91c7-ad14e4b0cd52

Normal SuccessfulCreate 27s (x12 over 4m3s) virtualmachine-controller Started the virtual machine by creating the new virtual machine instance testvm

Normal SuccessfulDelete 19s (x4 over 72s) virtualmachine-controller (combined from similar events): Stopped the virtual machine by deleting the virtual machine instance f4ae794c-dafa-41cd-8edd-c33714177529

[root@liabio kubevirt]# kubectl describe VirtualMachine testvm

The VMI phase moves through Scheduling, Scheduled, and Failed. The VM controller deletes any VMI that is Failed or Finished, which causes the VMI controller to delete the pod and create it again.
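The loop can be pictured with a deliberately simplified model. This is not KubeVirt's code: the function name `next_action` is hypothetical, and the sketch assumes that the "Finished" state corresponds to the Succeeded phase and that the VM is meant to be running.

```python
FINAL_PHASES = {"Failed", "Succeeded"}  # assumption: "Finished" means Succeeded


def next_action(vm_running, vmi_phase):
    """Return what a simplified VM controller would do next.

    vmi_phase is None when no VMI currently exists for the VM.
    """
    if vmi_phase is None:
        return "create" if vm_running else "none"
    if vmi_phase in FINAL_PHASES or not vm_running:
        return "delete"
    return "none"
```

With the VM set to run and a VMI that always ends up Failed, the model alternates between "delete" and "create" forever, which is the pattern of SuccessfulCreate and SuccessfulDelete events seen here.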

status:

activePods:

b260f7c7-f64f-49d0-a744-cb26fc1cf0aa: liabio

conditions:

- lastProbeTime: null

lastTransitionTime: null

status: "True"

type: LiveMigratable

guestOSInfo: {}

migrationMethod: BlockMigration

nodeName: liabio

phase: Failed

qosClass: Burstable

[root@liabio kubevirt]# kubectl get vmi testvm -oyaml

What we really need to find out is why the VMI ends up Failed.

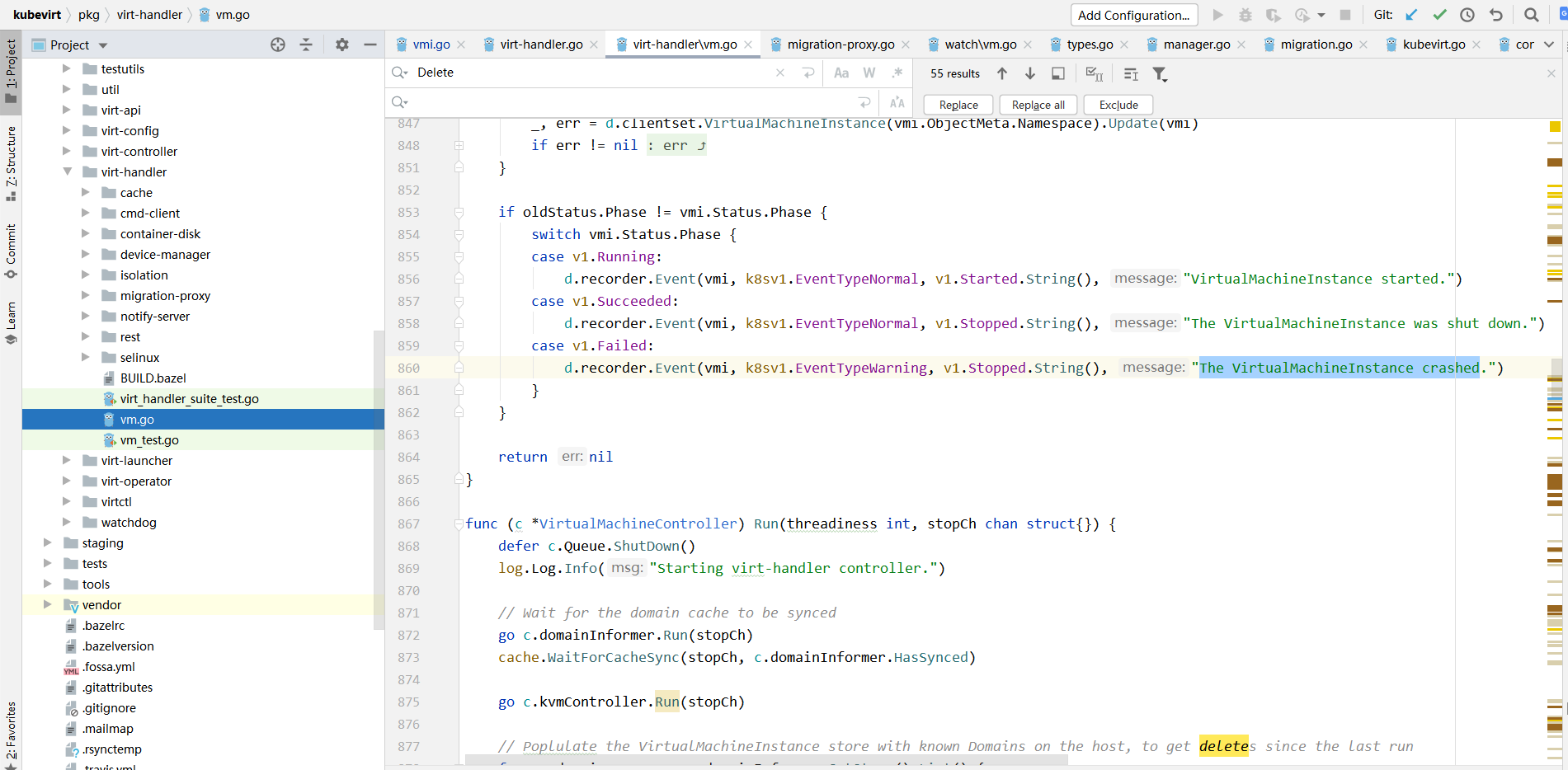

Looking for clues in the events reveals a large number of "crashed" messages; here is an excerpt:

kubectl get event -oyaml | grep -C50 "The VirtualMachineInstance crashed"

- apiVersion: v1

count: 1

eventTime: null

firstTimestamp: "2020-09-18T00:03:07Z"

involvedObject:

apiVersion: kubevirt.io/v1alpha3

kind: VirtualMachineInstance

name: testvm

namespace: default

resourceVersion: "10183584"

uid: d5b3ab1d-6e59-4bdc-a6cd-3a3b33fc64a7

kind: Event

lastTimestamp: "2020-09-18T00:03:07Z"

message: The VirtualMachineInstance crashed.

metadata:

creationTimestamp: "2020-09-18T00:03:07Z"

managedFields:

- apiVersion: v1

fieldsType: FieldsV1

fieldsV1:

f:count: {}

f:firstTimestamp: {}

f:involvedObject:

f:apiVersion: {}

f:kind: {}

f:name: {}

f:namespace: {}

f:resourceVersion: {}

f:uid: {}

f:lastTimestamp: {}

f:message: {}

f:reason: {}

f:source:

f:component: {}

f:host: {}

f:type: {}

manager: virt-handler

operation: Update

time: "2020-09-18T00:03:07Z"

name: testvm.1635b7d9a373e1b0

namespace: default

resourceVersion: "10183599"

selfLink: /api/v1/namespaces/default/events/testvm.1635b7d9a373e1b0

uid: b47851eb-4891-406e-9243-3e498a9ab70c

reason: Stopped

reportingComponent: ""

reportingInstance: ""

source:

component: virt-handler

host: liabio

type: Warning

According to the source code, this event is recorded by virt-handler.

Check the virt-launcher logs:

[root@liabio kubevirt]# kubectl logs -f virt-launcher-testvm-t7dt4 volumerootfs

Fri Sep 18 00:43:48 2020

error: socket does not exist anymore

^C

[root@liabio kubevirt]# kubectl logs -f virt-launcher-testvm-t7dt4 compute

{"component":"virt-launcher","level":"info","msg":"Collected all requested hook sidecar sockets","pos":"manager.go:68","timestamp":"2020-09-18T00:43:39.129320Z"}

{"component":"virt-launcher","level":"info","msg":"Sorted all collected sidecar sockets per hook point based on their priority and name: map[]","pos":"manager.go:71","timestamp":"2020-09-18T00:43:39.152525Z"}

{"component":"virt-launcher","level":"info","msg":"Connecting to libvirt daemon: qemu:///system","pos":"libvirt.go:374","timestamp":"2020-09-18T00:43:39.258243Z"}

{"component":"virt-launcher","level":"info","msg":"Connecting to libvirt daemon failed: virError(Code=38, Domain=7, Message='Failed to connect socket to '/var/run/libvirt/libvirt-sock': No such file or directory')","pos":"libvirt.go:382","timestamp":"2020-09-18T00:43:39.270697Z"}

{"component":"virt-launcher","level":"info","msg":"libvirt version: 6.0.0, package: 16.fc31 (Unknown, 2020-04-07-15:55:55, )","subcomponent":"libvirt","thread":"39","timestamp":"2020-09-18T00:43:39.529000Z"}

{"component":"virt-launcher","level":"info","msg":"hostname: testvm","subcomponent":"libvirt","thread":"39","timestamp":"2020-09-18T00:43:39.529000Z"}

{"component":"virt-launcher","level":"error","msg":"internal error: Child process (/usr/sbin/dmidecode -q -t 0,1,2,3,4,17) unexpected exit status 1: /dev/mem: No such file or directory","pos":"virCommandWait:2709","subcomponent":"libvirt","thread":"39","timestamp":"2020-09-18T00:43:39.529000Z"}

{"component":"virt-launcher","level":"info","msg":"Connected to libvirt daemon","pos":"libvirt.go:390","timestamp":"2020-09-18T00:43:39.772366Z"}

{"component":"virt-launcher","level":"info","msg":"Registered libvirt event notify callback","pos":"client.go:382","timestamp":"2020-09-18T00:43:39.785963Z"}

{"component":"virt-launcher","level":"info","msg":"Marked as ready","pos":"virt-launcher.go:71","timestamp":"2020-09-18T00:43:39.786213Z"}

{"component":"virt-launcher","level":"info","msg":"Received signal terminated","pos":"virt-launcher.go:413","timestamp":"2020-09-18T00:43:49.407920Z"}

{"component":"virt-launcher","level":"info","msg":"stopping cmd server","pos":"server.go:448","timestamp":"2020-09-18T00:43:49.670136Z"}

{"component":"virt-launcher","level":"error","msg":"failed to read libvirt logs","pos":"libvirt_helper.go:205","reason":"read |0: file already closed","timestamp":"2020-09-18T00:43:49.671871Z"}

{"component":"virt-launcher","level":"error","msg":"Connection to libvirt lost","pos":"libvirt.go:275","timestamp":"2020-09-18T00:43:49.905269Z"}

{"component":"virt-launcher","level":"error","msg":"timeout on stopping the cmd server, continuing anyway.","pos":"server.go:459","timestamp":"2020-09-18T00:43:50.670311Z"}

{"component":"virt-launcher","level":"info","msg":"Exiting...","pos":"virt-launcher.go:442","timestamp":"2020-09-18T00:43:50.670540Z"}

[root@liabio kubevirt]#
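The compute container logs one JSON object per line, so the error entries can be pulled out mechanically. The sketch below does that for saved log text. The function name `error_messages` is illustrative, and the only fields it relies on are `level` and `msg`, both visible in the log lines above.

```python
import json


def error_messages(log_text):
    """Return the msg field of every JSON log line whose level is "error"."""
    messages = []
    for line in log_text.splitlines():
        line = line.strip()
        if not line.startswith("{"):
            continue  # skip blank lines and non-JSON output
        try:
            entry = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or malformed lines
        if entry.get("level") == "error":
            messages.append(entry.get("msg", ""))
    return messages
```

Applied to the log shown here, this would surface the dmidecode failure, the "failed to read libvirt logs" and "Connection to libvirt lost" entries, and the cmd server timeout.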