# Understanding docker0

Before learning something new, start from a clean environment: remove all existing images and containers.

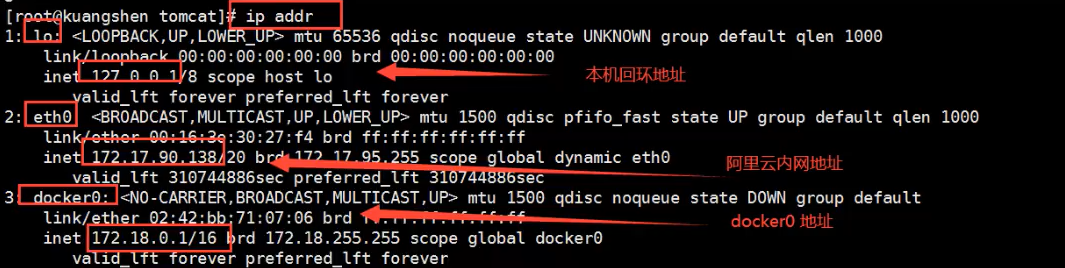

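List the host's network interfaces with `ip addr`:

There are three interfaces: the loopback `lo`, the host's `eth0`, and `docker0`.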

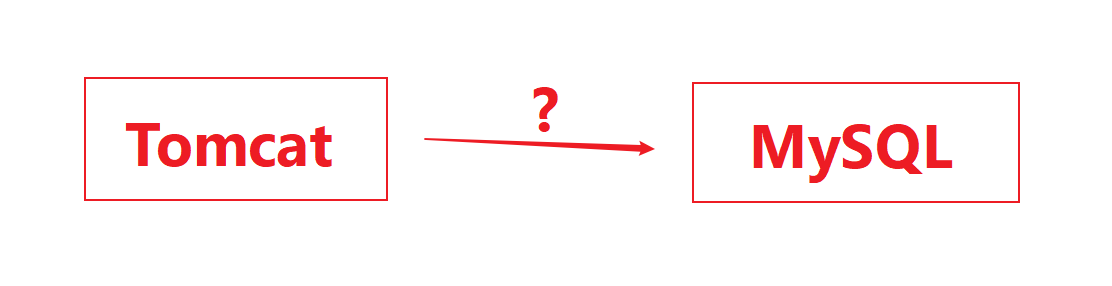

# Question: how does Docker handle network access?

```shell
# Start a tomcat container with randomly assigned host ports
root@kylin:~# docker run -d -P --name tomcat01 tomcat

# Check the container's address with ip addr: on startup the container gets an internal IP on eth0@if65, assigned by Docker
root@kylin:~# docker exec -it tomcat01 ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
64: eth0@if65: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:ac:11:00:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
       valid_lft forever preferred_lft forever

# Question: can the Linux host ping this container IP?
root@kylin:~# ping 172.17.0.2
PING 172.17.0.2 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.061 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.049 ms

# Yes: the Linux host can ping the inside of the Docker container
```
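To pull the container's address out of `ip addr` output in a script, a small awk filter is enough. The sketch below is illustrative only: the function name `iface_ipv4` is made up for this example, and the sample text is a shortened copy of the container output above.

```shell
# iface_ipv4 NAME: read `ip addr` output on stdin and print the IPv4
# address of interface NAME (hypothetical helper, not a Docker command).
iface_ipv4() {
  awk -v want="$1" '
    /^[0-9]+: / {               # header line, e.g. "64: eth0@if65: <...>"
      name = $2
      sub(/:$/, "", name)       # drop the trailing colon
      sub(/@.*/, "", name)      # drop the "@if65" peer suffix
      current = name
    }
    $1 == "inet" && current == want {
      split($2, parts, "/")     # "172.17.0.2/16" -> "172.17.0.2"
      print parts[1]
    }
  '
}

# Shortened sample of the container output shown above
sample_output=$(cat <<'EOF'
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    inet 127.0.0.1/8 scope host lo
64: eth0@if65: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0
EOF
)

printf '%s\n' "$sample_output" | iface_ipv4 eth0
```

On a live host the same filter could be fed from `docker exec tomcat01 ip addr` instead of the sample text.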

How it works:

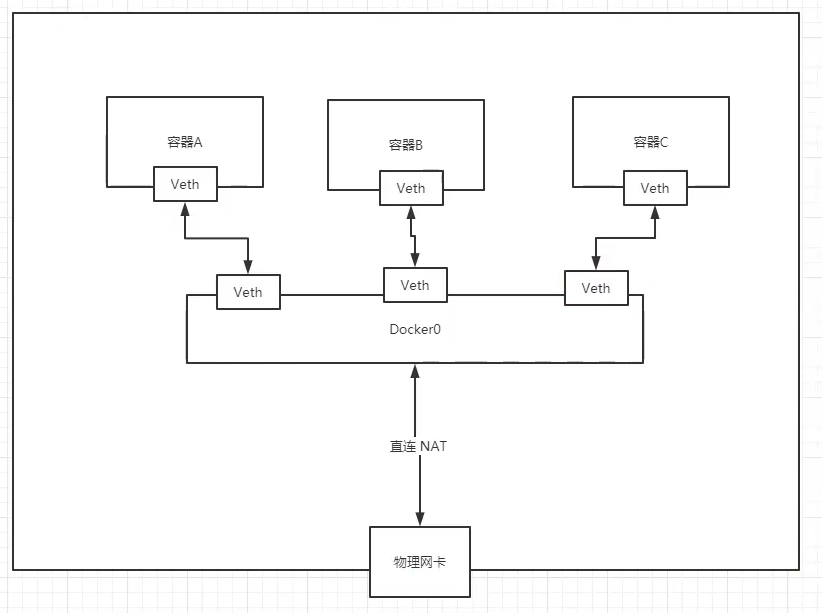

Every time we start a Docker container, Docker assigns it an IP address. As soon as Docker is installed, the host gets a docker0 interface, which works in bridge mode; containers are attached to it using veth pairs. Checking the interfaces on the host shows a new one: index 65, the peer of index 64 inside the container.

```shell
root@kylin:~# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:16:3e:16:25:20 brd ff:ff:ff:ff:ff:ff
    inet 172.31.106.145/20 brd 172.31.111.255 scope global dynamic eth0
       valid_lft 315188155sec preferred_lft 315188155sec
    inet6 fe80::216:3eff:fe16:2520/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:a3:4b:1b:fe brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:a3ff:fe4b:1bfe/64 scope link
       valid_lft forever preferred_lft forever
65: vethcb4a334@if64: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 1e:f9:86:06:14:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::1cf9:86ff:fe06:1402/64 scope link
       valid_lft forever preferred_lft forever
```

Start a second container as a test, and another pair appears: 67 and 66.

```shell
root@kylin:~# ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 00:16:3e:16:25:20 brd ff:ff:ff:ff:ff:ff
    inet 172.31.106.145/20 brd 172.31.111.255 scope global dynamic eth0
       valid_lft 315188027sec preferred_lft 315188027sec
    inet6 fe80::216:3eff:fe16:2520/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
    link/ether 02:42:a3:4b:1b:fe brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
    inet6 fe80::42:a3ff:fe4b:1bfe/64 scope link
       valid_lft forever preferred_lft forever
65: vethcb4a334@if64: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether 1e:f9:86:06:14:02 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::1cf9:86ff:fe06:1402/64 scope link
       valid_lft forever preferred_lft forever
67: veth781ac81@if66: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
    link/ether aa:01:41:a1:b6:87 brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::a801:41ff:fea1:b687/64 scope link
       valid_lft forever preferred_lft forever
```

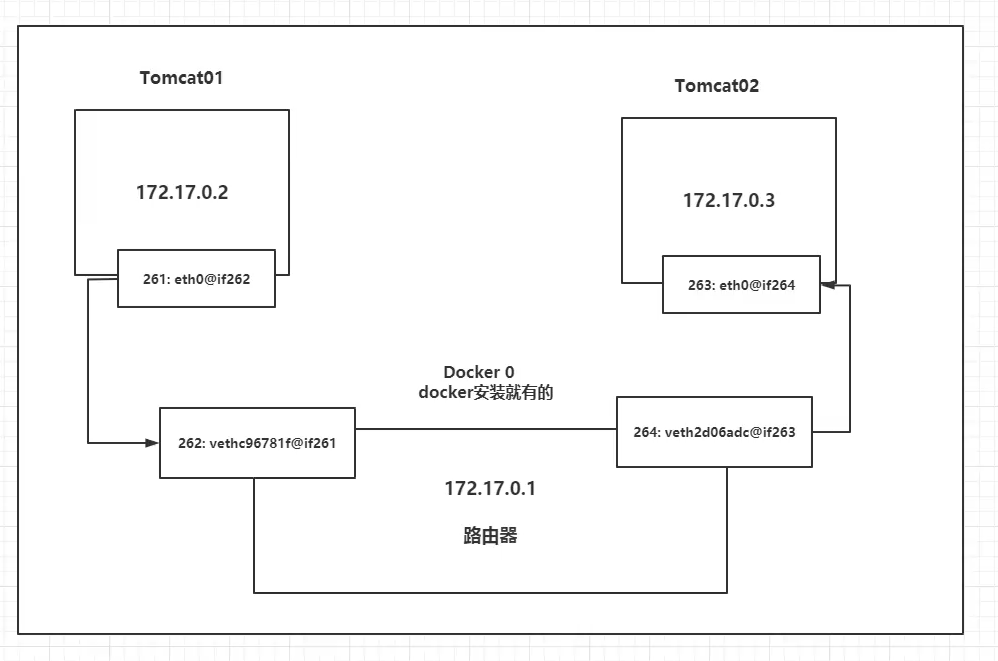

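The interfaces created for containers always come in pairs.

A veth pair is a pair of virtual network interfaces that are always created together. One end of each interface is attached to the protocol stack, and the other ends are connected to each other.

Because of this property, a veth pair can act as a bridge that connects all kinds of virtual network devices.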
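The pairing can be read straight from the host's `ip addr` output: each host-side interface is listed as `<index>: veth...@if<peer index>`. The following is a minimal sketch, assuming that output format. `veth_pairs` is a hypothetical helper name, and the sample lines are trimmed from the host output shown earlier.

```shell
# veth_pairs: read host `ip addr` output on stdin and print, for each
# veth interface, "<name> <host index> <peer index>".
veth_pairs() {
  awk '
    /^[0-9]+: veth[^ ]*@if[0-9]+:/ {
      idx = $1;  sub(/:$/, "", idx)     # "65:" -> "65"
      name = $2; sub(/:$/, "", name)    # "vethcb4a334@if64:" -> "vethcb4a334@if64"
      split(name, parts, "@if")         # parts[1] = name, parts[2] = peer index
      print parts[1], idx, parts[2]
    }
  '
}

# Header lines only, copied from the host output above
host_output=$(cat <<'EOF'
3: docker0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default
65: vethcb4a334@if64: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
67: veth781ac81@if66: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master docker0 state UP group default
EOF
)

printf '%s\n' "$host_output" | veth_pairs
```

Each printed line ties a host-side veth interface to the index of the `eth0` interface inside the corresponding container.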

OpenStack, connections between Docker containers, and OVS connections all use veth pairs.

Let's test whether tomcat01 and tomcat02 can ping each other:

```shell
# tomcat01: 172.17.0.2
# tomcat02: 172.17.0.3

# tomcat01 pings tomcat02
root@kylin:~# docker exec -it tomcat01 ping 172.17.0.3
PING 172.17.0.3 (172.17.0.3) 56(84) bytes of data.
64 bytes from 172.17.0.3: icmp_seq=1 ttl=64 time=0.085 ms
64 bytes from 172.17.0.3: icmp_seq=2 ttl=64 time=0.062 ms
64 bytes from 172.17.0.3: icmp_seq=3 ttl=64 time=0.070 ms

# tomcat02 pings tomcat01 (ping parses the shorthand 172.17.02 as 172.17.0.2)
root@kylin:~# docker exec -it tomcat02 ping 172.17.02
PING 172.17.02 (172.17.0.2) 56(84) bytes of data.
64 bytes from 172.17.0.2: icmp_seq=1 ttl=64 time=0.058 ms
64 bytes from 172.17.0.2: icmp_seq=2 ttl=64 time=0.063 ms
64 bytes from 172.17.0.2: icmp_seq=3 ttl=64 time=0.062 ms
```

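Conclusion:

Containers can ping each other.

Let's draw a diagram of the network model.

Conclusion: tomcat01 and tomcat02 share the same router, docker0.<br />Unless a network is specified, every container is routed through docker0, and Docker assigns it a default available IP address.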
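The containers can reach each other directly because their addresses fall in the same 172.17.0.0/16 subnet behind docker0. The check below illustrates that arithmetic in plain shell. `ip_to_int` and `same_subnet` are names invented for this sketch, and it assumes a shell with 64-bit arithmetic such as bash or dash.

```shell
# ip_to_int IP: print a dotted-quad IPv4 address as a single integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "172.17.0.2" into four positional parameters
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# same_subnet IP1 IP2 PREFIX: succeed if both addresses share the
# same network part for the given prefix length.
same_subnet() {
  mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$2") & mask )) ]
}

# tomcat01 and tomcat02 from the text above, with the /16 prefix of docker0
if same_subnet 172.17.0.2 172.17.0.3 16; then
  echo "same subnet"
else
  echo "different subnets"
fi
```

An address from another range, such as 192.168.0.2, fails the same check against 172.17.0.2.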

<a name="daaa5388"></a>

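## Summary

Docker uses Linux bridging. On the host, docker0 is the bridge that Docker containers attach to.<br />

All network interfaces in Docker are virtual, and virtual interfaces forward traffic efficiently (they are fast).<br />When a container is removed, its veth pair is removed with it.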

<a name="1s16b"></a>

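## --link (not recommended)

**Consider a scenario:** we wrote a microservice configured with database url=ip: , and we want to be able to change the database IP without restarting the project. How do we handle that? By reaching the service by name instead of by IP.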

```shell
root@kylin:~# docker exec -it tomcat01 ping tomcat02
ping: tomcat02: Name or service not known

# How do we fix this? Add --link when starting the container
root@kylin:~# docker run -d -P --name tomcat03 --link tomcat02 tomcat
dd4bab54caff90ee3baafe4f5da91869089818426ede6b79429ab82b5967879a

# tomcat03 can now ping tomcat02 by name
root@kylin:~# docker exec -it tomcat03 ping tomcat02
PING tomcat02 (172.17.0.3) 56(84) bytes of data.
64 bytes from tomcat02 (172.17.0.3): icmp_seq=1 ttl=64 time=0.095 ms
64 bytes from tomcat02 (172.17.0.3): icmp_seq=2 ttl=64 time=0.061 ms

# But tomcat02 cannot ping tomcat03
root@kylin:~# docker exec -it tomcat02 ping tomcat03
ping: tomcat03: Name or service not known
```

Investigate: `docker network inspect <network ID>` shows the details of a network:

```shell
"Containers": {
    "aaefe7048c1ac703ffb4806be6107cc04d731fdae079e962ddc7c24373e24c2e": {
        "Name": "tomcat02",
        "EndpointID": "d3342b66fd0c633ea449f25d428f0dcbfa2d2b6d70893f43662a951e5c3b229c",
        "MacAddress": "02:42:ac:11:00:03",
        "IPv4Address": "172.17.0.3/16",
        "IPv6Address": ""
    },
    "b8bbebd043ff58d0eae87aff04febc8f57b2e19f358fc82a6c87fe0984a718cf": {
        "Name": "tomcat01",
        "EndpointID": "583537beced285101b160e110795ded529e144663e94e75fd1254ee876ca6ba2",
        "MacAddress": "02:42:ac:11:00:02",
        "IPv4Address": "172.17.0.2/16",
        "IPv6Address": ""
    },
    "dd4bab54caff90ee3baafe4f5da91869089818426ede6b79429ab82b5967879a": {
        "Name": "tomcat03",
        "EndpointID": "5888c173bc1fc4c936e5dd0edb0430be06ad319b881de6e87cfb59c59a8e3dab",
        "MacAddress": "02:42:ac:11:00:05",
        "IPv4Address": "172.17.0.5/16",
        "IPv6Address": ""
    },
    "e7c84a271c2187410b83fdd592af488420cc612d0c6bfa6b456a0bc398c6808a": {
        "Name": "boring_pike",
        "EndpointID": "e92e56baa444184d649d822bf3a5cf61f23b2c8431b75ef473c20ca5e159ad1c",
        "MacAddress": "02:42:ac:11:00:04",
        "IPv4Address": "172.17.0.4/16",
        "IPv6Address": ""
    }
}
```

In fact, tomcat03 simply has an entry for tomcat02 in its local hosts file.

```shell
# Check the hosts file inside tomcat03
root@kylin:~# docker exec -it tomcat03 cat /etc/hosts
127.0.0.1   localhost
::1         localhost ip6-localhost ip6-loopback
fe00::0     ip6-localnet
ff00::0     ip6-mcastprefix
ff02::1     ip6-allnodes
ff02::2     ip6-allrouters
172.17.0.3  tomcat02 aaefe7048c1a   # note the added mapping for tomcat02
172.17.0.5  dd4bab54caff
```

All `--link` does is add the line `172.17.0.3 tomcat02 aaefe7048c1a` to the hosts file.
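The effect of that hosts entry can be imitated with a few lines of awk. This is only a sketch of a hosts-file lookup, not how the system resolver is actually implemented. `hosts_lookup` is a hypothetical helper, and the sample is the tomcat03 hosts file shown above.

```shell
# hosts_lookup NAME: read a hosts file on stdin and print the address
# of the first entry that lists NAME as a hostname or alias.
hosts_lookup() {
  awk -v name="$1" '
    /^[[:space:]]*#/ { next }            # skip comment lines
    {
      for (i = 2; i <= NF; i++) {
        if ($i ~ /^#/) break             # rest of the line is a comment
        if ($i == name) { print $1; exit }
      }
    }
  '
}

# The hosts file of tomcat03, as shown above
tomcat03_hosts=$(cat <<'EOF'
127.0.0.1 localhost
::1 localhost ip6-localhost ip6-loopback
172.17.0.3 tomcat02 aaefe7048c1a
172.17.0.5 dd4bab54caff
EOF
)

printf '%s\n' "$tomcat03_hosts" | hosts_lookup tomcat02
```

A name with no entry, such as tomcat01, prints nothing, which matches the "Name or service not known" failures seen earlier.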

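Nowadays `--link` is no longer recommended, and neither is relying on docker0.

The problem with docker0: it does not support reaching containers by name.

Instead we use user-defined networks, which are more convenient.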

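## User-defined networks (recommended)

Network modes

- bridge: bridge mode (Docker's default)

- none: no networking configured

- host: share the host's network

- container: join another container's network (rarely used, very limited)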

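Test

```shell
# The command we ran before implicitly used --net bridge (the default when no network is given), and that network is docker0
docker run -d -P --name tomcat02 --net bridge tomcat

# docker0 characteristics: container names cannot be resolved by default, although --link can work around that
# Instead, we can define our own network
```

- Create a network with `docker network create`

```shell
# Create a user-defined network:
# --subnet 192.168.0.0/16   subnet range
# --gateway 192.168.0.1     gateway
docker network create --driver bridge --subnet 192.168.0.0/16 --gateway 192.168.0.1 mynet
```

Created successfully: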

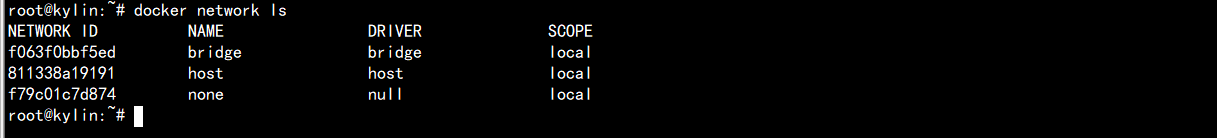

```shell
root@kylin:~# docker network ls
NETWORK ID          NAME                DRIVER              SCOPE
f063f0bbf5ed        bridge              bridge              local
811338a19191        host                host                local
b4ddfc75aeda        mynet               bridge              local
f79c01c7d874        none                null                local
```

```shell
# Inspect the network we just configured
root@kylin:~# docker network inspect mynet
[
    {
        "Name": "mynet",
        "Id": "b4ddfc75aeda5bdec4f3517701fe658ed360771529364ef658ab94de39f9dacf",
        "Created": "2020-08-10T10:20:34.776115561+08:00",
        "Scope": "local",
        "Driver": "bridge",
        "EnableIPv6": false,
        "IPAM": {
            "Driver": "default",
            "Options": {},
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        },
        "Internal": false,
        "Attachable": false,
        "Ingress": false,
        "ConfigFrom": {
            "Network": ""
        },
        "ConfigOnly": false,
        "Containers": {},
        "Options": {},
        "Labels": {}
    }
]
```

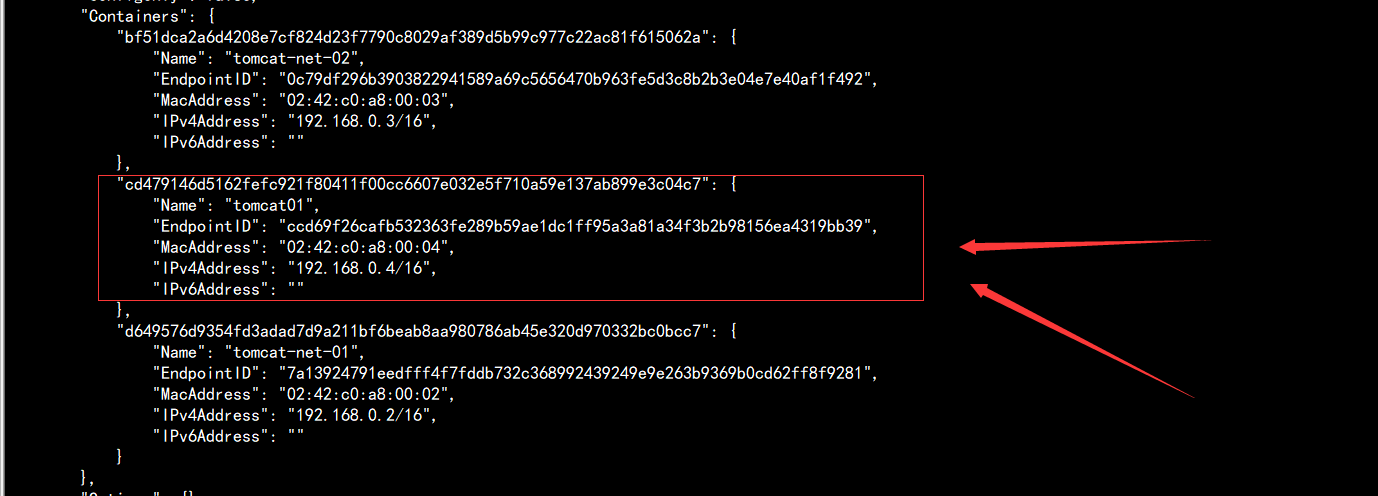
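When only a few fields of the inspect output matter, they can be filtered out in the shell. The helper below (`json_field`, a name made up here) is a naive line-based extraction that assumes the pretty-printed layout shown above. It is not a JSON parser, and a tool such as `jq` is a sturdier choice if it is installed.

```shell
# json_field KEY: read pretty-printed JSON on stdin and print the first
# string value found for KEY. Assumes one "key": "value" pair per line.
json_field() {
  sed -n 's/.*"'"$1"'": *"\([^"]*\)".*/\1/p' | head -n 1
}

# Trimmed copy of the `docker network inspect mynet` output above
inspect_json=$(cat <<'EOF'
[
    {
        "Name": "mynet",
        "Driver": "bridge",
        "IPAM": {
            "Config": [
                {
                    "Subnet": "192.168.0.0/16",
                    "Gateway": "192.168.0.1"
                }
            ]
        }
    }
]
EOF
)

printf '%s\n' "$inspect_json" | json_field Subnet
printf '%s\n' "$inspect_json" | json_field Gateway
```

On a host with Docker available, the sample text could be replaced by piping `docker network inspect mynet` into the same function.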

- Start two tomcat containers on the user-defined network mynet

```shell
root@kylin:~# docker run -d -P --name tomcat-net-01 --net mynet tomcat
d649576d9354fd3adad7d9a211bf6beab8aa980786ab45e320d970332bc0bcc7

root@kylin:~# docker run -d -P --name tomcat-net-02 --net mynet tomcat
bf51dca2a6d4208e7cf824d23f7790c8029af389d5b99c977c22ac81f615062a
```

- Check the details of mynet

```shell
"Containers": {
    "bf51dca2a6d4208e7cf824d23f7790c8029af389d5b99c977c22ac81f615062a": {
        "Name": "tomcat-net-02",
        "EndpointID": "0c79df296b3903822941589a69c5656470b963fe5d3c8b2b3e04e7e40af1f492",
        "MacAddress": "02:42:c0:a8:00:03",
        "IPv4Address": "192.168.0.3/16",
        "IPv6Address": ""
    },
    "d649576d9354fd3adad7d9a211bf6beab8aa980786ab45e320d970332bc0bcc7": {
        "Name": "tomcat-net-01",
        "EndpointID": "7a13924791eedfff4f7fddb732c368992439249e9e263b9369b0cd62ff8f9281",
        "MacAddress": "02:42:c0:a8:00:02",
        "IPv4Address": "192.168.0.2/16",
        "IPv6Address": ""
    }
}
```

- Ping between the containers

```shell
root@kylin:~# docker exec -it tomcat-net-01 ping 192.168.0.3
PING 192.168.0.3 (192.168.0.3) 56(84) bytes of data.
64 bytes from 192.168.0.3: icmp_seq=1 ttl=64 time=0.092 ms
64 bytes from 192.168.0.3: icmp_seq=2 ttl=64 time=0.086 ms

root@kylin:~# docker exec -it tomcat-net-01 ping tomcat-net-02
PING tomcat-net-02 (192.168.0.3) 56(84) bytes of data.
64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=1 ttl=64 time=0.053 ms
64 bytes from tomcat-net-02.mynet (192.168.0.3): icmp_seq=2 ttl=64 time=0.072 ms
```

As shown, both the IP address and the container name reach the right host (192.168.0.3).<br />On a user-defined network, Docker maintains the name-to-address mapping for us, so this is the recommended way to set up networking.

Benefits:<br />MySQL: different clusters use different networks, which keeps each cluster secure and healthy.<br />Redis: different clusters use different networks, which keeps each cluster secure and healthy.

<a name="R6Ptk"></a>

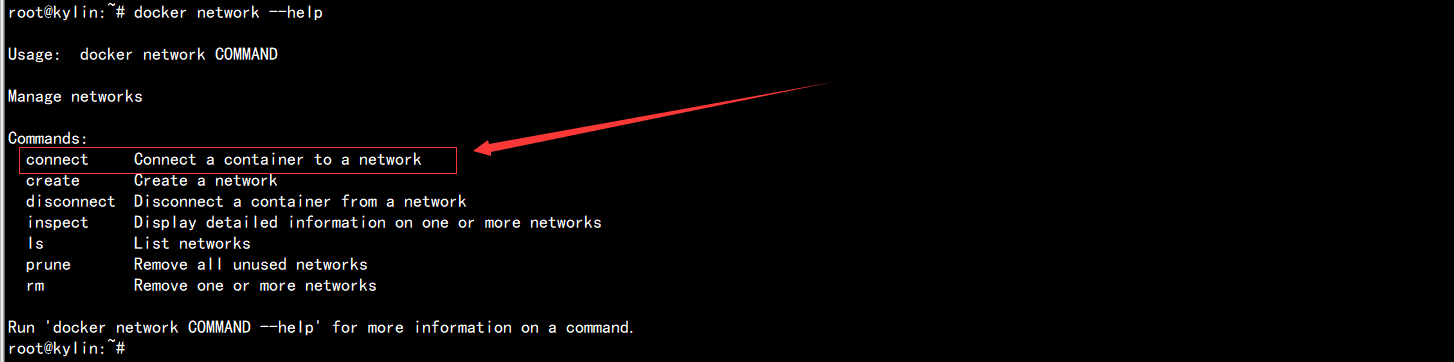

# Connecting networks

Use `docker network connect`:

```shell
root@kylin:~# docker network connect --help

Usage:  docker network connect [OPTIONS] NETWORK CONTAINER

Connect a container to a network

Options:
      --alias strings           Add network-scoped alias for the container
      --driver-opt strings      driver options for the network
      --ip string               IPv4 address (e.g., 172.30.100.104)
      --ip6 string              IPv6 address (e.g., 2001:db8::33)
      --link list               Add link to another container
      --link-local-ip strings   Add a link-local address for the container

# Test: connect tomcat01 to mynet
root@kylin:~# docker network connect mynet tomcat01
```

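As we can see, Docker attached tomcat01 directly to mynet.

The official way to put it: one container, two IP addresses, similar to the public and private IPs of an Alibaba Cloud instance.

Test: try it from both tomcat01 (connected) and tomcat02 (not connected).

```shell
# tomcat01: connected, so it works
root@kylin:~# docker exec -it tomcat01 ping tomcat-net-01
PING tomcat-net-01 (192.168.0.2) 56(84) bytes of data.
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=1 ttl=64 time=0.077 ms
64 bytes from tomcat-net-01.mynet (192.168.0.2): icmp_seq=2 ttl=64 time=0.068 ms

# tomcat02: not connected, so it fails
root@kylin:~# docker exec -it tomcat02 ping tomcat-net-01
ping: tomcat-net-01: Name or service not known
```

Conclusion: to reach containers on another network, a container must first be attached to that network with `docker network connect`.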
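Following the same logic, tomcat02 could be attached to mynet as well. The script below is a dry-run sketch: `run` and `connect_and_check` are helper names invented for this example, and by default it only prints the Docker commands instead of executing them. Setting `DRY_RUN=0` would run them for real, assuming a host where the containers above exist and the image ships `ping`.

```shell
# Print commands by default; execute them only when DRY_RUN=0.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# connect_and_check NETWORK CONTAINER TARGET:
# attach CONTAINER to NETWORK, then ping TARGET by name from inside it.
connect_and_check() {
  run docker network connect "$1" "$2"
  run docker exec "$2" ping -c 2 "$3"
}

connect_and_check mynet tomcat02 tomcat-net-01
```

Keeping the commands behind a dry-run switch makes it easy to review what would change before touching a running container.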