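1. Kubernetes Concepts

Kubernetes (K8S) is an open-source container management tool for orchestrating containers at scale. It handles container deployment, scaling, and load balancing.

Features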

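- Self-healing:

Kubernetes restarts containers that fail, replaces containers, kills containers that do not respond to a user-defined health check, and does not advertise them to clients until they are ready to serve.

- Elastic scaling:

Kubernetes lets you specify how much CPU and memory each container needs. When containers declare resource requests, K8S can make better decisions about how to manage resources.

- Automated rollouts and rollbacks:

You describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate.

For example, you can automate Kubernetes to create new containers for your deployment, remove existing containers, and adopt all their resources to the new containers.

- Service discovery and load balancing:

Kubernetes can expose a container using a DNS name or its own IP address.

If traffic to a container is high, Kubernetes can load balance and distribute the network traffic so that the deployment stays stable.

- Secret and configuration management:

Kubernetes lets you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys.

You can deploy and update secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration.

- Storage orchestration:

Kubernetes lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

- Batch processing:

K8S provides a framework for running distributed systems resiliently. It takes care of scaling requirements, failover, deployment patterns (for example canary deployments), and more.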

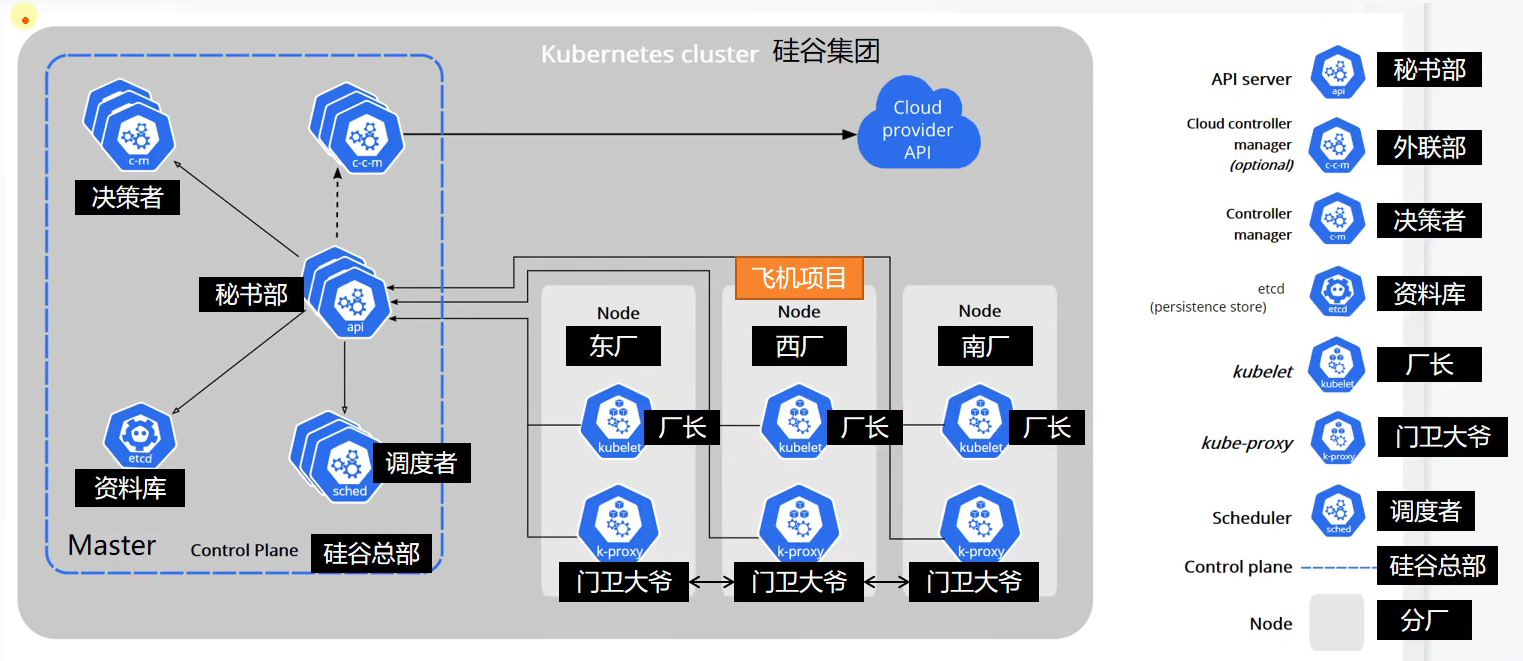

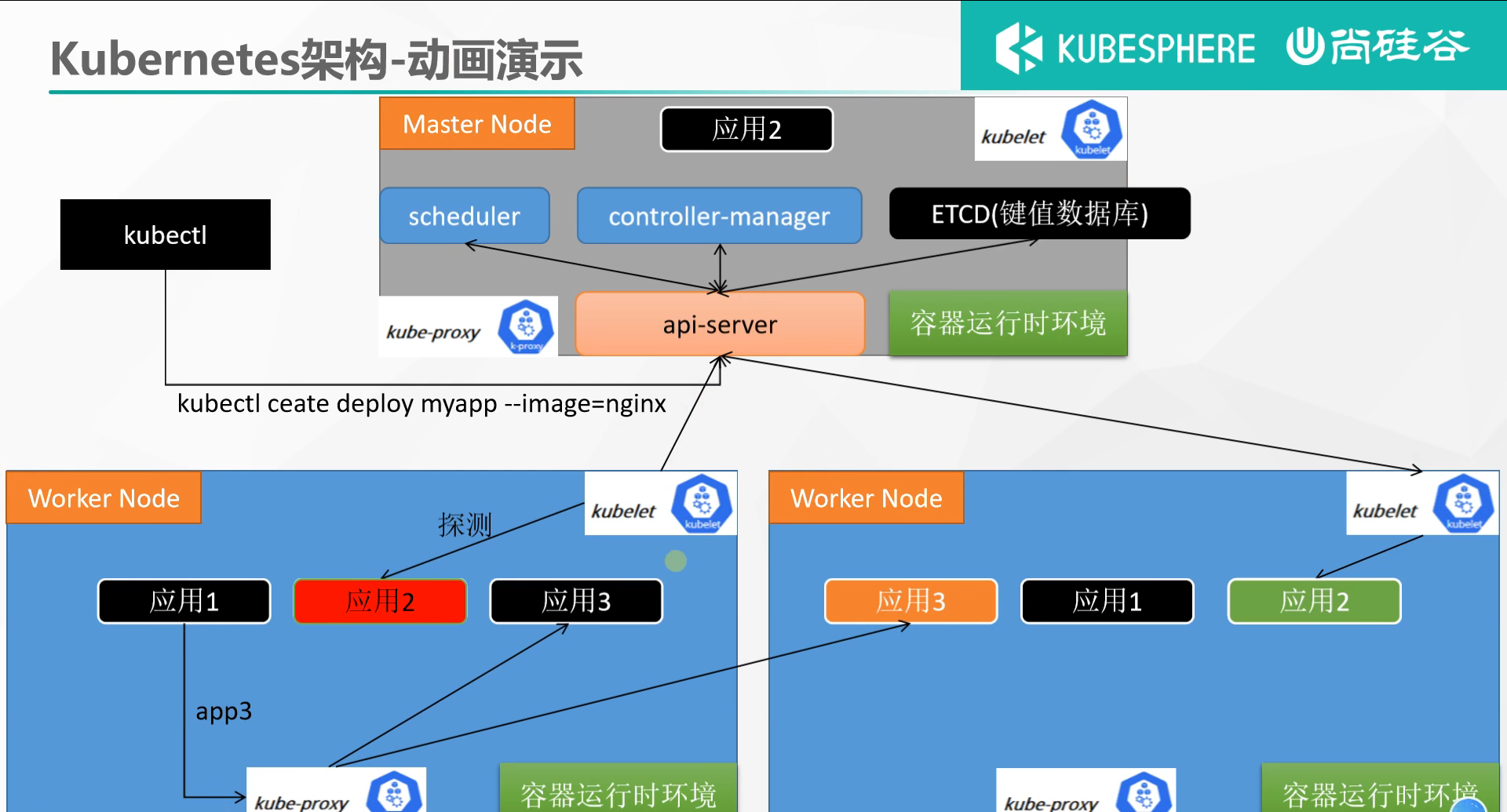

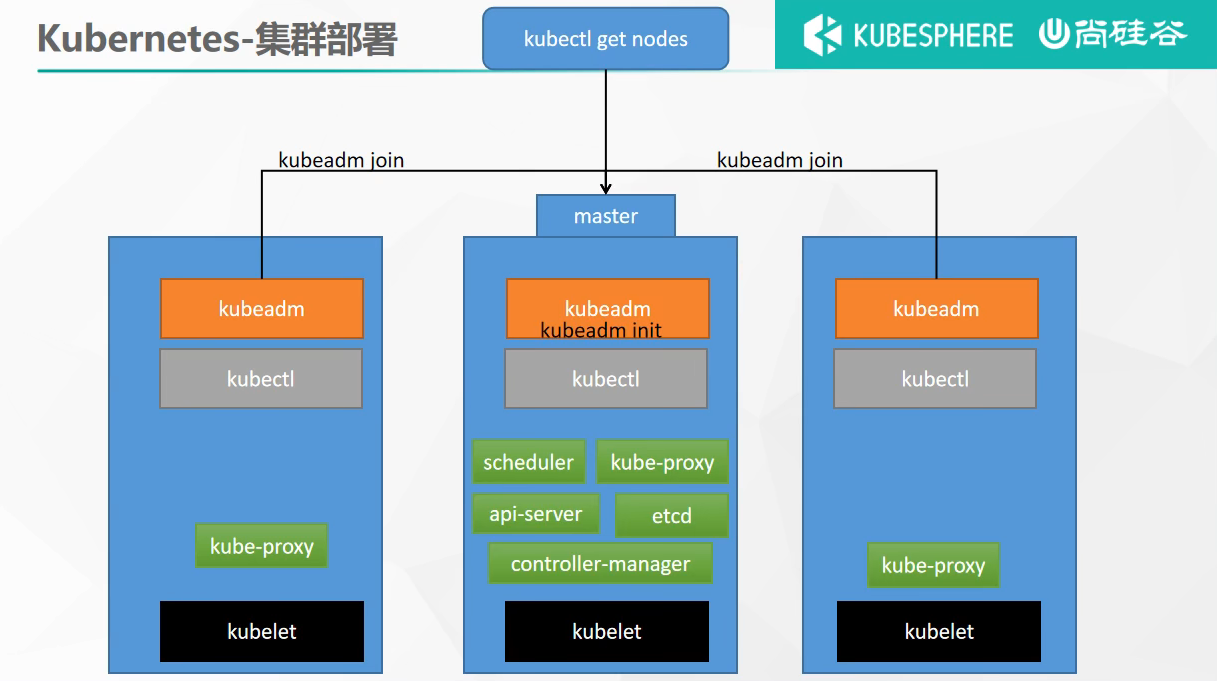

Architecture

1、How it works

Kubernetes Cluster = M master nodes + N worker nodes, where M, N >= 1

2、Component architecture

https://www.bilibili.com/video/BV13Q4y1C7hS?p=29&t=2.1

2. Kubernetes Cluster Setup

1、Install Docker

2、Install kubelet, kubeadm, and kubectl

    sudo tee ./images.sh <<-'EOF'
    #!/bin/bash
    images=(
    kube-apiserver:v1.23.5
    kube-proxy:v1.23.5
    kube-controller-manager:v1.23.5
    kube-scheduler:v1.23.5
    coredns:1.7.0
    etcd:3.4.13-0
    pause:3.2
    )
    for imageName in "${images[@]}" ; do
    docker pull registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/$imageName
    done
    EOF

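3、Bootstrap the cluster with kubeadm

    # On every machine, add the hostname mapping for the master; change the IP to your own
    echo "192.168.3.200 cluster-endpoint" >> /etc/hosts

    # Initialize the master node
    # Leave --pod-network-cidr unchanged unless it overlaps with another range
    kubeadm init \
    --apiserver-advertise-address=192.168.3.200 \
    --control-plane-endpoint=cluster-endpoint \
    --kubernetes-version v1.20.9 \
    --service-cidr=10.96.0.0/16 \
    --pod-network-cidr=192.168.0.0/16

    # The service CIDR, the pod CIDR, and the VMs' own network range must not overlap with each other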
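The no-overlap rule can be checked before running `kubeadm init`. The following is a minimal sketch using Python's standard `ipaddress` module. The two cluster ranges come from the command above; the VM subnet `192.168.3.0/24` is an assumption (the notes only give the host address 192.168.3.200), and `find_overlaps` is a hypothetical helper name.

```python
import ipaddress
from itertools import combinations


def find_overlaps(ranges):
    """Return every pair of named CIDR ranges that overlap."""
    networks = {name: ipaddress.ip_network(cidr) for name, cidr in ranges.items()}
    return [
        (a, b)
        for a, b in combinations(networks, 2)
        if networks[a].overlaps(networks[b])
    ]


ranges = {
    "service-cidr": "10.96.0.0/16",
    "pod-network-cidr": "192.168.0.0/16",
    "vm-network": "192.168.3.0/24",  # assumption: the VM subnet is not stated in the notes
}

overlaps = find_overlaps(ranges)
print(overlaps)  # [('pod-network-cidr', 'vm-network')]
```

Under that assumption the pod CIDR collides with the VM network, which is the case where `--pod-network-cidr` would need a different value (and the network add-on would have to be configured to match).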

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      rm -rf ~/.kube
      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      # Network add-ons
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of control-plane nodes by copying certificate authorities
    and service account keys on each node and then running the following as root:

      # Add a master node (valid for 24 hours)
      kubeadm join cluster-endpoint:6443 --token mzr0s1.6yqpdsjbsab29vbm \
        --discovery-token-ca-cert-hash sha256:9ef69cd5d6363af50b3d9d711b2aa6dde4e7dd3ed0684eec499950055ce0e749 \
        --control-plane

    Then you can join any number of worker nodes by running the following on each as root:

      # Add a worker node (valid for 24 hours)
      kubeadm join cluster-endpoint:6443 --token mzr0s1.6yqpdsjbsab29vbm \
        --discovery-token-ca-cert-hash sha256:9ef69cd5d6363af50b3d9d711b2aa6dde4e7dd3ed0684eec499950055ce0e749

To generate a new join command:

    kubeadm token create --print-join-command

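4、Install the Calico network add-on

    # These downloads need access to sites outside China: route foreign IPs through a proxy,
    # and remove the docker and kubernetes entries from the yum repos
    curl https://docs.projectcalico.org/manifests/calico.yaml -O
    kubectl apply -f calico.yaml

    # Installation method from the official site
    kubectl create -f https://projectcalico.docs.tigera.io/manifests/tigera-operator.yaml
    kubectl create -f https://projectcalico.docs.tigera.io/manifests/custom-resources.yaml

    # Add the following two lines to /etc/sysctl.conf
    vim /etc/sysctl.conf
    #   net.ipv4.conf.all.rp_filter=1
    #   net.ipv4.ip_forward=1

    # Wait about 10 minutes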

5、Verify the cluster

    kubectl get nodes
    kubectl get pods -A

6、Deploy the dashboard

    wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.5.0/aio/deploy/recommended.yaml
    # Edit the YAML file in 2 places: under spec:, on the line above containers:, add
    #   nodeName: k8s-master   (the hostname of the master)
    # Search for args and add one line:
    #   - --token-ttl=86400
    kubectl apply -f recommended.yaml

    kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

    kubectl get svc -owide -n kubernetes-dashboard

    # Create an access account: prepare a YAML file, e.g. vi dash.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

    # Print the login token of the admin-user account
    kubectl -n kubernetes-dashboard get secret $(kubectl -n kubernetes-dashboard get sa/admin-user -o jsonpath="{.secrets[0].name}") -o go-template="{{.data.token | base64decode}}"

eyJhbGciOiJSUzI1NiIsImtpZCI6IjRsekEyTWNwRi16QnFQSnZXWjAtbkRsbklQbHhaWV9tV2VIMlV1ZGNaUjgifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWJwejJqIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiI5YTU5Y2U5Ny04Y2I3LTRhZjAtOWQyNC0wYzJkZTJiY2ZjMGIiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.gHZco5GlK9kOgDjTmF32r11sUvi6ER-HBHEOtyeChsXxhK0fHdtbMTGZFZ3jUulxcLv_BlKUJnVZUiwyfuHXcgOSfpyNL1wkV9ETL53O5SnLaxJCZdXU45c5Z-Z5cRQr870zTpDyFhjeU81TlPgK8SJX7oRCqZGzzzNerATJ99uar4Hpu5u2K_SRBk61G65ILq8jZ3lcsh9xsZjnc2gHCMxHEvgAdTiCe3OKFXy6QoskohioLkWdmWMFeoWvgc2C5z6iuevHquyb_QZ0mTTh-yEwL_eMl5aKbUqzYFsN-w-vGDqK9hsWGbUmSvjvDsJ_2_z3NcCaY1nJQHoYgznUmw

3. Kubernetes in Practice

1、Ways to create resources

    kubectl create ns hello
    kubectl delete ns hello

    apiVersion: v1
    kind: Namespace
    metadata:
      name: hello

    kubectl apply -f hello.yaml
    kubectl delete -f hello.yaml   # deletes the resources created from this file

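3、Pod

A Pod is a group of running containers and the smallest unit of an application in K8S. One Pod corresponds to a group of containers in Docker.

    kubectl run mynginx --image=nginx
    kubectl get pod -n default
    kubectl describe pod mynginx
    kubectl delete pod mynginx

    apiVersion: v1
    kind: Pod
    metadata:
      labels:
        run: mynginx
      name: mynginx
      # namespace: default
    spec:
      containers:
      - image: nginx
        name: mynginx

    kubectl apply -f mynginx.yaml
    kubectl delete -f mynginx.yaml

    kubectl logs mynginx       # view the Pod's logs
    kubectl logs -f mynginx    # follow the logs
    kubectl get pod -o wide    # also shows the Pod IP
    curl 192.168.123.233
    # Open a shell in the container to change its files, same as with docker exec
    kubectl exec -it mynginx -- /bin/bash

K8S assigns an IP to every Pod. Inside the cluster, the application is reachable at <Pod IP>:<container port>.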

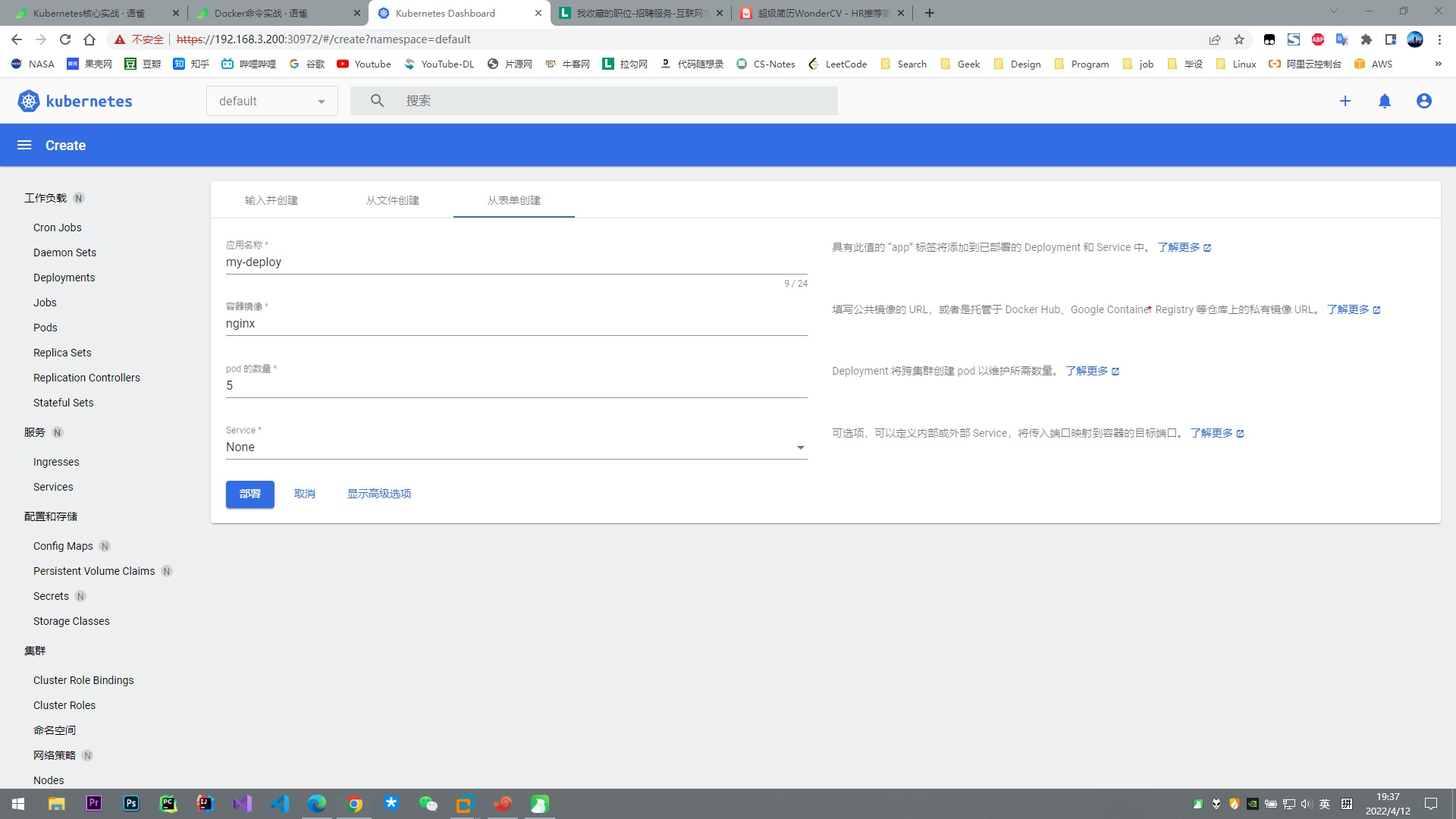

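Creating a Pod from the dashboard: click the plus sign in the top-right corner; there are three ways to create a Pod.

4、Deployment

A Deployment controls Pods and gives them multiple replicas, self-healing, scaling, and similar capabilities.

1 Multiple replicas

    kubectl run mynginx --image=nginx
    kubectl create deployment mytomcat --image=tomcat
    # A Pod created by the Deployment cannot be removed for good with kubectl delete pod:
    # a new Pod is started in its place. This is the self-healing capability.

    kubectl get deployment   # can be shortened to deploy
    kubectl delete deployment mytomcat

    kubectl create deploy my-dep --image=nginx --replicas=3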

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: my-dep
      name: my-dep
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: my-dep
      template:
        metadata:
          labels:
            app: my-dep
        spec:
          containers:
          - image: nginx
            name: nginx

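2 Scaling

    kubectl scale deploy/my-dep --replicas=5
    # Or run kubectl edit deploy my-dep, which opens the configuration, and change replicas there
    # Or choose Scale under Deployments in the dashboard

3 Self-healing & failover

- If a machine goes offline, after 5 minutes its Pods are started on other machines

- After a Pod is deleted, a replacement is started automatically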
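Self-healing and scaling both follow from the same idea: a controller keeps comparing the desired replica count with the Pods that actually exist and corrects the difference. The sketch below is a deliberately simplified model of one such pass, not the real Deployment controller; `reconcile` and the generated Pod names are hypothetical.

```python
import itertools


def reconcile(desired_replicas, running_pods, new_name):
    """One pass of a simplified control loop.

    Returns a new pod list whose length equals desired_replicas:
    missing pods are created, surplus pods are removed.
    """
    pods = list(running_pods)
    while len(pods) < desired_replicas:
        pods.append(new_name())  # start a replacement pod
    while len(pods) > desired_replicas:
        pods.pop()  # scale down: drop a surplus pod
    return pods


counter = itertools.count(1)


def new_name():
    """Generate a hypothetical pod name such as my-dep-1."""
    return f"my-dep-{next(counter)}"


pods = reconcile(3, [], new_name)    # initial rollout with replicas=3
pods.remove("my-dep-2")              # simulate `kubectl delete pod`
pods = reconcile(3, pods, new_name)  # the controller replaces the lost pod
print(pods)  # ['my-dep-1', 'my-dep-3', 'my-dep-4']
```

Calling `reconcile(5, pods, new_name)` afterwards models `kubectl scale deploy/my-dep --replicas=5` in the same way.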

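4 Rolling update

    kubectl set image deployment/my-dep nginx=nginx:1.16.1 --record

5 Version rollback

The recorded revisions can be listed with kubectl rollout history deployment/my-dep, and kubectl rollout undo deployment/my-dep rolls back to the previous one. The commands below check which version is actually running.

    docker image inspect nginx | grep -i version
    # Get the Pod's YAML
    kubectl get pod my-dep-5b7868d854-g9dvs -oyaml
    # Get the Deployment's YAML
    kubectl get deploy my-dep -oyaml

More: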

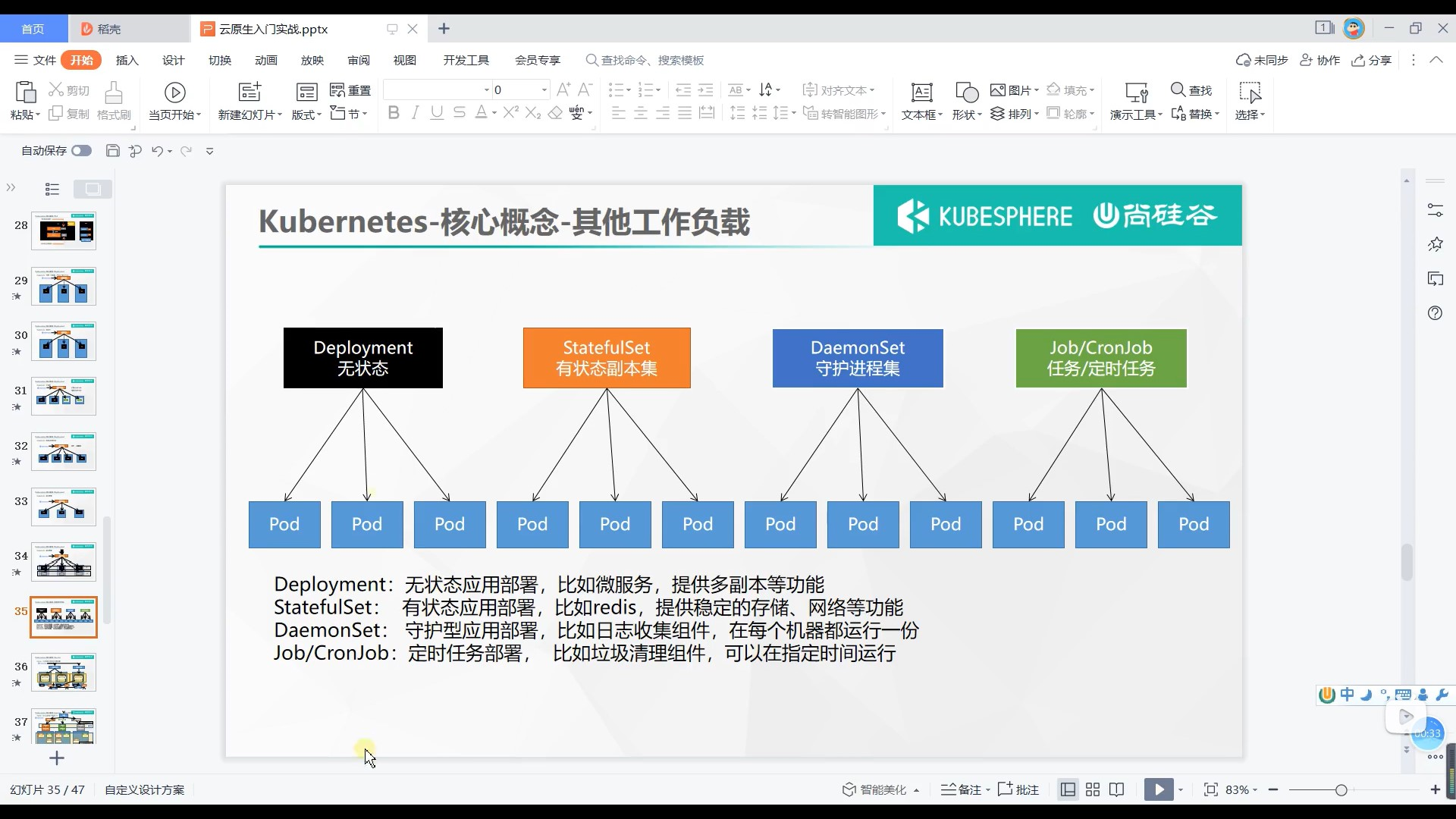

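Besides Deployment, k8s also has resource types such as StatefulSet, DaemonSet, and Job. Together they are called workloads. Stateful applications are deployed with a StatefulSet, stateless applications with a Deployment. https://kubernetes.io/zh/docs/concepts/workloads/controllers/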

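5、Service

A Service is an abstract way to expose a group of Pods as a network service. Service: service discovery and load balancing for Pods.

    # Expose the Deployment (ClusterIP is the default type, so --type=ClusterIP is optional)
    kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP
    kubectl get service   # shows the IP of the resulting Service
    # Result: inside the cluster, <service IP>:<port> reaches every Pod with load balancing
    # Other Deployments can also reach it with curl my-dep.default.svc:8000, or curl my-dep:8000 from the same namespace
    # svc is short for service; deploy is short for deployment

    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: my-dep
      name: my-dep
    spec:
      ports:
      - port: 8000
        protocol: TCP
        targetPort: 80
      selector:
        app: my-dep
      type: ClusterIP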
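The `selector` field is what ties the Service to its Pods: every Pod whose labels contain all of the selector's key/value pairs becomes a backend. A minimal sketch of that matching rule follows; the Pod names and IPs are made up for illustration, and `select_pods` is a hypothetical helper, not a Kubernetes API.

```python
def select_pods(selector, pods):
    """Return the pods whose labels contain every key/value pair in selector."""
    return [
        pod
        for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]


# Hypothetical pods; only the labels matter for selection.
pods = [
    {"name": "my-dep-a", "ip": "192.168.1.10", "labels": {"app": "my-dep"}},
    {"name": "my-dep-b", "ip": "192.168.1.11", "labels": {"app": "my-dep", "tier": "web"}},
    {"name": "mytomcat-a", "ip": "192.168.1.12", "labels": {"app": "mytomcat"}},
]

# The Service forwards port 8000 to targetPort 80 on each selected pod.
endpoints = [f'{pod["ip"]}:80' for pod in select_pods({"app": "my-dep"}, pods)]
print(endpoints)  # ['192.168.1.10:80', '192.168.1.11:80']
```

Extra labels on a Pod (such as `tier: web` here) do not prevent a match; only the keys named in the selector are compared.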

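    kubectl expose deployment my-dep --port=8000 --target-port=80 --type=NodePort
    kubectl get svc   # PORT(S) lists two ports; the second one (the node port) is reachable from outside the cluster
    # http://192.168.3.200:31253/ : requesting this port on any machine returns
    # responses from the 3 different Pods, i.e. the traffic is load balanced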

6、Ingress

The unified gateway entry point in front of Services.

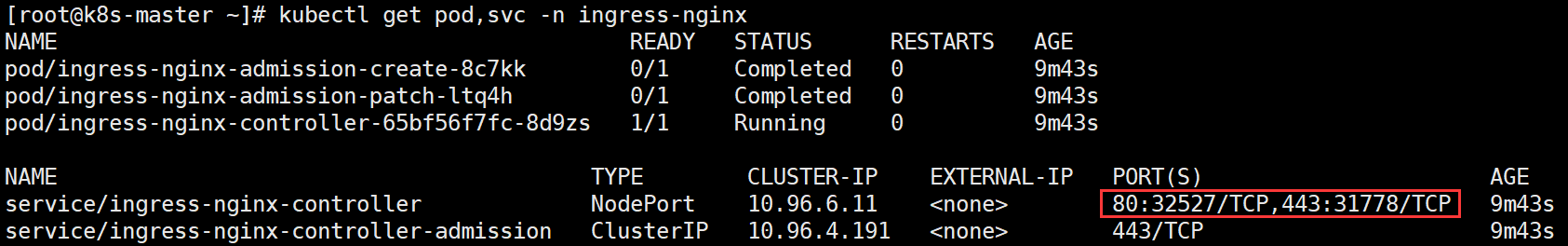

1 Installation

    wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml

    # Change the image
    vim deploy.yaml
    # Set the value of image to:
    #   registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0

    # Route foreign IPs through a proxy
    kubectl apply -f deploy.yaml
    # The two ingress-nginx-admission Pods end up in the Completed state

    # Check the result of the installation
    kubectl get pod,svc -n ingress-nginx

    # Finally, remember to open the ports exposed by the svc in the firewall

http://192.168.3.200:32527

https://192.168.3.200:31778

2 Usage

    # Two Deployments, hello-server and nginx-demo, each with its own Service
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: hello-server
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: hello-server
      template:
        metadata:
          labels:
            app: hello-server
        spec:
          containers:
          - name: hello-server
            image: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-server
            ports:
            - containerPort: 9000
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-demo
      name: nginx-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-demo
      template:
        metadata:
          labels:
            app: nginx-demo
        spec:
          containers:
          - image: nginx
            name: nginx
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: nginx-demo
      name: nginx-demo
    spec:
      selector:
        app: nginx-demo
      ports:
      - port: 8000
        protocol: TCP
        targetPort: 80
    ---
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: hello-server
      name: hello-server
    spec:
      selector:
        app: hello-server
      ports:
      - port: 8000
        protocol: TCP
        targetPort: 9000

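Host-based access

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ingress-host-bar
    spec:
      ingressClassName: nginx
      rules:
      - host: "hello.atguigu.com"
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
      - host: "demo.atguigu.com"
        http:
          paths:
          - pathType: Prefix
            path: "/nginx"  # the backend service receives this path too; if it cannot handle it, the result is a 404
            backend:
              service:
                name: nginx-demo  # e.g. for a Java service, use path rewriting to strip the /nginx prefix
                port:
                  number: 8000

Question: why do path: "/nginx" and path: "/" behave differently? With path "/nginx" configured for demo, "demo.atguigu.com/" returns the ingress controller's 404, while "demo.atguigu.com/nginx" returns the Pod's 404. The first request matches no rule for that host, so the ingress controller answers it itself. The second matches the rule and is forwarded with its path unchanged, and the nginx Pod has nothing to serve at /nginx.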
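The difference between the two 404s comes down to how a `Prefix` path is matched: element by element on the `/`-separated path, not as a plain string prefix. The following sketch models that rule for a single host. It is simplified (a real controller also prefers the longest matching path), and `prefix_matches` and `route` are hypothetical helpers.

```python
def prefix_matches(rule_path, request_path):
    """Element-wise Prefix match on '/'-separated path segments."""
    rule = [segment for segment in rule_path.split("/") if segment]
    request = [segment for segment in request_path.split("/") if segment]
    return request[: len(rule)] == rule


def route(host_rules, request_path):
    """Return the backend service for a path, or None if no rule matches.

    None stands for the case where the ingress controller itself answers 404.
    """
    for rule_path, service in host_rules:
        if prefix_matches(rule_path, request_path):
            return service
    return None


# Rules for demo.atguigu.com from the manifest above.
demo_rules = [("/nginx", "nginx-demo")]

print(route(demo_rules, "/"))          # None: no rule matches, ingress 404
print(route(demo_rules, "/nginx"))     # nginx-demo: forwarded as /nginx, Pod 404
print(route(demo_rules, "/nginxfoo"))  # None: 'nginxfoo' is a different segment
```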

Path rewriting

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/rewrite-target: /$2  # $2 is the second capture group of the path regex
      name: ingress-host-bar
    spec:
      ingressClassName: nginx
      rules:
      - host: "hello.atguigu.com"
        http:
          paths:
          - pathType: Prefix
            path: "/"
            backend:
              service:
                name: hello-server
                port:
                  number: 8000
      - host: "demo.atguigu.com"
        http:
          paths:
          - pathType: Prefix
            path: "/nginx(/|$)(.*)"  # the request is passed to the service below; if the service cannot handle it, the result is a 404
            backend:
              service:
                name: nginx-demo  # e.g. for a Java service, use path rewriting to strip the /nginx prefix
                port:
                  number: 8000
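To see what the `/nginx(/|$)(.*)` pattern and the `/$2` rewrite target do to a request path, the same regular expression can be tried in Python. This is only an illustration of the regex, not of how the ingress controller is implemented; `rewrite` is a hypothetical helper.

```python
import re

# Same pattern as the Ingress path above; group 2 is what $2 refers to.
PATTERN = re.compile(r"^/nginx(/|$)(.*)")


def rewrite(path):
    """Return the path the backend would receive, or None if the rule does not match."""
    match = PATTERN.match(path)
    if match is None:
        return None
    return "/" + match.group(2)


for path in ["/nginx", "/nginx/", "/nginx/index.html", "/nginxfoo"]:
    print(path, "->", rewrite(path))
# /nginx -> /
# /nginx/ -> /
# /nginx/index.html -> /index.html
# /nginxfoo -> None
```

The `(/|$)` group is what stops `/nginxfoo` from matching: after `/nginx` the path must either end or continue with a slash.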

Rate limiting

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: ingress-limit-rate
      annotations:
        nginx.ingress.kubernetes.io/limit-rps: "1"
    spec:
      ingressClassName: nginx
      rules:
      - host: "haha.atguigu.com"
        http:
          paths:
          - pathType: Exact
            path: "/"
            backend:
              service:
                name: nginx-demo
                port:
                  number: 8000
    # Limits access to one request per second; refreshing faster than that returns 503 Service Temporarily Unavailable
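The observable effect of `limit-rps: "1"` can be modelled with a small counter. Note the swap: NGINX's own request limiting uses a leaky-bucket method and tolerates short bursts, whereas this sketch uses a simpler fixed one-second window, so it only approximates the behaviour described above. `FixedWindowLimiter` is a hypothetical class and timestamps are passed in explicitly to keep the example deterministic.

```python
class FixedWindowLimiter:
    """Allow at most `rps` requests in each whole-second window."""

    def __init__(self, rps):
        self.rps = rps
        self.window = None  # the second currently being counted
        self.count = 0

    def handle(self, now):
        """Return the HTTP status a request arriving at time `now` (seconds) would get."""
        window = int(now)
        if window != self.window:
            # A new second has started: reset the counter.
            self.window = window
            self.count = 0
        self.count += 1
        return 200 if self.count <= self.rps else 503


limiter = FixedWindowLimiter(rps=1)
statuses = [limiter.handle(t) for t in (0.0, 0.4, 0.9, 1.1)]
print(statuses)  # [200, 503, 503, 200]
```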

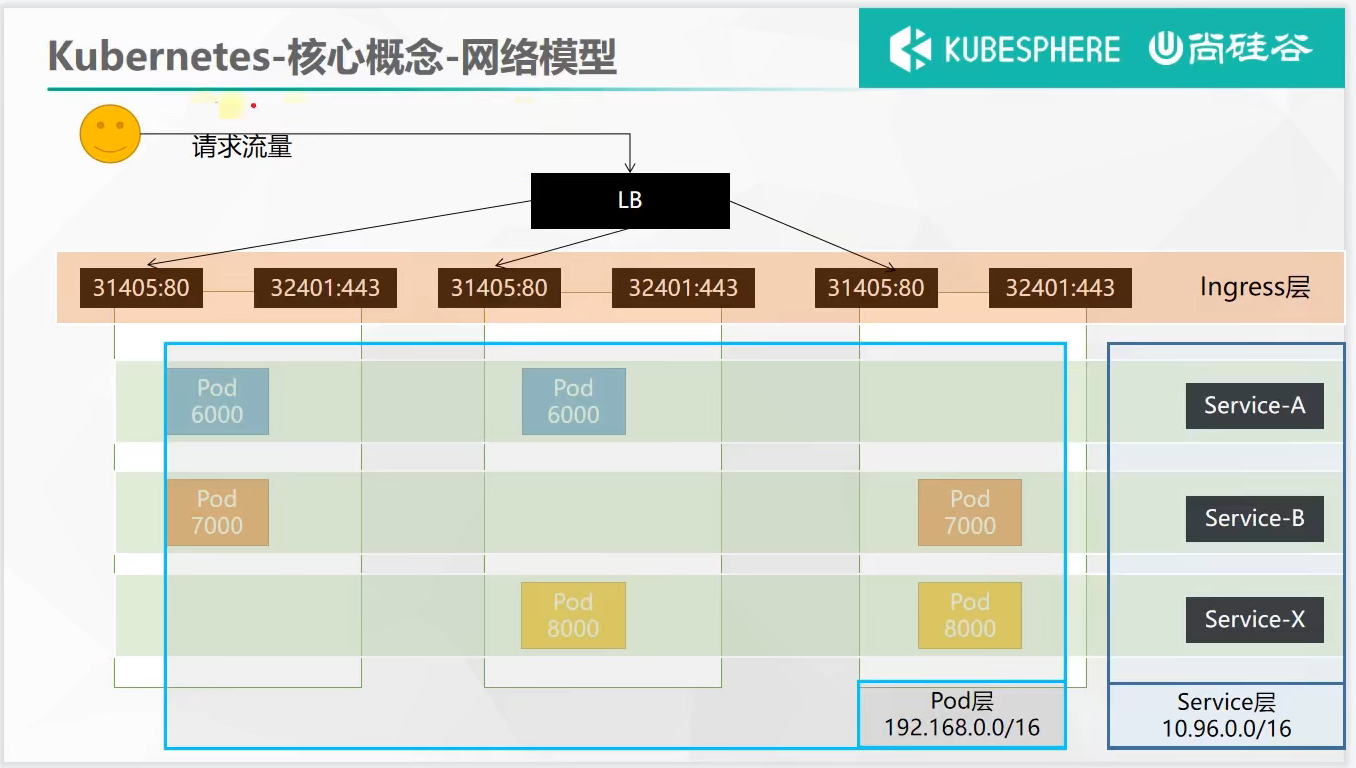

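Network model summary: Pod layer -> Service layer -> Ingress layer

Inside the cluster, the IP of any Pod or Service can be reached directly. Traffic from outside must go through the Ingress layer first.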

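7、Storage abstraction

With Docker-style host path mapping, a Pod running on worker1 keeps its data on worker1's disk. If that Pod dies and k8s restarts it on worker2, it can no longer read the data on worker1's disk.

The storage layer therefore has to be abstracted out, for example with GlusterFS, NFS, or CephFS.

Environment preparation

1 All nodes

    yum install -y nfs-utils

2 Master node

    echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports
    mkdir -p /nfs/data
    systemctl enable rpcbind --now
    systemctl enable nfs-server --now
    # Apply the configuration
    exportfs -r

3 Worker nodes

    showmount -e 192.168.3.200   # list the directories the master node exports
    # Mount the shared directory of the NFS server on the local path /nfs/data
    mkdir -p /nfs/data   # the local directory may use a different name
    mount -t nfs 192.168.3.200:/nfs/data /nfs/data
    # Write a test file
    echo "hello nfs server" > /nfs/data/test.txt
    # The file is now visible on all three machines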

4 Mounting data the native way

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-pv-demo
      name: nginx-pv-demo
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-pv-demo
      template:
        metadata:
          labels:
            app: nginx-pv-demo
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: html  # the name under volumeMounts must match the name under volumes
              mountPath: /usr/share/nginx/html
          volumes:
            - name: html
              nfs:  # could also be ceph, etc.
                server: 192.168.3.200
                path: /nfs/data/nginx-pv  # this directory must already exist; both Pods use the files in it

If the Pods do not start, check their status with kubectl describe pod. The directory named in the manifest has to exist on the NFS server, so create it first:

    mkdir /nfs/data/nginx-pv

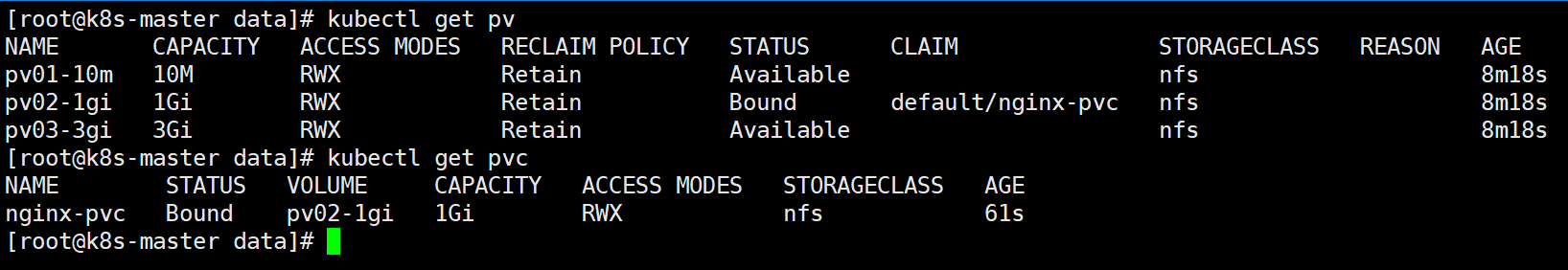

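PV & PVC

PV: Persistent Volume, which stores the application data that needs to persist at a specified location. PVC: Persistent Volume Claim, which declares the specification of the persistent volume that is needed.

Drawbacks of the native mount: the directory has to be created by hand before the Pod starts and deleted by hand after the Pod is removed, and capacity cannot be allocated dynamically.

1、Create a PV pool

Static provisioning: a few PVs of fixed size are created in advance. Dynamic provisioning: a PV of suitable size is created automatically for each PVC (done through KubeSphere in these notes).

    mkdir -p /nfs/data/01
    mkdir -p /nfs/data/02
    mkdir -p /nfs/data/03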

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv01-10m
    spec:
      capacity:
        storage: 10M
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/01
        server: 192.168.3.200
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv02-1gi
    spec:
      capacity:
        storage: 1Gi
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/02
        server: 192.168.3.200
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv03-3gi
    spec:
      capacity:
        storage: 3Gi
      accessModes:
        - ReadWriteMany
      storageClassName: nfs
      nfs:
        path: /nfs/data/03
        server: 192.168.3.200

    kubectl get persistentvolume   # or pv for short

Creating and binding a PVC

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: nginx-pvc
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 200Mi
      storageClassName: nfs

After the PVC is deleted, the PV that was in the Bound state changes to the Released state.
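Which of the three static PVs a 200Mi claim ends up on can be reasoned about with a small model: keep the PVs whose storage class and access modes match and whose capacity is large enough, then take the smallest. This is a sketch of that selection rule under simplifying assumptions (only a few quantity suffixes are parsed, and every PV is assumed to be Available); `to_bytes` and `pick_pv` are hypothetical helpers, not Kubernetes APIs.

```python
# Multipliers for the quantity suffixes used in these notes (binary and decimal).
UNITS = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "k": 10**3, "M": 10**6, "G": 10**9}


def to_bytes(quantity):
    """Convert a quantity such as '200Mi' or '10M' to a number of bytes."""
    for suffix in ("Ki", "Mi", "Gi", "k", "M", "G"):
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * UNITS[suffix]
    return int(quantity)


def pick_pv(claim, volumes):
    """Return the smallest volume that satisfies the claim, or None."""
    needed = to_bytes(claim["storage"])
    candidates = [
        volume
        for volume in volumes
        if volume["storageClassName"] == claim["storageClassName"]
        and set(claim["accessModes"]) <= set(volume["accessModes"])
        and to_bytes(volume["storage"]) >= needed
    ]
    return min(candidates, key=lambda volume: to_bytes(volume["storage"]), default=None)


volumes = [
    {"name": "pv01-10m", "storage": "10M", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
    {"name": "pv02-1gi", "storage": "1Gi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
    {"name": "pv03-3gi", "storage": "3Gi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"},
]
claim = {"storage": "200Mi", "accessModes": ["ReadWriteMany"], "storageClassName": "nfs"}

chosen = pick_pv(claim, volumes)
print(chosen["name"])  # pv02-1gi
```

The 10M volume is too small for 200Mi and the 3Gi volume is larger than necessary, so the 1Gi volume is selected. The claim then has the whole 1Gi volume to itself, which is the waste that dynamic provisioning avoids.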

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: nginx-deploy-pvc
      name: nginx-deploy-pvc
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: nginx-deploy-pvc
      template:
        metadata:
          labels:
            app: nginx-deploy-pvc
        spec:
          containers:
          - image: nginx
            name: nginx
            volumeMounts:
            - name: html
              mountPath: /usr/share/nginx/html
          volumes:
            - name: html
              persistentVolumeClaim:
                claimName: nginx-pvc

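ConfigMap

Well suited to mounting configuration files, which are then updated automatically.

1、Redis example

    # Create the config; the redis configuration is stored in the cluster's etcd
    kubectl create cm redis-conf --from-file=redis.conf   # cm is short for configmap; it can be listed with kubectl get
    # redis.conf is an existing file; its content is copied into etcd, so the original file can be deleted

The following command prints the YAML description of the redis-conf ConfigMap:

    kubectl get cm redis-conf -oyaml

    apiVersion: v1
    data:    # data holds the actual content. key: the file name by default; value: the content of the config file
      redis.conf: |
        appendonly yes
    kind: ConfigMap
    metadata:
      name: redis-conf
      namespace: default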
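The mapping performed by `--from-file` is simple enough to model in a few lines: the key is the file's base name and the value is the file's content. The sketch below builds the equivalent of the `data` field from a temporary file; `configmap_data_from_file` is a hypothetical helper and does not talk to a cluster.

```python
import os
import tempfile


def configmap_data_from_file(path):
    """Build a ConfigMap-style data mapping: file name -> file content."""
    with open(path, encoding="utf-8") as handle:
        return {os.path.basename(path): handle.read()}


# Write a throwaway redis.conf so the example is self-contained.
with tempfile.TemporaryDirectory() as workdir:
    conf_path = os.path.join(workdir, "redis.conf")
    with open(conf_path, "w", encoding="utf-8") as handle:
        handle.write("appendonly yes\n")
    data = configmap_data_from_file(conf_path)

print(data)  # {'redis.conf': 'appendonly yes\n'}
```

Because the content now lives in `data`, the original file is no longer needed, which is why the notes say it can be deleted.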

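2、Create the Pod

    apiVersion: v1
    kind: Pod
    metadata:
      name: redis
    spec:
      containers:
      - name: redis
        image: redis
        command:
          - redis-server
          - "/redis-master/redis.conf"  # a path inside the redis container
        ports:
        - containerPort: 6379
        volumeMounts:
        - mountPath: /data
          name: data
        - mountPath: /redis-master
          name: config
      volumes:
        - name: data
          emptyDir: {}
        - name: config
          configMap:  # this used to be nfs; now it is a ConfigMap
            name: redis-conf
            items:
            - key: redis.conf
              path: redis.conf

The redis configuration inside the Pod can now be changed with kubectl edit cm redis-conf; the mounted file is updated automatically after a short delay. Redis reads its configuration file only at startup, so the running server keeps the old values until the Pod is restarted.

Use redis-cli to enter the redis command line, and check a setting with config get <option name>.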

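Secret

The Secret object type stores sensitive information such as passwords, OAuth tokens, and SSH keys. Keeping this information in a Secret is safer and more flexible than putting it in a Pod definition. A Secret works like a ConfigMap whose values are stored Base64-encoded; by default this is an encoding, not encryption.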
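Base64 is reversible by anyone who can read the value, which is why it should not be mistaken for encryption. The short sketch below shows the round trip with Python's standard `base64` module; the value `admin` is a made-up example and the helper names are hypothetical.

```python
import base64


def encode_secret_value(plaintext):
    """Encode a string the way Secret values appear under `data`."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def decode_secret_value(stored):
    """Recover the original string; no key or password is needed."""
    return base64.b64decode(stored).decode("utf-8")


stored = encode_secret_value("admin")  # hypothetical value
print(stored)                          # YWRtaW4=
print(decode_secret_value(stored))     # admin
```

Access to Secrets therefore has to be restricted by other means, such as RBAC rules on who may read them.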

    kubectl create secret docker-registry sgy-docker \
    --docker-username=sgy111222333 \
    --docker-password=sgy123sgy123 \
    --docker-email=sgy111222333@outlook.com

    ## Command format
    kubectl create secret docker-registry regcred \
    --docker-server=<your image registry server> \
    --docker-username=<your username> \
    --docker-password=<your password> \
    --docker-email=<your email address>

    apiVersion: v1
    kind: Pod
    metadata:
      name: private-nginx
    spec:
      containers:
      - image: sgy111222333/nginx_sgy:0.0.1_online
        name: nginx-sgy
      # Without the lines below, pulling the private image fails with ImagePullBackOff
      imagePullSecrets:
      - name: sgy-docker

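K8S Summary

Workloads:

Deployments: provide self-healing, failover, rolling upgrades, and similar features; underneath they are made up of individual Pods

The Pod is the smallest atomic unit in K8S; a Pod contains one or more containers

DaemonSets run one Pod on every machine

StatefulSets are stateful replica sets, suitable for deploying MySQL, Redis, and other middleware that needs to persist data

Deployments are stateless replica sets, for deploying stateless applications (which do not need to persist data)

Each Pod has its own IP

Services:

A Service selects a group of Pods by label and exposes them behind a single IP, with load balancing

Ingress sits above Services: all traffic reaches the Ingress first and then a Service; the Ingress can rate limit and rewrite URLs

Configuration and storage:

ConfigMaps: mount configuration files, with dynamic updates

PVC and PV: mount directories and claim storage space

Secrets: store keys and similar data; comparable to a ConfigMap whose values are Base64-encoded (an encoding, not encryption)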