I. Configure HDFS

1. hadoop-env.sh

```shell
# The java implementation to use. By default, this environment
# variable is REQUIRED on ALL platforms except OS X!
export JAVA_HOME=/opt/mubai/module/jdk1.8.0_261
```

2. core-site.xml

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://172.25.187.216:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/mubai/module/hadoop-3.1.4/data/tmp</value>
  </property>
  <property>
    <name>hadoop.http.staticuser.user</name>
    <value>mubai</value>
  </property>
</configuration>
```
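After editing a *-site.xml file such as core-site.xml, it can be handy to confirm which name/value pairs the file really contains (a stray tag or typo in a property name is easy to miss). The following is a minimal Python sketch using only the standard library; the function name `load_site_xml` is hypothetical, and it deliberately does not expand `${...}` references or merge in Hadoop's built-in defaults.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def load_site_xml(path: str) -> Dict[str, str]:
    """Return {name: value} for every <property> in a Hadoop *-site.xml file.

    Values are returned verbatim: ${...} references are NOT expanded and
    Hadoop's default configuration (core-default.xml etc.) is NOT merged in.
    """
    root = ET.parse(path).getroot()
    conf: Dict[str, str] = {}
    for prop in root.iter("property"):
        name = (prop.findtext("name") or "").strip()
        if name:
            # A missing <value> element is treated as an empty string.
            conf[name] = (prop.findtext("value") or "").strip()
    return conf
```

For example, `load_site_xml("etc/hadoop/core-site.xml")["fs.defaultFS"]` should return `hdfs://172.25.187.216:9000` for the file above (the relative path assumes you run it from the Hadoop installation directory).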

3. hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>mubai01:9870</value>
  </property>
</configuration>
```

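4. Format the NameNode

```shell
bin/hdfs namenode -format
```

Formatting must run to completion without interruption, and the NameNode should be formatted only once. Each format generates a new clusterID, while the DataNode keeps the clusterID it recorded when it first started; after a second format the NameNode and DataNode clusterIDs no longer match and the DataNode fails to start. If you really need to reformat, stop HDFS and delete the data directory under hadoop.tmp.dir (here /opt/mubai/module/hadoop-3.1.4/data/tmp) first.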
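To diagnose the clusterID mismatch described above, you can compare the `clusterID=` lines in the NameNode and DataNode `current/VERSION` files. Below is a minimal Python sketch, assuming `dfs.namenode.name.dir` and `dfs.datanode.data.dir` are left at their defaults (`${hadoop.tmp.dir}/dfs/name` and `${hadoop.tmp.dir}/dfs/data`); the helper names `read_cluster_id` and `cluster_ids` are hypothetical.

```python
from pathlib import Path
from typing import Optional, Tuple


def read_cluster_id(version_file: Path) -> Optional[str]:
    """Return the clusterID stored in a VERSION file, or None if unavailable."""
    if not version_file.is_file():
        return None
    for raw in version_file.read_text().splitlines():
        line = raw.strip()
        # VERSION files use Java properties syntax: key=value, '#' starts a comment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        if key.strip() == "clusterID":
            return value.strip()
    return None


def cluster_ids(tmp_dir: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (NameNode clusterID, DataNode clusterID).

    Assumption: the storage directories are the defaults under hadoop.tmp.dir,
    i.e. <tmp_dir>/dfs/name and <tmp_dir>/dfs/data.
    """
    dfs = Path(tmp_dir) / "dfs"
    return (
        read_cluster_id(dfs / "name" / "current" / "VERSION"),
        read_cluster_id(dfs / "data" / "current" / "VERSION"),
    )
```

Calling `cluster_ids("/opt/mubai/module/hadoop-3.1.4/data/tmp")` after the DataNode has started at least once should return two equal strings; if they differ, the NameNode was reformatted after the DataNode first registered. A `None` for the DataNode simply means its VERSION file has not been written yet.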

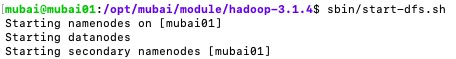

5. Start HDFS

```shell
sbin/start-dfs.sh
```

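6. Check the processes

```shell
jps
```

In pseudo-distributed mode, NameNode, DataNode and SecondaryNameNode should all be listed (along with Jps itself).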
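Reading `jps` output by eye is easy, but a small check helps when scripting the setup. This is a minimal Python sketch that parses `jps` lines of the form `<pid> <MainClass>` and reports which expected daemons are absent; the function names are hypothetical, and the default set is the three HDFS daemons listed above.

```python
from typing import Iterable, Set

HDFS_DAEMONS = {"NameNode", "DataNode", "SecondaryNameNode"}


def running_java_processes(jps_output: str) -> Set[str]:
    """Collect main-class names from `jps` output lines like '12345 NameNode'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split(maxsplit=1)
        # Keep only lines that start with a numeric PID followed by a name.
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1].strip())
    return names


def missing_daemons(jps_output: str, expected: Iterable[str] = HDFS_DAEMONS) -> Set[str]:
    """Return the expected daemons that do not appear in the jps output."""
    return set(expected) - running_java_processes(jps_output)
```

To use it on a live machine, pass in `subprocess.run(["jps"], capture_output=True, text=True).stdout`. After starting YARN and the history server, the same function can be called with `{"ResourceManager", "NodeManager", "JobHistoryServer"}` as `expected`.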

7. Check the web UI

In a browser on the local machine, open http://mubai01:9870 (9870 is the NameNode web UI port; versions before Hadoop 3.0 used 50070).

II. Configure YARN

1. Configure mapred-site.xml

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>
```

2. Configure yarn-site.xml

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
```

3. Start YARN

```shell
sbin/start-yarn.sh
```

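4. Start the history server

```shell
sbin/mr-jobhistory-daemon.sh start historyserver
```

In Hadoop 3.x this script is deprecated and prints a warning; the equivalent command is `bin/mapred --daemon start historyserver`.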

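5. Check the processes

```shell
jps
```

ResourceManager, NodeManager and JobHistoryServer should now appear alongside the HDFS daemons.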

III. Run WordCount in pseudo-distributed mode

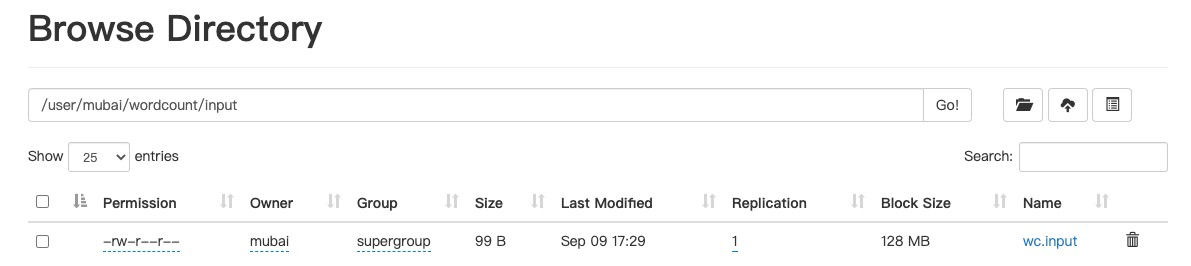

1. Prepare the directories and input file

```shell
# -p also creates missing parents such as /user, which does not exist on a freshly formatted HDFS
hadoop fs -mkdir -p /user/mubai/wordcount/input
hadoop fs -put /opt/mubai/workspace/Hadoop/wordcount/input/wc.input /user/mubai/wordcount/input
```

Contents of wc.input:

```text
hadoop hadoop
hadoop
hive hive
I am learning big data frameworks
spark spark spark
mysql is garbage
```
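Before running the job it helps to know what result to expect. The sketch below is a local approximation in Python, not Hadoop code: the example WordCount mapper splits lines on whitespace and, with the default single reducer, writes `word<TAB>count` lines sorted by key to `part-r-00000`. Python's `str.split()` treats a few extra Unicode characters as whitespace, so results can differ on exotic input; the function names are hypothetical.

```python
from collections import Counter
from typing import List


def word_count(text: str) -> Counter:
    """Count whitespace-separated tokens, approximating the example WordCount mapper."""
    return Counter(text.split())


def hadoop_style_lines(counts: Counter) -> List[str]:
    """Format as 'word<TAB>count', sorted by key as the single reducer emits them.

    Python's str ordering follows code points, which matches the UTF-8 byte
    order Hadoop uses for Text keys, so uppercase words sort before lowercase.
    """
    return [f"{word}\t{n}" for word, n in sorted(counts.items())]
```

For the wc.input above, running `hadoop_style_lines(word_count(open("/opt/mubai/workspace/Hadoop/wordcount/input/wc.input").read()))` should put `I` first and report 3 for both `hadoop` and `spark` and 2 for `hive`, which you can compare with `hadoop fs -cat /user/mubai/wordcount/output/part-r-00000`.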

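2. Run WordCount

```shell
hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.1.4.jar wordcount /user/mubai/wordcount/input/wc.input /user/mubai/wordcount/output
```

Do not create the output directory in advance: the job creates it itself and fails with a FileAlreadyExistsException if it already exists. To rerun the job, delete it first with `hadoop fs -rm -r /user/mubai/wordcount/output`.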
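Because the job refuses to write into an existing output directory, a rerun script usually removes it first. Here is a minimal Python sketch, assuming the `hadoop` command is on PATH. It relies on `hadoop fs -test -d`, which exits with 0 only when the path is an existing directory, and on `hadoop fs -rm -r`. The helper name is hypothetical, and the command runner is injectable so the logic can be tried without a cluster.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], int]


def run_command(cmd: List[str]) -> int:
    """Run a command and return its exit code (assumes `hadoop` is on PATH)."""
    return subprocess.run(cmd).returncode


def remove_hdfs_dir_if_exists(path: str, run: Runner = run_command) -> bool:
    """Delete an HDFS directory before rerunning a job; return True if it was removed."""
    # `hadoop fs -test -d PATH` exits with 0 only if PATH is an existing directory.
    if run(["hadoop", "fs", "-test", "-d", path]) != 0:
        return False
    if run(["hadoop", "fs", "-rm", "-r", path]) != 0:
        raise RuntimeError(f"could not delete {path}")
    return True
```

Calling `remove_hdfs_dir_if_exists("/user/mubai/wordcount/output")` right before the `hadoop jar ... wordcount` command makes the job safe to rerun.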