Last updated: 2022-03

0. Environment

| Host | Role | OS | IP |
|---|---|---|---|
| node1 | master | Ubuntu 20.04 (ARM64) | 192.168.50.11 |
| node2 | worker | Ubuntu 20.04 (ARM64) | 192.168.50.12 |
| node3 | worker | Ubuntu 20.04 (ARM64) | 192.168.50.13 |
| node4 | storage | Ubuntu 20.04 (ARM64) | 192.168.50.147 |

1. Disable swap and configure kernel parameters

Run on all nodes except the storage node.

```shell
sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
sudo swapoff -a
```
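By default (`failSwapOn`), kubelet 1.21 will not start while swap is on. One way to confirm that `swapoff -a` took effect is to count the active entries in /proc/swaps. This is a minimal sketch. The function name `swap_active_count` is hypothetical, and the file path is a parameter only so the logic can be tried against a sample file.

```shell
# Hypothetical helper: print how many swap areas are active.
# /proc/swaps has one header line followed by one line per active swap area.
swap_active_count() {
  local swaps_file="${1:-/proc/swaps}"
  # Skip the header and count the remaining non-empty lines.
  # grep -c prints 0 but exits 1 when nothing matches, hence "|| true".
  tail -n +2 "$swaps_file" | grep -c . || true
}

# Usage on a node (prints 0 once swap is fully disabled):
#   swap_active_count
```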

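Configure the kernel parameters using one of the two methods below. Method 1 loads the modules for the current boot only, so they are not loaded again after a reboot. Method 2 makes the module and the sysctl settings persistent.

Method 1

```shell
# Enable kernel modules
sudo modprobe overlay
sudo modprobe br_netfilter
sudo sysctl --system
```

Method 2

```shell
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF

# Load the module now as well; modules-load.d only takes effect at the next boot
sudo modprobe br_netfilter

# Add some settings to sysctl
sudo tee /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF

# Reload sysctl
sudo sysctl --system
```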
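To check that the three values above are active, each key can be read from its file under /proc/sys, with dots mapped to slashes. This is a sketch, and `check_k8s_sysctl` is a hypothetical name. The root directory is a parameter only so the check can be tried on a fake tree.

```shell
# Hypothetical helper: succeed only if all three sysctl keys are set to 1.
# Each key maps to a file under /proc/sys with dots turned into slashes,
# e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward.
check_k8s_sysctl() {
  local root="${1:-/proc/sys}" failed=0 key path value
  for key in net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-ip6tables \
             net.ipv4.ip_forward; do
    path="$root/$(printf '%s' "$key" | tr . /)"
    if [ ! -r "$path" ]; then
      # The net.bridge.* files only exist once br_netfilter is loaded
      echo "missing: $key" >&2
      failed=1
      continue
    fi
    value="$(cat "$path")"
    if [ "$value" != "1" ]; then
      echo "unexpected value: $key=$value" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Usage on a node:
#   check_k8s_sysctl && echo "sysctl OK"
```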

2. Deploy the container runtime

Run on all nodes except node4.

```shell
sudo apt-get update
# nfs-common provides the NFS client needed for the storage setup in section 10
sudo apt-get install -y apt-transport-https ca-certificates curl nfs-common
sudo apt-get install -y docker.io
```

Edit the Docker daemon settings:

```shell
sudo tee /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://cymwrg5y.mirror.aliyuncs.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
```

Restart Docker:

```shell
sudo systemctl daemon-reload
sudo systemctl restart docker
```
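dockerd will not start if daemon.json is not valid JSON. A trailing comma after the last entry is a typical cause. This sketch validates the file before restarting. It assumes `python3` is available (its standard `json.tool` module does the parsing), and `validate_daemon_json` is a hypothetical name.

```shell
# Hypothetical helper: exit non-zero if the file is not valid JSON.
validate_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}"
  python3 -m json.tool "$file" > /dev/null
}

# Usage before restarting Docker:
#   validate_daemon_json && sudo systemctl restart docker
# After the restart, Docker should report the systemd cgroup driver:
#   sudo docker info --format '{{.CgroupDriver}}'
```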

3. Add the Kubernetes repository

Run on all nodes except node4. Use either the Google repository or the Aliyun mirror.

```shell
# Google
curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list

# Aliyun
curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
sudo tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'
deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main
EOF
```
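Before pinning packages to `1.21.10-00`, it can help to confirm that the configured repository actually offers that version. `apt-cache madison <pkg>` prints one `pkg | version | source` line per available version. The sketch below parses that output from stdin, and `has_pkg_version` is a hypothetical name.

```shell
# Hypothetical helper: read `apt-cache madison <pkg>` output on stdin and
# succeed only if the wanted version appears in the second column.
has_pkg_version() {
  awk -F'|' -v v="$1" '
    { gsub(/ /, "", $2); if ($2 == v) found = 1 }
    END { exit !found }'
}

# Usage after `sudo apt-get update`:
#   apt-cache madison kubeadm | has_pkg_version 1.21.10-00 && echo "available"
```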

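4. Install kubeadm

Master node

```shell
sudo apt-get update
sudo apt-get install -y kubectl=1.21.10-00 kubeadm=1.21.10-00 kubelet=1.21.10-00
sudo apt-mark hold kubectl kubeadm kubelet
```

Worker nodes

```shell
# Worker nodes don't need kubectl
sudo apt-get update
sudo apt-get install -y kubeadm=1.21.10-00 kubelet=1.21.10-00
# Block automatic upgrades (apt upgrade skips held packages).
# To upgrade, unhold the packages first and hold them again afterwards.
sudo apt-mark hold kubeadm kubelet
```

Confirm the installed versions. Worker nodes have no kubectl, so run only `kubeadm version` there.

```shell
kubectl version --client && kubeadm version
```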

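5. Deploy the master components

The master components can be deployed in two ways: with a kubeadm init configuration file, or with command-line flags. The configuration file is the recommended approach.

Using an init configuration file

Generate the default kubeadm init configuration file, then change these fields:

- advertiseAddress: IP address of the API server
- imagePullPolicy: IfNotPresent
- name: hostname of the master
- imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
- kubernetesVersion: 1.21.10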

The default file is generated with:

```shell
sudo kubeadm config print init-defaults > init-config.yaml
```

The modified YAML file (kubeadm 1.21 uses the `kubeadm.k8s.io/v1beta2` API for both documents):

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.50.11
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  imagePullPolicy: IfNotPresent
  name: master
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.21.10
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
# Choose the value that matches Docker's actual cgroup driver.
# If Docker is configured with systemd (as in section 2), this document is not needed.
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd
# cgroupDriver: cgroupfs
```

A note on cgroupDriver: kubeadm sets the kubelet's cgroupDriver to systemd by default. If Docker uses cgroupfs while the kubelet uses systemd, the kubelet cannot start. Docker and the kubelet must therefore use the same cgroup driver.

Initialize the control plane with the configuration file:

```shell
sudo kubeadm init --config init-config.yaml
```
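If the kubelet fails to start after `kubeadm init`, a mismatched cgroup driver is worth ruling out. kubeadm writes the kubelet's configuration to /var/lib/kubelet/config.yaml. The sketch below compares its `cgroupDriver` with the `native.cgroupdriver` option in Docker's daemon.json. All function names are hypothetical. The sketch also assumes that when daemon.json sets no driver, Docker falls back to cgroupfs, which holds on cgroup v1 hosts such as a default Ubuntu 20.04 install.

```shell
# Hypothetical helper: Docker's cgroup driver as configured in daemon.json.
# Assumption: no explicit setting means cgroupfs (cgroup v1 hosts).
docker_cgroup_driver() {
  local file="${1:-/etc/docker/daemon.json}" driver
  driver="$(grep -o 'native.cgroupdriver=[a-z]*' "$file" 2>/dev/null | head -n 1 | cut -d= -f2 || true)"
  echo "${driver:-cgroupfs}"
}

# Hypothetical helper: the kubelet's cgroupDriver from the config written by kubeadm.
kubelet_cgroup_driver() {
  local file="${1:-/var/lib/kubelet/config.yaml}"
  awk '$1 == "cgroupDriver:" { print $2; exit }' "$file"
}

# Print both drivers and succeed only if they are the same.
cgroup_drivers_match() {
  local docker_drv kubelet_drv
  docker_drv="$(docker_cgroup_driver "$1")"
  kubelet_drv="$(kubelet_cgroup_driver "$2")"
  echo "docker=$docker_drv kubelet=$kubelet_drv"
  [ "$docker_drv" = "$kubelet_drv" ]
}

# Usage on the master (the kubelet config may need sudo to read):
#   cgroup_drivers_match
```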

Using command-line flags

```shell
POD_NETWORK=10.244.0.0/16   # pod CIDR, matching the Calico setup in section 7
sudo kubeadm init \
  --image-repository registry.aliyuncs.com/google_containers \
  --kubernetes-version 1.21.10 \
  --pod-network-cidr=$POD_NETWORK \
  --service-cidr=10.96.0.0/16 \
  --apiserver-advertise-address=192.168.50.11
```

Once the master components are created, set up the kubeconfig file:

```shell
# For a regular user
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

# For the root user
export KUBECONFIG=/etc/kubernetes/admin.conf
```

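6. Join the worker nodes to the cluster

On each worker node, run the join command that `kubeadm init` printed on the master:

```shell
sudo kubeadm join MASTER_IP:6443 --token TOKEN \
  --discovery-token-ca-cert-hash sha256:HASH
```

The token is valid for 24 hours. Once it expires, generate a new one on the master (admin.conf is readable only by root, hence `sudo`):

```shell
# Method 1: create a token and print the complete join command
sudo kubeadm token create --print-join-command

# Method 2
# Create a token
sudo kubeadm token create
# List the tokens and print the last one
sudo kubeadm token list | awk -F" " '{print $1}' | tail -n 1
# Get the SHA-256 hash of the CA public key
openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'
# Join from the worker node
sudo kubeadm join MASTER_IP:6443 --token TOKEN --discovery-token-ca-cert-hash sha256:HASH
```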

Getting help for kubeadm

```shell
cat kubeadm-help
```

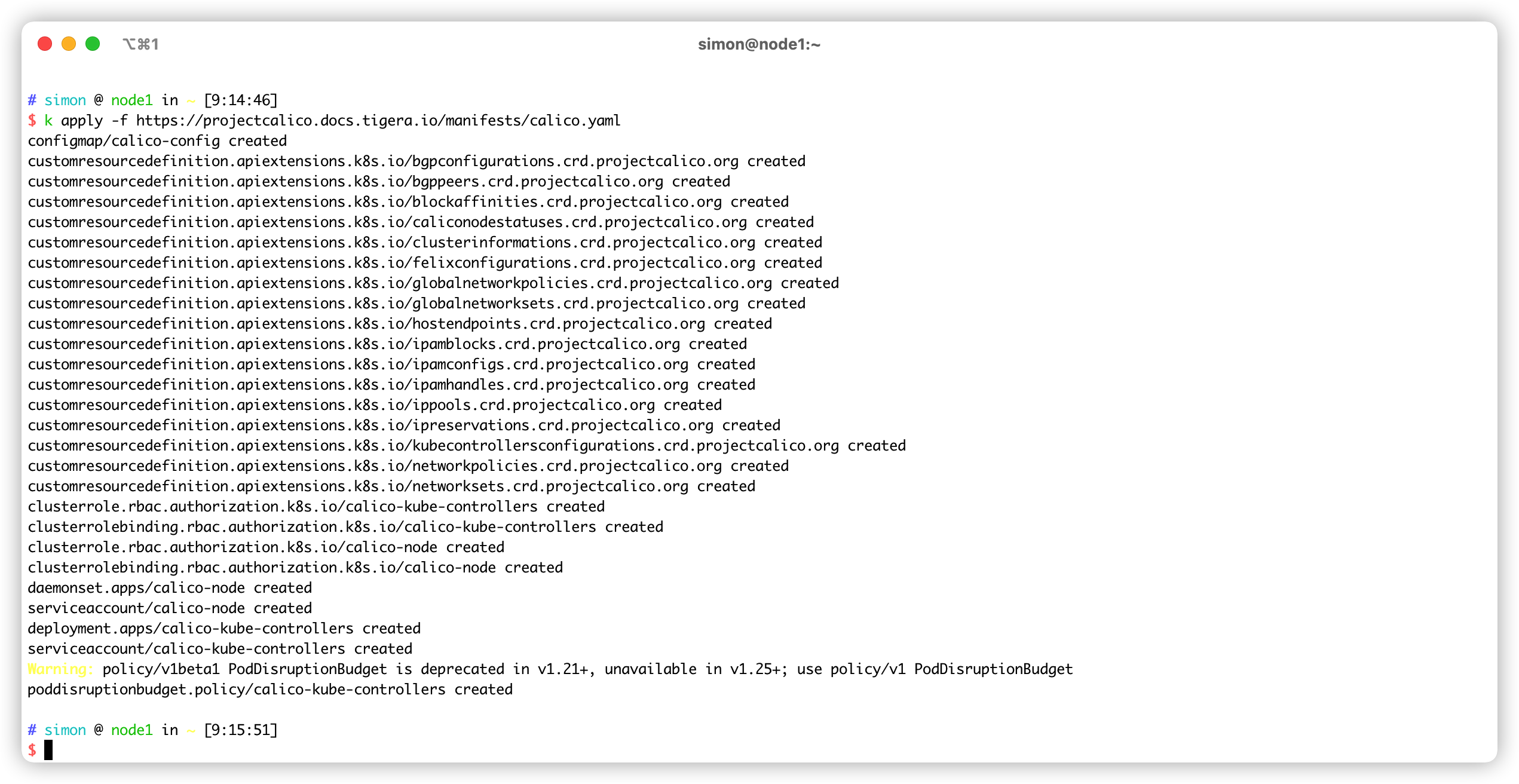

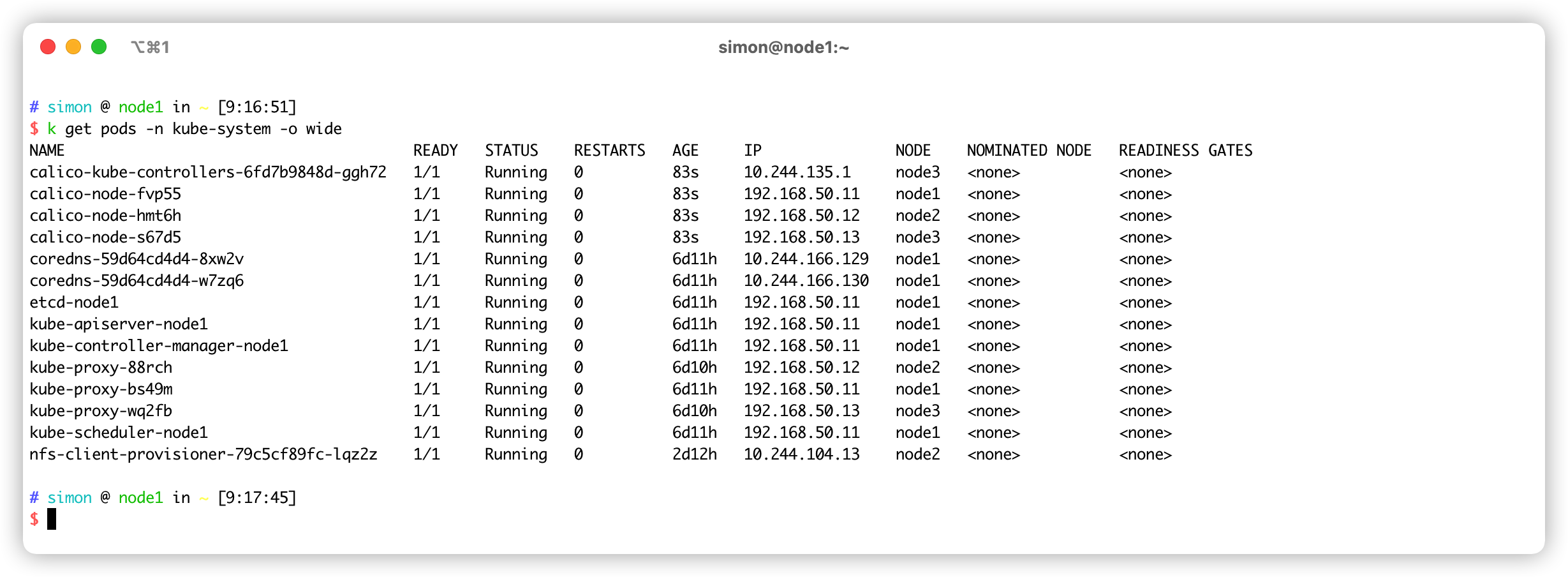

7. Deploy the network plugin

Available plugins include flannel and calico. Calico is used here.

Method 1

```shell
kubectl apply -f https://docs.projectcalico.org/v3.21/manifests/calico.yaml

# Or: install Calico with the Kubernetes API datastore, 50 nodes or less
kubectl apply -f https://projectcalico.docs.tigera.io/manifests/calico.yaml
```

Method 2

```shell
kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml
wget https://docs.projectcalico.org/manifests/custom-resources.yaml
# Change the pod network (cidr) from the default 192.168.0.0/16 to 10.244.0.0/16
sed -i 's|192.168.0.0/16|10.244.0.0/16|g' custom-resources.yaml
kubectl apply -f custom-resources.yaml
```

Once the network plugin is running, the coredns pods change from Pending to Running.
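Until the network plugin is ready, the nodes also report NotReady. The sketch below waits until every node is Ready. It filters the output of `kubectl get nodes --no-headers`, where STATUS is the second column and can read `Ready`, `NotReady`, or `Ready,SchedulingDisabled`. Both function names are hypothetical, and the 10-second poll interval is an arbitrary choice.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS is not Ready.
not_ready_nodes() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Poll every 10 seconds until all nodes are Ready (Ctrl-C to abort).
wait_nodes_ready() {
  local out pending
  while :; do
    if out="$(kubectl get nodes --no-headers 2>/dev/null)" && [ -n "$out" ]; then
      pending="$(printf '%s\n' "$out" | not_ready_nodes)"
      if [ -z "$pending" ]; then
        echo "all nodes Ready"
        return 0
      fi
      echo "waiting for: $pending"
    fi
    sleep 10
  done
}
```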

8. Script

```shell
#!/bin/bash
# Run "master" on the master node and "worker" on worker nodes (see the end of the script).
set -eux
set -o pipefail

K8S_VERSION=1.21.10
MASTER_IP=192.168.50.11
POD_NETWORK=10.244.0.0/16
PACKAGE_VERSION=$K8S_VERSION-00

# Named disable_swap so the function does not shadow the swapoff command
disable_swap(){
  sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
  sudo swapoff -a
  echo "Disable Swap successfully"
}

sysctl_config(){
  cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
br_netfilter
EOF
  # Load the module now as well; modules-load.d only takes effect at the next boot
  sudo modprobe br_netfilter
  sudo tee /etc/sysctl.d/kubernetes.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
  sudo sysctl --system
  echo "reload sysctl successfully"
}

set_timezone(){
  sudo timedatectl set-timezone Asia/Shanghai
  sudo systemctl restart rsyslog
}

config_container(){
  # Switch the Ubuntu ports (ARM64) mirror to Aliyun
  sudo cp -a /etc/apt/sources.list /etc/apt/sources.list.bak
  sudo sed -i 's|ports.ubuntu.com|mirrors.aliyun.com|g' /etc/apt/sources.list
  sudo apt update
  sudo apt-get install -y apt-transport-https ca-certificates curl nfs-common
  sudo apt -y install docker.io
  sudo tee /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://cymwrg5y.mirror.aliyuncs.com"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF
  # sudo may ask for the password here; a wrong password stops the script (set -e)
  sudo systemctl daemon-reload
  sudo systemctl restart docker
}

add_k8s_repo(){
  #curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
  #echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | sudo tee /etc/apt/sources.list.d/kubernetes.list
  curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
  sudo tee /etc/apt/sources.list.d/kubernetes.list <<-'EOF'
deb https://mirrors.aliyun.com/kubernetes/apt kubernetes-xenial main
EOF
}

install_master_package(){
  sudo apt-get update
  sudo apt-get install -y kubectl=$PACKAGE_VERSION kubeadm=$PACKAGE_VERSION kubelet=$PACKAGE_VERSION
  sudo apt-mark hold kubectl kubeadm kubelet
}

install_worker_package(){
  sudo apt-get update
  sudo apt-get install -y kubeadm=$PACKAGE_VERSION kubelet=$PACKAGE_VERSION
  sudo apt-mark hold kubeadm kubelet
}

bootstrap(){
  # kubeadm 1.21 looks for <image-repository>/coredns/coredns:v1.8.0,
  # so pull coredns:1.8.0 from the Aliyun mirror and retag it under that name
  sudo docker pull registry.aliyuncs.com/google_containers/coredns:1.8.0
  sudo docker tag \
    registry.aliyuncs.com/google_containers/coredns:1.8.0 \
    registry.aliyuncs.com/google_containers/coredns/coredns:v1.8.0
  sudo docker rmi registry.aliyuncs.com/google_containers/coredns:1.8.0
  sudo kubeadm config images pull --kubernetes-version v$K8S_VERSION --image-repository registry.aliyuncs.com/google_containers
  #sudo kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.23.1
  sudo kubeadm init \
    --image-repository registry.aliyuncs.com/google_containers \
    --kubernetes-version $K8S_VERSION \
    --pod-network-cidr=$POD_NETWORK \
    --service-cidr=10.96.0.0/16 \
    --apiserver-advertise-address=$MASTER_IP

  # Alternative with the default image repository:
  #sudo kubeadm config images pull --kubernetes-version $K8S_VERSION
  #sudo kubeadm init \
  #  --kubernetes-version $K8S_VERSION \
  #  --pod-network-cidr=$POD_NETWORK \
  #  --service-cidr=10.96.0.0/16 \
  #  --apiserver-advertise-address=$MASTER_IP
}

kubeconfig(){
  # Run the script with bash or ./, not with sudo; otherwise the file is copied to root's home directory
  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config
  #echo "export KUBECONFIG=/etc/kubernetes/admin.conf" | sudo tee -a /root/.bashrc
}

install_calico(){
  kubectl create -f https://docs.projectcalico.org/manifests/tigera-operator.yaml
  # -O overwrites the file on re-runs instead of creating custom-resources.yaml.1
  wget -O custom-resources.yaml https://docs.projectcalico.org/manifests/custom-resources.yaml
  # Replace Calico's default pod CIDR (192.168.0.0/16) with $POD_NETWORK
  sed -i "s|192.168.0.0/16|$POD_NETWORK|g" custom-resources.yaml
  kubectl apply -f custom-resources.yaml
}

master(){
  disable_swap
  set_timezone
  sysctl_config
  config_container
  add_k8s_repo
  install_master_package
  bootstrap
  kubeconfig
  install_calico
}

worker(){
  disable_swap
  set_timezone
  sysctl_config
  config_container
  add_k8s_repo
  install_worker_package
}

# Uncomment "master" on the master node; keep "worker" on worker nodes
#master
worker
```

9. Optional tweaks

zsh

```shell
sudo apt-get install zsh -y
chsh -s $(which zsh)
wget https://github.com/robbyrussell/oh-my-zsh/raw/master/tools/install.sh -O - | sh
git clone https://github.com/zsh-users/zsh-autosuggestions ${ZSH_CUSTOM:-~/.oh-my-zsh}/plugins/zsh-autosuggestions
git clone https://github.com/zsh-users/zsh-syntax-highlighting.git ${ZSH_CUSTOM:-~/.oh-my-zsh}/plugins/zsh-syntax-highlighting
sudo apt-get install -y autojump
```

Edit ~/.zshrc (for example with `vim ~/.zshrc`) and set:

```shell
ZSH_THEME="ys"
plugins=(git zsh-autosuggestions zsh-syntax-highlighting)
. /usr/share/autojump/autojump.sh
```

Reload the configuration:

```shell
source ~/.zshrc
```

Auto-completion

```shell
echo 'source <(kubectl completion zsh)' >> ~/.zshrc
echo 'compdef __start_kubectl k kc' >> ~/.zshrc
```

Aliases

```shell
echo 'alias k=kubectl' >> ~/.zshrc
echo 'alias kc="kubectl"' >> ~/.zshrc
echo 'alias ka="kubectl apply -f"' >> ~/.zshrc
echo 'alias kd="kubectl delete -f"' >> ~/.zshrc
```

10. Configure storage

NFS
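The manifests below mount `192.168.50.147:/nfs`, which means node4 has to export /nfs. The server side is not covered in these notes, so what follows is only a possible sketch. It assumes `nfs-kernel-server` on node4, clients in 192.168.50.0/24, and `no_root_squash`, since the provisioner creates its sub-directories as root. The function `add_nfs_export` is hypothetical and appends the export line only once, so re-running it is harmless.

```shell
# Hypothetical helper for node4: append an NFS export line unless it already exists.
add_nfs_export() {
  local exports_file="$1" dir="$2" clients="$3"
  local line="$dir $clients(rw,sync,no_subtree_check,no_root_squash)"
  # -x matches the whole line, -F treats the pattern as a fixed string
  if ! grep -qxF "$line" "$exports_file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$exports_file"
  fi
}

# Possible usage on node4, run as root:
#   apt-get install -y nfs-kernel-server
#   mkdir -p /nfs
#   add_nfs_export /etc/exports /nfs 192.168.50.0/24
#   exportfs -ra
```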

RBAC

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: kube-system
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: kube-system
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
```

Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  labels:
    app: nfs-client-provisioner
  # replace with namespace where provisioner is deployed
  namespace: kube-system
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: registry.cn-beijing.aliyuncs.com/kubesphereio/nfs-subdir-external-provisioner:v4.0.2
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: k8s-sigs.io/nfs-subdir-external-provisioner
            - name: NFS_SERVER
              value: 192.168.50.147
            - name: NFS_PATH
              value: /nfs
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.50.147
            path: /nfs
```

Class

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "false"
```

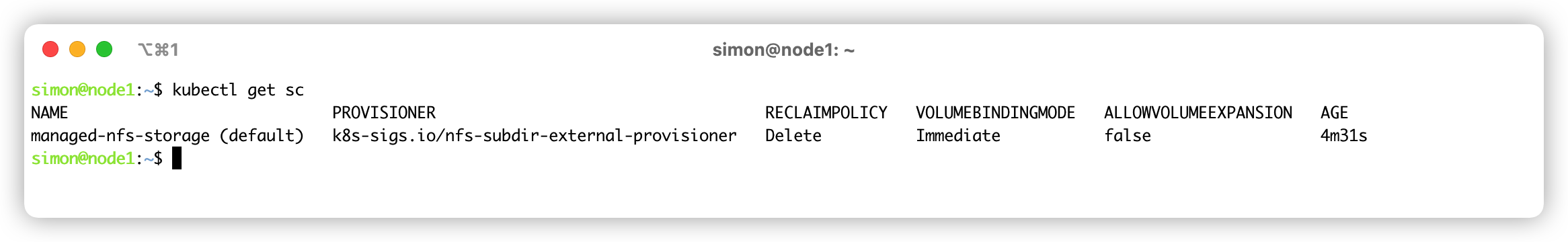

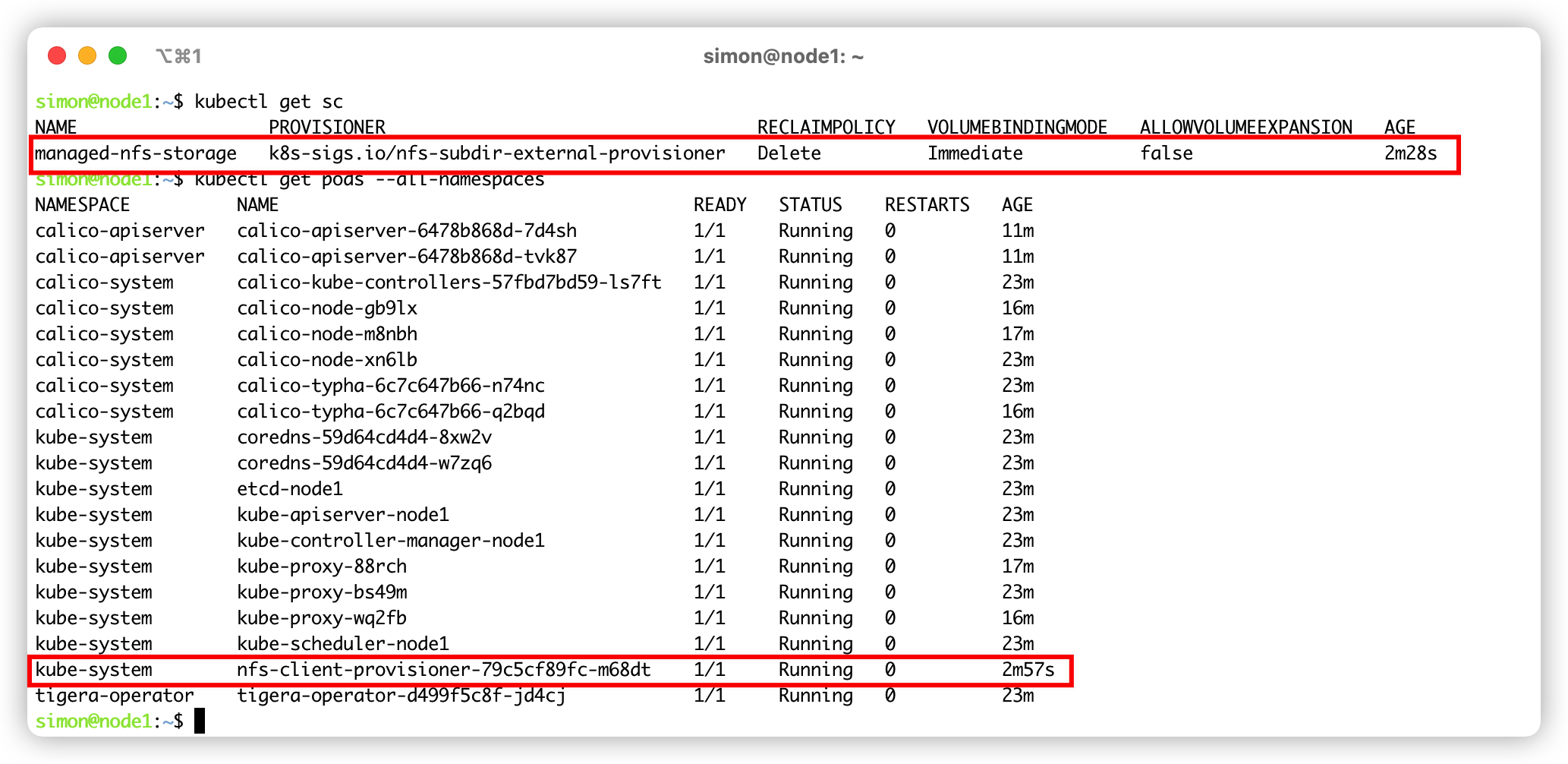

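Check

Set the default StorageClass. The class above already carries this annotation; the patch is for a class created without it.

```shell
kubectl patch storageclass managed-nfs-storage -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```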
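Ideally exactly one class is marked as default. Otherwise PVCs that omit `storageClassName` may be rejected or bound unpredictably, depending on the Kubernetes version. `kubectl get storageclass` marks the default class with `(default)` next to its name. The sketch below lists those classes from stdin, and `default_storageclasses` is a hypothetical name.

```shell
# Hypothetical helper: read `kubectl get storageclass --no-headers` output on stdin
# and print the names of the classes marked "(default)".
default_storageclasses() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage (should print exactly one name, managed-nfs-storage):
#   kubectl get storageclass --no-headers | default_storageclasses
```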

Reclaim policy: https://blog.csdn.net/zenglingmin8/article/details/121737953

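11. Manage the cluster remotely

Copy the kubeconfig file from the master node to ~/.kube/config in the local user's home directory. Then change its server entry to `server: https://kubernetes.default.svc:6443`.

The API server certificate generated by kubeadm includes the following names, so any of them can be used: kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local. A public IP mapped to the cluster is usually not in the certificate, so connecting through it directly fails TLS verification.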
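The server entry can also be edited with a script. The sketch below rewrites the value of every `server:` line and keeps the indentation. It writes the result back through the existing file, so the file's permissions are preserved, and it avoids `sed -i` because that option behaves differently on macOS. `set_kube_server` is a hypothetical name. A kubeconfig that lists several clusters has several `server:` lines, and all of them are rewritten.

```shell
# Hypothetical helper: set the value of every "server:" line in a kubeconfig.
set_kube_server() {
  local file="$1" url="$2" tmp
  tmp="$(mktemp)"
  # \1 keeps the original indentation and key; only the value is replaced
  sed "s|^\([[:space:]]*server:\).*|\1 ${url}|" "$file" > "$tmp"
  # Write back into the existing file so its permissions (e.g. 600) are kept
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage on the local machine:
#   set_kube_server ~/.kube/config https://kubernetes.default.svc:6443
```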

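Adjust the kubeconfig file permissions:

```shell
chmod 600 $HOME/.kube/config
```

Adjust name resolution

Resolve kubernetes.default.svc to the public IP address mapped to the cluster, for example in /etc/hosts:

```shell
cat /etc/hosts
X.X.X.X kubernetes.default.svc
```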

12. Kuboard

```shell
curl -o kuboard-v3.yaml https://addons.kuboard.cn/kuboard/kuboard-v3-storage-class.yaml
```

Change the node IP and the StorageClass name in the file, then apply it:

```shell
kubectl apply -f kuboard-v3.yaml
```

13. References

- https://k8s.iswbm.com/c01/p01_depoly-kubernetes-cluster-with-kubelet.html
- https://www.cnblogs.com/cosmos-wong/p/15709412.html
- https://kubernetes.io/zh/docs/tasks/tools/included/optional-kubectl-configs-zsh/
- https://computingforgeeks.com/deploy-and-use-openebs-container-storage-on-kubernetes/