k8s monitoring in practice: deploying Prometheus

Contents

- 1 Prometheus background
- 1.1 Features of Prometheus
- 1.2 How it works
- 1.2.1 Principle

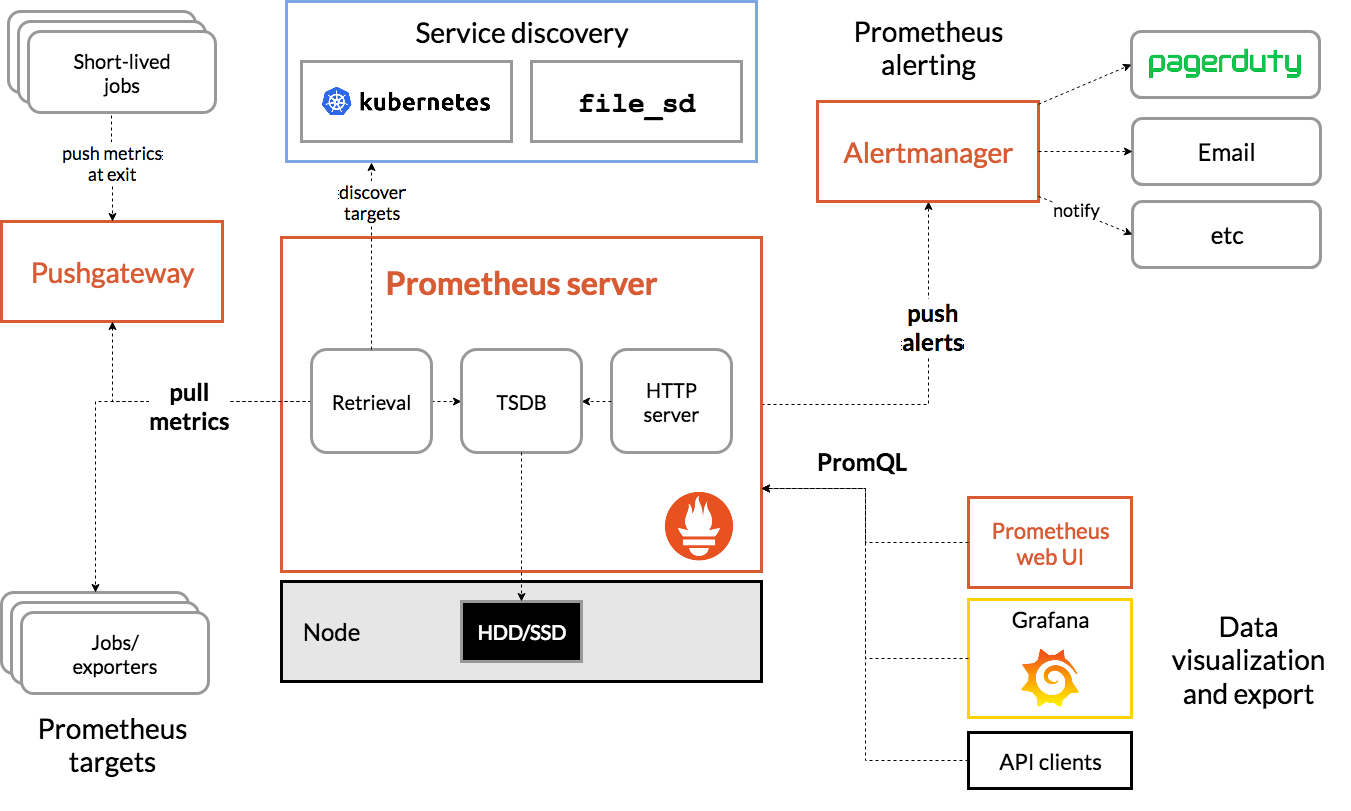

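- 1.2.2 Architecture diagram
- 1.2.3 The three main components
- 1.2.4 Workflow
- 1.2.5 Commonly used exporters
- 2 Deploying the four exporters
- 2.1 Deploying kube-state-metrics
- 2.1.1 Prepare the Docker image
- 2.1.2 Prepare the RBAC manifest
- 2.1.3 Prepare the Deployment manifest
- 2.1.4 Apply the manifests
- 2.2 Deploying node-exporter
- 2.2.1 Prepare the Docker image
- 2.2.2 Prepare the DaemonSet manifest
- 2.2.3 Apply the manifest
- 2.3 Deploying cadvisor
- 2.3.1 Prepare the Docker image
- 2.3.2 Prepare the DaemonSet manifest
- 2.3.3 Apply the manifest
- 2.4 Deploying blackbox-exporter
- 2.4.1 Prepare the Docker image
- 2.4.2 Prepare the ConfigMap manifest
- 2.4.3 Prepare the Deployment manifest
- 2.4.4 Prepare the Service manifest
- 2.4.5 Prepare the Ingress manifest
- 2.4.6 Add the DNS record
- 2.4.7 Apply the manifests
- 2.4.8 Test access via the domain name
- 3 Deploying the Prometheus server
- 3.1 Preparing the Prometheus server environment
- 3.1.1 Prepare the Docker image
- 3.1.2 Prepare the RBAC manifest
- 3.1.3 Prepare the Deployment manifest
- 3.1.4 Prepare the Service manifest
- 3.1.5 Prepare the Ingress manifest
- 3.1.6 Add the DNS record
- 3.2 Deploying the Prometheus server
- 3.2.1 Prepare directories and certificates
- 3.2.2 Create the Prometheus configuration file
- 3.2.3 Apply the manifests
- 3.2.4 Verify in the browser
- 4 Letting Prometheus monitor services automatically
- 4.1 Monitoring traefik automatically
- 4.1.1 Modify the traefik YAML
- 4.1.2 Apply and check
- 4.2 Checking TCP/HTTP service status with blackbox
- 4.2.1 Prepare the services to probe
- 4.2.2 Add the TCP annotation
- 4.2.3 Add the HTTP annotation
- 4.3 Adding JVM monitoring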

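1 Prometheus background

Because of the way Docker containers work, a traditional tool such as Zabbix cannot monitor the state of the containers inside a k8s cluster, so Prometheus is used instead.

Prometheus official website: https://prometheus.io

1.1 Features of Prometheus

- A multi-dimensional data model, stored in a time-series database (TSDB) rather than in MySQL.
- A flexible query language, PromQL.
- No dependency on distributed storage; each server node is autonomous.
- Time-series data is collected mainly by pulling over HTTP.
- Data that clients push to a gateway can also be collected through pushgateway.
- Targets are found through service discovery or static configuration.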

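1.2 How it works

1.2.1 Principle

Prometheus works by periodically scraping the state of monitored components through the HTTP endpoints that the various exporters provide. Any component can be monitored as long as it exposes a suitable HTTP endpoint.

No SDK or other integration work is required, which makes it a good fit for monitoring virtualized environments such as VMs, Docker and Kubernetes.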
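The pull model described above can be sketched in shell. This is only an illustration: the helper name `metric_value` and the sample data are made up, and the sketch assumes the exporter serves the Prometheus text exposition format with no spaces inside label values and no trailing timestamps.

```bash
#!/usr/bin/env bash
# Print the value of one metric from Prometheus text-format input on stdin.
# Assumption: label values contain no spaces and samples carry no timestamp.
metric_value() {
  local name="$1"
  awk -v m="$name" '
    /^#/ { next }               # skip HELP/TYPE comment lines
    {
      key = $1
      sub(/[{].*/, "", key)     # strip the label set, if present
      if (key == m) print $NF   # the sample value is the last field
    }'
}

# Hand-written sample standing in for a real scrape (illustrative values).
sample='# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.42
node_cpu{cpu="cpu0",mode="idle"} 1234.5'

printf '%s\n' "$sample" | metric_value node_load1
```

Against a live exporter the same helper could be fed from `curl`, for example `curl -s http://<node-ip>:9100/metrics | metric_value node_load1`, where the address is a placeholder for one of your own nodes.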

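Most components commonly used by internet companies, such as Nginx, MySQL and Linux system information, already have ready-made exporters.

1.2.2 Architecture diagram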

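1.2.3 The three main components

- Server: collects and stores the data and provides the PromQL query language.
- Alertmanager: the alert manager, used for sending alerts.
- Push Gateway: an intermediate gateway to which short-lived jobs can push their metrics.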

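1.2.4 Workflow

- The Prometheus daemon periodically scrapes metrics from its targets. Every target must expose an HTTP endpoint for it to scrape. Targets can be specified through the configuration file, text files, Zookeeper, DNS SRV lookup and similar mechanisms.
- PushGateway lets clients push their metrics to it, while Prometheus simply scrapes the gateway on schedule. This suits one-off, short-lived jobs.
- Prometheus stores all scraped data in its TSDB. It cleans up and aggregates the data according to rules and stores the results as new time series.
- Prometheus exposes the collected data for visualization through PromQL and other APIs. Charts can be drawn with Grafana, Promdash and similar tools. Prometheus also offers an HTTP query API, so the output can be customized.
- Alertmanager is an alerting component separate from Prometheus. It supports Prometheus query expressions and offers very flexible alerting.

1.2.5 Commonly used exporters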

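Unlike Zabbix, Prometheus has no agent; it relies on exporters written for individual services.

To monitor a k8s cluster together with its nodes and pods, four exporters are normally used:

- kube-state-metrics: collects basic state information about the k8s cluster, such as the masters and etcd.
- node-exporter: collects information about the k8s nodes.
- cadvisor: collects resource usage inside the Docker containers of the cluster.
- blackbox-exporter: checks whether the services running in the cluster's Docker containers are alive.

2 Deploying the four exporters

The usual routine: pull the Docker image, prepare the manifests, apply the manifests.

2.1 Deploying kube-state-metrics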

2.1.1 Prepare the Docker image

```bash
docker pull quay.io/coreos/kube-state-metrics:v1.5.0
docker tag 91599517197a harbor.zq.com/public/kube-state-metrics:v1.5.0
docker push harbor.zq.com/public/kube-state-metrics:v1.5.0
```

Prepare the directory:

```bash
mkdir /data/k8s-yaml/kube-state-metrics
cd /data/k8s-yaml/kube-state-metrics
```

2.1.2 Prepare the RBAC manifest

```bash
cat >rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
rules:
- apiGroups:
  - ""
  resources:
  - configmaps
  - secrets
  - nodes
  - pods
  - services
  - resourcequotas
  - replicationcontrollers
  - limitranges
  - persistentvolumeclaims
  - persistentvolumes
  - namespaces
  - endpoints
  verbs:
  - list
  - watch
- apiGroups:
  - policy
  resources:
  - poddisruptionbudgets
  verbs:
  - list
  - watch
- apiGroups:
  - extensions
  resources:
  - daemonsets
  - deployments
  - replicasets
  verbs:
  - list
  - watch
- apiGroups:
  - apps
  resources:
  - statefulsets
  verbs:
  - list
  - watch
- apiGroups:
  - batch
  resources:
  - cronjobs
  - jobs
  verbs:
  - list
  - watch
- apiGroups:
  - autoscaling
  resources:
  - horizontalpodautoscalers
  verbs:
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: kube-state-metrics
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: kube-state-metrics
subjects:
- kind: ServiceAccount
  name: kube-state-metrics
  namespace: kube-system
EOF
```

#### 2.1.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "2"
  labels:
    grafanak8sapp: "true"
    app: kube-state-metrics
  name: kube-state-metrics
  namespace: kube-system
spec:
  selector:
    matchLabels:
      grafanak8sapp: "true"
      app: kube-state-metrics
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        grafanak8sapp: "true"
        app: kube-state-metrics
    spec:
      containers:
      - name: kube-state-metrics
        image: harbor.zq.com/public/kube-state-metrics:v1.5.0
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http-metrics
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /healthz
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 5
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 5
      serviceAccountName: kube-state-metrics
EOF
```

#### 2.1.4 Apply the manifests

Run on any node:

```bash
kubectl apply -f http://k8s-yaml.zq.com/kube-state-metrics/rbac.yaml
kubectl apply -f http://k8s-yaml.zq.com/kube-state-metrics/dp.yaml
```

Creation fails with `no matches for kind "Deployment" in version "extensions/v1beta1"`:

```bash
kubectl create -f rbac.yaml
kubectl create -f dp.yaml
error: unable to recognize "dp.yaml": no matches for kind "Deployment" in version "extensions/v1beta1"
```

Fix: edit the YAML file and change

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
```

to

```yaml
apiVersion: apps/v1
kind: Deployment
```

The cause is the Kubernetes version upgrade. My k8s version is 1.18.5, and in this version Deployment is no longer served from extensions/v1beta1:

> DaemonSet, Deployment, StatefulSet, and ReplicaSet resources will no longer be served from extensions/v1beta1, apps/v1beta1, or apps/v1beta2 by default in v1.16.
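As a rough sketch of the fix above, the `apiVersion` line can be rewritten with `sed` instead of by hand. The function name `migrate_api_version` is hypothetical, and the sketch assumes the file holds only workload kinds (Deployment, DaemonSet, StatefulSet, ReplicaSet); it should not be run on Ingress manifests, which move to a different API group. `apps/v1` also requires `spec.selector`, which the manifests in this article already set.

```bash
#!/usr/bin/env bash
# Rewrite removed workload API versions to apps/v1 (stdin -> stdout).
# Only the apiVersion line is touched; everything else passes through.
migrate_api_version() {
  sed -E 's#^apiVersion:[[:space:]]*(extensions/v1beta1|apps/v1beta[12])[[:space:]]*$#apiVersion: apps/v1#'
}

# Demonstration on a two-line excerpt of a manifest.
printf 'apiVersion: extensions/v1beta1\nkind: Deployment\n' | migrate_api_version
```

A whole file could be converted with `migrate_api_version < dp.yaml > dp.apps-v1.yaml`; running `kubectl api-versions` on the cluster shows which API groups it actually serves.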

Reference note: [https://www.yuque.com/r/note/8407f01c-eaa3-40e6-a1f0-0272130aded6](https://www.yuque.com/r/note/8407f01c-eaa3-40e6-a1f0-0272130aded6)

**Verification**

```bash
kubectl get pod -n kube-system -o wide | grep kube-state-metrics
curl http://172.7.21.4:8080/healthz
ok
```

A response of `ok` means the service is running.

### 2.2 Deploying node-exporter

**node-exporter monitors the nodes themselves, so one instance has to run on every node; a DaemonSet controller is therefore used.**

#### 2.2.1 Prepare the Docker image

```bash
docker pull prom/node-exporter:v0.15.0
docker tag 12d51ffa2b22 harbor.zq.com/public/node-exporter:v0.15.0
docker push harbor.zq.com/public/node-exporter:v0.15.0
```

Prepare the directory:

```bash
mkdir /data/k8s-yaml/node-exporter
cd /data/k8s-yaml/node-exporter
```

#### 2.2.2 Prepare the DaemonSet manifest

```bash
cat >ds.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: kube-system
  labels:
    daemon: "node-exporter"
    grafanak8sapp: "true"
spec:
  selector:
    matchLabels:
      daemon: "node-exporter"
      grafanak8sapp: "true"
  template:
    metadata:
      name: node-exporter
      labels:
        daemon: "node-exporter"
        grafanak8sapp: "true"
    spec:
      volumes:
      - name: proc
        hostPath:
          path: /proc
          type: ""
      - name: sys
        hostPath:
          path: /sys
          type: ""
      containers:
      - name: node-exporter
        image: harbor.zq.com/public/node-exporter:v0.15.0
        imagePullPolicy: IfNotPresent
        args:
        - --path.procfs=/host_proc
        - --path.sysfs=/host_sys
        ports:
        - name: node-exporter
          hostPort: 9100
          containerPort: 9100
          protocol: TCP
        volumeMounts:
        - name: sys
          readOnly: true
          mountPath: /host_sys
        - name: proc
          readOnly: true
          mountPath: /host_proc
      hostNetwork: true
EOF
```

> The main point is to mount the host's `/proc` and `/sys` directories into the container, so that the container can read information about the node it runs on.

#### 2.2.3 Apply the manifest

Run on any node:

```bash
kubectl apply -f http://k8s-yaml.zq.com/node-exporter/ds.yaml
kubectl get pod -n kube-system -o wide | grep node-exporter
```

On Kubernetes 1.16 and later, `kubectl create -f ds.yaml` fails with the same kind of error as in 2.1.4: the kind is not recognized in version `extensions/v1beta1`. The fix is the same: change `apiVersion: extensions/v1beta1` to `apiVersion: apps/v1` in the YAML file. My k8s version is 1.18.5, which no longer serves these resources from extensions/v1beta1:

> DaemonSet, Deployment, StatefulSet, and ReplicaSet resources will no longer be served from extensions/v1beta1, apps/v1beta1, or apps/v1beta2 by default in v1.16.

Reference note: https://www.yuque.com/r/note/8407f01c-eaa3-40e6-a1f0-0272130aded6

2.3 Deploying cadvisor

2.3.1 Prepare the Docker image

```bash
docker pull google/cadvisor:v0.28.3
docker tag 75f88e3ec333 harbor.zq.com/public/cadvisor:v0.28.3
docker push harbor.zq.com/public/cadvisor:v0.28.3
```

Prepare the directory:

```bash
mkdir /data/k8s-yaml/cadvisor
cd /data/k8s-yaml/cadvisor
```

2.3.2 Prepare the DaemonSet manifest

cadvisor has to collect pod information on every node, so it also runs as a DaemonSet.

```bash
cat >ds.yaml <<'EOF'
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: cadvisor
  namespace: kube-system
  labels:
    app: cadvisor
spec:
  selector:
    matchLabels:
      name: cadvisor
  template:
    metadata:
      labels:
        name: cadvisor
    spec:
      hostNetwork: true
      #------ tolerations work together with node taints to control pod scheduling ----
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      #-------------------------------------
      containers:
      - name: cadvisor
        image: harbor.zq.com/public/cadvisor:v0.28.3
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: rootfs
          mountPath: /rootfs
          readOnly: true
        - name: var-run
          mountPath: /var/run
        - name: sys
          mountPath: /sys
          readOnly: true
        - name: docker
          mountPath: /var/lib/docker
          readOnly: true
        ports:
        - name: http
          containerPort: 4194
          protocol: TCP
        readinessProbe:
          tcpSocket:
            port: 4194
          initialDelaySeconds: 5
          periodSeconds: 10
        args:
        - --housekeeping_interval=10s
        - --port=4194
      terminationGracePeriodSeconds: 30
      volumes:
      - name: rootfs
        hostPath:
          path: /
      - name: var-run
        hostPath:
          path: /var/run
      - name: sys
        hostPath:
          path: /sys
      - name: docker
        hostPath:
          path: /data/docker
EOF
```

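2.3.3 Apply the manifest

Before applying the manifest, create the following symlink on every node; otherwise the service may report errors:

```bash
mount -o remount,rw /sys/fs/cgroup/
ln -s /sys/fs/cgroup/cpu,cpuacct /sys/fs/cgroup/cpuacct,cpu
```

Apply the manifest:

```bash
kubectl apply -f http://k8s-yaml.zq.com/cadvisor/ds.yaml
```

Check:

```bash
kubectl -n kube-system get pod -o wide | grep cadvisor
```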

2.4 Deploying blackbox-exporter

2.4.1 Prepare the Docker image

```bash
docker pull prom/blackbox-exporter:v0.15.1
docker tag 81b70b6158be harbor.zq.com/public/blackbox-exporter:v0.15.1
docker push harbor.zq.com/public/blackbox-exporter:v0.15.1
```

Prepare the directory:

```bash
mkdir /data/k8s-yaml/blackbox-exporter
cd /data/k8s-yaml/blackbox-exporter
```

2.4.2 Prepare the ConfigMap manifest

```bash
cat >cm.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  labels:
    app: blackbox-exporter
  name: blackbox-exporter
  namespace: kube-system
data:
  blackbox.yml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 2s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2"]
          valid_status_codes: [200,301,302]
          method: GET
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 2s
EOF
```

2.4.3 Prepare the Deployment manifest

```bash
cat >dp.yaml <<'EOF'
kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: blackbox-exporter
  namespace: kube-system
  labels:
    app: blackbox-exporter
  annotations:
    deployment.kubernetes.io/revision: 1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: blackbox-exporter
  template:
    metadata:
      labels:
        app: blackbox-exporter
    spec:
      volumes:
      - name: config
        configMap:
          name: blackbox-exporter
          defaultMode: 420
      containers:
      - name: blackbox-exporter
        image: harbor.zq.com/public/blackbox-exporter:v0.15.1
        imagePullPolicy: IfNotPresent
        args:
        - --config.file=/etc/blackbox_exporter/blackbox.yml
        - --log.level=info
        - --web.listen-address=:9115
        ports:
        - name: blackbox-port
          containerPort: 9115
          protocol: TCP
        resources:
          limits:
            cpu: 200m
            memory: 256Mi
          requests:
            cpu: 100m
            memory: 50Mi
        volumeMounts:
        - name: config
          mountPath: /etc/blackbox_exporter
        readinessProbe:
          tcpSocket:
            port: 9115
          initialDelaySeconds: 5
          timeoutSeconds: 5
          periodSeconds: 10
          successThreshold: 1
          failureThreshold: 3
EOF
```

If creating dp.yaml fails with the following error:

error: unable to decode "dp.yaml": resource.metadataOnlyObject.ObjectMeta: v1.ObjectMeta.Labels: Annotations: ReadString: expects " or n, but found 1, error found in #10 byte of ...|evision":1},"labels"|..., bigger context ...|nnotations":{"deployment.kubernetes.io/revision":1},"labels":{"app":"blackbox-exporter"},"name":"bla|...

the cause is that annotation values must be strings, and the unquoted `1` is parsed as a number.

Before:

```yaml
  annotations:
    deployment.kubernetes.io/revision: 1
```

After (quote the value):

```yaml
  annotations:
    deployment.kubernetes.io/revision: "1"
```

Reference note: https://www.yuque.com/r/note/27d36e88-0a01-4177-aae5-f2a478777ca3

2.4.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115
EOF
```

If no domain name is available, the port can be exposed with a NodePort Service instead:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  selector:
    app: blackbox-exporter
  type: NodePort
  ports:
  - name: blackbox-port
    protocol: TCP
    port: 9115
    targetPort: 9115
```

2.4.5 Prepare the Ingress manifest

```bash
cat >ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: blackbox-exporter
  namespace: kube-system
spec:
  rules:
  - host: blackbox.zq.com
    http:
      paths:
      - path: /
        backend:
          serviceName: blackbox-exporter
          servicePort: blackbox-port
EOF
```

2.4.6 Add the DNS record

A domain name is used here, so add a record for it:

```bash
vi /var/named/zq.com.zone
# add this record to the zone file:
blackbox           A    10.4.7.10

systemctl restart named
```

2.4.7 应用资源配置清单

kubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/cm.yamlkubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/dp.yamlkubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/svc.yamlkubectl apply -f http://k8s-yaml.zq.com/blackbox-exporter/ingress.yaml

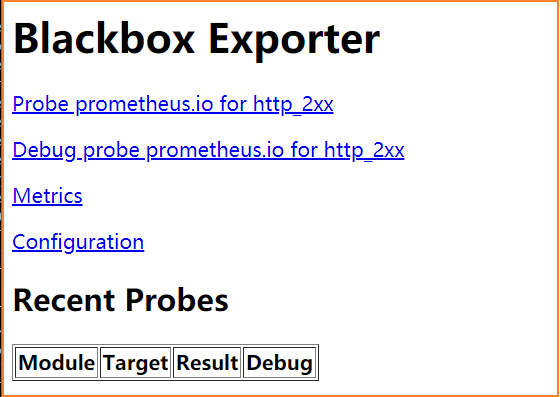

2.4.8 访问域名测试

访问http://blackbox.zq.com,显示如下界面,表示blackbox已经运行成

3 Deploying the Prometheus server

3.1 Preparing the Prometheus server environment

3.1.1 Prepare the Docker image

```bash
docker pull prom/prometheus:v2.14.0
docker tag 7317640d555e harbor.zq.com/infra/prometheus:v2.14.0
docker push harbor.zq.com/infra/prometheus:v2.14.0
```

Prepare the directory:

```bash
mkdir /data/k8s-yaml/prometheus-server
cd /data/k8s-yaml/prometheus-server
```

3.1.2 Prepare the RBAC manifest

```bash
cat >rbac.yaml <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
  namespace: infra
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - nodes/metrics
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/cluster-service: "true"
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: infra
EOF
```

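3.1.3 Prepare the Deployment manifest

The manifest adds the following startup flags:

- `--web.enable-lifecycle`: enables remote hot reloading of the configuration file, so Prometheus does not have to be restarted after the file changes. The call is `curl -X POST http://localhost:9090/-/reload`.
- `--storage.tsdb.min-block-duration=10m`: only 10 minutes of data are loaded into memory.
- `--storage.tsdb.retention=72h`: 72 hours of data are retained.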
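The hot reload mentioned above can be wrapped in two small helpers, sketched here under some assumptions: the function names are made up, `PROM_URL` defaults to the ingress host used in this article, the pod name has to be supplied by the caller, and `promtool` is assumed to be available at `/bin/promtool` inside the Prometheus image.

```bash
#!/usr/bin/env bash
# Base URL of the Prometheus server; override via the environment if needed.
PROM_URL="${PROM_URL:-http://prometheus.zq.com}"

# Validate the configuration inside the running pod before reloading.
# $1 = name of the Prometheus pod in the infra namespace
check_config() {
  kubectl -n infra exec "$1" -- /bin/promtool check config /data/etc/prometheus.yml
}

# Ask Prometheus to re-read its configuration file.
# Only works when the server runs with --web.enable-lifecycle.
reload_prometheus() {
  curl -fsS -X POST "${PROM_URL}/-/reload"
}
```

A typical sequence after editing prometheus.yml would be `check_config <pod-name> && reload_prometheus`, so that a broken file is caught before the reload is requested.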

```bash
cat >dp.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  annotations:
    deployment.kubernetes.io/revision: "5"
  labels:
    name: prometheus
  name: prometheus
  namespace: infra
spec:
  progressDeadlineSeconds: 600
  replicas: 1
  revisionHistoryLimit: 7
  selector:
    matchLabels:
      app: prometheus
  strategy:
    rollingUpdate:
      maxSurge: 1
      maxUnavailable: 1
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      containers:
      - name: prometheus
        image: harbor.zq.com/infra/prometheus:v2.14.0
        imagePullPolicy: IfNotPresent
        command:
        - /bin/prometheus
        args:
        - --config.file=/data/etc/prometheus.yml
        - --storage.tsdb.path=/data/prom-db
        - --storage.tsdb.min-block-duration=10m
        - --storage.tsdb.retention=72h
        - --web.enable-lifecycle
        ports:
        - containerPort: 9090
          protocol: TCP
        volumeMounts:
        - mountPath: /data
          name: data
        resources:
          requests:
            cpu: "1000m"
            memory: "1.5Gi"
          limits:
            cpu: "2000m"
            memory: "3Gi"
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
      serviceAccountName: prometheus
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/prometheus
EOF
```

If NFS is not available, a hostPath volume can be used instead. Change the volume definition to:

```yaml
      - name: data
        hostPath:
          path: /data/nfs-volume/prometheus
          type: Directory
```

3.1.4 Prepare the Service manifest

```bash
cat >svc.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
  selector:
    app: prometheus
EOF
```

If no domain name is available, a NodePort Service can be used instead:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: infra
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
  - port: 9090
    protocol: TCP
    targetPort: 9090
    nodePort: 32133
```

3.1.5 Prepare the Ingress manifest

```bash
cat >ingress.yaml <<'EOF'
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  annotations:
    kubernetes.io/ingress.class: traefik
  name: prometheus
  namespace: infra
spec:
  rules:
  - host: prometheus.zq.com
    http:
      paths:
      - path: /
        backend:
          serviceName: prometheus
          servicePort: 9090
EOF
```

3.1.6 Add the DNS record

The domain prometheus.zq.com is used here, so add a record for it:

```bash
vi /var/named/zq.com.zone
# add this record to the zone file:
prometheus         A    10.4.7.10

systemctl restart named
```

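3.2 Deploying the Prometheus server

3.2.1 Prepare directories and certificates

The Deployment mounts `/data/nfs-volume/prometheus` at `/data` and reads its configuration and certificates from `/data/etc`, so both go into the `etc` subdirectory of the shared volume.

```bash
mkdir -p /data/nfs-volume/prometheus/etc
mkdir -p /data/nfs-volume/prometheus/prom-db
cd /data/nfs-volume/prometheus/etc/
# copy the certificates referenced by the configuration file:
cp /opt/certs/ca.pem ./
cp /opt/certs/client.pem ./
cp /opt/certs/client-key.pem ./
```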

3.2.2 Create the Prometheus configuration file

About this configuration: it is a general-purpose configuration. Apart from the first job, etcd, which is configured statically, the other 8 jobs all use service discovery, so after adjusting the etcd targets it can be used directly in production.

```bash
mkdir -p /data/nfs-volume/prometheus/etc
cat >/data/nfs-volume/prometheus/etc/prometheus.yml <<'EOF'
global:
  scrape_interval: 15s
  evaluation_interval: 15s
scrape_configs:
- job_name: 'etcd'
  tls_config:
    ca_file: /data/etc/ca.pem
    cert_file: /data/etc/client.pem
    key_file: /data/etc/client-key.pem
  scheme: https
  static_configs:
  - targets:
    - '10.4.7.12:2379'
    - '10.4.7.21:2379'
    - '10.4.7.22:2379'
- job_name: 'kubernetes-apiservers'
  kubernetes_sd_configs:
  - role: endpoints
  scheme: https
  tls_config:
    ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
  bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
  relabel_configs:
  - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
    action: keep
    regex: default;kubernetes;https
- job_name: 'kubernetes-pods'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
    action: keep
    regex: true
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'kubernetes-kubelet'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:10255
- job_name: 'kubernetes-cadvisor'
  kubernetes_sd_configs:
  - role: node
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_node_label_(.+)
  - source_labels: [__meta_kubernetes_node_name]
    regex: (.+)
    target_label: __address__
    replacement: ${1}:4194
- job_name: 'kubernetes-kube-state'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
  - source_labels: [__meta_kubernetes_pod_label_grafanak8sapp]
    regex: .*true.*
    action: keep
  - source_labels: ['__meta_kubernetes_pod_label_daemon', '__meta_kubernetes_pod_node_name']
    regex: 'node-exporter;(.*)'
    action: replace
    target_label: nodename
- job_name: 'blackbox_http_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [http_2xx]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: http
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port, __meta_kubernetes_pod_annotation_blackbox_path]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+);(.+)
    replacement: $1:$2$3
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'blackbox_tcp_pod_probe'
  metrics_path: /probe
  kubernetes_sd_configs:
  - role: pod
  params:
    module: [tcp_connect]
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_blackbox_scheme]
    action: keep
    regex: tcp
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_blackbox_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __param_target
  - action: replace
    target_label: __address__
    replacement: blackbox-exporter.kube-system:9115
  - source_labels: [__param_target]
    target_label: instance
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
- job_name: 'traefik'
  kubernetes_sd_configs:
  - role: pod
  relabel_configs:
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scheme]
    action: keep
    regex: traefik
  - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
    action: replace
    target_label: __metrics_path__
    regex: (.+)
  - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
    action: replace
    regex: ([^:]+)(?::\d+)?;(\d+)
    replacement: $1:$2
    target_label: __address__
  - action: labelmap
    regex: __meta_kubernetes_pod_label_(.+)
  - source_labels: [__meta_kubernetes_namespace]
    action: replace
    target_label: kubernetes_namespace
  - source_labels: [__meta_kubernetes_pod_name]
    action: replace
    target_label: kubernetes_pod_name
EOF
```
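The address rewriting done by the `relabel_configs` entries can be tried out in isolation. The sketch below only emulates the matching case with `sed`: Prometheus joins the `source_labels` values with `;` and anchors the regex, and since `sed -E` has no non-capturing groups the group numbers are shifted. The function names and the pod address are hypothetical; when the regex does not match, Prometheus leaves the target label untouched, which this sketch does not model.

```bash
#!/usr/bin/env bash
# Emulates: regex ([^:]+)(?::\d+)?;(\d+)   replacement $1:$2
# $1 = "<__address__>;<port annotation>"
relabel_address() {
  printf '%s\n' "$1" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

# Emulates: regex ([^:]+)(?::\d+)?;(\d+);(.+)   replacement $1:$2$3
# $1 = "<__address__>;<blackbox_port>;<blackbox_path>"
relabel_probe_target() {
  printf '%s\n' "$1" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+);(.+)$/\1:\3\4/'
}

relabel_address '172.7.21.5:20880;12346'
relabel_probe_target '172.7.21.5:20880;8080;/hello?name=health'
```

The first call shows how the port from the annotation replaces whatever port the discovered pod address carried; the second shows how the blackbox HTTP job builds its probe target from address, port and path.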

3.2.3 应用资源配置清单

kubectl apply -f http://k8s-yaml.zq.com/prometheus-server/rbac.yamlkubectl apply -f http://k8s-yaml.zq.com/prometheus-server/dp.yamlkubectl apply -f http://k8s-yaml.zq.com/prometheus-server/svc.yamlkubectl apply -f http://k8s-yaml.zq.com/prometheus-server/ingress.yaml

3.2.4 浏览器验证

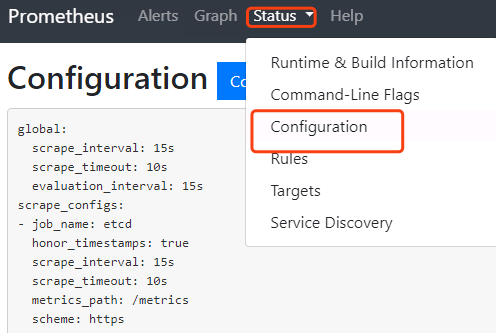

访问http://prometheus.zq.com,如果能成功访问的话,表示启动成功

点击status->configuration就是我们的配置文件

4 使服务能被prometheus自动监控

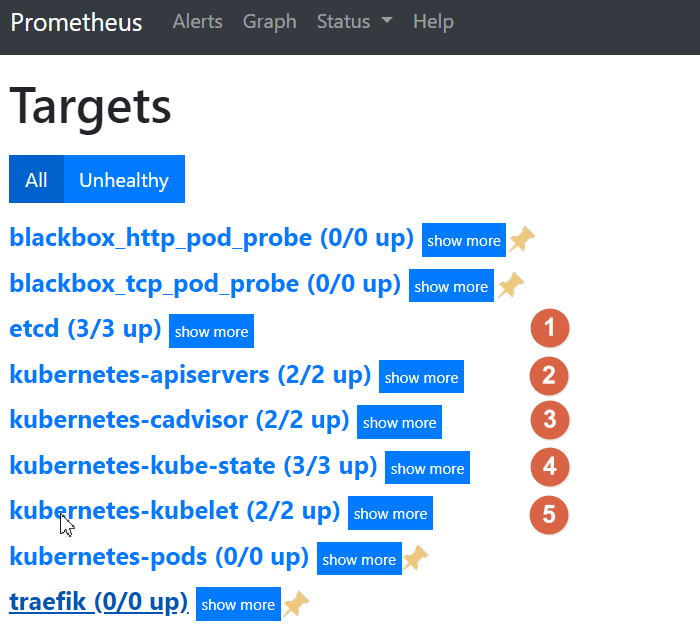

点击status->targets,展示的就是我们在prometheus.yml中配置的job-name,这些targets基本可以满足我们收集数据的需求。

5个编号的job-name已经被发现并获取数据 接下来就需要将剩下的4个ob-name对应的服务纳入监控 纳入监控的方式是给需要收集数据的服务添加annotations
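The same target overview is available from the Prometheus HTTP API at `/api/v1/targets`, which is handy for a quick check from the command line. A minimal sketch, assuming the API returns compact JSON in which every target carries a `"health":"up|down|unknown"` field; the helper name `count_health` and the sample document are made up for illustration.

```bash
#!/usr/bin/env bash
# Count targets with a given health state in /api/v1/targets JSON on stdin.
# $1 = up | down | unknown
count_health() {
  grep -o "\"health\":\"$1\"" | wc -l | tr -d ' '
}

# Trimmed, hand-written sample standing in for a real API response.
sample='{"status":"success","data":{"activeTargets":[{"labels":{"job":"etcd"},"health":"up"},{"labels":{"job":"traefik"},"health":"down"},{"labels":{"job":"kubernetes-kubelet"},"health":"up"}]}}'

printf '%s\n' "$sample" | count_health up
printf '%s\n' "$sample" | count_health down
```

Against the live server this would be used as `curl -s http://prometheus.zq.com/api/v1/targets | count_health down`. A JSON-aware tool gives a more robust result if one is installed.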

4.1 Monitoring traefik automatically

4.1.1 Modify the traefik YAML

Edit the traefik YAML file and add an annotations block at the same level as labels:

```yaml
vim /data/k8s-yaml/traefik/ds.yaml
........
spec:
  template:
    metadata:
      labels:
        k8s-app: traefik-ingress
        name: traefik-ingress
      #-------- begin addition --------
      annotations:
        prometheus_io_scheme: "traefik"
        prometheus_io_path: "/metrics"
        prometheus_io_port: "8080"
      #-------- end addition --------
    spec:
      serviceAccountName: traefik-ingress-controller
........
```

Re-apply the configuration on any node:

```bash
kubectl delete -f http://k8s-yaml.zq.com/traefik/ds.yaml
kubectl apply -f http://k8s-yaml.zq.com/traefik/ds.yaml
```

4.1.2 应用配置查看

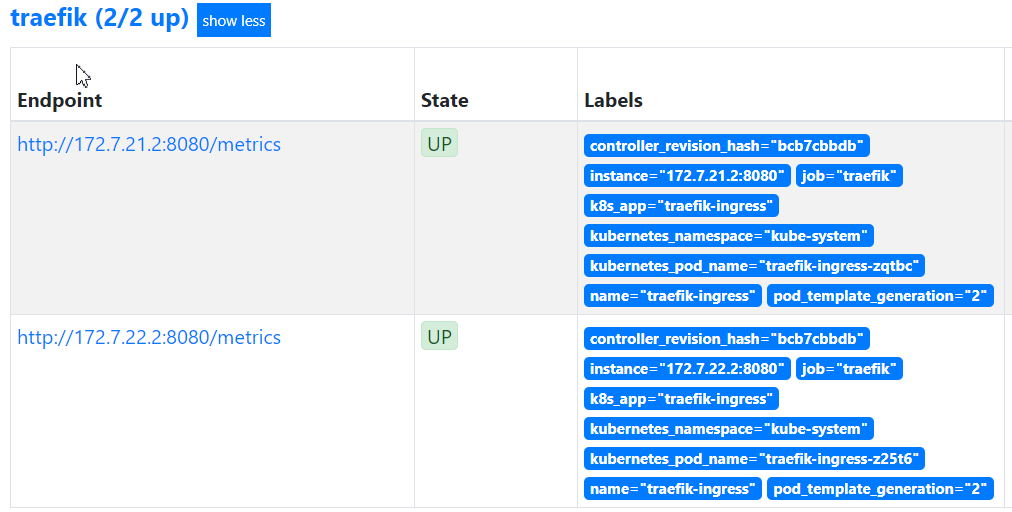

等待pod重启以后,再在prometheus上查看traefik是否能正常获取数据了

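4.2 Checking TCP/HTTP service status with blackbox

blackbox checks whether the services inside containers are alive, that is, it performs port health checks. There are two methods, tcp and http.

Use http wherever possible; use tcp only for services that offer no HTTP endpoint.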
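Both probe types can also be triggered by hand through blackbox-exporter's `/probe` endpoint, which takes a `module` and a `target` query parameter and returns metrics that include `probe_success`. A small sketch with hypothetical helper names and a placeholder pod address; `BLACKBOX_URL` defaults to the ingress host set up in this article.

```bash
#!/usr/bin/env bash
# Base URL of blackbox-exporter; override via the environment if needed.
BLACKBOX_URL="${BLACKBOX_URL:-http://blackbox.zq.com}"

# Run one probe. $1 = module (http_2xx or tcp_connect), $2 = target
probe() {
  curl -sG "${BLACKBOX_URL}/probe" \
    --data-urlencode "module=$1" \
    --data-urlencode "target=$2"
}

# Succeeds when the probe output on stdin reports success.
probe_ok() {
  grep -q '^probe_success 1$'
}
```

For example, `probe tcp_connect 172.7.21.5:20880 | probe_ok && echo alive` would check a TCP port, and `probe http_2xx '172.7.21.5:8080/hello?name=health' | probe_ok` an HTTP endpoint; the addresses stand in for real pod IPs.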

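4.2.1 Prepare the services to probe

The dubbo services of the test environment are used for the demonstration; other environments work the same way.

- In the dashboard, start apollo-portal and the apollo services in the test namespace.
- dubbo-demo-service gets the TCP annotation.
- dubbo-demo-consumer gets the HTTP annotation.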

4.2.2 添加tcp的annotation

等两个服务起来以后,首先在dubbo-demo-service资源中添加一个TCP的annotation

任意节点重新应用配置vim /data/k8s-yaml/test/dubbo-demo-server/dp.yaml......spec:......template:metadata:labels:app: dubbo-demo-servicename: dubbo-demo-service#--------增加内容--------annotations:blackbox_port: "20880"blackbox_scheme: "tcp"#--------增加结束--------spec:containers:image: harbor.zq.com/app/dubbo-demo-service:apollo_200512_0746

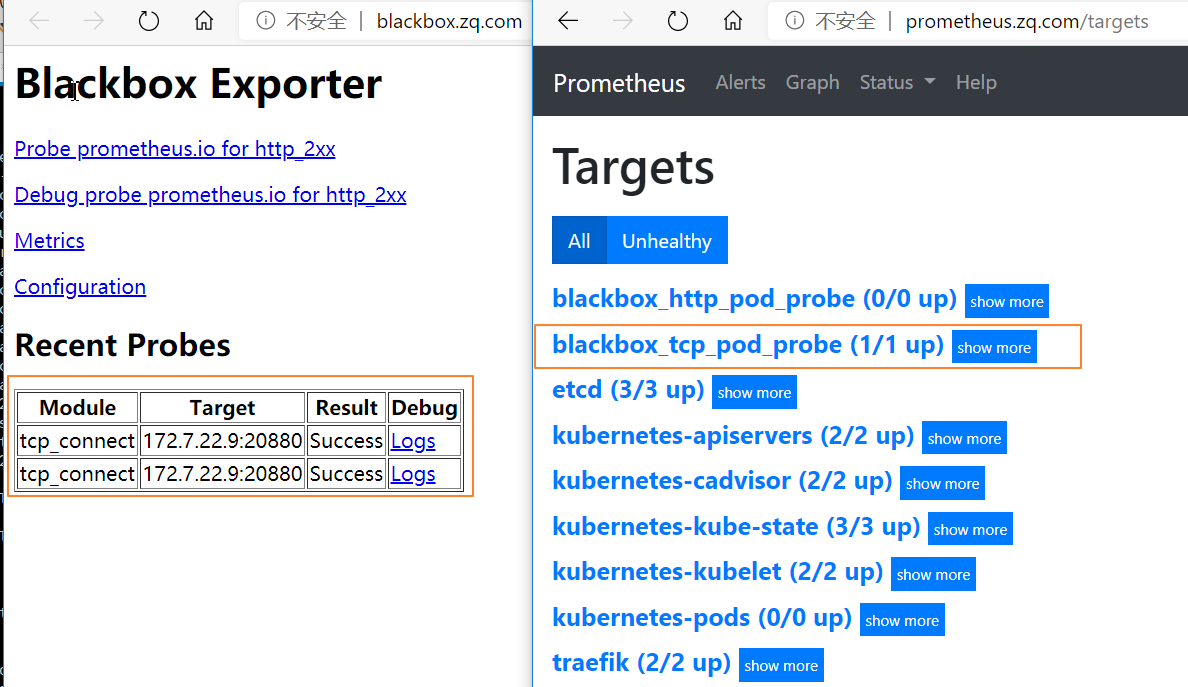

浏览器中查看http://blackbox.zq.com/和http://prometheus.zq.com/targetskubectl delete -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yamlkubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yaml

我们运行的dubbo-demo-server服务,tcp端口20880已经被发现并在监控中

4.2.3 添加http的annotation

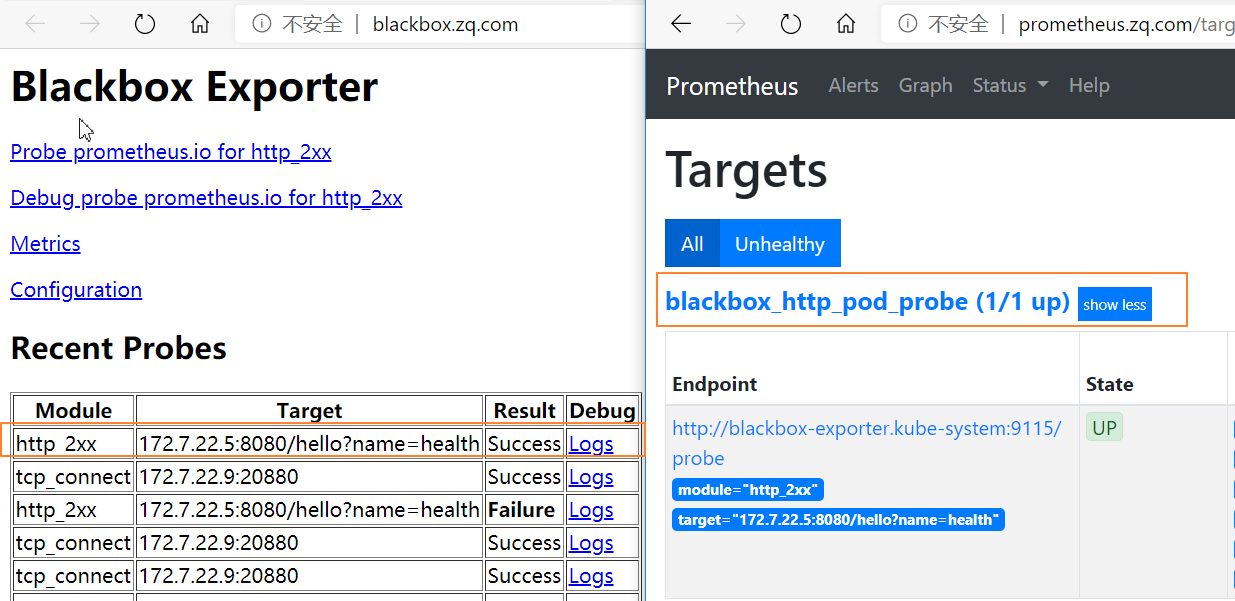

接下来在dubbo-demo-consumer资源中添加一个HTTP的annotation:

任意节点重新应用配置vim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yamlspec:......template:metadata:labels:app: dubbo-demo-consumername: dubbo-demo-consumer#--------增加内容--------annotations:blackbox_path: "/hello?name=health"blackbox_port: "8080"blackbox_scheme: "http"#--------增加结束--------spec:containers:- name: dubbo-demo-consumer......

kubectl delete -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yamlkubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yaml

4.3 添加监控jvm信息

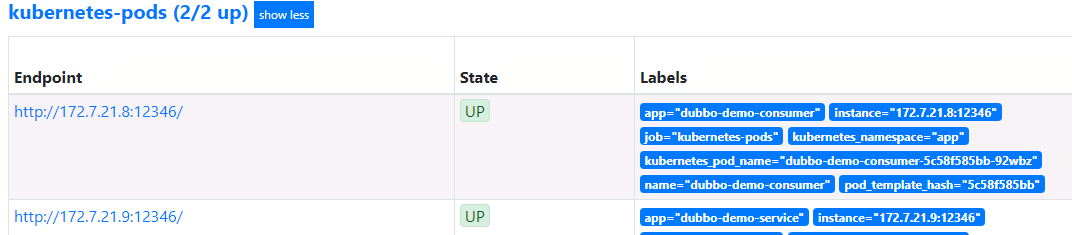

dubbo-demo-service和dubbo-demo-consumer都添加下列annotation注解,以便监控pod中的jvm信息vim /data/k8s-yaml/test/dubbo-demo-server/dp.yamlvim /data/k8s-yaml/test/dubbo-demo-consumer/dp.yamlannotations:#....已有略....prometheus_io_scrape: "true"prometheus_io_port: "12346"prometheus_io_path: "/"

12346是dubbo的POD启动命令中使用jmx_javaagent用到的端口,因此可以用来收集jvm信息

任意节点重新应用配置

kubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-server/dp.yamlkubectl apply -f http://k8s-yaml.zq.com/test/dubbo-demo-consumer/dp.yaml

至此,所有9个服务,都获取了数据