vim /etc/hostname
# add the line "ip master" (the machine's IP followed by its hostname)
vim /etc/hosts
vim /etc/sysconfig/network-scripts/ifcfg-ens32    # add the following settings:
BOOTPROTO="static"
IPADDR=192.168.66.133
NETMASK=255.255.255.0
GATEWAY=192.168.66.2
# DNS1 is the same as the gateway (see https://www.cnblogs.com/lyangfighting/p/9518726.html)
DNS1=192.168.66.2
service network restart    # restart the network service

If the restart fails with the following error (see https://blog.csdn.net/dyw_666666/article/details/103117357):
Restarting network (via systemctl):  Job for network.service failed because the control process exited with error code. See "systemctl status network.service" and "journalctl -xe" for details.
Fix:
systemctl stop NetworkManager
systemctl disable NetworkManager
systemctl start network.service

Passwordless SSH:
ssh-keygen -t rsa    # press Enter at every prompt
ssh-copy-id master
cd ~
ls -al    # check whether .ssh exists
cd .ssh
more authorized_keys
ssh master    # should now log in without a password

Install the JDK and Hadoop:
mkdir /software    # directory for the installation packages
tar -zxvf hadoop-2.6.0.tar.gz
tar -zxvf jdk-8u202-linux-x64.tar.gz
mv jdk1.8.0_202 /usr/local/jdk
mv hadoop-2.6.0 /usr/local/hadoop
cd /usr/local/hadoop/etc/hadoop
vim hadoop-env.sh    # change the JAVA_HOME line to:
export JAVA_HOME=/usr/local/jdk

Note: the configs below use the hostname hdp4; it must match the hostname set in /etc/hostname and /etc/hosts.

vim core-site.xml
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://hdp4:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/usr/local/hadoop/tmp</value>
  </property>
</configuration>

vim hdfs-site.xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>

cp mapred-site.xml.template mapred-site.xml
vim mapred-site.xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>hdp4:10020</value>
  </property>
</configuration>

vim yarn-site.xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>hdp4</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>mapreduce.job.ubertask.enable</name>
    <value>true</value>
  </property>
</configuration>

JDK and Hadoop environment variables:
vim /etc/profile
export JAVA_HOME=/usr/local/jdk
export HADOOP_HOME=/usr/local/hadoop
export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH
source /etc/profile
hadoop namenode -format

# start
start-dfs.sh
start-yarn.sh

# stop the firewall, then open http://ip:50070/
sudo systemctl stop firewalld       # stop it temporarily
sudo systemctl disable firewalld    # then reboot to disable it permanently
sudo systemctl status firewalld     # check the firewall status
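Instead of editing each XML file in vim, the same settings could be written non-interactively with heredocs. This is a minimal sketch: the function name `write_hadoop_conf` and its two arguments (target directory, hostname) are hypothetical, and the property values are copied from the steps above. Because it writes `mapred-site.xml` directly, the `cp mapred-site.xml.template` step is not needed when using it.

```bash
#!/usr/bin/env bash
# Hypothetical helper: writes the pseudo-distributed configs listed above
# into a target directory (default: /usr/local/hadoop/etc/hadoop).
set -euo pipefail

write_hadoop_conf() {
  local conf_dir="${1:-/usr/local/hadoop/etc/hadoop}"
  local host="${2:-hdp4}"   # must match /etc/hostname and /etc/hosts
  mkdir -p "$conf_dir"

  # Unquoted EOF: ${host} is expanded inside the heredoc.
  cat > "$conf_dir/core-site.xml" <<EOF
<configuration>
  <property><name>fs.default.name</name><value>hdfs://${host}:9000</value></property>
  <property><name>hadoop.tmp.dir</name><value>/usr/local/hadoop/tmp</value></property>
</configuration>
EOF

  # Quoted 'EOF': no variables needed, written verbatim.
  cat > "$conf_dir/hdfs-site.xml" <<'EOF'
<configuration>
  <property><name>dfs.replication</name><value>1</value></property>
  <property><name>dfs.permissions</name><value>false</value></property>
</configuration>
EOF

  cat > "$conf_dir/mapred-site.xml" <<EOF
<configuration>
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
  <property><name>mapreduce.jobhistory.address</name><value>${host}:10020</value></property>
</configuration>
EOF

  cat > "$conf_dir/yarn-site.xml" <<EOF
<configuration>
  <property><name>yarn.resourcemanager.hostname</name><value>${host}</value></property>
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
  <property><name>mapreduce.job.ubertask.enable</name><value>true</value></property>
</configuration>
EOF
}
```

Usage, assuming the install paths above: `write_hadoop_conf /usr/local/hadoop/etc/hadoop hdp4`. Passing the hostname as a parameter keeps the four files consistent with each other, which avoids a mismatch like the one between `master` and `hdp4` in the steps above.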

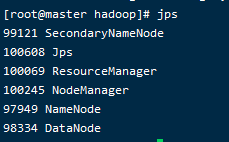

Current processes
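The process list can also be checked from the shell. On a single-node setup, after `start-dfs.sh` and `start-yarn.sh`, `jps` (shipped with the JDK) should list NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. The sketch below uses a hypothetical `check_daemons` function that reads `jps`-style lines (`<pid> <ClassName>`) from stdin and reports which of those five are missing.

```bash
#!/usr/bin/env bash
# Hypothetical helper: checks jps output for the five daemons that
# start-dfs.sh and start-yarn.sh launch on a single node.
set -uo pipefail

check_daemons() {
  local jps_out missing=0 d
  jps_out="$(cat)"   # read all of stdin once
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # Compare the second column exactly, so "NameNode" does not
    # match the "SecondaryNameNode" line.
    if printf '%s\n' "$jps_out" | awk -v d="$d" '$2 == d {f=1} END {exit !f}'; then
      echo "running: $d"
    else
      echo "missing: $d"
      missing=1
    fi
  done
  return "$missing"   # 0 only if all five daemons are present
}
```

Usage: `jps | check_daemons`. A non-zero return means at least one daemon did not start; its log under `$HADOOP_HOME/logs` is the usual place to look next.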