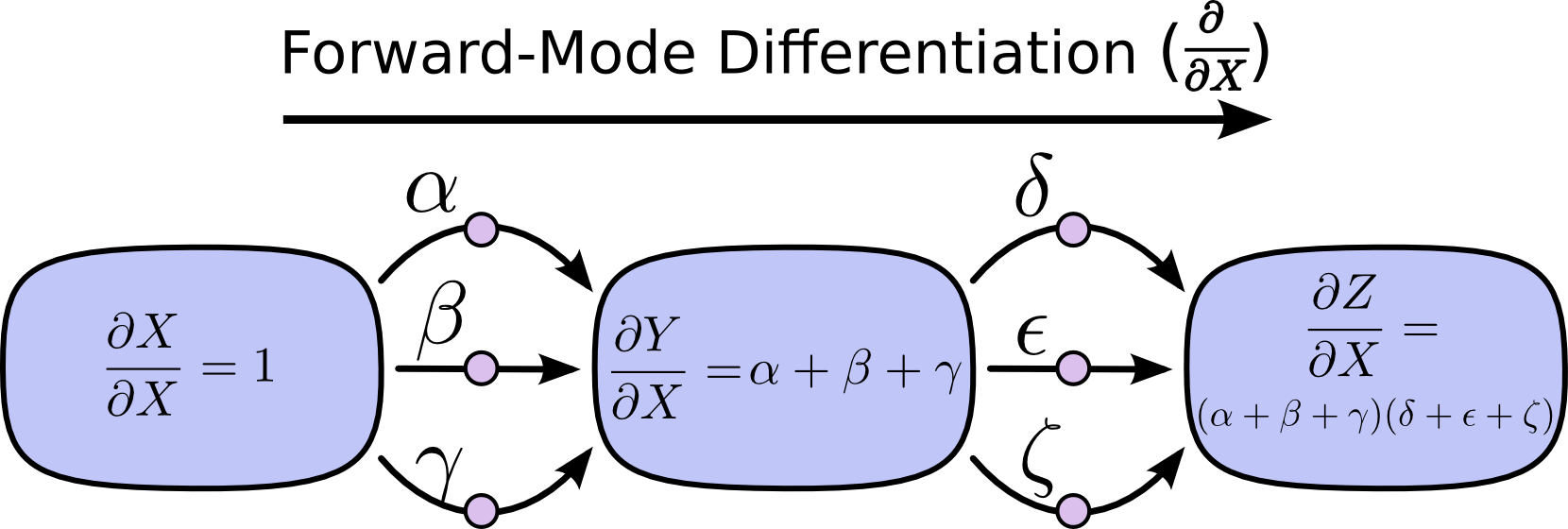

Forward-Mode Differentiation (Forward Propagation)

Forward-mode differentiation tracks how one input affects every node in the computational graph.

That is, forward-mode differentiation applies the operator $\frac{\partial}{\partial X}$ to every node, where $X$ is the chosen input.
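As an illustration, here is a minimal sketch of forward mode using dual numbers. The `Dual` class, the helper `_lift`, and the example function `e = (a + b) * (b + 1)` are assumptions made for this sketch, not part of the notes above. Each node carries its value together with its derivative with respect to the one seeded input.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """Hypothetical dual number: a node's value and its derivative w.r.t. one input."""
    value: float
    deriv: float

    def __add__(self, other):
        other = _lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants (derivative 0)."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def f(a, b):
    # Assumed example graph: c = a + b, d = b + 1, e = c * d
    c = a + b
    d = b + 1
    return c * d


# Seed b with derivative 1 to track how b affects every node.
e_wrt_b = f(Dual(2.0, 0.0), Dual(1.0, 1.0))
# A second pass, seeded at a, is needed to get the derivative w.r.t. a.
e_wrt_a = f(Dual(2.0, 1.0), Dual(1.0, 0.0))

print(e_wrt_b.value, e_wrt_b.deriv, e_wrt_a.deriv)
```

Note that each input requires its own pass: the seed decides which input the derivatives refer to.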

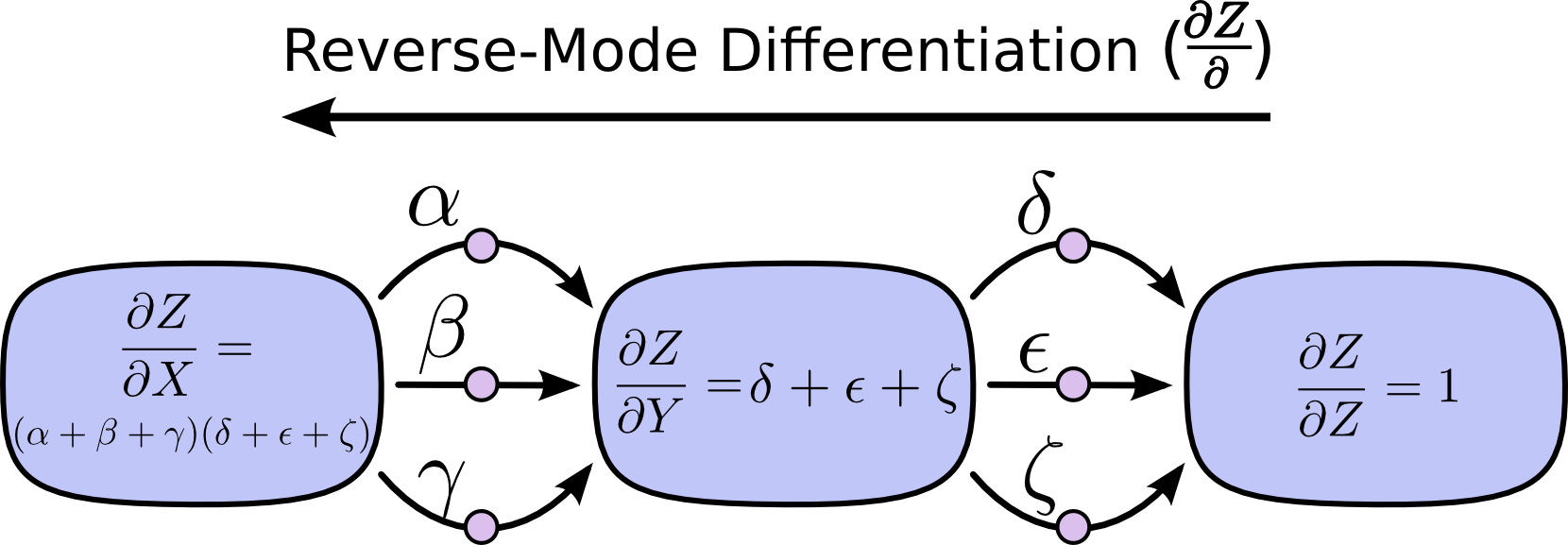

Reverse-Mode Differentiation (Backpropagation)

Reverse-mode differentiation tracks how every node in the computational graph affects one output.

That is, reverse-mode differentiation applies the operator $\frac{\partial Z}{\partial}$ to every node, where $Z$ is the chosen output.
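A matching sketch of reverse mode is shown below. The `Node` class, the `backward` function, and the example function `e = (a + b) * (b + 1)` are assumptions made for illustration. The graph is built once on the way forward, then a single backward sweep from the output fills in the derivative of that output with respect to every node.

```python
class Node:
    """Hypothetical graph node that records its parents and local derivatives."""

    def __init__(self, value, parents=()):
        self.value = value
        self.parents = parents  # tuple of (parent_node, d(self)/d(parent))
        self.grad = 0.0         # d(output)/d(self), filled in by backward()

    def __add__(self, other):
        other = other if isinstance(other, Node) else Node(float(other))
        return Node(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Node) else Node(float(other))
        return Node(self.value * other.value,
                    ((self, other.value), (other, self.value)))


def backward(output):
    """Propagate d(output)/d(node) from the output back to every node."""
    order, seen = [], set()

    def visit(node):
        # Post-order traversal: parents are appended before their children.
        if id(node) in seen:
            return
        seen.add(id(node))
        for parent, _ in node.parents:
            visit(parent)
        order.append(node)

    visit(output)
    output.grad = 1.0
    # Reversed post-order guarantees a node's grad is complete before it is used.
    for node in reversed(order):
        for parent, local in node.parents:
            parent.grad += node.grad * local


# Assumed example graph: c = a + b, d = b + 1, e = c * d
a = Node(2.0)
b = Node(1.0)
c = a + b
d = b + 1
e = c * d

backward(e)
print(e.value, a.grad, b.grad)
```

Here one backward sweep yields the derivatives with respect to both `a` and `b` at once.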

Application

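Where reverse mode gives the derivatives of one output with respect to all inputs, forward mode gives the derivatives of all outputs with respect to one input. With many inputs and few outputs, as when differentiating a single loss with respect to many parameters, reverse mode needs far fewer passes; with few inputs and many outputs, forward mode is the better fit.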
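One way to picture this trade-off is through the Jacobian. The symbols $f$, $x_j$, $y_i$, $n$, and $m$ below are notation assumed for this sketch. For a function $f : \mathbb{R}^n \to \mathbb{R}^m$ with inputs $x_1, \dots, x_n$ and outputs $y_1, \dots, y_m$:

$$
J =
\begin{pmatrix}
\frac{\partial y_1}{\partial x_1} & \cdots & \frac{\partial y_1}{\partial x_n} \\
\vdots & \ddots & \vdots \\
\frac{\partial y_m}{\partial x_1} & \cdots & \frac{\partial y_m}{\partial x_n}
\end{pmatrix}
$$

A forward-mode pass seeded at input $x_j$ produces column $j$ of $J$, while a reverse-mode pass seeded at output $y_i$ produces row $i$. The full Jacobian therefore takes $n$ forward passes or $m$ reverse passes, so reverse mode is the natural choice when $m = 1$ and $n$ is large.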

Conclusion

Derivatives are cheaper than you think