1.https://rlss.inria.fr/files/2019/07/RLSS_Multiagent.pdf

2.Multi-agent Reinforcement Learning in

Sequential Social Dilemmas

多智能体的强化学习用于序列化的社会困境

3.https://web.kamihq.com/web/viewer.html?state=%7B%22ids%22%3A%5B%221XfcPDOHoszxKhzHO2oKJ4NgDcVWzP4jc%22%5D%2C%22action%22%3A%22open%22%2C%22userId%22%3A%22112483263868961740692%22%7D&filename=null

A Survey and Critique of Multiagent Deep Reinforcement Learning

主要介绍多智能体在深度强化学习中的应用。

一.介绍了RL,DRL

二.给MDRL的研究方向分类:

1.产生的行为

2.学习沟通

3.学习合作

4.给其他agent建模

三.如何将RL,MAL的技术使用到MDRL

四.MDRL的benchmarks

五.MDRL实践中的挑战

4.Multiagent Reinforcement Learning — DeepMind

一.2007年前后的一些论文及其被引用次数:

0.If multi-agent learning is the answer,

what is the question — 384

1.A hierarchy of prescriptive goals for multiagent learning — 7

2.Agendas for multi-agent learning — 18

3.An economist’s perspective on multi-agent learning — 22

4.Foundations of multi-agent learning_Introduction to the special issue — 10

5.Learning equilibrium as a generalization of learning to optimize — 9

6.Multi-agent learning and the descriptive value of simple models — 28

7.Multiagent learning is not the answer_It is the question — 56

8.No regrets about no-regret — 10

9.Perspectives on multiagent learning — 48

10.The possible and the impossible in multi-agent learning — 29

11.What evolutionary game theory tells us about multiagent learning — 118

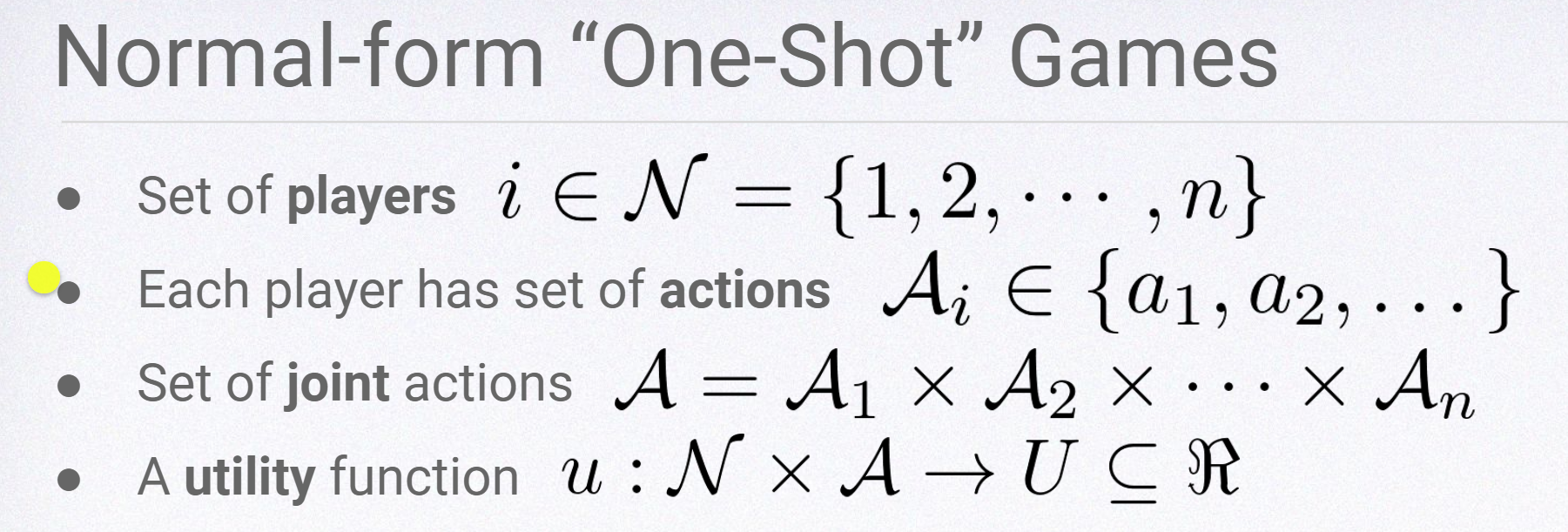

二.Normal-form of “One Shot” Games

三.Two-Player Zero-Sum Games

策略迭代

四.backward induction向后归纳法

markov games

五.MARL介绍

1.first era of MARL — MinMax Q-learning

Scalibility was a major problem.

Follow-ups to Minimax Q:

● Friend-or-Foe Q-Learning (Littman ‘01)

● Correlated Q-learning (Greenwald & Hall ‘03)

● Nash Q-learning (Hu & Wellman ‘03)

● Coco-Q (Sodomka et al. ‘13)

Function approximation:

● LSPI for Markov Games (Lagoudakis & Parr ‘02)

Nash Convergence of Gradient Dynamics in General-Sum Games — 296次引用

2.Second Era — Deep Learning meets MARL

Human-level control through deep reinforcement

learning — 7774

分类:

Idependent Q-learning Approaches

Learning to Communicate

Cooperative Multiagent Tasks

Sequential Social Dilemas

Centralized Critic Decentralized Actor Approaches

3.recent works

Independent Deep Q-networks (Mnih et al. ‘15)

Fictitious Self-Play [Heinrich et al. ‘15, Heinrich & Silver 2016]

Neural Fictitious Self-Play [Heinrich & Silver 2016]

Policy-Space Response Oracles (Lanctot et al. ‘17)

Exploitability Descent (Lockhart et al. ‘19)

DeepStack (Moravcik et al. ‘17)

Libratus (Brown & Sandholm ‘18)

Regret Policy Gradients (Srinivasan et al. ‘18)

5.If multi-agent learning is the answer, what is the question?

4.1 Some MAL techniques

4.1.1 Model-based approaches

4.1.2 Model-free approaches

4.1.3 Regret minimization approaches

4.2Some typical results

Fictitious Self-Play [Heinrich et al. ‘15, Heinrich & Silver 2016] A Survey and Critique of Multiagent Deep Reinforcement Learning

A Survey

and Critique of Multiagent Deep Reinforcement Learning