离线量化方法采用了nvidia的方案,采用了对称量化方案。对激活值量化,利用kl散度选择最优的scale值;对参数选择了max_abs方法进行逐通道量化,每一个卷积核有不同的scale值。

Calibration::Calibration(MNN::NetT* model, uint8_t* modelBuffer, const int bufferSize, const std::string& configPath): _originaleModel(model) {// when the format of input image is RGB/BGR, channels equal to 3, GRAY is 1int channles = 3;rapidjson::Document document;{std::ifstream fileNames(configPath.c_str());std::ostringstream output;output << fileNames.rdbuf();auto outputStr = output.str();document.Parse(outputStr.c_str());if (document.HasParseError()) {MNN_ERROR("Invalid json\n");return;}}auto picObj = document.GetObject();ImageProcess::Config config;config.filterType = BILINEAR;config.destFormat = BGR;{if (picObj.HasMember("format")) {auto format = picObj["format"].GetString();static std::map<std::string, ImageFormat> formatMap{{"BGR", BGR}, {"RGB", RGB}, {"GRAY", GRAY}};if (formatMap.find(format) != formatMap.end()) {config.destFormat = formatMap.find(format)->second;}}}if (config.destFormat == GRAY) {channles = 1;}config.sourceFormat = RGBA;std::string imagePath;_imageNum = 0;{if (picObj.HasMember("mean")) {auto mean = picObj["mean"].GetArray();int cur = 0;for (auto iter = mean.begin(); iter != mean.end(); iter++) {config.mean[cur++] = iter->GetFloat();}}if (picObj.HasMember("normal")) {auto normal = picObj["normal"].GetArray();int cur = 0;for (auto iter = normal.begin(); iter != normal.end(); iter++) {config.normal[cur++] = iter->GetFloat();}}if (picObj.HasMember("width")) {_width = picObj["width"].GetInt();}if (picObj.HasMember("height")) {_height = picObj["height"].GetInt();}if (picObj.HasMember("path")) {imagePath = picObj["path"].GetString();}if (picObj.HasMember("used_image_num")) {_imageNum = picObj["used_image_num"].GetInt();}if (picObj.HasMember("feature_quantize_method")) {std::string method = picObj["feature_quantize_method"].GetString();if (Helper::featureQuantizeMethod.find(method) != Helper::featureQuantizeMethod.end()) {_featureQuantizeMethod = method;} else {MNN_ERROR("not supported feature quantization method: %s\n", method.c_str());return;}}if (picObj.HasMember("weight_quantize_method")) {std::string method = picObj["weight_quantize_method"].GetString();if (Helper::weightQuantizeMethod.find(method) != Helper::weightQuantizeMethod.end()) {_weightQuantizeMethod = method;} else {MNN_ERROR("not supported weight quantization method: %s\n", method.c_str());return;}}DLOG(INFO) << "Use feature quantization method: " << _featureQuantizeMethod;DLOG(INFO) << "Use weight quantization method: " << _weightQuantizeMethod;}std::shared_ptr<ImageProcess> process(ImageProcess::create(config));_process = process;// read images file namesHelper::readImages(_imgaes, imagePath.c_str(), &_imageNum);_initMNNSession(modelBuffer, bufferSize, channles);_initMaps();}

Calibration量化流程如下所示:

- 读取量化配置文件,设置好相应量化参数

- 设置图片预处理,并读取图片

- 初始化好用于统计tensor信息的推理模型

初始化好统计不同tensor的TensorStatistic

初始化Session用于统计Tensor信息void Calibration::_initMNNSession(const uint8_t* modelBuffer, const int bufferSize, const int channels) {_interpreter.reset(MNN::Interpreter::createFromBuffer(modelBuffer, bufferSize));MNN::ScheduleConfig config;_session = _interpreter->createSession(config);_inputTensor = _interpreter->getSessionInput(_session, NULL);_inputTensorDims.resize(4);auto inputTensorDataFormat = MNN::TensorUtils::getDescribe(_inputTensor)->dimensionFormat;DCHECK(4 == _inputTensor->dimensions()) << "Only support 4 dimensions input";if (inputTensorDataFormat == MNN::MNN_DATA_FORMAT_NHWC) {_inputTensorDims[0] = 1;_inputTensorDims[1] = _height;_inputTensorDims[2] = _width;_inputTensorDims[3] = channels;} else if (inputTensorDataFormat == MNN::MNN_DATA_FORMAT_NC4HW4) {_inputTensorDims[0] = 1;_inputTensorDims[1] = channels;_inputTensorDims[2] = _height;_inputTensorDims[3] = _width;} else {DLOG(ERROR) << "Input Data Format ERROR!";}if (_featureQuantizeMethod == "KL") {_interpreter->resizeTensor(_inputTensor, _inputTensorDims);_interpreter->resizeSession(_session);} else if (_featureQuantizeMethod == "ADMM") {DCHECK((_imageNum * 4 * _height * _width) < (INT_MAX / 4)) << "Use Little Number of Images When Use ADMM";_inputTensorDims[0] = _imageNum;_interpreter->resizeTensor(_inputTensor, _inputTensorDims);_interpreter->resizeSession(_session);}_interpreter->releaseModel();}

void Calibration::_initMaps() {_featureInfo.clear();_opInfo.clear(); // 记录算子对应的输入和输出tensor的指针_tensorMap.clear();// std::set<std::string> Helper::gNeedFeatureOp = {"Convolution", "ConvolutionDepthwise", "Eltwise", "Pooling"};// 量化op包括卷积、深度卷积、EltwiseAdd和Pooling// run mnn once, initialize featureMap, opInfo map// 在每个op算子计算前调用beforeMNN::TensorCallBackWithInfo before = [&](const std::vector<MNN::Tensor*>& nTensors, const MNN::OperatorInfo* info) {_opInfo[info->name()].first = nTensors;// 如果该算子为量化op,将所有输入tensor加入会进行量化信息统计的map中,且利用TensorStatistic,对每个tensor进行统计。if (Helper::gNeedFeatureOp.find(info->type()) != Helper::gNeedFeatureOp.end()) {for (auto t : nTensors) {if (_featureInfo.find(t) == _featureInfo.end()) {_featureInfo[t] = std::shared_ptr<TensorStatistic>(new TensorStatistic(t, _featureQuantizeMethod, info->name() + "__input"));}}}return false;};// 在每个op算子计算后调用endMNN::TensorCallBackWithInfo after = [this](const std::vector<MNN::Tensor*>& nTensors,const MNN::OperatorInfo* info) {_opInfo[info->name()].second = nTensors;// 如果该算子为量化op,将所有输出tensor加入会进行量化信息统计的map中,且利用TensorStatistic,对每个tensor进行统计。if (Helper::gNeedFeatureOp.find(info->type()) != Helper::gNeedFeatureOp.end()) {for (auto t : nTensors) {if (_featureInfo.find(t) == _featureInfo.end()) {_featureInfo[t] =std::shared_ptr<TensorStatistic>(new TensorStatistic(t, _featureQuantizeMethod, info->name()));}}}return true;};// 进行推理同时设置两个回调函数,记录待统计的tensor_interpreter->runSessionWithCallBackInfo(_session, before, after);// 记录tensor索引到tensor指针的映射for (auto& op : _originaleModel->oplists) {if (_opInfo.find(op->name) == _opInfo.end()) {continue;}for (int i = 0; i < op->inputIndexes.size(); ++i) {_tensorMap[op->inputIndexes[i]] = _opInfo[op->name].first[i];}for (int i = 0; i < op->outputIndexes.size(); ++i) {_tensorMap[op->outputIndexes[i]] = _opInfo[op->name].second[i];}}if (_featureQuantizeMethod == "KL") {// 输入tensor的统计方法不适用KL// set the tensor-statistic method of input tensor as THRESHOLD_MAXauto inputTensorStatistic = _featureInfo.find(_inputTensor);if (inputTensorStatistic != _featureInfo.end()) {inputTensorStatistic->second->setThresholdMethod(THRESHOLD_MAX);}}}

void Calibration::runQuantizeModel() {// 计算激活值的scale值if (_featureQuantizeMethod == "KL") {_computeFeatureScaleKL();} else if (_featureQuantizeMethod == "ADMM") {_computeFeatureScaleADMM();}// 统计weight的信息统计,创建量化op,生成网络_updateScale();// 对于不支持量化的算子,插入反量化算子转化为float,对输出也插入反量化算子_insertDequantize();}

void Calibration::_computeFeatureScaleKL() {// 计算特征图范围_computeFeatureMapsRange();// 计算特征图分布_collectFeatureMapsDistribution();_scales.clear();for (auto& iter : _featureInfo) {AUTOTIME;// 生成tensor的scale值_scales[iter.first] = iter.second->finishAndCompute();}//_featureInfo.clear();//No need now}

```cpp void resetUpdatedRangeFlags() {

mUpdatedRangeFlags = false;

}

void TensorStatistic::updateRange() { if (mUpdatedRangeFlags) { return; } mUpdatedRangeFlags = true; mOriginTensor->copyToHostTensor(mHostTensor.get()); int batch = mHostTensor->batch(); int channel = mHostTensor->channel(); int width = mHostTensor->width(); int height = mHostTensor->height(); auto area = width * height;

for (int n = 0; n < batch; ++n){auto dataBatch = mHostTensor->host<float>() + n * mHostTensor->stride(0);for (int c = 0; c < channel; ++c){int cIndex = c;// mMergeChannel默认为true,即对激活值不采用逐通道量化,if (mMergeChannel){cIndex = 0;}// 统计最大最小值auto minValue = mRangePerChannel[cIndex].first;auto maxValue = mRangePerChannel[cIndex].second;auto dataChannel = dataBatch + c * mHostTensor->stride(1);for (int v = 0; v < area; ++v){minValue = std::min(minValue, dataChannel[v]);maxValue = std::max(maxValue, dataChannel[v]);}mRangePerChannel[cIndex].first = minValue;mRangePerChannel[cIndex].second = maxValue;}}

}

void Calibration::_computeFeatureMapsRange() { // feed input data according to input images int count = 0; for (const auto& img : _imgaes) { for (auto& iter : _featureInfo) { // 由于输入输出tensor可能会重叠,出现重复统计的情况 // 设置flag表示是否range被统计过 iter.second->resetUpdatedRangeFlags(); } count++; // 图片预处理 Helper::preprocessInput(_process.get(), _width, _height, img, _inputTensor);

MNN::TensorCallBackWithInfo before = [&](const std::vector<MNN::Tensor*>& nTensors,const MNN::OperatorInfo* info) {for (auto t : nTensors) {if (_featureInfo.find(t) != _featureInfo.end()) {// 统计输入tensor的range_featureInfo[t]->updateRange();}}return true;};MNN::TensorCallBackWithInfo after = [&](const std::vector<MNN::Tensor*>& nTensors,const MNN::OperatorInfo* info) {for (auto t : nTensors) {if (_featureInfo.find(t) != _featureInfo.end()) {// 统计输出tensor的range_featureInfo[t]->updateRange();}}return true;};_interpreter->runSessionWithCallBackInfo(_session, before, after);MNN_PRINT("\rComputeFeatureRange: %.2lf %%", (float)count * 100.0f / (float)_imageNum);fflush(stdout);}MNN_PRINT("\n");

}

```cppvoid TensorStatistic::resetDistribution(){for (int i = 0; i < mIntervals.size(); ++i){int cIndex = i;// 类似之前if (mMergeChannel){cIndex = 0;}// 统计最大绝对值,nvidia方案针对relu后的激活值进行量化,因此所有tensor值都为正,此处应该也假设了所有tensor生成的值大于0// 否则此处代码逻辑有问题auto maxValue = std::max(fabsf(mRangePerChannel[cIndex].second), fabsf(mRangePerChannel[cIndex].first));mValidChannel[cIndex] = maxValue > 0.00001f;mIntervals[cIndex] = 0.0f;if (mValidChannel[cIndex]){// 预先计算2048 / max// 之后乘tensor的值可以得到对应的bin的位置mIntervals[cIndex] = (float)mBinNumber / maxValue;}}for (auto &c : mDistribution){// 防止出现某个bin没有元素,平滑操作std::fill(c.begin(), c.end(), 1.0e-07);}// MNN_PRINT("==> %s max: %f\n", mName.c_str(),std::max(fabsf(mRangePerChannel[0].second),// fabsf(mRangePerChannel[0].first)));}void TensorStatistic::updateDistribution(){if (mUpdatedDistributionFlag){return;}mUpdatedDistributionFlag = true;mOriginTensor->copyToHostTensor(mHostTensor.get());int batch = mHostTensor->batch();int channel = mHostTensor->channel();int width = mHostTensor->width();int height = mHostTensor->height();auto area = width * height;for (int n = 0; n < batch; ++n){auto dataBatch = mHostTensor->host<float>() + n * mHostTensor->stride(0);for (int c = 0; c < channel; ++c){int cIndex = c;if (mMergeChannel){cIndex = 0;}if (!mValidChannel[cIndex]){continue;}auto multi = mIntervals[cIndex];auto target = mDistribution[cIndex].data();auto dataChannel = dataBatch + c * mHostTensor->stride(1);for (int v = 0; v < area; ++v){auto data = dataChannel[v];if (data == 0){continue;}// 生成bin的index并将对应bin的值加1int index = static_cast<int>(fabs(data) * multi);index = std::min(index, mBinNumber - 1);target[index] += 1.0f;}}}}void Calibration::_collectFeatureMapsDistribution() {for (auto& iter : _featureInfo) {// 将tensor分布装在2048个bin中作为原始激活值的分布,对每个tensor分布进行初始化iter.second->resetDistribution();}// feed input data according to input images// 对输入输出tensor的分布进行更新MNN::TensorCallBackWithInfo before = [&](const std::vector<MNN::Tensor*>& nTensors, const MNN::OperatorInfo* info) {for (auto t : nTensors) {if (_featureInfo.find(t) != _featureInfo.end()) {_featureInfo[t]->updateDistribution();}}return true;};MNN::TensorCallBackWithInfo after = [&](const std::vector<MNN::Tensor*>& nTensors, const MNN::OperatorInfo* info) {for (auto t : nTensors) {if (_featureInfo.find(t) != _featureInfo.end()) {_featureInfo[t]->updateDistribution();}}return true;};int count = 0;for (const auto& img : _imgaes) {count++;for (auto& iter : _featureInfo) {iter.second->resetUpdatedDistributionFlag();}Helper::preprocessInput(_process.get(), _width, _height, img, _inputTensor);_interpreter->runSessionWithCallBackInfo(_session, before, after);MNN_PRINT("\rCollectFeatureDistribution: %.2lf %%", (float)count * 100.0f / (float)_imageNum);fflush(stdout);}MNN_PRINT("\n");}

// 每个tensor生成对应的scale值std::vector<float> TensorStatistic::finishAndCompute(){std::vector<float> scaleValue(mDistribution.size(), 0.0f);// 默认为trueif (mMergeChannel){if (!mValidChannel[0]){return scaleValue;}float sum = 0.0f;auto &distribution = mDistribution[0];// 生成概率分布std::for_each(distribution.begin(), distribution.end(), [&](float n) { sum += n; });std::for_each(distribution.begin(), distribution.end(), [sum](float &n) { n /= sum; });// 对分布计算阈值,threshold表示bin的位置auto threshold = _computeThreshold(distribution);// 根据threshold计算scale值auto scale = ((float)threshold + 0.5) / mIntervals[0] / 127.0f;// MNN_PRINT("==> %s == %d, %f, %f\n", mName.c_str(),threshold, 1.0f / mIntervals[0], scale * 127.0f);std::fill(scaleValue.begin(), scaleValue.end(), scale);return scaleValue;}for (int c = 0; c < mDistribution.size(); ++c){if (!mValidChannel[c]){continue;}float sum = 0.0f;auto &distribution = mDistribution[c];std::for_each(distribution.begin(), distribution.end(), [&](float n) { sum += n; });std::for_each(distribution.begin(), distribution.end(), [sum](float &n) { n /= sum; });auto threshold = _computeThreshold(distribution);scaleValue[c] = ((float)threshold + 0.5) / mIntervals[c] / 127.0;}return scaleValue;}

int TensorStatistic::_computeThreshold(const std::vector<float> &distribution){// 128即int8的一半,全为正数const int targetBinNums = 128;int threshold = targetBinNums;if (mThresholdMethod == THRESHOLD_KL){float minKLDivergence = 10000.0f;float afterThresholdSum = 0.0f;std::for_each(distribution.begin() + targetBinNums, distribution.end(),[&](float n) { afterThresholdSum += n; });for (int i = targetBinNums; i < mBinNumber; ++i){std::vector<float> quantizedDistribution(targetBinNums);std::vector<float> candidateDistribution(i);std::vector<float> expandedDistribution(i);std::copy(distribution.begin(), distribution.begin() + i, candidateDistribution.begin());// 计算P分布candidateDistribution[i - 1] += afterThresholdSum;afterThresholdSum -= distribution[i];const float binInterval = (float)i / (float)targetBinNums;// merge i bins to target bins// 量化i个bins到int8的128个bins中for (int j = 0; j < targetBinNums; ++j){const float start = j * binInterval;const float end = start + binInterval;const int leftUpper = static_cast<int>(std::ceil(start));if (leftUpper > start){// 假设start 为 2.4,则添加0.6个左界分布到其中const float leftScale = leftUpper - start;quantizedDistribution[j] += leftScale * distribution[leftUpper - 1];}const int rightLower = static_cast<int>(std::floor(end));if (rightLower < end){// 假设start 为 2.4,则添加0.4个右界分布到其中const float rightScale = end - rightLower;quantizedDistribution[j] += rightScale * distribution[rightLower];}std::for_each(distribution.begin() + leftUpper, distribution.begin() + rightLower,[&](float n) { quantizedDistribution[j] += n; });}// expand target bins to i bins// 反量化int8到i个bins中for (int j = 0; j < targetBinNums; ++j){const float start = j * binInterval;const float end = start + binInterval;float count = 0;const int leftUpper = static_cast<int>(std::ceil(start));float leftScale = 0.0f;if (leftUpper > start){// 与之前同理,计算每个int8bin中是否包含左界的一部分,不统计原分布为0的binleftScale = leftUpper - start;if (distribution[leftUpper - 1] != 0){count += leftScale;}}const int rightLower = static_cast<int>(std::floor(end));float rightScale = 0.0f;if (rightLower < end){// 计算每个int8bin中是否包含右界的一部分,不统计原分布为0的binrightScale = end - rightLower;if (distribution[rightLower] != 0){count += rightScale;}}std::for_each(distribution.begin() + leftUpper, distribution.begin() + rightLower, [&](float n) {if (n != 0){count += 1;}});if (count == 0){continue;}// 求均值const float toExpandValue = quantizedDistribution[j] / count;if (leftUpper > start && distribution[leftUpper - 1] != 0){// 左界赋值expandedDistribution[leftUpper - 1] += toExpandValue * leftScale;}if (rightLower < end && distribution[rightLower] != 0){// 右界赋值expandedDistribution[rightLower] += toExpandValue * rightScale;}for (int k = leftUpper; k < rightLower; ++k){// 中间段不为0的bin赋值if (distribution[k] != 0){expandedDistribution[k] += toExpandValue;}}}// 计算kl散度const float curKL = _klDivergence(candidateDistribution, expandedDistribution);// std::cout << "=====> KL: " << i << " ==> " << curKL << std::endl;if (curKL < minKLDivergence){minKLDivergence = curKL;threshold = i;}}}else if (mThresholdMethod == THRESHOLD_MAX){threshold = mBinNumber - 1;}else{// TODO, support other methodMNN_ASSERT(false);}return threshold;}

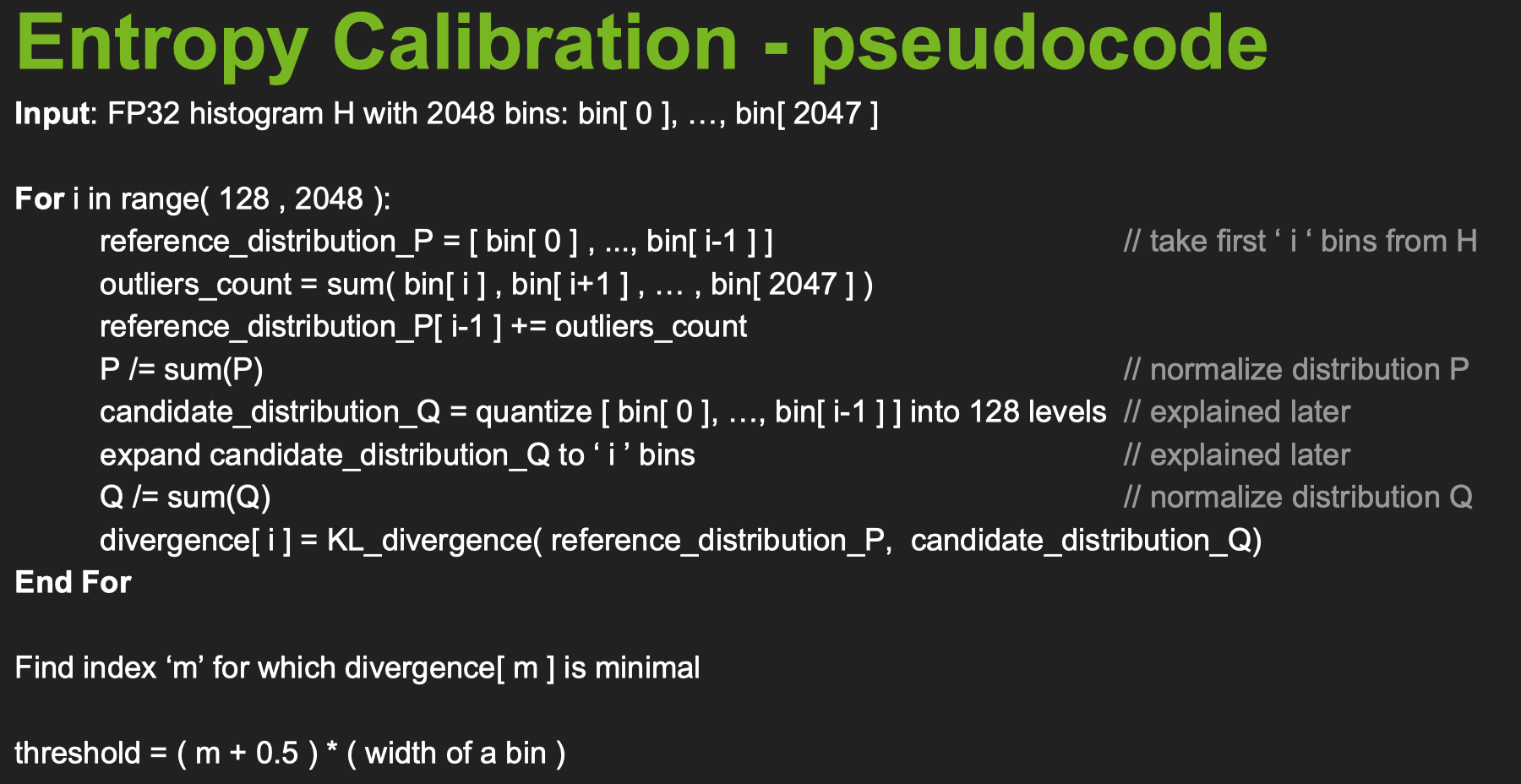

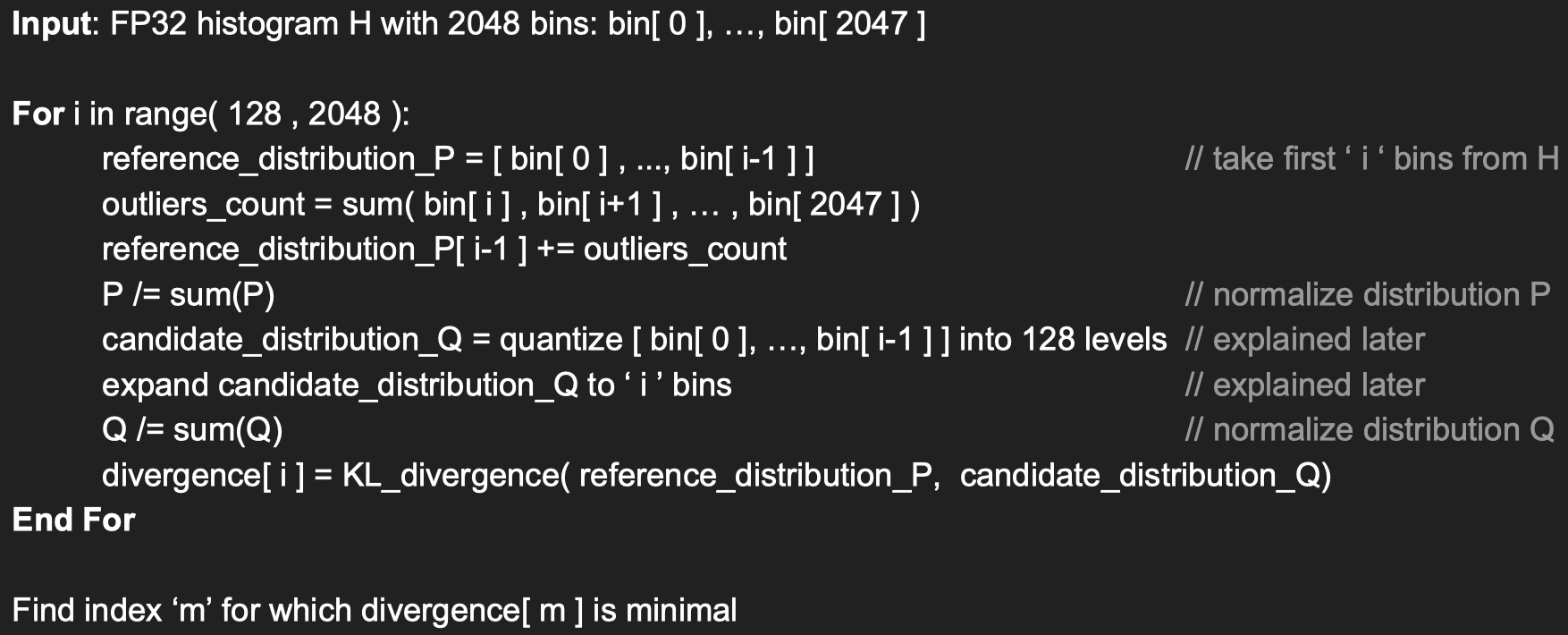

下图为上述代码的伪代码:

P为原始分布将阈值外的分布全部加到最右侧,如果该阈值不错,则P分布与i个bin之前的分布之间KL散度相差不大。

Q为i个bin之前的分布进行量化再反量化后生成的分布,计算P、Q分布的KL散度相当于模拟了量化误差与阈值选取误差的和。

void Calibration::_updateScale() {for (const auto& op : _originaleModel->oplists) {const auto opType = op->type;// 如果不为量化op,忽略if (opType != MNN::OpType_Convolution && opType != MNN::OpType_ConvolutionDepthwise &&opType != MNN::OpType_Eltwise) {continue;}auto tensorsPair = _opInfo.find(op->name);if (tensorsPair == _opInfo.end()) {MNN_ERROR("Can't find tensors for %s\n", op->name.c_str());}if (opType == MNN::OpType_Eltwise) {auto param = op->main.AsEltwise();// Now only support AddInt8 只支持EltwiseType_SUMif (param->type != MNN::EltwiseType_SUM) {continue;}// 记录scale值const auto& inputScale0 = _scales[tensorsPair->second.first[0]];const auto& inputScale1 = _scales[tensorsPair->second.first[1]];const auto& outputScale = _scales[tensorsPair->second.second[0]];const int outputScaleSize = outputScale.size();std::vector<float> outputInvertScale(outputScaleSize);Helper::invertData(outputInvertScale.data(), outputScale.data(), outputScaleSize);op->type = MNN::OpType_EltwiseInt8;op->main.Reset();op->main.type = MNN::OpParameter_EltwiseInt8;auto eltwiseInt8Param = new MNN::EltwiseInt8T;auto input0ScaleParam = new MNN::QuantizedFloatParamT;auto input1ScaleParam = new MNN::QuantizedFloatParamT;auto outputScaleParam = new MNN::QuantizedFloatParamT;input0ScaleParam->tensorScale = inputScale0;input1ScaleParam->tensorScale = inputScale1;outputScaleParam->tensorScale = outputInvertScale;eltwiseInt8Param->inputQuan0 = std::unique_ptr<MNN::QuantizedFloatParamT>(input0ScaleParam);eltwiseInt8Param->inputQuan1 = std::unique_ptr<MNN::QuantizedFloatParamT>(input1ScaleParam);eltwiseInt8Param->outputQuan = std::unique_ptr<MNN::QuantizedFloatParamT>(outputScaleParam);op->main.value = eltwiseInt8Param;continue;}// below is Conv/DepthwiseConvconst auto& inputScale = _scales[tensorsPair->second.first[0]];const auto& outputScale = _scales[tensorsPair->second.second[0]];auto param = op->main.AsConvolution2D();param->common->inputCount = tensorsPair->second.first[0]->channel();const int channles = param->common->outputCount;const int weightSize = param->weight.size();param->symmetricQuan.reset(new MNN::QuantizedFloatParamT);auto& quantizedParam = param->symmetricQuan;quantizedParam->scale.resize(channles);quantizedParam->weight.resize(weightSize);quantizedParam->bias.resize(channles);// 针对两种卷积采用不同的量化方式if (opType == MNN::OpType_Convolution) {QuantizeConvPerChannel(param->weight.data(), param->weight.size(), param->bias.data(),quantizedParam->weight.data(), quantizedParam->bias.data(),quantizedParam->scale.data(), inputScale, outputScale, _weightQuantizeMethod);op->type = MNN::OpType_ConvInt8;} else if (opType == MNN::OpType_ConvolutionDepthwise) {QuantizeDepthwiseConv(param->weight.data(), param->weight.size(), param->bias.data(),quantizedParam->weight.data(), quantizedParam->bias.data(),quantizedParam->scale.data(), inputScale, outputScale, _weightQuantizeMethod);op->type = MNN::OpType_DepthwiseConvInt8;}// 取消relu6if (param->common->relu6) {param->common->relu = true;param->common->relu6 = false;}param->weight.clear();param->bias.clear();}}

void Calibration::_insertDequantize() {// Search All Int Tensorsstd::set<int> int8Tensors; // 统计被量化的tensorstd::set<int> int8Outputs; // 统计被量化的且为最终输出的tensorfor (auto& op : _originaleModel->oplists) {if (Helper::INT8SUPPORTED_OPS.count(op->type) > 0) {for (auto index : op->inputIndexes) {int8Tensors.insert(index);}for (auto index : op->outputIndexes) {int8Tensors.insert(index);int8Outputs.insert(index);}}}for (auto& op : _originaleModel->oplists) {for (auto index : op->inputIndexes) {auto iter = int8Outputs.find(index);if (iter != int8Outputs.end()) {int8Outputs.erase(iter);}}}// Insert Convert For Not Support Int8 Ops// 对不支持量化的op进行处理for (auto iter = _originaleModel->oplists.begin(); iter != _originaleModel->oplists.end();) {auto op = iter->get();const auto opType = op->type;const auto name = op->name;// check whether is output op// if Yes, insert dequantization op after this opif (Helper::INT8SUPPORTED_OPS.find(opType) != Helper::INT8SUPPORTED_OPS.end()) {// this is quantized opiter++;continue;}auto& inputIndexes = op->inputIndexes;const int inputSize = inputIndexes.size();// insert dequantization op before this op// 如果该算子的输入为int8,插入反量化opfor (int i = 0; i < inputSize; ++i) {const auto curInputIndex = inputIndexes[i];if (int8Tensors.find(curInputIndex) == int8Tensors.end()) {continue;}auto input = _tensorMap[curInputIndex];auto inputOpScale = _scales[input];// construct new opauto dequantizationOp = new MNN::OpT;dequantizationOp->main.type = MNN::OpParameter_QuantizedFloatParam;dequantizationOp->name = "___Int8ToFloat___For_" + name + flatbuffers::NumToString(i);dequantizationOp->type = MNN::OpType_Int8ToFloat;auto dequantizationParam = new MNN::QuantizedFloatParamT;dequantizationOp->main.value = dequantizationParam;dequantizationParam->tensorScale = inputOpScale;dequantizationOp->inputIndexes.push_back(curInputIndex);dequantizationOp->outputIndexes.push_back(_originaleModel->tensorName.size());_originaleModel->tensorName.push_back(dequantizationOp->name);// reset current op's input index at iinputIndexes[i] = dequantizationOp->outputIndexes[0];iter = _originaleModel->oplists.insert(iter, std::unique_ptr<MNN::OpT>(dequantizationOp));iter++;}iter++;// LOG(INFO) << "insert quantization op after this op if neccessary";// insert quantization op after this op if neccessary// 如果该算子输出为int8,则插入量化opfor (int i = 0; i < op->outputIndexes.size(); ++i) {const auto outputIndex = op->outputIndexes[i];if (int8Tensors.find(outputIndex) == int8Tensors.end()) {continue;}auto output = _tensorMap[outputIndex];auto curScale = _scales[output];// construct one quantization op(FloatToInt8)auto quantizationOp = new MNN::OpT;quantizationOp->main.type = MNN::OpParameter_QuantizedFloatParam;quantizationOp->name = name + "___FloatToInt8___" + flatbuffers::NumToString(i);quantizationOp->type = MNN::OpType_FloatToInt8;auto quantizationParam = new MNN::QuantizedFloatParamT;quantizationOp->main.value = quantizationParam;const int channels = curScale.size();std::vector<float> quantizationScale(channels);Helper::invertData(quantizationScale.data(), curScale.data(), channels);quantizationParam->tensorScale = quantizationScale;quantizationOp->inputIndexes.push_back(_originaleModel->tensorName.size());quantizationOp->outputIndexes.push_back(outputIndex);_originaleModel->tensorName.push_back(_originaleModel->tensorName[outputIndex]);_originaleModel->tensorName[outputIndex] = quantizationOp->name;op->outputIndexes[i] = quantizationOp->inputIndexes[0];iter = _originaleModel->oplists.insert(iter, std::unique_ptr<MNN::OpT>(quantizationOp));iter++;}}// Insert Turn float Op for output// 对输入算子之后插入反量化opfor (auto index : int8Outputs) {// construct new opauto dequantizationOp = new MNN::OpT;dequantizationOp->main.type = MNN::OpParameter_QuantizedFloatParam;dequantizationOp->name = "___Int8ToFloat___For_" + flatbuffers::NumToString(index);dequantizationOp->type = MNN::OpType_Int8ToFloat;auto dequantizationParam = new MNN::QuantizedFloatParamT;dequantizationOp->main.value = dequantizationParam;dequantizationParam->tensorScale = _scales[_tensorMap[index]];dequantizationOp->inputIndexes.push_back(index);dequantizationOp->outputIndexes.push_back(_originaleModel->tensorName.size());auto originTensorName = _originaleModel->tensorName[index];_originaleModel->tensorName[index] = dequantizationOp->name;_originaleModel->tensorName.emplace_back(originTensorName);_originaleModel->oplists.insert(_originaleModel->oplists.end(), std::unique_ptr<MNN::OpT>(dequantizationOp));}}