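- Kubeadm HA Deployment
- Binary HA Deployment
  - 1. Environment architecture
  - 2. OS initialization
  - 3. Install Docker
  - 4. Set up the etcd cluster
  - 5. Deploy HAProxy and Keepalived
  - 6. Prepare the Kubernetes certificates
  - 7. Deploy the Master node components
  - 8. Deploy the Worker node components
  - 9. Deploy the remaining nodes
  - 10. Deploy the network plugin
  - 11. Deploy CoreDNS
  - 12. Deploy Metrics Server
  - 13. Verify the cluster
    - Run a busybox container
    - Resolve kubernetes
    - Resolve kube-dns.kube-system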

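Kubeadm HA Deployment

A highly available Kubernetes deployment comes down to two things: high availability of access to the apiServer, and high availability of the data in the etcd cluster. apiServer HA is achieved by running several Master nodes and putting a load balancer in front of them. etcd HA is achieved by running etcd as a multi-member cluster (three members here).

To save machines, the load balancer and the etcd cluster are co-located on the Kubernetes Master nodes here. In production, running these services on dedicated servers is still recommended.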

1. Environment architecture

1.1 Role assignment

| Hostname | IP | Roles |
|---|---|---|
| k8s-master01 | 10.0.0.10 | K8s-master01, Keepalived, Haproxy, Etcd-node01 |
| k8s-master02 | 10.0.0.20 | K8s-master02, Keepalived, Haproxy, Etcd-node02 |
| k8s-master03 | 10.0.0.30 | K8s-master03, Keepalived, Haproxy, Etcd-node03 |
| k8s-node01 | 10.0.0.101 | K8s-node01 |
| k8s-node02 | 10.0.0.102 | K8s-node02 |
| Virtual IP | 10.0.0.200 | Provided by Keepalived |

1.2 Topology

2. OS initialization

2.1 Disable the firewall

```bash
systemctl disable --now firewalld
```

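2.2 Disable SELinux

```bash
# Switch to permissive mode now, and disable SELinux permanently from the next boot
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config
```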

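2.3 Disable swap

```bash
# Turn swap off now and tell the kernel not to prefer swapping
swapoff -a && sysctl -w vm.swappiness=0
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab
```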
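By default kubelet refuses to start while swap is enabled, so it can be worth confirming that the commands above took effect. The following is a minimal sketch. `swap_is_off` is a hypothetical helper name. It assumes the usual `/proc/swaps` layout: one header line, then one line per active swap device.

```bash
# Hypothetical helper: succeed (exit 0) when no swap device is active.
# /proc/swaps always starts with a header line; every further line is an active swap device.
# The optional argument lets the check read a saved copy of the file instead.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(wc -l < "$swaps_file")" -le 1 ]
}
```

On a node, `swap_is_off && echo "swap is off"` should print the message once `swapoff -a` has run.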

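2.4 Set the hostname and /etc/hosts

```bash
# Run on every node, replacing <hostname> with that node's name (e.g. k8s-master01)
hostnamectl set-hostname <hostname>
cat >> /etc/hosts << EOF
10.0.0.10 k8s-master01
10.0.0.20 k8s-master02
10.0.0.30 k8s-master03
10.0.0.101 k8s-node01
10.0.0.102 k8s-node02
10.0.0.200 k8s-lb
EOF
```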
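The `cat >> /etc/hosts` step appends unconditionally, so running it twice leaves duplicate lines. If you script node initialization, an idempotent variant like the sketch below may help. `add_host_entry` is a hypothetical helper and is not part of any tool.

```bash
# Hypothetical helper: append "IP NAME" to FILE only if FILE does not already map IP to NAME
# (IP in column 1, NAME in any later column), so re-running it adds no duplicates.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}
```

For example, `add_host_entry /etc/hosts 10.0.0.200 k8s-lb` can be repeated safely.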

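2.5 Time synchronization

```bash
# Set the system time from the hardware clock
hwclock -s
# Set the time zone
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
echo "Asia/Shanghai" > /etc/timezone
# Install ntpdate if it is missing
which ntpdate &>/dev/null || yum -y install ntp
# Synchronize the time once
ntpdate ntp.aliyun.com
# Add a daily cron job at a random minute and hour to keep the time in sync
echo "$((RANDOM%60)) $((RANDOM%24)) * * * /usr/sbin/ntpdate time1.aliyun.com" >> /var/spool/cron/root
```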

2.6 Configure resource limits

```bash
ulimit -SHn 65535
# Note: the * wildcard is not supported on Ubuntu
cat >> /etc/security/limits.conf << EOF
* soft nofile 65536
* hard nofile 131072
* soft nproc 65535
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
* soft core unlimited
* hard core unlimited
EOF
```

2.7 Passwordless SSH login (optional)

```bash
# Run the following on k8s-master01, from root's home directory
ssh-keygen
ssh-copy-id 127.0.0.1
scp -r .ssh k8s-master02:/root/
scp -r .ssh k8s-master03:/root/
scp -r .ssh k8s-node01:/root/
scp -r .ssh k8s-node02:/root/
```

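2.8 Upgrade the kernel

CentOS 7 needs its kernel upgraded to 4.18 or later. Avoid a very new kernel, though, because very recent kernels may fail to boot on older hardware.

The 5.4 series is used here, downloaded and installed by hand.

CentOS 7 kernel packages (ELRepo): https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

```bash
# Update packages (excluding the kernel) and reboot
yum update -y --exclude='kernel*' && reboot
# Download the kernel (downloads are slow; fetching the packages in advance is recommended)
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.180-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.180-1.el7.elrepo.x86_64.rpm
# Install the kernel manually
yum localinstall -y kernel-lt*
# Make the new kernel the default boot entry (BIOS path; UEFI systems use /boot/efi/EFI/centos/grub.cfg)
grub2-set-default 0 && grub2-mkconfig -o /boot/grub2/grub.cfg
grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"
# Show the default kernel
grubby --default-kernel
# Reboot
reboot
# Check the running kernel version
uname -a
```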
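To check whether a node already meets the 4.18+ requirement, you can compare the running version with GNU `sort -V`. The sketch below uses hypothetical helper names (`version_ge`, `kernel_ok`) and assumes `uname -r` output of the form `5.4.180-1.el7.elrepo.x86_64`.

```bash
# Hypothetical helper: succeed if version $1 >= version $2, using GNU sort's version ordering.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Hypothetical helper: succeed if the running kernel is at least 4.18.
kernel_ok() {
  local running
  running="$(uname -r)"
  # Keep only the numeric prefix, e.g. 5.4.180-1.el7.elrepo.x86_64 -> 5.4.180
  running="${running%%-*}"
  version_ge "$running" "4.18"
}
```

After the reboot, `kernel_ok || echo "kernel too old: $(uname -r)"` prints nothing if the upgrade worked.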

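2.9 Install IPVS and load the kernel modules

Since kernel 4.19, nf_conntrack_ipv4 has been merged into nf_conntrack. The ip_vs_fo scheduler only exists in kernel 4.15 and later, so loading it fails on a kernel that has not been upgraded.

```bash
# Install the tools
yum install -y ipvsadm ipset sysstat conntrack libseccomp
# Load these modules at boot (br_netfilter is needed for the net.bridge.* sysctls in the next step)
cat > /etc/modules-load.d/ipvs.conf << EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
br_netfilter
EOF
# Enable the boot-time module loading service (the modules take effect after the reboot in 2.10)
systemctl enable --now systemd-modules-load.service
```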
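After the reboot, `lsmod` shows which of these modules are actually loaded. The sketch below uses a hypothetical helper, `missing_modules`, that lists entries from the modules-load.d file that do not appear in `lsmod`. Treat its output as a hint only. Built-in modules never show up in `lsmod`, and alias names such as `ipt_set` are listed under the real module name.

```bash
# Hypothetical helper: print each module listed in a modules-load.d file that does not
# appear in `lsmod` output. $1 = conf file, $2 = optional file holding saved lsmod output.
missing_modules() {
  local conf="${1:-/etc/modules-load.d/ipvs.conf}" loaded mod
  if [ -n "${2:-}" ]; then
    loaded="$(cat "$2")"
  else
    loaded="$(lsmod)"
  fi
  # Drop blank lines and comments, then look each name up in column 1 (skipping the header).
  grep -Ev '^[[:space:]]*(#.*)?$' "$conf" | while read -r mod; do
    printf '%s\n' "$loaded" \
      | awk -v m="$mod" 'NR > 1 && $1 == m { found = 1 } END { exit !found }' \
      || echo "$mod"
  done
}
```

On a node, `missing_modules` with no arguments checks `/etc/modules-load.d/ipvs.conf` against the live `lsmod`.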

2.10 Tune kernel parameters

```bash
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
sysctl --system
# Reboot
reboot
```
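A sysctl file assembled from several sources can easily end up setting the same key twice. The last assignment wins, so duplicates cause confusion rather than errors. The sketch below uses a hypothetical helper, `sysctl_dup_keys`, to report them.

```bash
# Hypothetical helper: print every key assigned more than once in a sysctl.d file.
sysctl_dup_keys() {
  awk -F= '
    /^[ \t]*$/ || /^[ \t]*[#;]/ { next }      # skip blank lines and comments
    {
      key = $1
      gsub(/[ \t]/, "", key)                   # strip spaces around the key
      count[key]++
    }
    END { for (k in count) if (count[k] > 1) print k }
  ' "$1" | sort
}
```

`sysctl_dup_keys /etc/sysctl.d/k8s.conf` should print nothing. After the reboot, `sysctl net.bridge.bridge-nf-call-iptables` should report `= 1`.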

3. Install Docker

```bash
# Add the Docker repo
wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# List available versions
yum list --showduplicates docker-ce
# Install
yum -y install docker-ce
# (Recommended) mount a dedicated disk at /var/lib/docker
# Configure registry mirrors and let systemd manage cgroups
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "data-root": "/var/lib/docker",
  "storage-driver": "overlay2",
  "registry-mirrors": [
    "https://mirror.ccs.tencentyun.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://registry.docker-cn.com"
  ]
}
EOF
# Start Docker
systemctl daemon-reload
systemctl enable --now docker
```

4. Install kubeadm, kubelet and kubectl

```bash
# Add the Aliyun Kubernetes repo
cat > /etc/yum.repos.d/kubernetes.repo << EOF
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF
# List available versions
yum list --showduplicates kubeadm
# Install
yum -y install 'kubeadm-1.21*' 'kubelet-1.21*' 'kubectl-1.21*'
# Make kubelet use the pause image from the Aliyun registry
cat > /etc/sysconfig/kubelet << EOF
KUBELET_EXTRA_ARGS="--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5"
EOF
# Start kubelet
systemctl daemon-reload
systemctl enable --now kubelet
```

5. Deploy HAProxy

5.1 Install

```bash
yum -y install haproxy
```

5.2 Edit the configuration file

Note: the configuration file is identical on all Master nodes. Edit `/etc/haproxy/haproxy.cfg`:

```bash
global
    daemon
    log 127.0.0.1 local2 err       # global rsyslog target
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 20000                  # max concurrent connections accepted per haproxy process
    user haproxy
    group haproxy
    stats socket /var/lib/haproxy/stats
    spread-checks 2                # randomly shift health-check timing by this percentage; 2-5 is recommended

defaults
    log global
    mode http                      # layer-7 proxy by default
    option httplog                 # log HTTP requests, session information, etc.
    option dontlognull             # do not log null connections
    option redispatch              # if the server a client is pinned to (e.g. by cookie) goes down, send the request to another healthy server
    timeout connect 10s            # max time to establish a connection to a backend; on a LAN 1s is usually enough
    timeout client 3m              # idle timeout on the client side; can be shorter (e.g. 10s) under high concurrency to free connections sooner
    timeout server 1m              # idle timeout on the server side; keep it short on a LAN, e.g. 1-3s under high concurrency
    timeout http-request 15s       # max time for a client to send a complete request; keep it short to resist floods; takes precedence over timeout client
    timeout http-keep-alive 15s    # max time to keep an HTTP keep-alive connection open; takes precedence over timeout client

frontend monitor-in
    bind *:33305
    mode http
    option httplog
    monitor-uri /monitor

frontend k8s-master
    bind 0.0.0.0:16443
    bind 127.0.0.1:16443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    default_backend k8s-master

backend k8s-master
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    server k8s-master01 10.0.0.10:6443 check
    server k8s-master02 10.0.0.20:6443 check
    server k8s-master03 10.0.0.30:6443 check
```
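Once HAProxy is running, you can check its listeners before Keepalived and kubeadm depend on them. `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the file itself. For the listeners, here is a sketch that uses bash's `/dev/tcp`. `port_open` is a hypothetical helper, and it needs bash (not sh) and coreutils `timeout`.

```bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT opens within 2 seconds.
# Relies on bash's /dev/tcp pseudo-device; errors (e.g. connection refused) are silenced.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}
```

On a Master node, `for p in 16443 33305; do port_open 127.0.0.1 "$p" && echo "$p open" || echo "$p closed"; done` should report both ports as open. `curl http://127.0.0.1:33305/monitor` should return HTTP 200 from the monitor frontend. The backends stay down until the apiservers are running.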

5.3 Start the service

```bash
systemctl enable --now haproxy.service
```

6. Deploy Keepalived

6.1 Build and install from source

```bash
# Install build dependencies
yum install -y gcc openssl-devel libnl3-devel net-snmp-devel
# Download
wget https://www.keepalived.org/software/keepalived-2.2.7.tar.gz
# Extract
tar xvf keepalived-2.2.7.tar.gz -C /usr/local/src
# Build and install
cd /usr/local/src/keepalived-2.2.7/
./configure --prefix=/usr/local/keepalived
make && make install
# Create the /etc/keepalived directory
mkdir -p /etc/keepalived
```

6.2 Edit the configuration file

```bash
vim /etc/keepalived/keepalived.conf
```

Note: the configuration differs slightly on each of the three Master nodes.

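- k8s-master01 configuration:

```bash
global_defs {
    script_user root             # user that runs the check script
    router_id k8s-master01       # unique ID of this keepalived host; the hostname is a good choice
    vrrp_skip_check_adv_addr     # skip checking an advert that comes from the same router as the previous one (default: check every advert)
    enable_script_security       # do not run a script as root if any non-root user can write to it
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5                   # check interval (seconds)
    weight -10
    fall 2
    rise 1
    timeout 2                    # script timeout (seconds)
}

vrrp_instance VIP_Nginx {
    state MASTER
    interface eth0
    mcast_src_ip 10.0.0.10       # source address of multicast adverts; defaults to the interface's primary IP
    virtual_router_id 88         # virtual router ID; must be the same on every keepalived node of this virtual router
    priority 100                 # keepalived priority
    advert_int 2
    authentication {             # simple password authentication
        auth_type PASS
        auth_pass K8S_AUTH       # must be identical on all nodes; only the first 8 characters are used
    }
    virtual_ipaddress {
        10.0.0.200               # virtual IP
    }
    track_script {               # health check
        check_apiserver
    }
}
```

- k8s-master02 configuration:

```bash
global_defs {
    script_user root             # user that runs the check script
    router_id k8s-master02       # unique ID of this keepalived host; the hostname is a good choice
    vrrp_skip_check_adv_addr     # skip checking an advert that comes from the same router as the previous one (default: check every advert)
    enable_script_security       # do not run a script as root if any non-root user can write to it
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5                   # check interval (seconds)
    weight -10
    fall 2
    rise 1
    timeout 2                    # script timeout (seconds)
}

vrrp_instance VIP_Nginx {
    state BACKUP
    interface eth0
    mcast_src_ip 10.0.0.20       # source address of multicast adverts; defaults to the interface's primary IP
    virtual_router_id 88         # virtual router ID; must be the same on every keepalived node of this virtual router
    priority 95                  # keepalived priority
    advert_int 2
    authentication {             # simple password authentication
        auth_type PASS
        auth_pass K8S_AUTH       # must be identical on all nodes; only the first 8 characters are used
    }
    virtual_ipaddress {
        10.0.0.200               # virtual IP
    }
    track_script {               # health check
        check_apiserver
    }
}
```

- k8s-master03 configuration:

```bash
global_defs {
    script_user root             # user that runs the check script
    router_id k8s-master03       # unique ID of this keepalived host; the hostname is a good choice
    vrrp_skip_check_adv_addr     # skip checking an advert that comes from the same router as the previous one (default: check every advert)
    enable_script_security       # do not run a script as root if any non-root user can write to it
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5                   # check interval (seconds)
    weight -10
    fall 2
    rise 1
    timeout 2                    # script timeout (seconds)
}

vrrp_instance VIP_Nginx {
    state BACKUP
    interface eth0
    mcast_src_ip 10.0.0.30       # source address of multicast adverts; defaults to the interface's primary IP
    virtual_router_id 88         # virtual router ID; must be the same on every keepalived node of this virtual router
    priority 95                  # keepalived priority
    advert_int 2
    authentication {             # simple password authentication
        auth_type PASS
        auth_pass K8S_AUTH       # must be identical on all nodes; only the first 8 characters are used
    }
    virtual_ipaddress {
        10.0.0.200               # virtual IP
    }
    track_script {               # health check
        check_apiserver
    }
}
```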

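**Finally, add the apiserver health-check script on every Master node** (`vim /etc/keepalived/check_apiserver.sh`). Despite its name, the script checks whether an haproxy process is running, trying up to three times. If it is not running, the script stops keepalived so that the VIP moves to another node:

```bash
#!/bin/bash
err=0
for k in $(seq 1 3); do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$((err + 1))
        sleep 2
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
```

Make it executable:

```bash
chmod +x /etc/keepalived/check_apiserver.sh
```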
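The three keepalived.conf files differ only in `router_id`, `state`, `mcast_src_ip` and `priority`. You can generate them from one template to avoid copy-paste mismatches. The sketch below assumes interface `eth0` and a shared password of `K8S_AUTH`. keepalived uses only the first 8 characters of `auth_pass`, and the value must be identical on every node. `render_keepalived_conf` is a hypothetical helper.

```bash
# Hypothetical generator: print keepalived.conf for one Master node.
# Arguments: router_id, multicast source IP, VRRP state (MASTER/BACKUP), priority.
# Everything else (virtual_router_id, auth_pass, VIP) is shared by all nodes.
render_keepalived_conf() {
  local name="$1" ip="$2" state="$3" priority="$4"
  cat <<EOF
global_defs {
    script_user root
    router_id ${name}
    vrrp_skip_check_adv_addr
    enable_script_security
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -10
    fall 2
    rise 1
    timeout 2
}

vrrp_instance VIP_Nginx {
    state ${state}
    interface eth0
    mcast_src_ip ${ip}
    virtual_router_id 88
    priority ${priority}
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8S_AUTH
    }
    virtual_ipaddress {
        10.0.0.200
    }
    track_script {
        check_apiserver
    }
}
EOF
}
```

Usage, one line per node:

- k8s-master01: `render_keepalived_conf k8s-master01 10.0.0.10 MASTER 100 > /etc/keepalived/keepalived.conf`
- k8s-master02: `render_keepalived_conf k8s-master02 10.0.0.20 BACKUP 95 > /etc/keepalived/keepalived.conf`
- k8s-master03: `render_keepalived_conf k8s-master03 10.0.0.30 BACKUP 95 > /etc/keepalived/keepalived.conf`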

6.3 Start Keepalived

```bash
systemctl enable --now keepalived
```

7. Set up the etcd cluster

7.1 Prepare the cfssl certificate tools

```bash
# Download the tools
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
# Make them executable
chmod +x cfssl*
# Move and rename
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
```

7.2 Generate the etcd certificates

Create the certificate directory:

```bash
# On k8s-master01
mkdir -p ~/tls/etcd
```

7.2.1 Generate a self-signed CA

```bash
# Change directory
cd ~/tls/etcd
# Create the CA signing configuration
cat > etcd-ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
# Create the CA certificate signing request
cat > etcd-ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "etcd",
      "OU": "Etcd Security"
    }
  ]
}
EOF
# Generate the CA certificate and key
cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca -
```

7.2.2 Generate the etcd server certificate

```bash
# Create the certificate signing request
# The hosts field lists the cluster-internal IPs of all etcd nodes; add a few spare IPs to make future scale-out easier
cat > etcd-server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "k8s-master01",
    "k8s-master02",
    "k8s-master03",
    "10.0.0.10",
    "10.0.0.20",
    "10.0.0.30",
    "10.0.0.40"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=etcd-ca-config.json -profile=www etcd-server-csr.json | cfssljson -bare ./etcd-server
```

7.3 Deploy the etcd cluster

7.3.1 Download and lay out the files

```bash
# Download
wget https://github.com/etcd-io/etcd/releases/download/v3.4.18/etcd-v3.4.18-linux-amd64.tar.gz
# Extract
tar xvf etcd-v* -C /opt/
# Rename
mv /opt/etcd-v* /opt/etcd
# Create directories
mkdir -p /opt/etcd/{bin,conf,certs}
# Move the binaries
mv /opt/etcd/etcd* /opt/etcd/bin/
# Change ownership
chown -R root:root /opt/etcd/
```

7.3.2 Create the etcd configuration file

```bash
# Create the configuration file
cat > /opt/etcd/conf/etcd.conf << EOF
#[Member]
ETCD_NAME="etcd-1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.0.0.10:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.0.0.10:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.10:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.10:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://10.0.0.10:2380,etcd-2=https://10.0.0.20:2380,etcd-3=https://10.0.0.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
```

- ETCD_NAME: node name, unique within the cluster
- ETCD_DATA_DIR: data directory
- ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic
- ETCD_LISTEN_CLIENT_URLS: listen address for client access
- ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster
- ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients
- ETCD_INITIAL_CLUSTER: addresses of all cluster members
- ETCD_INITIAL_CLUSTER_TOKEN: cluster token
- ETCD_INITIAL_CLUSTER_STATE: state when joining; `new` for a new cluster, `existing` to join an existing one

7.3.3 Create the systemd service file

```bash
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
EnvironmentFile=/opt/etcd/conf/etcd.conf
ExecStart=/opt/etcd/bin/etcd \
  --cert-file=/opt/etcd/certs/etcd-server.pem \
  --key-file=/opt/etcd/certs/etcd-server-key.pem \
  --peer-cert-file=/opt/etcd/certs/etcd-server.pem \
  --peer-key-file=/opt/etcd/certs/etcd-server-key.pem \
  --trusted-ca-file=/opt/etcd/certs/etcd-ca.pem \
  --peer-trusted-ca-file=/opt/etcd/certs/etcd-ca.pem \
  --logger=zap
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

7.3.4 Copy the certificates into place

```bash
cp ~/tls/etcd/*.pem /opt/etcd/certs/
```

7.3.5 Configure the other nodes

```bash
# Copy the etcd directory and the service file to the other nodes
scp -r /opt/etcd/ root@k8s-master02:/opt/
scp -r /opt/etcd/ root@k8s-master03:/opt/
scp /usr/lib/systemd/system/etcd.service root@k8s-master02:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/etcd.service root@k8s-master03:/usr/lib/systemd/system/
```

After copying, edit the node name and IPs in the configuration file with `vim /opt/etcd/conf/etcd.conf`. On k8s-master02, for example, `ETCD_NAME` becomes `etcd-2` and the four URL lines use `10.0.0.20`. On k8s-master03 they become `etcd-3` and `10.0.0.30`:

```bash
#[Member]
ETCD_NAME="etcd-2"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://10.0.0.20:2380"
ETCD_LISTEN_CLIENT_URLS="https://10.0.0.20:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://10.0.0.20:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://10.0.0.20:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://10.0.0.10:2380,etcd-2=https://10.0.0.20:2380,etcd-3=https://10.0.0.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
```
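Editing etcd.conf by hand on every node is error-prone, because only `ETCD_NAME` and the node's own IP change. The sketch below is a hypothetical generator, `render_etcd_conf`. It assumes the three-node layout used in this section and produces each node's file.

```bash
# Hypothetical generator for /opt/etcd/conf/etcd.conf.
# Arguments: member name (etcd-1/2/3) and that node's IP; the cluster list is shared.
render_etcd_conf() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd-1=https://10.0.0.10:2380,etcd-2=https://10.0.0.20:2380,etcd-3=https://10.0.0.30:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}
```

From k8s-master01, for example: `render_etcd_conf etcd-2 10.0.0.20 | ssh root@k8s-master02 'cat > /opt/etcd/conf/etcd.conf'`.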

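7.3.6 Reload systemd and start etcd

```bash
# Run on all three nodes at about the same time: with Type=notify, systemctl on the
# first node blocks until another member is up and the cluster can form
systemctl daemon-reload
systemctl enable --now etcd
```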

7.3.7 Check the cluster status

```bash
ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
  --cacert=/opt/etcd/certs/etcd-ca.pem \
  --cert=/opt/etcd/certs/etcd-server.pem \
  --key=/opt/etcd/certs/etcd-server-key.pem \
  --endpoints="https://10.0.0.10:2379,https://10.0.0.20:2379,https://10.0.0.30:2379" \
  endpoint health \
  --write-out=table
```

Output like the table below means the cluster is up. If there is a problem, check the logs in `/var/log/messages` or with `journalctl -u etcd`.

```
+------------------------+--------+------------+-------+
|        ENDPOINT        | HEALTH |    TOOK    | ERROR |
+------------------------+--------+------------+-------+
| https://10.0.0.10:2379 |   true | 8.957952ms |       |
| https://10.0.0.30:2379 |   true | 9.993095ms |       |
| https://10.0.0.20:2379 |   true | 9.813129ms |       |
+------------------------+--------+------------+-------+
```
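Every `etcdctl` call needs the same TLS flags. A small wrapper saves typing. `etcd_endpoints` and `etcdctl_tls` are hypothetical names, and the paths and IPs are the ones used in this section.

```bash
# Hypothetical helper: turn node IPs into the comma-separated --endpoints value.
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out="${out:+${out},}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

# Hypothetical wrapper: run etcdctl (v3 API) with this cluster's TLS flags and endpoints.
etcdctl_tls() {
  ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
    --cacert=/opt/etcd/certs/etcd-ca.pem \
    --cert=/opt/etcd/certs/etcd-server.pem \
    --key=/opt/etcd/certs/etcd-server-key.pem \
    --endpoints="$(etcd_endpoints 10.0.0.10 10.0.0.20 10.0.0.30)" \
    "$@"
}
```

Usage:

- `etcdctl_tls endpoint health --write-out=table` repeats the check above.
- `etcdctl_tls member list --write-out=table` lists the cluster members.
- `etcdctl_tls endpoint status --write-out=table` shows which member is the leader.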

8. Initialize the cluster

Note: unless stated otherwise, the following steps run on k8s-master01 only.

A Kubernetes cluster can be initialized in two ways: by passing parameters directly on the command line, or by loading them from a configuration file. Many parameters need changing here, so this guide uses the configuration file.

`kubeadm config print init-defaults > initConfig.yaml` generates a template configuration file, which you can then edit as needed. The edited example looks like this:

```yaml
apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.10   # this host's IP
  bindPort: 6443                # apiServer port
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: k8s-master01            # hostname
  taints:                       # taints
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:   # SANs of the apiServer certificate: all Master/LB/VIP names and addresses; add spare IPs for future scale-out
  - k8s-master01
  - k8s-master02
  - k8s-master03
  - k8s-lb
  - 10.0.0.200
  - 10.0.0.222
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes                # cluster name
controlPlaneEndpoint: k8s-lb:16443     # control-plane endpoint (HAProxy frontend behind the VIP)
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:                            # use the external etcd cluster
    endpoints:                         # etcd members
    - https://10.0.0.10:2379
    - https://10.0.0.20:2379
    - https://10.0.0.30:2379
    caFile: /opt/etcd/certs/etcd-ca.pem
    certFile: /opt/etcd/certs/etcd-server.pem
    keyFile: /opt/etcd/certs/etcd-server-key.pem
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers   # image registry
kind: ClusterConfiguration
kubernetesVersion: v1.21.10            # Kubernetes version
networking:
  dnsDomain: cluster.local
  podSubnet: 172.16.0.0/12             # Pod subnet
  serviceSubnet: 192.168.0.0/16        # Service subnet
scheduler: {}
```

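8.1 Migrate initConfig.yaml to the current config format

```bash
kubeadm config migrate --old-config initConfig.yaml --new-config kubeadm-config.yaml
```

8.2 Initialize k8s-master01

Initialization generates the certificates and configuration files under /etc/kubernetes. The other Master nodes then simply join k8s-master01.

```bash
# (Optional) list and pre-pull the required images first
kubeadm config images list --config /root/kubeadm-config.yaml
kubeadm config images pull --config /root/kubeadm-config.yaml
# Initialize
kubeadm init --config /root/kubeadm-config.yaml --upload-certs
```

Note: if initialization fails, fix the cause, reset the node, and initialize again. Reset with:

```bash
kubeadm reset -f; ipvsadm --clear; rm -rf ~/.kube
```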

After a successful initialization, kubeadm prints the follow-up steps and the tokens for joining the cluster, as shown below:

```
Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join k8s-lb:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:c622312d44fbe54fe49ff0b19c72eacf163edaab49e3cb5d0104e15f68d1afc3 \
    --control-plane --certificate-key 7d996915da0ab463b0ccc5b76877565866d77a43c767753fa94171c73d5b1d47

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join k8s-lb:16443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:c622312d44fbe54fe49ff0b19c72eacf163edaab49e3cb5d0104e15f68d1afc3
```

8.3 Configure the kubeconfig file

Option 1:

```bash
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Option 2 (as root):

```bash
cat >> /root/.bashrc << EOF
export KUBECONFIG=/etc/kubernetes/admin.conf
EOF
source /root/.bashrc
```

8.4 Check the node status

```bash
[root@k8s-master01 ~]# kubectl get nodes
NAME           STATUS     ROLES                  AGE   VERSION
k8s-master01   NotReady   control-plane,master   12m   v1.21.10

[root@k8s-master01 ~]# kubectl get pod -n kube-system
NAME                                   READY   STATUS    RESTARTS   AGE
coredns-6f6b8cc4f6-wrbrp               0/1     Pending   0          18m
coredns-6f6b8cc4f6-z6nvb               0/1     Pending   0          18m
etcd-k8s-master01                      1/1     Running   0          19m
kube-apiserver-k8s-master01            1/1     Running   0          19m
kube-controller-manager-k8s-master01   1/1     Running   0          19m
kube-proxy-klc6q                       1/1     Running   0          18m
kube-scheduler-k8s-master01            1/1     Running   0          19m
```

At this point the node is NotReady and CoreDNS is Pending. This is expected, because no network plugin has been deployed yet.

8.5 Join the other Master nodes

On each of the other Master nodes, run the command from the init output:

```bash
kubeadm join k8s-lb:16443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:c622312d44fbe54fe49ff0b19c72eacf163edaab49e3cb5d0104e15f68d1afc3 \
  --control-plane --certificate-key 7d996915da0ab463b0ccc5b76877565866d77a43c767753fa94171c73d5b1d47
```

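Note: the token is valid for 24h. Once it has expired, generate a new join command with:

```bash
kubeadm token create --print-join-command
# Master nodes also need a new --certificate-key (uploaded certs are deleted after two hours)
kubeadm init phase upload-certs --upload-certs
```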
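`--print-join-command` already prints the CA hash. If you only have a token, you can recompute the `--discovery-token-ca-cert-hash` value from the cluster CA on a Master node with openssl, following the approach in the kubeadm join documentation. The sketch below uses a hypothetical helper, `ca_cert_hash`, and assumes an RSA CA key (kubeadm's default).

```bash
# Hypothetical helper: print the discovery-token-ca-cert-hash for a CA certificate,
# i.e. "sha256:" + SHA-256 of the DER-encoded public key.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}" digest
  digest="$(openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"
  printf 'sha256:%s\n' "$digest"
}
```

On k8s-master01, `ca_cert_hash` should print the same `sha256:...` value that appears in the original join command.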

8.6 Join the worker nodes

```bash
kubeadm join k8s-lb:16443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:c622312d44fbe54fe49ff0b19c72eacf163edaab49e3cb5d0104e15f68d1afc3
```

9. Deploy the network plugin

Calico and Flannel are the common network plugins. Deploy only one of them (Calico is recommended).

9.1 Calico

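Download the manifest:

```bash
wget https://docs.projectcalico.org/manifests/calico.yaml
```

After downloading, uncomment CALICO_IPV4POOL_CIDR, which defines the Pod network, and set it to the podSubnet used at initialization (172.16.0.0/12):

```bash
sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@#   value: "192.168.0.0/16"@  value: "172.16.0.0/12"@g' calico.yaml
# Verify the result
grep -A1 CALICO_IPV4POOL_CIDR calico.yaml
```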
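The Pod CIDR must also not overlap the Service CIDR or the node network. The sketch below checks this in pure bash. `ip_to_int` and `cidr_overlap` are hypothetical helpers and handle IPv4 only. The 10.0.0.0/24 node network in the usage line is an assumption based on the host IPs used here.

```bash
# Hypothetical helper: convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Hypothetical helper: succeed if two IPv4 CIDR blocks overlap.
# Two blocks overlap iff they agree on the leading bits of the shorter prefix.
cidr_overlap() {
  local net1="${1%/*}" len1="${1#*/}" net2="${2%/*}" len2="${2#*/}"
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask=$(( len == 0 ? 0 : (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$net1") & mask ) == ( $(ip_to_int "$net2") & mask ) ))
}
```

With this guide's values, neither `cidr_overlap 172.16.0.0/12 192.168.0.0/16 && echo "pod/service overlap"` nor `cidr_overlap 172.16.0.0/12 10.0.0.0/24 && echo "pod/node overlap"` should print anything.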

To speed up deployment, replace the images in the manifest with images hosted in China. Note: the images below are ones I prepared in advance. If deployment fails because of a version mismatch, substitute suitable images yourself.

```bash
sed -r -i '/calico\/cni:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-cni:v3.22.2#' calico.yaml
sed -r -i '/calico\/pod2daemon-flexvol:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-pod2daemon-flexvol:v3.22.2#' calico.yaml
sed -r -i '/calico\/node:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-node:v3.22.2#' calico.yaml
sed -r -i '/calico\/kube-controllers:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-kube-controllers:v3.22.2#' calico.yaml
```

Apply the manifest:

```bash
kubectl apply -f calico.yaml
# Check that the calico pods are running
kubectl get pod -n kube-system
```

Once all calico pods are running, check the nodes again. They are now Ready:

```bash
[root@k8s-master01 ~]# kubectl get nodes
NAME           STATUS   ROLES                  AGE   VERSION
k8s-master01   Ready    control-plane,master   23m   v1.21.10
k8s-master02   Ready    control-plane,master   35m   v1.21.10
k8s-master03   Ready    control-plane,master   37m   v1.21.10
k8s-node01     Ready    <none>                 45m   v1.21.10
k8s-node02     Ready    <none>                 47m   v1.21.10
```
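Instead of re-running `kubectl get nodes` by hand, a small polling loop can wait until every node is Ready. The sketch below uses two hypothetical helpers: `not_ready_nodes`, which parses `kubectl get nodes --no-headers` output, and `wait_for_nodes_ready`. A cordoned node (`Ready,SchedulingDisabled`) counts as Ready here.

```bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and print the
# names of nodes whose STATUS is neither "Ready" nor "Ready,<something>".
not_ready_nodes() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { print $1 }'
}

# Hypothetical helper: poll every 10s, up to $1 times (default 30), until all nodes are Ready.
wait_for_nodes_ready() {
  local tries="${1:-30}" nodes pending
  while (( tries-- > 0 )); do
    if nodes="$(kubectl get nodes --no-headers 2>/dev/null)"; then
      pending="$(printf '%s\n' "$nodes" | not_ready_nodes)"
      # Require a non-empty node list so an empty reply does not count as success
      [ -n "$nodes" ] && [ -z "$pending" ] && return 0
    fi
    sleep 10
  done
  echo "still not Ready: ${pending:-unknown}" >&2
  return 1
}
```

For example, `wait_for_nodes_ready 30 && echo "all nodes Ready"` waits up to about five minutes.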

9.2 Flannel

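Download the manifest. As with Calico, the `Network` value in the manifest's net-conf.json (10.244.0.0/16 by default) must match the podSubnet (172.16.0.0/12) before you apply it:

```bash
wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
sed -i 's#10.244.0.0/16#172.16.0.0/12#' kube-flannel.yml
kubectl apply -f kube-flannel.yml
```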

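10. Test the cluster

Create an Nginx Deployment and a NodePort Service, then open http://NodeIP:Port.

```bash
kubectl create deployment nginx --image=nginx
kubectl expose deployment nginx --port=80 --type=NodePort
kubectl get pod,svc -o wide
# Access the Pod directly
curl <pod-ip>:80
# Access through the NodePort shown in the PORT(S) column (e.g. 80:3xxxx/TCP)
curl <node-ip>:<node-port>
```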
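To build the URL for the NodePort test, you can read the port from the Service. `kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'` prints it directly. If you are working from plain `kubectl get svc --no-headers` output instead, the hypothetical helper below parses the PORT(S) column. It assumes the default column order (NAME, TYPE, CLUSTER-IP, EXTERNAL-IP, PORT(S), AGE) and a single port.

```bash
# Hypothetical helper: read `kubectl get svc NAME --no-headers` output on stdin and print the
# NodePort from the PORT(S) column, e.g. "80:31234/TCP" -> 31234.
nodeport_from_svc() {
  awk '{ split($5, p, "[:/]"); print p[2] }'
}
```

For example, `curl "http://10.0.0.101:$(kubectl get svc nginx --no-headers | nodeport_from_svc)"` should return the Nginx welcome page.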

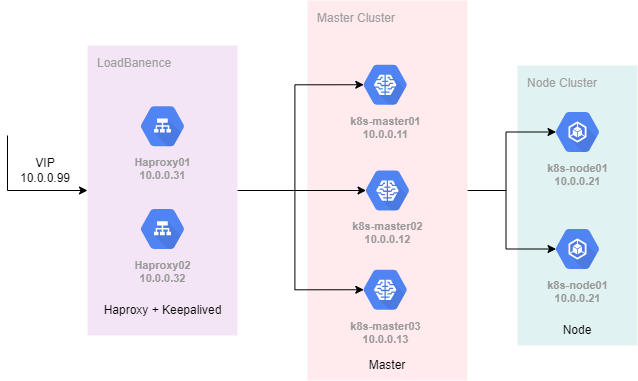

Binary HA Deployment

1. Environment architecture

1.1 Role assignment

| Hostname | IP | Role | Components |
|---|---|---|---|
| k8s-master01 | 10.0.0.11 | k8s master node | Kube-apiserver, Kube-controller-manager, Kube-scheduler, Etcd, Docker, Kubelet, Kubectl |
| k8s-master02 | 10.0.0.12 | k8s master node | Kube-apiserver, Kube-controller-manager, Kube-scheduler, Etcd, Docker, Kubelet, Kubectl |
| k8s-master03 | 10.0.0.13 | k8s master node | Kube-apiserver, Kube-controller-manager, Kube-scheduler, Etcd, Docker, Kubelet, Kubectl |
| k8s-node01 | 10.0.0.21 | k8s worker node | Docker, Kubelet, Kube-proxy |
| k8s-node02 | 10.0.0.22 | k8s worker node | Docker, Kubelet, Kube-proxy |
| Haproxy01 | 10.0.0.31 | Haproxy + Keepalived | Keepalived + Haproxy |
| Haproxy02 | 10.0.0.32 | Haproxy + Keepalived | Keepalived + Haproxy |
| k8s-master-lb | 10.0.0.99 | Virtual IP | Keepalived |

1.2 Topology

2. OS initialization

2.1 Disable the firewall

```bash
systemctl stop firewalld
systemctl disable firewalld
```

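2.2 Disable SELinux

```bash
# Switch to permissive mode now, and disable SELinux permanently from the next boot
setenforce 0
sed -i 's/enforcing/disabled/' /etc/selinux/config
```

2.3 Disable swap

```bash
# Turn swap off now and tell the kernel not to prefer swapping
swapoff -a && sysctl -w vm.swappiness=0
# Comment out the swap entries in /etc/fstab so swap stays off after a reboot
sed -ri 's/.*swap.*/#&/' /etc/fstab
```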

2.4 Edit /etc/hosts

```bash
cat >> /etc/hosts << EOF
10.0.0.11 k8s-master01
10.0.0.12 k8s-master02
10.0.0.13 k8s-master03
10.0.0.21 k8s-node01
10.0.0.22 k8s-node02
10.0.0.99 k8s-master-lb
EOF
```

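2.5 Time synchronization

```bash
# Set the system time from the hardware clock
hwclock -s
# Set the time zone
ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
echo "Asia/Shanghai" > /etc/timezone
# Install the chrony time service
yum install -y chrony
# Replace the default servers: delete the existing "iburst" server lines, then add new ones after line 2
sed -i '/iburst/d' /etc/chrony.conf
sed -i '2aserver ntp1.aliyun.com iburst\nserver ntp2.aliyun.com iburst\nserver time1.cloud.tencent.com iburst\nserver time2.cloud.tencent.com iburst' /etc/chrony.conf
# Start the service
systemctl enable --now chronyd
# Check that the new sources are in use
chronyc sources -v
```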

2.6 Configure resource limits

```bash
ulimit -SHn 65535
cat >> /etc/security/limits.conf << EOF
* soft nofile 65536
* hard nofile 131072
* soft nproc 65535
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
* soft core unlimited
* hard core unlimited
EOF
```

2.7 Passwordless SSH login (optional)

```bash
# Run the following on k8s-master01, from root's home directory
ssh-keygen
ssh-copy-id 127.0.0.1
scp -r .ssh k8s-master02:/root/
scp -r .ssh k8s-master03:/root/
scp -r .ssh k8s-node01:/root/
scp -r .ssh k8s-node02:/root/
```

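2.8 Upgrade the kernel

CentOS 7 needs its kernel upgraded to 4.18 or later. Avoid a very new kernel, though, because very recent kernels may fail to boot on older hardware.

The 5.4 series is used here, downloaded and installed by hand.

CentOS 7 kernel packages (ELRepo): https://elrepo.org/linux/kernel/el7/x86_64/RPMS/

```bash
# Update packages (excluding the kernel)
yum update -y --exclude='kernel*'
# Download the kernel (downloads are slow; fetching the packages in advance is recommended)
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.xxx-1.el7.elrepo.x86_64.rpm
wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.xxx-1.el7.elrepo.x86_64.rpm
# Install the kernel manually
yum localinstall -y kernel-lt*
# Make the new kernel the default boot entry (BIOS path; UEFI systems use /boot/efi/EFI/centos/grub.cfg)
grub2-set-default 0 && grub2-mkconfig -o /boot/grub2/grub.cfg
grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"
# Show the default kernel
grubby --default-kernel
# Reboot
reboot
# Check the running kernel version
uname -a
```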

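2.9 Install IPVS and load the kernel modules

Since kernel 4.19, nf_conntrack_ipv4 has been merged into nf_conntrack. The ip_vs_fo scheduler only exists in kernel 4.15 and later, so loading it fails on a kernel that has not been upgraded.

```bash
# Install the tools
yum install -y ipvsadm ipset sysstat conntrack libseccomp
# Load these modules at boot (br_netfilter is needed for the net.bridge.* sysctls in the next step)
cat > /etc/modules-load.d/ipvs.conf << EOF
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip
br_netfilter
EOF
# Enable the boot-time module loading service (the modules take effect after the reboot in 2.10)
systemctl enable --now systemd-modules-load.service
# After rebooting, check which modules are loaded
lsmod | grep ip_vs
```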

2.10 Tune kernel parameters

```bash
cat > /etc/sysctl.d/k8s.conf << EOF
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
net.ipv4.conf.all.route_localnet = 1
vm.overcommit_memory = 1
vm.panic_on_oom = 0
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
net.netfilter.nf_conntrack_max = 2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF
sysctl --system
# Reboot
reboot
```

3. Install Docker

```bash
# Add the Docker repo
wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
# List available versions
yum list --showduplicates docker-ce
# Install
yum -y install docker-ce
# Configure registry mirrors and let systemd manage cgroups
## Mounting a dedicated disk at /var/lib/docker is recommended
mkdir -p /etc/docker
cat > /etc/docker/daemon.json << EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "300m",
    "max-file": "2"
  },
  "data-root": "/var/lib/docker",
  "storage-driver": "overlay2",
  "max-concurrent-uploads": 5,
  "max-concurrent-downloads": 10,
  "registry-mirrors": [
    "https://mirror.ccs.tencentyun.com",
    "https://docker.mirrors.ustc.edu.cn",
    "https://registry.docker-cn.com"
  ],
  "live-restore": true
}
EOF
# Start Docker
systemctl daemon-reload
systemctl enable --now docker
```

4. Set up the etcd cluster

4.1 Prepare the cfssl certificate tools

```bash
# Download the tools
wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64
wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64
# Make them executable
chmod +x cfssl*
# Move and rename
mv cfssl_linux-amd64 /usr/local/bin/cfssl
mv cfssljson_linux-amd64 /usr/local/bin/cfssljson
mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo
```

4.2 Generate the etcd certificates

Create the certificate directory:

```bash
mkdir -p ~/tls/etcd
```

4.2.1 Generate a self-signed CA

```bash
# Change directory
cd ~/tls/etcd
# Create the CA signing configuration
cat > etcd-ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "www": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
# Create the CA certificate signing request
cat > etcd-ca-csr.json << EOF
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "etcd",
      "OU": "Etcd Security"
    }
  ]
}
EOF
# Generate the CA certificate and key
cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare ./etcd-ca -
```

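4.2.2 Generate the etcd server certificate

```bash
# Create the certificate signing request
# The hosts field lists the cluster-internal IPs of all etcd nodes; add a few spare IPs to make future scale-out easier.
# 127.0.0.1 is included because etcd also listens for clients on it (see listen-client-urls below).
cat > etcd-server-csr.json << EOF
{
  "CN": "etcd",
  "hosts": [
    "k8s-master01",
    "k8s-master02",
    "k8s-master03",
    "127.0.0.1",
    "10.0.0.11",
    "10.0.0.12",
    "10.0.0.13",
    "10.0.0.14",
    "10.0.0.15"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=etcd-ca-config.json -profile=www etcd-server-csr.json | cfssljson -bare ./etcd-server
```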
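After generating the certificate, check that every IP clients will use appears in its SAN list. Otherwise TLS verification fails for that address. The sketch below uses a hypothetical helper, `cert_has_ip_san`, built on `openssl x509 -text`. `cfssl-certinfo -cert etcd-server.pem` shows the same data as JSON.

```bash
# Hypothetical helper: succeed if the PEM certificate $1 lists IP $2 as a Subject Alternative Name.
cert_has_ip_san() {
  openssl x509 -in "$1" -noout -text \
    | grep -A1 'Subject Alternative Name' | tail -n1 \
    | tr ',' '\n' | sed 's/^[[:space:]]*//' \
    | grep -qxF "IP Address:$2"
}
```

For example, in `~/tls/etcd`, run `for ip in 10.0.0.11 10.0.0.12 10.0.0.13 127.0.0.1; do cert_has_ip_san etcd-server.pem "$ip" || echo "missing SAN: $ip"; done`. 127.0.0.1 is in the list because the etcd configuration below also listens on it.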

4.3 Deploy the etcd cluster

4.3.1 Download and lay out the files

```bash
# Download the release (downloading it manually and uploading it is recommended)
wget https://github.com/etcd-io/etcd/releases/download/v3.4.18/etcd-v3.4.18-linux-amd64.tar.gz
# Extract
tar xvf etcd-v* -C /opt/
# Rename
mv /opt/etcd-v* /opt/etcd
# Create directories
mkdir -p /opt/etcd/{bin,conf,certs}
# Move the binaries
mv /opt/etcd/etcd* /opt/etcd/bin/
# Change ownership
chown -R root:root /opt/etcd/
```

4.3.2 Create the etcd configuration file

```bash
# Create the configuration file
cat > /opt/etcd/conf/etcd.config.yml <<-EOF
name: 'etcd-node01'
data-dir: /opt/data
wal-dir: /opt/data/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://10.0.0.11:2380'
listen-client-urls: 'https://10.0.0.11:2379,https://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
initial-advertise-peer-urls: 'https://10.0.0.11:2380'
advertise-client-urls: 'https://10.0.0.11:2379'
initial-cluster: 'etcd-node01=https://10.0.0.11:2380,etcd-node02=https://10.0.0.12:2380,etcd-node03=https://10.0.0.13:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-pprof: true
client-transport-security:
  cert-file: '/opt/etcd/certs/etcd-server.pem'
  key-file: '/opt/etcd/certs/etcd-server-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/opt/etcd/certs/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/opt/etcd/certs/etcd-server.pem'
  key-file: '/opt/etcd/certs/etcd-server-key.pem'
  client-cert-auth: true   # the peer section uses the same key name, client-cert-auth
  trusted-ca-file: '/opt/etcd/certs/etcd-ca.pem'
  auto-tls: true
debug: false
logger: zap
log-level: warn
log-outputs: [default]
force-new-cluster: false
EOF
```

4.3.3 Create the systemd service file

```bash
cat > /usr/lib/systemd/system/etcd.service << EOF
[Unit]
Description=Etcd Server
Documentation=https://etcd.io/docs/
After=network.target
After=network-online.target
Wants=network-online.target

[Service]
Type=notify
ExecStart=/opt/etcd/bin/etcd --config-file=/opt/etcd/conf/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

4.3.4 Copy the certificates into place

```bash
cp ~/tls/etcd/*.pem /opt/etcd/certs/
```

4.3.5 Configure the other nodes

Copy the etcd directory and the service file to the other nodes:

```bash
scp -r /opt/etcd/ root@k8s-master02:/opt/
scp -r /opt/etcd/ root@k8s-master03:/opt/
scp /usr/lib/systemd/system/etcd.service root@k8s-master02:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/etcd.service root@k8s-master03:/usr/lib/systemd/system/
```

After copying, edit the node name and IPs with `vim /opt/etcd/conf/etcd.config.yml`. The lines below are for k8s-master02. On k8s-master03, use `etcd-node03` and `10.0.0.13`:

```bash
name: 'etcd-node02'
...
listen-peer-urls: 'https://10.0.0.12:2380'
listen-client-urls: 'https://10.0.0.12:2379,https://127.0.0.1:2379'
...
initial-advertise-peer-urls: 'https://10.0.0.12:2380'
advertise-client-urls: 'https://10.0.0.12:2379'
```
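The per-node edit can also be scripted. Start from etcd-node01's file, change `name`, and change the IP only inside the four per-node URL keys, so that `initial-cluster` stays untouched. The sketch below is a hypothetical helper, `render_etcd_yml`, and assumes the source file is the etcd-node01 version shown above.

```bash
# Hypothetical helper: print a node's etcd.config.yml derived from etcd-node01's file.
# $1 = source file, $2 = new member name, $3 = new node IP.
render_etcd_yml() {
  local src="$1" name="$2" ip="$3"
  sed -E \
    -e "s/^name: .*/name: '${name}'/" \
    -e "/^(listen-peer-urls|listen-client-urls|initial-advertise-peer-urls|advertise-client-urls):/ s/10\.0\.0\.11/${ip}/g" \
    "$src"
}
```

From k8s-master01, for example: `render_etcd_yml /opt/etcd/conf/etcd.config.yml etcd-node02 10.0.0.12 | ssh root@k8s-master02 'cat > /opt/etcd/conf/etcd.config.yml'`.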

4.3.6 Reload systemd and start etcd

```bash
systemctl daemon-reload
systemctl enable --now etcd
```

4.3.7 Check the cluster status

```bash
ETCDCTL_API=3 /opt/etcd/bin/etcdctl \
  --cacert=/opt/etcd/certs/etcd-ca.pem \
  --cert=/opt/etcd/certs/etcd-server.pem \
  --key=/opt/etcd/certs/etcd-server-key.pem \
  --endpoints="https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379" \
  endpoint health \
  --write-out=table
```

Output like the table below means the cluster is up. If there is a problem, check the logs in `/var/log/messages` or with `journalctl -u etcd`.

```
+------------------------+--------+------------+-------+
|        ENDPOINT        | HEALTH |    TOOK    | ERROR |
+------------------------+--------+------------+-------+
| https://10.0.0.11:2379 |   true | 8.957952ms |       |
| https://10.0.0.12:2379 |   true | 9.993095ms |       |
| https://10.0.0.13:2379 |   true | 9.813129ms |       |
+------------------------+--------+------------+-------+
```

5. Deploy HAProxy and Keepalived

5.1 Install HAProxy and Keepalived

```bash
# Run on both haproxy-node01 and haproxy-node02
yum -y install keepalived haproxy
```

5.2 Configure HAProxy

The configuration is identical on both nodes. Edit `/etc/haproxy/haproxy.cfg`:

```bash
global
    daemon
    log 127.0.0.1 local2 err       # global rsyslog target
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 20000                  # max concurrent connections accepted per haproxy process
    user haproxy
    group haproxy
    stats socket /var/lib/haproxy/stats
    spread-checks 2                # randomly shift health-check timing by this percentage; 2-5 is recommended

defaults
    log global
    mode http                      # layer-7 proxy by default
    option httplog                 # log HTTP requests, session information, etc.
    option dontlognull             # do not log null connections
    option redispatch              # if the server a client is pinned to (e.g. by cookie) goes down, send the request to another healthy server
    timeout connect 10s            # max time to establish a connection to a backend; on a LAN 1s is usually enough
    timeout client 3m              # idle timeout on the client side; can be shorter (e.g. 10s) under high concurrency to free connections sooner
    timeout server 1m              # idle timeout on the server side; keep it short on a LAN, e.g. 1-3s under high concurrency
    timeout http-request 15s       # max time for a client to send a complete request; keep it short to resist floods; takes precedence over timeout client
    timeout http-keep-alive 15s    # max time to keep an HTTP keep-alive connection open; takes precedence over timeout client

frontend monitor-in
    bind *:33305
    mode http
    option httplog
    monitor-uri /monitor

listen stats
    bind *:8006
    mode http
    stats enable
    stats hide-version
    stats uri /stats
    stats refresh 30s
    stats realm Haproxy\ Statistics
    stats auth admin:admin123

frontend k8s-master
    bind 0.0.0.0:6443
    bind 127.0.0.1:6443
    mode tcp
    option tcplog
    tcp-request inspect-delay 5s
    default_backend k8s-master

backend k8s-master
    mode tcp
    option tcplog
    option tcp-check
    balance roundrobin
    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
    server k8s-master01 10.0.0.11:6443 check
    server k8s-master02 10.0.0.12:6443 check
    server k8s-master03 10.0.0.13:6443 check
```

5.3 Start Haproxy

```shell
[root@haproxy-node01 ~]# systemctl enable --now haproxy
[root@haproxy-node02 ~]# systemctl enable --now haproxy
```

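5.4 Configure Keepalived

Edit the configuration file:

```
[root@haproxy-node01 ~]# vim /etc/keepalived/keepalived.conf
global_defs {
    script_user root           # user that runs the check scripts
    router_id haproxy01        # unique ID of this keepalived host; the hostname is a good choice
    vrrp_skip_check_adv_addr   # skip checking an advertisement from the same router as the previous one (by default every advertisement is checked)
    enable_script_security     # do not run a script as root if it is writable by any non-root user
}

vrrp_script check_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5                 # check interval
    weight -10
    fall 2
    rise 1
    timeout 2                  # script timeout
}

vrrp_instance VIP_API_SERVER {
    state MASTER
    interface eth0
    mcast_src_ip 10.0.0.31     # source address for multicast packets; defaults to the primary IP of the bound interface
    virtual_router_id 88       # virtual router ID; must be identical on all keepalived nodes of the same virtual router
    priority 100               # keepalived priority
    advert_int 2
    authentication {           # simple password authentication
        auth_type PASS
        auth_pass API_AUTH
    }
    virtual_ipaddress {
        10.0.0.99 dev eth0     # virtual IP
    }
    track_script {             # health check
        check_apiserver
    }
}
```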

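Prepare the Haproxy check script:

```shell
[root@haproxy-node01 ~]# vim /etc/keepalived/check_apiserver.sh
#!/bin/bash
# Check up to 3 times, 1 second apart, whether haproxy is running.
# If it is not, stop keepalived so that the VIP fails over to the other node.
err=0
for k in $(seq 1 3); do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$(expr $err + 1)
        sleep 1
        continue
    else
        err=0
        break
    fi
done

if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi
```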

Make it executable:

```shell
chmod +x /etc/keepalived/check_apiserver.sh
```

Copy the files to haproxy-node02:

```shell
[root@haproxy-node01 ~]# scp /etc/keepalived/* haproxy-node02:/etc/keepalived/
```

Adjust the configuration on haproxy-node02:

```
[root@haproxy-node02 ~]# vim /etc/keepalived/keepalived.conf
...
    router_id haproxy-node02   # unique ID of this keepalived host; the hostname is a good choice
...
    state BACKUP
...
    mcast_src_ip 10.0.0.32     # source address for multicast packets; defaults to the primary IP of the bound interface
...
    priority 95                # keepalived priority
...
```
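The four changes on haproxy-node02 can also be made with sed instead of by hand. This is a minimal sketch. It assumes the keepalived.conf layout shown above, with one setting per line. The function name is hypothetical, and the values (haproxy-node02, 10.0.0.32, priority 95) are the ones this guide uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: derive the BACKUP node's keepalived.conf from the
# MASTER copy. src and dst must be different files.
make_backup_keepalived_conf() {
  local src=$1 dst=$2
  local rid=haproxy-node02 ip=10.0.0.32 prio=95
  # sed back-references are single digits, so "\\1${ip}" means
  # "back-reference 1, then the literal address".
  sed -E \
    -e "s/^([[:space:]]*router_id[[:space:]]+)[^[:space:]]+/\\1${rid}/" \
    -e "s/^([[:space:]]*state[[:space:]]+)MASTER/\\1BACKUP/" \
    -e "s/^([[:space:]]*mcast_src_ip[[:space:]]+)[0-9.]+/\\1${ip}/" \
    -e "s/^([[:space:]]*priority[[:space:]]+)[0-9]+/\\1${prio}/" \
    "$src" > "$dst"
}
```

For example, on haproxy-node02, run `make_backup_keepalived_conf /etc/keepalived/keepalived.conf /tmp/keepalived.conf`. Review the result, then copy it back over `/etc/keepalived/keepalived.conf`.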

5.5 Start Keepalived

Run on both haproxy nodes:

```shell
systemctl enable --now keepalived
```

6. Prepare the k8s certificates

Create the certificate directories:

```shell
mkdir -p ~/tls/k8s/{ca,kube-apiserver,kube-controller-manager,kube-scheduler,kubectl,front-proxy,service-account}
```

6.1 Generate a self-signed CA

```shell
# Change to the working directory
cd ~/tls/k8s/ca
# Create the signing configuration
cat > k8s-ca-config.json << EOF
{
  "signing": {
    "default": {
      "expiry": "876000h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "876000h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF
# Create the CA certificate signing request
cat > k8s-ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "Kubernetes",
      "OU": "Kubernetes-bin"
    }
  ],
  "ca": {
    "expiry": "876000h"
  }
}
EOF
# Generate the CA certificate
cfssl gencert -initca k8s-ca-csr.json | cfssljson -bare ./k8s-ca -
```

6.2 Generate the kube-apiServer certificate

```shell
# Change to the working directory
cd ~/tls/k8s/kube-apiserver
# Create the certificate signing request
cat > kube-apiserver-csr.json << EOF
{
  "CN": "kube-apiserver",
  "hosts": [
    "k8s-master01",
    "k8s-master02",
    "k8s-master03",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local",
    "127.0.0.1",
    "192.168.0.1",
    "10.0.0.11",
    "10.0.0.12",
    "10.0.0.13",
    "10.0.0.99"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Shanghai",
      "L": "Shanghai",
      "O": "Kubernetes",
      "OU": "Kubernetes-bin"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=../ca/k8s-ca.pem -ca-key=../ca/k8s-ca-key.pem -config=../ca/k8s-ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare ./kube-apiserver
```

6.3 Generate the apiServer aggregation-layer (front-proxy) certificates

```shell
# Change to the working directory
cd ~/tls/k8s/front-proxy
# Create the CA signing request
cat > front-proxy-ca-csr.json <<-EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "ca": {
    "expiry": "876000h"
  }
}
EOF
# Generate the front-proxy-ca certificate
cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare ./front-proxy-ca
# Create the client certificate signing request
cat > front-proxy-client-csr.json <<-EOF
{
  "CN": "front-proxy-client",
  "key": {
    "algo": "rsa",
    "size": 2048
  }
}
EOF
# Generate the front-proxy-client certificate
cfssl gencert -ca=./front-proxy-ca.pem -ca-key=./front-proxy-ca-key.pem -config=../ca/k8s-ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare ./front-proxy-client
```

6.4 Generate the kube-controller-manager certificate

```shell
# Change to the working directory
cd ~/tls/k8s/kube-controller-manager
# Create the certificate signing request
cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "system:kube-controller-manager",
      "OU": "Kubernetes-bin"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=../ca/k8s-ca.pem -ca-key=../ca/k8s-ca-key.pem -config=../ca/k8s-ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare ./kube-controller-manager
```

6.5 Generate the kube-scheduler certificate

```shell
# Change to the working directory
cd ~/tls/k8s/kube-scheduler
# Create the certificate signing request
cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "system:kube-scheduler",
      "OU": "Kubernetes-bin"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=../ca/k8s-ca.pem -ca-key=../ca/k8s-ca-key.pem -config=../ca/k8s-ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare ./kube-scheduler
```

6.6 Generate the kubectl certificate

```shell
# Change to the working directory
cd ~/tls/k8s/kubectl
# Create the certificate signing request
cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Shanghai",
      "ST": "Shanghai",
      "O": "system:masters",
      "OU": "Kubernetes-bin"
    }
  ]
}
EOF
# Generate the certificate
cfssl gencert -ca=../ca/k8s-ca.pem -ca-key=../ca/k8s-ca-key.pem -config=../ca/k8s-ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare ./admin
```

6.7 Generate the Service Account key pair

```shell
# Change to the working directory
cd ~/tls/k8s/service-account
# Create the key pair
openssl genrsa -out ./sa.key 2048
openssl rsa -in ./sa.key -pubout -out ./sa.pub
```

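7. Deploy the Master node components

7.1 Download the Kubernetes binaries

Kubernetes download page

The link above leads to the GitHub download pages for each Kubernetes release. Pick the version you need and download the Server Binaries, which contain all binaries for both Master and Worker Nodes. (This guide uses v1.21.11.)

```shell
# Return to the home directory
cd
# Create the k8s working directories
mkdir -p /opt/kubernetes/{bin,conf,certs,logs,manifests}
# Download (this is slow; consider downloading manually and uploading the file)
wget https://dl.k8s.io/v1.21.11/kubernetes-server-linux-amd64.tar.gz
# Extract
tar xvf kubernetes-server-linux-amd64.tar.gz
# Copy the binaries into place
cp ~/kubernetes/server/bin/kube{-apiserver,-controller-manager,-scheduler,let,-proxy,ctl} /opt/kubernetes/bin
# Symlink kubectl into the PATH
ln -s /opt/kubernetes/bin/kubectl /usr/local/bin/kubectl
```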

7.2 Deploy kube-apiServer

7.2.1 Create the service file

```shell
cat > /usr/lib/systemd/system/kube-apiserver.service << EOF
[Unit]
Description=Kubernetes API Server
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-apiserver \\
  --logtostderr=false \\
  --v=2 \\
  --log-file=/opt/kubernetes/logs/kube-apiserver.log \\
  --etcd-servers=https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379 \\
  --etcd-cafile=/opt/etcd/certs/etcd-ca.pem \\
  --etcd-certfile=/opt/etcd/certs/etcd-server.pem \\
  --etcd-keyfile=/opt/etcd/certs/etcd-server-key.pem \\
  --bind-address=0.0.0.0 \\
  --secure-port=6443 \\
  --advertise-address=10.0.0.11 \\
  --allow-privileged=true \\
  --service-cluster-ip-range=192.168.0.0/16 \\
  --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,ResourceQuota,NodeRestriction \\
  --authorization-mode=RBAC,Node \\
  --enable-bootstrap-token-auth=true \\
  --service-node-port-range=30000-32767 \\
  --kubelet-client-certificate=/opt/kubernetes/certs/kube-apiserver.pem \\
  --kubelet-client-key=/opt/kubernetes/certs/kube-apiserver-key.pem \\
  --tls-cert-file=/opt/kubernetes/certs/kube-apiserver.pem \\
  --tls-private-key-file=/opt/kubernetes/certs/kube-apiserver-key.pem \\
  --client-ca-file=/opt/kubernetes/certs/k8s-ca.pem \\
  --service-account-key-file=/opt/kubernetes/certs/sa.pub \\
  --service-account-issuer=https://kubernetes.default.svc.cluster.local \\
  --service-account-signing-key-file=/opt/kubernetes/certs/sa.key \\
  --requestheader-client-ca-file=/opt/kubernetes/certs/front-proxy-ca.pem \\
  --proxy-client-cert-file=/opt/kubernetes/certs/front-proxy-client.pem \\
  --proxy-client-key-file=/opt/kubernetes/certs/front-proxy-client-key.pem \\
  --requestheader-allowed-names=front-proxy-client \\
  --requestheader-extra-headers-prefix=X-Remote-Extra- \\
  --requestheader-group-headers=X-Remote-Group \\
  --requestheader-username-headers=X-Remote-User \\
  --enable-aggregator-routing=true \\
  --audit-log-maxage=30 \\
  --audit-log-maxbackup=3 \\
  --audit-log-maxsize=100 \\
  --audit-log-path=/opt/kubernetes/logs/k8s-audit.log
Restart=on-failure
RestartSec=10s
LimitNOFILE=65535

[Install]
WantedBy=multi-user.target
EOF
```

`--requestheader-allowed-names` must list the CN of the front-proxy client certificate from section 6.3 (`front-proxy-client`). Otherwise, aggregated API servers reject requests proxied by the apiServer.

7.2.2 Copy the certificate files

```shell
cp ~/tls/k8s/ca/k8s-ca*pem ~/tls/k8s/kube-apiserver/kube-apiserver*pem ~/tls/k8s/front-proxy/front-proxy*.pem ~/tls/k8s/service-account/* /opt/kubernetes/certs/
```

7.2.3 Reload the configuration and start the service

```shell
systemctl daemon-reload
systemctl enable --now kube-apiserver
systemctl status kube-apiserver
```

7.3 Deploy kube-controller-manager

7.3.1 Copy the certificate files

```shell
cp ~/tls/k8s/kube-controller-manager/kube-controller-manager*.pem /opt/kubernetes/certs/
```

7.3.2 Generate the kubeconfig file

```shell
KUBE_CONFIG="/opt/kubernetes/conf/kube-controller-manager.kubeconfig"
KUBE_APISERVER="https://10.0.0.99:6443"
# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/certs/k8s-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
# Set the user credentials
kubectl config set-credentials kube-controller-manager \
  --client-certificate=/opt/kubernetes/certs/kube-controller-manager.pem \
  --client-key=/opt/kubernetes/certs/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
# Set the context
kubectl config set-context kube-controller-manager@kubernetes \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}
# Switch to the context
kubectl config use-context kube-controller-manager@kubernetes --kubeconfig=${KUBE_CONFIG}
```

7.3.3 Create the service file

Note: **--cluster-cidr=172.16.0.0/12** is the cluster's Pod subnet. Make sure it is set correctly and does not overlap the Service subnet or the node network.

```shell
cat > /usr/lib/systemd/system/kube-controller-manager.service << EOF
[Unit]
Description=Kubernetes Controller Manager
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-controller-manager \\
  --v=2 \\
  --logtostderr=false \\
  --log-file=/opt/kubernetes/logs/kube-controller-manager.log \\
  --address=0.0.0.0 \\
  --root-ca-file=/opt/kubernetes/certs/k8s-ca.pem \\
  --cluster-signing-cert-file=/opt/kubernetes/certs/k8s-ca.pem \\
  --cluster-signing-key-file=/opt/kubernetes/certs/k8s-ca-key.pem \\
  --service-account-private-key-file=/opt/kubernetes/certs/sa.key \\
  --kubeconfig=/opt/kubernetes/conf/kube-controller-manager.kubeconfig \\
  --leader-elect=true \\
  --use-service-account-credentials=true \\
  --node-monitor-grace-period=40s \\
  --node-monitor-period=5s \\
  --pod-eviction-timeout=2m0s \\
  --controllers=*,bootstrapsigner,tokencleaner \\
  --allocate-node-cidrs=true \\
  --cluster-cidr=172.16.0.0/12 \\
  --requestheader-client-ca-file=/opt/kubernetes/certs/front-proxy-ca.pem \\
  --cluster-signing-duration=876000h0m0s \\
  --node-cidr-mask-size=24
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```
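Before starting the controller manager, you can check that the Pod CIDR (`--cluster-cidr=172.16.0.0/12`) does not overlap the Service CIDR (`--service-cluster-ip-range=192.168.0.0/16`) or the node network. This guide does not state the node network; 10.0.0.0/24 is an assumption based on the node IPs. The sketch below does plain 32-bit integer arithmetic in bash. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for IPv4 CIDR overlap checks.

# Convert a dotted IPv4 address to a 32-bit integer.
ip2int() {
  local -a o
  IFS=. read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Print the first and last address of a CIDR as integers.
cidr_range() {
  local ip=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local start=$(( $(ip2int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two CIDRs share at least one address.
cidrs_overlap() {
  local a_start a_end b_start b_end
  read -r a_start a_end <<< "$(cidr_range "$1")"
  read -r b_start b_end <<< "$(cidr_range "$2")"
  (( a_start <= b_end && b_start <= a_end ))
}
```

For example, `cidrs_overlap 172.16.0.0/12 192.168.0.0/16 && echo "Pod and Service CIDRs overlap"` should print nothing with this guide's values.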

7.3.4 Reload the configuration and start the service

```shell
systemctl daemon-reload
systemctl enable --now kube-controller-manager
systemctl status kube-controller-manager
```

7.4 Deploy kube-scheduler

7.4.1 Copy the certificate files

```shell
cp ~/tls/k8s/kube-scheduler/kube-scheduler*.pem /opt/kubernetes/certs/
```

7.4.2 Generate the kubeconfig file

```shell
KUBE_CONFIG="/opt/kubernetes/conf/kube-scheduler.kubeconfig"
KUBE_APISERVER="https://10.0.0.99:6443"
# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/certs/k8s-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
# Set the user credentials
kubectl config set-credentials kube-scheduler \
  --client-certificate=/opt/kubernetes/certs/kube-scheduler.pem \
  --client-key=/opt/kubernetes/certs/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
# Set the context
kubectl config set-context kube-scheduler@kubernetes \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}
# Switch to the context
kubectl config use-context kube-scheduler@kubernetes --kubeconfig=${KUBE_CONFIG}
```

7.4.3 Create the service file

```shell
cat > /usr/lib/systemd/system/kube-scheduler.service << EOF
[Unit]
Description=Kubernetes Scheduler
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-scheduler \\
  --logtostderr=false \\
  --v=2 \\
  --log-file=/opt/kubernetes/logs/kube-scheduler.log \\
  --leader-elect=true \\
  --kubeconfig=/opt/kubernetes/conf/kube-scheduler.kubeconfig \\
  --bind-address=127.0.0.1
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF
```

7.4.4 Reload the configuration and start the service

```shell
systemctl daemon-reload
systemctl enable --now kube-scheduler
systemctl status kube-scheduler
```

7.5 Deploy kubectl

7.5.1 Copy the certificate files

```shell
cp ~/tls/k8s/kubectl/admin*.pem /opt/kubernetes/certs/
```

7.5.2 Generate the kubeconfig file

```shell
KUBE_CONFIG="/opt/kubernetes/conf/kubectl.kubeconfig"
KUBE_APISERVER="https://10.0.0.99:6443"
# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/certs/k8s-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
# Set the user credentials
kubectl config set-credentials kubernetes-admin \
  --client-certificate=/opt/kubernetes/certs/admin.pem \
  --client-key=/opt/kubernetes/certs/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}
# Set the context
kubectl config set-context kubernetes-admin@kubernetes \
  --cluster=kubernetes \
  --user=kubernetes-admin \
  --kubeconfig=${KUBE_CONFIG}
# Switch to the context
kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=${KUBE_CONFIG}
# Create the kubectl config directory
mkdir -p /root/.kube
# Copy the kubeconfig to the default location
cp /opt/kubernetes/conf/kubectl.kubeconfig /root/.kube/config
```

7.5.3 Check the cluster status

```
[root@k8s-master01 ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS    MESSAGE             ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-2               Healthy   {"health":"true"}
etcd-1               Healthy   {"health":"true"}
etcd-0               Healthy   {"health":"true"}
```

7.6 Authorize kubelet-bootstrap

7.6.1 TLS Bootstrapping configuration

```shell
vim ~/tls-bootstrapping.yaml
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-8e2e05
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: 8e2e05
  token-secret: 1bfcc0c4bb0dbf2c
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: Group
  name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
- apiGroups:
  - ""
  resources:
  - nodes/proxy
  - nodes/stats
  - nodes/log
  - nodes/spec
  - nodes/metrics
  verbs:
  - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
- apiGroup: rbac.authorization.k8s.io
  kind: User
  name: kube-apiserver
```

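The yaml file above has three main parts:

- The first part is a Secret named bootstrap-token-<token id>, which is used to authenticate Nodes.
- The second part is the three ClusterRoleBindings in the middle:
  - the first lets bootstrapping Nodes request certificates;
  - the second lets the controller manager approve a Node's initial client certificate automatically;
  - the third automatically approves renewals when that certificate is rotated before it expires.
- The last part creates the cluster role system:kube-apiserver-to-kubelet and binds it to the kube-apiserver user, which authorizes the apiServer to access the kubelet.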

7.6.2 Apply the yaml file

```shell
kubectl apply -f ~/tls-bootstrapping.yaml
```

7.6.3 How TLS Bootstrapping works (optional)

TLS Bootstrapping: a Kubernetes cluster uses TLS authentication for its connections by default. The kubelet and kube-proxy components on each Node need a valid certificate signed by the cluster CA to communicate with the apiServer. With many Nodes, issuing these certificates by hand is a heavy workload for administrators and makes the cluster hard to scale. Kubernetes therefore introduced the TLS bootstrapping mechanism to issue Node certificates automatically. The kubelet connects to the apiServer with a low-privilege token and requests a certificate. The controllerManager then verifies and signs the certificate automatically.

The process works as follows:

- When the kubelet service starts, it first looks for the **bootstrap-kubeconfig** file.
- The kubelet reads this file to obtain the apiServer URL and a low-privilege token that can only be used to request certificates.
- The kubelet connects to the apiServer and authenticates with this token.
  - In the yaml file from section 7.6.1, the first part is the cluster Secret. Authentication mainly checks that the token in the bootstrap-kubeconfig file matches this Secret. (Section 8.1.1 shows how bootstrap-kubeconfig is created.)
  - A bootstrap token has the form `abcdef.0123456789abcdef` and must match the regular expression `[a-z0-9]{6}\.[a-z0-9]{16}`.
    - The first part (before the dot) is the Token ID. It is public information, and the cluster uses it to find the corresponding Secret.
    - The second part is the actual Token Secret.
    - Authentication succeeds only if both parts match.
  - The Secret attaches an extra group, **system:bootstrappers:default-node-token**. This group binds the Node to the **system:node-bootstrapper** role through the ClusterRoleBinding in the second part of the yaml file from section 7.6.1. Inspecting this role shows that it only has CSR-related permissions:

    ```yaml
    [root@k8s-master01 ~]# kubectl get clusterrole system:node-bootstrapper -o yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      creationTimestamp: "2022-04-10T02:28:47Z"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:node-bootstrapper
      resourceVersion: "101"
      uid: 9cbbcca1-2e64-4484-96b4-52043cffb3e9
    rules:
    - apiGroups:
      - certificates.k8s.io
      resources:
      - certificatesigningrequests
      verbs:
      - create
      - get
      - list
      - watch
    ```

- Once authenticated, the kubelet holds the permissions of the **system:node-bootstrapper** role and uses them to create a CSR.
- If the controllerManager is configured for it, the CSR is reviewed and approved automatically. Otherwise an administrator must approve it manually (for example with `kubectl certificate approve <csr-name>`).
  - "Configuring the controllerManager" here means creating a ClusterRoleBinding. It binds the cluster role **system:certificates.k8s.io:certificatesigningrequests:nodeclient** to the Node group **system:bootstrappers:default-node-token**. This role has the create permission on certificatesigningrequests/nodeclient, the subresource Kubernetes uses specifically for issuing Node client certificates:

    ```yaml
    [root@k8s-master01 ~]# kubectl get clusterrole system:certificates.k8s.io:certificatesigningrequests:nodeclient -o yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      annotations:
        rbac.authorization.kubernetes.io/autoupdate: "true"
      creationTimestamp: "2022-04-10T02:28:47Z"
      labels:
        kubernetes.io/bootstrapping: rbac-defaults
      name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
      resourceVersion: "107"
      uid: b0ef0dae-cf2c-42b1-81bc-1b2e2c3541c6
    rules:
    - apiGroups:
      - certificates.k8s.io
      resources:
      - certificatesigningrequests/nodeclient
      verbs:
      - create
    ```

- The certificate the kubelet requested is approved and issued to the Node.
- With the certificate in hand, the kubelet generates its own **kubeconfig** containing the key and the signed certificate, and from then on communicates with the apiServer normally.

Note: TLS Bootstrapping requires kube-apiserver to run with **--enable-bootstrap-token-auth=true**. This flag was already set in the deployment above; it is mentioned here only for completeness.
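You may want your own token instead of the fixed `8e2e05.1bfcc0c4bb0dbf2c` used in this guide. The sketch below generates a token in the required format and validates a token against the regular expression above. The function names are hypothetical. The two halves go into `token-id` and `token-secret` in section 7.6.1, and the Secret must be renamed to `bootstrap-token-<token-id>`. The full string goes into `TOKEN` in section 8.1.1.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for bootstrap tokens of the form <token-id>.<token-secret>.

# Succeed if $1 matches [a-z0-9]{6}\.[a-z0-9]{16} exactly.
is_valid_bootstrap_token() {
  local re='^[a-z0-9]{6}\.[a-z0-9]{16}$'
  [[ $1 =~ $re ]]
}

# Print a random token: 6 id characters, a dot, 16 secret characters.
gen_bootstrap_token() {
  local chars
  chars=$(LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c 22)
  echo "${chars:0:6}.${chars:6:16}"
}
```

For example, run `TOKEN=$(gen_bootstrap_token)`. Then use `${TOKEN%%.*}` as the `token-id` and `${TOKEN#*.}` as the `token-secret`.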

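8. Deploy the Worker node components

The following steps are also performed on the Master nodes, so each Master Node doubles as a Worker Node. If you do not want Pods scheduled on a Master Node, taint it afterwards with the command below:

```shell
kubectl taint nodes k8s-master01 node-role.kubernetes.io/master=:NoSchedule
```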
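The command above taints only k8s-master01. If none of the three masters should run ordinary Pods, a small loop like the sketch below applies the same taint to each of them. `taint_masters` and the `KUBECTL` override (for a dry run, set `KUBECTL="echo kubectl"`) are hypothetical conveniences. `--overwrite` keeps a re-run from failing when the taint already exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply the master NoSchedule taint to each node given.
taint_masters() {
  local kubectl=${KUBECTL:-kubectl} node
  for node in "$@"; do
    # $kubectl is intentionally unquoted so "echo kubectl" splits into words.
    $kubectl taint nodes "$node" node-role.kubernetes.io/master=:NoSchedule --overwrite
  done
}
```

Run `taint_masters k8s-master01 k8s-master02 k8s-master03` after all masters have joined the cluster (section 9).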

8.1 Deploy kubelet

8.1.1 Create the kubeconfig file

```shell
KUBE_CONFIG="/opt/kubernetes/conf/bootstrap.kubeconfig"
KUBE_APISERVER="https://10.0.0.99:6443"
TOKEN="8e2e05.1bfcc0c4bb0dbf2c"  # must match the token in tls-bootstrapping.yaml
# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/certs/k8s-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
# Set the user credentials
kubectl config set-credentials kubelet-bootstrap \
  --token=${TOKEN} \
  --kubeconfig=${KUBE_CONFIG}
# Set the context
kubectl config set-context kubelet-bootstrap@kubernetes \
  --cluster=kubernetes \
  --user=kubelet-bootstrap \
  --kubeconfig=${KUBE_CONFIG}
# Switch to the context
kubectl config use-context kubelet-bootstrap@kubernetes --kubeconfig=${KUBE_CONFIG}
```

8.1.2 Create the configuration file

Note: by convention, **clusterDNS** is the 10th IP of the Service subnet (192.168.0.10 here). If you changed the Service subnet, adjust this value accordingly.

```shell
cat > /opt/kubernetes/conf/kubelet-config.yml << EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /opt/kubernetes/certs/k8s-ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 192.168.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /opt/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF
```
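The clusterDNS convention above can be computed from the Service CIDR rather than worked out by hand. The same arithmetic gives the first Service IP (192.168.0.1 here). The apiServer assigns that address to the `kubernetes` Service, which is why it appears in the kube-apiserver certificate hosts in section 6.2. A minimal bash sketch; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the n-th address of an IPv4 CIDR
# (n=1 is the first address after the network address).
nth_ip() {
  local cidr=$1 n=$2
  local ip=${cidr%/*} len=${cidr#*/}
  local -a o
  IFS=. read -r -a o <<< "$ip"
  local base=$(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local v=$(( (base & mask) + n ))
  echo "$(( (v >> 24) & 255 )).$(( (v >> 16) & 255 )).$(( (v >> 8) & 255 )).$(( v & 255 ))"
}
```

With this guide's Service subnet, `nth_ip 192.168.0.0/16 10` prints `192.168.0.10` and `nth_ip 192.168.0.0/16 1` prints `192.168.0.1`.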

8.1.3 Create the service file

```shell
cat > /usr/lib/systemd/system/kubelet.service << EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=docker.service
Requires=docker.service

[Service]
ExecStart=/opt/kubernetes/bin/kubelet \\
  --logtostderr=false \\
  --v=2 \\
  --log-file=/opt/kubernetes/logs/kubelet.log \\
  --network-plugin=cni \\
  --kubeconfig=/opt/kubernetes/conf/kubelet.kubeconfig \\
  --bootstrap-kubeconfig=/opt/kubernetes/conf/bootstrap.kubeconfig \\
  --config=/opt/kubernetes/conf/kubelet-config.yml \\
  --cert-dir=/opt/kubernetes/certs \\
  --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5 \\
  --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384 \\
  --image-pull-progress-deadline=30m
Restart=always
RestartSec=10
LimitNOFILE=65536
StartLimitInterval=0

[Install]
WantedBy=multi-user.target
EOF
```

8.1.4 Reload the configuration and start the service

```shell
systemctl daemon-reload
systemctl enable --now kubelet
systemctl status kubelet
```
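Because `kubelet-config.yml` sets `failSwapOn: true`, kubelet refuses to start while swap is enabled (swap was turned off in section 2.3). If `systemctl status kubelet` shows the service restarting repeatedly, swap is one cause worth ruling out. The sketch reads `/proc/swaps`, which has a header line plus one line per active swap device. The function name is hypothetical, and the path parameter exists only so the check can be tried on a sample file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if no swap device is active.
swap_is_off() {
  local swaps_file=${1:-/proc/swaps}
  # Any line after the header means an active swap device.
  awk 'NR > 1 { active = 1 } END { exit active }' "$swaps_file"
}
```

For example: `swap_is_off || { swapoff -a; systemctl restart kubelet; }`. The kubelet log is in `/opt/kubernetes/logs/kubelet.log`.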

8.2 Deploy kube-proxy

8.2.1 Create the kubeconfig file

```shell
# Create the kube-proxy service account
kubectl -n kube-system create serviceaccount kube-proxy
# Bind the kube-proxy service account to the system:node-proxier role
kubectl create clusterrolebinding system:kube-proxy --clusterrole system:node-proxier --serviceaccount kube-system:kube-proxy
# Read the service account token (v1.21 still creates a token Secret for each service account automatically)
SECRET=$(kubectl -n kube-system get sa/kube-proxy --output=jsonpath='{.secrets[0].name}')
TOKEN=$(kubectl -n kube-system get secret/$SECRET --output=jsonpath='{.data.token}' | base64 -d)
KUBE_CONFIG="/opt/kubernetes/conf/kube-proxy.kubeconfig"
KUBE_APISERVER="https://10.0.0.99:6443"
# Set the cluster entry
kubectl config set-cluster kubernetes \
  --certificate-authority=/opt/kubernetes/certs/k8s-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}
# Set the user credentials
kubectl config set-credentials kube-proxy \
  --token=${TOKEN} \
  --kubeconfig=${KUBE_CONFIG}
# Set the context
kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=${KUBE_CONFIG}
# Switch to the context
kubectl config use-context kube-proxy@kubernetes --kubeconfig=${KUBE_CONFIG}
```

8.2.2 Create the kube-proxy config file

Note: if your **kubeconfig** path or **clusterCIDR** (the Pod subnet) differs from the steps above, adjust them accordingly.

```shell
cat > /opt/kubernetes/conf/kube-proxy-config.yml << EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /opt/kubernetes/conf/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF
```
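`mode: "ipvs"` depends on the IPVS kernel modules. If they cannot be used, kube-proxy of this era typically logs a warning and falls back to iptables mode, so it is worth checking the modules before starting it. The sketch lists missing modules by reading `/proc/modules`. The module list is an assumption: the IPVS core, the `rr` scheduler configured above, and conntrack. Built-in modules do not appear in `/proc/modules`, so treat the output as a hint only. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each assumed IPVS-related module that is not loaded.
missing_ipvs_modules() {
  local modules_file=${1:-/proc/modules} m
  for m in ip_vs ip_vs_rr nf_conntrack; do
    # Each /proc/modules line starts with "<name> <size> ...".
    grep -q "^${m} " "$modules_file" || echo "$m"
  done
}
```

For example: `for m in $(missing_ipvs_modules); do modprobe "$m"; done`.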

8.2.3 Create the service file

```shell
cat > /usr/lib/systemd/system/kube-proxy.service << EOF
[Unit]
Description=Kubernetes Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/opt/kubernetes/bin/kube-proxy \\
  --logtostderr=false \\
  --log-file=/opt/kubernetes/logs/kube-proxy.log \\
  --v=2 \\
  --config=/opt/kubernetes/conf/kube-proxy-config.yml
Restart=always
RestartSec=10s
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
```

8.2.4 Reload the configuration and start the service

```shell
systemctl daemon-reload
systemctl enable --now kube-proxy
systemctl status kube-proxy
```

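9. Deploy the remaining nodes

9.1 Bring up the other two Master nodes

9.1.1 Copy the files

```shell
# On k8s-master01
scp -r /opt/kubernetes k8s-master02:/opt/
scp -r /opt/kubernetes k8s-master03:/opt/
scp /usr/lib/systemd/system/kube* k8s-master02:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/kube* k8s-master03:/usr/lib/systemd/system/

# On k8s-master02 and k8s-master03 (not on k8s-master01)
# Clear the copied log files
rm -rf /opt/kubernetes/logs/*
# Delete the node client certificates copied from k8s-master01
rm -rf /opt/kubernetes/certs/kubelet-client*
```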

9.1.2 Modify the service file

Only kube-apiserver.service needs to be changed:

```shell
# Edit kube-apiserver.service
[root@k8s-master02 ~]# vim /usr/lib/systemd/system/kube-apiserver.service
...
  --advertise-address=10.0.0.12 \
...
[root@k8s-master03 ~]# vim /usr/lib/systemd/system/kube-apiserver.service
...
  --advertise-address=10.0.0.13 \
...
```
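The same edit can be made without an editor, which is convenient when scripting several masters. A minimal sketch using GNU `sed -i`. The function name is hypothetical, and the default unit path is the one used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set --advertise-address in the kube-apiserver unit file.
set_advertise_address() {
  local ip=$1
  local unit=${2:-/usr/lib/systemd/system/kube-apiserver.service}
  sed -i -E "s/--advertise-address=[0-9.]+/--advertise-address=${ip}/" "$unit"
}
```

For example, on k8s-master02, run `set_advertise_address 10.0.0.12` and then `systemctl daemon-reload`.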

9.1.3 Start the services

```shell
systemctl daemon-reload
systemctl enable --now kube-apiserver
systemctl enable --now kube-controller-manager
systemctl enable --now kube-scheduler
systemctl enable --now kubelet
systemctl enable --now kube-proxy
```

9.2 Join the Worker nodes

9.2.1 Copy the files

```shell
# On k8s-master01
scp -r /opt/kubernetes k8s-node01:/opt/
scp -r /opt/kubernetes k8s-node02:/opt/
scp /usr/lib/systemd/system/kube{let,-proxy}.service k8s-node01:/usr/lib/systemd/system/
scp /usr/lib/systemd/system/kube{let,-proxy}.service k8s-node02:/usr/lib/systemd/system/

# On k8s-node01 and k8s-node02 (not on k8s-master01)
# Clear the copied log files
rm -rf /opt/kubernetes/logs/*
# Delete the node client certificates copied from k8s-master01
rm -rf /opt/kubernetes/certs/kubelet-client*
```

9.2.2 Start the services

```shell
systemctl daemon-reload
systemctl enable --now kubelet
systemctl enable --now kube-proxy
```

9.3 Check the node status

```
[root@k8s-master01 ~]# kubectl get nodes
NAME           STATUS     ROLES    AGE     VERSION
k8s-master01   NotReady   <none>   3m58s   v1.21.11
k8s-master02   NotReady   <none>   54s     v1.21.11
k8s-master03   NotReady   <none>   51s     v1.21.11
k8s-node01     NotReady   <none>   7s      v1.21.11
k8s-node02     NotReady   <none>   4s      v1.21.11
```

All nodes have joined the cluster. The NotReady status is expected at this point because no network plugin has been deployed yet.

10. Deploy the network plugin

This guide uses the Calico network plugin.

10.1 Download the yaml file

```shell
curl https://projectcalico.docs.tigera.io/manifests/calico-etcd.yaml -O
```

10.2 Modify the yaml file

```shell
# Set the etcd cluster endpoints
sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://10.0.0.11:2379,https://10.0.0.12:2379,https://10.0.0.13:2379"#g' calico-etcd.yaml

# Base64-encode the etcd certificates and set the Pod subnet
ETCD_CA=`cat /opt/etcd/certs/etcd-ca.pem | base64 -w 0`
ETCD_CERT=`cat /opt/etcd/certs/etcd-server.pem | base64 -w 0`
ETCD_KEY=`cat /opt/etcd/certs/etcd-server-key.pem | base64 -w 0`
POD_SUBNET='172.16.0.0/12'

# Fill in the etcd certificates in the Secret
sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

# Set the Pod subnet (uncomment CALICO_IPV4POOL_CIDR and its value)
sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@#   value: "192.168.0.0/16"@  value: "'${POD_SUBNET}'"@g' calico-etcd.yaml

# Point the ConfigMap at the certificate paths inside the container
sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

# Replace the images with a mirror registry reachable from mainland China
sed -r -i '/calico\/cni:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-cni:v3.22.2#' calico-etcd.yaml
sed -r -i '/calico\/pod2daemon-flexvol:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-pod2daemon-flexvol:v3.22.2#' calico-etcd.yaml
sed -r -i '/calico\/node:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-node:v3.22.2#' calico-etcd.yaml
sed -r -i '/calico\/kube-controllers:/s#(image: ).*#\1registry.cn-shanghai.aliyuncs.com/wuvikr-k8s/calico-kube-controllers:v3.22.2#' calico-etcd.yaml
```
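`sed` exits successfully even when a pattern matches nothing. If the upstream manifest's formatting differs from what these commands expect, placeholders are silently left in place. The sketch below reports any "before" strings from the sed commands that are still present. It returns 0 when none remain, 1 when some remain (it prints them with line numbers), and 2 when the file cannot be read. The function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that calico-etcd.yaml no longer contains
# the placeholder strings targeted by the sed commands above.
calico_is_patched() {
  local f=${1:-calico-etcd.yaml}
  [ -r "$f" ] || return 2
  ! grep -nE '<ETCD_IP>|# etcd-(key|cert|ca): null|etcd_(ca|cert|key): ""|# - name: CALICO_IPV4POOL_CIDR' "$f"
}
```

For example, run `calico_is_patched || echo "calico-etcd.yaml still has placeholders" >&2` before applying the manifest.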

The modified yaml file is shown below. It is for reference only: do not use it as-is. Apply the steps above to your own copy instead.