1. RAID

1.1 Array analysis

There are currently four commonly used RAID schemes: RAID0, RAID1, RAID5 and RAID10.

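| Array type | How it works | Advantages | Disadvantages |
|---|---|---|---|
| RAID0 | Stripes data across two (or more) disks, combining them into one device | Higher read/write throughput | No redundancy: if any one disk fails, the data can no longer be read |
| RAID1 | Mirrors data one-to-one onto a second disk | Data safety | Only 50% of the disk capacity is usable |
| RAID5 | Stores the parity information for each disk's data on the other disks | A compromise between RAID0 and RAID1: better throughput plus data protection, and better capacity utilization than RAID1 | Less protection than RAID1: only one disk failure in the whole array is tolerated, and lost data must be rebuilt from parity |
| RAID10 | Combines RAID1 and RAID0: the disks are first paired into RAID1 arrays, then RAID0 is applied across the two RAID1 arrays | Addresses both throughput and data safety | Expensive: half of the raw capacity holds mirror copies |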
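To make the capacity trade-offs in the table concrete, here is a small bash sketch that computes usable capacity from the number of disks. It assumes identical disks, ignores mdadm metadata overhead, and models RAID10 as the nested "mirrored pairs, then striped" layout described in the table (mdadm's own raid10 driver is more flexible than this). The function name `raid_usable` is made up for illustration.

```bash
#!/usr/bin/env bash
# Usable capacity of an array built from N identical disks.
# Assumptions: equal disk sizes, metadata overhead ignored,
# RAID10 = nested mirrored pairs as described in the table above.
# Usage: raid_usable LEVEL DISKS DISK_SIZE   (size in any unit, e.g. GiB)
raid_usable() {
  local level=$1 n=$2 size=$3
  case "$level" in
    0)  echo $(( n * size )) ;;                  # striping: every disk holds data
    1)  echo "$size" ;;                           # mirroring: one disk's worth
    5)  if (( n < 3 )); then echo "RAID5 needs at least 3 disks" >&2; return 1; fi
        echo $(( (n - 1) * size )) ;;             # one disk's worth goes to parity
    10) if (( n < 4 || n % 2 != 0 )); then
          echo "RAID10 (mirrored pairs) needs an even number of disks, at least 4" >&2
          return 1
        fi
        echo $(( n / 2 * size )) ;;               # half the disks hold mirror copies
    *)  echo "unsupported level: $level" >&2; return 1 ;;
  esac
}

for level in 0 1 5 10; do
  n=4; [ "$level" = 1 ] && n=2
  echo "RAID$level with $n x 5 GiB disks: $(raid_usable "$level" "$n" 5) GiB usable"
done
```

With four 5 GiB disks, RAID10 yields 10 GiB, in line with the roughly 10 GiB array that mdadm reports later in this section.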

1.2 Deploying the disk array

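The mdadm command manages software RAID arrays in Linux. Common options:

- `-a`: with `-C`, `-a yes` creates the array's device file automatically; in manage mode (`mdadm /dev/md0 -a DEVICE`) it adds a device to the array
- `-n`: number of devices
- `-l`: RAID level
- `-C`: create an array
- `-v`: verbose, show the process
- `-f`: mark a device as faulty (simulate a failure)
- `-r`: remove a device
- `-Q`: show summary information
- `-D`: show detailed information
- `-S`: stop the RAID array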

Creating a RAID10 array

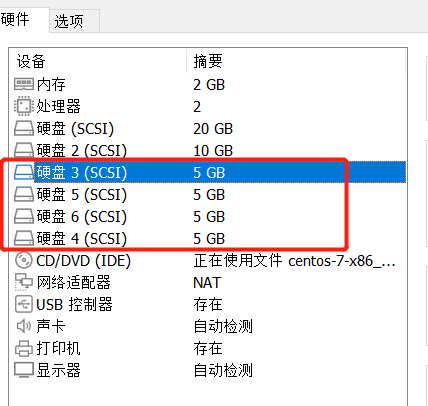

1. Add four disks to the virtual machine

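2. Partition the new disks. (The array below is built on the whole disks /dev/sdc to /dev/sdf rather than on partitions such as /dev/sdc1, so mdadm warns that these partition tables will be lost; this step can be skipped.)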

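    [root@localhost ~]# fdisk /dev/sdc
    Welcome to fdisk (util-linux 2.23.2).

    Changes will remain in memory only, until you decide to write them.
    Be careful before using the write command.

    Device does not contain a recognized partition table
    Building a new DOS disklabel with disk identifier 0xdac4b323.

    Command (m for help): n          # create a new partition
    Partition type:
       p   primary (0 primary, 0 extended, 4 free)
       e   extended
    Select (default p): p            # primary partition
    Partition number (1-4, default 1):
    First sector (2048-10485759, default 2048):
    Using default value 2048
    Last sector, +sectors or +size{K,M,G} (2048-10485759, default 10485759): 5G
    Value out of range.
    Last sector, +sectors or +size{K,M,G} (2048-10485759, default 10485759):
    Using default value 10485759
    Partition 1 of type Linux and of size 5 GiB is set

    Command (m for help): w          # write the partition table and exit
    The partition table has been altered!

    Calling ioctl() to re-read partition table.
    Syncing disks.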
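The fdisk session rejects `5G` and then falls back to the default last sector. A little sector arithmetic shows that the default is the right answer anyway: on a 5 GiB disk there is no room for a full 5 GiB partition once the first 2048 sectors are reserved for alignment. This sketch assumes 512-byte sectors, which is consistent with the 5 GiB size fdisk reports for sectors 0 to 10485759.

```bash
#!/usr/bin/env bash
# Sector arithmetic for the fdisk session above (512-byte sectors assumed).
sector=512
first_sector=2048          # default start sector offered by fdisk
last_sector=10485759       # highest sector offered by fdisk

disk_bytes=$(( (last_sector + 1) * sector ))
echo "disk size: $(( disk_bytes / 1024 / 1024 / 1024 )) GiB"

want=$(( 5 * 1024 * 1024 * 1024 / sector ))   # number of sectors in +5G
end=$(( first_sector + want - 1 ))            # where a +5G partition would end
if (( end > last_sector )); then
  echo "+5G would end at sector $end, past $last_sector: accept the default instead"
fi

usable=$(( (last_sector - first_sector + 1) * sector / 1024 / 1024 ))
echo "largest partition: $usable MiB"
```

The largest possible partition is 5119 MiB, which fdisk rounds to "5 GiB" in its summary line.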

3. Create the RAID array

    [root@localhost ~]# mdadm -Cv /dev/md0 -a yes -l 10 -n 4 /dev/sdc /dev/sdd /dev/sde /dev/sdf
    mdadm: layout defaults to n2
    mdadm: layout defaults to n2
    mdadm: chunk size defaults to 512K
    mdadm: /dev/sdc appears to be part of a raid array:
           level=raid10 devices=4 ctime=Thu Apr 28 13:50:25 2022
    mdadm: partition table exists on /dev/sdc but will be lost or
           meaningless after creating array
    mdadm: /dev/sdd appears to be part of a raid array:
           level=raid10 devices=4 ctime=Thu Apr 28 13:50:25 2022
    mdadm: partition table exists on /dev/sdd but will be lost or
           meaningless after creating array
    mdadm: /dev/sde appears to be part of a raid array:
           level=raid10 devices=4 ctime=Thu Apr 28 13:50:25 2022
    mdadm: partition table exists on /dev/sde but will be lost or
           meaningless after creating array
    mdadm: /dev/sdf appears to be part of a raid array:
           level=raid10 devices=4 ctime=Thu Apr 28 13:50:25 2022
    mdadm: partition table exists on /dev/sdf but will be lost or
           meaningless after creating array
    mdadm: size set to 5237760K
    Continue creating array?
    Continue creating array? (y/n) y
    mdadm: Defaulting to version 1.2 metadata
    mdadm: array /dev/md0 started.

4. Format and mount the RAID array

    [root@localhost ~]# mkfs.ext4 /dev/md0
    mke2fs 1.42.9 (28-Dec-2013)
    Filesystem label=
    OS type: Linux
    Block size=4096 (log=2)
    Fragment size=4096 (log=2)
    Stride=128 blocks, Stripe width=256 blocks
    655360 inodes, 2618880 blocks
    130944 blocks (5.00%) reserved for the super user
    First data block=0
    Maximum filesystem blocks=2151677952
    80 block groups
    32768 blocks per group, 32768 fragments per group
    8192 inodes per group
    Superblock backups stored on blocks:
            32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632

    Allocating group tables: done
    Writing inode tables: done
    Creating journal (32768 blocks): done
    Writing superblocks and filesystem accounting information: done

    [root@localhost ~]# mount /dev/md0 /RAID/     # the /RAID directory must already exist (mkdir /RAID)
    [root@localhost ~]# df -TH
    /dev/md0       ext4   11G   38M  9.9G   1% /RAID

5. Mount permanently

    [root@localhost ~]# vi /etc/fstab      # append the /dev/md0 line at the end
    /dev/mapper/centos-root  /       xfs   defaults  0 0
    UUID=41ff41fd-8e69-450d-9ebb-7551b9a76153 /boot xfs defaults 0 0
    /dev/mapper/centos-swap  swap    swap  defaults  0 0
    /dev/sdb1                /nesFs  xfs   defaults  0 0
    /dev/md0                 /RAID   ext4  defaults  0 0
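A malformed /etc/fstab line (for example, several entries accidentally joined on one line) can make mounts fail at the next boot, so it is worth checking the file after editing it by hand. A minimal sketch: the helper name `check_fstab` is hypothetical, and it only checks the field count. Per fstab(5), each entry has 4 to 6 whitespace-separated fields, since the dump and pass fields may be omitted.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report fstab lines that do not have 4 to 6 fields
# (device, mount point, type, options, [dump], [pass]).
# Returns non-zero if any bad line was found.
check_fstab() {
  awk '
    /^[ \t]*(#|$)/ { next }                 # skip comments and blank lines
    NF < 4 || NF > 6 {
      printf "line %d: expected 4-6 fields, got %d\n", NR, NF
      bad = 1
    }
    END { exit bad }
  ' "${1:-/etc/fstab}"
}
```

Running `check_fstab` before `mount -a` or a reboot catches the most common copy-and-paste mistakes; it does not validate device names or mount options.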

6. View the array details

    [root@localhost ~]# mdadm -D
    mdadm: No devices given.
    [root@localhost ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Thu Apr 28 14:06:40 2022
            Raid Level : raid10
            Array Size : 10475520 (9.99 GiB 10.73 GB)
         Used Dev Size : 5237760 (5.00 GiB 5.36 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent

           Update Time : Thu Apr 28 14:08:34 2022
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0

                Layout : near=2
            Chunk Size : 512K

    Consistency Policy : resync

                  Name : localhost.localdomain:0  (local to host localhost.localdomain)
                  UUID : 4aeb4a9c:b44cddab:f8595c65:ebdc5a0e
                Events : 19

        Number   Major   Minor   RaidDevice State
           0       8       32        0      active sync set-A   /dev/sdc
           1       8       48        1      active sync set-B   /dev/sdd
           2       8       64        2      active sync set-A   /dev/sde
           3       8       80        3      active sync set-B   /dev/sdf
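mdadm reports sizes in KiB and prints both a binary (GiB) and a decimal (GB) figure. This is also why `df -TH`, which uses powers of 1000, showed the filesystem on this array as 11G although the GiB figure is just under 10. A minimal sketch of the conversion (the function name `kib_to_units` is made up; awk does the floating-point division):

```bash
#!/usr/bin/env bash
# Convert a KiB figure from `mdadm -D` into binary (GiB) and decimal (GB) units.
kib_to_units() {
  awk -v k="$1" 'BEGIN { printf "%.2f GiB %.2f GB\n", k / 1024 / 1024, k * 1024 / 1e9 }'
}

kib_to_units 10475520     # "Array Size" of /dev/md0
kib_to_units 5237760      # "Used Dev Size" (per member disk)
```

The results reproduce the parenthesized figures in the mdadm output above.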

7. Stop the array

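    [root@localhost ~]# mdadm -S /dev/md0
    mdadm: stopped /dev/md0
    [root@localhost ~]# mdadm -D       # without a device argument this always prints the error below
    mdadm: No devices given.
    # To confirm the array is gone, check that md0 no longer appears in: cat /proc/mdstat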

1.3 Repairing the array

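In a RAID10 array, one failed disk inside one of the RAID1 mirrors does not stop the RAID10 array from working. Once a new disk has been bought, replace the failed one with the mdadm command; in the meantime, files in the /RAID directory can still be created and deleted normally. Because the disks here are simulated in a virtual machine, reboot first, then add the new disk to the RAID array.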
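The claim that one failed disk per mirror is harmless can be checked against the layout mdadm reports. With layout near=2 on four devices, RaidDevice 0 and 1 hold the same data, as do RaidDevice 2 and 3 (in the mdadm -D output of this section, each pair has one set-A and one set-B member). The bash sketch below hard-codes those pairs for /dev/sdc to /dev/sdf, an assumption taken from this particular array, and reports whether a given set of failed disks loses data. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Mirror pairs of this 4-disk near=2 RAID10 (RaidDevice 0+1 and 2+3).
# Assumption: device names as shown by `mdadm -D /dev/md0` in this section.
pairs=("/dev/sdc /dev/sdd" "/dev/sde /dev/sdf")

# Usage: raid10_survives FAILED_DISK...
# Returns 1 if both members of some mirror pair have failed.
raid10_survives() {
  local pair a b f a_bad b_bad
  for pair in "${pairs[@]}"; do
    read -r a b <<< "$pair"
    a_bad=0; b_bad=0
    for f in "$@"; do
      [ "$f" = "$a" ] && a_bad=1
      [ "$f" = "$b" ] && b_bad=1
    done
    if (( a_bad && b_bad )); then
      echo "data lost: both $a and $b failed"
      return 1
    fi
  done
  echo "array still usable (degraded if any disk failed)"
}

raid10_survives /dev/sdc
```

For example, losing /dev/sdc and /dev/sde keeps the array running, while losing /dev/sdc and /dev/sdd destroys it.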

1. Simulate a failure of /dev/sdc

    [root@localhost ~]# mdadm /dev/md0 -f /dev/sdc
    mdadm: set /dev/sdc faulty in /dev/md0
    [root@localhost ~]# mdadm -D
    mdadm: No devices given.
    [root@localhost ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Thu Apr 28 14:06:40 2022
            Raid Level : raid10
            Array Size : 10475520 (9.99 GiB 10.73 GB)
         Used Dev Size : 5237760 (5.00 GiB 5.36 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent

           Update Time : Thu Apr 28 14:22:56 2022
                 State : clean, degraded
        Active Devices : 3
       Working Devices : 3
        Failed Devices : 1
         Spare Devices : 0

                Layout : near=2
            Chunk Size : 512K

    Consistency Policy : resync

                  Name : localhost.localdomain:0  (local to host localhost.localdomain)
                  UUID : 4aeb4a9c:b44cddab:f8595c65:ebdc5a0e
                Events : 21

        Number   Major   Minor   RaidDevice State
           -       0        0        0      removed
           1       8       48        1      active sync set-B   /dev/sdd
           2       8       64        2      active sync set-A   /dev/sde
           3       8       80        3      active sync set-B   /dev/sdf

           0       8       32        -      faulty   /dev/sdc
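After the failure, `mdadm -D` changes State to "clean, degraded" and Failed Devices to 1. A hypothetical helper that reads that output and sets an exit status (for example, for a periodic check) could look like the sketch below; the field names come from the output above, and the function name `md_health` is made up. For real alerting, mdadm's own `--monitor` mode is the standard tool.

```bash
#!/usr/bin/env bash
# Hypothetical check: read `mdadm -D` output on stdin, print a summary,
# and return 1 if the array is degraded or has failed devices.
md_health() {
  awk -F' : ' '
    $1 ~ /^[ \t]*State$/          { state = $2 }
    $1 ~ /^[ \t]*Failed Devices$/ { failed = $2 + 0 }
    END {
      sub(/[ \t]+$/, "", state)       # drop any trailing padding
      printf "state=%s failed=%d\n", state, failed
      if (state ~ /degraded/ || failed > 0) exit 1
      exit 0
    }'
}
```

On a real system this would be used as `mdadm -D /dev/md0 | md_health || echo "array needs attention"`.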

2. Add the disk back to the array

    [root@localhost ~]# mdadm /dev/md0 -a /dev/sdc     # after the reboot, add the disk back; it rejoins as RaidDevice 0 below
    [root@localhost ~]# mdadm -D /dev/md0
    /dev/md0:
               Version : 1.2
         Creation Time : Thu Apr 28 14:06:40 2022
            Raid Level : raid10
            Array Size : 10475520 (9.99 GiB 10.73 GB)
         Used Dev Size : 5237760 (5.00 GiB 5.36 GB)
          Raid Devices : 4
         Total Devices : 4
           Persistence : Superblock is persistent

           Update Time : Thu Apr 28 14:39:06 2022
                 State : clean
        Active Devices : 4
       Working Devices : 4
        Failed Devices : 0
         Spare Devices : 0

                Layout : near=2
            Chunk Size : 512K

    Consistency Policy : resync

                  Name : localhost.localdomain:0  (local to host localhost.localdomain)
                  UUID : 4aeb4a9c:b44cddab:f8595c65:ebdc5a0e
                Events : 44

        Number   Major   Minor   RaidDevice State
           4       8       32        0      active sync set-A   /dev/sdc
           1       8       48        1      active sync set-B   /dev/sdd
           2       8       64        2      active sync set-A   /dev/sde
           3       8       80        3      active sync set-B   /dev/sdf
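The repair above relies on a reboot because the disks are virtual. On a machine where the failed disk can be swapped while running, the same repair can be done with the -f, -r and -a options listed earlier. As a sketch (the function names and the replacement disk /dev/sdi are hypothetical), a dry-run wrapper prints the commands by default and only runs them when DRY_RUN=0:

```bash
#!/usr/bin/env bash
# Print commands instead of running them unless DRY_RUN=0 (run as root then).
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Usage: replace_disk ARRAY OLD_DISK NEW_DISK
replace_disk() {
  local md=$1 old=$2 new=$3
  run mdadm "$md" -f "$old"    # mark the old disk faulty (harmless if already failed)
  run mdadm "$md" -r "$old"    # remove it from the array
  run mdadm "$md" -a "$new"    # add the replacement; mdadm starts rebuilding onto it
  run mdadm -D "$md"           # check State and rebuild progress
}

replace_disk /dev/md0 /dev/sdc /dev/sdi    # /dev/sdi: hypothetical new disk
```

Keeping the dry run as the default means the sequence can be reviewed before anything touches the array.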

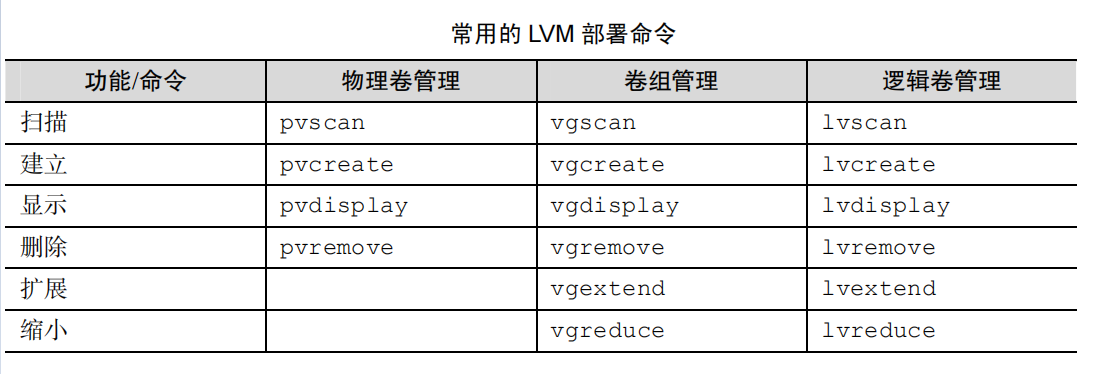

2. LVM

2.1 Deploying logical volumes

1. Enable LVM on the new disks (create physical volumes)

    # Make the two new disks usable by LVM
    [root@localhost ~]# pvcreate /dev/sdg /dev/sdh
      Physical volume "/dev/sdg" successfully created.
      Physical volume "/dev/sdh" successfully created.

2. Add the disks to the storage volume group

    [root@localhost ~]# vgcreate storage /dev/sdg /dev/sdh
      Volume group "storage" successfully created
    [root@localhost ~]# vgdisplay
      --- Volume group ---
      VG Name               centos
      System ID
      Format                lvm2
      Metadata Areas        1
      Metadata Sequence No  3
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                2
      Open LV               2
      Max PV                0
      Cur PV                1
      Act PV                1
      VG Size               <19.00 GiB
      PE Size               4.00 MiB
      Total PE              4863
      Alloc PE / Size       4863 / <19.00 GiB
      Free  PE / Size       0 / 0
      VG UUID               qRB4Xz-Z6BH-NNJz-yKdB-dkyB-3N1W-Vyko4D

      --- Volume group ---
      VG Name               storage
      System ID
      Format                lvm2
      Metadata Areas        2
      Metadata Sequence No  1
      VG Access             read/write
      VG Status             resizable
      MAX LV                0
      Cur LV                0
      Open LV               0
      Max PV                0
      Cur PV                2
      Act PV                2
      VG Size               9.99 GiB
      PE Size               4.00 MiB
      Total PE              2558
      Alloc PE / Size       0 / 0
      Free  PE / Size       2558 / 9.99 GiB
      VG UUID               gJACUj-0EUM-Ji8B-0quN-8cgC-Md7y-xlnHGu

3. Create (carve out) logical volumes

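Pay attention to the size unit when carving out logical volumes; there are two ways to specify the size. The first is by capacity, with the -L option: for example, -L 150M creates a logical volume of 150 MB. The second is by the number of basic units (physical extents), with the -l option; each extent is 4 MB by default. For example, -l 37 creates a logical volume of 37 × 4 MB = 148 MB. A size given with -L that is not a multiple of the extent size is rounded up to whole extents, which is why -L 150M below produces 152 MiB.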
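The rounding rule can be reproduced with integer arithmetic. This sketch assumes the 4 MiB PE size shown by vgdisplay and uses a made-up function name; it reproduces the sizes reported by lvcreate and lvextend in this section.

```bash
#!/usr/bin/env bash
# Round a requested size (MiB) up to whole physical extents.
# Assumption: PE size 4 MiB ("PE Size 4.00 MiB" in vgdisplay).
pe_mib=4

extents_for() {
  local mib=$1
  local n=$(( (mib + pe_mib - 1) / pe_mib ))   # ceiling division
  echo "$n extents = $(( n * pe_mib )) MiB"
}

extents_for 150                          # lvcreate -L 150M
extents_for 290                          # lvextend -L 290M
echo "-l 37 = $(( 37 * pe_mib )) MiB"    # lvcreate -l 37
```

Choosing sizes that are already multiples of the extent size (such as 100M) avoids any rounding.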

    # Method 1: size by capacity (-L); the default name lvol0 is used
    [root@localhost ~]# lvcreate -L 150M storage
      Rounding up size to full physical extent 152.00 MiB
      Logical volume "lvol0" created.
    # Method 2: size by number of extents (-l), named with -n
    [root@localhost ~]# lvcreate -n vo -l 37 storage
      Logical volume "vo" created.
    [root@localhost ~]# lvdisplay        # show the logical volumes
      --- Logical volume ---
      LV Path                /dev/storage/lvol0
      LV Name                lvol0
      VG Name                storage
      LV UUID                71Avnv-XsAg-1faG-cQqB-ViSM-uUuF-bW9ZMs
      LV Write Access        read/write
      LV Creation host, time localhost.localdomain, 2022-04-28 16:44:13 +0800
      LV Status              available
      # open                 0
      LV Size                152.00 MiB
      Current LE             38
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     8192
      Block device           253:2

      --- Logical volume ---
      LV Path                /dev/storage/vo
      LV Name                vo
      VG Name                storage
      LV UUID                S2pXqR-2WVH-sAFA-0Gdn-Rhj8-NFsb-2dZ7N5
      LV Write Access        read/write
      LV Creation host, time localhost.localdomain, 2022-04-28 16:45:22 +0800
      LV Status              available
      # open                 0
      LV Size                148.00 MiB
      Current LE             37
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     8192
      Block device           253:3

4. Format the logical volumes and mount them

    [root@localhost ~]# mkfs.ext4 /dev/storage/lvol0     # format as ext4; /dev/storage/vo is formatted the same way (not shown)
    mke2fs 1.42.9 (28-Dec-2013)
    Filesystem label=
    OS type: Linux
    Block size=1024 (log=0)
    Fragment size=1024 (log=0)
    Stride=0 blocks, Stripe width=0 blocks
    38912 inodes, 155648 blocks
    7782 blocks (5.00%) reserved for the super user
    First data block=1
    Maximum filesystem blocks=33816576
    19 block groups
    8192 blocks per group, 8192 fragments per group
    2048 inodes per group
    Superblock backups stored on blocks:
            8193, 24577, 40961, 57345, 73729

    Allocating group tables: done
    Writing inode tables: done
    Creating journal (4096 blocks): done
    Writing superblocks and filesystem accounting information: done

    [root@localhost data]# mount /dev/storage/vo /data/a
    [root@localhost data]# mount /dev/storage/lvol0 /data/b/
    [root@localhost data]# df -Th
    /dev/mapper/storage-vo     ext4  140M  1.6M  128M   2% /data/a
    /dev/mapper/storage-lvol0  ext4  144M  1.6M  132M   2% /data/b

5. Make the mounts permanent

    echo "/dev/mapper/storage-vo /data/a ext4 defaults 0 0" >> /etc/fstab
    echo "/dev/mapper/storage-lvol0 /data/b ext4 defaults 0 0" >> /etc/fstab
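Appending with `>>` adds a duplicate entry every time the step is repeated. A minimal sketch of an idempotent alternative (the helper name is hypothetical) appends the line only if exactly the same line is not already in the file; a line that differs only in spacing is still treated as new.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append an fstab entry only if the identical line
# is not already present. Usage: add_fstab_entry LINE [FILE]
add_fstab_entry() {
  local line=$1 file=${2:-/etc/fstab}
  if grep -qxF -- "$line" "$file" 2>/dev/null; then
    echo "already present: $line"
  else
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Used as root, `add_fstab_entry "/dev/mapper/storage-vo /data/a ext4 defaults 0 0"` can be run any number of times and leaves a single entry.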

2.2 Extending a logical volume

1. Extend the logical volume

    [root@localhost vo]# lvextend -L 290M /dev/storage/vo
      Rounding size to boundary between physical extents: 292.00 MiB.
      Size of logical volume storage/vo changed from 148.00 MiB (37 extents) to 292.00 MiB (73 extents).
      Logical volume storage/vo successfully resized.

2. Check the filesystem and grow it to the new size

    [root@localhost vo]# e2fsck -f /dev/storage/vo     # optional; e2fsck refuses to check a mounted filesystem
    e2fsck 1.42.9 (28-Dec-2013)
    /dev/storage/vo is mounted.
    e2fsck: Cannot continue, aborting.

    [root@localhost vo]# resize2fs /dev/storage/vo     # ext4 can be grown while mounted
    resize2fs 1.42.9 (28-Dec-2013)
    Filesystem at /dev/storage/vo is mounted on /data/vo; on-line resizing required
    old_desc_blocks = 2, new_desc_blocks = 3
    The filesystem on /dev/storage/vo is now 299008 blocks long.
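Because e2fsck aborts on a mounted filesystem, a script can test for that first. A minimal sketch, with a hypothetical helper name: it looks the device up in /proc/mounts, where LVM volumes appear under their /dev/mapper/VG-LV name (the same name df prints above, e.g. /dev/mapper/storage-vo), so pass that form rather than /dev/storage/vo.

```bash
#!/usr/bin/env bash
# Hypothetical guard: succeed if DEVICE appears as a mounted source.
# Usage: is_mounted DEVICE [MOUNTS_FILE]   (default: /proc/mounts)
is_mounted() {
  local dev=$1 mounts=${2:-/proc/mounts}
  awk -v d="$dev" '$1 == d { found = 1 } END { exit !found }' "$mounts"
}
```

For example, `is_mounted /dev/mapper/storage-vo && resize2fs /dev/storage/vo` grows the filesystem online, while an unmounted volume can go through `e2fsck -f` first.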

3. Check the capacity

    [root@localhost vo]# mount -a      # optional: mounts any /etc/fstab entries that are not mounted yet
    [root@localhost vo]# df -TH
    /dev/mapper/storage-vo ext4  293M  2.2M  274M   1% /data/vo

2.3 Shrinking a logical volume

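Compared with extending a logical volume, shrinking one carries a greater risk of data loss, so always back up the data before doing it in a production environment. Remember to unmount the filesystem before shrinking.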

1. Check the filesystem integrity

    [root@localhost lvol0]# umount /data/vo/      # unmount first
    [root@localhost lvol0]# e2fsck -f /dev/storage/vo
    e2fsck 1.42.9 (28-Dec-2013)
    Pass 1: Checking inodes, blocks, and sizes
    Pass 2: Checking directory structure
    Pass 3: Checking directory connectivity
    Pass 4: Checking reference counts
    Pass 5: Checking group summary information
    /dev/storage/vo: 12/74000 files (0.0% non-contiguous), 15509/299008 blocks

2. Shrink: the filesystem first, then the logical volume

    [root@localhost lvol0]# resize2fs /dev/storage/vo 100M      # shrink the filesystem first
    resize2fs 1.42.9 (28-Dec-2013)
    Resizing the filesystem on /dev/storage/vo to 102400 (1k) blocks.
    The filesystem on /dev/storage/vo is now 102400 blocks long.

    [root@localhost lvol0]# lvreduce -L 100M /dev/storage/vo    # then shrink the logical volume
      WARNING: Reducing active logical volume to 100.00 MiB.
      THIS MAY DESTROY YOUR DATA (filesystem etc.)
    Do you really want to reduce storage/vo? [y/n]: y
      Size of logical volume storage/vo changed from 292.00 MiB (73 extents) to 100.00 MiB (25 extents).
      Logical volume storage/vo successfully resized.
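The order matters: if the logical volume ends up smaller than the filesystem on it, the end of the filesystem is cut off. A hypothetical pre-flight check (function name made up, 4 MiB PE size assumed as in vgdisplay) refuses sizes that are not whole extents, so no rounding can surprise you, and refuses a filesystem larger than the target LV:

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check before `resize2fs DEV FS_SIZE` + `lvreduce -L LV_SIZE DEV`.
# Assumption: PE size 4 MiB. Usage: check_shrink FS_MIB LV_MIB
pe_mib=4

check_shrink() {
  local fs_mib=$1 lv_mib=$2
  if (( lv_mib % pe_mib != 0 )); then
    echo "choose an LV size that is a multiple of ${pe_mib} MiB" >&2
    return 1
  fi
  if (( fs_mib > lv_mib )); then
    echo "unsafe: a ${fs_mib} MiB filesystem does not fit in a ${lv_mib} MiB LV" >&2
    return 1
  fi
  echo "ok: shrink the filesystem to ${fs_mib} MiB first, then the LV to ${lv_mib} MiB"
}

check_shrink 100 100    # the values used above
```

LVM can also do both steps in one command with `lvreduce -r` (`--resizefs`), which resizes the filesystem through fsadm; a backup is still advisable either way.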

3. Mount and check the size

    [root@localhost lvol0]# mount /dev/storage/vo /data/vo/
    [root@localhost lvol0]# df -Th
    /dev/mapper/storage-vo ext4   93M  1.6M   85M   2% /data/vo

2.4 Logical volume snapshots (I haven't fully figured out what this is for yet)

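LVM also has a snapshot volume feature, similar to the restore-point feature of virtual machine software. For example, you can take a snapshot of a logical volume; if you later find that data has been changed by mistake, you can use the snapshot to overwrite the volume and restore it.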

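LVM snapshot volumes have two characteristics:

First: the snapshot volume should be as large as the logical volume. (Strictly, it only needs room for the blocks that change after the snapshot is taken, but if it fills up, the snapshot becomes invalid; making it the same size rules that out.)

Second: a snapshot can be used only once; after a restore, it is deleted automatically.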

1. Create a snapshot volume

    [root@localhost vo]# vgdisplay      # first check that the volume group has enough free space
    [root@localhost data]# lvcreate -L 100M -s -n SNAP /dev/storage/vo    # -s: snapshot, -n: name
      Logical volume "SNAP" created.
    [root@localhost data]# lvdisplay

2. Observe the effect

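Before adding files to the logical volume, lvdisplay shows "Allocated to snapshot 0.01%" for the snapshot; after adding files, it shows "Allocated to snapshot 5.45%". The snapshot keeps the original copies of the blocks that change on the logical volume, so its usage grows as the volume is modified.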
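Since snapshot usage grows with every change, it can be worth watching. A hypothetical monitor (function names made up) extracts the "Allocated to snapshot" percentage from lvdisplay output and warns past a threshold; awk does the comparison because bash arithmetic has no decimals. The reason for watching it, as noted above, is that a classic LVM snapshot that fills up becomes invalid.

```bash
#!/usr/bin/env bash
# Extract the "Allocated to snapshot" percentage from lvdisplay output on stdin.
snap_usage() {
  awk '/Allocated to snapshot/ { v = $NF; sub(/%$/, "", v); print v }'
}

# Usage: lvdisplay /dev/storage/SNAP | snap_check [LIMIT_PERCENT]
# Returns 1 when usage is at or above the limit (default 80).
snap_check() {
  local limit=${1:-80} pct
  pct=$(snap_usage)
  if awk -v p="$pct" -v l="$limit" 'BEGIN { exit !(p + 0 >= l + 0) }'; then
    echo "warning: snapshot ${pct}% full (limit ${limit}%)"
    return 1
  fi
  echo "snapshot ${pct}% full"
}
```

With the 5.45% figure above, the check passes; it would start warning once the snapshot reached 80%.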

3. Restore from the snapshot

    [root@localhost dev]# umount /data/vo/
    [root@localhost dev]# lvconvert --merge /dev/storage/SNAP     # roll vo back to the snapshot
      Merging of volume storage/SNAP started.
      storage/vo: Merged: 100.00%
    [root@localhost dev]# mount /dev/storage/vo /data/vo/

4. Check the snapshot

    [root@localhost dev]# lvdisplay      # the snapshot volume is gone

2.5 Removing logical volumes

1. Unmount

    [root@localhost vo]# umount /data/vo
    [root@localhost vo]# vi /etc/fstab      # delete the permanent mount entry added earlier

2. Remove the logical volume

    [root@localhost vo]# lvremove /dev/storage/vo
    Do you really want to remove active logical volume vo? [y/n]: y
      Logical volume "vo" successfully removed

3. Remove the volume group

    [root@localhost vo]# vgremove storage
      Volume group "storage" successfully removed

4. Remove the physical volumes

    [root@localhost vo]# pvremove /dev/sdg /dev/sdh
      Labels on physical volume "/dev/sdg" successfully wiped
      Labels on physical volume "/dev/sdh" successfully wiped
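The removal steps run top-down because each layer is built on the one below it: filesystem on LV, LV in VG, VG on PVs. As a sketch (function names hypothetical), the same sequence with a dry-run wrapper that prints the commands unless DRY_RUN=0. Any other logical volume in the group (lvol0 here) is still there when vgremove runs, so it should be unmounted and removed as well.

```bash
#!/usr/bin/env bash
# Print commands instead of running them unless DRY_RUN=0 (run as root then).
run() {
  if [ "${DRY_RUN:-1}" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Usage: teardown_lv VG LV MOUNTPOINT PV...
teardown_lv() {
  local vg=$1 lv=$2 mnt=$3; shift 3
  run umount "$mnt"              # 1. unmount (and drop its /etc/fstab entry)
  run lvremove "/dev/$vg/$lv"    # 2. remove the logical volume
  run vgremove "$vg"             # 3. remove the volume group
  run pvremove "$@"              # 4. wipe the LVM labels from the disks
}

teardown_lv storage vo /data/vo /dev/sdg /dev/sdh
```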