- What : A K8s-native Pipeline resource

- How : Interacts with Tektoncd Pipeline

- Tektoncd Pipeline concepts

- Key objects

- Summary

What : A K8s-native Pipeline resource

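1. Cloud native:

- Uses a declarative API and runs on K8s

- Uses containers as building blocks (a Task is made up of a set of Steps, each of which is a container)

- Treats the K8s cluster as a first-class type

2. Decoupled:

- A Task or Pipeline is defined once and can be deployed to any K8s cluster

- The Tasks that make up a Pipeline can be run on their own

- Resources such as Git repos and storage (GCS, OSS) can be exchanged easily between tasks

3. Typed:

- For resources such as Image, typing means implementations can be swapped easily between Tasks (support for this is still fairly weak)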

How : Interacts with Tektoncd Pipeline

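Prerequisite: a working K8s cluster (for example a local minikube). For deploying Tektoncd, see reference ².

Tektoncd bundles all the YAML that needs to be declared into release.yaml, so the key command in the deployment steps is: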

kubectl apply --filename https://storage.googleapis.com/knative-releases/build-pipeline/latest/release.yaml

Checking the deployment status: once both the webhook and controller pods are in the Running state, the framework has been deployed successfully.

kubectl get pods -n tekton-pipelines

    ➜ [/Users/user/k8s] git:(master) kubectl get pod -n tekton-pipelines
    NAME                                           READY   STATUS    RESTARTS   AGE
    tekton-pipelines-controller-7b5c6fb498-xwhgh   1/1     Running   0          3d
    tekton-pipelines-webhook-799cb595db-7v8vs      1/1     Running   0          3d
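The status check above can also be scripted. The following is a minimal sketch, assuming the default column layout of `kubectl get pods` shown above; the function name `all_pods_running` is hypothetical and the sample text simply repeats the output from this article.

```python
def all_pods_running(kubectl_output: str) -> bool:
    """Return True when every pod row is Running and all its containers are ready."""
    rows = [line.split() for line in kubectl_output.strip().splitlines()]
    if len(rows) < 2:
        return False  # no header or no pods listed
    header, pods = rows[0], rows[1:]
    ready_col = header.index("READY")
    status_col = header.index("STATUS")
    for pod in pods:
        ready, total = pod[ready_col].split("/")
        if pod[status_col] != "Running" or ready != total:
            return False
    return True


sample = """\
NAME                                           READY   STATUS    RESTARTS   AGE
tekton-pipelines-controller-7b5c6fb498-xwhgh   1/1     Running   0          3d
tekton-pipelines-webhook-799cb595db-7v8vs      1/1     Running   0          3d
"""
print(all_pods_running(sample))
```

In practice the text would come from running `kubectl get pods -n tekton-pipelines` and capturing its standard output.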

Tektoncd Pipeline concepts

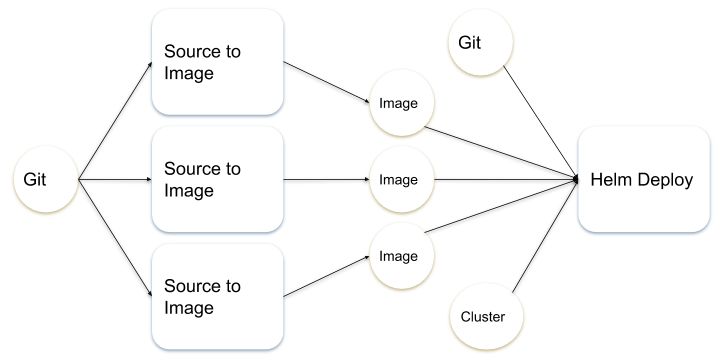

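As the figure shows, a pipeline in software development usually includes steps such as source version control, build, unit testing, deployment, automated integration testing, and deployment again.

For a pipeline running on K8s, the responsibilities of each stage do not change in essence from a business point of view. From a technical-architecture point of view, however, the advantages of a pipeline built on container scheduling and orchestration gradually become apparent.

On the one hand, each stage, which can also be called a Task, is expressed as a container. Containers are well known to be very good at packaging an application's runtime environment and isolating it logically, which helps keep each Task single-purpose and also helps the pipeline run stably. On the other hand, a serverless architecture helps release machine resources and cut business costs.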

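Key objects

The key objects fall into two groups: templates (Task, PipelineResource, Pipeline) and runtime configuration (TaskRun, PipelineRun). A TaskRun depends on a Task (given through a ref or a taskSpec), and a PipelineRun depends on a Pipeline (given through a ref). Their responsibilities are as follows: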

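| Name | Responsibility |
|---|---|
| PipelineResource | Abstract interface for the inputs and outputs of a Task or Pipeline. Four types are supported by default: git, image, storage and cluster. Each has its own required fields, such as url and revision, and at run time each is usually mapped to a container in the pod. |
| Task | Template defining a single task. Its steps map to the containers of a K8s pod, and it also declares input and output resources. |
| TaskRun | An instance of a task. It is linked to a Task through taskRef, or carries the definition inline through taskSpec. The trigger is one of two types: manual or pipelineRun. |
| Pipeline | A pipeline that arranges n (n >= 1) Tasks in a specific order to form a DAG (directed acyclic graph). |
| PipelineRun | An instance of a pipeline, linked to a Pipeline through pipelineRef. The only trigger type is manual. |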
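The template/run split in the table can be illustrated with a small lookup. This is only a sketch under stated assumptions: plain dictionaries stand in for the K8s objects, the `tasks` mapping stands in for the cluster, and `resolve_task_spec` is a hypothetical helper, not part of Tektoncd.

```python
def resolve_task_spec(task_run: dict, tasks: dict) -> dict:
    """Return the Task spec a TaskRun would execute.

    `tasks` maps Task names to their specs and stands in for the cluster.
    """
    spec = task_run["spec"]
    if "taskSpec" in spec:
        return spec["taskSpec"]  # definition carried inline by the TaskRun
    if "taskRef" in spec:
        return tasks[spec["taskRef"]["name"]]  # definition looked up by name
    raise ValueError("TaskRun needs either taskRef or taskSpec")


tasks = {"echo-hello-world": {"steps": [{"name": "echo", "image": "ubuntu"}]}}
by_ref = {"spec": {"taskRef": {"name": "echo-hello-world"}}}
inline = {"spec": {"taskSpec": {"steps": [{"name": "inline", "image": "ubuntu"}]}}}

print(resolve_task_spec(by_ref, tasks)["steps"][0]["name"])
print(resolve_task_spec(inline, tasks)["steps"][0]["name"])
```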

Task

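A Task can exist on its own or as part of a pipeline. Each Task runs as a Pod on the K8s cluster, and each step declares its own container.

In the example below, the steps field maps to the container array of K8s corev1. In other words, each task has to package the logic it runs into an image and then specify command and args.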

    apiVersion: tekton.dev/v1alpha1
    kind: Task
    metadata:
      namespace: tekton-pipelines
      name: echo-hello-world
    spec:
      steps:
      - name: echo
        image: ubuntu
        command:
        - echo
        args:
        - "hello world"
      - name: echo-1
        image: ubuntu
        command:
        - echo
        args:
        - "hello world-1"

Result:

    ➜ [/Users/user/k8s/knative/tasks] git:(master) ✗ kubectl apply -f task.yaml
    task.tekton.dev "echo-hello-world" created

Tip: every step of a Task must specify command. Otherwise the controller cannot determine the container's entrypoint, and starting the task fails.

TaskRun

At this point the Task object has been submitted to K8s, but Tektoncd does not start a Task instance.

As mentioned above, a Task object is only a template. Next, we configure a TaskRun to trigger this Task.

    apiVersion: tekton.dev/v1alpha1
    kind: TaskRun
    metadata:
      namespace: tekton-pipelines
      name: echo-hello-world-task-run
    spec:
      taskRef:
        name: echo-hello-world
      trigger:
        type: manual

Result:

    ➜ [/Users/user/k8s/knative/tasks] git:(master) ✗ kubectl apply -f taskrun.yaml
    taskrun.tekton.dev "echo-hello-world-task-run" created

    ➜ [/Users/user/k8s/knative/tasks] git:(master) ✗ kubectl get pod -n tekton-pipelines
    NAME                                   READY   STATUS     RESTARTS   AGE
    echo-hello-world-task-run-pod-15631a   0/1     Init:2/3   0          8d

    ➜ [/Users/user/k8s/knative/taskrun] git:(master) ✗ kubectl get pod/echo-hello-world-task-run-pod-15631a -n tekton-pipelines -o yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: 2019-03-28T15:41:53Z
      labels:
        tekton.dev/task: echo-hello-world
        tekton.dev/taskRun: echo-hello-world-task-run
      name: echo-hello-world-task-run-pod-15631a
      namespace: tekton-pipelines
      ownerReferences:
      - apiVersion: tekton.dev/v1alpha1
        blockOwnerDeletion: true
        controller: true
        kind: TaskRun
        name: echo-hello-world-task-run
        uid: 02684736-5170-11e9-80eb-b6729aa295ab
      resourceVersion: "646847"
      selfLink: /api/v1/namespaces/tekton-pipelines/pods/echo-hello-world-task-run-pod-15631a
      uid: 026ad82d-5170-11e9-80eb-b6729aa295ab
    spec:
      containers:
      - args:
        - -wait_file
        - ""
        - -post_file
        - /builder/tools/0
        - -entrypoint
        - echo
        - --
        - hello world
        command:
        - /builder/tools/entrypoint
        env:
        - name: HOME
          value: /builder/home
        image: ubuntu
        imagePullPolicy: Always
        name: build-step-echo
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /builder/tools
          name: tools
        - mountPath: /workspace
          name: workspace
        - mountPath: /builder/home
          name: home
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-4c8qb
          readOnly: true
        workingDir: /workspace
      - args:
        - -wait_file
        - /builder/tools/0
        - -post_file
        - /builder/tools/1
        - -entrypoint
        - echo
        - --
        - hello world-1
        command:
        - /builder/tools/entrypoint
        env:
        - name: HOME
          value: /builder/home
        image: ubuntu
        imagePullPolicy: Always
        name: build-step-echo-1
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /builder/tools
          name: tools
        - mountPath: /workspace
          name: workspace
        - mountPath: /builder/home
          name: home
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-4c8qb
          readOnly: true
        workingDir: /workspace
      - command:
        - /ko-app/nop
        image: gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/nop@sha256:edf92a9d7e6f74cdc4a61b0ac62458d82e95e3786e479b789f41ffea67783183
        imagePullPolicy: IfNotPresent
        name: nop
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-4c8qb
          readOnly: true
      dnsPolicy: ClusterFirst
      initContainers:
      - command:
        - /ko-app/creds-init
        env:
        - name: HOME
          value: /builder/home
        image: gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/creds-init@sha256:dd7e347128e0dd1f80e4e419606fa1e204bc7b98fc981d74f068c192df26c410
        imagePullPolicy: IfNotPresent
        name: build-step-credential-initializer-z22bb
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /workspace
          name: workspace
        - mountPath: /builder/home
          name: home
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-4c8qb
          readOnly: true
        workingDir: /workspace
      - args:
        - -c
        - cp /ko-app/entrypoint /builder/tools/entrypoint
        command:
        - /bin/sh
        env:
        - name: HOME
          value: /builder/home
        image: gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/entrypoint@sha256:6eaa3e12f13089d2eab2072d5eaf789e1f2001565901b963034333f3dde21b3f
        imagePullPolicy: IfNotPresent
        name: build-step-place-tools
        resources: {}
        terminationMessagePath: /dev/termination-log
        terminationMessagePolicy: File
        volumeMounts:
        - mountPath: /builder/tools
          name: tools
        - mountPath: /workspace
          name: workspace
        - mountPath: /builder/home
          name: home
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-4c8qb
          readOnly: true
        workingDir: /workspace
      nodeName: minikube
      priority: 0
      restartPolicy: Never
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: default
      serviceAccountName: default
      terminationGracePeriodSeconds: 30
      tolerations:
      - effect: NoExecute
        key: node.kubernetes.io/not-ready
        operator: Exists
        tolerationSeconds: 300
      - effect: NoExecute
        key: node.kubernetes.io/unreachable
        operator: Exists
        tolerationSeconds: 300
      volumes:
      - emptyDir: {}
        name: tools
      - emptyDir: {}
        name: workspace
      - emptyDir: {}
        name: home
      - name: default-token-4c8qb
        secret:
          defaultMode: 420
          secretName: default-token-4c8qb
    status:
      phase: Succeeded
      podIP: 172.17.0.10
      qosClass: BestEffort
      startTime: 2019-03-28T15:41:53Z
      ...

The YAML above belongs to the pod that Tektoncd started for the TaskRun. It shows the following:

1. The labels generated by default make it easy to fetch the generated pod from code, by calling the pod list API with a label selector:

    tekton.dev/task: echo-hello-world
    tekton.dev/taskRun: echo-hello-world-task-run

2. The pod contains both initContainers and containers.

The initContainers use these images:

    gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/creds-init
    gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/entrypoint

The containers use these images:

    ubuntu
    ubuntu
    gcr.io/tekton-releases/github.com/tektoncd/pipeline/cmd/nop

In addition, the command of each ubuntu container has become /builder/tools/entrypoint, and its args now include -wait_file, -post_file and -entrypoint. The entrypoint binary wraps the command specified by the user and uses the order in which files are created to control the order in which the steps run.

3. The volumes field also shows the working directory workspace, the /builder/home directory, and so on.
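For the label lookup mentioned in point 1, the two labels can be turned into an equality-based label selector string. The sketch below only builds the string; `label_selector` is a hypothetical helper name, and how the selector is passed to the pod list API depends on the client library in use.

```python
def label_selector(labels: dict) -> str:
    """Build an equality-based Kubernetes label selector such as 'a=b,c=d'."""
    return ",".join(f"{key}={value}" for key, value in sorted(labels.items()))


selector = label_selector({
    "tekton.dev/task": "echo-hello-world",
    "tekton.dev/taskRun": "echo-hello-world-task-run",
})
print(selector)
```

The resulting string can be tried out from the command line with `kubectl get pods -n tekton-pipelines -l <selector>`.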

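By now the reader should have a reasonable picture of the pod generated for a single TaskRun. Tektoncd wraps the entrypoint and uses the wait/post file mechanism to make the containers run in a specific order, and the nop container finally prints one log line to mark the end of the task.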
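The wait/post file idea can be simulated locally. The following is a sketch of the mechanism only, not Tektoncd's entrypoint code: threads stand in for containers, a temporary directory stands in for /builder/tools, and the names `run_step` and `run_in_order` are hypothetical.

```python
import os
import tempfile
import threading
import time


def run_step(name, wait_file, post_file, log):
    """Block until wait_file exists, run the step, then create post_file."""
    while wait_file and not os.path.exists(wait_file):
        time.sleep(0.01)
    log.append(name)  # stands in for running the user's command
    open(post_file, "w").close()  # signals the next step that it may start


def run_in_order(step_names):
    """Start all steps at once and let the marker files decide the order."""
    log = []
    with tempfile.TemporaryDirectory() as tools:
        threads = []
        for index, name in enumerate(step_names):
            # The first step waits for nothing, matching the empty -wait_file above.
            wait_file = os.path.join(tools, str(index - 1)) if index > 0 else ""
            post_file = os.path.join(tools, str(index))
            threads.append(threading.Thread(
                target=run_step, args=(name, wait_file, post_file, log)))
        # Start in reverse to show that ordering comes from the files, not start order.
        for thread in reversed(threads):
            thread.start()
        for thread in threads:
            thread.join()
    return log


print(run_in_order(["echo", "echo-1"]))
```

Each step only needs to know the file written by its predecessor, which is why the second container above waits on /builder/tools/0 and posts /builder/tools/1.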

Logs of the ubuntu containers specified in the Task steps:

    ➜ [/Users/user/k8s/knative/taskrun] git:(master) ✗ kubectl logs echo-hello-world-task-run-pod-15631a -c build-step-echo -n tekton-pipelines
    hello world

    ➜ [/Users/user/k8s/knative/taskrun] git:(master) ✗ kubectl logs echo-hello-world-task-run-pod-15631a -c build-step-echo-1 -n tekton-pipelines
    hello world-1

Log of the nop container:

    ➜ [/Users/zefeng.czf/k8s/knative/tasks] git:(master) ✗ kubectl logs echo-hello-world-task-run-pod-15631a -n tekton-pipelines
    Build successful

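PipelineResource

So far we have shown a very simple Task that tells containers to print a string. In real projects, tasks with no inputs and no outputs hardly exist. A PipelineResource defines a resource that flows between tasks. Four types are supported by default (git, image, storage and cluster), and each has its own required fields.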

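| Type | Fields and notes |
|---|---|
| git | url: address of the git repository; revision: (optional) branch or commit ID, default master; targetPath: (optional) path inside the container to clone the repository into |
| image | url: address of the image; digest: (optional) image digest |
| storage | Only GCS storage is supported by default; supporting other storage such as OSS requires adding a new type |
| cluster | Used to deploy to any namespace of a K8s cluster; not covered here because it is unrelated to Build/CI |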

Demo:

    apiVersion: tekton.dev/v1alpha1
    kind: PipelineResource
    metadata:
      name: git-source
      namespace: tekton-pipelines
    spec:
      type: git
      params:
      - name: revision
        value: master
      - name: url
        value: https://github.com/cccfeng/tekton-source.git # replace with your own repository

How is the defined resource used? As before, we first define a Task template:

    apiVersion: tekton.dev/v1alpha1
    kind: Task
    metadata:
      name: build-docker-image-from-git-source
      namespace: tekton-pipelines
    spec:
      inputs:
        resources:
        - name: docker-source
          type: git
        params:
        - name: pathToDockerFile
          default: Dockerfile
        - name: pathToContext
          default: /workspace/docker-source
      outputs:
        resources:
        - name: builtImage
          type: image
      steps:
      - name: build-and-pushgi
        image: docker.io/antblugeng/tekton-pipeline-test:buildkit # replace with your own buildctl image
        command: ["/buildkit.sh"]
        args:
        - --workspace
        - ${inputs.params.pathToContext}
        - --buildkit_host
        - tcp://buildkitd.server.xxxx.net:1234 # replace with your own buildkitd server host
        - --filename
        - ${inputs.params.pathToDockerFile}
        - --context
        - .
        - --dockerfile
        - .
        - --name
        - docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1
        - --push
        - "true"
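The `${inputs.params.NAME}` placeholders in args are filled in from the parameter values, with the Task's default used when a run does not supply one. The sketch below imitates that substitution on plain Python lists; the regular expression and the helper `substitute_params` are assumptions made for illustration and do not reproduce Tektoncd's own implementation.

```python
import re

# Matches placeholders of the form ${inputs.params.NAME}.
PLACEHOLDER = re.compile(r"\$\{inputs\.params\.([A-Za-z0-9_-]+)\}")


def substitute_params(args, defaults, overrides):
    """Replace placeholders using run-time values first, then Task defaults."""
    values = {**defaults, **overrides}
    return [PLACEHOLDER.sub(lambda m: values[m.group(1)], arg) for arg in args]


args = ["--workspace", "${inputs.params.pathToContext}",
        "--filename", "${inputs.params.pathToDockerFile}"]
defaults = {"pathToDockerFile": "Dockerfile",
            "pathToContext": "/workspace/docker-source"}

print(substitute_params(args, defaults, {}))
print(substitute_params(args, defaults, {"pathToDockerFile": "build/Dockerfile"}))
```

The second call uses a made-up override value, `build/Dockerfile`, purely to show that a run-time value takes precedence over the default.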

Next, define a TaskRun to trigger it:

    apiVersion: tekton.dev/v1alpha1
    kind: TaskRun
    metadata:
      name: build-docker-image-from-git-source-task-run
      namespace: tekton-pipelines
    spec:
      serviceAccount: build-bot
      taskRef:
        name: build-docker-image-from-git-source
      trigger:
        type: manual
      inputs:
        resources:
        - name: docker-source
          resourceRef:
            name: git-source
        params:
        - name: pathToDockerFile
          value: Dockerfile
        - name: pathToContext
          value: /workspace/docker-source #configure: may change according to your source
      outputs:
        resources:
        - name: builtImage
          resourceRef:
            name: knative-pipeline-test-image

Result:

    ➜ [/Users/user] kubectl logs -n tekton-pipelines build-docker-image-from-git-source-task-run-pod-01b21c -c build-step-git-source-git-source-jrbxn
    {"level":"warn","ts":1557717305.086845,"logger":"fallback-logger","caller":"logging/config.go:65","msg":"Fetch GitHub commit ID from kodata failed: \"ref: refs/heads/master\" is not a valid GitHub commit ID"}
    {"level":"info","ts":1557717309.9012158,"logger":"fallback-logger","caller":"git-init/main.go:100","msg":"Successfully cloned \"https://github.com/cccfeng/tekton-source.git\" @ \"master\" in path \"/workspace/docker-source\""}

    ➜ [/Users/user] kubectl logs -n tekton-pipelines build-docker-image-from-git-source-task-run-pod-01b21c -c build-step-build-and-pushgi
    time="2019-05-13T03:15:10Z" level=info msg="found worker "ubkkw3a71vixufz3lxdislw9w", labels=map[org.mobyproject.buildkit.worker.executor:oci org.mobyproject.buildkit.worker.snapshotter:native org.mobyproject.buildkit.worker.hostname:build-docker-image-from-git-source-task-run-pod-01b21c], platforms=[linux/amd64]"
    time="2019-05-13T03:15:10Z" level=warning msg="skipping containerd worker, as "/run/containerd/containerd.sock" does not exist"
    time="2019-05-13T03:15:10Z" level=info msg="found 1 workers, default="ubkkw3a71vixufz3lxdislw9w""
    time="2019-05-13T03:15:10Z" level=warning msg="currently, only the default worker can be used."
    time="2019-05-13T03:15:10Z" level=info msg="running server on [::]:1234"
    export BUILDKIT_HOST=tcp://buildkitd.server.xxxx.net:1234
    buildctl build --exporter=image --frontend dockerfile.v0 --frontend-opt filename=Dockerfile --local context=. --local dockerfile=. --exporter-opt name=docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1 --exporter-opt push=true

    #1 [internal] load .dockerignore
    #1 digest: sha256:f49a51af104124b84f290288c3c75d630abc40e4c9c142d7add81d4a5bc27a25
    #1 name: "[internal] load .dockerignore"
    #1 started: 2019-05-13 03:15:13.729899957 +0000 UTC
    #1 completed: 2019-05-13 03:15:13.729978457 +0000 UTC
    #1 duration: 78.5µs
    #1 started: 2019-05-13 03:15:13.730257148 +0000 UTC
    #1 transferring context: 2B 0.1s done
    #1 completed: 2019-05-13 03:15:13.819507582 +0000 UTC
    #1 duration: 89.250434ms

    #2 [internal] load build definition from Dockerfile
    #2 digest: sha256:e8e4774ce400b75e6256d4d244ab708ca0605ec70ca1bdb12906706b2f8b9bcf
    #2 name: "[internal] load build definition from Dockerfile"
    #2 started: 2019-05-13 03:15:13.729897877 +0000 UTC
    #2 completed: 2019-05-13 03:15:13.729971834 +0000 UTC
    #2 duration: 73.957µs
    #2 started: 2019-05-13 03:15:13.730138087 +0000 UTC
    #2 completed: 2019-05-13 03:15:13.849126781 +0000 UTC
    #2 duration: 118.988694ms
    #2 transferring dockerfile: 79B 0.1s done

    #3 [internal] load metadata for docker.io/library/nginx:1.15.12-alpine
    #3 digest: sha256:3911acdeaf648ee164999d40fde38263bb1a03c0cbebb29b3932ba71048579c3
    #3 name: "[internal] load metadata for docker.io/library/nginx:1.15.12-alpine"
    #3 started: 2019-05-13 03:15:13.85020833 +0000 UTC
    #3 completed: 2019-05-13 03:15:16.892017103 +0000 UTC
    #3 duration: 3.041808773s

    #4 [1/1] FROM docker.io/library/nginx:1.15.12-alpine@sha256:57a226fb6ab6823…
    #4 digest: sha256:187fe5addd338c7a02eaa25913f5f94242636ffe65e40af51abf0f773962ea58
    #4 name: "[1/1] FROM docker.io/library/nginx:1.15.12-alpine@sha256:57a226fb6ab6823027c0704a9346a890ffb0cacde06bc19bbc234c8720673555"
    #4 started: 2019-05-13 03:15:16.892851886 +0000 UTC
    #4 completed: 2019-05-13 03:15:16.893075441 +0000 UTC
    #4 duration: 223.555µs
    #4 started: 2019-05-13 03:15:16.893252939 +0000 UTC
    #4 completed: 2019-05-13 03:15:16.893307047 +0000 UTC
    #4 duration: 54.108µs
    #4 cached: true
    #4 resolve docker.io/library/nginx:1.15.12-alpine@sha256:57a226fb6ab6823027c0704a9346a890ffb0cacde06bc19bbc234c8720673555 done

    #5 exporting to image
    #5 digest: sha256:d70d658f4575e85b83973504e7dd9ca8b44b17f3762aad0ea28cc51661d09c15
    #5 name: "exporting to image"
    #5 started: 2019-05-13 03:15:16.893354938 +0000 UTC
    #5 exporting layers done
    #5 exporting manifest sha256:b20ab2f41ceae8a3cca2db4ff5a2e8c801d4bc35d0f86e5050d46493e62d9ca0 done
    #5 exporting config sha256:bfe3543077fbf17f57016f39089d53eac42d9d411fabdd06ae1c7bae8b726981 done
    #5 pushing layers
    #5 pushing layers 5.7s done
    #5 pushing manifest for docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1
    #5 completed: 2019-05-13 03:15:23.367185755 +0000 UTC
    #5 duration: 6.473830817s
    #5 pushing manifest for docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1 0.7s done

As the logs show, the Task first pulled the git repository and then ran the buildkit command, producing the image docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1.
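The log shows the wrapper-style options of the step ending up as a single buildctl invocation. The contents of /buildkit.sh are not given in this article, so the following is only a guess at the mapping, written as a sketch; `buildctl_command` is a hypothetical helper, and the flags it emits are copied from the logged command rather than from buildctl's documentation.

```python
import shlex


def buildctl_command(filename, context, dockerfile, name, push):
    """Assemble the buildctl invocation seen in the log from wrapper-style options."""
    return [
        "buildctl", "build",
        "--exporter=image",
        "--frontend", "dockerfile.v0",
        "--frontend-opt", f"filename={filename}",
        "--local", f"context={context}",
        "--local", f"dockerfile={dockerfile}",
        "--exporter-opt", f"name={name}",
        "--exporter-opt", f"push={push}",
    ]


command = buildctl_command(
    filename="Dockerfile",
    context=".",
    dockerfile=".",
    name="docker.io/antblugeng/tekton-pipeline-test:buildkit-test-0.0.1",
    push="true",
)
print(shlex.join(command))
```

Building the command as a list and joining it only for display avoids quoting problems if a path ever contains spaces.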

Pipeline

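So far we have walked through one combination of Task, TaskRun and PipelineResource. A single task can only express so much, though. How does Tektoncd support orchestrating Tasks and passing artifacts between them? In fact, a Pipeline is defined as exactly that: a set of Tasks plus Resources (inputs and outputs).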

    apiVersion: tekton.dev/v1alpha1
    kind: Pipeline
    metadata:
      name: tutorial-pipeline
      namespace: tekton-pipelines
    spec:
      resources:
      - name: pipeline-source-repo
        type: git
      - name: pipeline-web-image
        type: image
      tasks:
      - name: build-skaffold-web
        taskRef:
          name: build-docker-image-from-git-source
        resources:
          inputs:
          - name: docker-source
            resource: pipeline-source-repo
          outputs:
          - name: builtImage
            resource: pipeline-web-image
      - name: deploy-web
        taskRef:
          name: deploy-using-kubectl
        resources:
          inputs:
          - name: pipeline-t-workspace
            resource: pipeline-source-repo
          - name: image
            resource: pipeline-web-image
            from:
            - build-skaffold-web
        params:
        - name: path
          value: /workspace/pipeline-t-workspace/deployment.yaml #configure: may change according to your source

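The spec of this Pipeline declares two resources, of type git and image. Together they are all the inputs the Pipeline needs.

In the tasks array, the first task, build-skaffold-web (which references build-docker-image-from-git-source), declares both an input and an output resource: the input is the git repository and the output is of type image. The next task, deploy-web, declares two input resources: the git repository and the image.

Note that `resource: pipeline-web-image` under deploy-web has an extra from array whose value is the name of the previous pipeline task. This means the image resource of the second task comes from the output of the first task, so when the pipeline runs it makes sure deploy-web runs after build-skaffold-web has finished.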
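The ordering implied by from can be reproduced with a topological sort. The sketch below is an illustration only, not Tektoncd's scheduler: the task list is a trimmed-down copy of the Pipeline above as Python dictionaries, `execution_order` is a hypothetical helper, and it relies on the standard-library `graphlib` module (Python 3.9 or later).

```python
from graphlib import TopologicalSorter


def execution_order(pipeline_tasks):
    """Order tasks so each runs after every task named in its `from` lists."""
    sorter = TopologicalSorter()
    for task in pipeline_tasks:
        upstream = set()
        for resource in task.get("resources", {}).get("inputs", []):
            upstream.update(resource.get("from", []))
        sorter.add(task["name"], *upstream)
    return list(sorter.static_order())


# Listed in the "wrong" order on purpose: the sort must put the build first.
tasks = [
    {"name": "deploy-web",
     "resources": {"inputs": [
         {"name": "pipeline-t-workspace", "resource": "pipeline-source-repo"},
         {"name": "image", "resource": "pipeline-web-image",
          "from": ["build-skaffold-web"]},
     ]}},
    {"name": "build-skaffold-web",
     "resources": {"inputs": [
         {"name": "docker-source", "resource": "pipeline-source-repo"},
     ]}},
]
print(execution_order(tasks))
```

A cycle in the from references would make `static_order` raise `graphlib.CycleError`, which matches the requirement that a Pipeline form a DAG.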

Tip: the deploy-using-kubectl Task is defined as follows:

    apiVersion: tekton.dev/v1alpha1
    kind: Task
    metadata:
      namespace: tekton-pipelines
      name: deploy-using-kubectl
    spec:
      inputs:
        resources:
        - name: pipeline-t-workspace
          type: git
        - name: image
          type: image
        params:
        - name: path
          description: Path to the manifest to apply
      steps:
      - name: run-kubectl
        image: lachlanevenson/k8s-kubectl
        command: ["kubectl"]
        args:
        - "apply"
        - "-f"
        - "${inputs.params.path}"

PipelineRun

As with a Task, a Pipeline only defines a template. To actually run a Pipeline, define a PipelineRun to trigger it.

    apiVersion: tekton.dev/v1alpha1
    kind: PipelineRun
    metadata:
      name: tutorial-pipeline-run-1
      namespace: tekton-pipelines
    spec:
      serviceAccount: build-bot
      pipelineRef:
        name: tutorial-pipeline
      trigger:
        type: manual
      resources:
      - name: pipeline-source-repo
        resourceRef:
          name: git-source
      - name: pipeline-web-image
        resourceRef:
          name: knative-pipeline-test-image
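The names involved form a two-step indirection: a Task input name points at a resource declared by the Pipeline, and the PipelineRun binds that declared resource to a concrete PipelineResource. The sketch below traces this for deploy-web using plain dictionaries; `bind_task_inputs` is a hypothetical helper used only to make the lookup explicit.

```python
def bind_task_inputs(pipeline_task, run_bindings):
    """Map each Task input name to the PipelineResource bound by the PipelineRun."""
    return {
        task_input["name"]: run_bindings[task_input["resource"]]
        for task_input in pipeline_task["resources"]["inputs"]
    }


# PipelineRun: resource name declared in the Pipeline -> resourceRef.name
run_bindings = {
    "pipeline-source-repo": "git-source",
    "pipeline-web-image": "knative-pipeline-test-image",
}

# The deploy-web entry of the Pipeline, reduced to its inputs.
deploy_web = {"resources": {"inputs": [
    {"name": "pipeline-t-workspace", "resource": "pipeline-source-repo"},
    {"name": "image", "resource": "pipeline-web-image"},
]}}

print(bind_task_inputs(deploy_web, run_bindings))
```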

Result:

Contents of the Dockerfile in the code repository:

    FROM docker.io/nginx:1.15.12-alpine

deployment.yaml in the code repository:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: tekton-pipelines
      name: nginx-deployment
    spec:
      selector:
        matchLabels:
          app: nginx
      replicas: 1
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
          - name: nginx
            image: docker.io/nginx:1.15.12-alpine
            ports:
            - containerPort: 80

Output log:

    ➜ [/Users/zefeng.czf/k8s/knative/pipeline] git:(master) ✗ kubectl apply -f pipelinerun.yaml
    pipelinerun.tekton.dev "tutorial-pipeline-run-1" created

    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Pending 0 1s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Pending 0 1s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Pending 0 3s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Init:0/2 0 3s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Init:1/2 0 4s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 PodInitializing 0 5s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 2/3 Completed 0 6s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 1/3 Completed 0 10s
    tutorial-pipeline-run-1-build-skaffold-web-9jm59-pod-6f0480 0/3 Completed 0 22s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 Pending 0 0s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 Pending 0 0s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 Init:0/2 0 0s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 Init:1/2 0 2s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 PodInitializing 0 3s
    nginx-deployment-57686978c6-n4crh 0/1 Pending 0 1s
    nginx-deployment-57686978c6-n4crh 0/1 Pending 0 1s
    nginx-deployment-57686978c6-n4crh 0/1 ContainerCreating 0 1s
    tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 0/3 Completed 0 13s

    ➜ [/Users/zefeng.czf/k8s/knative/taskrun] git:(master) ✗ kubectl logs -f pod/tutorial-pipeline-run-1-deploy-web-br6qm-pod-3ee8d4 -c build-step-run-kubectl -n tekton-pipelines
    deployment.apps/nginx-deployment created

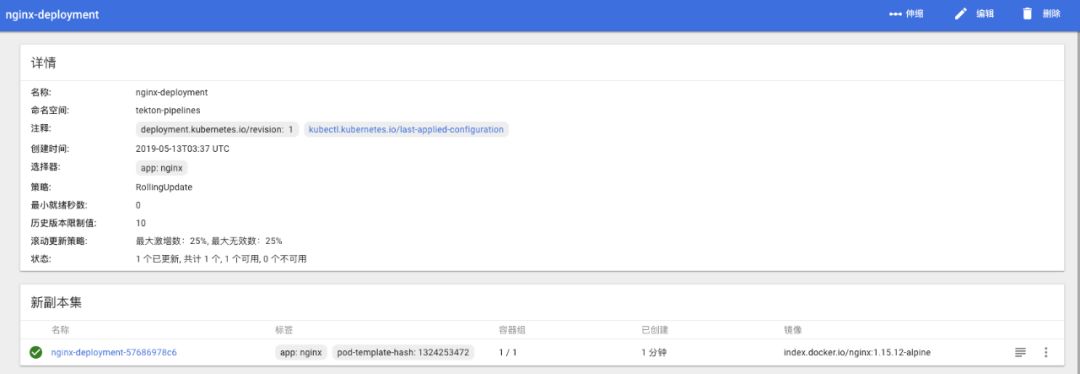

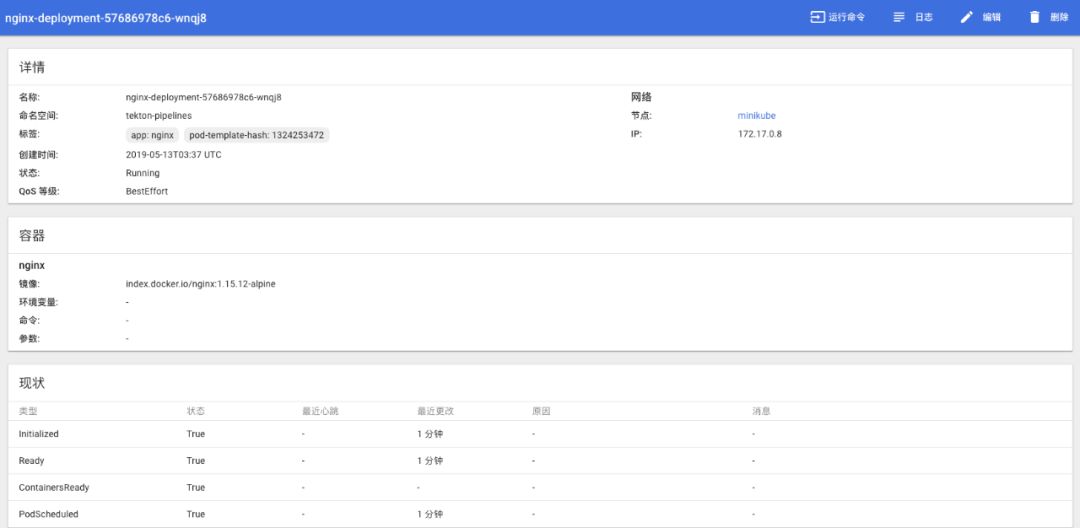

K8s dashboard:

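When the Pipeline starts, the build-skaffold-web task runs first and handles the code-to-image logic. Once build-skaffold-web has finished, deploy-web starts and runs kubectl apply against the YAML path defined in the Pipeline, /workspace/pipeline-t-workspace/deployment.yaml.

Pay attention to authorization: if the git repository or docker registry in use is private, the relevant account settings must be initialized first; see reference ³.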

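Summary

At this point we have walked through both kinds of runs in Tektoncd, Task and Pipeline. The strengths and weaknesses are fairly clear.

Strengths:

- The pipeline is cloud native: a declarative API with K8s as the underlying execution engine, which makes full use of what K8s offers;

- Resource definitions, template definitions and run instances are separated, so responsibilities are clear and resources and templates are easy to reuse;

- Resources serve as task inputs and outputs and can be passed between tasks, which reduces performance overhead;

- It is backed by the CDF and Google, and community attention keeps growing. It is positioned as a component to be integrated, and it supports major CI/CD vendors such as Jenkins-X and TriggerMesh.

Weaknesses:

- As pipeline infrastructure, tekton is fairly complex. Its strong extensibility makes the native object definitions somewhat complicated, so it is not very beginner-friendly;

- Building pipeline features on top of tekton requires users to handle generating pipelines and tasks, and collecting and processing run logs, status and other data themselves. If you only need simple pipeline orchestration, you can plug directly into a higher-level framework such as Jenkins-X.

At Ant Financial, the next-generation continuous delivery architecture is being designed for cloud native, and tektoncd/pipeline is itself cloud native. In the future Ant will migrate low-level execution nodes such as Build/CI/Pipeline onto the tekton stack, using K8s's ability to acquire resources elastically (serverless) to raise resource utilization. At the same time, a continuous delivery architecture needs to provide strong task and pipeline orchestration. Thanks to Tektoncd's extensibility, the new architecture keeps it flexible to onboard the varied requirements of upper-layer business teams.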