DSSM

- introduction

- two lines of work for extending latent semantic models:

- clickthrough data

- deep auto-encoders

- text hashing: this hashing is not for security but for producing word or sentence embeddings (compact semantic codes).

- translation models for IR: directly translate a document into a query; suboptimal.

- Question: what are the limitations of the deep auto-encoder line?

- the training objective is reconstruction of the document term vectors, rather than differentiating relevant documents from irrelevant ones for a given query.

- small vocabulary: only the 2,000 most frequent words are used.
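To make the "hashing for embeddings, not for security" point concrete, here is a toy sketch of semantic-style hashing: a dense text embedding is binarized into a bit code, and similar texts end up with codes that are close in Hamming distance. The 8-dimensional embeddings are made up, and thresholding at 0 is an assumption standing in for binarizing an auto-encoder's code layer; the helper names are hypothetical.

```python
import numpy as np

def to_binary_code(embedding: np.ndarray) -> np.ndarray:
    """Toy semantic hash: threshold a dense embedding at 0 to get a bit vector (assumed threshold)."""
    return (embedding > 0).astype(np.uint8)

def hamming_distance(a: np.ndarray, b: np.ndarray) -> int:
    """Number of positions where two binary codes differ."""
    return int(np.count_nonzero(a != b))

# Hypothetical 8-dimensional embeddings for three short texts.
doc_a = np.array([0.9, -0.2, 0.4, -0.7, 0.1, 0.3, -0.5, 0.8])
doc_b = np.array([0.8, -0.1, 0.5, -0.6, 0.2, 0.2, -0.4, 0.7])    # similar to doc_a
doc_c = np.array([-0.9, 0.3, -0.4, 0.6, -0.2, -0.3, 0.5, -0.8])  # roughly opposite to doc_a

code_a, code_b, code_c = map(to_binary_code, (doc_a, doc_b, doc_c))
print(hamming_distance(code_a, code_b))  # 0
print(hamming_distance(code_a, code_c))  # 8
```

Unlike a cryptographic hash, small changes in meaning should produce small changes in the code, which is what makes nearest-neighbour lookup by Hamming distance useful.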

- loss

- loss function

γ is a smoothing factor in the softmax formulation; it is set empirically on a held-out data set.
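For reference, the DSSM objective can be written out as follows, with y_Q and y_D the semantic vectors of query and document, 𝐃 the candidate set containing the clicked document D⁺ plus randomly sampled unclicked documents, and Λ the network parameters. This is a restatement of the standard DSSM formulation, not a transcription of the image below.

```latex
R(Q, D) = \cos(y_Q, y_D) = \frac{y_Q^{\top} y_D}{\lVert y_Q \rVert \, \lVert y_D \rVert}

P(D \mid Q) = \frac{\exp\big(\gamma \, R(Q, D)\big)}{\sum_{D' \in \mathbf{D}} \exp\big(\gamma \, R(Q, D')\big)}

L(\Lambda) = -\log \prod_{(Q, D^{+})} P(D^{+} \mid Q)
```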

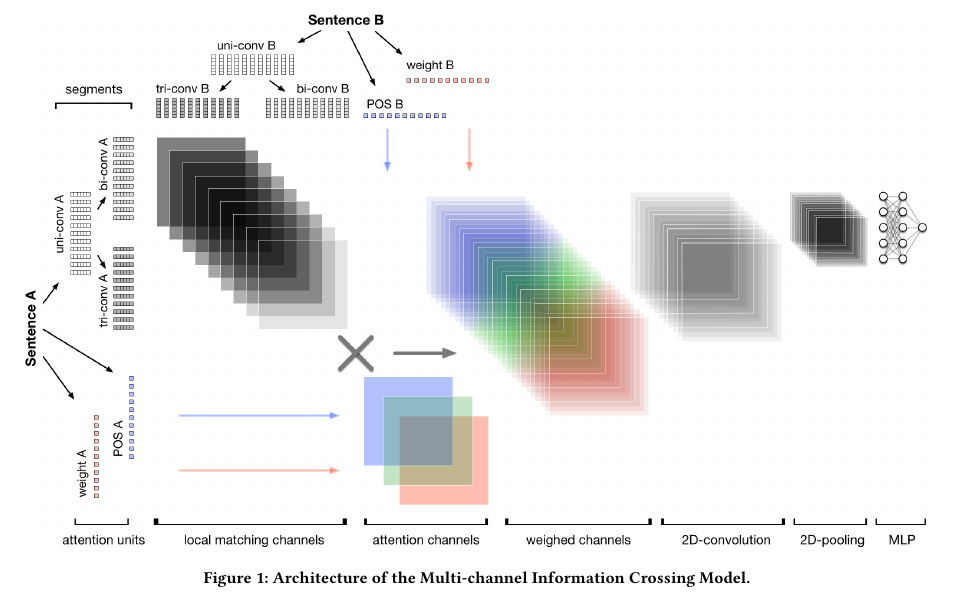
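A minimal NumPy sketch of the loss for a single (query, clicked document) pair: cosine scores are scaled by γ, passed through a softmax over the clicked and unclicked documents, and the negative log-probability of the clicked one is returned. The function names, the toy vectors, and the default `gamma=10.0` are assumptions for illustration; in DSSM γ is tuned on held-out data and the vectors come from the network.

```python
import numpy as np

def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity R(Q, D) between two semantic vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

def dssm_loss(y_q, y_pos, y_negs, gamma=10.0):
    """-log P(D+ | Q) under a gamma-scaled softmax over cosine scores (hypothetical helper)."""
    scores = np.array([cosine(y_q, y_pos)] + [cosine(y_q, y) for y in y_negs])
    logits = gamma * scores
    logits -= logits.max()  # shift for numerical stability; softmax is unchanged
    log_probs = logits - np.log(np.exp(logits).sum())
    return float(-log_probs[0])  # index 0 is the clicked document

# Toy vectors: the clicked doc points the same way as the query.
y_q = np.array([1.0, 0.0])
y_pos = np.array([2.0, 0.0])
y_negs = [np.array([0.0, 1.0]), np.array([-1.0, 0.0])]
print(dssm_loss(y_q, y_pos, y_negs, gamma=1.0))
print(dssm_loss(y_q, y_pos, y_negs, gamma=10.0))
```

A larger γ sharpens the softmax, so when the clicked document already has the highest cosine score the loss shrinks; this is why γ acts as a smoothing knob rather than a learned weight.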

MIX

Questions:

- after the uni-conv, bi-conv, and tri-conv layers, is the sentence transformed into a one-dimensional embedding?

- are there 9*3 feature maps in the weight-channel layer?
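One way to probe both questions is to track shapes. The sketch below assumes "valid" 1-D convolutions with widths 1, 2, 3, random weights, tanh activations, and no pooling, so each n-gram convolution yields a sequence of vectors rather than a single one. Crossing the three query granularities with the three document granularities into 3×3 = 9 cosine matching matrices is an assumption about MIX's design, not something stated in these notes; all names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def ngram_conv(x: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Valid 1-D convolution over time.
    x: (seq_len, emb_dim); kernel: (width, emb_dim, n_filters) -> (seq_len - width + 1, n_filters)."""
    width = kernel.shape[0]
    windows = [x[i:i + width] for i in range(x.shape[0] - width + 1)]
    return np.stack([np.tanh(np.einsum("we,wef->f", w, kernel)) for w in windows])

def cosine_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between rows of a and rows of b."""
    a_n = a / np.linalg.norm(a, axis=1, keepdims=True)
    b_n = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a_n @ b_n.T

emb_dim, n_filters = 8, 4
kernels = {k: rng.normal(size=(k, emb_dim, n_filters)) for k in (1, 2, 3)}  # uni/bi/tri
query = rng.normal(size=(5, emb_dim))  # 5 tokens
doc = rng.normal(size=(7, emb_dim))    # 7 tokens

q_feats = {k: ngram_conv(query, w) for k, w in kernels.items()}
d_feats = {k: ngram_conv(doc, w) for k, w in kernels.items()}
for k, f in q_feats.items():
    print(k, f.shape)  # prints 1 (5, 4), then 2 (4, 4), then 3 (3, 4)

# Assumed 3x3 crossing: every query granularity against every document granularity.
matching = {(i, j): cosine_matrix(q_feats[i], d_feats[j]) for i in (1, 2, 3) for j in (1, 2, 3)}
print(len(matching))  # 9
```

Under these assumptions the output is a 2-D (positions × filters) map per granularity; it only collapses to a single vector if a pooling step is added afterwards.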