- 66. Overview

- 67. Catalog Tables

- 68. Client

- 69. Client Request Filters

- 70. Master

- 71. RegionServer

66. Overview

66.1. NoSQL?

HBase is a type of “NoSQL” database. “NoSQL” is a general term meaning that the database isn’t an RDBMS which supports SQL as its primary access language, but there are many types of NoSQL databases: BerkeleyDB is an example of a local NoSQL database, whereas HBase is very much a distributed database. Technically speaking, HBase is really more a “Data Store” than “Data Base” because it lacks many of the features you find in an RDBMS, such as typed columns, secondary indexes, triggers, and advanced query languages, etc.

However, HBase has many features which supports both linear and modular scaling. HBase clusters expand by adding RegionServers that are hosted on commodity class servers. If a cluster expands from 10 to 20 RegionServers, for example, it doubles both in terms of storage and as well as processing capacity. An RDBMS can scale well, but only up to a point - specifically, the size of a single database server - and for the best performance requires specialized hardware and storage devices. HBase features of note are:

Strongly consistent reads/writes: HBase is not an “eventually consistent” DataStore. This makes it very suitable for tasks such as high-speed counter aggregation.

Automatic sharding: HBase tables are distributed on the cluster via regions, and regions are automatically split and re-distributed as your data grows.

Automatic RegionServer failover

Hadoop/HDFS Integration: HBase supports HDFS out of the box as its distributed file system.

MapReduce: HBase supports massively parallelized processing via MapReduce for using HBase as both source and sink.

Java Client API: HBase supports an easy to use Java API for programmatic access.

Thrift/REST API: HBase also supports Thrift and REST for non-Java front-ends.

Block Cache and Bloom Filters: HBase supports a Block Cache and Bloom Filters for high volume query optimization.

Operational Management: HBase provides build-in web-pages for operational insight as well as JMX metrics.

66.2. When Should I Use HBase?

HBase isn’t suitable for every problem.

First, make sure you have enough data. If you have hundreds of millions or billions of rows, then HBase is a good candidate. If you only have a few thousand/million rows, then using a traditional RDBMS might be a better choice due to the fact that all of your data might wind up on a single node (or two) and the rest of the cluster may be sitting idle.

Second, make sure you can live without all the extra features that an RDBMS provides (e.g., typed columns, secondary indexes, transactions, advanced query languages, etc.) An application built against an RDBMS cannot be “ported” to HBase by simply changing a JDBC driver, for example. Consider moving from an RDBMS to HBase as a complete redesign as opposed to a port.

Third, make sure you have enough hardware. Even HDFS doesn’t do well with anything less than 5 DataNodes (due to things such as HDFS block replication which has a default of 3), plus a NameNode.

HBase can run quite well stand-alone on a laptop - but this should be considered a development configuration only.

66.3. What Is The Difference Between HBase and Hadoop/HDFS?

HDFS is a distributed file system that is well suited for the storage of large files. Its documentation states that it is not, however, a general purpose file system, and does not provide fast individual record lookups in files. HBase, on the other hand, is built on top of HDFS and provides fast record lookups (and updates) for large tables. This can sometimes be a point of conceptual confusion. HBase internally puts your data in indexed “StoreFiles” that exist on HDFS for high-speed lookups. See the Data Model and the rest of this chapter for more information on how HBase achieves its goals.

67. Catalog Tables

The catalog table hbase:meta exists as an HBase table and is filtered out of the HBase shell’s list command, but is in fact a table just like any other.

67.1. hbase:meta

The hbase:meta table (previously called .META.) keeps a list of all regions in the system, and the location of hbase:meta is stored in ZooKeeper.

The hbase:meta table structure is as follows:

Key

- Region key of the format (

[table],[region start key],[region id])

Values

info:regioninfo(serialized HRegionInfo instance for this region)info:server(server:port of the RegionServer containing this region)info:serverstartcode(start-time of the RegionServer process containing this region)

When a table is in the process of splitting, two other columns will be created, called info:splitA and info:splitB. These columns represent the two daughter regions. The values for these columns are also serialized HRegionInfo instances. After the region has been split, eventually this row will be deleted.

Note on HRegionInf

The empty key is used to denote table start and table end. A region with an empty start key is the first region in a table. If a region has both an empty start and an empty end key, it is the only region in the table

In the (hopefully unlikely) event that programmatic processing of catalog metadata is required, see the RegionInfo.parseFrom utility.

67.2. Startup Sequencing

First, the location of hbase:meta is looked up in ZooKeeper. Next, hbase:meta is updated with server and startcode values.

For information on region-RegionServer assignment, see Region-RegionServer Assignment.

68. Client

The HBase client finds the RegionServers that are serving the particular row range of interest. It does this by querying the hbase:meta table. See hbase:meta for details. After locating the required region(s), the client contacts the RegionServer serving that region, rather than going through the master, and issues the read or write request. This information is cached in the client so that subsequent requests need not go through the lookup process. Should a region be reassigned either by the master load balancer or because a RegionServer has died, the client will requery the catalog tables to determine the new location of the user region.

See Runtime Impact for more information about the impact of the Master on HBase Client communication.

Administrative functions are done via an instance of Admin

68.1. Cluster Connections

The API changed in HBase 1.0. For connection configuration information, see Client configuration and dependencies connecting to an HBase cluster.

68.1.1. API as of HBase 1.0.0

It’s been cleaned up and users are returned Interfaces to work against rather than particular types. In HBase 1.0, obtain a Connection object from ConnectionFactory and thereafter, get from it instances of Table, Admin, and RegionLocator on an as-need basis. When done, close the obtained instances. Finally, be sure to cleanup your Connection instance before exiting. Connections are heavyweight objects but thread-safe so you can create one for your application and keep the instance around. Table, Admin and RegionLocator instances are lightweight. Create as you go and then let go as soon as you are done by closing them. See the Client Package Javadoc Description for example usage of the new HBase 1.0 API.

68.1.2. API before HBase 1.0.0

Instances of HTable are the way to interact with an HBase cluster earlier than 1.0.0. Table instances are not thread-safe. Only one thread can use an instance of Table at any given time. When creating Table instances, it is advisable to use the same HBaseConfiguration instance. This will ensure sharing of ZooKeeper and socket instances to the RegionServers which is usually what you want. For example, this is preferred:

HBaseConfiguration conf = HBaseConfiguration.create();HTable table1 = new HTable(conf, "myTable");HTable table2 = new HTable(conf, "myTable");

as opposed to this:

HBaseConfiguration conf1 = HBaseConfiguration.create();HTable table1 = new HTable(conf1, "myTable");HBaseConfiguration conf2 = HBaseConfiguration.create();HTable table2 = new HTable(conf2, "myTable");

For more information about how connections are handled in the HBase client, see ConnectionFactory.

Connection Pooling

For applications which require high-end multithreaded access (e.g., web-servers or application servers that may serve many application threads in a single JVM), you can pre-create a Connection, as shown in the following example:

Example 22. Pre-Creating a Connection

// Create a connection to the cluster.Configuration conf = HBaseConfiguration.create();try (Connection connection = ConnectionFactory.createConnection(conf);Table table = connection.getTable(TableName.valueOf(tablename))) {// use table as needed, the table returned is lightweight}

HTablePoolis DeprecatePrevious versions of this guide discussed

HTablePool, which was deprecated in HBase 0.94, 0.95, and 0.96, and removed in 0.98.1, by HBASE-6580, orHConnection, which is deprecated in HBase 1.0 byConnection. Please use Connection instead.

68.2. WriteBuffer and Batch Methods

In HBase 1.0 and later, HTable is deprecated in favor of Table. Table does not use autoflush. To do buffered writes, use the BufferedMutator class.

In HBase 2.0 and later, HTable does not use BufferedMutator to execute the Put operation. Refer to HBASE-18500 for more information.

For additional information on write durability, review the ACID semantics page.

For fine-grained control of batching of Puts or Deletes, see the batch methods on Table.

68.3. Asynchronous Client

It is a new API introduced in HBase 2.0 which aims to provide the ability to access HBase asynchronously.

You can obtain an AsyncConnection from ConnectionFactory, and then get a asynchronous table instance from it to access HBase. When done, close the AsyncConnection instance(usually when your program exits).

For the asynchronous table, most methods have the same meaning with the old Table interface, expect that the return value is wrapped with a CompletableFuture usually. We do not have any buffer here so there is no close method for asynchronous table, you do not need to close it. And it is thread safe.

There are several differences for scan:

There is still a

getScannermethod which returns aResultScanner. You can use it in the old way and it works like the oldClientAsyncPrefetchScanner.There is a

scanAllmethod which will return all the results at once. It aims to provide a simpler way for small scans which you want to get the whole results at once usually.The Observer Pattern. There is a scan method which accepts a

ScanResultConsumeras a parameter. It will pass the results to the consumer.

Notice that AsyncTable interface is templatized. The template parameter specifies the type of ScanResultConsumerBase used by scans, which means the observer style scan APIs are different. The two types of scan consumers are - ScanResultConsumer and AdvancedScanResultConsumer.

ScanResultConsumer needs a separate thread pool which is used to execute the callbacks registered to the returned CompletableFuture. Because the use of separate thread pool frees up RPC threads, callbacks are free to do anything. Use this if the callbacks are not quick, or when in doubt.

AdvancedScanResultConsumer executes callbacks inside the framework thread. It is not allowed to do time consuming work in the callbacks else it will likely block the framework threads and cause very bad performance impact. As its name, it is designed for advanced users who want to write high performance code. See org.apache.hadoop.hbase.client.example.HttpProxyExample for how to write fully asynchronous code with it.

68.4. Asynchronous Admin

You can obtain an AsyncConnection from ConnectionFactory, and then get a AsyncAdmin instance from it to access HBase. Notice that there are two getAdmin methods to get a AsyncAdmin instance. One method has one extra thread pool parameter which is used to execute callbacks. It is designed for normal users. Another method doesn’t need a thread pool and all the callbacks are executed inside the framework thread so it is not allowed to do time consuming works in the callbacks. It is designed for advanced users.

The default getAdmin methods will return a AsyncAdmin instance which use default configs. If you want to customize some configs, you can use getAdminBuilder methods to get a AsyncAdminBuilder for creating AsyncAdmin instance. Users are free to only set the configs they care about to create a new AsyncAdmin instance.

For the AsyncAdmin interface, most methods have the same meaning with the old Admin interface, expect that the return value is wrapped with a CompletableFuture usually.

For most admin operations, when the returned CompletableFuture is done, it means the admin operation has also been done. But for compact operation, it only means the compact request was sent to HBase and may need some time to finish the compact operation. For rollWALWriter method, it only means the rollWALWriter request was sent to the region server and may need some time to finish the rollWALWriter operation.

For region name, we only accept byte[] as the parameter type and it may be a full region name or a encoded region name. For server name, we only accept ServerName as the parameter type. For table name, we only accept TableName as the parameter type. For list* operations, we only accept Pattern as the parameter type if you want to do regex matching.

68.5. External Clients

Information on non-Java clients and custom protocols is covered in Apache HBase External APIs

69. Client Request Filters

Get and Scan instances can be optionally configured with filters which are applied on the RegionServer.

Filters can be confusing because there are many different types, and it is best to approach them by understanding the groups of Filter functionality.

69.1. Structural

Structural Filters contain other Filters.

69.1.1. FilterList

FilterList represents a list of Filters with a relationship of FilterList.Operator.MUST_PASS_ALL or FilterList.Operator.MUST_PASS_ONE between the Filters. The following example shows an ‘or’ between two Filters (checking for either ‘my value’ or ‘my other value’ on the same attribute).

FilterList list = new FilterList(FilterList.Operator.MUST_PASS_ONE);SingleColumnValueFilter filter1 = new SingleColumnValueFilter(cf,column,CompareOperator.EQUAL,Bytes.toBytes("my value"));list.add(filter1);SingleColumnValueFilter filter2 = new SingleColumnValueFilter(cf,column,CompareOperator.EQUAL,Bytes.toBytes("my other value"));list.add(filter2);scan.setFilter(list);

69.2. Column Value

69.2.1. SingleColumnValueFilter

A SingleColumnValueFilter (see: https://hbase.apache.org/apidocs/org/apache/hadoop/hbase/filter/SingleColumnValueFilter.html) can be used to test column values for equivalence (CompareOperaor.EQUAL), inequality (CompareOperaor.NOT_EQUAL), or ranges (e.g., CompareOperaor.GREATER). The following is an example of testing equivalence of a column to a String value “my value”…

SingleColumnValueFilter filter = new SingleColumnValueFilter(cf,column,CompareOperaor.EQUAL,Bytes.toBytes("my value"));scan.setFilter(filter);

69.2.2. ColumnValueFilter

Introduced in HBase-2.0.0 version as a complementation of SingleColumnValueFilter, ColumnValueFilter gets matched cell only, while SingleColumnValueFilter gets the entire row (has other columns and values) to which the matched cell belongs. Parameters of constructor of ColumnValueFilter are the same as SingleColumnValueFilter.

ColumnValueFilter filter = new ColumnValueFilter(cf,column,CompareOperaor.EQUAL,Bytes.toBytes("my value"));scan.setFilter(filter);

Note. For simple query like “equals to a family:qualifier:value”, we highly recommend to use the following way instead of using SingleColumnValueFilter or ColumnValueFilter:

Scan scan = new Scan();scan.addColumn(Bytes.toBytes("family"), Bytes.toBytes("qualifier"));ValueFilter vf = new ValueFilter(CompareOperator.EQUAL,new BinaryComparator(Bytes.toBytes("value")));scan.setFilter(vf);...

This scan will restrict to the specified column ‘family:qualifier’, avoiding scan unrelated families and columns, which has better performance, and ValueFilter is the condition used to do the value filtering.

But if query is much more complicated beyond this book, then please make your good choice case by case.

69.3. Column Value Comparators

There are several Comparator classes in the Filter package that deserve special mention. These Comparators are used in concert with other Filters, such as SingleColumnValueFilter.

69.3.1. RegexStringComparator

RegexStringComparator supports regular expressions for value comparisons.

RegexStringComparator comp = new RegexStringComparator("my."); // any value that starts with 'my'SingleColumnValueFilter filter = new SingleColumnValueFilter(cf,column,CompareOperaor.EQUAL,comp);scan.setFilter(filter);

See the Oracle JavaDoc for supported RegEx patterns in Java.

69.3.2. SubstringComparator

SubstringComparator can be used to determine if a given substring exists in a value. The comparison is case-insensitive.

SubstringComparator comp = new SubstringComparator("y val"); // looking for 'my value'SingleColumnValueFilter filter = new SingleColumnValueFilter(cf,column,CompareOperaor.EQUAL,comp);scan.setFilter(filter);

69.3.3. BinaryPrefixComparator

69.3.4. BinaryComparator

See BinaryComparator.

69.4. KeyValue Metadata

As HBase stores data internally as KeyValue pairs, KeyValue Metadata Filters evaluate the existence of keys (i.e., ColumnFamily:Column qualifiers) for a row, as opposed to values the previous section.

69.4.1. FamilyFilter

FamilyFilter can be used to filter on the ColumnFamily. It is generally a better idea to select ColumnFamilies in the Scan than to do it with a Filter.

69.4.2. QualifierFilter

QualifierFilter can be used to filter based on Column (aka Qualifier) name.

69.4.3. ColumnPrefixFilter

ColumnPrefixFilter can be used to filter based on the lead portion of Column (aka Qualifier) names.

A ColumnPrefixFilter seeks ahead to the first column matching the prefix in each row and for each involved column family. It can be used to efficiently get a subset of the columns in very wide rows.

Note: The same column qualifier can be used in different column families. This filter returns all matching columns.

Example: Find all columns in a row and family that start with “abc”

Table t = ...;byte[] row = ...;byte[] family = ...;byte[] prefix = Bytes.toBytes("abc");Scan scan = new Scan(row, row); // (optional) limit to one rowscan.addFamily(family); // (optional) limit to one familyFilter f = new ColumnPrefixFilter(prefix);scan.setFilter(f);scan.setBatch(10); // set this if there could be many columns returnedResultScanner rs = t.getScanner(scan);for (Result r = rs.next(); r != null; r = rs.next()) {for (KeyValue kv : r.raw()) {// each kv represents a column}}rs.close();

69.4.4. MultipleColumnPrefixFilter

MultipleColumnPrefixFilter behaves like ColumnPrefixFilter but allows specifying multiple prefixes.

Like ColumnPrefixFilter, MultipleColumnPrefixFilter efficiently seeks ahead to the first column matching the lowest prefix and also seeks past ranges of columns between prefixes. It can be used to efficiently get discontinuous sets of columns from very wide rows.

Example: Find all columns in a row and family that start with “abc” or “xyz”

Table t = ...;byte[] row = ...;byte[] family = ...;byte[][] prefixes = new byte[][] {Bytes.toBytes("abc"), Bytes.toBytes("xyz")};Scan scan = new Scan(row, row); // (optional) limit to one rowscan.addFamily(family); // (optional) limit to one familyFilter f = new MultipleColumnPrefixFilter(prefixes);scan.setFilter(f);scan.setBatch(10); // set this if there could be many columns returnedResultScanner rs = t.getScanner(scan);for (Result r = rs.next(); r != null; r = rs.next()) {for (KeyValue kv : r.raw()) {// each kv represents a column}}rs.close();

69.4.5. ColumnRangeFilter

A ColumnRangeFilter allows efficient intra row scanning.

A ColumnRangeFilter can seek ahead to the first matching column for each involved column family. It can be used to efficiently get a ‘slice’ of the columns of a very wide row. i.e. you have a million columns in a row but you only want to look at columns bbbb-bbdd.

Note: The same column qualifier can be used in different column families. This filter returns all matching columns.

Example: Find all columns in a row and family between “bbbb” (inclusive) and “bbdd” (inclusive)

Table t = ...;byte[] row = ...;byte[] family = ...;byte[] startColumn = Bytes.toBytes("bbbb");byte[] endColumn = Bytes.toBytes("bbdd");Scan scan = new Scan(row, row); // (optional) limit to one rowscan.addFamily(family); // (optional) limit to one familyFilter f = new ColumnRangeFilter(startColumn, true, endColumn, true);scan.setFilter(f);scan.setBatch(10); // set this if there could be many columns returnedResultScanner rs = t.getScanner(scan);for (Result r = rs.next(); r != null; r = rs.next()) {for (KeyValue kv : r.raw()) {// each kv represents a column}}rs.close();

Note: Introduced in HBase 0.92

69.5. RowKey

69.5.1. RowFilter

It is generally a better idea to use the startRow/stopRow methods on Scan for row selection, however RowFilter can also be used.

69.6. Utility

69.6.1. FirstKeyOnlyFilter

This is primarily used for rowcount jobs. See FirstKeyOnlyFilter.

70. Master

HMaster is the implementation of the Master Server. The Master server is responsible for monitoring all RegionServer instances in the cluster, and is the interface for all metadata changes. In a distributed cluster, the Master typically runs on the NameNode. J Mohamed Zahoor goes into some more detail on the Master Architecture in this blog posting, HBase HMaster Architecture .

70.1. Startup Behavior

If run in a multi-Master environment, all Masters compete to run the cluster. If the active Master loses its lease in ZooKeeper (or the Master shuts down), then the remaining Masters jostle to take over the Master role.

70.2. Runtime Impact

A common dist-list question involves what happens to an HBase cluster when the Master goes down. Because the HBase client talks directly to the RegionServers, the cluster can still function in a “steady state”. Additionally, per Catalog Tables, hbase:meta exists as an HBase table and is not resident in the Master. However, the Master controls critical functions such as RegionServer failover and completing region splits. So while the cluster can still run for a short time without the Master, the Master should be restarted as soon as possible.

70.3. Interface

The methods exposed by HMasterInterface are primarily metadata-oriented methods:

Table (createTable, modifyTable, removeTable, enable, disable)

ColumnFamily (addColumn, modifyColumn, removeColumn)

Region (move, assign, unassign) For example, when the

AdminmethoddisableTableis invoked, it is serviced by the Master server.

70.4. Processes

The Master runs several background threads:

70.4.1. LoadBalancer

Periodically, and when there are no regions in transition, a load balancer will run and move regions around to balance the cluster’s load. See Balancer for configuring this property.

See Region-RegionServer Assignment for more information on region assignment.

70.4.2. CatalogJanitor

Periodically checks and cleans up the hbase:meta table. See hbase:meta for more information on the meta table.

70.5. MasterProcWAL

HMaster records administrative operations and their running states, such as the handling of a crashed server, table creation, and other DDLs, into its own WAL file. The WALs are stored under the MasterProcWALs directory. The Master WALs are not like RegionServer WALs. Keeping up the Master WAL allows us run a state machine that is resilient across Master failures. For example, if a HMaster was in the middle of creating a table encounters an issue and fails, the next active HMaster can take up where the previous left off and carry the operation to completion. Since hbase-2.0.0, a new AssignmentManager (A.K.A AMv2) was introduced and the HMaster handles region assignment operations, server crash processing, balancing, etc., all via AMv2 persisting all state and transitions into MasterProcWALs rather than up into ZooKeeper, as we do in hbase-1.x.

See AMv2 Description for Devs (and Procedure Framework (Pv2): HBASE-12439 for its basis) if you would like to learn more about the new AssignmentManager.

70.5.1. Configurations for MasterProcWAL

Here are the list of configurations that effect MasterProcWAL operation. You should not have to change your defaults.

hbase.procedure.store.wal.periodic.roll.msec

Description

Frequency of generating a new WAL

Default

1h (3600000 in msec)

hbase.procedure.store.wal.roll.threshold

Description

Threshold in size before the WAL rolls. Every time the WAL reaches this size or the above period, 1 hour, passes since last log roll, the HMaster will generate a new WAL.

Default

32MB (33554432 in byte)

hbase.procedure.store.wal.warn.threshold

Description

If the number of WALs goes beyond this threshold, the following message should appear in the HMaster log with WARN level when rolling.

procedure WALs count=xx above the warning threshold 64\. check running procedures to see if something is stuck.

Default

64

hbase.procedure.store.wal.max.retries.before.roll

Description

Max number of retry when syncing slots (records) to its underlying storage, such as HDFS. Every attempt, the following message should appear in the HMaster log.

unable to sync slots, retry=xx

Default

3

hbase.procedure.store.wal.sync.failure.roll.max

Description

After the above 3 retrials, the log is rolled and the retry count is reset to 0, thereon a new set of retrial starts. This configuration controls the max number of attempts of log rolling upon sync failure. That is, HMaster is allowed to fail to sync 9 times in total. Once it exceeds, the following log should appear in the HMaster log.

Sync slots after log roll failed, abort.

Default

3

71. RegionServer

HRegionServer is the RegionServer implementation. It is responsible for serving and managing regions. In a distributed cluster, a RegionServer runs on a DataNode.

71.1. Interface

The methods exposed by HRegionRegionInterface contain both data-oriented and region-maintenance methods:

Data (get, put, delete, next, etc.)

Region (splitRegion, compactRegion, etc.) For example, when the

AdminmethodmajorCompactis invoked on a table, the client is actually iterating through all regions for the specified table and requesting a major compaction directly to each region.

71.2. Processes

The RegionServer runs a variety of background threads:

71.2.1. CompactSplitThread

Checks for splits and handle minor compactions.

71.2.2. MajorCompactionChecker

Checks for major compactions.

71.2.3. MemStoreFlusher

Periodically flushes in-memory writes in the MemStore to StoreFiles.

71.2.4. LogRoller

Periodically checks the RegionServer’s WAL.

71.3. Coprocessors

Coprocessors were added in 0.92. There is a thorough Blog Overview of CoProcessors posted. Documentation will eventually move to this reference guide, but the blog is the most current information available at this time.

71.4. Block Cache

HBase provides two different BlockCache implementations to cache data read from HDFS: the default on-heap LruBlockCache and the BucketCache, which is (usually) off-heap. This section discusses benefits and drawbacks of each implementation, how to choose the appropriate option, and configuration options for each.

Block Cache Reporting: U

See the RegionServer UI for detail on caching deploy. See configurations, sizings, current usage, time-in-the-cache, and even detail on block counts and types.

71.4.1. Cache Choices

LruBlockCache is the original implementation, and is entirely within the Java heap. BucketCache is optional and mainly intended for keeping block cache data off-heap, although BucketCache can also be a file-backed cache.

When you enable BucketCache, you are enabling a two tier caching system. We used to describe the tiers as “L1” and “L2” but have deprecated this terminology as of hbase-2.0.0. The “L1” cache referred to an instance of LruBlockCache and “L2” to an off-heap BucketCache. Instead, when BucketCache is enabled, all DATA blocks are kept in the BucketCache tier and meta blocks — INDEX and BLOOM blocks — are on-heap in the LruBlockCache. Management of these two tiers and the policy that dictates how blocks move between them is done by CombinedBlockCache.

71.4.2. General Cache Configurations

Apart from the cache implementation itself, you can set some general configuration options to control how the cache performs. See CacheConfig. After setting any of these options, restart or rolling restart your cluster for the configuration to take effect. Check logs for errors or unexpected behavior.

See also Prefetch Option for Blockcache, which discusses a new option introduced in HBASE-9857.

71.4.3. LruBlockCache Design

The LruBlockCache is an LRU cache that contains three levels of block priority to allow for scan-resistance and in-memory ColumnFamilies:

Single access priority: The first time a block is loaded from HDFS it normally has this priority and it will be part of the first group to be considered during evictions. The advantage is that scanned blocks are more likely to get evicted than blocks that are getting more usage.

Multi access priority: If a block in the previous priority group is accessed again, it upgrades to this priority. It is thus part of the second group considered during evictions.

In-memory access priority: If the block’s family was configured to be “in-memory”, it will be part of this priority disregarding the number of times it was accessed. Catalog tables are configured like this. This group is the last one considered during evictions.

To mark a column family as in-memory, call

HColumnDescriptor.setInMemory(true);

if creating a table from java, or set IN_MEMORY ⇒ true when creating or altering a table in the shell: e.g.

hbase(main):003:0> create 't', {NAME => 'f', IN_MEMORY => 'true'}

For more information, see the LruBlockCache source

71.4.4. LruBlockCache Usage

Block caching is enabled by default for all the user tables which means that any read operation will load the LRU cache. This might be good for a large number of use cases, but further tunings are usually required in order to achieve better performance. An important concept is the working set size, or WSS, which is: “the amount of memory needed to compute the answer to a problem”. For a website, this would be the data that’s needed to answer the queries over a short amount of time.

The way to calculate how much memory is available in HBase for caching is:

number of region servers * heap size * hfile.block.cache.size * 0.99

The default value for the block cache is 0.4 which represents 40% of the available heap. The last value (99%) is the default acceptable loading factor in the LRU cache after which eviction is started. The reason it is included in this equation is that it would be unrealistic to say that it is possible to use 100% of the available memory since this would make the process blocking from the point where it loads new blocks. Here are some examples:

One region server with the heap size set to 1 GB and the default block cache size will have 405 MB of block cache available.

20 region servers with the heap size set to 8 GB and a default block cache size will have 63.3 of block cache.

100 region servers with the heap size set to 24 GB and a block cache size of 0.5 will have about 1.16 TB of block cache.

Your data is not the only resident of the block cache. Here are others that you may have to take into account:

Catalog Tables

The hbase:meta table is forced into the block cache and have the in-memory priority which means that they are harder to evict.

The hbase:meta tables can occupy a few MBs depending on the number of regions.

HFiles Indexes

An HFile is the file format that HBase uses to store data in HDFS. It contains a multi-layered index which allows HBase to seek to the data without having to read the whole file. The size of those indexes is a factor of the block size (64KB by default), the size of your keys and the amount of data you are storing. For big data sets it’s not unusual to see numbers around 1GB per region server, although not all of it will be in cache because the LRU will evict indexes that aren’t used.

Keys

The values that are stored are only half the picture, since each value is stored along with its keys (row key, family qualifier, and timestamp). See Try to minimize row and column sizes.

Bloom Filters

Just like the HFile indexes, those data structures (when enabled) are stored in the LRU.

Currently the recommended way to measure HFile indexes and bloom filters sizes is to look at the region server web UI and checkout the relevant metrics. For keys, sampling can be done by using the HFile command line tool and look for the average key size metric. Since HBase 0.98.3, you can view details on BlockCache stats and metrics in a special Block Cache section in the UI.

It’s generally bad to use block caching when the WSS doesn’t fit in memory. This is the case when you have for example 40GB available across all your region servers’ block caches but you need to process 1TB of data. One of the reasons is that the churn generated by the evictions will trigger more garbage collections unnecessarily. Here are two use cases:

Fully random reading pattern: This is a case where you almost never access the same row twice within a short amount of time such that the chance of hitting a cached block is close to 0. Setting block caching on such a table is a waste of memory and CPU cycles, more so that it will generate more garbage to pick up by the JVM. For more information on monitoring GC, see JVM Garbage Collection Logs.

Mapping a table: In a typical MapReduce job that takes a table in input, every row will be read only once so there’s no need to put them into the block cache. The Scan object has the option of turning this off via the setCaching method (set it to false). You can still keep block caching turned on on this table if you need fast random read access. An example would be counting the number of rows in a table that serves live traffic, caching every block of that table would create massive churn and would surely evict data that’s currently in use.

Caching META blocks only (DATA blocks in fscache)

An interesting setup is one where we cache META blocks only and we read DATA blocks in on each access. If the DATA blocks fit inside fscache, this alternative may make sense when access is completely random across a very large dataset. To enable this setup, alter your table and for each column family set BLOCKCACHE ⇒ 'false'. You are ‘disabling’ the BlockCache for this column family only. You can never disable the caching of META blocks. Since HBASE-4683 Always cache index and bloom blocks, we will cache META blocks even if the BlockCache is disabled.

71.4.5. Off-heap Block Cache

How to Enable BucketCache

The usual deploy of BucketCache is via a managing class that sets up two caching tiers: an on-heap cache implemented by LruBlockCache and a second cache implemented with BucketCache. The managing class is CombinedBlockCache by default. The previous link describes the caching ‘policy’ implemented by CombinedBlockCache. In short, it works by keeping meta blocks — INDEX and BLOOM in the on-heap LruBlockCache tier — and DATA blocks are kept in the BucketCache tier.

Pre-hbase-2.0.0 versions

Fetching will always be slower when fetching from BucketCache in pre-hbase-2.0.0, as compared to the native on-heap LruBlockCache. However, latencies tend to be less erratic across time, because there is less garbage collection when you use BucketCache since it is managing BlockCache allocations, not the GC. If the BucketCache is deployed in off-heap mode, this memory is not managed by the GC at all. This is why you’d use BucketCache in pre-2.0.0, so your latencies are less erratic, to mitigate GCs and heap fragmentation, and so you can safely use more memory. See Nick Dimiduk’s BlockCache 101 for comparisons running on-heap vs off-heap tests. Also see Comparing BlockCache Deploys which finds that if your dataset fits inside your LruBlockCache deploy, use it otherwise if you are experiencing cache churn (or you want your cache to exist beyond the vagaries of java GC), use BucketCache.

In pre-2.0.0, one can configure the BucketCache so it receives the victim of an LruBlockCache eviction. All Data and index blocks are cached in L1 first. When eviction happens from L1, the blocks (or victims) will get moved to L2. Set cacheDataInL1 via (HColumnDescriptor.setCacheDataInL1(true) or in the shell, creating or amending column families setting CACHE_DATA_IN_L1 to true: e.g.

hbase(main):003:0> create 't', {NAME => 't', CONFIGURATION => {CACHE_DATA_IN_L1 => 'true'}}

hbase-2.0.0+ versions

HBASE-11425 changed the HBase read path so it could hold the read-data off-heap avoiding copying of cached data on to the java heap. See Offheap read-path. In hbase-2.0.0, off-heap latencies approach those of on-heap cache latencies with the added benefit of NOT provoking GC.

From HBase 2.0.0 onwards, the notions of L1 and L2 have been deprecated. When BucketCache is turned on, the DATA blocks will always go to BucketCache and INDEX/BLOOM blocks go to on heap LRUBlockCache. cacheDataInL1 support hase been removed.

The BucketCache Block Cache can be deployed off-heap, file or mmaped file mode.

You set which via the hbase.bucketcache.ioengine setting. Setting it to offheap will have BucketCache make its allocations off-heap, and an ioengine setting of file:PATH_TO_FILE will direct BucketCache to use file caching (Useful in particular if you have some fast I/O attached to the box such as SSDs). From 2.0.0, it is possible to have more than one file backing the BucketCache. This is very useful specially when the Cache size requirement is high. For multiple backing files, configure ioengine as files:PATH_TO_FILE1,PATH_TO_FILE2,PATH_TO_FILE3. BucketCache can be configured to use an mmapped file also. Configure ioengine as mmap:PATH_TO_FILE for this.

It is possible to deploy a tiered setup where we bypass the CombinedBlockCache policy and have BucketCache working as a strict L2 cache to the L1 LruBlockCache. For such a setup, set hbase.bucketcache.combinedcache.enabled to false. In this mode, on eviction from L1, blocks go to L2. When a block is cached, it is cached first in L1. When we go to look for a cached block, we look first in L1 and if none found, then search L2. Let us call this deploy format, Raw L1+L2. NOTE: This L1+L2 mode is removed from 2.0.0. When BucketCache is used, it will be strictly the DATA cache and the LruBlockCache will cache INDEX/META blocks.

Other BucketCache configs include: specifying a location to persist cache to across restarts, how many threads to use writing the cache, etc. See the CacheConfig.html class for configuration options and descriptions.

To check it enabled, look for the log line describing cache setup; it will detail how BucketCache has been deployed. Also see the UI. It will detail the cache tiering and their configuration.

BucketCache Example Configuration

This sample provides a configuration for a 4 GB off-heap BucketCache with a 1 GB on-heap cache.

Configuration is performed on the RegionServer.

Setting hbase.bucketcache.ioengine and hbase.bucketcache.size > 0 enables CombinedBlockCache. Let us presume that the RegionServer has been set to run with a 5G heap: i.e. HBASE_HEAPSIZE=5g.

- First, edit the RegionServer’s hbase-env.sh and set

HBASE_OFFHEAPSIZEto a value greater than the off-heap size wanted, in this case, 4 GB (expressed as 4G). Let’s set it to 5G. That’ll be 4G for our off-heap cache and 1G for any other uses of off-heap memory (there are other users of off-heap memory other than BlockCache; e.g. DFSClient in RegionServer can make use of off-heap memory). See Direct Memory Usage In HBase.HBASE_OFFHEAPSIZE=5G

- Next, add the following configuration to the RegionServer’s hbase-site.xml.

<property><name>hbase.bucketcache.ioengine</name><value>offheap</value></property><property><name>hfile.block.cache.size</name><value>0.2</value></property><property><name>hbase.bucketcache.size</name><value>4196</value></property>

- Restart or rolling restart your cluster, and check the logs for any issues.

In the above, we set the BucketCache to be 4G. We configured the on-heap LruBlockCache have 20% (0.2) of the RegionServer’s heap size (0.2 * 5G = 1G). In other words, you configure the L1 LruBlockCache as you would normally (as if there were no L2 cache present).

HBASE-10641 introduced the ability to configure multiple sizes for the buckets of the BucketCache, in HBase 0.98 and newer. To configurable multiple bucket sizes, configure the new property hbase.bucketcache.bucket.sizes to a comma-separated list of block sizes, ordered from smallest to largest, with no spaces. The goal is to optimize the bucket sizes based on your data access patterns. The following example configures buckets of size 4096 and 8192.

<property><name>hbase.bucketcache.bucket.sizes</name><value>4096,8192</value></property>

Direct Memory Usage In HBase

The default maximum direct memory varies by JVM. Traditionally it is 64M or some relation to allocated heap size (-Xmx) or no limit at all (JDK7 apparently). HBase servers use direct memory, in particular short-circuit reading (See Leveraging local data), the hosted DFSClient will allocate direct memory buffers. How much the DFSClient uses is not easy to quantify; it is the number of open HFiles *

hbase.dfs.client.read.shortcircuit.buffer.sizewherehbase.dfs.client.read.shortcircuit.buffer.sizeis set to 128k in HBase — see hbase-default.xml default configurations. If you do off-heap block caching, you’ll be making use of direct memory. The RPCServer uses a ByteBuffer pool. From 2.0.0, these buffers are off-heap ByteBuffers. Starting your JVM, make sure the-XX:MaxDirectMemorySizesetting in conf/hbase-env.sh considers off-heap BlockCache (hbase.bucketcache.size), DFSClient usage, RPC side ByteBufferPool max size. This has to be bit higher than sum of off heap BlockCache size and max ByteBufferPool size. Allocating an extra of 1-2 GB for the max direct memory size has worked in tests. Direct memory, which is part of the Java process heap, is separate from the object heap allocated by -Xmx. The value allocated byMaxDirectMemorySizemust not exceed physical RAM, and is likely to be less than the total available RAM due to other memory requirements and system constraints.You can see how much memory — on-heap and off-heap/direct — a RegionServer is configured to use and how much it is using at any one time by looking at the Server Metrics: Memory tab in the UI. It can also be gotten via JMX. In particular the direct memory currently used by the server can be found on the

java.nio.type=BufferPool,name=directbean. Terracotta has a good write up on using off-heap memory in Java. It is for their product BigMemory but a lot of the issues noted apply in general to any attempt at going off-heap. Check it out.hbase.bucketcache.percentage.in.combinedcache

This is a pre-HBase 1.0 configuration removed because it was confusing. It was a float that you would set to some value between 0.0 and 1.0. Its default was 0.9. If the deploy was using CombinedBlockCache, then the LruBlockCache L1 size was calculated to be

(1 - hbase.bucketcache.percentage.in.combinedcache) * size-of-bucketcacheand the BucketCache size washbase.bucketcache.percentage.in.combinedcache * size-of-bucket-cache. where size-of-bucket-cache itself is EITHER the value of the configurationhbase.bucketcache.sizeIF it was specified as Megabytes ORhbase.bucketcache.size*-XX:MaxDirectMemorySizeifhbase.bucketcache.sizeis between 0 and 1.0.In 1.0, it should be more straight-forward. Onheap LruBlockCache size is set as a fraction of java heap using

hfile.block.cache.size setting(not the best name) and BucketCache is set as above in absolute Megabytes.

71.4.6. Compressed BlockCache

HBASE-11331 introduced lazy BlockCache decompression, more simply referred to as compressed BlockCache. When compressed BlockCache is enabled data and encoded data blocks are cached in the BlockCache in their on-disk format, rather than being decompressed and decrypted before caching.

For a RegionServer hosting more data than can fit into cache, enabling this feature with SNAPPY compression has been shown to result in 50% increase in throughput and 30% improvement in mean latency while, increasing garbage collection by 80% and increasing overall CPU load by 2%. See HBASE-11331 for more details about how performance was measured and achieved. For a RegionServer hosting data that can comfortably fit into cache, or if your workload is sensitive to extra CPU or garbage-collection load, you may receive less benefit.

The compressed BlockCache is disabled by default. To enable it, set hbase.block.data.cachecompressed to true in hbase-site.xml on all RegionServers.

71.5. RegionServer Offheap Read/Write Path

71.5.1. Offheap read-path

In hbase-2.0.0, HBASE-11425 changed the HBase read path so it could hold the read-data off-heap avoiding copying of cached data on to the java heap. This reduces GC pauses given there is less garbage made and so less to clear. The off-heap read path has a performance that is similar/better to that of the on-heap LRU cache. This feature is available since HBase 2.0.0. If the BucketCache is in file mode, fetching will always be slower compared to the native on-heap LruBlockCache. Refer to below blogs for more details and test results on off heaped read path Offheaping the Read Path in Apache HBase: Part 1 of 2 and Offheap Read-Path in Production - The Alibaba story

For an end-to-end off-heaped read-path, first of all there should be an off-heap backed Off-heap Block Cache(BC). Configure ‘hbase.bucketcache.ioengine’ to off-heap in hbase-site.xml. Also specify the total capacity of the BC using hbase.bucketcache.size config. Please remember to adjust value of ‘HBASEOFFHEAPSIZE’ in _hbase-env.sh. This is how we specify the max possible off-heap memory allocation for the RegionServer java process. This should be bigger than the off-heap BC size. Please keep in mind that there is no default for hbase.bucketcache.ioengine which means the BC is turned OFF by default (See Direct Memory Usage In HBase).

Next thing to tune is the ByteBuffer pool on the RPC server side. The buffers from this pool will be used to accumulate the cell bytes and create a result cell block to send back to the client side. hbase.ipc.server.reservoir.enabled can be used to turn this pool ON or OFF. By default this pool is ON and available. HBase will create off heap ByteBuffers and pool them. Please make sure not to turn this OFF if you want end-to-end off-heaping in read path. If this pool is turned off, the server will create temp buffers on heap to accumulate the cell bytes and make a result cell block. This can impact the GC on a highly read loaded server. The user can tune this pool with respect to how many buffers are in the pool and what should be the size of each ByteBuffer. Use the config hbase.ipc.server.reservoir.initial.buffer.size to tune each of the buffer sizes. Default is 64 KB.

When the read pattern is a random row read load and each of the rows are smaller in size compared to this 64 KB, try reducing this. When the result size is larger than one ByteBuffer size, the server will try to grab more than one buffer and make a result cell block out of these. When the pool is running out of buffers, the server will end up creating temporary on-heap buffers.

The maximum number of ByteBuffers in the pool can be tuned using the config ‘hbase.ipc.server.reservoir.initial.max’. Its value defaults to 64 * region server handlers configured (See the config ‘hbase.regionserver.handler.count’). The math is such that by default we consider 2 MB as the result cell block size per read result and each handler will be handling a read. For 2 MB size, we need 32 buffers each of size 64 KB (See default buffer size in pool). So per handler 32 ByteBuffers(BB). We allocate twice this size as the max BBs count such that one handler can be creating the response and handing it to the RPC Responder thread and then handling a new request creating a new response cell block (using pooled buffers). Even if the responder could not send back the first TCP reply immediately, our count should allow that we should still have enough buffers in our pool without having to make temporary buffers on the heap. Again for smaller sized random row reads, tune this max count. There are lazily created buffers and the count is the max count to be pooled.

If you still see GC issues even after making end-to-end read path off-heap, look for issues in the appropriate buffer pool. Check the below RegionServer log with INFO level:

Pool already reached its max capacity : XXX and no free buffers now. Consider increasing the value for 'hbase.ipc.server.reservoir.initial.max' ?

The setting for HBASE_OFFHEAPSIZE in hbase-env.sh should consider this off heap buffer pool at the RPC side also. We need to config this max off heap size for the RegionServer as a bit higher than the sum of this max pool size and the off heap cache size. The TCP layer will also need to create direct bytebuffers for TCP communication. Also the DFS client will need some off-heap to do its workings especially if short-circuit reads are configured. Allocating an extra of 1 - 2 GB for the max direct memory size has worked in tests.

If you are using co processors and refer the Cells in the read results, DO NOT store reference to these Cells out of the scope of the CP hook methods. Some times the CPs need store info about the cell (Like its row key) for considering in the next CP hook call etc. For such cases, pls clone the required fields of the entire Cell as per the use cases. [ See CellUtil#cloneXXX(Cell) APIs ]

71.5.2. Offheap write-path

TODO

71.6. RegionServer Splitting Implementation

As write requests are handled by the region server, they accumulate in an in-memory storage system called the memstore. Once the memstore fills, its content are written to disk as additional store files. This event is called a memstore flush. As store files accumulate, the RegionServer will compact them into fewer, larger files. After each flush or compaction finishes, the amount of data stored in the region has changed. The RegionServer consults the region split policy to determine if the region has grown too large or should be split for another policy-specific reason. A region split request is enqueued if the policy recommends it.

Logically, the process of splitting a region is simple. We find a suitable point in the keyspace of the region where we should divide the region in half, then split the region’s data into two new regions at that point. The details of the process however are not simple. When a split happens, the newly created daughter regions do not rewrite all the data into new files immediately. Instead, they create small files similar to symbolic link files, named Reference files, which point to either the top or bottom part of the parent store file according to the split point. The reference file is used just like a regular data file, but only half of the records are considered. The region can only be split if there are no more references to the immutable data files of the parent region. Those reference files are cleaned gradually by compactions, so that the region will stop referring to its parents files, and can be split further.

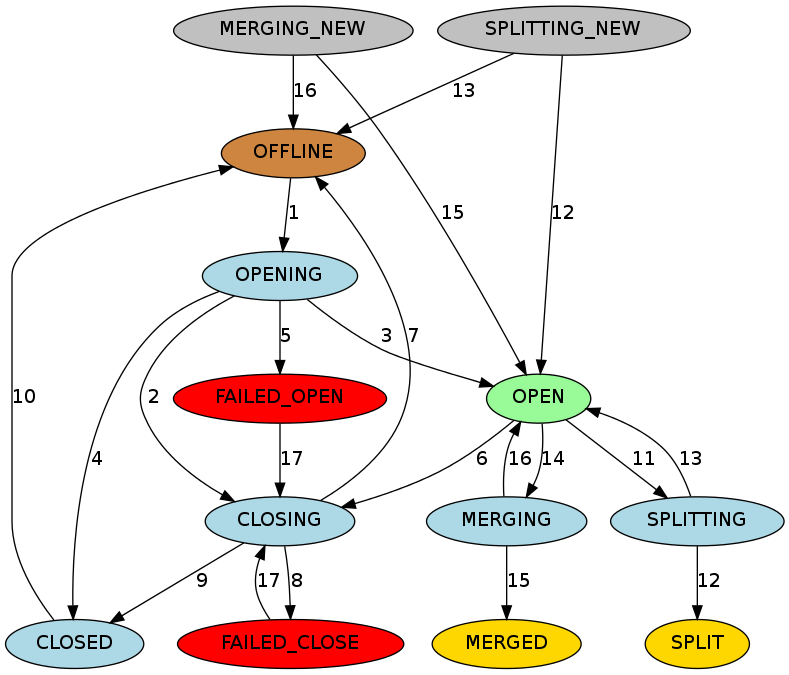

Although splitting the region is a local decision made by the RegionServer, the split process itself must coordinate with many actors. The RegionServer notifies the Master before and after the split, updates the .META. table so that clients can discover the new daughter regions, and rearranges the directory structure and data files in HDFS. Splitting is a multi-task process. To enable rollback in case of an error, the RegionServer keeps an in-memory journal about the execution state. The steps taken by the RegionServer to execute the split are illustrated in RegionServer Split Process. Each step is labeled with its step number. Actions from RegionServers or Master are shown in red, while actions from the clients are show in green.

Figure 1. RegionServer Split Process

Figure 1. RegionServer Split Process

The RegionServer decides locally to split the region, and prepares the split. THE SPLIT TRANSACTION IS STARTED. As a first step, the RegionServer acquires a shared read lock on the table to prevent schema modifications during the splitting process. Then it creates a znode in zookeeper under

/hbase/region-in-transition/region-name, and sets the znode’s state toSPLITTING.The Master learns about this znode, since it has a watcher for the parent

region-in-transitionznode.The RegionServer creates a sub-directory named

.splitsunder the parent’sregiondirectory in HDFS.The RegionServer closes the parent region and marks the region as offline in its local data structures. THE SPLITTING REGION IS NOW OFFLINE. At this point, client requests coming to the parent region will throw

NotServingRegionException. The client will retry with some backoff. The closing region is flushed.The RegionServer creates region directories under the

.splitsdirectory, for daughter regions A and B, and creates necessary data structures. Then it splits the store files, in the sense that it creates two Reference files per store file in the parent region. Those reference files will point to the parent region’s files.The RegionServer creates the actual region directory in HDFS, and moves the reference files for each daughter.

The RegionServer sends a

Putrequest to the.META.table, to set the parent as offline in the.META.table and add information about daughter regions. At this point, there won’t be individual entries in.META.for the daughters. Clients will see that the parent region is split if they scan.META., but won’t know about the daughters until they appear in.META.. Also, if thisPutto.META. succeeds, the parent will be effectively split. If the RegionServer fails before this RPC succeeds, Master and the next Region Server opening the region will clean dirty state about the region split. After the.META.update, though, the region split will be rolled-forward by Master.The RegionServer opens daughters A and B in parallel.

The RegionServer adds the daughters A and B to

.META., together with information that it hosts the regions. THE SPLIT REGIONS (DAUGHTERS WITH REFERENCES TO PARENT) ARE NOW ONLINE. After this point, clients can discover the new regions and issue requests to them. Clients cache the.META.entries locally, but when they make requests to the RegionServer or.META., their caches will be invalidated, and they will learn about the new regions from.META..The RegionServer updates znode

/hbase/region-in-transition/region-namein ZooKeeper to stateSPLIT, so that the master can learn about it. The balancer can freely re-assign the daughter regions to other region servers if necessary. THE SPLIT TRANSACTION IS NOW FINISHED.After the split,

.META.and HDFS will still contain references to the parent region. Those references will be removed when compactions in daughter regions rewrite the data files. Garbage collection tasks in the master periodically check whether the daughter regions still refer to the parent region’s files. If not, the parent region will be removed.

71.7. Write Ahead Log (WAL)

71.7.1. Purpose

The Write Ahead Log (WAL) records all changes to data in HBase, to file-based storage. Under normal operations, the WAL is not needed because data changes move from the MemStore to StoreFiles. However, if a RegionServer crashes or becomes unavailable before the MemStore is flushed, the WAL ensures that the changes to the data can be replayed. If writing to the WAL fails, the entire operation to modify the data fails.

HBase uses an implementation of the WAL interface. Usually, there is only one instance of a WAL per RegionServer. An exception is the RegionServer that is carrying hbase:meta; the meta table gets its own dedicated WAL. The RegionServer records Puts and Deletes to its WAL, before recording them these Mutations MemStore for the affected Store.

The HLo

Prior to 2.0, the interface for WALs in HBase was named

HLog. In 0.94, HLog was the name of the implementation of the WAL. You will likely find references to the HLog in documentation tailored to these older versions.

The WAL resides in HDFS in the /hbase/WALs/ directory, with subdirectories per region.

For more general information about the concept of write ahead logs, see the Wikipedia Write-Ahead Log article.

71.7.2. WAL Providers

In HBase, there are a number of WAL imlementations (or ‘Providers’). Each is known by a short name label (that unfortunately is not always descriptive). You set the provider in hbase-site.xml passing the WAL provder short-name as the value on the hbase.wal.provider property (Set the provider for hbase:meta using the hbase.wal.meta_provider property, otherwise it uses the same provider configured by hbase.wal.provider).

asyncfs: The default. New since hbase-2.0.0 (HBASE-15536, HBASE-14790). This AsyncFSWAL provider, as it identifies itself in RegionServer logs, is built on a new non-blocking dfsclient implementation. It is currently resident in the hbase codebase but intent is to move it back up into HDFS itself. WALs edits are written concurrently (“fan-out”) style to each of the WAL-block replicas on each DataNode rather than in a chained pipeline as the default client does. Latencies should be better. See Apache HBase Improements and Practices at Xiaomi at slide 14 onward for more detail on implementation.

filesystem: This was the default in hbase-1.x releases. It is built on the blocking DFSClient and writes to replicas in classic DFSCLient pipeline mode. In logs it identifies as FSHLog or FSHLogProvider.

multiwal: This provider is made of multiple instances of asyncfs or filesystem. See the next section for more on multiwal.

Look for the lines like the below in the RegionServer log to see which provider is in place (The below shows the default AsyncFSWALProvider):

2018-04-02 13:22:37,983 INFO [regionserver/ve0528:16020] wal.WALFactory: Instantiating WALProvider of type class org.apache.hadoop.hbase.wal.AsyncFSWALProvider

As the AsyncFSWAL hacks into the internal of DFSClient implementation, it will be easily broken by upgrading the hadoop dependencies, even for a simple patch release. So if you do not specify the wal provider explicitly, we will first try to use the asyncfs, if failed, we will fall back to use filesystem. And notice that this may not always work, so if you still have problem starting HBase due to the problem of starting AsyncFSWAL, please specify filesystem explicitly in the config file.

EC support has been added to hadoop-3.x, and it is incompatible with WAL as the EC output stream does not support hflush/hsync. In order to create a non-EC file in an EC directory, we need to use the new builder-based create API for FileSystem, but it is only introduced in hadoop-2.9+ and for HBase we still need to support hadoop-2.7.x. So please do not enable EC for the WAL directory until we find a way to deal with it.

71.7.3. MultiWAL

With a single WAL per RegionServer, the RegionServer must write to the WAL serially, because HDFS files must be sequential. This causes the WAL to be a performance bottleneck.

HBase 1.0 introduces support MultiWal in HBASE-5699. MultiWAL allows a RegionServer to write multiple WAL streams in parallel, by using multiple pipelines in the underlying HDFS instance, which increases total throughput during writes. This parallelization is done by partitioning incoming edits by their Region. Thus, the current implementation will not help with increasing the throughput to a single Region.

RegionServers using the original WAL implementation and those using the MultiWAL implementation can each handle recovery of either set of WALs, so a zero-downtime configuration update is possible through a rolling restart.

Configure MultiWAL

To configure MultiWAL for a RegionServer, set the value of the property hbase.wal.provider to multiwal by pasting in the following XML:

<property><name>hbase.wal.provider</name><value>multiwal</value></property>

Restart the RegionServer for the changes to take effect.

To disable MultiWAL for a RegionServer, unset the property and restart the RegionServer.

71.7.4. WAL Flushing

TODO (describe).

71.7.5. WAL Splitting

A RegionServer serves many regions. All of the regions in a region server share the same active WAL file. Each edit in the WAL file includes information about which region it belongs to. When a region is opened, the edits in the WAL file which belong to that region need to be replayed. Therefore, edits in the WAL file must be grouped by region so that particular sets can be replayed to regenerate the data in a particular region. The process of grouping the WAL edits by region is called log splitting. It is a critical process for recovering data if a region server fails.

Log splitting is done by the HMaster during cluster start-up or by the ServerShutdownHandler as a region server shuts down. So that consistency is guaranteed, affected regions are unavailable until data is restored. All WAL edits need to be recovered and replayed before a given region can become available again. As a result, regions affected by log splitting are unavailable until the process completes.

Procedure: Log Splitting, Step by Step

- The /hbase/WALs/,, directory is renamed.

Renaming the directory is important because a RegionServer may still be up and accepting requests even if the HMaster thinks it is down. If the RegionServer does not respond immediately and does not heartbeat its ZooKeeper session, the HMaster may interpret this as a RegionServer failure. Renaming the logs directory ensures that existing, valid WAL files which are still in use by an active but busy RegionServer are not written to by accident.

The new directory is named according to the following pattern:/hbase/WALs/<host>,<port>,<startcode>-splitting

An example of such a renamed directory might look like the following:

/hbase/WALs/srv.example.com,60020,1254173957298-splitting

- Each log file is split, one at a time.

The log splitter reads the log file one edit entry at a time and puts each edit entry into the buffer corresponding to the edit’s region. At the same time, the splitter starts several writer threads. Writer threads pick up a corresponding buffer and write the edit entries in the buffer to a temporary recovered edit file. The temporary edit file is stored to disk with the following naming pattern:/hbase/<table_name>/<region_id>/recovered.edits/.temp

This file is used to store all the edits in the WAL log for this region. After log splitting completes, the .temp file is renamed to the sequence ID of the first log written to the file.

To determine whether all edits have been written, the sequence ID is compared to the sequence of the last edit that was written to the HFile. If the sequence of the last edit is greater than or equal to the sequence ID included in the file name, it is clear that all writes from the edit file have been completed.

- After log splitting is complete, each affected region is assigned to a RegionServer.

When the region is opened, the recovered.edits folder is checked for recovered edits files. If any such files are present, they are replayed by reading the edits and saving them to the MemStore. After all edit files are replayed, the contents of the MemStore are written to disk (HFile) and the edit files are deleted.

Handling of Errors During Log Splitting

If you set the hbase.hlog.split.skip.errors option to true, errors are treated as follows:

Any error encountered during splitting will be logged.

The problematic WAL log will be moved into the .corrupt directory under the hbase

rootdir,Processing of the WAL will continue

If the hbase.hlog.split.skip.errors option is set to false, the default, the exception will be propagated and the split will be logged as failed. See HBASE-2958 When hbase.hlog.split.skip.errors is set to false, we fail the split but that’s it. We need to do more than just fail split if this flag is set.

How EOFExceptions are treated when splitting a crashed RegionServer’s WALs

If an EOFException occurs while splitting logs, the split proceeds even when hbase.hlog.split.skip.errors is set to false. An EOFException while reading the last log in the set of files to split is likely, because the RegionServer was likely in the process of writing a record at the time of a crash. For background, see HBASE-2643 Figure how to deal with eof splitting logs

Performance Improvements during Log Splitting

WAL log splitting and recovery can be resource intensive and take a long time, depending on the number of RegionServers involved in the crash and the size of the regions. Enabling or Disabling Distributed Log Splitting was developed to improve performance during log splitting.

Enabling or Disabling Distributed Log Splitting

Distributed log processing is enabled by default since HBase 0.92. The setting is controlled by the hbase.master.distributed.log.splitting property, which can be set to true or false, but defaults to true.

Distributed Log Splitting, Step by Step

After configuring distributed log splitting, the HMaster controls the process. The HMaster enrolls each RegionServer in the log splitting process, and the actual work of splitting the logs is done by the RegionServers. The general process for log splitting, as described in Distributed Log Splitting, Step by Step still applies here.

If distributed log processing is enabled, the HMaster creates a split log manager instance when the cluster is started.

The split log manager manages all log files which need to be scanned and split.

The split log manager places all the logs into the ZooKeeper splitWAL node (/hbase/splitWAL) as tasks.

You can view the contents of the splitWAL by issuing the following

zkClicommand. Example output is shown.ls /hbase/splitWAL[hdfs%3A%2F%2Fhost2.sample.com%3A56020%2Fhbase%2FWALs%2Fhost8.sample.com%2C57020%2C1340474893275-splitting%2Fhost8.sample.com%253A57020.1340474893900,hdfs%3A%2F%2Fhost2.sample.com%3A56020%2Fhbase%2FWALs%2Fhost3.sample.com%2C57020%2C1340474893299-splitting%2Fhost3.sample.com%253A57020.1340474893931,hdfs%3A%2F%2Fhost2.sample.com%3A56020%2Fhbase%2FWALs%2Fhost4.sample.com%2C57020%2C1340474893287-splitting%2Fhost4.sample.com%253A57020.1340474893946]

The output contains some non-ASCII characters. When decoded, it looks much more simple:

[hdfs://host2.sample.com:56020/hbase/WALs/host8.sample.com,57020,1340474893275-splitting/host8.sample.com%3A57020.1340474893900,hdfs://host2.sample.com:56020/hbase/WALs/host3.sample.com,57020,1340474893299-splitting/host3.sample.com%3A57020.1340474893931,hdfs://host2.sample.com:56020/hbase/WALs/host4.sample.com,57020,1340474893287-splitting/host4.sample.com%3A57020.1340474893946]

The listing represents WAL file names to be scanned and split, which is a list of log splitting tasks.

The split log manager monitors the log-splitting tasks and workers.

The split log manager is responsible for the following ongoing tasks:Once the split log manager publishes all the tasks to the splitWAL znode, it monitors these task nodes and waits for them to be processed.

Checks to see if there are any dead split log workers queued up. If it finds tasks claimed by unresponsive workers, it will resubmit those tasks. If the resubmit fails due to some ZooKeeper exception, the dead worker is queued up again for retry.

Checks to see if there are any unassigned tasks. If it finds any, it create an ephemeral rescan node so that each split log worker is notified to re-scan unassigned tasks via the

nodeChildrenChangedZooKeeper event.Checks for tasks which are assigned but expired. If any are found, they are moved back to

TASK_UNASSIGNEDstate again so that they can be retried. It is possible that these tasks are assigned to slow workers, or they may already be finished. This is not a problem, because log splitting tasks have the property of idempotence. In other words, the same log splitting task can be processed many times without causing any problem.The split log manager watches the HBase split log znodes constantly. If any split log task node data is changed, the split log manager retrieves the node data. The node data contains the current state of the task. You can use the

zkCligetcommand to retrieve the current state of a task. In the example output below, the first line of the output shows that the task is currently unassigned. ``` get /hbase/splitWAL/hdfs%3A%2F%2Fhost2.sample.com%3A56020%2Fhbase%2FWALs%2Fhost6.sample.com%2C57020%2C1340474893287-splitting%2Fhost6.sample.com%253A57020.1340474893945

unassigned host2.sample.com:57000 cZxid = 0×7115 ctime = Sat Jun 23 11:13:40 PDT 2012 …

<br />Based on the state of the task whose data is changed, the split log manager does one of the following:-Resubmit the task if it is unassigned-Heartbeat the task if it is assigned-Resubmit or fail the task if it is resigned (see [Reasons a Task Will Fail](docs_en_#distributed.log.replay.failure.reasons))-Resubmit or fail the task if it is completed with errors (see [Reasons a Task Will Fail](docs_en_#distributed.log.replay.failure.reasons))-Resubmit or fail the task if it could not complete due to errors (see [Reasons a Task Will Fail](docs_en_#distributed.log.replay.failure.reasons))-Delete the task if it is successfully completed or failed> Reasons a Task Will Fail> -The task has been deleted.> -The node no longer exists.> -The log status manager failed to move the state of the task to `TASK_UNASSIGNED`.> -The number of resubmits is over the resubmit threshold.3.Each RegionServer’s split log worker performs the log-splitting tasks.<br />Each RegionServer runs a daemon thread called the _split log worker_, which does the work to split the logs. The daemon thread starts when the RegionServer starts, and registers itself to watch HBase znodes. If any splitWAL znode children change, it notifies a sleeping worker thread to wake up and grab more tasks. If a worker’s current task’s node data is changed, the worker checks to see if the task has been taken by another worker. If so, the worker thread stops work on the current task.<br />The worker monitors the splitWAL znode constantly. When a new task appears, the split log worker retrieves the task paths and checks each one until it finds an unclaimed task, which it attempts to claim. If the claim was successful, it attempts to perform the task and updates the task’s `state` property based on the splitting outcome. At this point, the split log worker scans for another unclaimed task.<br />How the Split Log Worker Approaches a Task-It queries the task state and only takes action if the task is in `TASK_UNASSIGNED`state.-If the task is in `TASK_UNASSIGNED` state, the worker attempts to set the state to `TASK_OWNED` by itself. If it fails to set the state, another worker will try to grab it. The split log manager will also ask all workers to rescan later if the task remains unassigned.-If the worker succeeds in taking ownership of the task, it tries to get the task state again to make sure it really gets it asynchronously. In the meantime, it starts a split task executor to do the actual work:-Get the HBase root folder, create a temp folder under the root, and split the log file to the temp folder.-If the split was successful, the task executor sets the task to state `TASK_DONE`.-If the worker catches an unexpected IOException, the task is set to state `TASK_ERR`.-If the worker is shutting down, set the task to state `TASK_RESIGNED`.-If the task is taken by another worker, just log it.4.The split log manager monitors for uncompleted tasks.<br />The split log manager returns when all tasks are completed successfully. If all tasks are completed with some failures, the split log manager throws an exception so that the log splitting can be retried. Due to an asynchronous implementation, in very rare cases, the split log manager loses track of some completed tasks. For that reason, it periodically checks for remaining uncompleted task in its task map or ZooKeeper. If none are found, it throws an exception so that the log splitting can be retried right away instead of hanging there waiting for something that won’t happen.<a name="b38b39c5"></a>#### 71.7.6. WAL CompressionThe content of the WAL can be compressed using LRU Dictionary compression. This can be used to speed up WAL replication to different datanodes. The dictionary can store up to 2 elements; eviction starts after this number is exceeded.To enable WAL compression, set the `hbase.regionserver.wal.enablecompression` property to `true`. The default value for this property is `false`. By default, WAL tag compression is turned on when WAL compression is enabled. You can turn off WAL tag compression by setting the `hbase.regionserver.wal.tags.enablecompression` property to 'false'.A possible downside to WAL compression is that we lose more data from the last block in the WAL if it ill-terminated mid-write. If entries in this last block were added with new dictionary entries but we failed persist the amended dictionary because of an abrupt termination, a read of this last block may not be able to resolve last-written entries.<a name="5d8d2087"></a>#### 71.7.7. DurabilityIt is possible to set _durability_ on each Mutation or on a Table basis. Options include:-_SKIP_WAL_: Do not write Mutations to the WAL (See the next section, [Disabling the WAL](docs_en_#wal.disable)).-_ASYNC_WAL_: Write the WAL asynchronously; do not hold-up clients waiting on the sync of their write to the filesystem but return immediately. The edit becomes visible. Meanwhile, in the background, the Mutation will be flushed to the WAL at some time later. This option currently may lose data. See HBASE-16689.-_SYNC_WAL_: The **default**. Each edit is sync’d to HDFS before we return success to the client.-_FSYNC_WAL_: Each edit is fsync’d to HDFS and the filesystem before we return success to the client.Do not confuse the _ASYNC_WAL_ option on a Mutation or Table with the _AsyncFSWAL_ writer; they are distinct options unfortunately closely named<a name="fdf61ad6"></a>#### 71.7.8. Disabling the WALIt is possible to disable the WAL, to improve performance in certain specific situations. However, disabling the WAL puts your data at risk. The only situation where this is recommended is during a bulk load. This is because, in the event of a problem, the bulk load can be re-run with no risk of data loss.The WAL is disabled by calling the HBase client field `Mutation.writeToWAL(false)`. Use the `Mutation.setDurability(Durability.SKIP_WAL)` and Mutation.getDurability() methods to set and get the field’s value. There is no way to disable the WAL for only a specific table.> If you disable the WAL for anything other than bulk loads, your data is at risk.<a name="f40c00d9"></a>## 72. RegionsRegions are the basic element of availability and distribution for tables, and are comprised of a Store per Column Family. The hierarchy of objects is as follows:

Table (HBase table) Region (Regions for the table) Store (Store per ColumnFamily for each Region for the table) MemStore (MemStore for each Store for each Region for the table) StoreFile (StoreFiles for each Store for each Region for the table) Block (Blocks within a StoreFile within a Store for each Region for the table)