Deploying and using Kafka in a single container

First of all, Kafka depends on ZooKeeper, so even for single-machine use you have to configure it the way you would a cluster...

So start by pulling the two official (Confluent) images:

```bash
docker pull confluentinc/cp-zookeeper
docker pull confluentinc/cp-kafka
```

Then create a compose file (docker-compose.yml):

```yaml
services:
  zookeeper:
    image: confluentinc/cp-zookeeper
    container_name: zookeeper
    mem_limit: 1024M
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
    volumes:
      - ./zookeeper/data:/data
      - ./zookeeper/datalog:/datalog
    ports: # port mapping
      - "2181:2181"
  kafka:
    image: confluentinc/cp-kafka
    container_name: kafka
    mem_limit: 1024M
    depends_on:
      - zookeeper
    environment:
      KAFKA_BROKER_NO: 1
      KAFKA_ADVERTISED_HOST_NAME: 127.0.0.1 # change this
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://127.0.0.1:9092 # change this
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_HEAP_OPTS: "-Xmx512M -Xms16M"
    volumes:
      - ./kafka/logs:/kafka/logs
    ports:
      - "9092:9092"
  ## Image: open-source web UI for managing Kafka clusters
  kafka-manager:
    image: sheepkiller/kafka-manager
    environment:
      # the zookeeper service; 127.0.0.1 would be the kafka-manager container itself
      ZK_HOSTS: zookeeper:2181
    ports:
      - "21105:9000"
```

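Make sure to publish the relevant ports; otherwise a Spring application running on your local machine cannot reach the services, and you can only access them from inside the containers! (If you are testing directly on a server, leave these ports unpublished for now, for security.)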

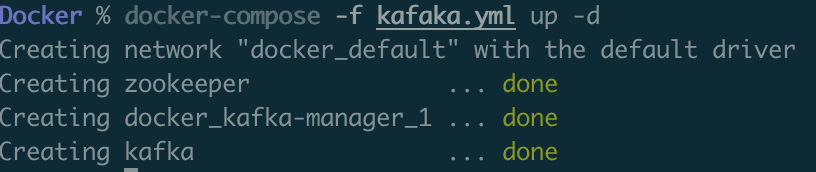

Start the compose stack:

```bash
docker-compose -f docker-compose.yml up -d
```

Now open two new terminal windows and, in each one, enter the container with:

```bash
docker exec -it kafka /bin/bash
```

In one of the windows, create a topic and start a producer:

```bash
kafka-topics --zookeeper zookeeper:2181 --create --replication-factor 1 --partitions 1 --topic kafkatest
kafka-console-producer --broker-list localhost:9092 --topic kafkatest
```

Newer Kafka versions no longer go through ZooKeeper to create topics. With a newer Kafka, the commands are:

```bash
kafka-topics --create --bootstrap-server kafka:9092 --replication-factor 1 --partitions 1 --topic kafkatest
kafka-console-producer --broker-list localhost:9092 --topic kafkatest
```

Note that kafka here is the hostname mapped to the Kafka server's IP; you can replace it with that server's IP address.

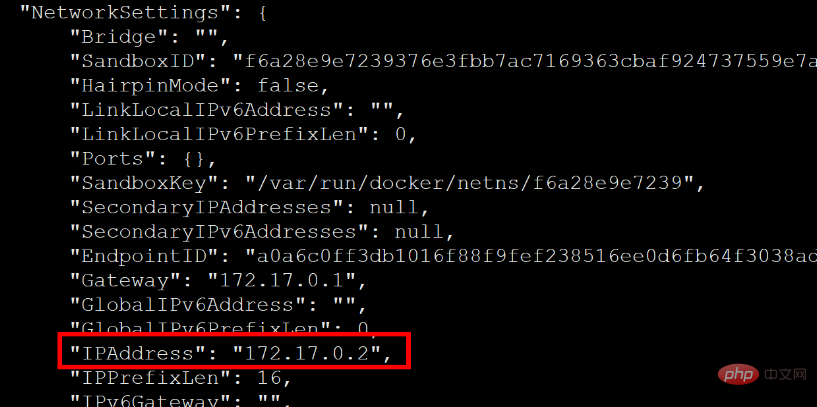

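You can view the container's details with the following command (because the compose file sets container_name: kafka, the name kafka works as well as the ID):

```bash
docker inspect <ID of the confluentinc/cp-kafka container>
```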

In the other window, start a consumer:

```bash
kafka-console-consumer --bootstrap-server localhost:9092 --topic kafkatest --from-beginning
```

Newer Kafka versions use exactly the same command to start the console consumer.

Now anything you type into the producer shows up in the consumer.
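The two-terminal check can also be scripted so it is repeatable. The sketch below sends one unique message and then searches for that exact line in the topic. It is not part of the original setup. The function name kafka_smoke_test and the override variables KAFKA_PRODUCER / KAFKA_CONSUMER are made up for this example. The only tool options it relies on are --broker-list, --bootstrap-server, --from-beginning and --timeout-ms, which makes the console consumer exit once no message has arrived for that long.

```bash
#!/usr/bin/env bash
# Scripted version of the two-terminal check: produce one unique message,
# then consume the topic and look for that exact line.
# KAFKA_PRODUCER / KAFKA_CONSUMER (made-up names) override the tool names,
# e.g. kafka-console-producer.sh when using the Apache tarball.
kafka_smoke_test() {
  local server=${1:-localhost:9092}
  local topic=${2:-kafkatest}
  local msg
  msg="smoke-$(date +%s)-$$"

  # One line on stdin = one message; the producer exits at end of input.
  printf '%s\n' "$msg" |
    "${KAFKA_PRODUCER:-kafka-console-producer}" --broker-list "$server" --topic "$topic"

  # --timeout-ms: the consumer exits after 10 s without new messages.
  if "${KAFKA_CONSUMER:-kafka-console-consumer}" --bootstrap-server "$server" \
       --topic "$topic" --from-beginning --timeout-ms 10000 2>/dev/null |
       grep -qxF "$msg"; then
    echo "OK: received $msg"
  else
    echo "FAIL: $msg not received from $topic on $server" >&2
    return 1
  fi
}
```

Paste the function into the shell opened with docker exec, then run kafka_smoke_test localhost:9092 kafkatest. A non-zero exit status means the message did not come back within the timeout.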

Checking the Kafka version

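Kafka does not provide a version command, and I'm not sure there is a convenient way to get it. However, you can look in the kafka/libs directory. There you should see a file such as kafka_2.10-0.8.2-beta.jar, where 2.10 is the Scala version and 0.8.2-beta is the Kafka version.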

In the Docker image, the jars are in /usr/share/java/kafka/.
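The file-name rule above can be applied with a couple of shell parameter expansions. This is a sketch that assumes the broker jar follows the kafka_SCALA-KAFKA.jar pattern. The function names kafka_jar_version and kafka_versions_in are made up for this example. The Kafka part is printed exactly as it appears in the file name, including any suffix.

```bash
#!/usr/bin/env bash
# Print the Scala and Kafka versions encoded in a kafka_<scala>-<kafka>.jar file name,
# e.g. kafka_2.10-0.8.2-beta.jar -> scala=2.10 kafka=0.8.2-beta
kafka_jar_version() {
  local name
  name=$(basename "$1" .jar)   # drop the directory and the .jar suffix
  name=${name#kafka_}          # drop the leading "kafka_"
  # Scala version: up to the first "-"; Kafka version: everything after it
  printf 'scala=%s kafka=%s\n' "${name%%-*}" "${name#*-}"
}

# Print the versions of every broker jar in a lib directory
# (default: the path used in the Docker image).
kafka_versions_in() {
  local dir=${1:-/usr/share/java/kafka} jar
  for jar in "$dir"/kafka_*.jar; do
    [ -e "$jar" ] || continue          # glob matched nothing
    case $jar in
      *-sources.jar|*-javadoc.jar|*-test.jar) continue ;;   # not the broker jar
    esac
    printf '%s: ' "$(basename "$jar")"
    kafka_jar_version "$jar"
  done
}
```

For example, inside the container: kafka_versions_in /usr/share/java/kafka, or for an Apache tarball: kafka_versions_in kafka/libs.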

Deploying across containers

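The configuration above only works inside a single container, which is not very practical. The reason is that Kafka's advertised address is localhost (127.0.0.1). A client in another container is told to connect to 127.0.0.1:9092, and that address points back at the client's own container.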

To reach Kafka from other containers, you have to go through the Docker network, so change the configuration as follows:

```yaml
version: '2'
services:
  zookeeper:
    image: confluentinc/cp-zookeeper
    container_name: zookeeper
    mem_limit: 1024M
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
  kafka:
    image: confluentinc/cp-kafka
    container_name: kafka
    mem_limit: 1024M
    depends_on:
      - zookeeper
    environment:
      KAFKA_BROKER_NO: 1
      KAFKA_ADVERTISED_HOST_NAME: domain_name # changed
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://domain_name:9092 # changed
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_HEAP_OPTS: "-Xmx512M -Xms16M"
```

After making the change, restart docker-compose. Topics are not persisted across a ZooKeeper restart, so you also need to create the topic again after each restart.
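Because the topic has to be recreated after every restart, an idempotent create command is handy. This sketch wraps kafka-topics with its --if-not-exists option, so running it twice is harmless. The function name ensure_topic and the override variable KAFKA_TOPICS are made up for this example. It uses the newer --bootstrap-server form shown earlier.

```bash
#!/usr/bin/env bash
# Create a topic only if it is missing, so the same command can be re-run after
# every restart. Uses the newer --bootstrap-server form of kafka-topics.
# KAFKA_TOPICS (a made-up variable) overrides the tool name, e.g. kafka-topics.sh.
ensure_topic() {
  local server=$1 topic=$2
  local partitions=${3:-1} rf=${4:-1}   # defaults match the single-broker examples
  if [ -z "$server" ] || [ -z "$topic" ]; then
    echo "usage: ensure_topic <bootstrap-server> <topic> [partitions] [replication-factor]" >&2
    return 2
  fi
  # --if-not-exists: skip creation instead of failing when the topic already exists
  "${KAFKA_TOPICS:-kafka-topics}" --bootstrap-server "$server" --create --if-not-exists \
    --topic "$topic" --partitions "$partitions" --replication-factor "$rf"
}
```

For example, from the consumer or producer container started in the next step: ensure_topic domain_name:9092 kafkatest.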

Then start two new containers, each in its own terminal, to simulate access over the network:

```bash
# terminal 1
docker run -it --rm --link kafka:domain_name --network kafka_default --name consumer confluentinc/cp-kafka /bin/bash
# terminal 2
docker run -it --rm --link kafka:domain_name --network kafka_default --name producer confluentinc/cp-kafka /bin/bash
```

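Note that the containers must join the kafka_default network. This name does not come from the image. For each project, docker-compose creates a default network named after the project plus _default. The project name defaults to the name of the directory that holds docker-compose.yml, so kafka_default assumes that directory is called kafka. You can check the actual name with docker network ls.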

Then test in the consumer and producer containers respectively:

```bash
# in the consumer container
kafka-console-consumer --bootstrap-server domain_name:9092 --topic kafkatest --from-beginning
# in the producer container
kafka-console-producer --broker-list domain_name:9092 --topic kafkatest
```

The result is the same as with the single container.

docker-compose.yml for deploying a Kafka cluster

```yaml
# 192.168.1.73 is the Docker host's IP; replace it with your own host's address
version: '3'
services:
  zoo1:
    image: zookeeper
    restart: always
    container_name: zoo1
    ports:
      - "2181:2181"
    environment:
      ZOO_MY_ID: 1
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - ./zoo1/data:/data
      - ./zoo1/datalog:/datalog
  zoo2:
    image: zookeeper
    restart: always
    container_name: zoo2
    ports:
      - "2182:2181"
    environment:
      ZOO_MY_ID: 2
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - ./zoo2/data:/data
      - ./zoo2/datalog:/datalog
  zoo3:
    image: zookeeper
    restart: always
    container_name: zoo3
    ports:
      - "2183:2181"
    environment:
      ZOO_MY_ID: 3
      ZOO_SERVERS: server.1=zoo1:2888:3888;2181 server.2=zoo2:2888:3888;2181 server.3=zoo3:2888:3888;2181
    volumes:
      - ./zoo3/data:/data
      - ./zoo3/datalog:/datalog
  kafka1:
    image: wurstmeister/kafka
    ports:
      - "20540:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.1.73
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.1.73:20540
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_PORT: 9092
      KAFKA_BROKER_ID: 1
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_LOG_DIRS: /kafka/logs
    volumes:
      - ./kafka1/logs:/kafka/logs
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    container_name: kafka1
  kafka2:
    image: wurstmeister/kafka
    ports:
      - "9093:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.1.73
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.1.73:9093
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_PORT: 9093
      KAFKA_BROKER_ID: 2
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_LOG_DIRS: /kafka/logs
    volumes:
      - ./kafka2/logs:/kafka/logs
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    container_name: kafka2
  kafka3:
    image: wurstmeister/kafka
    ports:
      - "9094:9092"
    environment:
      KAFKA_ADVERTISED_HOST_NAME: 192.168.1.73
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://192.168.1.73:9094
      KAFKA_ZOOKEEPER_CONNECT: zoo1:2181,zoo2:2181,zoo3:2181
      KAFKA_ADVERTISED_PORT: 9094
      KAFKA_BROKER_ID: 3
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_LOG_DIRS: /kafka/logs
    volumes:
      - ./kafka3/logs:/kafka/logs
    depends_on:
      - zoo1
      - zoo2
      - zoo3
    container_name: kafka3
  ## Image: open-source web UI for managing Kafka clusters
  kafka-manager:
    image: sheepkiller/kafka-manager
    environment:
      ZK_HOSTS: 192.168.1.73
    ports:
      - "21105:9000"
```

Deploying for access from outside the Docker network

If Kafka has to be reachable from outside the Docker network, its port must be mapped to the host.

Again, modify the configuration, this time adding the port mapping:

```yaml
version: '2'
services:
  zookeeper:
    image: confluentinc/cp-zookeeper
    container_name: zookeeper
    mem_limit: 1024M
    environment:
      ZOOKEEPER_CLIENT_PORT: 2181
  kafka:
    image: confluentinc/cp-kafka
    container_name: kafka
    mem_limit: 1024M
    depends_on:
      - zookeeper
    ports: # added
      - "9092:9092" # added
    environment:
      KAFKA_BROKER_NO: 1
      KAFKA_ADVERTISED_HOST_NAME: domain_name
      KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://domain_name:9092
      KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
      KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1
      KAFKA_HEAP_OPTS: "-Xmx512M -Xms16M"
```

Then start two new containers, each in its own terminal, to simulate access from an external network:

```bash
# terminal 1
docker run -it --rm --add-host=domain_name:172.17.0.1 --name consumer confluentinc/cp-kafka /bin/bash
# terminal 2
docker run -it --rm --add-host=domain_name:172.17.0.1 --name producer confluentinc/cp-kafka /bin/bash
```

Here 172.17.0.1 is the Docker host's IP on the default Docker network (note: not kafka_default). You can look it up with:

```bash
ip route
```
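Instead of reading the address off the route table by eye, you can extract it with awk. This is a sketch that assumes the usual iproute2 output format. The function names are made up for this example. On the host, the docker0 route's src field is the host's address on the default bridge. Inside a container attached to that bridge, the same address appears as the default gateway.

```bash
#!/usr/bin/env bash
# Parse `ip route` output (read from stdin) to find the address to use for domain_name.

# On the Docker host: the "src" address of the docker0 route, i.e. the host's IP
# on the default bridge network.
docker0_host_ip() {
  awk '$0 ~ / dev docker0 / {
         for (i = 1; i < NF; i++) if ($i == "src") { print $(i + 1); exit }
       }'
}

# Inside a container on the default bridge: the default gateway, which is the same address.
default_gateway() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}
```

On the host you could then write --add-host=domain_name:"$(ip route | docker0_host_ip)". Docker 20.10 and later also accept --add-host=domain_name:host-gateway, which resolves to the same bridge gateway address.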

Then test in the consumer and producer containers respectively:

```bash
# in the consumer container
kafka-console-consumer --bootstrap-server domain_name:9092 --topic kafkatest --from-beginning
# in the producer container
kafka-console-producer --broker-list domain_name:9092 --topic kafkatest
```

There is one pitfall to watch out for here:

If the host runs a firewall, you need to add a rule that lets the Docker network reach the host's port; otherwise the connection fails. For example:

```bash
# Get the rule line numbers
iptables -L INPUT --line-numbers
# xx is the line number of the last DROP rule; insert the new rule in front of it
iptables -I INPUT xx -p tcp -m tcp -s 172.17.0.0/16 --dport 9092 -j ACCEPT
```
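The manual step of finding the last DROP line can be scripted. This sketch assumes the listing format of iptables -L with --line-numbers and -n, where column 1 is the rule number and column 2 is the target. The function names are made up for this example. allow_docker_port must run as root and changes the live firewall. If the chain has no explicit DROP rule (for example, only a DROP policy), it does nothing and reports that.

```bash
#!/usr/bin/env bash
# Read `iptables -L INPUT --line-numbers -n` output on stdin and print the number of
# the last rule whose target is DROP (nothing is printed if there is none).
last_drop_line() {
  awk '$2 == "DROP" { n = $1 } END { if (n != "") print n }'
}

# Insert an ACCEPT rule for the Docker subnet in front of that DROP rule.
# Defaults follow the example above; must run as root and changes the live firewall.
allow_docker_port() {
  local port=${1:-9092} subnet=${2:-172.17.0.0/16} n
  n=$(iptables -L INPUT --line-numbers -n | last_drop_line)
  if [ -z "$n" ]; then
    echo "no DROP rule in the INPUT chain; leaving the firewall unchanged" >&2
    return 1
  fi
  iptables -I INPUT "$n" -p tcp -m tcp -s "$subnet" --dport "$port" -j ACCEPT
}
```

Usage: allow_docker_port 9092 172.17.0.0/16, run as root on the Docker host.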

The result is the same as in the previous two examples.

Starting services with docker-compose

I have a docker-compose.yml file that contains several containers: redis, postgres, mysql and worker.

While working, I often need to restart it to pick up updates. Is there a good way to restart one container (for example worker) without restarting the others?

Solution: this is simple. Use:

```bash
docker-compose restart worker
```

You can also set how long, in seconds, to wait for the container to stop before it is killed:

```bash
docker-compose restart -t 30 worker
```

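Another common case is that you changed code locally and need to rebuild and redeploy, but you don't want to stop and restart the whole docker-compose stack. In that case you can update just that one service: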

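- First, list the running containers with docker ps (docker ps -a lists all containers, including stopped ones). The command docker container ls -a does the same.

- Stop the container to be updated: docker-compose stop worker (without a service name, docker-compose stop stops every container defined in the YAML file).

- Remove the container stopped in the previous step: docker container rm c2cbf59d4e3c (c2cbf59d4e3c is the worker container's ID).

- List all images: docker images

- Back up the image so you can recover if something goes wrong: docker save worker -o /home/bak/worker-bak.tar

- Delete the image: docker rmi worker

- Put the newly packaged jar where the build expects it (Dockerfile-Worker below copies ./target/worker.jar), then build the new image: docker build . -f Dockerfile-Worker -t worker

- Start it: docker-compose up -d worker

- Restart it: docker-compose restart worker (optional, since up -d has already started the new container)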
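The steps above can be combined into one script. This is only a sketch. The names redeploy and run, and the DRY_RUN switch, are made up for this example. docker-compose rm -f worker replaces the manual steps of looking up the container ID and running docker container rm. The final restart is left out because up -d has just started the new container. The && chain stops at the first failing step, so the old image is never removed if the backup failed.

```bash
#!/usr/bin/env bash
# Sketch of the update steps above for a single compose service.
# DRY_RUN=1 only prints the commands. Service/image name, Dockerfile and
# backup path default to the values used in this article.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

redeploy() {
  local svc=${1:-worker}
  local dockerfile=${2:-Dockerfile-Worker}
  local backup=${3:-/home/bak/$svc-bak.tar}
  # stop at the first failing step so the old image is never deleted without a backup
  run docker-compose stop "$svc" &&
  run docker-compose rm -f "$svc" &&
  run docker save -o "$backup" "$svc" &&
  run docker rmi "$svc" &&
  run docker build . -f "$dockerfile" -t "$svc" &&
  run docker-compose up -d "$svc"
}
```

Run DRY_RUN=1 redeploy worker first to review the commands, then redeploy worker from the directory that holds docker-compose.yml and Dockerfile-Worker.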

Dockerfile-Worker

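```dockerfile
FROM jdk1.8
WORKDIR /app
COPY ./target/worker.jar app.jar
# exec form: java runs as PID 1 and receives the SIGTERM sent by docker-compose stop/restart
CMD ["java", "-Xmx512m", "-Duser.timezone=GMT+8", "-jar", "app.jar"]
```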

docker-compose.yml

```yaml
version: '3'
services:
  redis:
    image: redis
    container_name: docker_redis
    volumes:
      - ./datadir:/data
      - ./conf/redis.conf:/usr/local/etc/redis/redis.conf
      - ./logs:/logs
    ports:
      - "20520:6379"
  mysql-db:
    container_name: mysql-docker # container name
    image: mysql:5.7.16 # image and version
    restart: always
    command: --default-authentication-plugin=mysql_native_password # this line fixes the "cannot connect" problem
    ports:
      - "3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: ${MYSQL_ROOT_PASSWORD}
      MYSQL_ROOT_HOST: ${MYSQL_ROOT_HOST}
    volumes:
      - "./mysql/data:/var/lib/mysql" # mount the data directory
      - "./mysql/config:/etc/mysql/conf.d" # mount the config directory
  register-center:
    image: register-center
    volumes:
      - /data/logs:/app/logs
    mem_limit: 600m
    extra_hosts:
      - 'node1.nifi-dev.com:192.168.1.10'
      - 'node2.nifi-dev.com:192.168.1.10'
      - 'node3.nifi-dev.com:192.168.1.10'
    environment:
      - CUR_ENV=sit
    ports:
      - "20741:8761"
  config-center:
    image: config-center
    volumes:
      - /data/logs:/app/logs
      - /data/skywalking/agent:/agent
    mem_limit: 2000m
    extra_hosts:
      - 'node1.nifi-dev.com:192.168.1.10'
      - 'node2.nifi-dev.com:192.168.1.10'
      - 'node3.nifi-dev.com:192.168.1.10'
    depends_on:
      - register-center
    environment:
      - EUREKA_SERVER_LIST=http://register-center:8761/eureka/
    command: /wait-for.sh register-center:8761/eureka/apps -- java -javaagent:/agent/skywalking-agent.jar -Dskywalking.agent.service_name=config-center -Dskywalking.collector.backend_service=192.168.1.147:20764 -Xmx256m -jar /app/app.jar --server.port=8760
  worker:
    image: worker
    volumes:
      - /data/logs:/app/logs
    mem_limit: 600m
    extra_hosts:
      - 'node1.nifi-dev.com:192.168.1.10'
      - 'node2.nifi-dev.com:192.168.1.10'
      - 'node3.nifi-dev.com:192.168.1.10'
    environment:
      - CUR_ENV=sit
    ports:
      # NOTE: host port 20741 is already published by register-center; change one of them
      - "20741:8761"
```