- 一、K8S安装篇

- 2.二进制高可用安装k8s集群(生产级)

- 10.96.0.是k8s service的网段,如果说需要更改k8s service网段,那就需要更改10.96.0.1,

# 如果不是高可用集群,192.168.68.48为Master01的IP - 二、K8S基础篇

- 三、K8S进阶篇

- 创建目录

- 暴露服务

- 重启服务

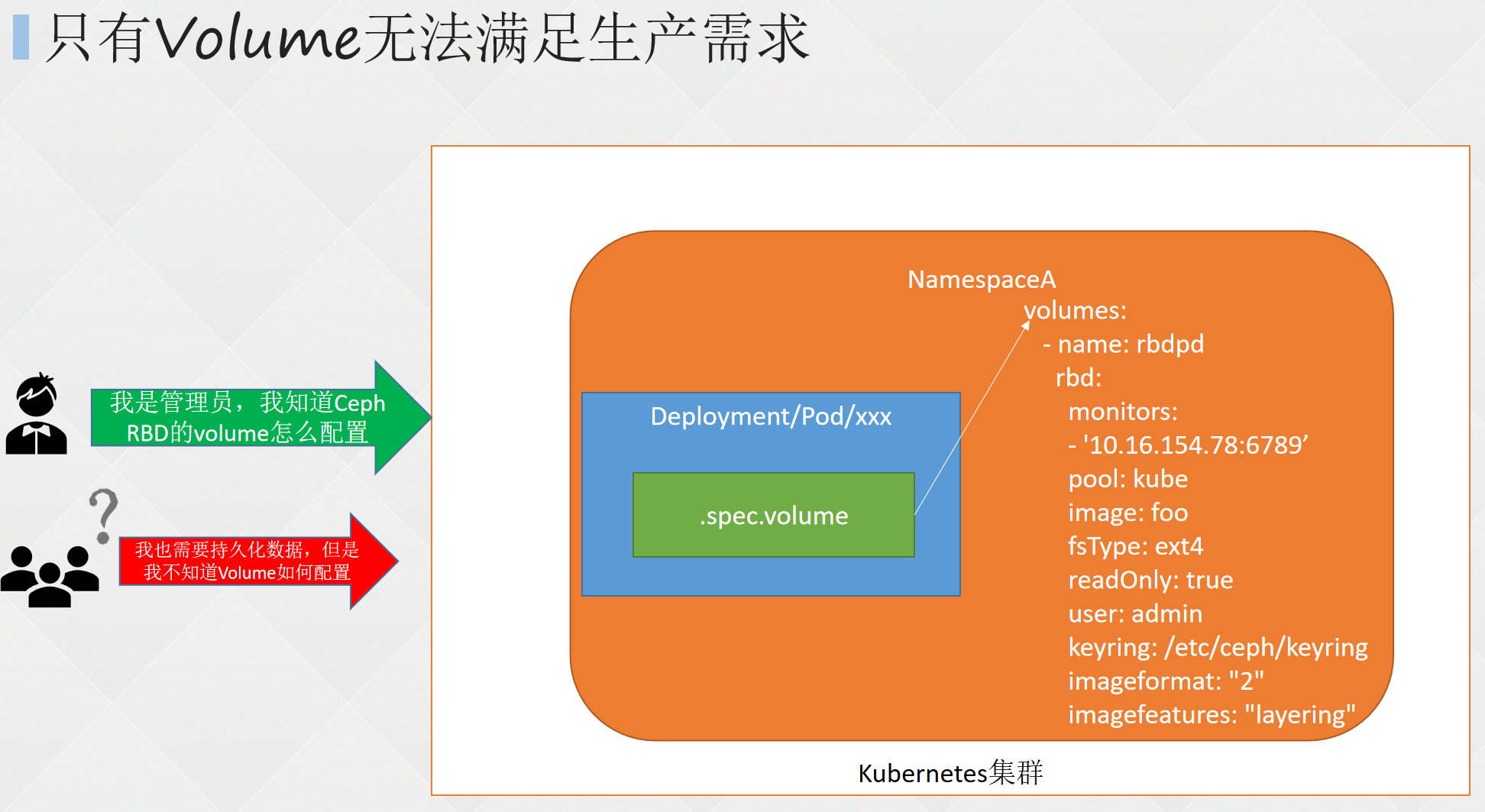

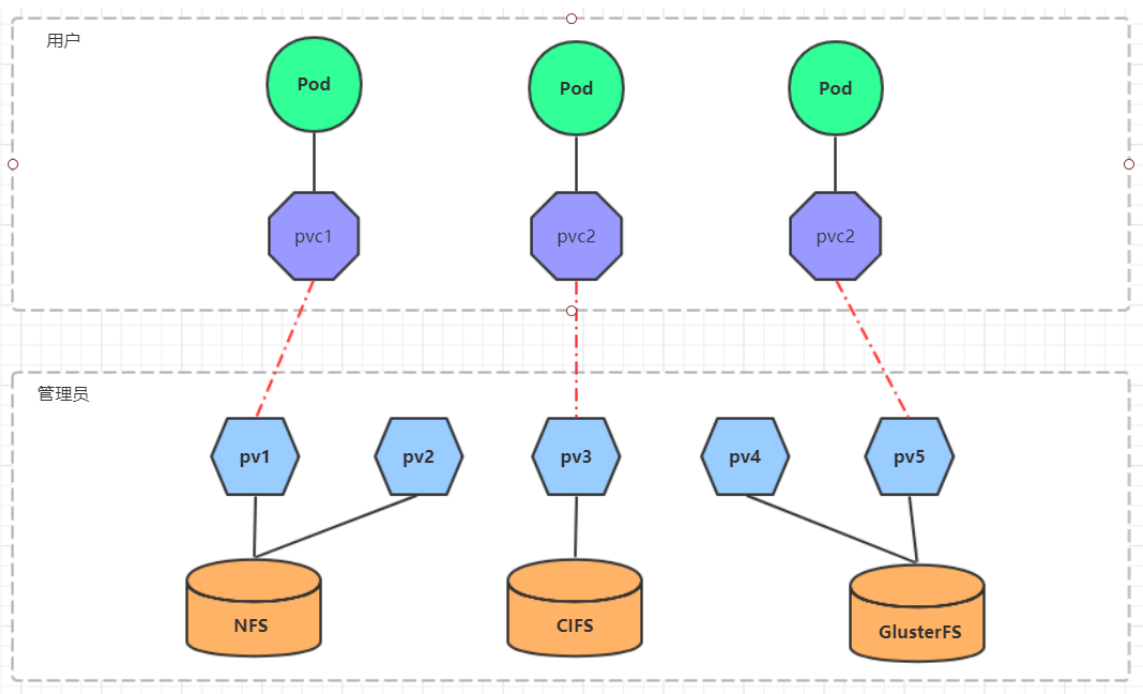

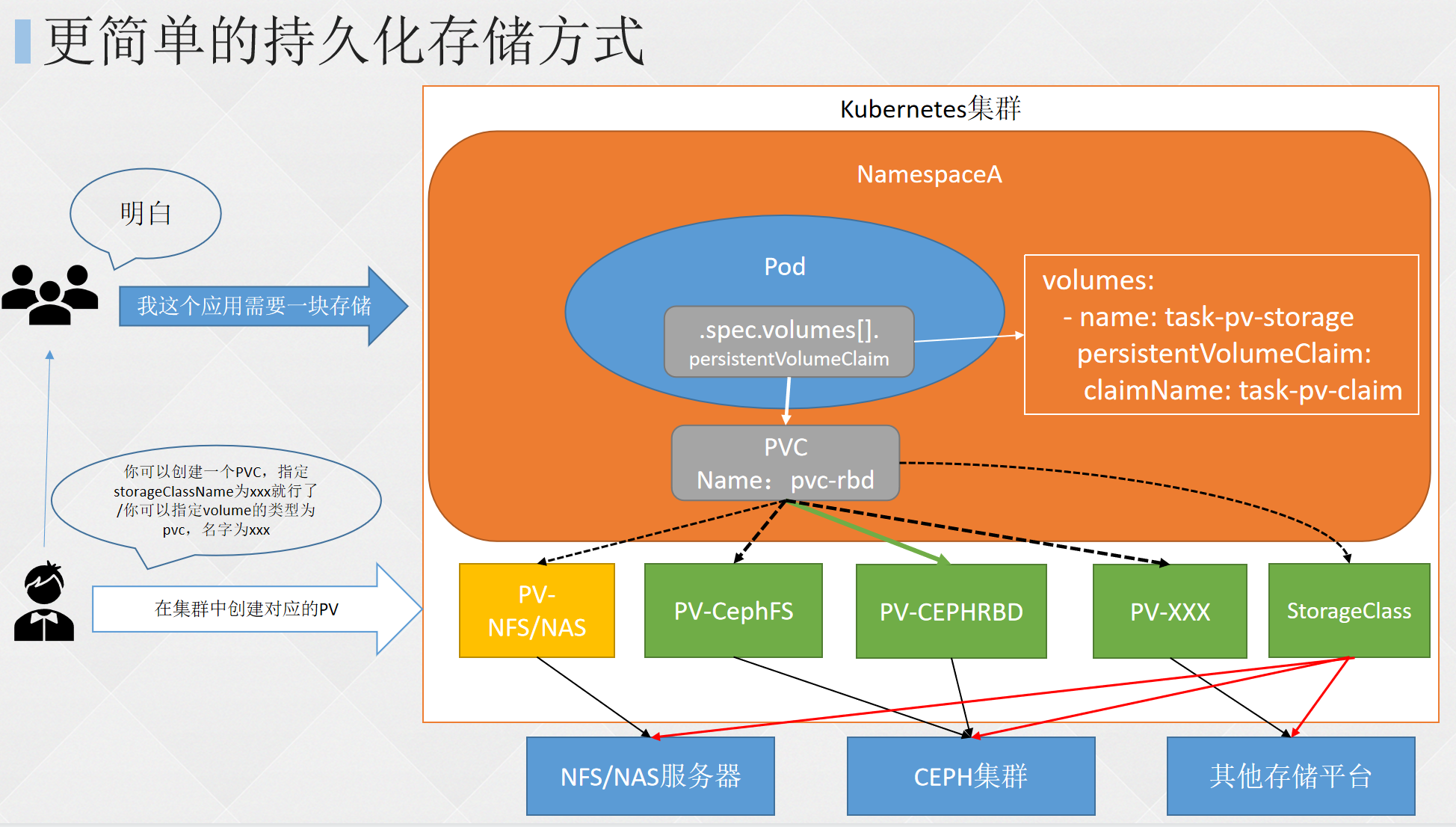

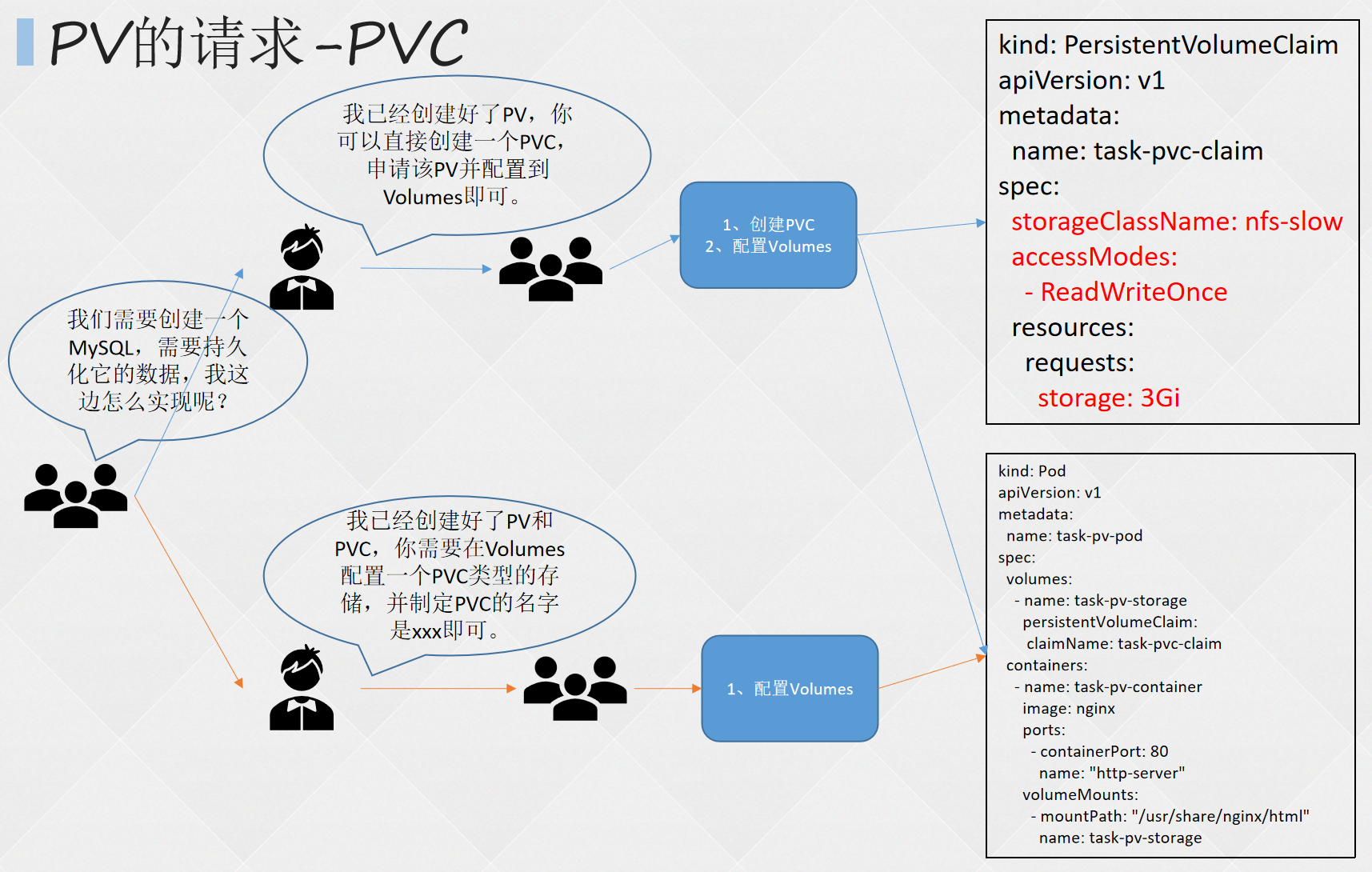

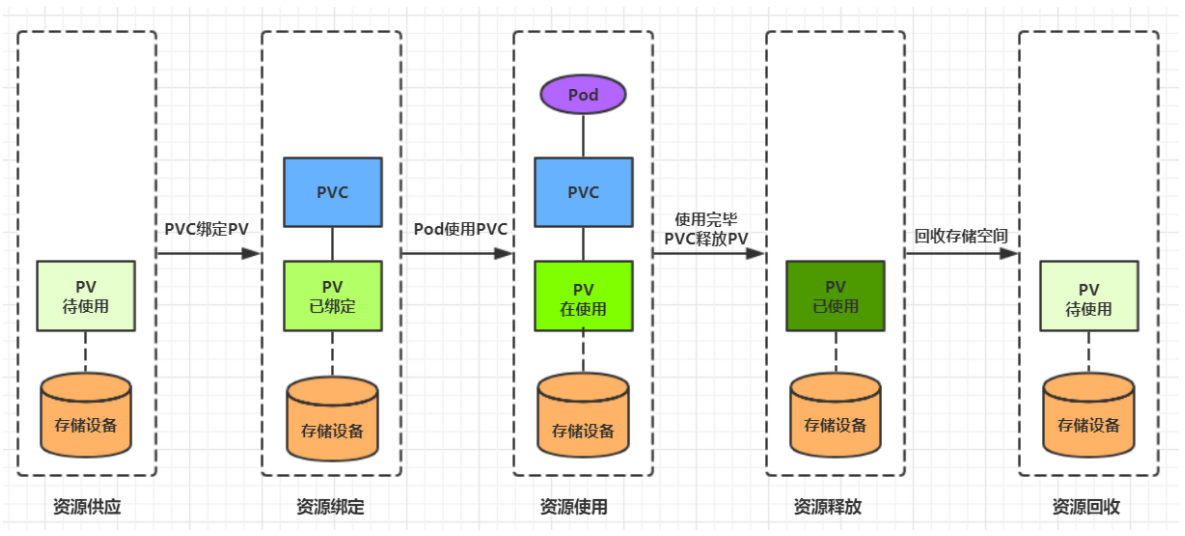

- 创建 pv

- 查看pv

- 创建pvc

- 查看pvc

- 查看pv

- 创建pod

- 查看pod

- 查看pvc

- 查看pv

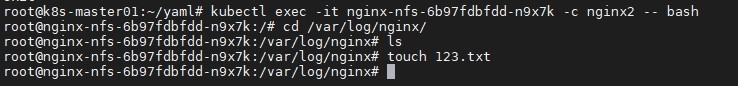

- 查看nfs中的文件存储

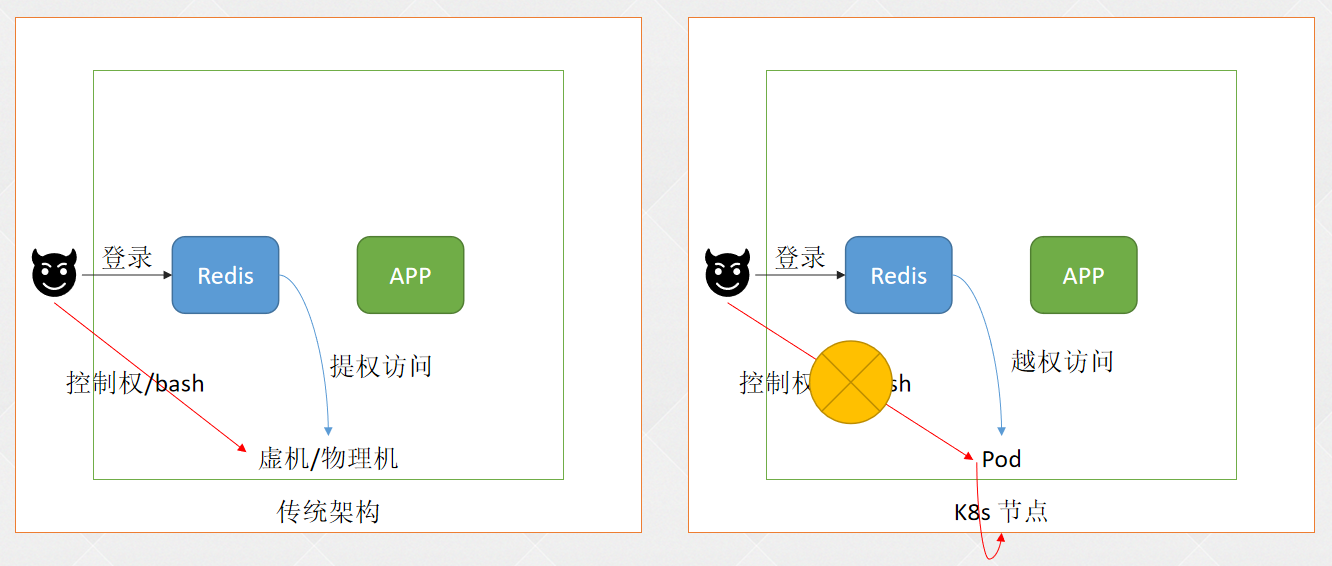

- 3.高级准入控制

- 4.细粒度权限控制

- 四、K8S高级篇

- 五、K8S运维篇

I. K8S Installation

1. High-availability installation of a k8s cluster with kubeadm

0. Network ranges

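Three network ranges are involved when installing the cluster:

Host range: the network of the servers on which k8s is installed.

Pod range: the range used by k8s Pods, equivalent to container IPs.

Service range: the range used by k8s Services; Services handle communication between containers in the cluster.

The Service range is usually set to 10.96.0.0/12.

The Pod range is usually set to 10.244.0.0/16 or 172.16.0.0/12.

The host range might be 192.168.0.0/24.

Note that these three ranges must not overlap in any way.

For example, if the host IPs are 10.105.0.x,

then the Service range cannot be 10.96.0.0/12, because the usable IPs of 10.96.0.0/12 are:

10.96.0.1 ~ 10.111.255.254

10.105 lies inside this range, so the two ranges overlap and the Service range has to be changed.

It can be changed to 192.168.0.0/16 (note: if the Service range starts with 192.168, the prefix length should be 16 rather than 12, because with a 12-bit prefix the range starts at 192.160.0.1, not 192.168.0.1).

The same applies to the other ranges: none of them may overlap. Ranges can be calculated at http://tools.jb51.net/aideddesign/ip_net_calc/.

The general recommendation is therefore to make the first octet differ. For example, if your hosts start with 192, the Service range can be 10.96.0.0/12.

If your hosts start with 10, change the Service range to 192.168.0.0/16.

If your hosts start with 172, change the Pod range to 192.168.0.0/16.

In short, pick one range each from the 10, 172, and 192 families. When the first octets differ, the ranges cannot conflict and no calculation is needed.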
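The overlap rule can also be checked mechanically. The following is a minimal sketch and not part of the original procedure: the helper names `ip_to_int`, `int_to_ip`, `cidr_range`, and `cidrs_overlap` are made up for illustration, and the code assumes well-formed IPv4 CIDRs without leading zeros in the octets.

```shell
# Hypothetical helpers for illustration only.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS; IFS=.; set -- $1; IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int_to_ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/12.
cidr_range() {
  cr_addr=$(ip_to_int "${1%/*}")
  cr_prefix=${1#*/}
  cr_mask=$(( (0xFFFFFFFF << (32 - $cr_prefix)) & 0xFFFFFFFF ))
  cr_first=$(( $cr_addr & $cr_mask ))
  cr_last=$(( $cr_first | (~$cr_mask & 0xFFFFFFFF) ))
  echo "$cr_first $cr_last"
}

# Succeed (exit status 0) when the two CIDRs share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Hosts in 10.105.0.0/24 collide with the Service range 10.96.0.0/12.
if cidrs_overlap 10.96.0.0/12 10.105.0.0/24; then
  echo "10.96.0.0/12 overlaps 10.105.0.0/24"
fi

# A /12 written as 192.168.0.0/12 really starts at 192.160.0.0.
set -- $(cidr_range 192.168.0.0/12)
echo "192.168.0.0/12 covers $(int_to_ip "$1") - $(int_to_ip "$2")"
```

Running `cidrs_overlap` once for each pair of the host, Pod, and Service ranges before writing them into the kubeadm configuration is one way to catch a conflict early.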

1.1 Basic environment configuration for kubeadm

1. Change the apt sources

On all nodes, edit the sources file with vim /etc/apt/sources.list:

    deb http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse
    deb http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse
    deb-src http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse

Reference: Ubuntu mirror, download address, and installation guide on the Alibaba open source mirror site (aliyun.com)

2. Edit the hosts file

On all nodes, edit the file with vim /etc/hosts:

    192.168.68.20 k8s-m01
    192.168.68.21 k8s-m02
    192.168.68.22 k8s-m03
    192.168.68.18 k8s-master-lb # If this is not a high-availability cluster, this is the IP of Master01
    192.168.68.23 k8s-n01
    192.168.68.24 k8s-n02
    192.168.68.25 k8s-n03

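3. Disable the swap partition

Configure this on all nodes.

Disable temporarily: swapoff -a

Disable permanently: edit /etc/fstab (vim /etc/fstab), comment out the swap entry, and reboot the system.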
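Commenting out the swap entry can also be scripted. The sketch below is one possible approach rather than the original author's method; `comment_out_swap` is a hypothetical helper, and it is demonstrated on a temporary file instead of the real /etc/fstab.

```shell
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
# sed keeps a backup of the original next to it with a .bak suffix.
comment_out_swap() {
  sed -E -i.bak '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a throwaway file with made-up entries.
demo_fstab=$(mktemp)
printf '%s\n' \
  'UUID=1234-abcd / ext4 defaults 0 1' \
  '/swap.img none swap sw 0 0' > "$demo_fstab"
comment_out_swap "$demo_fstab"
cat "$demo_fstab"
```

On a real node the function would be pointed at /etc/fstab as root, after `swapoff -a`, and the result reviewed before rebooting.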

4. Time synchronization

On all nodes:

    apt install ntpdate -y
    rm -rf /etc/localtime
    ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
    ntpdate cn.ntp.org.cn
    # Add the following entry with crontab -e
    */5 * * * * /usr/sbin/ntpdate time2.aliyun.com
    # Synchronize at boot as well: add the following to /etc/rc.local (vim /etc/rc.local)
    ntpdate cn.ntp.org.cn

5. Configure limits

On all nodes, run ulimit -SHn 65535, then edit the limits file with vim /etc/security/limits.conf.

Append the following at the end of the file:

    * soft nofile 65536
    * hard nofile 131072
    * soft nproc 655350
    * hard nproc 655350
    * soft memlock unlimited
    * hard memlock unlimited
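A soft limit may not be larger than the matching hard limit, so it can be worth checking the entries before logging in again. The following is a small sketch under that assumption; `check_limits` is a hypothetical helper, it only compares numeric values (entries set to `unlimited` are skipped), and the demonstration uses a temporary file with sample values.

```shell
# Hypothetical helper: report entries whose soft limit exceeds the hard limit.
# Exit status is 0 when every numeric soft limit is <= its hard limit.
check_limits() {
  awk '
    /^[ \t]*#/ || NF < 4 { next }            # skip comments and short lines
    $2 == "soft" { soft[$1 " " $3] = $4 }    # key: "<domain> <item>"
    $2 == "hard" { hard[$1 " " $3] = $4 }
    END {
      bad = 0
      for (k in soft) {
        if ((k in hard) && soft[k] != "unlimited" && hard[k] != "unlimited" && (soft[k] + 0 > hard[k] + 0)) {
          printf "soft > hard for %s: %s > %s\n", k, soft[k], hard[k]
          bad = 1
        }
      }
      exit bad
    }
  ' "$1"
}

# Demonstration on a throwaway file rather than /etc/security/limits.conf.
demo_limits=$(mktemp)
printf '%s\n' '* soft nofile 65536' '* hard nofile 131072' > "$demo_limits"
check_limits "$demo_limits" && echo "limits are consistent"
```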

6. Modify kernel parameters

    cat >/etc/sysctl.conf <<EOF
    # Controls source route verification
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.ip_nonlocal_bind = 1
    net.ipv4.ip_forward = 1
    # Do not accept source routing
    net.ipv4.conf.default.accept_source_route = 0
    # Controls the System Request debugging functionality of the kernel
    kernel.sysrq = 0
    # Controls whether core dumps will append the PID to the core filename.
    # Useful for debugging multi-threaded applications.
    kernel.core_uses_pid = 1
    # Controls the use of TCP syncookies
    net.ipv4.tcp_syncookies = 1
    # Disable netfilter on bridges.
    net.bridge.bridge-nf-call-ip6tables = 0
    net.bridge.bridge-nf-call-iptables = 0
    net.bridge.bridge-nf-call-arptables = 0
    # Controls the default maximum size of a message queue
    kernel.msgmnb = 65536
    # Controls the maximum size of a message, in bytes
    kernel.msgmax = 65536
    # Controls the maximum shared segment size, in bytes
    kernel.shmmax = 68719476736
    # Controls the maximum number of shared memory segments, in pages
    kernel.shmall = 4294967296
    # TCP kernel parameters
    net.ipv4.tcp_mem = 786432 1048576 1572864
    net.ipv4.tcp_rmem = 4096 87380 4194304
    net.ipv4.tcp_wmem = 4096 16384 4194304
    net.ipv4.tcp_window_scaling = 1
    net.ipv4.tcp_sack = 1
    # socket buffer
    net.core.wmem_default = 8388608
    net.core.rmem_default = 8388608
    net.core.rmem_max = 16777216
    net.core.wmem_max = 16777216
    net.core.netdev_max_backlog = 262144
    net.core.somaxconn = 20480
    net.core.optmem_max = 81920
    # TCP conn
    net.ipv4.tcp_max_syn_backlog = 262144
    net.ipv4.tcp_syn_retries = 3
    net.ipv4.tcp_retries1 = 3
    net.ipv4.tcp_retries2 = 15
    # tcp conn reuse
    net.ipv4.tcp_timestamps = 0
    net.ipv4.tcp_tw_reuse = 0
    net.ipv4.tcp_tw_recycle = 0
    net.ipv4.tcp_fin_timeout = 1
    net.ipv4.tcp_max_tw_buckets = 20000
    net.ipv4.tcp_max_orphans = 3276800
    net.ipv4.tcp_synack_retries = 1
    net.ipv4.tcp_syncookies = 1
    # keepalive conn
    net.ipv4.tcp_keepalive_time = 300
    net.ipv4.tcp_keepalive_intvl = 30
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.ip_local_port_range = 10001 65000
    # swap
    vm.overcommit_memory = 0
    vm.swappiness = 10
    #net.ipv4.conf.eth1.rp_filter = 0
    #net.ipv4.conf.lo.arp_ignore = 1
    #net.ipv4.conf.lo.arp_announce = 2
    #net.ipv4.conf.all.arp_ignore = 1
    #net.ipv4.conf.all.arp_announce = 2
    EOF

Install common tools:

    apt install wget jq psmisc vim net-tools telnet lvm2 git -y

Install ipvsadm on all nodes:

    apt install ipvsadm ipset conntrack -y
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack
    vim /etc/modules-load.d/ipvs.conf
    # Add the following content
    ip_vs
    ip_vs_lc
    ip_vs_wlc
    ip_vs_rr
    ip_vs_wrr
    ip_vs_lblc
    ip_vs_lblcr
    ip_vs_dh
    ip_vs_sh
    ip_vs_fo
    ip_vs_nq
    ip_vs_sed
    ip_vs_ftp
    nf_conntrack
    ip_tables
    ip_set
    xt_set
    ipt_set
    ipt_rpfilter
    ipt_REJECT
    ipip

Then run systemctl enable --now systemd-modules-load.service.

Enable the kernel parameters that a k8s cluster requires. Configure the k8s kernel settings on all nodes:

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.ipv4.ip_forward = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.bridge.bridge-nf-call-ip6tables = 1
    fs.may_detach_mounts = 1
    net.ipv4.conf.all.route_localnet = 1
    vm.overcommit_memory=1
    vm.panic_on_oom=0
    fs.inotify.max_user_watches=89100
    fs.file-max=52706963
    fs.nr_open=52706963
    net.netfilter.nf_conntrack_max=2310720
    net.ipv4.tcp_keepalive_time = 600
    net.ipv4.tcp_keepalive_probes = 3
    net.ipv4.tcp_keepalive_intvl =15
    net.ipv4.tcp_max_tw_buckets = 36000
    net.ipv4.tcp_tw_reuse = 1
    net.ipv4.tcp_max_orphans = 327680
    net.ipv4.tcp_orphan_retries = 3
    net.ipv4.tcp_syncookies = 1
    net.ipv4.tcp_max_syn_backlog = 16384
    net.ipv4.ip_conntrack_max = 65536
    net.ipv4.tcp_timestamps = 0
    net.core.somaxconn = 16384
    EOF

    sysctl --system

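After the kernel settings are in place on all nodes, reboot the servers and make sure the modules are still loaded after the reboot:

    reboot

Check:

    lsmod | grep --color=auto -e ip_vs -e nf_conntrack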
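The same check can be turned into a list of what is missing. This is a sketch only: `missing_modules` is a hypothetical helper that reads a file in the format of /proc/modules, and the demonstration uses a sample file with made-up sizes. Modules compiled into the kernel do not appear in /proc/modules, so a reported name is a hint to investigate rather than proof of a problem.

```shell
# Hypothetical helper: print each requested module that is absent from a
# /proc/modules-style listing (module name is the first field of each line).
missing_modules() {
  mm_file=$1
  shift
  for mm_name in "$@"; do
    grep -q "^${mm_name} " "$mm_file" || echo "$mm_name"
  done
}

# Demonstration on a sample listing; on a node, pass /proc/modules instead.
demo_modules=$(mktemp)
printf '%s\n' \
  'ip_vs_rr 16384 0 - Live 0x0' \
  'ip_vs 155648 2 ip_vs_rr, Live 0x0' > "$demo_modules"
missing_modules "$demo_modules" ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```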

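8. Passwordless SSH from Master01 to the other nodes

During installation, configuration files and certificates are all generated on Master01, and the cluster is also managed from Master01. On Alibaba Cloud or AWS, a separate kubectl server is required. Configure the keys as follows:

    ssh-keygen -t rsa -f /root/.ssh/id_rsa -C "192.168.68.20@k8s-m01" -N ""
    for i in k8s-m01 k8s-m02 k8s-m03 k8s-n01 k8s-n02 k8s-n03; do ssh-copy-id -i /root/.ssh/id_rsa.pub $i; done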

1.2 Installing the basic components

1. Install docker on all nodes

    apt install docker.io -y

Tips:

Newer versions of kubelet recommend systemd, so docker's CgroupDriver can be changed to systemd.

registry-mirrors configures an image accelerator.

    mkdir -p /etc/docker
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://zksmnpbq.mirror.aliyuncs.com"]
    }
    EOF

Enable docker at boot: **systemctl daemon-reload && systemctl enable --now docker**

2. Install the k8s components on all nodes

    apt install kubeadm kubelet kubectl -y

If the packages cannot be downloaded, configure the k8s package source first (step 3 below).

The default pause image is pulled from the gcr.io registry, which may be unreachable from mainland China, so configure kubelet to use the pause image from Alibaba Cloud:

    # Check the kubelet configuration
    systemctl status kubelet
    cd /etc/systemd/system/kubelet.service.d
    # Add Environment="KUBELET_POD_INFRA_CONTAINER= --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.4.1" as shown below
    cat 10-kubeadm.conf
    # Note: This dropin only works with kubeadm and kubelet v1.11+
    [Service]
    Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
    Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
    Environment="KUBELET_CGROUP_ARGS=--system-reserved=memory=10Gi --kube-reserved=memory=400Mi --eviction-hard=imagefs.available<15%,memory.available<300Mi,nodefs.available<10%,nodefs.inodesFree<5% --cgroup-driver=systemd"
    Environment="KUBELET_POD_INFRA_CONTAINER= --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.1"
    # This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically
    EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
    # This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use
    # the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.
    EnvironmentFile=-/etc/sysconfig/kubelet
    ExecStart=
    ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_CGROUP_ARGS $KUBELET_EXTRA_ARGS
    # Restart the kubelet service
    systemctl daemon-reload
    systemctl restart kubelet

3. Configure the k8s package source on all nodes

    apt-get update && apt-get install -y apt-transport-https
    curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
    vim /etc/apt/sources.list.d/kubernetes.list
    # Add the following line to the file
    deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
    apt-get update
    apt-get install -y kubelet=1.20.15-00 kubeadm=1.20.15-00 kubectl=1.20.15-00

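1.3 Installing the high-availability components

(Note: if this is not a high-availability cluster, haproxy and keepalived do not need to be installed.)

On a public cloud, use the cloud's own load balancer, such as Alibaba Cloud SLB or Tencent Cloud CLB, in place of haproxy and keepalived, because most public clouds do not support keepalived. In addition, on Alibaba Cloud the kubectl client cannot be placed on a master node. Tencent Cloud is the recommended choice, because Alibaba Cloud SLB has a loopback problem (servers proxied by the SLB cannot connect back to the SLB), whereas Tencent Cloud has fixed this.

Install HAProxy and KeepAlived on all Master nodes via apt:

    apt install keepalived haproxy -y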

Configure HAProxy on all Master nodes (see the HAProxy documentation for details; the HAProxy configuration is identical on all Master nodes). Edit the file with vim /etc/haproxy/haproxy.cfg:

    global
      maxconn 2000
      ulimit-n 16384
      log 127.0.0.1 local0 err
      stats timeout 30s

    defaults
      log global
      mode http
      option httplog
      timeout connect 5000
      timeout client 50000
      timeout server 50000
      timeout http-request 15s
      timeout http-keep-alive 15s

    frontend monitor-in
      bind *:33305
      mode http
      option httplog
      monitor-uri /monitor

    frontend k8s-master
      bind 0.0.0.0:16443
      bind 127.0.0.1:16443
      mode tcp
      option tcplog
      tcp-request inspect-delay 5s
      default_backend k8s-master

    backend k8s-master
      mode tcp
      option tcplog
      option tcp-check
      balance roundrobin
      default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
      server k8s-m01 192.168.68.20:6443 check
      server k8s-m02 192.168.68.21:6443 check
      server k8s-m03 192.168.68.22:6443 check

Configure KeepAlived on all Master nodes. The configuration differs per node, so take care to tell them apart.

Edit /etc/keepalived/keepalived.conf with vim /etc/keepalived/keepalived.conf, paying attention to each node's IP and network interface (the interface parameter).

Configuration for the M01 node:

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        mcast_src_ip 192.168.68.20
        virtual_router_id 51
        priority 101
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.18
        }
        track_script {
            chk_apiserver
        }
    }

Configuration for the M02 node:

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.68.21
        virtual_router_id 51
        priority 100
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.18
        }
        track_script {
            chk_apiserver
        }
    }

Configuration for the M03 node:

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
        script_user root
        enable_script_security
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.68.22
        virtual_router_id 51
        priority 100
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.18
        }
        track_script {
            chk_apiserver
        }
    }
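The three keepalived configurations differ only in state, mcast_src_ip, and priority, so they can be generated from one template. The sketch below is an illustration, not the original author's tooling: `render_keepalived` is a hypothetical helper, and it assumes the interface is ens33 on every node, as in the listings above.

```shell
# Hypothetical helper: print a keepalived.conf for one node.
# Arguments: $1 = state (MASTER or BACKUP), $2 = node IP, $3 = priority.
render_keepalived() {
  cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
    script_user root
    enable_script_security
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state $1
    interface ens33
    mcast_src_ip $2
    virtual_router_id 51
    priority $3
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.68.18
    }
    track_script {
        chk_apiserver
    }
}
EOF
}

# Show only the lines that vary between nodes, here for M02.
render_keepalived BACKUP 192.168.68.21 100 | grep -E 'state|mcast_src_ip|priority'
```

Redirecting the output to /etc/keepalived/keepalived.conf on each node, with that node's values, would reproduce the three files shown above.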

Configure the KeepAlived health check script on all master nodes. Create it with vim /etc/keepalived/check_apiserver.sh:

    #!/bin/bash

    err=0
    for k in $(seq 1 3)
    do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi

Then make it executable:

    chmod +x /etc/keepalived/check_apiserver.sh

Start haproxy and keepalived:

    systemctl daemon-reload
    systemctl enable --now haproxy
    systemctl enable --now keepalived
    systemctl restart keepalived haproxy

Important: if keepalived and haproxy are installed, test that keepalived works correctly.

Test the VIP:

    root:kubelet.service.d/ # ping 192.168.68.18 -c 4
    PING 192.168.68.18 (192.168.68.18) 56(84) bytes of data.
    64 bytes from 192.168.68.18: icmp_seq=1 ttl=64 time=0.032 ms
    64 bytes from 192.168.68.18: icmp_seq=2 ttl=64 time=0.043 ms
    64 bytes from 192.168.68.18: icmp_seq=3 ttl=64 time=0.033 ms
    64 bytes from 192.168.68.18: icmp_seq=4 ttl=64 time=0.038 ms

    --- 192.168.68.18 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 3075ms
    rtt min/avg/max/mdev = 0.032/0.036/0.043/0.004 ms

    root:~/ # telnet 192.168.68.18 16443
    Trying 192.168.68.18...
    Connected to 192.168.68.18.
    Escape character is '^]'.
    Connection closed by foreign host.

1.4. Cluster initialization

1. Run the following on the master01 node only

Create the kubeadm-config.yaml configuration file on the M01 node as shown below.

Master01 (note: if this is not a high-availability cluster, change 192.168.68.18:16443 to the address of m01 and change 16443 to the apiserver port, which is 6443 by default.

Also change the value of kubernetesVersion to match the kubeadm version on your own servers, which you can check with kubeadm version.)

Note: in the file below, the host range, the podSubnet range, and the serviceSubnet range must not overlap.

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: 7t2weq.bjbawausm0jaxury
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.68.20
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: k8s-m01
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      certSANs:
      - 192.168.68.18
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: 192.168.68.18:16443
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
    kind: ClusterConfiguration
    kubernetesVersion: v1.23.5
    networking:
      dnsDomain: cluster.local
      podSubnet: 172.16.0.0/12
      serviceSubnet: 10.96.0.0/16
    scheduler: {}
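Because kubernetesVersion has to match the installed kubeadm, a quick comparison before running the init can save a failed attempt. This is a sketch only: `config_version` and `versions_match` are hypothetical helpers, the parsing assumes kubernetesVersion is a top-level key written on a single line as in the file above, and the demonstration uses a temporary file.

```shell
# Hypothetical helper: print the kubernetesVersion value from a kubeadm config file.
config_version() {
  awk '$1 == "kubernetesVersion:" { print $2; exit }' "$1"
}

# Hypothetical helper: succeed when the config file requests exactly version $2.
versions_match() {
  [ "$(config_version "$1")" = "$2" ]
}

# Demonstration with a throwaway file; on Master01 the call would be, for example:
#   versions_match kubeadm-config.yaml "$(kubeadm version -o short)"
demo_cfg=$(mktemp)
printf '%s\n' 'kind: ClusterConfiguration' 'kubernetesVersion: v1.23.5' > "$demo_cfg"
if versions_match "$demo_cfg" v1.23.5; then
  echo "config matches v1.23.5"
else
  echo "config requests $(config_version "$demo_cfg")"
fi
```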

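Update the kubeadm file:

    kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

Copy new.yaml to the other master nodes:

    for i in k8s-m02 k8s-m03; do scp new.yaml $i:/root/; done

Pull the images in advance on all Master nodes to shorten initialization (the other nodes need no configuration changes, not even to the IP address):

    kubeadm config images pull --config /root/new.yaml

2. Enable kubelet at boot on all nodes

    systemctl enable --now kubelet

(If kubelet fails to start, ignore it for now; it starts once initialization succeeds.)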

3. Initialize the Master01 node

Initialize the M01 node. Initialization generates the certificates and configuration files under /etc/kubernetes; afterwards the other Master nodes simply join M01:

    kubeadm init --config /root/new.yaml --upload-certs

If initialization fails, reset and initialize again:

    kubeadm reset -f ; ipvsadm --clear ; rm -rf ~/.kube

A successful initialization produces a token that the other nodes use to join, so record the token printed in the output:

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.68.18:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:d9c52c2db865df54fd9db22e911ffb7adf12d1244103005fc1885933c6d27673 \
        --control-plane --certificate-key 8da0fbf16c11810bb4930846896d21d633af936839c3941890d3c5cf85b98316

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.68.18:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:d9c52c2db865df54fd9db22e911ffb7adf12d1244103005fc1885933c6d27673

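4. Configure the environment variable on Master01 for accessing the Kubernetes cluster:

    cat <<EOF >> /root/.bashrc
    export KUBECONFIG=/etc/kubernetes/admin.conf
    EOF
    cat <<EOF >> /root/.zshrc
    export KUBECONFIG=/etc/kubernetes/admin.conf
    EOF

Check the node status:

    kubectl get node

With this installation method, all system components run as containers in the kube-system namespace. The Pod status can now be checked:

    kubectl get po -n kube-system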

5. Configure k8s command completion

For the current session only:

    apt install -y bash-completion
    source /usr/share/bash-completion/bash_completion
    source <(kubectl completion bash)

To make it permanent, either append the line to ~/.bashrc with echo:

    echo "source <(kubectl completion bash)" >>~/.bashrc

or open ~/.bashrc with vim ~/.bashrc and add the following line:

    source <(kubectl completion bash)

Save and exit, then run:

    source ~/.bashrc

1. kubectl autocompletion

BASH

    apt install -y bash-completion
    source <(kubectl completion bash) # set up autocompletion in the current bash shell; the bash-completion package must be installed first
    echo "source <(kubectl completion bash)" >> ~/.bashrc # add autocompletion permanently to your bash shell
    source ~/.bashrc

You can also use a shorthand alias for kubectl that works with completion:

    alias k=kubectl
    complete -F __start_kubectl k

ZSH

    apt install -y bash-completion
    source <(kubectl completion zsh) # set up autocompletion in the current zsh shell
    echo '[[ $commands[kubectl] ]] && source <(kubectl completion zsh)' >> ~/.zshrc # add autocompletion permanently to your zsh shell
    source ~/.zshrc

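1.5. Master and worker nodes

Note: the following token steps are needed only if the token produced by the init command above has expired. If it has not expired, skip them.

Generate a new token after the old one expires:

    kubeadm token create --print-join-command

A Master additionally needs a new --certificate-key:

    kubeadm init phase upload-certs --upload-certs

If the token has not expired, run the join command directly.

To add the other masters to the cluster, run the following on master02 and master03:

    kubeadm join 192.168.68.18:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:d9c52c2db865df54fd9db22e911ffb7adf12d1244103005fc1885933c6d27673 \
        --control-plane --certificate-key 8da0fbf16c11810bb4930846896d21d633af936839c3941890d3c5cf85b98316

Check the current status:

    kubectl get node

Worker node configuration

Worker nodes mainly run the company's business applications. In production it is not recommended to run Pods other than system components on Master nodes; in a test environment, Master nodes may run Pods to save resources.

    kubeadm join 192.168.68.18:16443 --token 7t2weq.bjbawausm0jaxury \
        --discovery-token-ca-cert-hash sha256:d9c52c2db865df54fd9db22e911ffb7adf12d1244103005fc1885933c6d27673

After all nodes have joined, check the cluster status:

    kubectl get node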

1.6. Installing the Calico network component

calico-etcd.yaml

    ---
    # Source: calico/templates/calico-etcd-secrets.yaml
    # The following contains k8s Secrets for use with a TLS enabled etcd cluster.
    # For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
    apiVersion: v1
    kind: Secret
    type: Opaque
    metadata:
      name: calico-etcd-secrets
      namespace: kube-system
    data:
      # Populate the following with etcd TLS configuration if desired, but leave blank if
      # not using TLS for etcd.
      # The keys below should be uncommented and the values populated with the base64
      # encoded contents of each file that would be associated with the TLS data.
      # Example command for encoding a file contents: cat <file> | base64 -w 0
      # etcd-key: null
      # etcd-cert: null
      # etcd-ca: null
    ---
    # Source: calico/templates/calico-config.yaml
    # This ConfigMap is used to configure a self-hosted Calico installation.
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: calico-config
      namespace: kube-system
    data:
      # Configure this with the location of your etcd cluster.
      etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"
      # If you're using TLS enabled etcd uncomment the following.
      # You must also populate the Secret below with these files.
      etcd_ca: "" # "/calico-secrets/etcd-ca"
      etcd_cert: "" # "/calico-secrets/etcd-cert"
      etcd_key: "" # "/calico-secrets/etcd-key"
      # Typha is disabled.
      typha_service_name: "none"
      # Configure the backend to use.
      calico_backend: "bird"
      # Configure the MTU to use for workload interfaces and tunnels.
      # By default, MTU is auto-detected, and explicitly setting this field should not be required.
      # You can override auto-detection by providing a non-zero value.
      veth_mtu: "0"
      # The CNI network configuration to install on each node. The special
      # values in this config will be automatically populated.
      cni_network_config: |-
        {
          "name": "k8s-pod-network",
          "cniVersion": "0.3.1",
          "plugins": [
            {
              "type": "calico",
              "log_level": "info",
              "log_file_path": "/var/log/calico/cni/cni.log",
              "etcd_endpoints": "__ETCD_ENDPOINTS__",
              "etcd_key_file": "__ETCD_KEY_FILE__",
              "etcd_cert_file": "__ETCD_CERT_FILE__",
              "etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",
              "mtu": __CNI_MTU__,
              "ipam": {
                  "type": "calico-ipam"
              },
              "policy": {
                  "type": "k8s"
              },
              "kubernetes": {
                  "kubeconfig": "__KUBECONFIG_FILEPATH__"
              }
            },
            {
              "type": "portmap",
              "snat": true,
              "capabilities": {"portMappings": true}
            },
            {
              "type": "bandwidth",
              "capabilities": {"bandwidth": true}
            }
          ]
        }
    ---
    # Source: calico/templates/calico-kube-controllers-rbac.yaml
    # Include a clusterrole for the kube-controllers component,
    # and bind it to the calico-kube-controllers serviceaccount.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-kube-controllers
    rules:
      # Pods are monitored for changing labels.
      # The node controller monitors Kubernetes nodes.
      # Namespace and serviceaccount labels are used for policy.
      - apiGroups: [""]
        resources:
          - pods
          - nodes
          - namespaces
          - serviceaccounts
        verbs:
          - watch
          - list
          - get
      # Watch for changes to Kubernetes NetworkPolicies.
      - apiGroups: ["networking.k8s.io"]
        resources:
          - networkpolicies
        verbs:
          - watch
          - list
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-kube-controllers
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-kube-controllers
    subjects:
    - kind: ServiceAccount
      name: calico-kube-controllers
      namespace: kube-system
    ---
    ---
    # Source: calico/templates/calico-node-rbac.yaml
    # Include a clusterrole for the calico-node DaemonSet,
    # and bind it to the calico-node serviceaccount.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: calico-node
    rules:
      # The CNI plugin needs to get pods, nodes, and namespaces.
      - apiGroups: [""]
        resources:
          - pods
          - nodes
          - namespaces
        verbs:
          - get
      # EndpointSlices are used for Service-based network policy rule
      # enforcement.
      - apiGroups: ["discovery.k8s.io"]
        resources:
          - endpointslices
        verbs:
          - watch
          - list
      - apiGroups: [""]
        resources:
          - endpoints
          - services
        verbs:
          # Used to discover service IPs for advertisement.
          - watch
          - list
      # Pod CIDR auto-detection on kubeadm needs access to config maps.
      - apiGroups: [""]
        resources:
          - configmaps
        verbs:
          - get
      - apiGroups: [""]
        resources:
          - nodes/status
        verbs:
          # Needed for clearing NodeNetworkUnavailable flag.
          - patch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: calico-node
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-node
    subjects:
    - kind: ServiceAccount
      name: calico-node
      namespace: kube-system
    ---
    # Source: calico/templates/calico-node.yaml
    # This manifest installs the calico-node container, as well
    # as the CNI plugins and network config on
    # each master and worker node in a Kubernetes cluster.
    kind: DaemonSet
    apiVersion: apps/v1
    metadata:
      name: calico-node
      namespace: kube-system
      labels:
        k8s-app: calico-node
    spec:
      selector:
        matchLabels:
          k8s-app: calico-node
      updateStrategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      template:
        metadata:
          labels:
            k8s-app: calico-node
        spec:
          nodeSelector:
            kubernetes.io/os: linux
          hostNetwork: true
          tolerations:
            # Make sure calico-node gets scheduled on all nodes.
            - effect: NoSchedule
              operator: Exists
            # Mark the pod as a critical add-on for rescheduling.
            - key: CriticalAddonsOnly
              operator: Exists
            - effect: NoExecute
              operator: Exists
          serviceAccountName: calico-node
          # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
          # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
          terminationGracePeriodSeconds: 0
          priorityClassName: system-node-critical
          initContainers:
            # This container installs the CNI binaries
            # and CNI network config file on each node.
            - name: install-cni
              image: registry.cn-beijing.aliyuncs.com/dotbalo/cni:v3.22.0
              command: ["/opt/cni/bin/install"]
              envFrom:
              - configMapRef:
                  # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                  name: kubernetes-services-endpoint
                  optional: true
              env:
                # Name of the CNI config file to create.
                - name: CNI_CONF_NAME
                  value: "10-calico.conflist"
                # The CNI network config to install on each node.
                - name: CNI_NETWORK_CONFIG
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: cni_network_config
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # CNI MTU Config variable
                - name: CNI_MTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Prevents the container from sleeping forever.
                - name: SLEEP
                  value: "false"
              volumeMounts:
                - mountPath: /host/opt/cni/bin
                  name: cni-bin-dir
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
                - mountPath: /calico-secrets
                  name: etcd-certs
              securityContext:
                privileged: true
            # Adds a Flex Volume Driver that creates a per-pod Unix Domain Socket to allow Dikastes
            # to communicate with Felix over the Policy Sync API.
            - name: flexvol-driver
              image: registry.cn-beijing.aliyuncs.com/dotbalo/pod2daemon-flexvol:v3.22.0
              volumeMounts:
              - name: flexvol-driver-host
                mountPath: /host/driver
              securityContext:
                privileged: true
          containers:
            # Runs calico-node container on each Kubernetes node. This
            # container programs network policy and routes on each
            # host.
            - name: calico-node
              image: registry.cn-beijing.aliyuncs.com/dotbalo/node:v3.22.0
              envFrom:
              - configMapRef:
                  # Allow KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT to be overridden for eBPF mode.
                  name: kubernetes-services-endpoint
                  optional: true
              env:
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # Location of the CA certificate for etcd.
                - name: ETCD_CA_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_ca
                # Location of the client key for etcd.
                - name: ETCD_KEY_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_key
                # Location of the client certificate for etcd.
                - name: ETCD_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_cert
                # Set noderef for node controller.
                - name: CALICO_K8S_NODE_REF
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                # Choose the backend to use.
                - name: CALICO_NETWORKING_BACKEND
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: calico_backend
                # Cluster type to identify the deployment type
                - name: CLUSTER_TYPE
                  value: "k8s,bgp"
                # Auto-detect the BGP IP address.
                - name: IP
                  value: "autodetect"
                # Enable IPIP
                - name: CALICO_IPV4POOL_IPIP
                  value: "Always"
                # Enable or Disable VXLAN on the default IP pool.
                - name: CALICO_IPV4POOL_VXLAN
                  value: "Never"
                # Set MTU for tunnel device used if ipip is enabled
                - name: FELIX_IPINIPMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Set MTU for the VXLAN tunnel device.
                - name: FELIX_VXLANMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Set MTU for the Wireguard tunnel device.
                - name: FELIX_WIREGUARDMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # The default IPv4 pool to create on startup if none exists. Pod IPs will be
                # chosen from this range. Changing this value after installation will have
                # no effect. This should fall within `--cluster-cidr`.
                - name: CALICO_IPV4POOL_CIDR
                  value: "POD_CIDR"
                # Disable file logging so `kubectl logs` works.
                - name: CALICO_DISABLE_FILE_LOGGING
                  value: "true"
                # Set Felix endpoint to host default action to ACCEPT.
                - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
                  value: "ACCEPT"
                # Disable IPv6 on Kubernetes.
                - name: FELIX_IPV6SUPPORT
                  value: "false"
                - name: FELIX_HEALTHENABLED
                  value: "true"
              securityContext:
                privileged: true
              resources:
                requests:
                  cpu: 250m
              lifecycle:
                preStop:
                  exec:
                    command:
                    - /bin/calico-node
                    - -shutdown
              livenessProbe:
                exec:
                  command:
                  - /bin/calico-node
                  - -felix-live
                  - -bird-live
                periodSeconds: 10
                initialDelaySeconds: 10
                failureThreshold: 6
                timeoutSeconds: 10
              readinessProbe:
                exec:
                  command:
                  - /bin/calico-node
                  - -felix-ready
                  - -bird-ready
                periodSeconds: 10
                timeoutSeconds: 10
              volumeMounts:
                # For maintaining CNI plugin API credentials.
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
                  readOnly: false
                - mountPath: /lib/modules
                  name: lib-modules
                  readOnly: true
                - mountPath: /run/xtables.lock
                  name: xtables-lock
                  readOnly: false
                - mountPath: /var/run/calico
                  name: var-run-calico
                  readOnly: false
                - mountPath: /var/lib/calico
                  name: var-lib-calico
                  readOnly: false
                - mountPath: /calico-secrets
                  name: etcd-certs
                - name: policysync
                  mountPath: /var/run/nodeagent
                # For eBPF mode, we need to be able to mount the BPF filesystem at /sys/fs/bpf so we mount in the
                # parent directory.
                - name: sysfs
                  mountPath: /sys/fs/
                  # Bidirectional means that, if we mount the BPF filesystem at /sys/fs/bpf it will propagate to the host.
                  # If the host is known to mount that filesystem already then Bidirectional can be omitted.
                  mountPropagation: Bidirectional
                - name: cni-log-dir
                  mountPath: /var/log/calico/cni
                  readOnly: true
          volumes:
            # Used by calico-node.
            - name: lib-modules
              hostPath:
                path: /lib/modules
            - name: var-run-calico
              hostPath:
                path: /var/run/calico
            - name: var-lib-calico
              hostPath:
                path: /var/lib/calico
            - name: xtables-lock
              hostPath:
                path: /run/xtables.lock
                type: FileOrCreate
            - name: sysfs
              hostPath:
                path: /sys/fs/
                type: DirectoryOrCreate
            # Used to install CNI.
            - name: cni-bin-dir
              hostPath:
                path: /opt/cni/bin
            - name: cni-net-dir
              hostPath:
                path: /etc/cni/net.d
            # Used to access CNI logs.
            - name: cni-log-dir
              hostPath:
                path: /var/log/calico/cni
            # Mount in the etcd TLS secrets with mode 400.
            # See https://kubernetes.io/docs/concepts/configuration/secret/
            - name: etcd-certs
              secret:
                secretName: calico-etcd-secrets
                defaultMode: 0400
            # Used to create per-pod Unix Domain Sockets
            - name: policysync
              hostPath:
                type: DirectoryOrCreate
                path: /var/run/nodeagent
            # Used to install Flex Volume Driver
            - name: flexvol-driver-host
              hostPath:
                type: DirectoryOrCreate
                path: /usr/libexec/kubernetes/kubelet-plugins/volume/exec/nodeagent~uds
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-node
      namespace: kube-system
    ---
    # Source: calico/templates/calico-kube-controllers.yaml
    # See https://github.com/projectcalico/kube-controllers
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      # The controllers can only have a single active instance.
      replicas: 1
      selector:
        matchLabels:
          k8s-app: calico-kube-controllers
      strategy:
        type: Recreate
      template:
        metadata:
          name: calico-kube-controllers
          namespace: kube-system
          labels:
            k8s-app: calico-kube-controllers
        spec:
          nodeSelector:
            kubernetes.io/os: linux
          tolerations:
            # Mark the pod as a critical add-on for rescheduling.
            - key: CriticalAddonsOnly
              operator: Exists
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          serviceAccountName: calico-kube-controllers
          priorityClassName: system-cluster-critical
          # The controllers must run in the host network namespace so that
          # it isn't governed by policy that would prevent it from working.
          hostNetwork: true
          containers:
            - name: calico-kube-controllers
              image: registry.cn-beijing.aliyuncs.com/dotbalo/kube-controllers:v3.22.0
              env:
                # The location of the etcd cluster.
                - name: ETCD_ENDPOINTS
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_endpoints
                # Location of the CA certificate for etcd.
                - name: ETCD_CA_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_ca
                # Location of the client key for etcd.
                - name: ETCD_KEY_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_key
                # Location of the client certificate for etcd.
                - name: ETCD_CERT_FILE
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: etcd_cert
                # Choose which controllers to run.
                - name: ENABLED_CONTROLLERS
                  value: policy,namespace,serviceaccount,workloadendpoint,node
              volumeMounts:
                # Mount in the etcd TLS secrets.
                - mountPath: /calico-secrets
                  name: etcd-certs
              livenessProbe:
                exec:
                  command:
                  - /usr/bin/check-status
                  - -l
                periodSeconds: 10
                initialDelaySeconds: 10
                failureThreshold: 6
                timeoutSeconds: 10
              readinessProbe:
                exec:
                  command:
                  - /usr/bin/check-status
                  - -r
                periodSeconds: 10
          volumes:
            # Mount in the etcd TLS secrets with mode 400.
            # See https://kubernetes.io/docs/concepts/configuration/secret/
            - name: etcd-certs
              secret:
                secretName: calico-etcd-secrets
                defaultMode: 0440
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
    ---
    # This manifest creates a Pod Disruption Budget for Controller to allow K8s Cluster Autoscaler to evict
    apiVersion: policy/v1beta1
    kind: PodDisruptionBudget
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      maxUnavailable: 1
      selector:
        matchLabels:
          k8s-app: calico-kube-controllers
    ---
    # Source: calico/templates/calico-typha.yaml
    ---
    # Source: calico/templates/configure-canal.yaml
    ---
    # Source: calico/templates/kdd-crds.yaml

Modify the following places in calico-etcd.yaml:

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.68.20:2379,https://192.168.68.21:2379,https://192.168.68.22:2379"#g' calico-etcd.yaml
ETCD_CA=$(cat /etc/kubernetes/pki/etcd/ca.crt | base64 | tr -d '\n')
ETCD_CERT=$(cat /etc/kubernetes/pki/etcd/server.crt | base64 | tr -d '\n')
ETCD_KEY=$(cat /etc/kubernetes/pki/etcd/server.key | base64 | tr -d '\n')
sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml
sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml
POD_SUBNET=$(cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}')
sed -i 's@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@# value: "172.16.0.0/12"@ value: '"${POD_SUBNET}"'@g' calico-etcd.yaml
kubectl apply -f calico-etcd.yaml

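Deploying Calico with the official calico.yaml (the variant that stores its data in the Kubernetes API datastore), downloaded from https://docs.projectcalico.org/manifests/calico.yaml, printed the following message.

Message:

Warning: policy/v1beta1 PodDisruptionBudget is deprecated in v1.21+, unavailable in v1.25+; use policy/v1 PodDisruptionBudget

Fix: the message is self-explanatory. calico.yaml declares its PodDisruptionBudget with apiVersion policy/v1beta1, which is deprecated from Kubernetes v1.21 and removed in v1.25. Change the apiVersion to

policy/v1 (kind: PodDisruptionBudget)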
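The apiVersion change can be scripted. The following is a minimal sketch, assuming GNU sed and a manifest saved locally; the function name `pdb_to_v1` is hypothetical and not part of Calico or kubectl. It only touches an `apiVersion: policy/v1beta1` line that is immediately followed by `kind: PodDisruptionBudget`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: switch PodDisruptionBudget objects in a manifest
# from policy/v1beta1 to policy/v1. Other policy/v1beta1 kinds are left alone.
pdb_to_v1() {
  local manifest="$1"
  # On a matching apiVersion line, pull in the next line (N) and rewrite
  # the pair only when the next line declares a PodDisruptionBudget.
  sed -i '/^apiVersion: policy\/v1beta1$/{N;s#policy/v1beta1\nkind: PodDisruptionBudget#policy/v1\nkind: PodDisruptionBudget#}' "$manifest"
}

# Usage (assumed file name): pdb_to_v1 calico.yaml && kubectl apply -f calico.yaml
```

Restricting the substitution to the apiVersion/kind pair avoids rewriting unrelated objects that happen to share the API group.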

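Installing Calico with the Kubernetes API datastore, 50 nodes or fewer

1. Download the Calico networking manifest for the Kubernetes API datastore.
curl https://docs.projectcalico.org/manifests/calico.yaml -O

2. If you are using pod CIDR 192.168.0.0/16, skip to the next step. If you are using a different pod CIDR with kubeadm, no changes are required: Calico detects the CIDR automatically from the running configuration. On other platforms, uncomment the CALICO_IPV4POOL_CIDR variable in the manifest and set it to the same value as your chosen pod CIDR.

- name: CALICO_IPV4POOL_CIDR
  value: "172.16.0.0/12"
In calico.yaml, uncomment these two lines and set value to the same CIDR as podSubnet.

3. Customize the manifest as needed.

4. Apply the manifest with the following command.
kubectl apply -f calico.yaml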

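Installing Calico with the Kubernetes API datastore, more than 50 nodes

- Download the Calico networking manifest for the Kubernetes API datastore.

- $ curl https://docs.projectcalico.org/manifests/calico-typha.yaml -o calico.yaml

- If you are using pod CIDR 192.168.0.0/16, skip to the next step. If you are using a different pod CIDR with kubeadm, no changes are required: Calico detects the CIDR automatically from the running configuration. On other platforms, uncomment the CALICO_IPV4POOL_CIDR variable in the manifest and set it to the same value as your chosen pod CIDR.

- Set the replica count in the Deployment named calico-typha to the desired number.

- apiVersion: apps/v1
  kind: Deployment
  metadata:
    name: calico-typha
    …
  spec:
    …
    replicas:

- We recommend at least one replica per 200 nodes and no more than 20 replicas. In production, we recommend at least three replicas to reduce the impact of rolling upgrades and failures. The number of replicas should always be lower than the number of nodes, otherwise rolling upgrades will stall. In addition, Typha only helps with scale when there are fewer Typha instances than nodes.

- Warning: if you set typha_service_name and set the Typha deployment replica count to 0, Felix will not start.

- Customize the manifest if needed.

- Apply the manifest.

- $ kubectl apply -f calico.yaml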
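The Typha sizing guidance above can be turned into a small calculation. This is a sketch only: the function name `typha_replicas` is hypothetical, and the way the rules are combined (ceiling of nodes/200, floor of 3, cap of 20, then forced below the node count) is one reading of the recommendation rather than an official formula.

```shell
#!/usr/bin/env bash
# Hypothetical helper: suggest a Typha replica count for a given node count.
typha_replicas() {
  local nodes="$1"
  local r=$(( (nodes + 199) / 200 ))           # at least one per 200 nodes (ceiling)
  if (( r < 3 )); then r=3; fi                 # production minimum of three
  if (( r > 20 )); then r=20; fi               # no more than 20 replicas
  if (( r >= nodes )); then r=$(( nodes - 1 )); fi  # keep replicas below node count
  if (( r < 1 )); then r=1; fi                 # never suggest 0, Felix would not start
  echo "$r"
}

typha_replicas 1000   # prints 5
```

For a cluster of 50 nodes this yields 3, and for 10000 nodes it is capped at 20.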

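Installing Calico with the etcd datastore

Note: the etcd datastore is not recommended for new installations. It remains an option if you run Calico as the network plugin for both OpenStack and Kubernetes.

- Download the Calico networking manifest for etcd.

- $ curl https://docs.projectcalico.org/manifests/calico-etcd.yaml -o calico.yaml

- If you are using pod CIDR 192.168.0.0/16, skip to the next step. If you are using a different pod CIDR with kubeadm, no changes are required: Calico detects the CIDR automatically from the running configuration. On other platforms, uncomment the CALICO_IPV4POOL_CIDR variable in the manifest and set it to the same value as your chosen pod CIDR.

- In the ConfigMap named calico-config, set the value of etcd_endpoints to the IP address and port of your etcd server.

- Tip: you can specify more than one etcd_endpoint, using commas as delimiters.

- Customize the manifest if needed.

- Apply the manifest with the following command.

- $ kubectl apply -f calico.yaml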

1.7. Deploying Metrics Server and Dashboard

1. Deploying Metrics Server

Pull the image and retag it:

docker pull bitnami/metrics-server:0.5.2

docker tag bitnami/metrics-server:0.5.2 k8s.gcr.io/metrics-server/metrics-server:v0.5.2

vim components0.5.2.yaml

$ cat components0.5.2.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-view: "true"
  name: system:aggregated-metrics-reader
rules:
- apiGroups:
  - metrics.k8s.io
  resources:
  - pods
  - nodes
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    k8s-app: metrics-server
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  ports:
  - name: https
    port: 443
    protocol: TCP
    targetPort: https
  selector:
    k8s-app: metrics-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    k8s-app: metrics-server
  name: metrics-server
  namespace: kube-system
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  strategy:
    rollingUpdate:
      maxUnavailable: 0
  template:
    metadata:
      labels:
        k8s-app: metrics-server
    spec:
      containers:
      - args:
        - --cert-dir=/tmp
        - --secure-port=4443
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --kubelet-use-node-status-port
        - --metric-resolution=15s
        - --kubelet-insecure-tls
        image: k8s.gcr.io/metrics-server/metrics-server:v0.5.2
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /livez
            port: https
            scheme: HTTPS
          periodSeconds: 10
        name: metrics-server
        ports:
        - containerPort: 4443
          name: https
          protocol: TCP
        readinessProbe:
          failureThreshold: 3
          httpGet:
            path: /readyz
            port: https
            scheme: HTTPS
          initialDelaySeconds: 20
          periodSeconds: 10
        resources:
          requests:
            cpu: 100m
            memory: 200Mi
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - mountPath: /tmp
          name: tmp-dir
      nodeSelector:
        kubernetes.io/os: linux
      priorityClassName: system-cluster-critical
      serviceAccountName: metrics-server
      volumes:
      - emptyDir: {}
        name: tmp-dir
---
apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    k8s-app: metrics-server
  name: v1beta1.metrics.k8s.io
spec:
  group: metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: metrics-server
    namespace: kube-system
  version: v1beta1
  versionPriority: 100

kubectl create -f components0.5.2.yaml

On v1.23.5 the approach above ran into problems; use the method below instead.

Project: https://github.com/kubernetes-sigs/metrics-server

Copy front-proxy-ca.crt from Master01 to every Node:
scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt
scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node(copy to the remaining nodes yourself):/etc/kubernetes/pki/front-proxy-ca.crt

Add the certificate flag: vim components.yaml

- --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt # change to front-proxy-ca.crt for kubeadm

Install metrics server:
kubectl create -f components.yaml

2. Deploying the Dashboard

Project: https://github.com/kubernetes/dashboard
kubectl apply -f recommended.yaml

Create an administrator user: vim admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system

kubectl apply -f admin.yaml -n kube-system

Startup error:

Events:
  Type     Reason          Age                    From               Message
  ----     ------          ----                   ----               -------
  Normal   Scheduled       4m52s                  default-scheduler  Successfully assigned kube-system/metrics-server-d9c898cdf-kpc5k to k8s-node03
  Normal   SandboxChanged  4m32s (x2 over 4m34s)  kubelet            Pod sandbox changed, it will be killed and re-created.
  Normal   Pulling         3m34s (x3 over 4m49s)  kubelet            Pulling image "k8s.gcr.io/metrics-server/metrics-server:v0.5.2"
  Warning  Failed          3m19s (x3 over 4m34s)  kubelet            Failed to pull image "k8s.gcr.io/metrics-server/metrics-server:v0.5.2": rpc error: code = Unknown desc = Error response from daemon: Get https://k8s.gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)
  Warning  Failed          3m19s (x3 over 4m34s)  kubelet            Error: ErrImagePull
  Normal   BackOff         2m56s (x7 over 4m32s)  kubelet            Back-off pulling image "k8s.gcr.io/metrics-server/metrics-server:v0.5.2"
  Warning  Failed          2m56s (x7 over 4m32s)  kubelet            Error: ImagePullBackOff

Fix:

k8s.gcr.io is unreachable from the node, so pull the image manually from Docker Hub and retag it. This must be done on every node, and the tag has to match the image referenced in the manifest being applied.

Image: bitnami/metrics-server on Docker Hub

docker pull bitnami/metrics-server:0.6.1
docker tag bitnami/metrics-server:0.6.1 k8s.gcr.io/metrics-server/metrics-server:v0.6.1

3. Signing in to the Dashboard

Change the Dashboard Service type to NodePort:

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Change ClusterIP to NodePort (skip this step if it is already NodePort).

Look up the port number:

kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

Using the port shown for your own instance, the Dashboard is reachable through the IP of any host running kube-proxy plus that port.

Open the Dashboard at https://10.103.236.201:18282 (replace 18282 with your own port) and choose Token as the sign-in method.

Show the token value:
kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

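4. Required configuration changes

Switch kube-proxy to ipvs mode. The ipvs setting was commented out when the cluster was initialized, so it has to be changed by hand.

Run on master01:

kubectl edit cm kube-proxy -n kube-system

mode: ipvs

Roll the kube-proxy Pods so they pick up the change (the annotation carries the current Unix timestamp):
kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"$(date +%s)\"}}}}}" -n kube-system

Verify the kube-proxy mode:

curl 127.0.0.1:10249/proxyMode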
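The escaped JSON in the patch command is easy to get wrong. As a sketch, the patch body can be built by a small function and passed to kubectl; `kube_proxy_patch` is a hypothetical name, and the only thing it does is format the same annotation patch with a timestamp.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the JSON patch that changes the pod template
# annotation "date", which makes the DaemonSet roll its Pods.
kube_proxy_patch() {
  local ts="${1:-$(date +%s)}"   # default to the current Unix timestamp
  printf '{"spec":{"template":{"metadata":{"annotations":{"date":"%s"}}}}}' "$ts"
}

# Usage: kubectl patch daemonset kube-proxy -n kube-system -p "$(kube_proxy_patch)"
```

Because the timestamp changes on every call, each invocation produces a different pod template and therefore triggers a new rollout.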

1.8 Other dashboard options

Kuboard: a multi-cluster management UI for Kubernetes

Site: https://kuboard.cn/

kubectl apply -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

Watch the Pods come up: watch kubectl get pods -n kuboard

Access Kuboard by opening http://your-node-ip-address:30080 in a browser (192.168.68.18:30080 in this environment).

- Enter the initial username and password and sign in

- Username: admin

- Password: Kuboard123

Uninstalling

- Uninstall Kuboard v3

- kubectl delete -f https://addons.kuboard.cn/kuboard/kuboard-v3.yaml

- Clean up leftover data

Run on the master node and on every node labeled k8s.kuboard.cn/role=etcd:

rm -rf /usr/share/kuboard

2. Binary high-availability installation of a k8s cluster (production grade)

2.1 Basic high-availability configuration

1. Change the apt sources

On all nodes: vim /etc/apt/sources.list

deb http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ focal-proposed main restricted universe multiverse
deb http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse
deb-src http://mirrors.aliyun.com/ubuntu/ focal-backports main restricted universe multiverse

Source: the Ubuntu mirror page of the Alibaba open-source mirror site (aliyun.com)

2. Edit the hosts file

On all nodes: vim /etc/hosts

192.168.68.50 k8s-master01
192.168.68.51 k8s-master02
192.168.68.52 k8s-master03
192.168.68.48 k8s-master-lb # if the cluster is not highly available, use the Master01 IP here
192.168.68.53 k8s-node01
192.168.68.54 k8s-node02
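Since the same entries go onto every node, appending them idempotently avoids duplicates when the step is repeated. A minimal sketch, assuming bash and grep; `add_host_entry` is a hypothetical helper, and the optional third argument exists only so it can be tried on a scratch file before touching /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is
# already present as a whole word on a non-comment line.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file"; then
    return 0   # already present, nothing to do
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (addresses taken from the list above):
#   add_host_entry 192.168.68.50 k8s-master01
#   add_host_entry 192.168.68.48 k8s-master-lb
```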

3. Disable the swap partition

On all nodes: vim /etc/fstab

Temporarily: swapoff -a
Permanently: vim /etc/fstab, comment out the swap entry, then reboot
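Commenting out the swap entry can also be done non-interactively. This is a sketch assuming GNU sed; `disable_swap_in_fstab` is a hypothetical name, and the pattern simply looks for an uncommented line containing the word swap between whitespace, so review the result on a system with unusual mount points.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out swap entries in an fstab-style file.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  # Prefix '#' to every line that is not already a comment and has
  # "swap" as a whitespace-delimited field.
  sed -E -i 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Usage: swapoff -a && disable_swap_in_fstab
```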

4. Time synchronization

On all nodes:

apt install ntpdate -y
rm -rf /etc/localtime
ln -s /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
ntpdate cn.ntp.org.cn
# Add to crontab -e
*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com
# Sync at boot: add to /etc/rc.local
ntpdate cn.ntp.org.cn

5. Configure limits

On all nodes:
ulimit -SHn 65535
vim /etc/security/limits.conf

# Append the following at the end of the file

* soft nofile 65536
* hard nofile 131072
* soft nproc 655350
* hard nproc 655350
* soft memlock unlimited
* hard memlock unlimited
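A soft limit cannot be higher than the corresponding hard limit, so it is worth checking the file after editing. The following sketch assumes awk is available; `check_limits` is a hypothetical helper that compares numeric soft and hard values per domain and item and returns non-zero if any soft value is larger.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report limits.conf entries whose soft value
# exceeds the hard value for the same domain and item.
check_limits() {
  local file="${1:-/etc/security/limits.conf}"
  awk '
    /^[[:space:]]*#/ || NF < 4 { next }      # skip comments and short lines
    $4 !~ /^[0-9]+$/ { next }                # ignore non-numeric values such as unlimited
    $2 == "soft" { soft[$1 " " $3] = $4 }
    $2 == "hard" { hard[$1 " " $3] = $4 }
    END {
      bad = 0
      for (k in soft)
        if ((k in hard) && soft[k] + 0 > hard[k] + 0) {
          printf "soft > hard for %s: %s > %s\n", k, soft[k], hard[k]
          bad = 1
        }
      exit bad
    }' "$file"
}

# Usage: check_limits && echo "limits look consistent"
```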

6. Tune kernel parameters

cat >/etc/sysctl.conf <<EOF
# Controls source route verification
net.ipv4.conf.default.rp_filter = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
# Do not accept source routing
net.ipv4.conf.default.accept_source_route = 0
# Controls the System Request debugging functionality of the kernel
kernel.sysrq = 0
# Controls whether core dumps will append the PID to the core filename.
# Useful for debugging multi-threaded applications.
kernel.core_uses_pid = 1
# Controls the use of TCP syncookies
net.ipv4.tcp_syncookies = 1
# Disable netfilter on bridges.
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0
# Controls the default maximum size of a message queue
kernel.msgmnb = 65536
# Controls the maximum size of a message, in bytes
kernel.msgmax = 65536
# Controls the maximum shared segment size, in bytes
kernel.shmmax = 68719476736
# Controls the maximum number of shared memory segments, in pages
kernel.shmall = 4294967296
# TCP kernel parameters
net.ipv4.tcp_mem = 786432 1048576 1572864
net.ipv4.tcp_rmem = 4096 87380 4194304
net.ipv4.tcp_wmem = 4096 16384 4194304
net.ipv4.tcp_window_scaling = 1
net.ipv4.tcp_sack = 1
# socket buffer
net.core.wmem_default = 8388608
net.core.rmem_default = 8388608
net.core.rmem_max = 16777216
net.core.wmem_max = 16777216
net.core.netdev_max_backlog = 262144
net.core.somaxconn = 20480
net.core.optmem_max = 81920
# TCP conn
net.ipv4.tcp_max_syn_backlog = 262144
net.ipv4.tcp_syn_retries = 3
net.ipv4.tcp_retries1 = 3
net.ipv4.tcp_retries2 = 15
# tcp conn reuse
net.ipv4.tcp_timestamps = 0
net.ipv4.tcp_tw_reuse = 0
net.ipv4.tcp_tw_recycle = 0
net.ipv4.tcp_fin_timeout = 1
net.ipv4.tcp_max_tw_buckets = 20000
net.ipv4.tcp_max_orphans = 3276800
net.ipv4.tcp_synack_retries = 1
net.ipv4.tcp_syncookies = 1
# keepalive conn
net.ipv4.tcp_keepalive_time = 300
net.ipv4.tcp_keepalive_intvl = 30
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.ip_local_port_range = 10001 65000
# swap
vm.overcommit_memory = 0
vm.swappiness = 10
#net.ipv4.conf.eth1.rp_filter = 0
#net.ipv4.conf.lo.arp_ignore = 1
#net.ipv4.conf.lo.arp_announce = 2
#net.ipv4.conf.all.arp_ignore = 1
#net.ipv4.conf.all.arp_announce = 2
EOF

Install commonly used packages:
apt install wget jq psmisc vim net-tools telnet lvm2 git -y

Install ipvsadm on all nodes:

apt install ipvsadm ipset conntrack -y
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack
vim /etc/modules-load.d/ipvs.conf
# Add the following
ip_vs
ip_vs_lc
ip_vs_wlc
ip_vs_rr
ip_vs_wrr
ip_vs_lblc
ip_vs_lblcr
ip_vs_dh
ip_vs_sh
ip_vs_fo
ip_vs_nq
ip_vs_sed
ip_vs_ftp
ip_vs_sh
nf_conntrack
ip_tables
ip_set
xt_set
ipt_set
ipt_rpfilter
ipt_REJECT
ipip

Then run systemctl enable --now systemd-modules-load.service

Enable the kernel parameters a k8s cluster requires. On all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
fs.may_detach_mounts = 1
net.ipv4.conf.all.route_localnet = 1
vm.overcommit_memory=1
vm.panic_on_oom=0
fs.inotify.max_user_watches=89100
fs.file-max=52706963
fs.nr_open=52706963
net.netfilter.nf_conntrack_max=2310720
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl =15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.ip_conntrack_max = 65536
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
EOF

sysctl --system

After the kernel settings are in place on all nodes, reboot each server and confirm the modules are still loaded afterwards.

reboot

Check:

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

8. Passwordless SSH from Master01 to the other nodes

All configuration files and certificates are generated on Master01 during installation, and the cluster is also managed from Master01. On Alibaba Cloud or AWS a separate kubectl server is needed. Set up the keys as follows:

ssh-keygen -t rsa -f /root/.ssh/id_rsa -C "192.168.68.10@k8s-master01" -N ""
for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02; do ssh-copy-id -i /root/.ssh/id_rsa.pub $i; done

9. Download the installation files

cd /root/ ; git clone https://github.com/dotbalo/k8s-ha-install.git

2.2 Installing the basic components

1. Install Docker on all nodes

These notes pin Docker 19.03, on the grounds that the author found Docker 20.10 unsupported by K8S.

Add the GPG key of the official Docker repository to the system:

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

Add the Docker repository to the APT sources:

add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu focal stable"

Refresh the package index so the newly added Docker packages are visible:

apt update

Make sure the install will come from the Docker repository rather than the default Ubuntu repository:

apt-cache policy docker-ce

Install Docker:

apt install docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal -y

Check that Docker is running:

systemctl status docker

Tips:

Recent kubelet versions recommend systemd, so change Docker's CgroupDriver to systemd.

registry-mirrors configures an image pull accelerator.

mkdir -p /etc/docker
cat > /etc/docker/daemon.json <<EOF
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "registry-mirrors": ["https://zksmnpbq.mirror.aliyuncs.com"]
}
EOF

Enable Docker at boot:
systemctl daemon-reload && systemctl enable --now docker

2. Installing k8s and etcd

Run the following on master01.

1. Download the kubernetes package

wget https://dl.k8s.io/v1.23.5/kubernetes-server-linux-amd64.tar.gz

2. Download the etcd package

wget https://github.com/etcd-io/etcd/releases/download/v3.5.2/etcd-v3.5.2-linux-amd64.tar.gz

3. Unpack the kubernetes binaries

tar -xf kubernetes-server-linux-amd64.tar.gz --strip-components=3 -C /usr/local/bin kubernetes/server/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy}

4. Unpack the etcd binaries

tar -zxvf etcd-v3.5.2-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.2-linux-amd64/etcd{,ctl}

5. Check the versions

kubelet --version
etcdctl version

6. Send the components to the other nodes

for NODE in k8s-master02 k8s-master03; do echo $NODE; scp /usr/local/bin/kube{let,ctl,-apiserver,-controller-manager,-scheduler,-proxy} $NODE:/usr/local/bin/; scp /usr/local/bin/etcd* $NODE:/usr/local/bin/; done
for NODE in k8s-node01 k8s-node02; do scp /usr/local/bin/kube{let,-proxy} $NODE:/usr/local/bin/ ; done

Create the /opt/cni/bin directory on all nodes:

mkdir -p /opt/cni/bin

Switch branches

On Master01, switch to the 1.23.x branch (use the matching branch for other versions):

cd k8s-ha-install && git checkout manual-installation-v1.23.x

2.3 Generating certificates

This is the most critical part of a binary installation. A single mistake here breaks everything that follows, so verify every step.

1. Download the certificate tools on Master01

wget "https://pkg.cfssl.org/R1.2/cfssl_linux-amd64" -O /usr/local/bin/cfssl

wget "https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64" -O /usr/local/bin/cfssljson

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

2. etcd certificates

Create the etcd certificate directory on all Master nodes:

mkdir /etc/etcd/ssl -p

Create the kubernetes directories on all nodes:

mkdir -p /etc/kubernetes/pki

Generate the etcd certificates on Master01.

The CSR files used below are certificate signing requests; they carry fields such as domain names, company, and organizational unit.

cd /root/k8s-ha-install/pki

# Generate the etcd CA certificate and the CA key

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /etc/etcd/ssl/etcd-ca

cfssl gencert \
   -ca=/etc/etcd/ssl/etcd-ca.pem \
   -ca-key=/etc/etcd/ssl/etcd-ca-key.pem \
   -config=ca-config.json \
   -hostname=127.0.0.1,k8s-master01,k8s-master02,k8s-master03,192.168.68.50,192.168.68.51,192.168.68.52 \
   -profile=kubernetes \
   etcd-csr.json | cfssljson -bare /etc/etcd/ssl/etcd
Output:
2022/04/08 07:09:20 [INFO] generate received request
2022/04/08 07:09:20 [INFO] received CSR
2022/04/08 07:09:20 [INFO] generating key: rsa-2048
2022/04/08 07:09:21 [INFO] encoded CSR
2022/04/08 07:09:21 [INFO] signed certificate with serial number 728982787326070968690424877239840705408470785677

Copy the certificates to the other nodes:

MasterNodes='k8s-master02 k8s-master03'
for NODE in $MasterNodes; do
  ssh $NODE "mkdir -p /etc/etcd/ssl"
  for FILE in etcd-ca-key.pem etcd-ca.pem etcd-key.pem etcd.pem; do
    scp /etc/etcd/ssl/${FILE} $NODE:/etc/etcd/ssl/${FILE}
  done
done

3. Certificates for the k8s components

Generate the kubernetes certificates on Master01:

cd /root/k8s-ha-install/pki

cfssl gencert -initca ca-csr.json | cfssljson -bare /etc/kubernetes/pki/ca

10.96.0.0/12 is the k8s Service CIDR and 10.96.0.1 is its first address. If you change the Service CIDR, change 10.96.0.1 in the command below to the first address of the new range.

# If the cluster is not highly available, 192.168.68.48 is the Master01 IP

cfssl gencert -ca=/etc/kubernetes/pki/ca.pem -ca-key=/etc/kubernetes/pki/ca-key.pem -config=ca-config.json -hostname=10.96.0.1,192.168.68.48,127.0.0.1,kubernetes,kubernetes.default,kubernetes.default.svc,kubernetes.default.svc.cluster,kubernetes.default.svc.cluster.local,192.168.68.50,192.168.68.51,192.168.68.52 -profile=kubernetes apiserver-csr.json | cfssljson -bare /etc/kubernetes/pki/apiserver
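The address that belongs in -hostname is the first usable IP of the Service CIDR. As a sketch in plain bash arithmetic, the hypothetical helper `first_service_ip` below masks the address with the prefix length and adds one; it assumes a well-formed IPv4 CIDR and does no input validation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first usable address of an IPv4 CIDR,
# i.e. the network address plus one.
first_service_ip() {
  local cidr="$1"
  local ip="${cidr%/*}" prefix="${cidr#*/}"
  local a b c d
  IFS=. read -r a b c d <<< "$ip"
  local addr=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local first=$(( (addr & mask) + 1 ))
  echo "$(( (first >> 24) & 255 )).$(( (first >> 16) & 255 )).$(( (first >> 8) & 255 )).$(( first & 255 ))"
}

first_service_ip 10.96.0.0/12     # prints 10.96.0.1
first_service_ip 192.168.0.0/12   # prints 192.160.0.1
```

The second call illustrates why a /12 mask is a poor fit for a 192.168 range: the network actually starts at 192.160.0.0.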

Generate the apiserver aggregation certificates (used by the requestheader-client-xxx and requestheader-allowed-xxx flags of the aggregator):

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-ca

cfssl gencert -ca=/etc/kubernetes/pki/front-proxy-ca.pem -ca-key=/etc/kubernetes/pki/front-proxy-ca-key.pem -config=ca-config.json -profile=kubernetes front-proxy-client-csr.json | cfssljson -bare /etc/kubernetes/pki/front-proxy-client

Output (the warning can be ignored):

2022/04/08 07:26:57 [INFO] generate received request
2022/04/08 07:26:57 [INFO] received CSR
2022/04/08 07:26:57 [INFO] generating key: rsa-2048
2022/04/08 07:26:58 [INFO] encoded CSR
2022/04/08 07:26:58 [INFO] signed certificate with serial number 429580583316239045765562931851686133793149712576
2022/04/08 07:26:58 [WARNING] This certificate lacks a "hosts" field. This makes it unsuitable for
websites. For more information see the Baseline Requirements for the Issuance and Management
of Publicly-Trusted Certificates, v.1.1.6, from the CA/Browser Forum (https://cabforum.org);
specifically, section 10.2.3 ("Information Requirements").

Generate the controller-manager certificate:

cfssl gencert \
   -ca=/etc/kubernetes/pki/ca.pem \
   -ca-key=/etc/kubernetes/pki/ca-key.pem \
   -config=ca-config.json \
   -profile=kubernetes \
   manager-csr.json | cfssljson -bare /etc/kubernetes/pki/controller-manager

# Note: if the cluster is not highly available, replace 192.168.68.48:8443 with the master01 address and the apiserver port (6443 by default)

# set-cluster: define a cluster entry

kubectl config set-cluster kubernetes \
     --certificate-authority=/etc/kubernetes/pki/ca.pem \
     --embed-certs=true \
     --server=https://192.168.68.48:8443 \
     --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-credentials: define a user entry

kubectl config set-credentials system:kube-controller-manager \
     --client-certificate=/etc/kubernetes/pki/controller-manager.pem \
     --client-key=/etc/kubernetes/pki/controller-manager-key.pem \
     --embed-certs=true \
     --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# set-context: define a context that ties the cluster and the user together

kubectl config set-context system:kube-controller-manager@kubernetes \
     --cluster=kubernetes \
     --user=system:kube-controller-manager \
     --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig

# use-context: make that context the default

kubectl config use-context system:kube-controller-manager@kubernetes \
     --kubeconfig=/etc/kubernetes/controller-manager.kubeconfig
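The same four kubectl config calls (set-cluster, set-credentials, set-context, use-context) are repeated for each component, so they can be wrapped once. The sketch below is an assumption-laden convenience, not part of the k8s-ha-install repository: `make_kubeconfig` and the `KUBECTL` override variable are hypothetical names, and the certificate paths follow the layout used in these notes.

```shell
#!/usr/bin/env bash
# Set KUBECTL=echo to print the commands instead of running them (dry run).
KUBECTL="${KUBECTL:-kubectl}"

# Hypothetical helper: build a kubeconfig for one component.
#   $1 user name, $2 certificate base name under /etc/kubernetes/pki,
#   $3 output kubeconfig path, $4 apiserver URL
make_kubeconfig() {
  local user="$1" cert_base="$2" out="$3" server="$4"
  local pki=/etc/kubernetes/pki
  "$KUBECTL" config set-cluster kubernetes \
    --certificate-authority="${pki}/ca.pem" --embed-certs=true \
    --server="${server}" --kubeconfig="${out}"
  "$KUBECTL" config set-credentials "${user}" \
    --client-certificate="${pki}/${cert_base}.pem" \
    --client-key="${pki}/${cert_base}-key.pem" \
    --embed-certs=true --kubeconfig="${out}"
  "$KUBECTL" config set-context "${user}@kubernetes" \
    --cluster=kubernetes --user="${user}" --kubeconfig="${out}"
  "$KUBECTL" config use-context "${user}@kubernetes" --kubeconfig="${out}"
}

# Usage:
#   make_kubeconfig system:kube-controller-manager controller-manager \
#     /etc/kubernetes/controller-manager.kubeconfig https://192.168.68.48:8443
```

Running it first with KUBECTL=echo shows the exact commands before anything is written.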

Generate the scheduler certificate:

cfssl gencert \
   -ca=/etc/kubernetes/pki/ca.pem \
   -ca-key=/etc/kubernetes/pki/ca-key.pem \
   -config=ca-config.json \
   -profile=kubernetes \
   scheduler-csr.json | cfssljson -bare /etc/kubernetes/pki/scheduler

# Note: if the cluster is not highly available, replace 192.168.68.48:8443 with the master01 address and the apiserver port (6443 by default)

kubectl config set-cluster kubernetes \
     --certificate-authority=/etc/kubernetes/pki/ca.pem \
     --embed-certs=true \
     --server=https://192.168.68.48:8443 \
     --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler \
     --client-certificate=/etc/kubernetes/pki/scheduler.pem \
     --client-key=/etc/kubernetes/pki/scheduler-key.pem \
     --embed-certs=true \
     --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config set-context system:kube-scheduler@kubernetes \
     --cluster=kubernetes \
     --user=system:kube-scheduler \
     --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

kubectl config use-context system:kube-scheduler@kubernetes \
     --kubeconfig=/etc/kubernetes/scheduler.kubeconfig

Generate the admin certificate:

cfssl gencert \
   -ca=/etc/kubernetes/pki/ca.pem \
   -ca-key=/etc/kubernetes/pki/ca-key.pem \
   -config=ca-config.json \
   -profile=kubernetes \
   admin-csr.json | cfssljson -bare /etc/kubernetes/pki/admin

# Note: if the cluster is not highly available, replace 192.168.68.48:8443 with the master01 address and the apiserver port (6443 by default)

kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/pki/ca.pem --embed-certs=true --server=https://192.168.68.48:8443 --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-credentials kubernetes-admin --client-certificate=/etc/kubernetes/pki/admin.pem --client-key=/etc/kubernetes/pki/admin-key.pem --embed-certs=true --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config set-context kubernetes-admin@kubernetes --cluster=kubernetes --user=kubernetes-admin --kubeconfig=/etc/kubernetes/admin.kubeconfig

kubectl config use-context kubernetes-admin@kubernetes --kubeconfig=/etc/kubernetes/admin.kubeconfig

Create the ServiceAccount key (ServiceAccount key -> secret):

openssl genrsa -out /etc/kubernetes/pki/sa.key 2048

Output:

Generating RSA private key, 2048 bit long modulus (2 primes)

……………………………+++++

……………………………………………………………………………………………..+++++

e is 65537 (0x010001)

openssl rsa -in /etc/kubernetes/pki/sa.key -pubout -out /etc/kubernetes/pki/sa.pub

Send the certificates to the other nodes:

for NODE in k8s-master02 k8s-master03; do for FILE in $(ls /etc/kubernetes/pki | grep -v etcd); do scp /etc/kubernetes/pki/${FILE} $NODE:/etc/kubernetes/pki/${FILE};done; for FILE in admin.kubeconfig controller-manager.kubeconfig scheduler.kubeconfig; do scp /etc/kubernetes/${FILE} $NODE:/etc/kubernetes/${FILE};done;done

List the certificate files:

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/
admin.csr      apiserver.csr      ca.csr      controller-manager.csr      front-proxy-ca.csr      front-proxy-client.csr      sa.key         scheduler-key.pem
admin-key.pem  apiserver-key.pem  ca-key.pem  controller-manager-key.pem  front-proxy-ca-key.pem  front-proxy-client-key.pem  sa.pub         scheduler.pem
admin.pem      apiserver.pem      ca.pem      controller-manager.pem      front-proxy-ca.pem      front-proxy-client.pem      scheduler.csr

[root@k8s-master01 pki]# ls /etc/kubernetes/pki/ |wc -l
23
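Counting files confirms the total but not which one is missing. A sketch of a more specific check follows; `check_pki_files` is a hypothetical helper that looks for the certificate and key of each of the seven components listed above plus sa.key and sa.pub, and reports any that are absent or empty. The .csr files are not checked because nothing consumes them after signing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the expected PKI files exist and are non-empty.
check_pki_files() {
  local dir="${1:-/etc/kubernetes/pki}" missing=0 base f
  for base in admin apiserver ca controller-manager front-proxy-ca front-proxy-client scheduler; do
    for f in "${base}.pem" "${base}-key.pem"; do
      if [ ! -s "${dir}/${f}" ]; then
        echo "missing: ${dir}/${f}"
        missing=1
      fi
    done
  done
  for f in sa.key sa.pub; do
    if [ ! -s "${dir}/${f}" ]; then
      echo "missing: ${dir}/${f}"
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_pki_files && echo "all expected files present"
```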

2.4 High availability and etcd configuration

1. etcd configuration

The etcd configuration is largely the same on every Master node. Only the node name and the IP addresses in each node's file need to change.

Master01

vim /etc/etcd/etcd.config.yml

name: 'k8s-master01'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.68.50:2380'
listen-client-urls: 'https://192.168.68.50:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.68.50:2380'
advertise-client-urls: 'https://192.168.68.50:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.68.50:2380,k8s-master02=https://192.168.68.51:2380,k8s-master03=https://192.168.68.52:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

Master02

vim /etc/etcd/etcd.config.yml

name: 'k8s-master02'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.68.51:2380'
listen-client-urls: 'https://192.168.68.51:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.68.51:2380'
advertise-client-urls: 'https://192.168.68.51:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.68.50:2380,k8s-master02=https://192.168.68.51:2380,k8s-master03=https://192.168.68.52:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false

Master03

vim /etc/etcd/etcd.config.yml

name: 'k8s-master03'
data-dir: /var/lib/etcd
wal-dir: /var/lib/etcd/wal
snapshot-count: 5000
heartbeat-interval: 100
election-timeout: 1000
quota-backend-bytes: 0
listen-peer-urls: 'https://192.168.68.52:2380'
listen-client-urls: 'https://192.168.68.52:2379,http://127.0.0.1:2379'
max-snapshots: 3
max-wals: 5
cors:
initial-advertise-peer-urls: 'https://192.168.68.52:2380'
advertise-client-urls: 'https://192.168.68.52:2379'
discovery:
discovery-fallback: 'proxy'
discovery-proxy:
discovery-srv:
initial-cluster: 'k8s-master01=https://192.168.68.50:2380,k8s-master02=https://192.168.68.51:2380,k8s-master03=https://192.168.68.52:2380'
initial-cluster-token: 'etcd-k8s-cluster'
initial-cluster-state: 'new'
strict-reconfig-check: false
enable-v2: true
enable-pprof: true
proxy: 'off'
proxy-failure-wait: 5000
proxy-refresh-interval: 30000
proxy-dial-timeout: 1000
proxy-write-timeout: 5000
proxy-read-timeout: 0
client-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
peer-transport-security:
  cert-file: '/etc/kubernetes/pki/etcd/etcd.pem'
  key-file: '/etc/kubernetes/pki/etcd/etcd-key.pem'
  peer-client-cert-auth: true
  trusted-ca-file: '/etc/kubernetes/pki/etcd/etcd-ca.pem'
  auto-tls: true
debug: false
log-package-levels:
log-outputs: [default]
force-new-cluster: false
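Because the three files differ only in the node name and the node's own IP, the per-node copies can be derived from one template instead of edited by hand. A sketch, assuming GNU sed and top-level keys written one per line; `render_etcd_config` is a hypothetical helper, and it deliberately leaves initial-cluster untouched since that line is identical on every node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an etcd config for another node, derived from
# an existing config file by replacing the node name and listen/advertise URLs.
render_etcd_config() {
  local template="$1" name="$2" ip="$3"
  sed -E \
    -e "s#^name: .*#name: '${name}'#" \
    -e "s#^(listen-peer-urls|initial-advertise-peer-urls): .*#\1: 'https://${ip}:2380'#" \
    -e "s#^listen-client-urls: .*#listen-client-urls: 'https://${ip}:2379,http://127.0.0.1:2379'#" \
    -e "s#^advertise-client-urls: .*#advertise-client-urls: 'https://${ip}:2379'#" \
    "$template"
}

# Usage (run on Master01, then copy the result to the target node):
#   render_etcd_config /etc/etcd/etcd.config.yml k8s-master02 192.168.68.51 > /tmp/etcd.config.master02.yml
```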

Create the Service

Create the etcd service on all Master nodes and start it:

vim /usr/lib/systemd/system/etcd.service

[Unit]
Description=Etcd Service
Documentation=https://coreos.com/etcd/docs/latest/
After=network.target

[Service]
Type=notify
ExecStart=/usr/local/bin/etcd --config-file=/etc/etcd/etcd.config.yml
Restart=on-failure
RestartSec=10
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
Alias=etcd3.service

Create the etcd certificate directory on all Master nodes and link the certificates into it:

mkdir /etc/kubernetes/pki/etcd

ln -s /etc/etcd/ssl/* /etc/kubernetes/pki/etcd/

systemctl daemon-reload

systemctl enable --now etcd

启动异常

Job for etcd.service failed because the control process exited with error code.

See “systemctl status etcd.service” and “journalctl -xe” for details.

    ~ # journalctl -xe
    Apr 09 08:24:08 k8s-master02 multipathd[707]: sda: failed to get sysfs uid: Invalid argument
    Apr 09 08:24:08 k8s-master02 multipathd[707]: sda: failed to get sgio uid: No such file or directory
    Apr 09 08:24:12 k8s-master02 systemd[1]: etcd.service: Scheduled restart job, restart counter is at 3989.
    -- Subject: Automatic restarting of a unit has been scheduled
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- Automatic restarting of the unit etcd.service has been scheduled, as the result for
    -- the configured Restart= setting for the unit.
    Apr 09 08:24:12 k8s-master02 systemd[1]: Stopped Etcd Service.
    -- Subject: A stop job for unit etcd.service has finished
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- A stop job for unit etcd.service has finished.
    --
    -- The job identifier is 308572 and the job result is done.
    Apr 09 08:24:12 k8s-master02 systemd[1]: Starting Etcd Service...
    -- Subject: A start job for unit etcd.service has begun execution
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- A start job for unit etcd.service has begun execution.
    --
    -- The job identifier is 308572.
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"info","ts":"2022-04-09T08:24:12.320+0800","caller":"etcdmain/config.go:339","msg":"loaded server configuration, other configuration command line flags a>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"info","ts":"2022-04-09T08:24:12.321+0800","caller":"etcdmain/etcd.go:72","msg":"Running: ","args":["/usr/local/bin/etcd","--config-file=/etc/etcd/etcd.c>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"info","ts":"2022-04-09T08:24:12.321+0800","caller":"embed/etcd.go:131","msg":"configuring peer listeners","listen-peer-urls":["https://192.168.68.51:238>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"info","ts":"2022-04-09T08:24:12.321+0800","caller":"embed/etcd.go:368","msg":"closing etcd server","name":"k8s-master02","data-dir":"/var/lib/etcd","adv>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"info","ts":"2022-04-09T08:24:12.321+0800","caller":"embed/etcd.go:370","msg":"closed etcd server","name":"k8s-master02","data-dir":"/var/lib/etcd","adve>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"warn","ts":"2022-04-09T08:24:12.321+0800","caller":"etcdmain/etcd.go:145","msg":"failed to start etcd","error":"cannot listen on TLS for 192.168.68.51:2>
    Apr 09 08:24:12 k8s-master02 etcd[109903]: {"level":"fatal","ts":"2022-04-09T08:24:12.321+0800","caller":"etcdmain/etcd.go:203","msg":"discovery failed","error":"cannot listen on TLS for 192.168.68.51:2380>
    Apr 09 08:24:12 k8s-master02 systemd[1]: etcd.service: Main process exited, code=exited, status=1/FAILURE
    -- Subject: Unit process exited
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- An ExecStart= process belonging to unit etcd.service has exited.
    --
    -- The process' exit code is 'exited' and its exit status is 1.
    Apr 09 08:24:12 k8s-master02 systemd[1]: etcd.service: Failed with result 'exit-code'.
    -- Subject: Unit failed
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- The unit etcd.service has entered the 'failed' state with result 'exit-code'.
    Apr 09 08:24:12 k8s-master02 systemd[1]: Failed to start Etcd Service.
    -- Subject: A start job for unit etcd.service has failed
    -- Defined-By: systemd
    -- Support: http://www.ubuntu.com/support
    --
    -- A start job for unit etcd.service has finished with a failure.
    --
    -- The job identifier is 308572 and the job result is failed.
    Apr 09 08:24:13 k8s-master02 multipathd[707]: sda: add missing path
    Apr 09 08:24:13 k8s-master02 multipathd[707]: sda: failed to get udev uid: Invalid argument
    Apr 09 08:24:13 k8s-master02 multipathd[707]: sda: failed to get sysfs uid: Invalid argument
    Apr 09 08:24:13 k8s-master02 multipathd[707]: sda: failed to get sgio uid: No such file or directory
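The journal output above interleaves multipathd messages with etcd's own JSON log lines. As a minimal sketch (the helper name etcd_problems is hypothetical and not part of the original notes), the following bash function keeps only the etcd lines logged at warn, error, or fatal level, which makes the actual startup error easier to spot:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read journal text on stdin and print only the etcd
# JSON log lines whose level is warn, error, or fatal.
etcd_problems() {
    grep -E 'etcd\[[0-9]+\]: \{"level":"(warn|error|fatal)"'
}

# Usage (assumes the systemd unit is named etcd.service, as in the log above):
#   journalctl -u etcd.service -n 200 --no-pager | etcd_problems
```

Restricting journalctl to one unit with -u also avoids the terminal-width truncation markers (>) that the pager adds in the output above.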

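To stop the repeated multipathd errors for sda shown in the journal above, blacklist the device in multipath:

1. Edit the /etc/multipath.conf file

sudo vi /etc/multipath.conf

2. Add the following content; adjust sda to match your local environment

    blacklist {
        devnode "^sda"
    }

3. Restart the multipath-tools service

sudo service multipath-tools restart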

Reference: https://blog.csdn.net/zhangchenbin/article/details/117886448

Check the etcd status

export ETCDCTL_API=3

etcdctl --endpoints="192.168.68.50:2379,192.168.68.51:2379,192.168.68.52:2379" --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem --cert=/etc/kubernetes/pki/etcd/etcd.pem --key=/etc/kubernetes/pki/etcd/etcd-key.pem endpoint status --write-out=table
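Besides endpoint status, etcdctl also has an endpoint health subcommand that reports whether each member answers. A minimal sketch, assuming the same member IPs and certificate paths as the command above; the helper names etcd_endpoints and etcd_health are hypothetical:

```shell
#!/usr/bin/env bash
# Join host IPs into a comma-separated list of client endpoints on port 2379.
etcd_endpoints() {
    local out="" host
    for host in "$@"; do
        out+="${out:+,}${host}:2379"
    done
    printf '%s\n' "$out"
}

# Run `etcdctl endpoint health` against all given members.
# Needs etcdctl installed and a reachable cluster, so it is only defined here.
etcd_health() {
    ETCDCTL_API=3 etcdctl \
        --endpoints="$(etcd_endpoints "$@")" \
        --cacert=/etc/kubernetes/pki/etcd/etcd-ca.pem \
        --cert=/etc/kubernetes/pki/etcd/etcd.pem \
        --key=/etc/kubernetes/pki/etcd/etcd-key.pem \
        endpoint health
}

# Usage:
#   etcd_health 192.168.68.50 192.168.68.51 192.168.68.52
```

Building the endpoint list from the host IPs keeps the member list in one place if a node address changes.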

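2. High-availability configuration

High-availability configuration (note: if this is not a high-availability cluster, haproxy and keepalived do not need to be installed)

If you are installing in the cloud, skip the steps in this section as well and use the cloud provider's load balancer directly, for example Alibaba Cloud SLB or Tencent Cloud ELB.

On a public cloud, the provider's own load balancer replaces haproxy and keepalived, because most public clouds do not support keepalived. In addition, on Alibaba Cloud the kubectl client cannot be placed on a master node: the Alibaba Cloud SLB has a loopback problem, meaning a server proxied by the SLB cannot connect back to the SLB. Tencent Cloud has fixed this problem, so Tencent Cloud is recommended.

SLB -> haproxy -> apiserver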

Install keepalived and haproxy on all Master nodes

apt install keepalived haproxy -y

Configure HAProxy on all Master nodes; the configuration is identical on each

vim /etc/haproxy/haproxy.cfg

    global
        maxconn 2000
        ulimit-n 16384
        log 127.0.0.1 local0 err
        stats timeout 30s

    defaults
        log global
        mode http
        option httplog
        timeout connect 5000
        timeout client 50000
        timeout server 50000
        timeout http-request 15s
        timeout http-keep-alive 15s

    frontend k8s-master
        bind 0.0.0.0:8443
        bind 127.0.0.1:8443
        mode tcp
        option tcplog
        tcp-request inspect-delay 5s
        default_backend k8s-master

    backend k8s-master
        mode tcp
        option tcplog
        option tcp-check
        balance roundrobin
        default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
        server k8s-master01 192.168.68.50:6443 check
        server k8s-master02 192.168.68.51:6443 check
        server k8s-master03 192.168.68.52:6443 check

Master01 keepalived

Configure KeepAlived on all Master nodes. The configuration differs per node, so take care with each node's IP and network interface (the interface parameter).

[root@k8s-master01 pki]# vim /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        mcast_src_ip 192.168.68.50
        virtual_router_id 51
        priority 101
        nopreempt
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.48
        }
        track_script {
            chk_apiserver
        }
    }

Master02 keepalived

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.68.51
        virtual_router_id 51
        priority 100
        nopreempt
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.48
        }
        track_script {
            chk_apiserver
        }
    }

Master03 keepalived

    ! Configuration File for keepalived
    global_defs {
        router_id LVS_DEVEL
    }
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        interval 5
        weight -5
        fall 2
        rise 1
    }
    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        mcast_src_ip 192.168.68.52
        virtual_router_id 51
        priority 100
        nopreempt
        advert_int 2
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        virtual_ipaddress {
            192.168.68.48
        }
        track_script {
            chk_apiserver
        }
    }
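The three keepalived files above differ only in state, mcast_src_ip, and priority. A minimal sketch of generating them from a single template, which may help avoid copy-and-paste mistakes; the function name render_keepalived and its argument order are hypothetical, while the interface name ens33 and the VIP 192.168.68.48 are taken from the configs above:

```shell
#!/usr/bin/env bash
# Print a keepalived.conf for one node.
# Arguments: <state: MASTER|BACKUP> <node IP> <priority>
render_keepalived() {
    local state=$1 node_ip=$2 priority=$3
    cat <<EOF
! Configuration File for keepalived
global_defs {
    router_id LVS_DEVEL
}
vrrp_script chk_apiserver {
    script "/etc/keepalived/check_apiserver.sh"
    interval 5
    weight -5
    fall 2
    rise 1
}
vrrp_instance VI_1 {
    state ${state}
    interface ens33
    mcast_src_ip ${node_ip}
    virtual_router_id 51
    priority ${priority}
    nopreempt
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.68.48
    }
    track_script {
        chk_apiserver
    }
}
EOF
}

# Usage, run on the matching node:
#   render_keepalived MASTER 192.168.68.50 101 > /etc/keepalived/keepalived.conf   # master01
#   render_keepalived BACKUP 192.168.68.51 100 > /etc/keepalived/keepalived.conf   # master02
#   render_keepalived BACKUP 192.168.68.52 100 > /etc/keepalived/keepalived.conf   # master03
```

If a node uses a different network interface, the interface line still has to be adjusted by hand.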

Health check configuration

On all master nodes

[root@k8s-master01 keepalived]# cat /etc/keepalived/check_apiserver.sh

    #!/bin/bash

    err=0
    for k in $(seq 1 3)
    do
        check_code=$(pgrep haproxy)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 1
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi

chmod +x /etc/keepalived/check_apiserver.sh

Start haproxy and keepalived on all master nodes

[root@k8s-master01 keepalived]# systemctl daemon-reload

[root@k8s-master01 keepalived]# systemctl enable --now haproxy

[root@k8s-master01 keepalived]# systemctl enable --now keepalived

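VIP test (the sample output below was captured in an environment whose VIP was 192.168.0.236; with this document's addresses the replies come from 192.168.68.48)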

[root@k8s-master01 pki]# ping 192.168.68.48

PING 192.168.0.236 (192.168.0.236) 56(84) bytes of data.

64 bytes from 192.168.0.236: icmp_seq=1 ttl=64 time=1.39 ms

64 bytes from 192.168.0.236: icmp_seq=2 ttl=64 time=2.46 ms

64 bytes from 192.168.0.236: icmp_seq=3 ttl=64 time=1.68 ms

64 bytes from 192.168.0.236: icmp_seq=4 ttl=64 time=1.08 ms

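Important: if keepalived and haproxy are installed, verify that keepalived is working correctly

telnet 192.168.68.48 8443

If the VIP cannot be pinged, or telnet does not print the line Escape character is '^]', the VIP is not usable. Do not continue; troubleshoot keepalived first: check the firewall and SELinux, the state of haproxy and keepalived, the listening ports, and so on.

On all nodes, the firewall must be disabled and inactive: systemctl status firewalld

On all nodes, SELinux must be disabled: getenforce

On master nodes, check the state of haproxy and keepalived: systemctl status keepalived haproxy

On master nodes, check the listening ports: netstat -lntp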
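The telnet check above can also be scripted. A minimal sketch, assuming bash and the coreutils timeout command are available; it uses bash's built-in /dev/tcp redirection instead of telnet, and the function names tcp_port_open and check_vip are hypothetical:

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to <host> <port> succeeds within 3 seconds.
# Uses bash's /dev/tcp redirection, so telnet does not need to be installed.
tcp_port_open() {
    local host=$1 port=$2
    timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Check the VIP and HAProxy frontend port used in this document.
check_vip() {
    if tcp_port_open 192.168.68.48 8443; then
        echo "VIP 192.168.68.48:8443 is reachable"
    else
        echo "VIP 192.168.68.48:8443 is NOT reachable; check keepalived and haproxy"
        return 1
    fi
}

# Usage:
#   check_vip
```

A non-zero exit status from check_vip corresponds to the "do not continue" case described above.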

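2.5 k8s component configuration

1. Apiserver

Create the kube-apiserver service on all Master nodes. Note: if this is not a high-availability cluster, replace 192.168.68.48 with master01's address

Master01 configuration

Note: this document uses 10.96.0.0/12 as the k8s service network. It must not overlap with the host network or the Pod network; change it as needed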

[root@k8s-master01 pki]# cat /usr/lib/systemd/system/kube-apiserver.service

    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-apiserver \
          --v=2 \
          --logtostderr=true \
          --allow-privileged=true \
          --bind-address=0.0.0.0 \
          --secure-port=6443 \
          --insecure-port=0 \
          --advertise-address=192.168.68.50 \
          --service-cluster-ip-range=10.96.0.0/12 \
          --service-node-port-range=30000-32767 \
          --etcd-servers=https://192.168.68.50:2379,https://192.168.68.51:2379,https://192.168.68.52:2379 \
          --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
          --etcd-certfile=/etc/etcd/ssl/etcd.pem \
          --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
          --client-ca-file=/etc/kubernetes/pki/ca.pem \
          --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
          --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
          --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
          --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
          --service-account-key-file=/etc/kubernetes/pki/sa.pub \
          --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
          --service-account-issuer=https://kubernetes.default.svc.cluster.local \
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
          --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
          --authorization-mode=Node,RBAC \
          --enable-bootstrap-token-auth=true \
          --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
          --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
          --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
          --requestheader-allowed-names=aggregator \
          --requestheader-group-headers=X-Remote-Group \
          --requestheader-extra-headers-prefix=X-Remote-Extra- \
          --requestheader-username-headers=X-Remote-User
          # --token-auth-file=/etc/kubernetes/token.csv

    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=65535

    [Install]
    WantedBy=multi-user.target
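The unit file above sets --service-cluster-ip-range=10.96.0.0/12, and that range must not contain any host IP. A minimal sketch for checking this in bash rather than with an online calculator; the helper names ip_to_int and ip_in_cidr are hypothetical:

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local a b c d
    IFS=. read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Return 0 if <ip> lies inside <network>/<prefix length>, 1 otherwise.
ip_in_cidr() {
    local ip=$1 net=${2%/*} bits=${2#*/}
    local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    (( ($(ip_to_int "$ip") & mask) == ($(ip_to_int "$net") & mask) ))
}

# Usage: a host in this document's network does not collide with the
# service range, while a host at 10.105.0.5 would.
#   ip_in_cidr 192.168.68.50 10.96.0.0/12 && echo "overlap" || echo "ok"
#   ip_in_cidr 10.105.0.5    10.96.0.0/12 && echo "overlap" || echo "ok"
```

The same check applies to the Pod network: neither the host IPs nor the Pod range should fall inside the service range.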

Master02 configuration

Note: this document uses 10.96.0.0/12 as the k8s service network. It must not overlap with the host network or the Pod network; change it as needed

[root@k8s-master02 pki]# cat /usr/lib/systemd/system/kube-apiserver.service

    [Unit]
    Description=Kubernetes API Server
    Documentation=https://github.com/kubernetes/kubernetes
    After=network.target

    [Service]
    ExecStart=/usr/local/bin/kube-apiserver \
          --v=2 \
          --logtostderr=true \
          --allow-privileged=true \
          --bind-address=0.0.0.0 \
          --secure-port=6443 \
          --insecure-port=0 \
          --advertise-address=192.168.68.51 \
          --service-cluster-ip-range=10.96.0.0/12 \
          --service-node-port-range=30000-32767 \
          --etcd-servers=https://192.168.68.50:2379,https://192.168.68.51:2379,https://192.168.68.52:2379 \
          --etcd-cafile=/etc/etcd/ssl/etcd-ca.pem \
          --etcd-certfile=/etc/etcd/ssl/etcd.pem \
          --etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \
          --client-ca-file=/etc/kubernetes/pki/ca.pem \
          --tls-cert-file=/etc/kubernetes/pki/apiserver.pem \
          --tls-private-key-file=/etc/kubernetes/pki/apiserver-key.pem \
          --kubelet-client-certificate=/etc/kubernetes/pki/apiserver.pem \
          --kubelet-client-key=/etc/kubernetes/pki/apiserver-key.pem \
          --service-account-key-file=/etc/kubernetes/pki/sa.pub \
          --service-account-signing-key-file=/etc/kubernetes/pki/sa.key \
          --service-account-issuer=https://kubernetes.default.svc.cluster.local \
          --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \
          --enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \
          --authorization-mode=Node,RBAC \
          --enable-bootstrap-token-auth=true \
          --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem \
          --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.pem \
          --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client-key.pem \
          --requestheader-allowed-names=aggregator \
          --requestheader-group-headers=X-Remote-Group \
          --requestheader-extra-headers-prefix=X-Remote-Extra- \
          --requestheader-username-headers=X-Remote-User
          # --token-auth-file=/etc/kubernetes/token.csv

    Restart=on-failure
    RestartSec=10s
    LimitNOFILE=65535

    [Install]
    WantedBy=multi-user.target
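The Master01 and Master02 unit files above differ only in --advertise-address. A minimal sketch of deriving one node's file from another with sed instead of editing by hand; the function name set_advertise_address and the file names in the usage comment are hypothetical:

```shell
#!/usr/bin/env bash
# Read a kube-apiserver unit file on stdin and print it with the value of
# --advertise-address replaced by the given node IP.
set_advertise_address() {
    local node_ip=$1
    sed -E "s/(--advertise-address=)[0-9.]+/\1${node_ip}/"
}

# Usage (file names are placeholders):
#   set_advertise_address 192.168.68.51 < kube-apiserver.service.master01 \
#       > kube-apiserver.service.master02
```

Only the advertise address is rewritten; the etcd server list and certificate paths are left unchanged because they are the same on every master.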

Master03 configuration

Note: this document uses 10.96.0.0/12 as the k8s service network. It must not overlap with the host network or the Pod network; change it as needed

cat /usr/lib/systemd/system/kube-apiserver.service